| repo_name (string, length 9–75) | topic (string, 30 classes) | issue_number (int64, 1–203k) | title (string, length 1–976) | body (string, length 0–254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38–105) | labels (sequence, length 0–9) | user_login (string, length 1–39) | comments_count (int64, 0–452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

deepset-ai/haystack | nlp | 9,104 | DOCX Converter does not resolve link information | **Is your feature request related to a problem? Please describe.**

The DOCX converter does not resolve the targets of named links: the link information is dropped and is unavailable for chunking.

**Describe the solution you'd like**

As a user, I would like to specify the link format (similar to `table_format`), for example Markdown: `[name-of-link](link-target)`.
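A minimal sketch of the requested behaviour, assuming a hypothetical `link_format` option that mirrors `table_format`. The function name and the accepted values (`"markdown"`, `"plain"`) are illustrative, not Haystack's API; the sketch only shows how a named link's text and target could be rendered once the converter has extracted them.

```python
from typing import Literal

# Hypothetical option values; Haystack's DOCX converter does not define these.
LinkFormat = Literal["markdown", "plain"]


def format_link(text: str, target: str, link_format: LinkFormat = "markdown") -> str:
    """Render a named hyperlink in the requested output format."""
    if link_format == "markdown":
        # Escape square brackets in the label so they don't end it early.
        label = text.replace("[", r"\[").replace("]", r"\]")
        return f"[{label}]({target})"
    if link_format == "plain":
        # Keep only the visible text, roughly what the issue describes today.
        return text
    raise ValueError(f"Unsupported link_format: {link_format!r}")


print(format_link("Haystack docs", "https://docs.haystack.deepset.ai"))
```

Keeping the formatting in one small function would let other formats be added later without touching the paragraph-walking code.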

**Describe alternatives you've considered**


**Additional context**

https://github.com/deepset-ai/haystack/blob/6db8f0a40d85f08c4f29a0dc951b79e55cbbf606/haystack/components/converters/docx.py#L205 | open | 2025-03-24T18:35:35Z | 2025-03-24T18:35:35Z | https://github.com/deepset-ai/haystack/issues/9104 | [] | deep-rloebbert | 0 |

mitmproxy/mitmproxy | python | 6,235 | Certificate has expired | #### Problem Description

I renewed my certificates after encountering this message, but even after generating new ones I still get it.

<img width="508" alt="image" src="https://github.com/mitmproxy/mitmproxy/assets/3736805/7a9c8e42-2a14-4cf0-a344-649bd523fab9">

When I try to use mitmproxy, I get:

`[11:28:54.204][127.0.0.1:49414] Server TLS handshake failed. Certificate verify failed: certificate has expired`
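The log line reports a failure on the *server* side of the proxy, which suggests the certificate that failed verification may be the upstream server's rather than the regenerated mitmproxy CA (an interpretation, not confirmed in this thread). As a quick offline check, the sketch below uses the standard library's `ssl.cert_time_to_seconds` to turn a certificate's `notAfter` value into the number of days remaining; the helper name and its `now` parameter are illustrative.

```python
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Return days left before a certificate's notAfter time (negative if expired).

    `not_after` uses the format found in ssl.getpeercert() output,
    e.g. "Jan  5 09:34:43 2018 GMT".
    """
    expires_at = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch (UTC)
    current = time.time() if now is None else now
    return (expires_at - current) / 86400


# A certificate that expired in 2018 yields a negative value today.
print(days_until_expiry("Jan  5 09:34:43 2018 GMT") < 0)
```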

#### System Information

Mitmproxy: 9.0.1

Python: 3.9.1

OpenSSL: OpenSSL 3.0.7 1 Nov 2022

Platform: macOS-13.4.1-arm64-arm-64bit

| closed | 2023-07-08T10:36:14Z | 2023-07-08T10:50:13Z | https://github.com/mitmproxy/mitmproxy/issues/6235 | [

"kind/triage"

] | AndreMPCosta | 1 |

openapi-generators/openapi-python-client | rest-api | 675 | VSCode pylance raised `reportPrivateImportUsage` error. | **Describe the bug**

In VS Code, the current templates cause Pylance to raise `reportPrivateImportUsage`.

**To Reproduce**

try.py

```py

from the_client import AuthenticatedClient

from the_client.api.the_tag import get_stuff

from the_client.models import Stuff

```

pylance message:

```

"AuthenticatedClient" is not exported from module "the_client"

Import from "the_client.client" instead

```

```

"Stuff" is not exported from module "the_client.models"

Import from "the_client.models.stuff" instead

```

**Expected behavior**

The class should be imported without any error message.

This can be fixed by adding an `__all__` symbol to the generated packages.

ref: https://github.com/microsoft/pyright/blob/main/docs/typed-libraries.md#library-interface
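The `__all__` fix can be exercised without Pylance: the sketch below writes a throwaway package laid out like the generated client (the file contents are illustrative, not the project's actual templates) whose `__init__.py` lists its re-exports in `__all__`. The linked Pyright document describes inclusion in `__all__` as one of the ways an imported symbol is treated as public in a `py.typed` library.

```python
import importlib
import sys
import tempfile
import textwrap
from pathlib import Path

# Illustrative stand-in for the generated the_client/client.py.
CLIENT_PY = "class Client: ...\n\n\nclass AuthenticatedClient(Client): ...\n"

# the_client/__init__.py: listing the re-exports in __all__ marks them as the
# package's public interface, so type checkers treat them as exported.
INIT_PY = textwrap.dedent(
    '''\
    """A client library for accessing the API."""
    from .client import AuthenticatedClient, Client

    __all__ = (
        "AuthenticatedClient",
        "Client",
    )
    '''
)


def write_package(root: Path) -> None:
    pkg = root / "the_client"
    pkg.mkdir()
    (pkg / "client.py").write_text(CLIENT_PY)
    (pkg / "__init__.py").write_text(INIT_PY)


with tempfile.TemporaryDirectory() as tmp:
    write_package(Path(tmp))
    sys.path.insert(0, tmp)
    try:
        importlib.invalidate_caches()
        the_client = importlib.import_module("the_client")
    finally:
        sys.path.remove(tmp)

print(the_client.__all__)
```

With `__all__` in place, `from the_client import AuthenticatedClient` should no longer be flagged; the same pattern would apply to `the_client/models/__init__.py`.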

**OpenAPI Spec File**

Not related to OpenAPI spec.

**Desktop (please complete the following information):**

- OS: win10

- Python Version: 3.10

- openapi-python-client version 0.11.6

**Additional context**


| closed | 2022-09-26T18:42:32Z | 2022-09-26T23:22:43Z | https://github.com/openapi-generators/openapi-python-client/issues/675 | [

"🐞bug"

] | EltonChou | 1 |

chaoss/augur | data-visualization | 2,160 | Installation Extremely Difficult for Mac Users | Please help us help you by filling out the following sections as thoroughly as you can.

**Description:**

Hello, I am working on installing and building Augur on my Mac and am running into numerous issues. I have been trying to follow the documentation as closely as possible, but haven't been able to install it correctly.

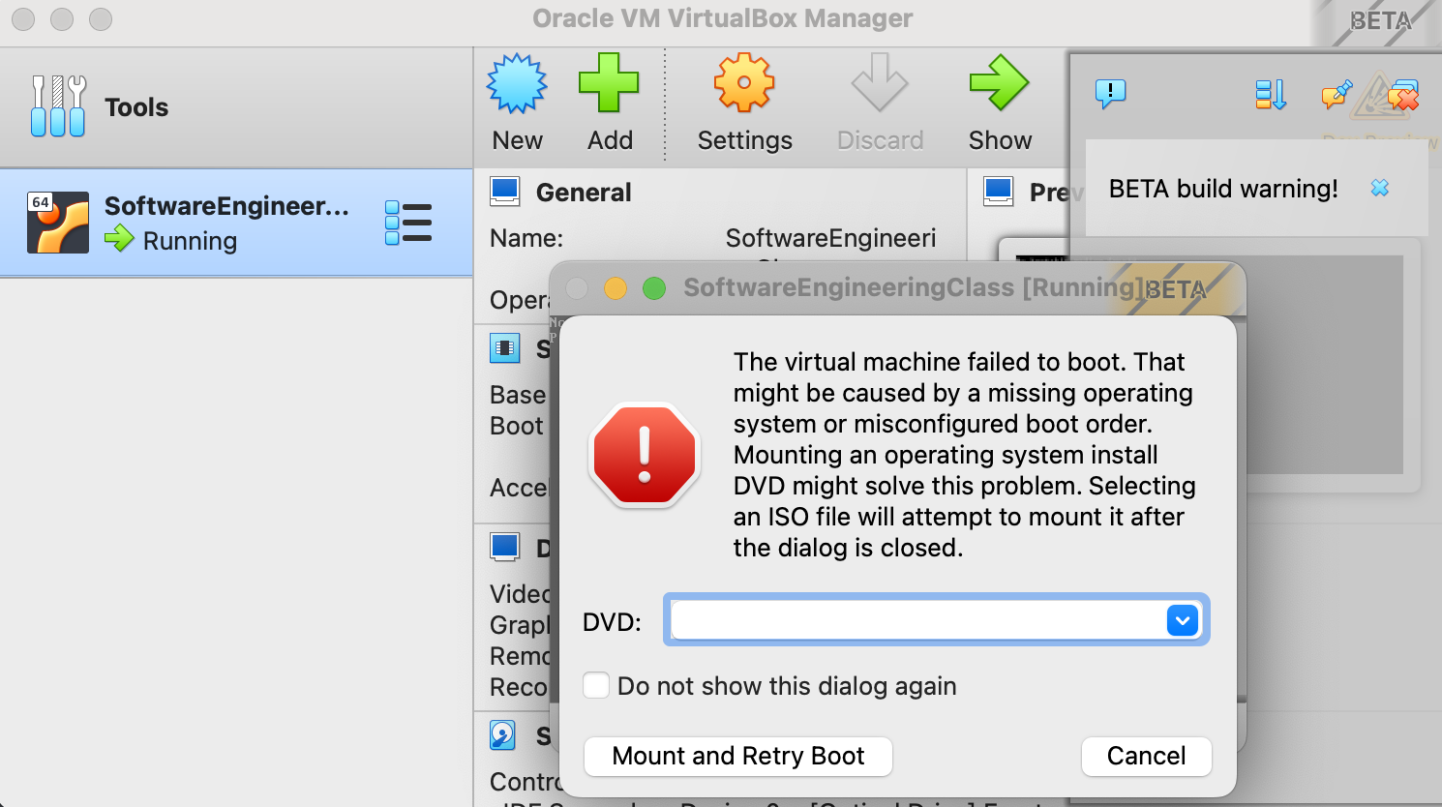

At first I tried installing with VirtualBox, which I downloaded and set up exactly as the documentation describes. However, I could not get the machine to boot; I got the error "The virtual machine failed to boot" (screenshot below). I am confident that I followed the documentation to a tee, and I have retried the installation 3 times with the same result.

After that failed, I tried to clone and install from the command line. I was able to install the repo and create a virtual environment. However, when I ran `make install` I got the error `Failed to build pandas psutil h5py greenlet`. I do not understand why: no other error message was printed during installation, and I have downloaded these packages without problems outside the virtual environment. Everything looks fine until that error message at the end. Any ideas on how to debug either of these two issues?

**Software versions:**

- Augur: v0.43.10

- OS: macOS (M1 chip) | closed | 2023-01-30T00:34:44Z | 2023-07-18T11:23:01Z | https://github.com/chaoss/augur/issues/2160 | [] | NerdyBaseballDude | 1 |

art049/odmantic | pydantic | 420 | Datetime not updating and not updating frequently | Hi all,

Not sure if this is a problem with my Pydantic model configuration, but I found that some datetime fields are not updated correctly or consistently (as seen in MongoDB Compass).

# Bug

2 Problems:

1. With Pydantic v2.5, when a `@model_validator` or `@field_validator` in the model sets a datetime field, the MongoDB engine does not persist the updated `datetime` field on `session.save()`. I experimented with the same approach on fields of other types, e.g. `string` and `UUID`, and both update consistently in the database.

2. Using `<collection>.model_update()`, as recommended in the docs, does update `datetime` fields correctly, but the updates are applied inconsistently.

**Temporary fix**

As a workaround for now, I save a copy made with `<collection>.model_copy()` via `session.save()`. With this, the document's datetime field is updated consistently.

**Things to note:**

I'm saving/updating documents in MongoDB at rates of 1 and 3 documents per second.

After digging around, I noticed that `_BaseODMModel` does not record the field in `__fields_modified__`, and `is_type_mutable` returns `False`. Not sure if that helps.
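A possible explanation, consistent with the observation that `updated_at` never shows up in `__fields_modified__`: ODMantic appears to mark a field as modified only when that field itself is assigned, and `save()` appears to write only modified fields, so a value that a validator sets as a side effect (for example while `find_one` builds the instance) would never be flagged. The stdlib-only toy below mimics that behaviour; `TrackedDoc` and `load` are hypothetical stand-ins, not ODMantic internals.

```python
from datetime import datetime, timezone
from typing import Any, Dict


class TrackedDoc:
    """Toy document with dirty-field tracking (hypothetical, not ODMantic's code)."""

    def __init__(self, **fields: Any) -> None:
        # Values set while building the object bypass tracking.
        object.__setattr__(self, "fields_modified", set())
        for name, value in fields.items():
            object.__setattr__(self, name, value)

    def __setattr__(self, name: str, value: Any) -> None:
        # Only explicit attribute assignment marks a field as modified.
        object.__setattr__(self, name, value)
        self.fields_modified.add(name)

    def changes_to_save(self) -> Dict[str, Any]:
        # In this toy, a save would write only these fields.
        return {name: getattr(self, name) for name in sorted(self.fields_modified)}


def load(stored: Dict[str, Any]) -> TrackedDoc:
    # Stand-in for a mode="before" validator: updated_at is rewritten while the
    # instance is being constructed, i.e. outside tracked assignment.
    return TrackedDoc(**{**stored, "updated_at": datetime.now(timezone.utc)})


stored = {"book_id": "old-id", "updated_at": datetime(2024, 1, 1, tzinfo=timezone.utc)}
doc = load(stored)
doc.book_id = "new-id"
before_touch = doc.changes_to_save()
print(before_touch)  # {'book_id': 'new-id'}: the validator's updated_at is not included

doc.updated_at = datetime.now(timezone.utc)  # explicit assignment is tracked
print(sorted(doc.changes_to_save()))  # ['book_id', 'updated_at']
```

If this is what happens, assigning `result.updated_at = datetime.utcnow()` explicitly before `session.save(result)` would be a workaround worth trying; it may also explain why saving `result.model_copy()`, a fresh instance, behaves differently.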

### Current Behavior

```python
# Schema
from odmantic import Model, Field, bson
from odmantic.bson import BSON_TYPES_ENCODERS
from odmantic.config import ODMConfigDict
from pydantic import field_validator, UUID4, model_validator, BaseModel
from bson.binary import Binary
from datetime import datetime
from typing import Any
from uuid import uuid4
from typing_extensions import Self


class BookCollection(Model):
    id: UUID4 = Field(primary_field=True, default_factory=uuid4)
    book_id: UUID4
    borrorwer: str
    created_at: datetime = Field(default_factory=datetime.utcnow)
    updated_at: datetime = Field(default_factory=datetime.utcnow)

    model_config = ODMConfigDict(
        collection="BOOK_COLLECTION",
    )

    @model_validator(mode="before")  # type: ignore
    @classmethod
    def update_field_updated_at(cls, input: dict) -> dict:
        input["updated_at"] = datetime.utcnow()
        return input

    # > This one works (the one above does not). I also tried this with
    # > @field_validator: only book_id works, not updated_at (datetime).
    # @model_validator(mode="before")
    # def validate_field_book_id(cls, input: dict) -> dict:
    #     input["book_id"] = uuid4()
    #     return input
```

```python
import logging
from contextlib import asynccontextmanager
from typing import AsyncGenerator
from uuid import UUID

# MongoDB (session wrapper) and the logger come from the reporter's own code,
# which is not shown; a stdlib logger is used here as a stand-in.
logger = logging.getLogger(__name__)


@asynccontextmanager
async def get_mongodb() -> AsyncGenerator[MongoDB, None]:
    mongodb = MongoDB()
    try:
        # 01 Start session
        await mongodb.start_session()
        yield mongodb
    finally:
        # 02 Gracefully end session
        await mongodb.end_session()


async def func_update_id(new_id: UUID) -> None:
    async with get_mongodb() as mongodb:
        result = await mongodb.session.find_one(
            BookCollection,
            BookCollection.book_id == UUID("69e4ea5b-1751-4579-abb1-60c31e939c0f"),
        )
        if result is None:
            logger.error("Book Doc not found!")
            return None

        # Method 1, Method 2 and the quick fix are alternative approaches.

        # Method 1
        # Bug 1 > datetime is not saved to MongoDB, but the returned model shows it updated.
        result.model_update()
        result.book_id = new_id
        data = await mongodb.session.save(result)

        # Method 2
        # Bug 2 > datetime is saved to MongoDB, but not consistently.
        result.model_update({"book_id": new_id})
        data = await mongodb.session.save(result)

        # Quick fix
        result.book_id = new_id
        data = await mongodb.session.save(result.model_copy())
```

### Expected behavior

`datetime` fields set via Pydantic validator decorators should be updated in MongoDB consistently.

### Environment

- ODMantic version: "^1.0.0"

- MongoDB version: 6.0 (Docker image `mongo:6.0`)

- Pydantic: ^2.5

- Mongodb Compass: 1.35 | open | 2024-02-24T08:33:13Z | 2024-02-26T01:58:40Z | https://github.com/art049/odmantic/issues/420 | [

"bug"

] | TyroneTang | 0 |

keras-team/keras | machine-learning | 20,750 | Unrecognized keyword arguments passed to GRU: {'time_major': False} using TensorFlow 2.18 and Python 3.12 |

Hi everyone,

I encountered an issue when trying to load a Keras model saved as an `.h5` file. Here's the setup:

- **Python version**: 3.12

- **TensorFlow version**: 2.18

- **Code**:

```python

from tensorflow.keras.models import load_model

# Trying to load a pre-saved model

model = load_model('./resources/pd_voice_gru.h5')

```

- **Error message**:

```

ValueError: Unrecognized keyword arguments passed to GRU: {'time_major': False}

```

**What I've tried**:

1. Checked the TensorFlow and Keras versions compatibility.

2. Attempted to manually modify the `.h5` file (unsuccessfully).

3. Tried to re-save the model in a different format but don't have the original training script.
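A legacy Keras `.h5` file stores the model architecture as a JSON string in its `model_config` attribute, so one commonly suggested approach is to delete the obsolete `time_major` argument from every layer config before loading. The sketch below covers only the JSON-cleaning step with the standard library; reading and writing the attribute (for example with h5py) is left out, and `strip_key` is a hypothetical helper. Another route reported by users is to pass a `GRU` subclass that accepts and discards `time_major` via `load_model(..., custom_objects=...)`.

```python
import json
from typing import Any


def strip_key(node: Any, key: str) -> Any:
    """Return a copy of a JSON-like structure with `key` removed from every dict."""
    if isinstance(node, dict):
        return {k: strip_key(v, key) for k, v in node.items() if k != key}
    if isinstance(node, list):
        return [strip_key(item, key) for item in node]
    return node


# Shape loosely modelled on a Keras model_config; the values are illustrative.
model_config = json.dumps({
    "class_name": "Sequential",
    "config": {"layers": [
        {"class_name": "GRU", "config": {"units": 64, "time_major": False}},
    ]},
})

cleaned = json.dumps(strip_key(json.loads(model_config), "time_major"))
print(cleaned)
```

Working on a copy of the file is advisable, since rewriting the attribute in place cannot be undone.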

**Questions**:

1. Why is `time_major` causing this error in TensorFlow 2.18?

2. Is there a way to ignore or bypass this parameter during model loading?

3. If the issue is due to version incompatibility, which TensorFlow version should I use?

Any help or suggestions would be greatly appreciated!

Thank you! | closed | 2025-01-11T08:31:25Z | 2025-02-10T02:02:08Z | https://github.com/keras-team/keras/issues/20750 | [

"type:support",

"stat:awaiting response from contributor",

"stale"

] | HkThinker | 4 |

robotframework/robotframework | automation | 4,437 | The language(s) used to create the AST should be set as an attribute in the generated `File`. | I think this is useful in general, and it is needed if the language is ever going to be set in the `File` itself: additional processing is then needed to handle BDD prefixes, and that must be based on information from the file rather than from a global setting. | closed | 2022-08-16T17:59:54Z | 2022-08-19T12:13:58Z | https://github.com/robotframework/robotframework/issues/4437 | [] | fabioz | 1 |

2noise/ChatTTS | python | 756 | passing `past_key_values` as a tuple | We detected that you are passing `past_key_values` as a tuple and this is deprecated and will be removed in v4.43. Please use an appropriate `Cache` class (https://huggingface.co/docs/transformers/v4.41.3/en/internal/generation_utils#transformers.Cache) | open | 2024-09-16T06:55:11Z | 2024-09-17T12:58:01Z | https://github.com/2noise/ChatTTS/issues/756 | [

"enhancement",

"help wanted",

"algorithm"

] | xjlv1113 | 1 |

python-gino/gino | sqlalchemy | 191 | CRUDModel.get() does not work for string keys | * GINO version: 0.6.2

* Python version: 3.6.0

* Operating System: Ubuntu 17.10

### Description

`await SomeModel.get('this key is a string')` fails because the argument is a string: GINO treats it as a sequence of keys and tries to iterate over it.
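The underlying pitfall is that `str` is itself a `Sequence`, so a check like `isinstance(ident, Sequence)` treats a single string key as a sequence of one-character keys. A minimal sketch of the usual guard; `normalize_ident` is a hypothetical helper, not GINO's code:

```python
from collections.abc import Sequence
from typing import Any, Tuple


def normalize_ident(ident: Any) -> Tuple[Any, ...]:
    """Turn a primary-key argument into a tuple of key values.

    str and bytes are sequences too, so they are treated as a single scalar
    key before the generic Sequence check.
    """
    if isinstance(ident, (str, bytes)) or not isinstance(ident, Sequence):
        return (ident,)
    return tuple(ident)


print(normalize_ident("this key is a string"))  # ('this key is a string',)
print(normalize_ident((1, "a")))                # (1, 'a')
print(normalize_ident(42))                      # (42,)
```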

| closed | 2018-04-04T16:28:02Z | 2018-04-06T11:32:37Z | https://github.com/python-gino/gino/issues/191 | [

"bug"

] | aglassdarkly | 1 |

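521xueweihan/HelloGitHub | python | 2,234 | Recommending the best table library for Vue: VXE-table, which is even more than a table | ## Recommended project

<!-- This is the entry point for recommending projects to the HelloGitHub monthly. Self-recommendations and recommendations of open-source projects are welcome; the only requirement is to introduce the project following the prompts below. -->

<!-- Click "Preview" above to see your submission right away -->

<!-- Only open-source projects hosted on GitHub are accepted; please enter the project's GitHub URL -->

- Project URL: https://github.com/x-extends/vxe-table

<!-- Choose one of: C, C#, C++, CSS, Go, Java, JS, Kotlin, Objective-C, PHP, Python, Ruby, Swift, Other, Books, Machine learning -->

- Category: JS

<!-- Describe what it does in about 20 characters, like an article title that is clear at a glance -->

- Project title:

The best table and form components in the Vue ecosystem

<!-- What is this project, what can it be used for, what are its features or which pain points does it solve, which scenarios does it suit, and what can beginners learn from it. Length: 32-256 characters -->

- Project description:

Virtual-scrolling tables of unlimited length that can render large datasets (including tree tables and expandable rows); tree tables that lazy-load large datasets; forms and tables written through configuration; and renderers that can wrap any globally registered component for declarative use inside forms and tables.

<!-- What makes it stand out? How does it compare with similar projects? -->

- Highlights: apart from not providing general UI components, it can implement almost any requirement you can think of; its tables are leagues ahead of those in Element and antdv.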

- Sample code (optional):

-- DOM:

```html
<vxe-grid v-bind="gridSettings" class="mg-top-md" @cell-dblClick="editRow"></vxe-grid>
```

-- JS:

```js
computed: {
  gridSettings () {
    return {
      ref: 'mainGrid',
      data: this.shiftData,
      height: 600,
      border: 'full',
      align: 'center',
      columns: [
        { field: 'shiftName', title: 'Shift name', width: 180 },
        { field: 'dept', title: 'Shift department', minWidth: 180 },
        { field: 'isFixed', title: 'Attendance type', width: 100, formatter: ({ cellValue }) => cellValue ? 'Fixed shift' : 'Other shift' },
        {
          field: 'startAt', title: 'Start time', width: 100,
          formatter: ({ cellValue, row }) => this.getFixedTime(cellValue, row.flexMinutes)
        },
        {
          field: 'endAt', title: 'End time', width: 100,
          formatter: ({ cellValue, row }) => this.getFixedTime(cellValue, row.flexMinutes)
        },
        { field: 'breakFrom', title: 'Break start time', width: 120, formatter: ({ cellValue }) => this.formatTime(cellValue) },
        { field: 'breakTo', title: 'Break end time', width: 120, formatter: ({ cellValue }) => this.formatTime(cellValue) },
        {
          field: 'weekendType',
          title: 'Rest days',
          width: 260,
          formatter: ({ cellValue }) => cellValue ? this.weekendTypeOptions.find(f => f.value === cellValue).label : 'Saturday and Sunday off'
        },
        {
          field: 'operation',
          title: 'Actions',
          width: 220,
          fixed: 'right',
          cellRender: {
            name: '$buttons',
            children: [
              {
                props: { content: 'Assign department', status: 'primary', icon: 'fa fa-wrench' },
                events: { click: this.startDistribution }
              },
              {
                props: { content: 'Delete', status: 'danger', icon: 'fa fa-trash' },
                events: { click: this.deleteShift }
              }
            ]
          }
        }
      ]
    }
  },
}
```

- Screenshots (optional): gif/png/jpg

- Planned future updates:

| closed | 2022-06-01T06:27:44Z | 2022-07-28T01:31:03Z | https://github.com/521xueweihan/HelloGitHub/issues/2234 | [

"已发布",

"JavaScript 项目"

] | adoin | 1 |

lux-org/lux | pandas | 362 | [BUG] Matplotlib code missing computed data for BarChart, LineChart and ScatterChart | **Describe the bug**

Without `self.code += f"df = pd.DataFrame({str(self.data.to_dict())})\n"`, exported BarChart, LineChart and ScatterChart visualizations that contain computed data throw an error.

**To Reproduce**

```

df = pd.read_csv('https://github.com/lux-org/lux-datasets/blob/master/data/hpi.csv?raw=true')

df

```

```

vis = df.recommendation["Occurrence"][0]

vis

print (vis.to_code("matplotlib"))

```

**Expected behavior**

It should render a single BarChart.

**Screenshots**

<img width="827" alt="Screen Shot 2021-04-15 at 11 22 25 AM" src="https://user-images.githubusercontent.com/11529801/114919503-2b04cc00-9ddd-11eb-90b1-1db3e59caa68.png">

Expected:

<img width="856" alt="Screen Shot 2021-04-15 at 11 22 51 AM" src="https://user-images.githubusercontent.com/11529801/114919507-2d672600-9ddd-11eb-8206-10801c9eb055.png">

| open | 2021-04-15T18:25:33Z | 2021-04-15T20:47:32Z | https://github.com/lux-org/lux/issues/362 | [

"bug"

] | caitlynachen | 0 |

docarray/docarray | fastapi | 1,824 | Integrate new Vector search library from Spotify | ### Initial Checks

- [X] I have searched Google & GitHub for similar requests and couldn't find anything

- [X] I have read and followed [the docs](https://docs.docarray.org) and still think this feature is missing

### Description

Spotify has released Voyager (https://github.com/spotify/voyager), a new vector search library.

I propose adding a `VoyagerIndex`, which I expect to be very similar to `HNSWIndex`.

### Affected Components

- [X] [Vector Database / Index](https://docs.docarray.org/user_guide/storing/docindex/)

- [ ] [Representing](https://docs.docarray.org/user_guide/representing/first_step)

- [ ] [Sending](https://docs.docarray.org/user_guide/sending/first_step/)

- [ ] [storing](https://docs.docarray.org/user_guide/storing/first_step/)

- [ ] [multi modal data type](https://docs.docarray.org/data_types/first_steps/) | open | 2023-10-21T06:57:44Z | 2023-12-12T08:23:27Z | https://github.com/docarray/docarray/issues/1824 | [] | JoanFM | 3 |

pydantic/pydantic-ai | pydantic | 915 | Evals | (Or Evils as I'm coming to think of them)

We want to build an open-source, sane way to score the performance of LLM calls that is:

* local first - so you don't need to use a service

* flexible enough to work with whatever best practice emerges — ideally usable for any code that is stochastic enough to require scoring beyond passed/failed (that means LLM SDKs directly or even other agent frameworks)

* usable both for "offline evals" (unit-test style checks on performance) and "online evals" measuring performance in production or equivalent (presumably using an observability platform like Pydantic Logfire)

* usable with Pydantic Logfire when and where that actually helps

I believe @dmontagu has a plan. | open | 2025-02-12T20:43:19Z | 2025-02-12T21:06:33Z | https://github.com/pydantic/pydantic-ai/issues/915 | [

"Feature request"

] | samuelcolvin | 0 |

AutoGPTQ/AutoGPTQ | nlp | 20 | Install fails due to missing nvcc | Hi,

Thanks for this package, it seems very promising!

I've followed the instructions to install from source, but I get an error. Here are the lines I believe are relevant:

```

copying auto_gptq/nn_modules/triton_utils/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

copying auto_gptq/nn_modules/triton_utils/custom_autotune.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

running build_ext

/opt/miniconda3/lib/python3.10/site-packages/torch/utils/cpp_extension.py:476: UserWarning: Attempted to use ninja as the BuildExtension backend but we could not find ninja.. Falling back to using the slow distutils backend.

warnings.warn(msg.format('we could not find ninja.'))

error: [Errno 2] No such file or directory: '/usr/local/cuda/bin/nvcc'

```

It looks like I don't have nvcc installed, but apparently nvcc [isn't necessary](https://discuss.pytorch.org/t/pytorch-finds-cuda-despite-nvcc-not-found/166754) for PyTorch to run fine. My `nvidia-smi` command works fine.
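For context, `nvidia-smi` only needs the NVIDIA driver, while `nvcc` ships with the CUDA toolkit, so a machine can run PyTorch on the GPU without being able to compile CUDA extensions. The sketch below checks whether `nvcc` is reachable before building; `find_nvcc` is a hypothetical helper, and its lookup order (`CUDA_HOME`, `CUDA_PATH`, `PATH`, then `/usr/local/cuda`) is an assumption modelled loosely on how `torch.utils.cpp_extension` locates the toolkit, not AutoGPTQ's own logic.

```python
import os
import shutil
from pathlib import Path
from typing import List, Mapping, Optional


def find_nvcc(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the path to an nvcc executable, or None if none can be found."""
    env = os.environ if env is None else env
    candidates: List[Path] = []
    # 1. Explicit toolkit locations from environment variables.
    for var in ("CUDA_HOME", "CUDA_PATH"):
        if env.get(var):
            candidates.append(Path(env[var]) / "bin" / "nvcc")
    # 2. Whatever nvcc is on the search path.
    on_path = shutil.which("nvcc", path=env.get("PATH"))
    if on_path:
        candidates.append(Path(on_path))
    # 3. The conventional Linux install location seen in the error above.
    candidates.append(Path("/usr/local/cuda/bin/nvcc"))
    for candidate in candidates:
        if candidate.is_file():
            return str(candidate)
    return None


nvcc = find_nvcc()
print(nvcc or "nvcc not found: install the CUDA toolkit or point CUDA_HOME at it")
```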

Is there some way to remove the dependency on nvcc?

Thanks,

Dave | closed | 2023-04-26T18:22:51Z | 2023-04-26T19:47:21Z | https://github.com/AutoGPTQ/AutoGPTQ/issues/20 | [] | dwadden | 2 |

deepset-ai/haystack | nlp | 8,624 | Pyright type check breaks with version 2.8.0 | **Describe the bug**

The instantiation of a class decorated with the `@component` decorator fails with a type check error. Also, the type checker does not recognize the parameters.

This seems related to the deprecation of the `is_greedy` parameter, as it is the only change between this release and the previous one (2.7.0).

**Error message**

`Argument missing for parameter "cls"`

**Expected behavior**

No type-check error.

**To Reproduce**

Type-check any pipeline instantiated with the library using Pyright

**FAQ Check**

- [x] Have you had a look at [our new FAQ page](https://docs.haystack.deepset.ai/docs/faq)?

**System:**

- OS: linux

- GPU/CPU: cpu

- Haystack version (commit or version number): 2.8.0

- DocumentStore: N/A

- Reader: N/A

- Retriever: N/A

| closed | 2024-12-11T11:23:06Z | 2025-01-27T13:52:25Z | https://github.com/deepset-ai/haystack/issues/8624 | [

"P2"

] | Calcifer777 | 2 |

QuivrHQ/quivr | api | 3,456 | Enable text-to-database retrieval | We should enable the connection of our RAG system with our customers' internal databases.

This requires developing capabilities for text-to-sql, text-to-cypher, etc…

* text-to-sql:
  * [https://medium.com/pinterest-engineering/how-we-built-text-to-sql-at-pinterest-30bad30dabff](https://medium.com/pinterest-engineering/how-we-built-text-to-sql-at-pinterest-30bad30dabff)
  * [https://vanna.ai/docs/](https://vanna.ai/docs/)
  * benchmark: [https://bird-bench.github.io/](https://bird-bench.github.io/) | closed | 2024-11-04T16:37:18Z | 2025-02-07T20:06:30Z | https://github.com/QuivrHQ/quivr/issues/3456 | [

"Stale",

"area: backend",

"rag: retrieval"

] | jacopo-chevallard | 2 |

jacobgil/pytorch-grad-cam | computer-vision | 483 | Memory leakage during multiple loss calculations | Thank you for publishing this software package, it is very useful for the community. However, when I perform multiple loss calculations, the memory will continue to grow and will not be released in the end, resulting in the memory continuously increasing during the training process. When I restart a CAM object in a loop, even if the object is deleted at the end of the loop, memory accumulates. Have you ever thought about why this situation happened and how can I avoid it?

| open | 2024-02-27T04:08:26Z | 2025-03-14T12:00:44Z | https://github.com/jacobgil/pytorch-grad-cam/issues/483 | [] | IzumiKDl | 2 |

airtai/faststream | asyncio | 1,676 | Feature: Add ability to specify on_assign, on_revoke, and on_lost callbacks for a Confluent subscriber | **Is your feature request related to a problem? Please describe.**

Yes, I want to know when my confluent Consumer gets topic partitions assigned and removed.

Currently, I reach through FastStream into `confluent_kafka.Consumer.assignment()` every time my k8s liveness probe runs, but that is noisy and, most notably, doesn't happen *right* when the assignment changes.

At times I may also want to do something with the information beyond logging, such as clearing cached state or cancelling running threads/processes.

**Describe the solution you'd like**

I want to specify, at the subscriber registration level, the callbacks I want called, and have FastStream pass them into the `confluent_kafka.Consumer.subscribe()` call inside `AsyncConfluentConsumer`.

**Feature code example**

```python
from faststream import FastStream
from faststream.confluent import KafkaBroker

...

broker = KafkaBroker(...)


@broker.subscriber(
    "my-topic",
    # Proposed parameters (not currently supported by the subscriber):
    on_assign=lambda consumer, partitions: ...,
    on_revoke=lambda consumer, partitions: ...,
)
def my_handler(body: str):
    print(body)
```
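One way the requested option could be plumbed through, sketched with a stand-in consumer so it runs without Kafka: the subscriber keeps whichever callbacks were given and forwards only those to `subscribe()`, so the call is unchanged when none are set. `SubscriberOptions`, `FakeConsumer`, and `subscribe_with_callbacks` are hypothetical names; `on_assign`, `on_revoke`, and `on_lost` are the keyword arguments that `confluent_kafka.Consumer.subscribe()` accepts.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional

# confluent_kafka invokes rebalance callbacks as callback(consumer, partitions).
RebalanceCallback = Callable[[Any, List[Any]], None]


@dataclass
class SubscriberOptions:
    """Hypothetical per-subscriber settings captured at registration time."""

    on_assign: Optional[RebalanceCallback] = None
    on_revoke: Optional[RebalanceCallback] = None
    on_lost: Optional[RebalanceCallback] = None

    def subscribe_kwargs(self) -> Dict[str, RebalanceCallback]:
        # Forward only the callbacks the user actually provided.
        given = {"on_assign": self.on_assign, "on_revoke": self.on_revoke, "on_lost": self.on_lost}
        return {name: cb for name, cb in given.items() if cb is not None}


class FakeConsumer:
    """Stand-in for confluent_kafka.Consumer that records the subscribe() call."""

    def __init__(self) -> None:
        self.subscribed: Dict[str, Any] = {}

    def subscribe(self, topics: List[str], **kwargs: Any) -> None:
        self.subscribed = {"topics": topics, **kwargs}


def subscribe_with_callbacks(consumer: Any, topics: List[str], options: SubscriberOptions) -> None:
    consumer.subscribe(topics, **options.subscribe_kwargs())


assigned: List[Any] = []
consumer = FakeConsumer()
subscribe_with_callbacks(
    consumer,
    ["my-topic"],
    SubscriberOptions(on_assign=lambda c, partitions: assigned.extend(partitions)),
)
# Simulate the client firing the assignment callback during a rebalance.
consumer.subscribed["on_assign"](consumer, ["my-topic[0]"])
print(sorted(consumer.subscribed), assigned)
```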

**Describe alternatives you've considered**

I monkey patch AsyncConfluentConsumer at import time in the FastStream library.

```python
import faststream.confluent.broker.broker
from faststream.confluent.broker.broker import AsyncConfluentConsumer
from observing.observing import logger


class PatchedAsyncConfluentConsumer(AsyncConfluentConsumer):
    """A patched version of the AsyncConfluentConsumer class."""

    def __init__(self, *topics, **kwargs):
        super().__init__(*topics, **kwargs)
        self.topic_partitions = set()

    def on_revoke(self, consumer, partitions):
        """Conditionally pauses the consumer when partitions are revoked."""
        self.topic_partitions -= set(partitions)
        logger.info(
            "Consumer rebalance event: partitions revoked.",
            topic_partitions=dict(
                n_revoked=len(partitions),
                revoked=[
                    dict(topic=tp.topic, partition=tp.partition) for tp in partitions
                ],
                n_current=len(self.topic_partitions),
                current=[
                    dict(topic=tp.topic, partition=tp.partition)
                    for tp in self.topic_partitions
                ],
            ),
            memberid=self.consumer.memberid(),
            topics=self.topics,
            config=dict(
                group_id=self.config.get("group.id"),
                group_instance_id=self.config.get("group.instance.id"),
            ),
        )

    def on_assign(self, consumer, partitions):
        """Conditionally resumes the consumer when partitions are assigned."""
        self.topic_partitions |= set(partitions)
        logger.info(
            "Consumer rebalance event: partitions assigned.",
            topic_partitions=dict(
                n_assigned=len(partitions),
                assigned=[
                    dict(topic=tp.topic, partition=tp.partition) for tp in partitions
                ],
                n_current=len(self.topic_partitions),
                current=[
                    dict(topic=tp.topic, partition=tp.partition)
                    for tp in self.topic_partitions
                ],
            ),
            memberid=self.consumer.memberid(),
            topics=self.topics,
            config=dict(
                group_id=self.config.get("group.id"),
                group_instance_id=self.config.get("group.instance.id"),
            ),
        )

    async def start(self) -> None:
        """Starts the Kafka consumer and subscribes to the specified topics."""
        self.consumer.subscribe(
            self.topics, on_revoke=self.on_revoke, on_assign=self.on_assign
        )


def patch_async_confluent_consumer():
    logger.info("Patching AsyncConfluentConsumer.")
    faststream.confluent.broker.broker.AsyncConfluentConsumer = (
        PatchedAsyncConfluentConsumer
    )
```

Obviously, this is ideal for no one.

**Additional context**

| open | 2024-08-12T20:14:54Z | 2024-08-21T18:49:45Z | https://github.com/airtai/faststream/issues/1676 | [

"enhancement",

"good first issue",

"Confluent"

] | andreaimprovised | 0 |

tqdm/tqdm | jupyter | 1,539 | Feature proposal: JSON output to arbitrary FD to allow programmatic consumers | This ticket is spawned by a recent Stack Overflow question, [grep a live progress bar from a running program](https://stackoverflow.com/questions/77699411/linux-grep-a-live-progress-bar-from-a-running-program). The party asking this question was looking for a way to run a piece of software -- which, as it turns out, uses tqdm for its progress bar -- and monitor its progress programmatically, triggering an event when that progress exceeded a given threshold.

This is not an unreasonable request, and I've occasionally had the need for similar things in my career. Generally, solving such problems ends up going one of a few routes:

- Patching the software being run to add hooks that trigger when progress meets desired milestones (unfortunate because invasive).

- Parsing the output stream being generated (unfortunate because fragile -- prone to break when the progress bar format is updated, the curses or similar library being used to generate events changes, the terminal type or character set in use differs from that which the folks building the parser anticipated, etc).

- Leveraging support for writing progress information in a well-defined format to a well-defined destination.

This is a feature request to make the third of those available by default across all applications using tqdm.

One reasonable interface accessible to non-Python consumers would be environment variables, such as:

```bash

TQDM_FD=3 TQDM_FORMAT=json appname...

```

...to instruct progress data to be written to file descriptor 3 (aka the file-like object returned by `os.fdopen(3, 'a')`) in JSONL form, such that whatever software is spawning `appname` can feed contents through a JSON parser. Some examples from the README might in this form look like:

```json

{"units": "bytes", "total_units": 369098752, "curr_units": 160432128, "pct": 44, "units_per_second": 11534336, "state": "Processing", "time_spent": "00:14", "time_remaining": "00:18"}

{"units": "bytes", "total_units": 369098752, "curr_units": 155189248, "pct": 42, "units_per_second": 11429478, "state": "Compressed", "time_spent": "00:14", "time_remaining": "00:19"}

```

...allowing out-of-process tools written in non-Python languages to perform arbitrary operations based on that status.
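To illustrate the consumer side, here is a minimal sketch that reads the proposed JSON Lines stream and stops at the first record whose progress reaches a threshold. The field names follow the example records above (a proposal, not an existing tqdm format), and `first_at_or_above` is a hypothetical helper; in practice the lines would be read from the pipe connected to the descriptor named by `TQDM_FD`.

```python
import io
import json
from typing import Iterable, Optional


def first_at_or_above(lines: Iterable[str], threshold_pct: float) -> Optional[dict]:
    """Return the first progress record whose "pct" is >= threshold_pct, else None."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        record = json.loads(line)
        if record.get("pct", 0) >= threshold_pct:
            return record
    return None


stream = io.StringIO(
    '{"units": "bytes", "curr_units": 155189248, "pct": 42, "state": "Compressed"}\n'
    '{"units": "bytes", "curr_units": 160432128, "pct": 44, "state": "Processing"}\n'
)
hit = first_at_or_above(stream, 43)
print(hit["state"] if hit else "threshold not reached")  # Processing
```

Because each record is a self-contained line, the reader never has to parse terminal control sequences, which is the fragility the proposal is trying to avoid.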

---

- [X] I have marked all applicable categories:
    + [ ] documentation request (i.e. "X is missing from the documentation." If instead I want to ask "how to use X?" I understand [StackOverflow#tqdm] is more appropriate)
    + [X] new feature request
- [ ] I have visited the [source website], and in particular
  read the [known issues]
- [X] I have searched through the [issue tracker] for duplicates
- [ ] I have mentioned version numbers, operating system and
  environment, where applicable:
  ```python
  import tqdm, sys
  print(tqdm.__version__, sys.version, sys.platform)
  ```

[source website]: https://github.com/tqdm/tqdm/

[known issues]: https://github.com/tqdm/tqdm/#faq-and-known-issues

[issue tracker]: https://github.com/tqdm/tqdm/issues?q=

[StackOverflow#tqdm]: https://stackoverflow.com/questions/tagged/tqdm

| open | 2023-12-22T14:23:55Z | 2023-12-22T14:23:55Z | https://github.com/tqdm/tqdm/issues/1539 | [] | charles-dyfis-net | 0 |

lepture/authlib | flask | 423 | Backport fix to support Django 4 | Authlib 0.15.5 does not work with Django 4 due to the removal of `HttpRequest.get_raw_uri`. This is already fixed in master via https://github.com/lepture/authlib/commit/b3847d89dcd4db3a10c9b828de4698498a90d28c. Please backport the fix.

**To Reproduce**

With the latest release 0.15.5, run `tox -e py38-django` and see tests failing.

**Expected behavior**

All tests pass.

**Environment:**

- OS: Fedora

- Python Version: 3.8

- Authlib Version: 0.15.5 | closed | 2022-01-28T15:14:41Z | 2022-11-01T12:57:08Z | https://github.com/lepture/authlib/issues/423 | [

"bug"

] | V02460 | 1 |

flairNLP/flair | pytorch | 3,151 | [Question]: | ### Question

How can I create a custom evaluation metric for the SequenceTagger? I would like to use the F0.5 score instead of the F1-score.
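For reference, F0.5 is the F-beta score with β = 0.5, F_β = (1 + β²)·P·R / (β²·P + R), which weights precision more heavily than recall. A stdlib sketch of the formula; the helper is illustrative and independent of Flair's trainer:

```python
def f_beta(precision: float, recall: float, beta: float = 0.5) -> float:
    """F-beta score; beta < 1 favours precision, beta > 1 favours recall."""
    if precision == 0 and recall == 0:
        return 0.0  # avoid dividing by zero when nothing was predicted correctly
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


print(round(f_beta(0.8, 0.5), 4))            # 0.7143
print(round(f_beta(0.8, 0.5, beta=1.0), 4))  # 0.6154 (plain F1)
```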

I can't find any option to the trainer for alternative scoring during training. | closed | 2023-03-16T12:29:48Z | 2023-08-21T09:09:31Z | https://github.com/flairNLP/flair/issues/3151 | [

"question",

"wontfix"

] | larsbun | 3 |

pytorch/pytorch | numpy | 149,635 | avoid guarding on max() unnecessarily | Here's a repro. In theory, the code below should not require a recompile: we are conditionally padding, producing an output tensor of shape `max(input_size, 16)`. Instead, we specialize on the pad value and produce separate graphs for the `size_16` and `size_greater_than_16` cases.

```python
import torch


@torch.compile(backend="eager")
def f(x):
    padded_size = max(x.shape[0], 16)
    padded_tensor = torch.ones(padded_size, *x.shape[1:])
    return padded_tensor + x.sum()


x = torch.arange(15)
torch._dynamo.mark_dynamic(x, 0)
out = f(x)

x = torch.arange(17)
torch._dynamo.mark_dynamic(x, 0)
out = f(x)
```

cc @chauhang @penguinwu @ezyang @bobrenjc93 @zou3519 | open | 2025-03-20T17:08:26Z | 2025-03-24T09:52:13Z | https://github.com/pytorch/pytorch/issues/149635 | [

"triaged",

"oncall: pt2",

"module: dynamic shapes",

"vllm-compile"

] | bdhirsh | 5 |

biolab/orange3 | numpy | 6,514 | Installation problem "Cannot continue" |

```

Creating an new conda env in "C:\Program Files\Orange"

Output folder: C:\Users\OGBOLU~1\AppData\Local\Temp\nsxC898.tmp\Orange-installer-data\conda-pkgs

Extract: _py-xgboost-mutex-2.0-cpu_0.tar.bz2

Extract: anyio-3.6.2-pyhd8ed1ab_0.tar.bz2

Extract: anyqt-0.2.0-pyh6c4a22f_0.tar.bz2

Extract: asttokens-2.2.1-pyhd8ed1ab_0.conda

Extract: backcall-0.2.0-pyh9f0ad1d_0.tar.bz2

Extract: backports-1.0-pyhd8ed1ab_3.conda

Extract: backports.functools_lru_cache-1.6.4-pyhd8ed1ab_0.tar.bz2

Extract: baycomp-1.0.2-py_1.tar.bz2

Extract: blas-2.116-openblas.tar.bz2

Extract: blas-devel-3.9.0-16_win64_openblas.tar.bz2

Extract: bottleneck-1.3.7-py39hc266a54_0.conda

Extract: brotli-1.0.9-hcfcfb64_8.tar.bz2

Extract: brotli-bin-1.0.9-hcfcfb64_8.tar.bz2

Extract: brotlipy-0.7.0-py39ha55989b_1005.tar.bz2

Extract: bzip2-1.0.8-h8ffe710_4.tar.bz2

Extract: ca-certificates-2022.12.7-h5b45459_0.conda

Extract: cachecontrol-0.12.11-pyhd8ed1ab_1.conda

Extract: cairo-1.16.0-hdecc03f_1015.conda

Extract: catboost-1.2-py39hcbf5309_4.conda

Extract: certifi-2022.12.7-pyhd8ed1ab_0.conda

Extract: cffi-1.15.1-py39h68f70e3_3.conda

Extract: chardet-5.1.0-py39hcbf5309_0.conda

Extract: charset-normalizer-3.1.0-pyhd8ed1ab_0.conda

Extract: colorama-0.4.6-pyhd8ed1ab_0.tar.bz2

Extract: commonmark-0.9.1-py_0.tar.bz2

Extract: conda-spec.txt

Extract: contourpy-1.0.7-py39h1f6ef14_0.conda

Extract: cryptography-40.0.2-py39hb6bd5e6_0.conda

Extract: cycler-0.11.0-pyhd8ed1ab_0.tar.bz2

Extract: debugpy-1.6.7-py39h99910a6_0.conda

Extract: decorator-5.1.1-pyhd8ed1ab_0.tar.bz2

Extract: dictdiffer-0.9.0-pyhd8ed1ab_0.tar.bz2

Extract: docutils-0.19-py39hcbf5309_1.tar.bz2

Extract: et_xmlfile-1.1.0-pyhd8ed1ab_0.conda

Extract: executing-1.2.0-pyhd8ed1ab_0.tar.bz2

Extract: expat-2.5.0-h63175ca_1.conda

Extract: font-ttf-dejavu-sans-mono-2.37-hab24e00_0.tar.bz2

Extract: font-ttf-inconsolata-3.000-h77eed37_0.tar.bz2

Extract: font-ttf-source-code-pro-2.038-h77eed37_0.tar.bz2

Extract: font-ttf-ubuntu-0.83-hab24e00_0.tar.bz2

Extract: fontconfig-2.14.2-hbde0cde_0.conda

Extract: fonts-conda-ecosystem-1-0.tar.bz2

Extract: fonts-conda-forge-1-0.tar.bz2

Extract: fonttools-4.39.3-py39ha55989b_0.conda

Extract: freetype-2.12.1-h546665d_1.conda

Extract: fribidi-1.0.10-h8d14728_0.tar.bz2

Extract: future-0.18.3-pyhd8ed1ab_0.conda

Extract: getopt-win32-0.1-h8ffe710_0.tar.bz2

Extract: gettext-0.21.1-h5728263_0.tar.bz2

Extract: glib-2.76.2-h12be248_0.conda

Extract: glib-tools-2.76.2-h12be248_0.conda

Extract: graphite2-1.3.13-1000.tar.bz2

Extract: graphviz-8.0.5-h51cb2cd_0.conda

Extract: gst-plugins-base-1.22.0-h001b923_2.conda

Extract: gstreamer-1.22.0-h6b5321d_2.conda

Extract: gts-0.7.6-h7c369d9_2.tar.bz2

Extract: h11-0.14.0-pyhd8ed1ab_0.tar.bz2

Extract: h2-4.1.0-py39hcbf5309_0.tar.bz2

Extract: harfbuzz-7.2.0-h196d34a_0.conda

Extract: hpack-4.0.0-pyh9f0ad1d_0.tar.bz2

Extract: httpcore-0.17.0-pyhd8ed1ab_0.conda

Extract: httpx-0.24.0-pyhd8ed1ab_1.conda

Extract: hyperframe-6.0.1-pyhd8ed1ab_0.tar.bz2

Extract: icu-72.1-h63175ca_0.conda

Extract: idna-3.4-pyhd8ed1ab_0.tar.bz2

Extract: importlib-metadata-6.6.0-pyha770c72_0.conda

Extract: importlib-resources-5.12.0-pyhd8ed1ab_0.conda

Extract: importlib_metadata-6.6.0-hd8ed1ab_0.conda

Extract: importlib_resources-5.12.0-pyhd8ed1ab_0.conda

Extract: install.bat

Extract: ipykernel-6.14.0-py39h832f523_0.tar.bz2

Extract: ipython-8.4.0-py39hcbf5309_0.tar.bz2

Extract: ipython_genutils-0.2.0-py_1.tar.bz2

Extract: jaraco.classes-3.2.3-pyhd8ed1ab_0.tar.bz2

Extract: jedi-0.18.2-pyhd8ed1ab_0.conda

Extract: joblib-1.2.0-pyhd8ed1ab_0.tar.bz2

Extract: jupyter_client-8.2.0-pyhd8ed1ab_0.conda

Extract: jupyter_core-5.3.0-py39hcbf5309_0.conda

Extract: keyring-23.13.1-py39hcbf5309_0.conda

Extract: keyrings.alt-4.2.0-pyhd8ed1ab_0.conda

Extract: kiwisolver-1.4.4-py39h1f6ef14_1.tar.bz2

Extract: krb5-1.20.1-heb0366b_0.conda

Extract: lcms2-2.15-h3e3b177_1.conda

Extract: lerc-4.0.0-h63175ca_0.tar.bz2

Extract: libblas-3.9.0-16_win64_openblas.tar.bz2

Extract: libbrotlicommon-1.0.9-hcfcfb64_8.tar.bz2

Extract: libbrotlidec-1.0.9-hcfcfb64_8.tar.bz2

Extract: libbrotlienc-1.0.9-hcfcfb64_8.tar.bz2

Extract: libcblas-3.9.0-16_win64_openblas.tar.bz2

Extract: libclang-16.0.3-default_h8b4101f_0.conda

Extract: libclang13-16.0.3-default_h45d3cf4_0.conda

Extract: libdeflate-1.18-hcfcfb64_0.conda

Extract: libexpat-2.5.0-h63175ca_1.conda

Extract: libffi-3.4.2-h8ffe710_5.tar.bz2

Extract: libflang-5.0.0-h6538335_20180525.tar.bz2

Extract: libgd-2.3.3-h2ed9e1d_6.conda

Extract: libglib-2.76.2-he8f3873_0.conda

Extract: libiconv-1.17-h8ffe710_0.tar.bz2

Extract: libjpeg-turbo-2.1.5.1-hcfcfb64_0.conda

Extract: liblapack-3.9.0-16_win64_openblas.tar.bz2

Extract: liblapacke-3.9.0-16_win64_openblas.tar.bz2

Extract: libogg-1.3.4-h8ffe710_1.tar.bz2

Extract: libopenblas-0.3.21-pthreads_h02691f0_0.tar.bz2

Extract: libpng-1.6.39-h19919ed_0.conda

Extract: libsodium-1.0.18-h8d14728_1.tar.bz2

Extract: libsqlite-3.40.0-hcfcfb64_1.conda

Extract: libtiff-4.5.0-h6c8260b_6.conda

Extract: libvorbis-1.3.7-h0e60522_0.tar.bz2

Extract: libwebp-1.3.0-hcfcfb64_0.conda

Extract: libwebp-base-1.3.0-hcfcfb64_0.conda

Extract: libxcb-1.13-hcd874cb_1004.tar.bz2

Extract: libxgboost-1.7.4-cpu_h20390bd_0.conda

Extract: libxml2-2.10.4-hc3477c8_0.conda

Extract: libzlib-1.2.13-hcfcfb64_4.tar.bz2

Extract: llvm-meta-5.0.0-0.tar.bz2

Extract: lockfile-0.12.2-py_1.tar.bz2

Extract: m2w64-gcc-libgfortran-5.3.0-6.tar.bz2

Extract: m2w64-gcc-libs-5.3.0-7.tar.bz2

Extract: m2w64-gcc-libs-core-5.3.0-7.tar.bz2

Extract: m2w64-gmp-6.1.0-2.tar.bz2

Extract: m2w64-libwinpthread-git-5.0.0.4634.697f757-2.tar.bz2

Extract: matplotlib-base-3.7.1-py39haf65ace_0.conda

Extract: matplotlib-inline-0.1.6-pyhd8ed1ab_0.tar.bz2

Extract: more-itertools-9.1.0-pyhd8ed1ab_0.conda

Extract: msgpack-python-1.0.5-py39h1f6ef14_0.conda

Extract: msys2-conda-epoch-20160418Janez-1.tar.bz2

Extract: munkres-1.1.4-pyh9f0ad1d_0.tar.bz2

Extract: nest-asyncio-1.5.6-pyhd8ed1ab_0.tar.bz2

Extract: networkx-3.1-pyhd8ed1ab_0.conda

Extract: numpy-1.24.3-py39h816b6a6_0.conda

Extract: openblas-0.3.21-pthreads_ha35c500_0.tar.bz2

Extract: openjpeg-2.5.0-ha2aaf27_2.conda

Extract: openmp-5.0.0-vc14_1.tar.bz2

Extract: openpyxl-3.1.2-py39ha55989b_0.conda

Extract: openssl-3.1.0-hcfcfb64_3.conda

Extract: opentsne-0.7.1-py39hbd792c9_0.conda

Extract: orange-canvas-core-0.1.31-pyhd8ed1ab_0.conda

Extract: orange-widget-base-4.21.0-pyhd8ed1ab_0.conda

Extract: orange3-3.35.0-py39h1679cfb_0.conda

Extract: packaging-23.1-pyhd8ed1ab_0.conda

Extract: pandas-1.5.3-py39h2ba5b7c_1.conda

Extract: pango-1.50.14-hd64ce24_1.conda

Extract: parso-0.8.3-pyhd8ed1ab_0.tar.bz2

Extract: pcre2-10.40-h17e33f8_0.tar.bz2

Extract: pickleshare-0.7.5-py_1003.tar.bz2

Extract: pillow-9.5.0-py39haa1d754_0.conda

Extract: pip-23.1.2-pyhd8ed1ab_0.conda

Extract: pixman-0.40.0-h8ffe710_0.tar.bz2

Extract: platformdirs-3.5.0-pyhd8ed1ab_0.conda

Extract: plotly-5.14.1-pyhd8ed1ab_0.conda

Extract: ply-3.11-py_1.tar.bz2

Extract: pooch-1.7.0-pyha770c72_3.conda

Extract: prompt-toolkit-3.0.38-pyha770c72_0.conda

Extract: psutil-5.9.5-py39ha55989b_0.conda

Extract: pthread-stubs-0.4-hcd874cb_1001.tar.bz2

Extract: pure_eval-0.2.2-pyhd8ed1ab_0.tar.bz2

Extract: py-xgboost-1.7.4-cpu_py39ha538f94_0.conda

Extract: pycparser-2.21-pyhd8ed1ab_0.tar.bz2

Extract: pygments-2.15.1-pyhd8ed1ab_0.conda

Extract: pyopenssl-23.1.1-pyhd8ed1ab_0.conda

Extract: pyparsing-3.0.9-pyhd8ed1ab_0.tar.bz2

Extract: pyqt-5.15.7-py39hb77abff_3.conda

Extract: pyqt5-sip-12.11.0-py39h99910a6_3.conda

Extract: pyqtgraph-0.13.3-pyhd8ed1ab_0.conda

Extract: pyqtwebengine-5.15.7-py39h2f4a3f1_3.conda

Extract: pysocks-1.7.1-py39hcbf5309_5.tar.bz2

Extract: python-3.9.12-hcf16a7b_1_cpython.tar.bz2

Extract: python-dateutil-2.8.2-pyhd8ed1ab_0.tar.bz2

Extract: python-graphviz-0.20.1-pyh22cad53_0.tar.bz2

Extract: python-louvain-0.16-pyhd8ed1ab_0.conda

Extract: python_abi-3.9-3_cp39.conda

Extract: pytz-2023.3-pyhd8ed1ab_0.conda

Extract: pywin32-304-py39h99910a6_2.tar.bz2

Extract: pywin32-ctypes-0.2.0-py39hcbf5309_1006.tar.bz2

Extract: pyyaml-6.0-py39ha55989b_5.tar.bz2

Extract: pyzmq-25.0.2-py39hea35a22_0.conda

Extract: qasync-0.24.0-pyhd8ed1ab_0.conda

Extract: qt-main-5.15.8-h7f2b912_9.conda

Extract: qt-webengine-5.15.8-h5b1ea0b_0.tar.bz2

Extract: qtconsole-5.4.3-pyhd8ed1ab_0.conda

Extract: qtconsole-base-5.4.3-pyha770c72_0.conda

Extract: qtpy-2.3.1-pyhd8ed1ab_0.conda

Extract: requests-2.29.0-pyhd8ed1ab_0.conda

Extract: scikit-learn-1.1.3-py39h6fe01c0_1.tar.bz2

Extract: scipy-1.10.1-py39hde5eda1_1.conda

Extract: serverfiles-0.3.0-py_0.tar.bz2

Extract: setuptools-67.7.2-pyhd8ed1ab_0.conda

Extract: sip-6.7.9-py39h99910a6_0.conda

Extract: sitecustomize.py

Extract: six-1.16.0-pyh6c4a22f_0.tar.bz2

Extract: sniffio-1.3.0-pyhd8ed1ab_0.tar.bz2

Extract: sqlite-3.40.0-hcfcfb64_1.conda

Extract: stack_data-0.6.2-pyhd8ed1ab_0.conda

Extract: tenacity-8.2.2-pyhd8ed1ab_0.conda

Extract: threadpoolctl-3.1.0-pyh8a188c0_0.tar.bz2

Extract: tk-8.6.12-h8ffe710_0.tar.bz2

Extract: toml-0.10.2-pyhd8ed1ab_0.tar.bz2

Extract: tomli-2.0.1-pyhd8ed1ab_0.tar.bz2

Extract: tornado-6.3-py39ha55989b_0.conda

Extract: traitlets-5.9.0-pyhd8ed1ab_0.conda

Extract: typing-extensions-4.5.0-hd8ed1ab_0.conda

Extract: typing_extensions-4.5.0-pyha770c72_0.conda

Extract: tzdata-2023c-h71feb2d_0.conda

Extract: ucrt-10.0.22621.0-h57928b3_0.tar.bz2

Extract: unicodedata2-15.0.0-py39ha55989b_0.tar.bz2

Extract: urllib3-1.26.15-pyhd8ed1ab_0.conda

Extract: vc-14.3-hb25d44b_16.conda

Extract: vc14_runtime-14.34.31931-h5081d32_16.conda

Extract: vs2015_runtime-14.34.31931-hed1258a_16.conda

Extract: wcwidth-0.2.6-pyhd8ed1ab_0.conda

Extract: wheel-0.40.0-pyhd8ed1ab_0.conda

Extract: win_inet_pton-1.1.0-py39hcbf5309_5.tar.bz2

Extract: xgboost-1.7.4-cpu_py39ha538f94_0.conda

Extract: xlrd-2.0.1-pyhd8ed1ab_3.tar.bz2

Extract: xlsxwriter-3.1.0-pyhd8ed1ab_0.conda

Extract: xorg-kbproto-1.0.7-hcd874cb_1002.tar.bz2

Extract: xorg-libice-1.0.10-hcd874cb_0.tar.bz2

Extract: xorg-libsm-1.2.3-hcd874cb_1000.tar.bz2

Extract: xorg-libx11-1.8.4-hcd874cb_0.conda

Extract: xorg-libxau-1.0.9-hcd874cb_0.tar.bz2

Extract: xorg-libxdmcp-1.1.3-hcd874cb_0.tar.bz2

Extract: xorg-libxext-1.3.4-hcd874cb_2.conda

Extract: xorg-libxpm-3.5.13-hcd874cb_0.tar.bz2

Extract: xorg-libxt-1.2.1-hcd874cb_2.tar.bz2

Extract: xorg-xextproto-7.3.0-hcd874cb_1003.conda

Extract: xorg-xproto-7.0.31-hcd874cb_1007.tar.bz2

Extract: xz-5.2.6-h8d14728_0.tar.bz2

Extract: yaml-0.2.5-h8ffe710_2.tar.bz2

Extract: zeromq-4.3.4-h0e60522_1.tar.bz2

Extract: zipp-3.15.0-pyhd8ed1ab_0.conda

Extract: zlib-1.2.13-hcfcfb64_4.tar.bz2

Extract: zstd-1.5.2-h12be248_6.conda

Output folder: C:\Users\OGBOLU~1\AppData\Local\Temp\nsxC898.tmp\Orange-installer-data\conda-pkgs

Installing packages (this might take a while)

Executing: cmd.exe /c install.bat "C:\Program Files\Orange" "C:\Users\Ogbolu Ify\miniconda3\condabin\conda.bat"

Creating a conda env in "C:\Program Files\Orange"

failed to create process.

Appending conda-forge channel

The system cannot find the path specified.

The system cannot find the path specified.

The system cannot find the path specified.

failed to create process.

The system cannot find the path specified.

The system cannot find the path specified.

The system cannot find the path specified.

The system cannot find the path specified.

The system cannot find the path specified.

0 file(s) copied.

"conda" command exited with 1. Cannot continue.

```

**What's wrong?**

<!-- Be specific, clear, and concise. Include screenshots if relevant. -->

<!-- If you're getting an error message, copy it, and enclose it with three backticks (```). -->

**How can we reproduce the problem?**

<!-- Upload a zip with the .ows file and data. -->

<!-- Describe the steps (open this widget, click there, then add this...) -->

**What's your environment?**

<!-- To find your Orange version, see "Help → About → Version" or `Orange.version.full_version` in code -->

- Operating system:

- Orange version:

- How you installed Orange:

| closed | 2023-07-19T10:20:29Z | 2023-08-18T09:27:39Z | https://github.com/biolab/orange3/issues/6514 | [

"bug report"

] | IfyOgbolu | 3 |

plotly/plotly.py | plotly | 4,111 | FigureWidget modifies the position of Title | Dear Plotly,

I have encountered an issue: when I use `FigureWidget` with subplots while keeping `shared_yaxes=True`, the title of the second subplot moves to the left side.

This is the `make_subplots` figure:

And this is what happens when the `Figure` object is passed to `FigureWidget`:

Is there any way to keep `shared_yaxes=True` and still get the title of the second subplot on the right side? (The y-axis `side` option was used to generate the first figure.) | closed | 2023-03-16T14:55:49Z | 2024-07-11T14:01:13Z | https://github.com/plotly/plotly.py/issues/4111 | [] | AndresOrtegaGuerrero | 1 |

keras-team/keras | pytorch | 20,490 | ModelCheckpoint loses .h5 save support, breaking retrocompatibility | **Title:** ModelCheckpoint Callback Fails to Save Models in .h5 Format in TensorFlow 2.17.0+

**Description:**

I'm experiencing an issue with TensorFlow's `tf.keras.callbacks.ModelCheckpoint` across different TensorFlow versions on different platforms.

**Background:**

* **Platform 1:** Windows with TensorFlow 2.10.0 (GPU-enabled).

* **Platform 2:** Docker container on Linux using TensorFlow 2.3.0 (nvcr.io/nvidia/tensorflow:20.09-tf2-py3).

With versions up to TensorFlow 2.15.0, I was able to save models in `.h5` format using `tf.keras.callbacks.ModelCheckpoint` with the `save_weights_only=False` parameter. This allowed for easy cross-platform loading of saved models.

**Problem:** Since TensorFlow 2.17.0, `tf.keras.callbacks.ModelCheckpoint` appears unable to save models in `.h5` format, breaking backward compatibility. Models can only be saved in the `.keras` format, which versions prior to 2.17.0 cannot load, creating a compatibility issue for users maintaining models across different TensorFlow versions.

**Steps to Reproduce:**

1. Use TensorFlow 2.17.0 or later.

2. Try saving a model with `tf.keras.callbacks.ModelCheckpoint` using `save_weights_only=False` and specifying `.h5` as the file format.

3. Load the model in a previous version, such as TensorFlow 2.10.0 or earlier.

**Expected Behavior:** The model should be saved in `.h5` format without error, maintaining backward compatibility with earlier versions.

**Actual Behavior:** The model cannot be saved in `.h5` format, only in `.keras` format, making it incompatible with TensorFlow versions prior to 2.17.0.

**Question:** Is there a workaround to save models in `.h5` format in TensorFlow 2.17.0+? Or, is there a plan to restore `.h5` support in future updates for backward compatibility?

**Environment:**

* TensorFlow version: 2.17.0+

* Operating systems: Windows, Linux (Docker)

**Thank you for your help and for maintaining this project!** | closed | 2024-11-13T09:56:49Z | 2024-11-28T17:41:41Z | https://github.com/keras-team/keras/issues/20490 | [

"type:Bug"

] | TeoCavi | 3 |

JaidedAI/EasyOCR | machine-learning | 570 | Detected short number | Hello. I have this image:

but EasyOCR does not work on it.

I tried using `detect` with different parameters, but the method returns an empty result.

Then I tried writing my own simple detector:

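```
import cv2
import numpy as np


# Method of the user's class; self._filtration and self.reader are defined elsewhere
def recognition_number(self, img: np.ndarray) -> str:
    # Preprocess, then binarize with an inverted adaptive threshold
    image = self._filtration(img)
    thresh = cv2.adaptiveThreshold(image, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 3, 1)
    # Bounding box of the first external contour
    cnts, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    ox = [i[0][0] for i in cnts[0]]
    oy = [i[0][1] for i in cnts[0]]
    # Bypass EasyOCR's detector and recognize digits inside that box only
    data = self.reader.recognize(image, horizontal_list=[[min(ox), max(ox), min(oy), max(oy)]], free_list=[], allowlist="1234567890.,")
    return "".join([el[1] for el in data])
```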

This solved the problem, and the number is now recognized.
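The snippet above builds the box from `cnts[0]` alone. With `cv2.RETR_EXTERNAL`, separate blobs (for example, individual digits) may come back as separate contours, so a multi-digit number could need a box that covers all of them. Below is a minimal pure-Python sketch. It assumes contours in the `(N, 1, 2)` point layout that `cv2.findContours` returns. `union_bbox` is a hypothetical helper, and its output uses the same `[x_min, x_max, y_min, y_max]` order as the `horizontal_list` entry above:

```python
from typing import List, Sequence


def union_bbox(contours: Sequence) -> List[int]:
    """Return [x_min, x_max, y_min, y_max] covering every point of every contour.

    Each contour is assumed to be a sequence of [[x, y]] points, i.e. the
    (N, 1, 2) layout produced by cv2.findContours (hypothetical helper).
    """
    xs = [int(pt[0][0]) for cnt in contours for pt in cnt]
    ys = [int(pt[0][1]) for cnt in contours for pt in cnt]
    if not xs:
        raise ValueError("no contour points found")
    return [min(xs), max(xs), min(ys), max(ys)]


# Two toy "digit" contours side by side
contours = [
    [[[1, 5]], [[3, 7]]],
    [[[10, 2]], [[12, 4]]],
]
print(union_bbox(contours))  # [1, 12, 2, 7]
```

In the method above, this would replace the `ox`/`oy` lines, e.g. `horizontal_list=[union_bbox(cnts)]`.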

Is it possible to achieve the same with EasyOCR's `detect`? | closed | 2021-10-18T12:39:08Z | 2021-10-19T09:02:56Z | https://github.com/JaidedAI/EasyOCR/issues/570 | [] | sas9mba | 2 |

d2l-ai/d2l-en | deep-learning | 2,473 | GNNs theory and practice | Hi,

This is an excellent book for learning and practicing deep learning. I have seen attention, GANs, NLP, etc., but GNNs are not covered in the book. Will they be included?

Best. | open | 2023-04-27T21:44:22Z | 2023-05-15T12:47:23Z | https://github.com/d2l-ai/d2l-en/issues/2473 | [

"feature request"

] | Cram3r95 | 1 |

mljar/mljar-supervised | scikit-learn | 488 | elementwise comparison fails on predict functions | ```

predictions_compete = automl_compete.predict_all(X_test)

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

/var/folders/kv/_qlrk0nj7zld91vzy1fj3hkr0000gn/T/ipykernel_48603/1194736169.py in <module>

----> 1 predictions_compete = automl_compete.predict_all(X_test)

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/supervised/automl.py in predict_all(self, X)

394

395 """

--> 396 return self._predict_all(X)

397

398 def score(self, X, y=None, sample_weight=None):

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/supervised/base_automl.py in _predict_all(self, X)

1380 def _predict_all(self, X):

1381 # Make and return predictions

-> 1382 return self._base_predict(X)

1383

1384 def _score(self, X, y=None, sample_weight=None):

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/supervised/base_automl.py in _base_predict(self, X, model)

1313 if model._is_stacked:

1314 self._perform_model_stacking()

-> 1315 X_stacked = self.get_stacked_data(X, mode="predict")

1316

1317 if model.get_type() == "Ensemble":

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/supervised/base_automl.py in get_stacked_data(self, X, mode)

418 oof = m.get_out_of_folds()

419 else:

--> 420 oof = m.predict(X)

421 if self._ml_task == BINARY_CLASSIFICATION:

422 cols = [f for f in oof.columns if "prediction" in f]

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/supervised/ensemble.py in predict(self, X, X_stacked)

306 y_predicted_from_model = model.predict(X_stacked)

307 else:

--> 308 y_predicted_from_model = model.predict(X)

309

310 prediction_cols = []

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/supervised/model_framework.py in predict(self, X)

426 for ind, learner in enumerate(self.learners):

427 # preprocessing goes here

--> 428 X_data, _, _ = self.preprocessings[ind].transform(X.copy(), None)

429 y_p = learner.predict(X_data)

430 y_p = self.preprocessings[ind].inverse_scale_target(y_p)

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/supervised/preprocessing/preprocessing.py in transform(self, X_validation, y_validation, sample_weight_validation)

401 for convert in self._categorical:

402 if X_validation is not None and convert is not None:

--> 403 X_validation = convert.transform(X_validation)

404

405 for dtt in self._datetime_transforms:

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/supervised/preprocessing/preprocessing_categorical.py in transform(self, X)

77 lbl = LabelEncoder()

78 lbl.from_json(lbl_params)

---> 79 X.loc[:, column] = lbl.transform(X.loc[:, column])

80

81 return X

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/supervised/preprocessing/label_encoder.py in transform(self, x)

31 def transform(self, x):

32 try:

---> 33 return self.lbl.transform(x) # list(x.values))

34 except ValueError as ve:

35 # rescue

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/sklearn/preprocessing/_label.py in transform(self, y)

136 return np.array([])

137

--> 138 return _encode(y, uniques=self.classes_)

139

140 def inverse_transform(self, y):

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/sklearn/utils/_encode.py in _encode(values, uniques, check_unknown)

185 else:

186 if check_unknown:

--> 187 diff = _check_unknown(values, uniques)

188 if diff:

189 raise ValueError(f"y contains previously unseen labels: {str(diff)}")

~/code_projects/chs_kaggle/.venv/lib/python3.9/site-packages/sklearn/utils/_encode.py in _check_unknown(values, known_values, return_mask)

259

260 # check for nans in the known_values

--> 261 if np.isnan(known_values).any():

262 diff_is_nan = np.isnan(diff)

263 if diff_is_nan.any():

TypeError: ufunc 'isnan' not supported for the input types, and the inputs could not be safely coerced to any supported types according to the casting rule ''safe''

``` | closed | 2021-11-24T09:08:07Z | 2021-11-24T11:46:13Z | https://github.com/mljar/mljar-supervised/issues/488 | [

"bug"

] | cibic89 | 5 |

lepture/authlib | flask | 720 | error_description should not include invalid characters for oidc certification | [RFC6749](https://datatracker.ietf.org/doc/html/rfc6749#section-5.2) states that the `error_description` field MUST NOT include characters outside the set %x09-0A (Tab and LF) / %x0D (CR) / %x20-21 / %x23-5B / %x5D-7E.

For example, the token endpoint response returns this error, which makes the OpenID Connect certification fail:

https://github.com/lepture/authlib/blob/4eafdc21891e78361f478479efe109ff0fb2f661/authlib/jose/errors.py#L85
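Below is a minimal sketch of a sanitizer that keeps only the printable ranges %x20-21 / %x23-5B / %x5D-7E from the set quoted above. This removes the double quote (0x22) and the backslash (0x5C). `sanitize_error_description` is a hypothetical helper, not part of Authlib. It also drops tab, LF, and CR, so it errs on the strict side of the quoted set:

```python
def _is_allowed(ch: str) -> bool:
    """True if ch falls in %x20-21 / %x23-5B / %x5D-7E."""
    cp = ord(ch)
    return 0x20 <= cp <= 0x21 or 0x23 <= cp <= 0x5B or 0x5D <= cp <= 0x7E


def sanitize_error_description(text: str) -> str:
    """Drop every character outside the allowed printable ranges (hypothetical helper)."""
    return "".join(ch for ch in text if _is_allowed(ch))


print(sanitize_error_description('Invalid "aud" value'))  # Invalid aud value
```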

The quotes should be removed from `error_description` values. | open | 2025-03-14T15:22:38Z | 2025-03-15T01:58:29Z | https://github.com/lepture/authlib/issues/720 | [

"bug"

] | funelie | 0 |

tortoise/tortoise-orm | asyncio | 1,342 | Model printing requires the output of the primary key value | **Is your feature request related to a problem? Please describe.**

When I print a model, I only get the model's name.

The `__str__` method should use the same logic as `__repr__`.

So far, every time I printed a model, I also had to print its `pk` field separately.

**Describe alternatives you've considered**

Override the model's `__str__` method,

for example:

```python
class Model(metaclass=ModelMeta):
    """
    Base class for all Tortoise ORM Models.
    """

    ...

    def __str__(self) -> str:
        if self.pk:
            return f"<{self.__class__.__name__}: {self.pk}>"
        return f"<{self.__class__.__name__}>"
```
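Below is a self-contained sketch of the proposed behavior. It uses a hypothetical stand-in class instead of a real Tortoise model, so it runs without a database:

```python
class FakeModel:
    """Hypothetical stand-in for a Tortoise model; only `pk` matters here."""

    def __init__(self, pk=None):
        self.pk = pk

    def __str__(self) -> str:
        # Same logic as the proposal above
        if self.pk:
            return f"<{self.__class__.__name__}: {self.pk}>"
        return f"<{self.__class__.__name__}>"


class User(FakeModel):
    pass


print(str(User(pk=42)))  # <User: 42>
print(str(User()))       # <User>
```

As written, `if self.pk:` also treats a primary key of `0` as missing (`str(User(pk=0))` gives `<User>`). Using `if self.pk is not None:` would avoid that.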

| open | 2023-02-16T05:52:38Z | 2023-03-01T03:14:17Z | https://github.com/tortoise/tortoise-orm/issues/1342 | [

"enhancement"

] | Chise1 | 6 |

ultralytics/ultralytics | computer-vision | 19,710 | Using `single_cls` while loading the data the first time causes warnings and errors | ### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

Hi,

I have a dataset with two classes: 0 and 1.

In my data.yaml file I have the following class def:

# class names

names:

0: 'cat'

1: 'duck'

Now I'm testing with the `single_cls` flag set to `True`, and I get a lot of errors complaining that the labels exceed the maximum class label. Since I set `single_cls=True`, shouldn't it change all my labels to zero?
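The expectation above amounts to rewriting every YOLO label line (`class x_center y_center width height`) to class 0. Below is a minimal sketch of that remapping as an offline workaround. It assumes the standard YOLO text label format. `to_single_class` is a hypothetical helper, not an Ultralytics API:

```python
from typing import List


def to_single_class(label_lines: List[str]) -> List[str]:
    """Replace the class index of each YOLO label line with 0, keeping the box."""
    out = []
    for line in label_lines:
        parts = line.split()
        if not parts:  # skip blank lines
            continue
        out.append(" ".join(["0"] + parts[1:]))
    return out


labels = ["1 0.5 0.5 0.2 0.3", "0 0.1 0.2 0.05 0.05"]
print(to_single_class(labels))
```

If applied, this is best done on copies of the label files, so the original two-class labels are kept.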

### Additional

_No response_ | closed | 2025-03-15T04:54:32Z | 2025-03-21T14:06:16Z | https://github.com/ultralytics/ultralytics/issues/19710 | [

"bug",

"question",

"fixed",

"detect"

] | Nau3D | 11 |

widgetti/solara | jupyter | 640 | Feature Request: Programmatically focus on a component | It would be nice to be able to focus a component (say, a text input) programmatically after the user interacts with another component (e.g., a button).

An example of this would be something like:

- user enters some text in an input text field

- user presses some button

- the same (or another) text field is automatically in focus -> no need to use the mouse to select that component in order to enter text.

I saw that this is possible via the `.focus()` method in `ipyvuetify`, but it is not exposed in Solara. There is a kwarg that puts a given text input in focus when the app starts, but the focus is lost once the user interacts with other components (buttons, sliders, etc.). | closed | 2024-05-09T12:43:22Z | 2024-08-25T15:28:00Z | https://github.com/widgetti/solara/issues/640 | [] | JovanVeljanoski | 8 |

hankcs/HanLP | nlp | 1,937 | Chinese-to-pinyin conversion fails once more complex polyphonic characters are involved | <!--

Thank you for finding a bug; please fill in the form below carefully:

-->

**Describe the bug**

A clear and concise description of what the bug is.

Using HanLP via Maven:

```xml
<dependency>
    <groupId>com.hankcs</groupId>
    <artifactId>hanlp</artifactId>
    <version>portable-1.8.5</version>
</dependency>
```

```java
import com.hankcs.hanlp.HanLP;

// Class name is illustrative; the original snippet showed only main()
public class PinyinDemo {
    public static void main(String[] args) {
        String text = "厦门行走";
        // Convert to pinyin with HanLP; HanLP is supposed to handle polyphonic characters
        String pinyin = HanLP.convertToPinyinString(text, "", true);
        System.out.println(pinyin); // xiàmén xíngzǒu
        System.out.println(HanLP.convertToPinyinFirstCharString(text, "", true));
        System.out.println(HanLP.convertToPinyinString("人要是行,干一行行一行,一行行行行行。人要是不行,干一行不行一行,一行不行行行不行。", " ", false));
    }
}
```

The output is wrong: every 行 is rendered as `xing`, although several of them should be read `hang`:

```
ren yao shi xing , gan yi xing xing yi xing , yi xing xing xing xing xing 。 ren yao shi bu xing , gan yi xing bu xing yi xing , yi xing bu xing xing xing bu xing 。
```

**Code to reproduce the issue**

Provide a reproducible test case that is the bare minimum necessary to generate the problem.

```python

```

**Describe the current behavior**

A clear and concise description of what happened.

**Expected behavior**

A clear and concise description of what you expected to happen.

**System information**

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04):

- Python version:

- HanLP version:

**Other info / logs**

Include any logs or source code that would be helpful to diagnose the problem. If including tracebacks, please include the full traceback. Large logs and files should be attached.

* [x] I've completed this form and searched the web for solutions.

<!-- ⬆️ Be sure to tick this box, otherwise your issue will be automatically deleted by the bot! --> | closed | 2024-12-25T02:44:25Z | 2024-12-25T04:06:40Z | https://github.com/hankcs/HanLP/issues/1937 | [

"invalid"

] | jsl1992 | 1 |

jofpin/trape | flask | 164 | Idk why this is happening, any solution? | C:\Users\Usuario\Desktop\trape>python trape.py -h

Traceback (most recent call last):

File "trape.py", line 23, in <module>

from core.utils import utils #

File "C:\Users\Usuario\Desktop\trape\core\utils.py", line 23, in <module>

import httplib

ModuleNotFoundError: No module named 'httplib' | open | 2019-06-03T05:13:06Z | 2019-06-03T20:21:11Z | https://github.com/jofpin/trape/issues/164 | [] | origmz | 2 |

ScrapeGraphAI/Scrapegraph-ai | machine-learning | 853 | I am trying to run SmartScraperGraph() using Ollama with the llama3.2 model, but I get the warning "Token indices sequence length is longer than the specified maximum sequence length for this model (7678 > 1024)." and the whole website is not being scraped. | import json

from scrapegraphai.graphs import SmartScraperGraph

from ollama import Client

ollama_client = Client(host='http://localhost:11434')

# Define the configuration for the scraping pipeline

graph_config = {

"llm": {

"model": "ollama/llama3.2",

"temperature": 0.0,

"format": "json",

"model_tokens": 4096,

"base_url": "http://localhost:11434",

},

"embeddings": {

"model": "nomic-embed-text",

},

}

# Create the SmartScraperGraph instance

smart_scraper_graph = SmartScraperGraph(

prompt="Extract me all the news from the website along with headlines",

source="https://www.bbc.com/",

config=graph_config

)

# Run the pipeline

result = smart_scraper_graph.run()

print(json.dumps(result, indent=4))

Output*********************************************

>> from langchain_community.callbacks.manager import get_openai_callback

You can use the langchain cli to **automatically** upgrade many imports. Please see documentation here <https://python.langchain.com/docs/versions/v0_2/>

from langchain.callbacks import get_openai_callback

Token indices sequence length is longer than the specified maximum sequence length for this model (7678 > 1024). Running this sequence through the model will result in indexing errors

{

"headlines": [

"Life is not easy - Haaland penalty miss sums up Man City crisis",

"How a 1990s Swan Lake changed dance forever"

],

"articles": [

{

"title": "BBC News",

"url": "https://www.bbc.com/news/world-europe-63711133"

},

{

"title": "Matthew Bourne on his male Swan Lake - the show that shook up the dance world",

"url": "https://www.bbc.com/culture/article/20241126-matthew-bourne-on-his-male-swan-lake-the-show-that-shook-up-the-dance-world-forever"

}

]

}

Even after setting `model_tokens` to 4096, the maximum sequence length in the warning stays at 1024. How can I increase it? And how can I split the website into chunks no longer than this maximum sequence length so that the whole website gets scraped?
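One generic way to stay under a fixed limit is to split the page text into chunks before sending each one to the model. Below is a minimal sketch that uses whitespace-separated words as a stand-in for model tokens. Real tokenizers usually produce more tokens than words, so the limit should be set with some margin. `chunk_text` is a hypothetical helper, not a ScrapeGraphAI API:

```python
from typing import List


def chunk_text(text: str, max_tokens: int) -> List[str]:
    """Split text into chunks of at most max_tokens whitespace-separated words."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    words = text.split()
    return [
        " ".join(words[i : i + max_tokens])
        for i in range(0, len(words), max_tokens)
    ]


page = "headline one body text headline two more body text"
for chunk in chunk_text(page, 4):
    print(chunk)
```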

PS: Having an option to follow the links further and scrape the linked pages would also be great. Thanks!

Ubuntu 22.04 LTS

GPU : RTX 4070 12GB VRAM

RAM : 16GB DDR5

Ollama/Llama3.2:3B model | closed | 2024-12-27T03:55:42Z | 2025-01-06T19:19:40Z | https://github.com/ScrapeGraphAI/Scrapegraph-ai/issues/853 | [] | GODCREATOR333 | 3 |

miguelgrinberg/microblog | flask | 97 | Can't find flask_migrate for the import | `from flask_migrate import Migrate`

gives me `E0401: Unable to import 'flask-migrate'`, even though pip installed and upgraded flask-migrate without errors. | closed | 2018-04-14T15:14:53Z | 2019-04-07T10:04:56Z | https://github.com/miguelgrinberg/microblog/issues/97 | [

"question"

] | tnovak123 | 23 |

ageitgey/face_recognition | machine-learning | 665 | Face detection lags on the same machine after rebuilding the environment on Ubuntu | * face_recognition version: 1.2.3

* Python version:Python3.6.6

* Operating System:Ubuntu18

### Description

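> Because of a graphics driver problem, I reinstalled Ubuntu. Now, when I use the face_recognition package for face detection and recognition again, it is extremely laggy; this did not happen before the reinstall. One CPU core runs above 90% while the other cores stay below 5%. Could it be that dlib is not using GPU acceleration? When I use model='cnn', I see that the GPU is not used at all and detection becomes even slower. How should I solve this?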

### The Code is:

```
import cv2
import face_recognition as fr
import numpy as np
from PIL import Image, ImageDraw, ImageFont


def thread(id, label_list, known_faces):
    # for id in index_th:
    video_catch = cv2.VideoCapture(id)
    # video_catch.set(cv2.CAP_PROP_FRAME_WIDTH, 640)
    # video_catch.set(cv2.CAP_PROP_FRAME_HEIGHT, 480)
    # video_catch.set(cv2.CAP_PROP_FPS, 30.0)
    face_locations = []
    face_encodings = []
    face_names = []
    frame_number = 0
    print('%d isOpened!' % id, video_catch.isOpened())
    if not video_catch.isOpened():
        return
    while True:
        ret, frame = video_catch.read()
        # print('%s' % id, ret)
        frame_number += 1
        if not ret:
            break
        # Detect on a quarter-size frame; reverse channels (BGR -> RGB) for face_recognition
        small_frame = cv2.resize(frame, (0, 0), fx=0.25, fy=0.25)
        rgb_frame = small_frame[:, :, ::-1]
        face_locations = fr.face_locations(rgb_frame)  # ,model="cnn"
        face_encodings = fr.face_encodings(rgb_frame, face_locations)
        face_names = []
        for face_encoding in face_encodings:
            match = fr.compare_faces(known_faces, face_encoding, tolerance=0.45)
            name = "???"
            for i in range(len(label_list)):
                if match[i]:
                    name = label_list[i]
            face_names.append(name)
        for (top, right, bottom, left), name in zip(face_locations, face_names):
            if not name:
                continue
            # Scale the box back up to the full-size frame
            top *= 4
            right *= 4
            bottom *= 4
            left *= 4
            # Draw the (possibly non-ASCII) name with PIL, then the box with OpenCV
            pil_im = Image.fromarray(frame)
            draw = ImageDraw.Draw(pil_im)
            font = ImageFont.truetype('/home/jonty/Documents/Project_2018/database/STHeiti_Medium.ttc', 24,
                                      encoding='utf-8')
            draw.text((left + 6, bottom - 25), name, (0, 0, 255), font=font)
            frame = np.array(pil_im)
            cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)
        cv2.imshow('Video_%s' % id, frame)
        c = cv2.waitKey(5)
        if c & 0xFF == ord("q"):
            break
    video_catch.release()
    cv2.destroyWindow('Video_%s' % id)
```
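One way to check the GPU question is to ask dlib whether it was compiled with CUDA. If it was not, `model="cnn"` runs on the CPU. Below is a minimal sketch, assuming the dlib Python bindings expose `dlib.DLIB_USE_CUDA` and `dlib.cuda.get_num_devices()` (as recent dlib builds do). It returns `None` when dlib is not installed:

```python
from typing import Optional, Tuple


def dlib_cuda_status() -> Optional[Tuple[bool, int]]:
    """Return (compiled_with_cuda, visible_cuda_devices), or None without dlib."""
    try:
        import dlib
    except ImportError:
        return None
    uses_cuda = bool(getattr(dlib, "DLIB_USE_CUDA", False))
    # Only query the device count when dlib was built with CUDA support
    devices = dlib.cuda.get_num_devices() if uses_cuda else 0
    return uses_cuda, devices


print(dlib_cuda_status())
```

A result of `(False, 0)` would point to a CPU-only dlib build after the reinstall.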

| closed | 2018-11-04T04:20:22Z | 2019-08-20T03:26:53Z | https://github.com/ageitgey/face_recognition/issues/665 | [] | JalexDooo | 3 |

d2l-ai/d2l-en | deep-learning | 1,807 | Tokenization in 8.2.2 | I think that when the `tokenize` function tokenizes words, it should also add the space character to the tokens. Otherwise, the `predict` function will treat spaces as `<unk>`, and the predicted words will have no spaces between them. This can be worked around by changing the return line of `predict` to `return ''.join([vocab.idx_to_token[i] + ' ' for i in outputs])`.

I think `tokenize` should be changed like this:

`[line.split() for line in lines] + [[' ']]`
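An alternative that keeps the vocabulary unchanged is to re-insert the separator when turning predicted tokens back into text: a space for word-level models, and nothing for character-level ones. Below is a minimal sketch. `tokenize` follows the book's word/char tokenizer in spirit, and `detokenize` is a hypothetical helper, not part of d2l:

```python
from typing import List


def tokenize(lines: List[str], token: str = "word") -> List[List[str]]:
    """Split each line into word or character tokens."""
    if token == "word":
        return [line.split() for line in lines]
    if token == "char":
        return [list(line) for line in lines]
    raise ValueError(f"unknown token type: {token}")


def detokenize(tokens: List[str], token: str = "word") -> str:
    """Join tokens back into text with the separator matching the token type."""
    return (" " if token == "word" else "").join(tokens)


words = tokenize(["the time traveller"], "word")[0]
print(detokenize(words, "word"))  # the time traveller
chars = tokenize(["time"], "char")[0]
print(detokenize(chars, "char"))  # time
```

Unlike appending `' '` after every token, `' '.join` leaves no trailing space. The prediction code would still need to know which token type was used.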

If I'm right, I can open a PR for both the `tokenize` and `predict` functions (although for `predict` I might also have to change the function's inputs so that it can tell whether it is a character-level or word-level RNN). | closed | 2021-06-22T07:53:02Z | 2022-12-16T00:24:13Z | https://github.com/d2l-ai/d2l-en/issues/1807 | [] | armingh2000 | 2 |

tableau/server-client-python | rest-api | 1,167 | [2022.3] parent_id lost when creating project | **Describe the bug**

`parent_id` is `None` in the `ProjectItem` object returned by `projects.create()` even if it was specified when creating the new instance.

**Versions**

- Tableau Server 2022.3.1

- Python 3.10.9

- TSC library 0.23.4

**To Reproduce**

```

>>> new_project = TSC.ProjectItem('New Project', parent_id='c7fc0e49-8d67-4211-9623-dd9237bf3cda')

>>> new_project.id

>>> new_project.parent_id

'c7fc0e49-8d67-4211-9623-dd9237bf3cda'

>>> new_project = server.projects.create(new_project)

INFO:tableau.endpoint.projects:Created new project (ID: e0e88607-abe5-4548-963e-b5f5054c5cbe)

>>> new_project.id

'e0e88607-abe5-4548-963e-b5f5054c5cbe'

>>> new_project.parent_id

>>>

```

**Results**

What are the results or error messages received?

`new_project.parent_id` should be preserved, but it is reset to `None`

| closed | 2023-01-05T16:06:46Z | 2024-09-20T08:22:27Z | https://github.com/tableau/server-client-python/issues/1167 | [

"bug",

"Server-Side Enhancement",

"fixed"

] | nosnilmot | 5 |

sigmavirus24/github3.py | rest-api | 665 | how to check if no resource was found? | How do I check if github3 does not find resource?

For example:

```Python
import github3

g = github3.GitHub(token='mytoken')
i1 = g.issue('rmamba', 'sss3', 1)     # exists, returns an Issue
i2 = g.issue('rmamba', 'sss3', 1000)  # does not exist, returns a NullObject
```

How do I do this:

```
if i is None:
    ...  # handle None
```

I can do `if i2 is i2.Empty`, but that does not work for `i1`, because it is a valid issue and `i1.Empty` does not exist. I tried comparing with `NullObject`, but it always evaluates to false:

```
i1 is NullObject           # False
i1 is NullObject('Issue')  # False
```
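For reference, a minimal sketch of the null-object pattern this question is about, using a hypothetical stand-in `NullObject` class and a hypothetical `fetch_issue` lookup rather than github3.py's own code. A null object is a separate instance, so an `is` comparison against the class (or against a freshly constructed instance) never matches, while `isinstance()` or a truthiness check does. Whether github3.py's `NullObject` defines `__bool__` and `__str__` exactly like this is an assumption here.

```python
class NullObject:
    """Hypothetical stand-in for a library's null object (not github3.py's class)."""

    def __init__(self, initializer=None):
        self.initializer = initializer  # e.g. the name of the missing type, 'Issue'

    def __bool__(self):
        return False  # a missing resource is falsy

    def __str__(self):
        return ""  # this is why the '%s' % obj == '' workaround works


class Issue:
    """Minimal stand-in for a resource that was found."""

    def __init__(self, number):
        self.number = number


def fetch_issue(number):
    # Hypothetical lookup: only issue 1 "exists".
    return Issue(number) if number == 1 else NullObject("Issue")


i1 = fetch_issue(1)
i2 = fetch_issue(1000)

print(i1 is NullObject)            # False: identity with the class never matches
print(isinstance(i2, NullObject))  # True: a type check catches the null case
print(isinstance(i1, NullObject))  # False
print(bool(i1), bool(i2))          # True False
```

With this pattern, `if not i2:` or `if isinstance(i2, NullObject):` handles both kinds of result without relying on string formatting.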

So for now I have to do this:

```
if '%s' % i2 == '':
    ...  # handle None
```

because it works for both `NullObject`s and valid results. | closed | 2017-01-04T12:22:52Z | 2017-01-21T17:10:22Z | https://github.com/sigmavirus24/github3.py/issues/665 | [] | rmamba | 1 |

HumanSignal/labelImg | deep-learning | 603 | [Feature request] Landmarks labelling in addition to rects. | <!--

Request to add marking of only points instead of rect boxes.

This will be useful for marking landmarks like facial and pose landmarks.

-->

| open | 2020-06-12T18:33:19Z | 2020-06-12T18:33:19Z | https://github.com/HumanSignal/labelImg/issues/603 | [] | poornapragnarao | 0 |

numba/numba | numpy | 9,986 | `np.left_shift` behaves differently when using `njit` |

## Reporting a bug


- [x] I have tried using the latest released version of Numba (the most recent is visible in the release notes (https://numba.readthedocs.io/en/stable/release-notes-overview.html)).
- [x] I have included a self-contained code sample to reproduce the problem, i.e. it's possible to run it as 'python bug.py'.


`np.left_shift` behaves differently when called inside an `njit`-compiled function:

```python
from numba import njit
import numpy as np

@njit
def left_shift_njit():
    return np.left_shift(np.uint8(255), 1)

print(np.left_shift(np.uint8(255), 1))
print(left_shift_njit())
```

Output:

```bash
254
510
```
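As a worked check of the two outputs: 255 is `0b11111111`, so shifting left by one gives `0b111111110` = 510, a 9-bit value. Plain NumPy keeps the result as `uint8`, so only the low 8 bits survive and it wraps to 510 mod 256 = 254. The value 510 is what the shift produces in any wider integer type. The plain-Python sketch below reproduces both numbers. The helper name `left_shift_uint8` is hypothetical, and the claim that the compiled path widens the type is an inference from the printed 510, not something the report confirms.

```python
def left_shift_uint8(x, n):
    """Emulate a uint8 left shift: shift, then keep only the low 8 bits."""
    if not 0 <= x <= 0xFF:
        raise ValueError("x must fit in uint8")
    return (x << n) & 0xFF


wide = 255 << 1                    # shift in an unbounded (wider) integer: 510
narrow = left_shift_uint8(255, 1)  # wrap modulo 256 as a uint8 result does: 254

print(wide, narrow)  # 510 254
print(bin(wide))     # 0b111111110 -> 9 bits, the top bit does not fit in uint8
```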

Version information:

```bash
python: 3.10.16
numpy: 2.1.3
numba: 0.61.0
```

| open | 2025-03-16T08:58:22Z | 2025-03-17T17:30:21Z | https://github.com/numba/numba/issues/9986 | [

"bug - numerically incorrect",

"NumPy 2.0"

] | apiqwe | 1 |

tqdm/tqdm | pandas | 941 | Shorter description when console is not wide enough | Related to: https://github.com/tqdm/tqdm/issues/630

When my console is too narrow for the description (e.g., one set via `tqdm.set_description()`), I often get multiline output, which adds many new lines to stdout. I would rather have a shorter description displayed instead. For example:

```one very long description: ██████████| 10000/10000 [00:13<00:00, 737.46it/s]```

```short one: █████████████████| 10000/10000 [00:13<00:00, 737.46it/s]```
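A minimal sketch of the requested behaviour, written outside tqdm itself: given descriptions ordered from longest to shortest, pick the longest one whose rendered line still fits the console. The helper `pick_description` and the fixed `bar_width` budget reserved for the bar and statistics are hypothetical. A real version would have to measure the rendered bar, and by default the width here comes from `shutil.get_terminal_size()`.

```python
import shutil


def pick_description(candidates, bar_width, ncols=None):
    """Return the longest candidate description that fits next to the bar.

    candidates: descriptions ordered from longest/most detailed to shortest.
    bar_width:  columns reserved for the bar and stats (hypothetical fixed budget).
    ncols:      console width; defaults to the current terminal width.
    """
    if ncols is None:
        ncols = shutil.get_terminal_size().columns
    for desc in candidates:
        # the rendered prefix is "desc: ", i.e. len(desc) + 2 columns
        if len(desc) + 2 + bar_width <= ncols:
            return desc
    return candidates[-1]  # nothing fits: fall back to the shortest one


descs = ["one very long description", "short one"]
print(pick_description(descs, bar_width=50, ncols=100))  # one very long description
print(pick_description(descs, bar_width=50, ncols=70))   # short one
```

The chosen string could then be passed to the existing `set_description()` method.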

It could be an optional argument to `tqdm.__init__()` and/or to `tqdm.set_description()`. If you think it would be useful, I can open a PR. | closed | 2020-04-18T23:30:50Z | 2020-04-21T07:37:17Z | https://github.com/tqdm/tqdm/issues/941 | [

"question/docs ‽"

] | rronan | 1 |

voila-dashboards/voila | jupyter | 894 | Echart's panel extension does not load in voila | Hi there,

I am trying to render a notebook that uses Panel's `echarts` extension.

The code:

```python
import panel as pn

pn.extension('echarts')

gauge = {
    'tooltip': {
        'formatter': '{a} <br/>{b} : {c}%'
    },
    'series': [
        {
            'name': 'Gauge',
            'type': 'gauge',
            'detail': {'formatter': '{value}%'},
            'data': [{'value': 50, 'name': 'Value'}]
        }
    ]
}

gauge_pane = pn.pane.ECharts(gauge, width=400, height=400)

slider = pn.widgets.IntSlider(start=0, end=100, width=200)

slider.jscallback(args={'gauge': gauge_pane}, value="""
gauge.data.series[0].data[0].value = cb_obj.value
gauge.properties.data.change.emit()
""")

pn.Column(slider, gauge_pane)
```

In JupyterLab this works fine, but in Voilà it fails.

Looking at the web console, I see that Voilà tries to load `echart.js` locally instead of from the CDN (while `echarts-gl` is always loaded via the CDN).

echarts loading in jupyter:

echarts loading in voilà:

## Question

How can I force Voilà to load `echarts` from the CDN (something like `pn.extension('echarts', fromCDN=True)`), or, even better, serve `echart.js` statically?

## Configuration

Jupyter and Voilà run in separate Docker containers behind a proxy. Note that other Panel widgets work fine.

+ Voila

+ version 0.2.7 (with more recent versions, Panel interactions do not work, but I have not identified why yet)

+ Command: `/usr/local/bin/python /usr/local/bin/voila --no-browser --enable_nbextensions=True --strip_sources=False --port 8866 --server_url=/voila/ --base_url=/voila/ /opt/dashboards`

+ `jupyter nbextension list`

```
Known nbextensions:
config dir: /usr/local/etc/jupyter/nbconfig
notebook section
jupyter-matplotlib/extension enabled
- Validating: OK
jupyter_bokeh/extension enabled
- Validating: OK
nbdime/index enabled
- Validating: OK
voila/extension enabled
- Validating: OK
jupyter-js-widgets/extension enabled
- Validating: OK
```

+ `jupyter labextension list`

```
JupyterLab v2.3.1
Known labextensions:
app dir: /usr/local/share/jupyter/lab
@bokeh/jupyter_bokeh v2.0.4 enabled OK
@jupyter-widgets/jupyterlab-manager v2.0.0 enabled OK
@pyviz/jupyterlab_pyviz v1.0.4 enabled OK
nbdime-jupyterlab v2.1.0 enabled OK
```

+ Jupyter

+ version 2.3.1

+ `jupyter nbextension list`

```
Known nbextensions:
config dir: /usr/local/etc/jupyter/nbconfig
notebook section
jupyter-matplotlib/extension enabled
- Validating: OK
jupyter_bokeh/extension enabled
- Validating: OK
nbdime/index enabled
- Validating: OK
jupyter-js-widgets/extension enabled
- Validating: OK
```

+ `jupyter labextension list`

```
JupyterLab v2.3.1
Known labextensions:
app dir: /usr/local/share/jupyter/lab
@bokeh/jupyter_bokeh v2.0.4 enabled OK
@jupyter-widgets/jupyterlab-manager v2.0.0 enabled OK
@pyviz/jupyterlab_pyviz v1.0.4 enabled OK
nbdime-jupyterlab v2.1.0 enabled OK
```

| closed | 2021-05-25T16:19:53Z | 2023-08-03T13:47:49Z | https://github.com/voila-dashboards/voila/issues/894 | [] | dbeniamine | 2 |

jonra1993/fastapi-alembic-sqlmodel-async | sqlalchemy | 55 | Questions concerning Relationship definition | Hi @jonra1993,

I have some questions about the `Relationship` usage in the [Hero](https://github.com/jonra1993/fastapi-alembic-sqlmodel-async/blob/9cc2566e5e5a38611e2d8d4e0f9d3bdc473db7bc/backend/app/app/models/hero_model.py#L14) class:

Why not add `created_by_id` to `HeroBase` instead of `Hero`? Why is it `Optional`? Couldn't it be required, since every hero always has a user who created it?

Where can I find information on which `sa_relationship_kwargs` I should use? And should there be another one that defines what happens when records are deleted?

Also, why is there no `back_populates` on the `created_by` relationship?

Do we really need the `primaryjoin` argument? | closed | 2023-03-21T08:48:19Z | 2023-03-22T09:25:42Z | https://github.com/jonra1993/fastapi-alembic-sqlmodel-async/issues/55 | [] | vi-klaas | 2 |

open-mmlab/mmdetection | pytorch | 11,850 | Use get_flops.py problem | I use this command:

`python tools/analysis_tools/get_flops.py configs/glip/glip_atss_swin-t_a_fpn_dyhead_16xb2_ms-2x_funtune_coco.py`

I want to get the GFLOPs and parameter count, but I get an error:

mmcv == 2.1.0

mmdet == 3.3.0

mmengine == 0.10.4

loading annotations into memory...

Done (t=0.41s)

creating index...

index created!

Traceback (most recent call last):

File "tools/analysis_tools/get_flops.py", line 140, in <module>

main()

File "tools/analysis_tools/get_flops.py", line 120, in main

result = inference(args, logger)

File "tools/analysis_tools/get_flops.py", line 98, in inference

outputs = get_model_complexity_info(

File "/home/dsic/.virtualenvs/mmdet/lib/python3.8/site-packages/mmengine/analysis/print_helper.py", line 748, in get_model_complexity_info

flops = flop_handler.total()

File "/home/dsic/.virtualenvs/mmdet/lib/python3.8/site-packages/mmengine/analysis/jit_analysis.py", line 268, in total

stats = self._analyze()