text

stringlengths 23

371k

| source

stringlengths 32

152

|

|---|---|

(Gluon) SE-ResNeXt

**SE ResNeXt** is a variant of a [ResNext](https://www.paperswithcode.com/method/resnext) that employs [squeeze-and-excitation blocks](https://paperswithcode.com/method/squeeze-and-excitation-block) to enable the network to perform dynamic channel-wise feature recalibration.

The weights from this model were ported from [Gluon](https://cv.gluon.ai/model_zoo/classification.html).

## How do I use this model on an image?

To load a pretrained model:

```py

>>> import timm

>>> model = timm.create_model('gluon_seresnext101_32x4d', pretrained=True)

>>> model.eval()

```

To load and preprocess the image:

```py

>>> import urllib

>>> from PIL import Image

>>> from timm.data import resolve_data_config

>>> from timm.data.transforms_factory import create_transform

>>> config = resolve_data_config({}, model=model)

>>> transform = create_transform(**config)

>>> url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

>>> urllib.request.urlretrieve(url, filename)

>>> img = Image.open(filename).convert('RGB')

>>> tensor = transform(img).unsqueeze(0) # transform and add batch dimension

```

To get the model predictions:

```py

>>> import torch

>>> with torch.no_grad():

... out = model(tensor)

>>> probabilities = torch.nn.functional.softmax(out[0], dim=0)

>>> print(probabilities.shape)

>>> # prints: torch.Size([1000])

```

To get the top-5 predictions class names:

```py

>>> # Get imagenet class mappings

>>> url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")

>>> urllib.request.urlretrieve(url, filename)

>>> with open("imagenet_classes.txt", "r") as f:

... categories = [s.strip() for s in f.readlines()]

>>> # Print top categories per image

>>> top5_prob, top5_catid = torch.topk(probabilities, 5)

>>> for i in range(top5_prob.size(0)):

... print(categories[top5_catid[i]], top5_prob[i].item())

>>> # prints class names and probabilities like:

>>> # [('Samoyed', 0.6425196528434753), ('Pomeranian', 0.04062102362513542), ('keeshond', 0.03186424449086189), ('white wolf', 0.01739676296710968), ('Eskimo dog', 0.011717947199940681)]

```

Replace the model name with the variant you want to use, e.g. `gluon_seresnext101_32x4d`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](../feature_extraction), just change the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```py

>>> model = timm.create_model('gluon_seresnext101_32x4d', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)

```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training

script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.

## How do I train this model?

You can follow the [timm recipe scripts](../scripts) for training a new model afresh.

## Citation

```BibTeX

@misc{hu2019squeezeandexcitation,

title={Squeeze-and-Excitation Networks},

author={Jie Hu and Li Shen and Samuel Albanie and Gang Sun and Enhua Wu},

year={2019},

eprint={1709.01507},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

<!--

Type: model-index

Collections:

- Name: Gloun SEResNeXt

Paper:

Title: Squeeze-and-Excitation Networks

URL: https://paperswithcode.com/paper/squeeze-and-excitation-networks

Models:

- Name: gluon_seresnext101_32x4d

In Collection: Gloun SEResNeXt

Metadata:

FLOPs: 10302923504

Parameters: 48960000

File Size: 196505510

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Global Average Pooling

- Grouped Convolution

- Max Pooling

- ReLU

- ResNeXt Block

- Residual Connection

- Softmax

- Squeeze-and-Excitation Block

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: gluon_seresnext101_32x4d

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/gluon_resnet.py#L219

Weights: https://github.com/rwightman/pytorch-pretrained-gluonresnet/releases/download/v0.1/gluon_seresnext101_32x4d-cf52900d.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 80.87%

Top 5 Accuracy: 95.29%

- Name: gluon_seresnext101_64x4d

In Collection: Gloun SEResNeXt

Metadata:

FLOPs: 19958950640

Parameters: 88230000

File Size: 353875948

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Global Average Pooling

- Grouped Convolution

- Max Pooling

- ReLU

- ResNeXt Block

- Residual Connection

- Softmax

- Squeeze-and-Excitation Block

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: gluon_seresnext101_64x4d

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/gluon_resnet.py#L229

Weights: https://github.com/rwightman/pytorch-pretrained-gluonresnet/releases/download/v0.1/gluon_seresnext101_64x4d-f9926f93.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 80.88%

Top 5 Accuracy: 95.31%

- Name: gluon_seresnext50_32x4d

In Collection: Gloun SEResNeXt

Metadata:

FLOPs: 5475179184

Parameters: 27560000

File Size: 110578827

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Global Average Pooling

- Grouped Convolution

- Max Pooling

- ReLU

- ResNeXt Block

- Residual Connection

- Softmax

- Squeeze-and-Excitation Block

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: gluon_seresnext50_32x4d

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/gluon_resnet.py#L209

Weights: https://github.com/rwightman/pytorch-pretrained-gluonresnet/releases/download/v0.1/gluon_seresnext50_32x4d-90cf2d6e.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.92%

Top 5 Accuracy: 94.82%

--> | huggingface/pytorch-image-models/blob/main/hfdocs/source/models/gloun-seresnext.mdx |

Getting Started with Repositories

This beginner-friendly guide will help you get the basic skills you need to create and manage your repository on the Hub. Each section builds on the previous one, so feel free to choose where to start!

## Requirements

This document shows how to handle repositories through the web interface as well as through the terminal. There are no requirements if working with the UI. If you want to work with the terminal, please follow these installation instructions.

If you do not have `git` available as a CLI command yet, you will need to [install Git](https://git-scm.com/downloads) for your platform. You will also need to [install Git LFS](https://git-lfs.github.com/), which will be used to handle large files such as images and model weights.

To be able to push your code to the Hub, you'll need to authenticate somehow. The easiest way to do this is by installing the [`huggingface_hub` CLI](https://huggingface.co/docs/huggingface_hub/index) and running the login command:

```bash

python -m pip install huggingface_hub

huggingface-cli login

```

**The content in the Getting Started section of this document is also available as a video!**

<iframe width="560" height="315" src="https://www.youtube-nocookie.com/embed/rkCly_cbMBk" title="Managing a repo" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

## Creating a repository

Using the Hub's web interface you can easily create repositories, add files (even large ones!), explore models, visualize diffs, and much more. There are three kinds of repositories on the Hub, and in this guide you'll be creating a **model repository** for demonstration purposes. For information on creating and managing models, datasets, and Spaces, refer to their respective documentation.

1. To create a new repository, visit [huggingface.co/new](http://huggingface.co/new):

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/new_repo.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/new_repo-dark.png"/>

</div>

2. Specify the owner of the repository: this can be either you or any of the organizations you’re affiliated with.

3. Enter your model’s name. This will also be the name of the repository.

4. Specify whether you want your model to be public or private.

5. Specify the license. You can leave the *License* field blank for now. To learn about licenses, visit the [**Licenses**](repositories-licenses) documentation.

After creating your model repository, you should see a page like this:

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/empty_repo.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/empty_repo-dark.png"/>

</div>

Note that the Hub prompts you to create a *Model Card*, which you can learn about in the [**Model Cards documentation**](./model-cards). Including a Model Card in your model repo is best practice, but since we're only making a test repo at the moment we can skip this.

## Adding files to a repository (Web UI)

To add files to your repository via the web UI, start by selecting the **Files** tab, navigating to the desired directory, and then clicking **Add file**. You'll be given the option to create a new file or upload a file directly from your computer.

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repositories-add_file.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repositories-add_file-dark.png"/>

</div>

### Creating a new file

Choosing to create a new file will take you to the following editor screen, where you can choose a name for your file, add content, and save your file with a message that summarizes your changes. Instead of directly committing the new file to your repo's `main` branch, you can select `Open as a pull request` to create a [Pull Request](./repositories-pull-requests-discussions).

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repositories-create_file.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repositories-create_file-dark.png"/>

</div>

### Uploading a file

If you choose _Upload file_ you'll be able to choose a local file to upload, along with a message summarizing your changes to the repo.

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repositories-upload_file.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repositories-upload_file-dark.png"/>

</div>

As with creating new files, you can select `Open as a pull request` to create a [Pull Request](./repositories-pull-requests-discussions) instead of adding your changes directly to the `main` branch of your repo.

## Adding files to a repository (terminal)[[terminal]]

### Cloning repositories

Downloading repositories to your local machine is called *cloning*. You can use the following commands to load your repo and navigate to it:

```bash

git clone https://huggingface.co/<your-username>/<your-model-name>

cd <your-model-name>

```

You can clone over SSH with the following command:

```bash

git clone git@hf.co:<your-username>/<your-model-name>

cd <your-model-name>

```

You'll need to add your SSH public key to [your user settings](https://huggingface.co/settings/keys) to push changes or access private repositories.

### Set up

Now's the time, you can add any files you want to the repository! 🔥

Do you have files larger than 10MB? Those files should be tracked with `git-lfs`, which you can initialize with:

```bash

git lfs install

```

Note that if your files are larger than **5GB** you'll also need to run:

```bash

huggingface-cli lfs-enable-largefiles .

```

When you use Hugging Face to create a repository, Hugging Face automatically provides a list of common file extensions for common Machine Learning large files in the `.gitattributes` file, which `git-lfs` uses to efficiently track changes to your large files. However, you might need to add new extensions if your file types are not already handled. You can do so with `git lfs track "*.your_extension"`.

### Pushing files

You can use Git to save new files and any changes to already existing files as a bundle of changes called a *commit*, which can be thought of as a "revision" to your project. To create a commit, you have to `add` the files to let Git know that we're planning on saving the changes and then `commit` those changes. In order to sync the new commit with the Hugging Face Hub, you then `push` the commit to the Hub.

```bash

# Create any files you like! Then...

git add .

git commit -m "First model version" # You can choose any descriptive message

git push

```

And you're done! You can check your repository on Hugging Face with all the recently added files. For example, in the screenshot below the user added a number of files. Note that some files in this example have a size of `1.04 GB`, so the repo uses Git LFS to track it.

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repo_with_files.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repo_with_files-dark.png"/>

</div>

## Viewing a repo's history

Every time you go through the `add`-`commit`-`push` cycle, the repo will keep track of every change you've made to your files. The UI allows you to explore the model files and commits and to see the difference (also known as *diff*) introduced by each commit. To see the history, you can click on the **History: X commits** link.

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repo_history.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/repo_history-dark.png"/>

</div>

You can click on an individual commit to see what changes that commit introduced:

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/explore_history.gif"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/explore_history-dark.gif"/>

</div>

| huggingface/hub-docs/blob/main/docs/hub/repositories-getting-started.md |

Tests examples taken from the original great gltflib

Find the great gltflib by Lukas Shawford here: https://github.com/lukas-shawford/gltflib

| huggingface/simulate/blob/main/tests/test_gltflib/README.md |

RegNetX

**RegNetX** is a convolutional network design space with simple, regular models with parameters: depth \\( d \\), initial width \\( w\_{0} > 0 \\), and slope \\( w\_{a} > 0 \\), and generates a different block width \\( u\_{j} \\) for each block \\( j < d \\). The key restriction for the RegNet types of model is that there is a linear parameterisation of block widths (the design space only contains models with this linear structure):

\\( \\) u\_{j} = w\_{0} + w\_{a}\cdot{j} \\( \\)

For **RegNetX** we have additional restrictions: we set \\( b = 1 \\) (the bottleneck ratio), \\( 12 \leq d \leq 28 \\), and \\( w\_{m} \geq 2 \\) (the width multiplier).

## How do I use this model on an image?

To load a pretrained model:

```py

>>> import timm

>>> model = timm.create_model('regnetx_002', pretrained=True)

>>> model.eval()

```

To load and preprocess the image:

```py

>>> import urllib

>>> from PIL import Image

>>> from timm.data import resolve_data_config

>>> from timm.data.transforms_factory import create_transform

>>> config = resolve_data_config({}, model=model)

>>> transform = create_transform(**config)

>>> url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

>>> urllib.request.urlretrieve(url, filename)

>>> img = Image.open(filename).convert('RGB')

>>> tensor = transform(img).unsqueeze(0) # transform and add batch dimension

```

To get the model predictions:

```py

>>> import torch

>>> with torch.no_grad():

... out = model(tensor)

>>> probabilities = torch.nn.functional.softmax(out[0], dim=0)

>>> print(probabilities.shape)

>>> # prints: torch.Size([1000])

```

To get the top-5 predictions class names:

```py

>>> # Get imagenet class mappings

>>> url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")

>>> urllib.request.urlretrieve(url, filename)

>>> with open("imagenet_classes.txt", "r") as f:

... categories = [s.strip() for s in f.readlines()]

>>> # Print top categories per image

>>> top5_prob, top5_catid = torch.topk(probabilities, 5)

>>> for i in range(top5_prob.size(0)):

... print(categories[top5_catid[i]], top5_prob[i].item())

>>> # prints class names and probabilities like:

>>> # [('Samoyed', 0.6425196528434753), ('Pomeranian', 0.04062102362513542), ('keeshond', 0.03186424449086189), ('white wolf', 0.01739676296710968), ('Eskimo dog', 0.011717947199940681)]

```

Replace the model name with the variant you want to use, e.g. `regnetx_002`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](../feature_extraction), just change the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```py

>>> model = timm.create_model('regnetx_002', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)

```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training

script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.

## How do I train this model?

You can follow the [timm recipe scripts](../scripts) for training a new model afresh.

## Citation

```BibTeX

@misc{radosavovic2020designing,

title={Designing Network Design Spaces},

author={Ilija Radosavovic and Raj Prateek Kosaraju and Ross Girshick and Kaiming He and Piotr Dollár},

year={2020},

eprint={2003.13678},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

<!--

Type: model-index

Collections:

- Name: RegNetX

Paper:

Title: Designing Network Design Spaces

URL: https://paperswithcode.com/paper/designing-network-design-spaces

Models:

- Name: regnetx_002

In Collection: RegNetX

Metadata:

FLOPs: 255276032

Parameters: 2680000

File Size: 10862199

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_002

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 1024

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L337

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_002-e7e85e5c.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 68.75%

Top 5 Accuracy: 88.56%

- Name: regnetx_004

In Collection: RegNetX

Metadata:

FLOPs: 510619136

Parameters: 5160000

File Size: 20841309

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_004

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 1024

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L343

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_004-7d0e9424.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 72.39%

Top 5 Accuracy: 90.82%

- Name: regnetx_006

In Collection: RegNetX

Metadata:

FLOPs: 771659136

Parameters: 6200000

File Size: 24965172

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_006

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 1024

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L349

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_006-85ec1baa.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 73.84%

Top 5 Accuracy: 91.68%

- Name: regnetx_008

In Collection: RegNetX

Metadata:

FLOPs: 1027038208

Parameters: 7260000

File Size: 29235944

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_008

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 1024

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L355

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_008-d8b470eb.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 75.05%

Top 5 Accuracy: 92.34%

- Name: regnetx_016

In Collection: RegNetX

Metadata:

FLOPs: 2059337856

Parameters: 9190000

File Size: 36988158

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_016

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 1024

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L361

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_016-65ca972a.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 76.95%

Top 5 Accuracy: 93.43%

- Name: regnetx_032

In Collection: RegNetX

Metadata:

FLOPs: 4082555904

Parameters: 15300000

File Size: 61509573

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_032

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 512

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L367

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_032-ed0c7f7e.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 78.15%

Top 5 Accuracy: 94.09%

- Name: regnetx_040

In Collection: RegNetX

Metadata:

FLOPs: 5095167744

Parameters: 22120000

File Size: 88844824

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_040

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 512

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L373

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_040-73c2a654.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 78.48%

Top 5 Accuracy: 94.25%

- Name: regnetx_064

In Collection: RegNetX

Metadata:

FLOPs: 8303405824

Parameters: 26210000

File Size: 105184854

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_064

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 512

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L379

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_064-29278baa.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.06%

Top 5 Accuracy: 94.47%

- Name: regnetx_080

In Collection: RegNetX

Metadata:

FLOPs: 10276726784

Parameters: 39570000

File Size: 158720042

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_080

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 512

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L385

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_080-7c7fcab1.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.21%

Top 5 Accuracy: 94.55%

- Name: regnetx_120

In Collection: RegNetX

Metadata:

FLOPs: 15536378368

Parameters: 46110000

File Size: 184866342

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_120

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 512

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L391

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_120-65d5521e.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.61%

Top 5 Accuracy: 94.73%

- Name: regnetx_160

In Collection: RegNetX

Metadata:

FLOPs: 20491740672

Parameters: 54280000

File Size: 217623862

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_160

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 512

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L397

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_160-c98c4112.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.84%

Top 5 Accuracy: 94.82%

- Name: regnetx_320

In Collection: RegNetX

Metadata:

FLOPs: 40798958592

Parameters: 107810000

File Size: 431962133

Architecture:

- 1x1 Convolution

- Batch Normalization

- Convolution

- Dense Connections

- Global Average Pooling

- Grouped Convolution

- ReLU

Tasks:

- Image Classification

Training Techniques:

- SGD with Momentum

- Weight Decay

Training Data:

- ImageNet

Training Resources: 8x NVIDIA V100 GPUs

ID: regnetx_320

Epochs: 100

Crop Pct: '0.875'

Momentum: 0.9

Batch Size: 256

Image Size: '224'

Weight Decay: 5.0e-05

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/d8e69206be253892b2956341fea09fdebfaae4e3/timm/models/regnet.py#L403

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-regnet/regnetx_320-8ea38b93.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 80.25%

Top 5 Accuracy: 95.03%

-->

| huggingface/pytorch-image-models/blob/main/hfdocs/source/models/regnetx.mdx |

Gradio Demo: titanic_survival

```

!pip install -q gradio scikit-learn numpy pandas

```

```

# Downloading files from the demo repo

import os

os.mkdir('files')

!wget -q -O files/titanic.csv https://github.com/gradio-app/gradio/raw/main/demo/titanic_survival/files/titanic.csv

```

```

import os

import pandas as pd

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

import gradio as gr

current_dir = os.path.dirname(os.path.realpath(__file__))

data = pd.read_csv(os.path.join(current_dir, "files/titanic.csv"))

def encode_age(df):

df.Age = df.Age.fillna(-0.5)

bins = (-1, 0, 5, 12, 18, 25, 35, 60, 120)

categories = pd.cut(df.Age, bins, labels=False)

df.Age = categories

return df

def encode_fare(df):

df.Fare = df.Fare.fillna(-0.5)

bins = (-1, 0, 8, 15, 31, 1000)

categories = pd.cut(df.Fare, bins, labels=False)

df.Fare = categories

return df

def encode_df(df):

df = encode_age(df)

df = encode_fare(df)

sex_mapping = {"male": 0, "female": 1}

df = df.replace({"Sex": sex_mapping})

embark_mapping = {"S": 1, "C": 2, "Q": 3}

df = df.replace({"Embarked": embark_mapping})

df.Embarked = df.Embarked.fillna(0)

df["Company"] = 0

df.loc[(df["SibSp"] > 0), "Company"] = 1

df.loc[(df["Parch"] > 0), "Company"] = 2

df.loc[(df["SibSp"] > 0) & (df["Parch"] > 0), "Company"] = 3

df = df[

[

"PassengerId",

"Pclass",

"Sex",

"Age",

"Fare",

"Embarked",

"Company",

"Survived",

]

]

return df

train = encode_df(data)

X_all = train.drop(["Survived", "PassengerId"], axis=1)

y_all = train["Survived"]

num_test = 0.20

X_train, X_test, y_train, y_test = train_test_split(

X_all, y_all, test_size=num_test, random_state=23

)

clf = RandomForestClassifier()

clf.fit(X_train, y_train)

predictions = clf.predict(X_test)

def predict_survival(passenger_class, is_male, age, company, fare, embark_point):

if passenger_class is None or embark_point is None:

return None

df = pd.DataFrame.from_dict(

{

"Pclass": [passenger_class + 1],

"Sex": [0 if is_male else 1],

"Age": [age],

"Fare": [fare],

"Embarked": [embark_point + 1],

"Company": [

(1 if "Sibling" in company else 0) + (2 if "Child" in company else 0)

]

}

)

df = encode_age(df)

df = encode_fare(df)

pred = clf.predict_proba(df)[0]

return {"Perishes": float(pred[0]), "Survives": float(pred[1])}

demo = gr.Interface(

predict_survival,

[

gr.Dropdown(["first", "second", "third"], type="index"),

"checkbox",

gr.Slider(0, 80, value=25),

gr.CheckboxGroup(["Sibling", "Child"], label="Travelling with (select all)"),

gr.Number(value=20),

gr.Radio(["S", "C", "Q"], type="index"),

],

"label",

examples=[

["first", True, 30, [], 50, "S"],

["second", False, 40, ["Sibling", "Child"], 10, "Q"],

["third", True, 30, ["Child"], 20, "S"],

],

live=True,

)

if __name__ == "__main__":

demo.launch()

```

| gradio-app/gradio/blob/main/demo/titanic_survival/run.ipynb |

Embed your Space in another website

Once your Space is up and running you might wish to embed it in a website or in your blog.

Embedding or sharing your Space is a great way to allow your audience to interact with your work and demonstrations without requiring any setup on their side.

To embed a Space its visibility needs to be public.

## Direct URL

A Space is assigned a unique URL you can use to share your Space or embed it in a website.

This URL is of the form: `"https://<space-subdomain>.hf.space"`. For instance, the Space [NimaBoscarino/hotdog-gradio](https://huggingface.co/spaces/NimaBoscarino/hotdog-gradio) has the corresponding URL of `"https://nimaboscarino-hotdog-gradio.hf.space"`. The subdomain is unique and only changes if you move or rename your Space.

Your space is always served from the root of this subdomain.

You can find the Space URL along with examples snippets of how to embed it directly from the options menu:

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/spaces-embed-option.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/spaces-embed-option-dark.png"/>

</div>

## Embedding with IFrames

The default embedding method for a Space is using IFrames. Add in the HTML location where you want to embed your Space the following element:

```html

<iframe

src="https://<space-subdomain>.hf.space"

frameborder="0"

width="850"

height="450"

></iframe>

```

For instance using the [NimaBoscarino/hotdog-gradio](https://huggingface.co/spaces/NimaBoscarino/hotdog-gradio) Space:

<iframe src="https://nimaboscarino-hotdog-gradio.hf.space"frameborder="0"width="850"height="500" ></iframe>

## Embedding with WebComponents

If the Space you wish to embed is Gradio-based, you can use Web Components to embed your Space. WebComponents are faster than IFrames and automatically adjust to your web page so that you do not need to configure `width` or `height` for your element.

First, you need to import the Gradio JS library that corresponds to the Gradio version in the Space by adding the following script to your HTML.

<div class="flex justify-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/spaces-embed-gradio-module.png"/>

</div>

Then, add a `gradio-app` element where you want to embed your Space.

```html

<gradio-app src="https://<space-subdomain>.hf.space"></gradio-app>

```

Check out the [Gradio documentation](https://gradio.app/sharing_your_app/#embedding-hosted-spaces) for more details. | huggingface/hub-docs/blob/main/docs/hub/spaces-embed.md |

Tabby on Spaces

[Tabby](https://tabby.tabbyml.com) is an open-source, self-hosted AI coding assistant. With Tabby, every team can set up its own LLM-powered code completion server with ease.

In this guide, you will learn how to deploy your own Tabby instance and use it for development directly from the Hugging Face website.

## Your first Tabby Space

In this section, you will learn how to deploy a Tabby Space and use it for yourself or your orgnization.

### Deploy Tabby on Spaces

You can deploy Tabby on Spaces with just a few clicks:

[](https://huggingface.co/spaces/TabbyML/tabby-template-space?duplicate=true)

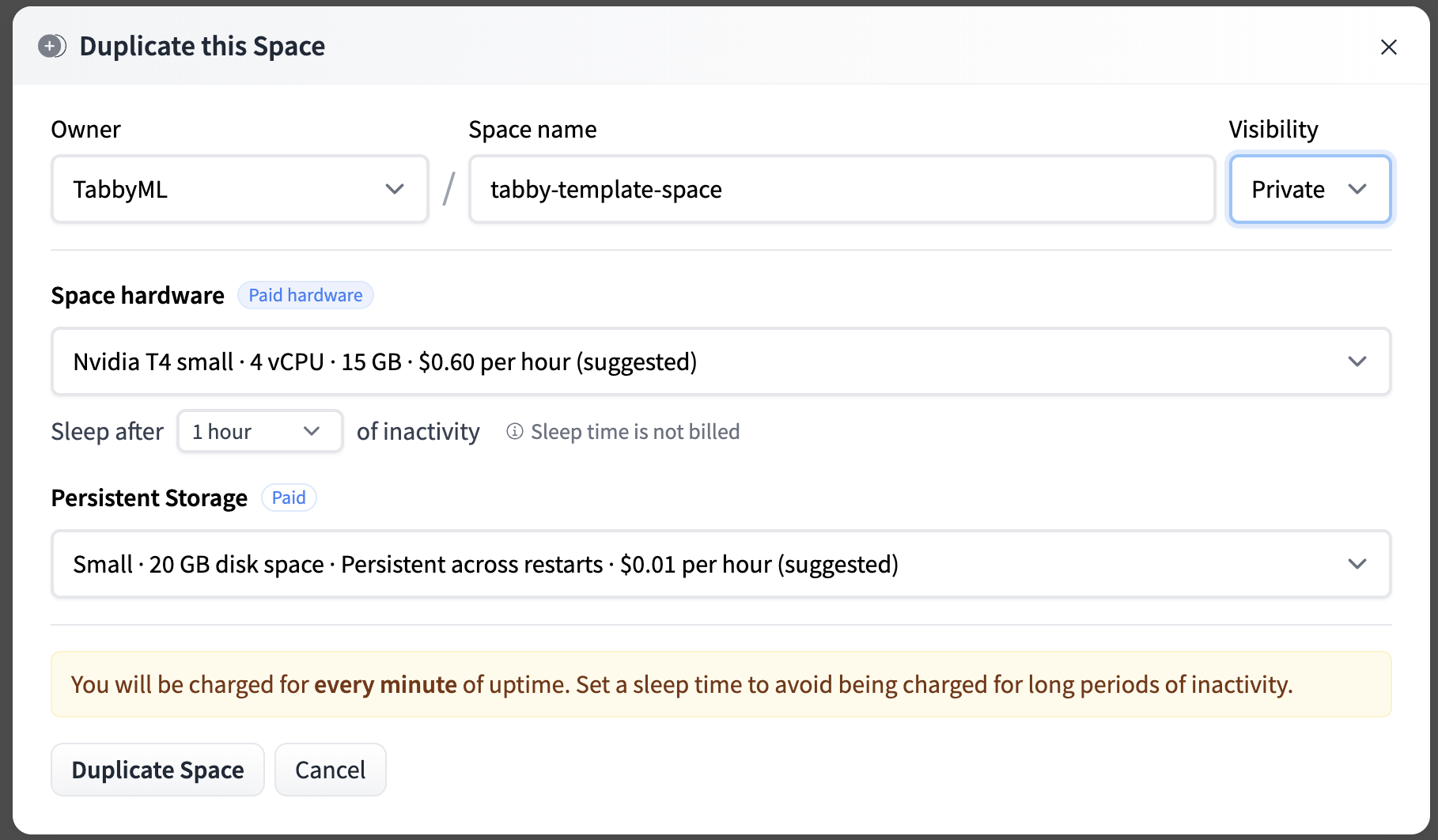

You need to define the Owner (your personal account or an organization), a Space name, and the Visibility. To secure the api endpoint, we're configuring the visibility as Private.

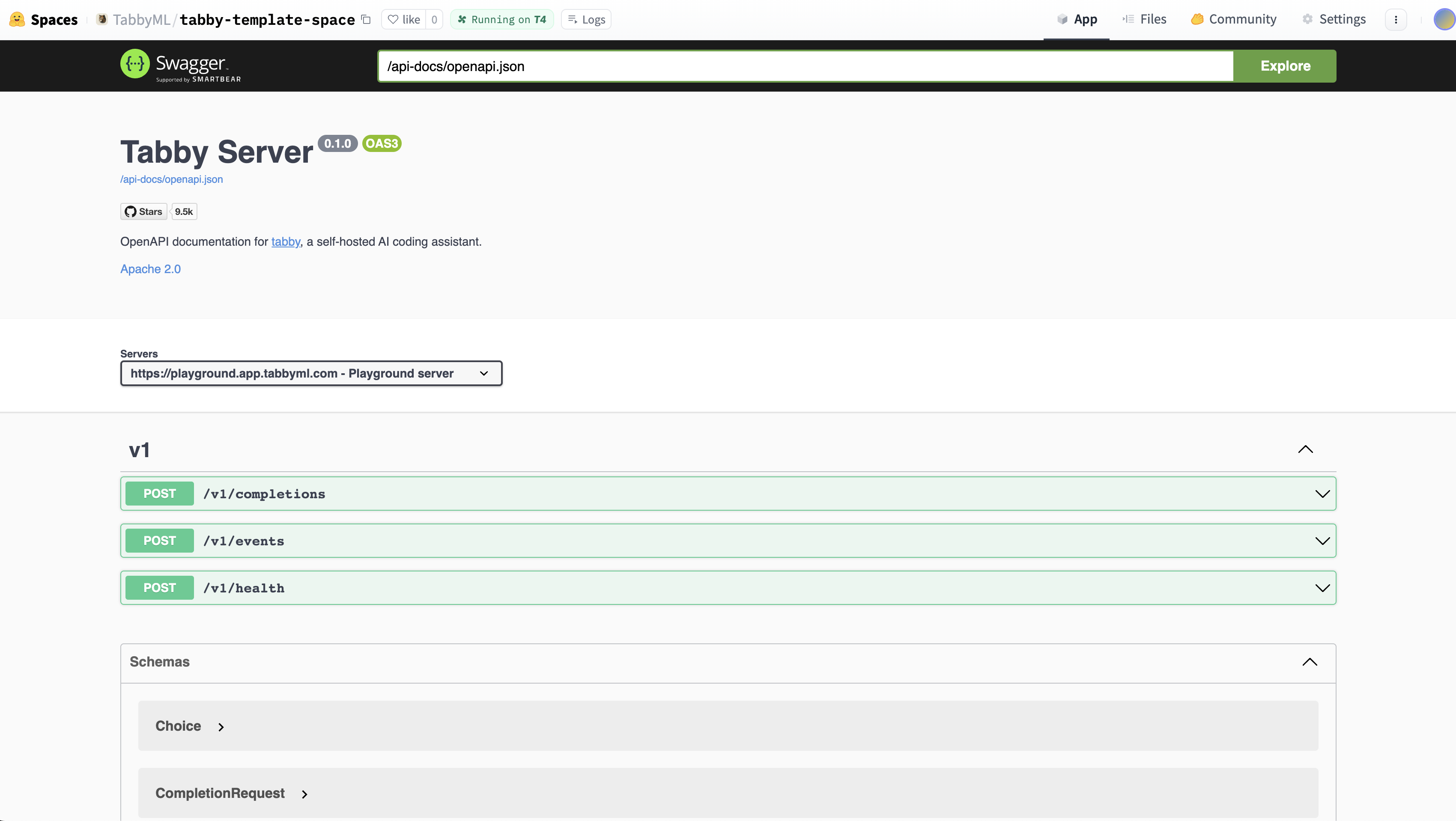

You’ll see the *Building status*. Once it becomes *Running*, your Space is ready to go. If you don’t see the Tabby Swagger UI, try refreshing the page.

<Tip>

If you want to customize the title, emojis, and colors of your space, go to "Files and Versions" and edit the metadata of your README.md file.

</Tip>

### Your Tabby Space URL

Once Tabby is up and running, for a space link such as https://huggingface.com/spaces/TabbyML/tabby, the direct URL will be https://tabbyml-tabby.hf.space.

This URL provides access to a stable Tabby instance in full-screen mode and serves as the API endpoint for IDE/Editor Extensions to talk with.

### Connect VSCode Extension to Space backend

1. Install the [VSCode Extension](https://marketplace.visualstudio.com/items?itemName=TabbyML.vscode-tabby).

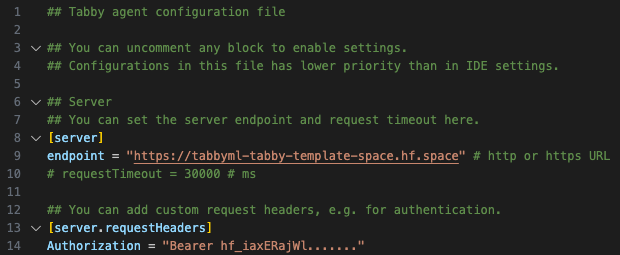

2. Open the file located at `~/.tabby-client/agent/config.toml`. Uncomment both the `[server]` section and the `[server.requestHeaders]` section.

* Set the endpoint to the Direct URL you found in the previous step, which should look something like `https://UserName-SpaceName.hf.space`.

* As the Space is set to **Private**, it is essential to configure the authorization header for accessing the endpoint. You can obtain a token from the [Access Tokens](https://huggingface.co/settings/tokens) page.

3. You'll notice a ✓ icon indicating a successful connection.

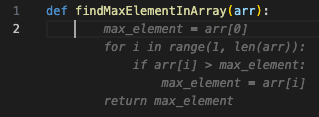

4. You've complete the setup, now enjoy tabing!

You can also utilize Tabby extensions in other IDEs, such as [JetBrains](https://plugins.jetbrains.com/plugin/22379-tabby).

## Feedback and support

If you have improvement suggestions or need specific support, please join [Tabby Slack community](https://join.slack.com/t/tabbycommunity/shared_invite/zt-1xeiddizp-bciR2RtFTaJ37RBxr8VxpA) or reach out on [Tabby’s GitHub repository](https://github.com/TabbyML/tabby).

| huggingface/hub-docs/blob/main/docs/hub/spaces-sdks-docker-tabby.md |

!--Copyright 2021 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# BigBirdPegasus

## Overview

The BigBird model was proposed in [Big Bird: Transformers for Longer Sequences](https://arxiv.org/abs/2007.14062) by

Zaheer, Manzil and Guruganesh, Guru and Dubey, Kumar Avinava and Ainslie, Joshua and Alberti, Chris and Ontanon,

Santiago and Pham, Philip and Ravula, Anirudh and Wang, Qifan and Yang, Li and others. BigBird, is a sparse-attention

based transformer which extends Transformer based models, such as BERT to much longer sequences. In addition to sparse

attention, BigBird also applies global attention as well as random attention to the input sequence. Theoretically, it

has been shown that applying sparse, global, and random attention approximates full attention, while being

computationally much more efficient for longer sequences. As a consequence of the capability to handle longer context,

BigBird has shown improved performance on various long document NLP tasks, such as question answering and

summarization, compared to BERT or RoBERTa.

The abstract from the paper is the following:

*Transformers-based models, such as BERT, have been one of the most successful deep learning models for NLP.

Unfortunately, one of their core limitations is the quadratic dependency (mainly in terms of memory) on the sequence

length due to their full attention mechanism. To remedy this, we propose, BigBird, a sparse attention mechanism that

reduces this quadratic dependency to linear. We show that BigBird is a universal approximator of sequence functions and

is Turing complete, thereby preserving these properties of the quadratic, full attention model. Along the way, our

theoretical analysis reveals some of the benefits of having O(1) global tokens (such as CLS), that attend to the entire

sequence as part of the sparse attention mechanism. The proposed sparse attention can handle sequences of length up to

8x of what was previously possible using similar hardware. As a consequence of the capability to handle longer context,

BigBird drastically improves performance on various NLP tasks such as question answering and summarization. We also

propose novel applications to genomics data.*

The original code can be found [here](https://github.com/google-research/bigbird).

## Usage tips

- For an in-detail explanation on how BigBird's attention works, see [this blog post](https://huggingface.co/blog/big-bird).

- BigBird comes with 2 implementations: **original_full** & **block_sparse**. For the sequence length < 1024, using

**original_full** is advised as there is no benefit in using **block_sparse** attention.

- The code currently uses window size of 3 blocks and 2 global blocks.

- Sequence length must be divisible by block size.

- Current implementation supports only **ITC**.

- Current implementation doesn't support **num_random_blocks = 0**.

- BigBirdPegasus uses the [PegasusTokenizer](https://github.com/huggingface/transformers/blob/main/src/transformers/models/pegasus/tokenization_pegasus.py).

- BigBird is a model with absolute position embeddings so it's usually advised to pad the inputs on the right rather than

the left.

## Resources

- [Text classification task guide](../tasks/sequence_classification)

- [Question answering task guide](../tasks/question_answering)

- [Causal language modeling task guide](../tasks/language_modeling)

- [Translation task guide](../tasks/translation)

- [Summarization task guide](../tasks/summarization)

## BigBirdPegasusConfig

[[autodoc]] BigBirdPegasusConfig

- all

## BigBirdPegasusModel

[[autodoc]] BigBirdPegasusModel

- forward

## BigBirdPegasusForConditionalGeneration

[[autodoc]] BigBirdPegasusForConditionalGeneration

- forward

## BigBirdPegasusForSequenceClassification

[[autodoc]] BigBirdPegasusForSequenceClassification

- forward

## BigBirdPegasusForQuestionAnswering

[[autodoc]] BigBirdPegasusForQuestionAnswering

- forward

## BigBirdPegasusForCausalLM

[[autodoc]] BigBirdPegasusForCausalLM

- forward

| huggingface/transformers/blob/main/docs/source/en/model_doc/bigbird_pegasus.md |

`@gradio/uploadbutton`

```html

<script>

import { BaseUploadButton } from "@gradio/uploadbutton";

</script>

```

BaseUploadButton

```javascript

export let elem_id = "";

export let elem_classes: string[] = [];

export let visible = true;

export let label: string;

export let value: null | FileData | FileData[];

export let file_count: string;

export let file_types: string[] = [];

export let root: string;

export let size: "sm" | "lg" = "lg";

export let scale: number | null = null;

export let min_width: number | undefined = undefined;

export let variant: "primary" | "secondary" | "stop" = "secondary";

export let disabled = false;

``` | gradio-app/gradio/blob/main/js/uploadbutton/README.md |

Metric Card for SuperGLUE

## Metric description

This metric is used to compute the SuperGLUE evaluation metric associated to each of the subsets of the [SuperGLUE dataset](https://huggingface.co/datasets/super_glue).

SuperGLUE is a new benchmark styled after GLUE with a new set of more difficult language understanding tasks, improved resources, and a new public leaderboard.

## How to use

There are two steps: (1) loading the SuperGLUE metric relevant to the subset of the dataset being used for evaluation; and (2) calculating the metric.

1. **Loading the relevant SuperGLUE metric** : the subsets of SuperGLUE are the following: `boolq`, `cb`, `copa`, `multirc`, `record`, `rte`, `wic`, `wsc`, `wsc.fixed`, `axb`, `axg`.

More information about the different subsets of the SuperGLUE dataset can be found on the [SuperGLUE dataset page](https://huggingface.co/datasets/super_glue) and on the [official dataset website](https://super.gluebenchmark.com/).

2. **Calculating the metric**: the metric takes two inputs : one list with the predictions of the model to score and one list of reference labels. The structure of both inputs depends on the SuperGlUE subset being used:

Format of `predictions`:

- for `record`: list of question-answer dictionaries with the following keys:

- `idx`: index of the question as specified by the dataset

- `prediction_text`: the predicted answer text

- for `multirc`: list of question-answer dictionaries with the following keys:

- `idx`: index of the question-answer pair as specified by the dataset

- `prediction`: the predicted answer label

- otherwise: list of predicted labels

Format of `references`:

- for `record`: list of question-answers dictionaries with the following keys:

- `idx`: index of the question as specified by the dataset

- `answers`: list of possible answers

- otherwise: list of reference labels

```python

from datasets import load_metric

super_glue_metric = load_metric('super_glue', 'copa')

predictions = [0, 1]

references = [0, 1]

results = super_glue_metric.compute(predictions=predictions, references=references)

```

## Output values

The output of the metric depends on the SuperGLUE subset chosen, consisting of a dictionary that contains one or several of the following metrics:

`exact_match`: A given predicted string's exact match score is 1 if it is the exact same as its reference string, and is 0 otherwise. (See [Exact Match](https://huggingface.co/metrics/exact_match) for more information).

`f1`: the harmonic mean of the precision and recall (see [F1 score](https://huggingface.co/metrics/f1) for more information). Its range is 0-1 -- its lowest possible value is 0, if either the precision or the recall is 0, and its highest possible value is 1.0, which means perfect precision and recall.

`matthews_correlation`: a measure of the quality of binary and multiclass classifications (see [Matthews Correlation](https://huggingface.co/metrics/matthews_correlation) for more information). Its range of values is between -1 and +1, where a coefficient of +1 represents a perfect prediction, 0 an average random prediction and -1 an inverse prediction.

### Values from popular papers

The [original SuperGLUE paper](https://arxiv.org/pdf/1905.00537.pdf) reported average scores ranging from 47 to 71.5%, depending on the model used (with all evaluation values scaled by 100 to make computing the average possible).

For more recent model performance, see the [dataset leaderboard](https://super.gluebenchmark.com/leaderboard).

## Examples

Maximal values for the COPA subset (which outputs `accuracy`):

```python

from datasets import load_metric

super_glue_metric = load_metric('super_glue', 'copa') # any of ["copa", "rte", "wic", "wsc", "wsc.fixed", "boolq", "axg"]

predictions = [0, 1]

references = [0, 1]

results = super_glue_metric.compute(predictions=predictions, references=references)

print(results)

{'accuracy': 1.0}

```

Minimal values for the MultiRC subset (which outputs `pearson` and `spearmanr`):

```python

from datasets import load_metric

super_glue_metric = load_metric('super_glue', 'multirc')

predictions = [{'idx': {'answer': 0, 'paragraph': 0, 'question': 0}, 'prediction': 0}, {'idx': {'answer': 1, 'paragraph': 2, 'question': 3}, 'prediction': 1}]

references = [1,0]

results = super_glue_metric.compute(predictions=predictions, references=references)

print(results)

{'exact_match': 0.0, 'f1_m': 0.0, 'f1_a': 0.0}

```

Partial match for the COLA subset (which outputs `matthews_correlation`)

```python

from datasets import load_metric

super_glue_metric = load_metric('super_glue', 'axb')

references = [0, 1]

predictions = [1,1]

results = super_glue_metric.compute(predictions=predictions, references=references)

print(results)

{'matthews_correlation': 0.0}

```

## Limitations and bias

This metric works only with datasets that have the same format as the [SuperGLUE dataset](https://huggingface.co/datasets/super_glue).

The dataset also includes Winogender, a subset of the dataset that is designed to measure gender bias in coreference resolution systems. However, as noted in the SuperGLUE paper, this subset has its limitations: *"It offers only positive predictive value: A poor bias score is clear evidence that a model exhibits gender bias, but a good score does not mean that the model is unbiased.[...] Also, Winogender does not cover all forms of social bias, or even all forms of gender. For instance, the version of the data used here offers no coverage of gender-neutral they or non-binary pronouns."

## Citation

```bibtex

@article{wang2019superglue,

title={Super{GLUE}: A Stickier Benchmark for General-Purpose Language Understanding Systems},

author={Wang, Alex and Pruksachatkun, Yada and Nangia, Nikita and Singh, Amanpreet and Michael, Julian and Hill, Felix and Levy, Omer and Bowman, Samuel R},

journal={arXiv preprint arXiv:1905.00537},

year={2019}

}

```

## Further References

- [SuperGLUE benchmark homepage](https://super.gluebenchmark.com/)

| huggingface/datasets/blob/main/metrics/super_glue/README.md |

--

title: "Introducing Decision Transformers on Hugging Face 🤗"

thumbnail: /blog/assets/58_decision-transformers/thumbnail.jpg

authors:

- user: edbeeching

- user: ThomasSimonini

---

# Introducing Decision Transformers on Hugging Face 🤗

At Hugging Face, we are contributing to the ecosystem for Deep Reinforcement Learning researchers and enthusiasts. Recently, we have integrated Deep RL frameworks such as [Stable-Baselines3](https://github.com/DLR-RM/stable-baselines3).

And today we are happy to announce that we integrated the [Decision Transformer](https://arxiv.org/abs/2106.01345), an Offline Reinforcement Learning method, into the 🤗 transformers library and the Hugging Face Hub. We have some exciting plans for improving accessibility in the field of Deep RL and we are looking forward to sharing them with you over the coming weeks and months.

- [What is Offline Reinforcement Learning?](#what-is-offline-reinforcement-learning?)

- [Introducing Decision Transformers](#introducing-decision-transformers)

- [Using the Decision Transformer in 🤗 Transformers](#using-the-decision-transformer-in--transformers)

- [Conclusion](#conclusion)

- [What's next?](#whats-next)

- [References](#references)

## What is Offline Reinforcement Learning?

Deep Reinforcement Learning (RL) is a framework to build decision-making agents. These agents aim to learn optimal behavior (policy) by interacting with the environment through trial and error and receiving rewards as unique feedback.

The agent’s goal is to maximize **its cumulative reward, called return.** Because RL is based on the reward hypothesis: **all goals can be described as the maximization of the expected cumulative reward.**

Deep Reinforcement Learning agents **learn with batches of experience.** The question is, how do they collect it?:

*A comparison between Reinforcement Learning in an Online and Offline setting, figure taken from [this post](https://offline-rl.github.io/)*

In online reinforcement learning, **the agent gathers data directly**: it collects a batch of experience by interacting with the environment. Then, it uses this experience immediately (or via some replay buffer) to learn from it (update its policy).

But this implies that either you train your agent directly in the real world or have a simulator. If you don’t have one, you need to build it, which can be very complex (how to reflect the complex reality of the real world in an environment?), expensive, and insecure since if the simulator has flaws, the agent will exploit them if they provide a competitive advantage.

On the other hand, in offline reinforcement learning, the agent only uses data collected from other agents or human demonstrations. **It does not interact with the environment**.

The process is as follows:

1. Create a dataset using one or more policies and/or human interactions.

2. Run offline RL on this dataset to learn a policy

This method has one drawback: the counterfactual queries problem. What do we do if our agent decides to do something for which we don’t have the data? For instance, turning right on an intersection but we don’t have this trajectory.

There’s already exists some solutions on this topic, but if you want to know more about offline reinforcement learning you can watch [this video](https://www.youtube.com/watch?v=k08N5a0gG0A)

## Introducing Decision Transformers

The Decision Transformer model was introduced by [“Decision Transformer: Reinforcement Learning via Sequence Modeling” by Chen L. et al](https://arxiv.org/abs/2106.01345). It abstracts Reinforcement Learning as a **conditional-sequence modeling problem**.

The main idea is that instead of training a policy using RL methods, such as fitting a value function, that will tell us what action to take to maximize the return (cumulative reward), we use a sequence modeling algorithm (Transformer) that, given a desired return, past states, and actions, will generate future actions to achieve this desired return. It’s an autoregressive model conditioned on the desired return, past states, and actions to generate future actions that achieve the desired return.

This is a complete shift in the Reinforcement Learning paradigm since we use generative trajectory modeling (modeling the joint distribution of the sequence of states, actions, and rewards) to replace conventional RL algorithms. It means that in Decision Transformers, we don’t maximize the return but rather generate a series of future actions that achieve the desired return.

The process goes this way:

1. We feed the last K timesteps into the Decision Transformer with 3 inputs:

- Return-to-go

- State

- Action

2. The tokens are embedded either with a linear layer if the state is a vector or CNN encoder if it’s frames.

3. The inputs are processed by a GPT-2 model which predicts future actions via autoregressive modeling.

*Decision Transformer architecture. States, actions, and returns are fed into modality specific linear embeddings and a positional episodic timestep encoding is added. Tokens are fed into a GPT architecture which predicts actions autoregressively using a causal self-attention mask. Figure from [1].*

## Using the Decision Transformer in 🤗 Transformers

The Decision Transformer model is now available as part of the 🤗 transformers library. In addition, we share [nine pre-trained model checkpoints for continuous control tasks in the Gym environment](https://huggingface.co/models?other=gym-continous-control).

<figure class="image table text-center m-0 w-full">

<video

alt="WalkerEd-expert"

style="max-width: 70%; margin: auto;"

autoplay loop autobuffer muted playsinline

>

<source src="assets/58_decision-transformers/walker2d-expert.mp4" type="video/mp4">

</video>

</figure>

*An “expert” Decision Transformers model, learned using offline RL in the Gym Walker2d environment.*

### Install the package

`````python

pip install git+https://github.com/huggingface/transformers

`````

### Loading the model

Using the Decision Transformer is relatively easy, but as it is an autoregressive model, some care has to be taken in order to prepare the model’s inputs at each time-step. We have prepared both a [Python script](https://github.com/huggingface/transformers/blob/main/examples/research_projects/decision_transformer/run_decision_transformer.py) and a [Colab notebook](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing) that demonstrates how to use this model.

Loading a pretrained Decision Transformer is simple in the 🤗 transformers library:

`````python

from transformers import DecisionTransformerModel

model_name = "edbeeching/decision-transformer-gym-hopper-expert"

model = DecisionTransformerModel.from_pretrained(model_name)

``````

### Creating the environment

We provide pretrained checkpoints for the Gym Hopper, Walker2D and Halfcheetah. Checkpoints for Atari environments will soon be available.

`````python

import gym

env = gym.make("Hopper-v3")

state_dim = env.observation_space.shape[0] # state size

act_dim = env.action_space.shape[0] # action size

``````

### Autoregressive prediction function

The model performs an [autoregressive prediction](https://en.wikipedia.org/wiki/Autoregressive_model); that is to say that predictions made at the current time-step **t** are sequentially conditioned on the outputs from previous time-steps. This function is quite meaty, so we will aim to explain it in the comments.

`````python

# Function that gets an action from the model using autoregressive prediction

# with a window of the previous 20 timesteps.

def get_action(model, states, actions, rewards, returns_to_go, timesteps):

# This implementation does not condition on past rewards

states = states.reshape(1, -1, model.config.state_dim)

actions = actions.reshape(1, -1, model.config.act_dim)

returns_to_go = returns_to_go.reshape(1, -1, 1)

timesteps = timesteps.reshape(1, -1)

# The prediction is conditioned on up to 20 previous time-steps

states = states[:, -model.config.max_length :]

actions = actions[:, -model.config.max_length :]

returns_to_go = returns_to_go[:, -model.config.max_length :]

timesteps = timesteps[:, -model.config.max_length :]

# pad all tokens to sequence length, this is required if we process batches

padding = model.config.max_length - states.shape[1]

attention_mask = torch.cat([torch.zeros(padding), torch.ones(states.shape[1])])

attention_mask = attention_mask.to(dtype=torch.long).reshape(1, -1)

states = torch.cat([torch.zeros((1, padding, state_dim)), states], dim=1).float()

actions = torch.cat([torch.zeros((1, padding, act_dim)), actions], dim=1).float()

returns_to_go = torch.cat([torch.zeros((1, padding, 1)), returns_to_go], dim=1).float()

timesteps = torch.cat([torch.zeros((1, padding), dtype=torch.long), timesteps], dim=1)

# perform the prediction

state_preds, action_preds, return_preds = model(

states=states,

actions=actions,

rewards=rewards,

returns_to_go=returns_to_go,

timesteps=timesteps,

attention_mask=attention_mask,

return_dict=False,)

return action_preds[0, -1]

``````

### Evaluating the model

In order to evaluate the model, we need some additional information; the mean and standard deviation of the states that were used during training. Fortunately, these are available for each of the checkpoint’s [model card](https://huggingface.co/edbeeching/decision-transformer-gym-hopper-expert) on the Hugging Face Hub!

We also need a target return for the model. This is the power of return conditioned Offline Reinforcement Learning: we can use the target return to control the performance of the policy. This could be really powerful in a multiplayer setting, where we would like to adjust the performance of an opponent bot to be at a suitable difficulty for the player. The authors show a great plot of this in their paper!

*Sampled (evaluation) returns accumulated by Decision Transformer when conditioned on

the specified target (desired) returns. Top: Atari. Bottom: D4RL medium-replay datasets. Figure from [1].*

``````python

TARGET_RETURN = 3.6 # This was normalized during training

MAX_EPISODE_LENGTH = 1000

state_mean = np.array(

[1.3490015, -0.11208222, -0.5506444, -0.13188992, -0.00378754, 2.6071432,

0.02322114, -0.01626922, -0.06840388, -0.05183131, 0.04272673,])

state_std = np.array(

[0.15980862, 0.0446214, 0.14307782, 0.17629202, 0.5912333, 0.5899924,

1.5405099, 0.8152689, 2.0173461, 2.4107876, 5.8440027,])

state_mean = torch.from_numpy(state_mean)

state_std = torch.from_numpy(state_std)

state = env.reset()

target_return = torch.tensor(TARGET_RETURN).float().reshape(1, 1)

states = torch.from_numpy(state).reshape(1, state_dim).float()

actions = torch.zeros((0, act_dim)).float()

rewards = torch.zeros(0).float()

timesteps = torch.tensor(0).reshape(1, 1).long()

# take steps in the environment

for t in range(max_ep_len):

# add zeros for actions as input for the current time-step

actions = torch.cat([actions, torch.zeros((1, act_dim))], dim=0)

rewards = torch.cat([rewards, torch.zeros(1)])

# predicting the action to take

action = get_action(model,

(states - state_mean) / state_std,

actions,

rewards,

target_return,

timesteps)

actions[-1] = action

action = action.detach().numpy()

# interact with the environment based on this action

state, reward, done, _ = env.step(action)

cur_state = torch.from_numpy(state).reshape(1, state_dim)

states = torch.cat([states, cur_state], dim=0)

rewards[-1] = reward

pred_return = target_return[0, -1] - (reward / scale)

target_return = torch.cat([target_return, pred_return.reshape(1, 1)], dim=1)

timesteps = torch.cat([timesteps, torch.ones((1, 1)).long() * (t + 1)], dim=1)

if done:

break

``````

You will find a more detailed example, with the creation of videos of the agent in our [Colab notebook](https://colab.research.google.com/drive/1K3UuajwoPY1MzRKNkONNRS3gS5DxZ-qF?usp=sharing).

## Conclusion

In addition to Decision Transformers, we want to support more use cases and tools from the Deep Reinforcement Learning community. Therefore, it would be great to hear your feedback on the Decision Transformer model, and more generally anything we can build with you that would be useful for RL. Feel free to **[reach out to us](mailto:thomas.simonini@huggingface.co)**.

## What’s next?

In the coming weeks and months, we plan on supporting other tools from the ecosystem:

- Integrating **[RL-baselines3-zoo](https://github.com/DLR-RM/rl-baselines3-zoo)**

- Uploading **[RL-trained-agents models](https://github.com/DLR-RM/rl-trained-agents)** into the Hub: a big collection of pre-trained Reinforcement Learning agents using stable-baselines3

- Integrating other Deep Reinforcement Learning libraries

- Implementing Convolutional Decision Transformers For Atari

- And more to come 🥳

The best way to keep in touch is to **[join our discord server](https://discord.gg/YRAq8fMnUG)** to exchange with us and with the community.

## References

[1] Chen, Lili, et al. "Decision transformer: Reinforcement learning via sequence modeling." *Advances in neural information processing systems* 34 (2021).

[2] Agarwal, Rishabh, Dale Schuurmans, and Mohammad Norouzi. "An optimistic perspective on offline reinforcement learning." *International Conference on Machine Learning*. PMLR, 2020.

### Acknowledgements

We would like to thank the paper’s first authors, Kevin Lu and Lili Chen, for their constructive conversations.

| huggingface/blog/blob/main/decision-transformers.md |

Gradio Demo: translation

### This translation demo takes in the text, source and target languages, and returns the translation. It uses the Transformers library to set up the model and has a title, description, and example.

```

!pip install -q gradio git+https://github.com/huggingface/transformers gradio torch

```

```

import gradio as gr

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM, pipeline

import torch

# this model was loaded from https://hf.co/models

model = AutoModelForSeq2SeqLM.from_pretrained("facebook/nllb-200-distilled-600M")

tokenizer = AutoTokenizer.from_pretrained("facebook/nllb-200-distilled-600M")

device = 0 if torch.cuda.is_available() else -1

LANGS = ["ace_Arab", "eng_Latn", "fra_Latn", "spa_Latn"]

def translate(text, src_lang, tgt_lang):

"""

Translate the text from source lang to target lang

"""

translation_pipeline = pipeline("translation", model=model, tokenizer=tokenizer, src_lang=src_lang, tgt_lang=tgt_lang, max_length=400, device=device)

result = translation_pipeline(text)

return result[0]['translation_text']

demo = gr.Interface(

fn=translate,

inputs=[

gr.components.Textbox(label="Text"),

gr.components.Dropdown(label="Source Language", choices=LANGS),

gr.components.Dropdown(label="Target Language", choices=LANGS),

],

outputs=["text"],

examples=[["Building a translation demo with Gradio is so easy!", "eng_Latn", "spa_Latn"]],

cache_examples=False,

title="Translation Demo",

description="This demo is a simplified version of the original [NLLB-Translator](https://huggingface.co/spaces/Narrativaai/NLLB-Translator) space"

)

demo.launch()

```

| gradio-app/gradio/blob/main/demo/translation/run.ipynb |

!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Distributed inference with multiple GPUs

On distributed setups, you can run inference across multiple GPUs with 🤗 [Accelerate](https://huggingface.co/docs/accelerate/index) or [PyTorch Distributed](https://pytorch.org/tutorials/beginner/dist_overview.html), which is useful for generating with multiple prompts in parallel.

This guide will show you how to use 🤗 Accelerate and PyTorch Distributed for distributed inference.

## 🤗 Accelerate

🤗 [Accelerate](https://huggingface.co/docs/accelerate/index) is a library designed to make it easy to train or run inference across distributed setups. It simplifies the process of setting up the distributed environment, allowing you to focus on your PyTorch code.

To begin, create a Python file and initialize an [`accelerate.PartialState`] to create a distributed environment; your setup is automatically detected so you don't need to explicitly define the `rank` or `world_size`. Move the [`DiffusionPipeline`] to `distributed_state.device` to assign a GPU to each process.

Now use the [`~accelerate.PartialState.split_between_processes`] utility as a context manager to automatically distribute the prompts between the number of processes.

```py

import torch

from accelerate import PartialState

from diffusers import DiffusionPipeline

pipeline = DiffusionPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True

)

distributed_state = PartialState()

pipeline.to(distributed_state.device)

with distributed_state.split_between_processes(["a dog", "a cat"]) as prompt:

result = pipeline(prompt).images[0]

result.save(f"result_{distributed_state.process_index}.png")

```

Use the `--num_processes` argument to specify the number of GPUs to use, and call `accelerate launch` to run the script:

```bash

accelerate launch run_distributed.py --num_processes=2

```

<Tip>

To learn more, take a look at the [Distributed Inference with 🤗 Accelerate](https://huggingface.co/docs/accelerate/en/usage_guides/distributed_inference#distributed-inference-with-accelerate) guide.

</Tip>

## PyTorch Distributed

PyTorch supports [`DistributedDataParallel`](https://pytorch.org/docs/stable/generated/torch.nn.parallel.DistributedDataParallel.html) which enables data parallelism.

To start, create a Python file and import `torch.distributed` and `torch.multiprocessing` to set up the distributed process group and to spawn the processes for inference on each GPU. You should also initialize a [`DiffusionPipeline`]:

```py

import torch

import torch.distributed as dist

import torch.multiprocessing as mp

from diffusers import DiffusionPipeline

sd = DiffusionPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True

)

```

You'll want to create a function to run inference; [`init_process_group`](https://pytorch.org/docs/stable/distributed.html?highlight=init_process_group#torch.distributed.init_process_group) handles creating a distributed environment with the type of backend to use, the `rank` of the current process, and the `world_size` or the number of processes participating. If you're running inference in parallel over 2 GPUs, then the `world_size` is 2.

Move the [`DiffusionPipeline`] to `rank` and use `get_rank` to assign a GPU to each process, where each process handles a different prompt:

```py

def run_inference(rank, world_size):

dist.init_process_group("nccl", rank=rank, world_size=world_size)

sd.to(rank)

if torch.distributed.get_rank() == 0:

prompt = "a dog"

elif torch.distributed.get_rank() == 1:

prompt = "a cat"

image = sd(prompt).images[0]

image.save(f"./{'_'.join(prompt)}.png")

```

To run the distributed inference, call [`mp.spawn`](https://pytorch.org/docs/stable/multiprocessing.html#torch.multiprocessing.spawn) to run the `run_inference` function on the number of GPUs defined in `world_size`:

```py

def main():

world_size = 2

mp.spawn(run_inference, args=(world_size,), nprocs=world_size, join=True)

if __name__ == "__main__":

main()

```

Once you've completed the inference script, use the `--nproc_per_node` argument to specify the number of GPUs to use and call `torchrun` to run the script:

```bash

torchrun run_distributed.py --nproc_per_node=2

```

| huggingface/diffusers/blob/main/docs/source/en/training/distributed_inference.md |

T2I-Adapter training example for Stable Diffusion XL (SDXL)

The `train_t2i_adapter_sdxl.py` script shows how to implement the [T2I-Adapter training procedure](https://hf.co/papers/2302.08453) for [Stable Diffusion XL](https://huggingface.co/papers/2307.01952).

## Running locally with PyTorch

### Installing the dependencies

Before running the scripts, make sure to install the library's training dependencies:

**Important**

To make sure you can successfully run the latest versions of the example scripts, we highly recommend **installing from source** and keeping the install up to date as we update the example scripts frequently and install some example-specific requirements. To do this, execute the following steps in a new virtual environment:

```bash

git clone https://github.com/huggingface/diffusers

cd diffusers

pip install -e .

```

Then cd in the `examples/t2i_adapter` folder and run

```bash

pip install -r requirements.txt

```

And initialize an [🤗Accelerate](https://github.com/huggingface/accelerate/) environment with:

```bash

accelerate config

```