init dataset

- .gitattributes +1 -1
- README.md +29 -0
- assets/dataset.png +0 -0
- rendering/render_albedo.py +212 -0
- rendering/render_color.py +279 -0
- rendering/render_normal_orm.py +228 -0

.gitattributes

CHANGED

@@ -48,7 +48,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 # Image files - uncompressed
 *.bmp filter=lfs diff=lfs merge=lfs -text
 *.gif filter=lfs diff=lfs merge=lfs -text
-*.png filter=lfs diff=lfs merge=lfs -text
+# *.png filter=lfs diff=lfs merge=lfs -text
 *.tiff filter=lfs diff=lfs merge=lfs -text
 # Image files - compressed
 *.jpg filter=lfs diff=lfs merge=lfs -text
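Since a leading `#` turns a gitattributes line into a comment, this change stops routing `*.png` files through Git LFS, so PNGs such as `assets/dataset.png` are committed as ordinary Git blobs. The sketch below is only a rough approximation for checking which paths the excerpt above would send to LFS: it uses Python's `fnmatch` as a stand-in for Git's own matcher and assumes slash-free patterns match the file's basename, as gitattributes does. For an authoritative answer, `git check-attr filter -- <path>` asks Git directly. The helper names `lfs_patterns` and `goes_to_lfs` are hypothetical.

```python
import fnmatch
import posixpath

# Excerpt of the LFS rules around the change, as they read after this commit.
GITATTRIBUTES = """\
# Image files - uncompressed
*.bmp filter=lfs diff=lfs merge=lfs -text
*.gif filter=lfs diff=lfs merge=lfs -text
# *.png filter=lfs diff=lfs merge=lfs -text
*.tiff filter=lfs diff=lfs merge=lfs -text
# Image files - compressed
*.jpg filter=lfs diff=lfs merge=lfs -text
"""


def lfs_patterns(text):
    """Return the patterns whose attributes include filter=lfs (comments skipped)."""
    patterns = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # a leading '#' disables the whole rule
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            patterns.append(pattern)
    return patterns


def goes_to_lfs(path, patterns):
    """Approximate gitattributes matching with fnmatch (hypothetical helper)."""
    for pattern in patterns:
        # Slash-free patterns are matched against the basename only.
        target = path if "/" in pattern else posixpath.basename(path)
        if fnmatch.fnmatchcase(target, pattern):
            return True
    return False


patterns = lfs_patterns(GITATTRIBUTES)
print(goes_to_lfs("assets/dataset.png", patterns))  # False after this commit
print(goes_to_lfs("preview/sample.jpg", patterns))  # True
```

Note that this only covers the seven lines shown in the hunk; other rules elsewhere in the file could still match a given path.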

README.md

CHANGED

|

@@ -1,3 +1,32 @@

|

|

| 1 |

---

|

| 2 |

license: mit

|

| 3 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

license: mit

|

| 3 |

---

|

| 4 |

+

|

| 5 |

+

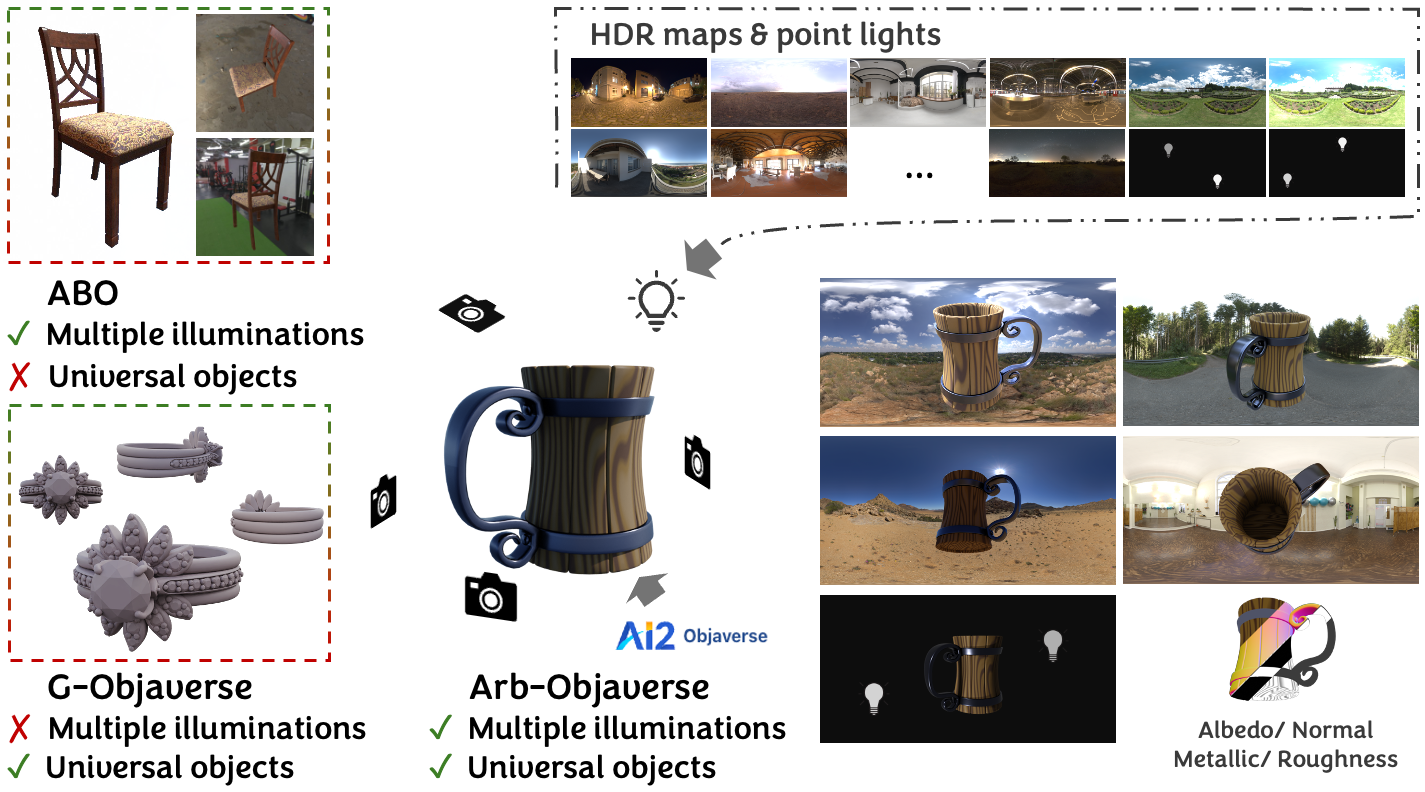

# Arb-Objaverse Dataset

|

| 6 |

+

|

| 7 |

+

[Project page](https://lizb6626.github.io/IDArb/) | [Paper](https://lizb6626.github.io/IDArb/) | [Code](https://github.com/Lizb6626/IDArb)

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

## News

|

| 13 |

+

|

| 14 |

+

- [2024-12] We are in the process of uploading the complete dataset. Stay tuned!

|

| 15 |

+

|

| 16 |

+

## Dataset Format

|

| 17 |

+

|

| 18 |

+

```

|

| 19 |

+

Arb-Objaverse Dataset

|

| 20 |

+

├── 000-000

|

| 21 |

+

│ ├── 0a3dd21606a84a449bb22f597c34bab7 // object uid

|

| 22 |

+

│ │ ├── albedo_<view_idx>.png // albedo image

|

| 23 |

+

│ │ ├── color_<light_idx>_<view_idx>.png // rendered rgb image

|

| 24 |

+

│ │ ├── normal_<view_idx>.exr

|

| 25 |

+

│ │ ├── orm_<view_idx>.png // we store material as (o, roughness, metallic), where o is left unused

|

| 26 |

+

│ │ ├── camera.json // camera poses

|

| 27 |

+

│ │ ├── lighting.json // rendering light

|

| 28 |

+

```

|

| 29 |

+

|

| 30 |

+

## Rendering Scripts

|

| 31 |

+

|

| 32 |

+

Rendering scripts for generating this dataset are available in the `./rendering` directory. We utilize `blenderproc==2.5.0` for Blender rendering. Environmental HDRs are sourced from the [Haven Dataset](https://dlr-rm.github.io/BlenderProc/examples/datasets/haven/README.html).

|
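Going by the layout above and the filename patterns in the rendering scripts (`render_albedo.py` writes `albedo_{i:02d}.png` and `render_color.py` writes `color_{light_idx}_{i:02d}.png`, so view indices are zero-padded to two digits while the light index is not), one object's files could be enumerated and its ORM map split into channels roughly as follows. This is a sketch: `object_files` and `split_orm` are hypothetical helpers, the same two-digit padding is assumed for `normal_` and `orm_` files (the visible part of `render_normal_orm.py` does not show how they are named), and the 8-bit ORM values are treated as linear, which is also an assumption.

```python
import os
from typing import Dict, List, Tuple

import numpy as np


def object_files(obj_dir: str, num_views: int, light_indices: List[int]) -> Dict[str, List[str]]:
    """List the files expected for one object directory (hypothetical helper)."""
    views = [f"{i:02d}" for i in range(num_views)]  # same padding as f"{i:02d}" in the scripts
    return {
        "albedo": [os.path.join(obj_dir, f"albedo_{v}.png") for v in views],
        "normal": [os.path.join(obj_dir, f"normal_{v}.exr") for v in views],
        "orm": [os.path.join(obj_dir, f"orm_{v}.png") for v in views],
        # One image per (lighting condition, view); light indices are unpadded.
        "color": [
            os.path.join(obj_dir, f"color_{light}_{v}.png")
            for light in light_indices
            for v in views
        ],
        "meta": [os.path.join(obj_dir, "camera.json"), os.path.join(obj_dir, "lighting.json")],
    }


def split_orm(orm: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Split an (H, W, 3) or (H, W, 4) uint8 ORM image into roughness and metallic in [0, 1].

    Channel 0 ("o") is unused, channel 1 holds roughness and channel 2 metallic,
    matching the (o, roughness, metallic) layout described in the README.
    """
    orm = orm.astype(np.float32) / 255.0
    return orm[..., 1], orm[..., 2]
```

To load the images, `np.asarray(PIL.Image.open(path))` works for the PNGs; the scripts set `OPENCV_IO_ENABLE_OPENEXR=1` before importing `cv2`, which recent OpenCV builds need before they will read the `.exr` normal maps.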

assets/dataset.png

ADDED


rendering/render_albedo.py

ADDED


@@ -0,0 +1,212 @@


import blenderproc as bproc
import numpy as np
import os
os.environ["OPENCV_IO_ENABLE_OPENEXR"]="1"
import json
import bpy
import cv2
from PIL import Image
import math
from mathutils import Vector, Matrix
import argparse

parser = argparse.ArgumentParser()
parser.add_argument('--glb_path', type=str)
parser.add_argument('--output_dir', type=str, default='output')
args = parser.parse_args()

# load the glb model
def load_object(object_path: str) -> None:
    """Loads a 3D model (.glb/.gltf/.fbx/.obj/.ply) into the scene."""
    if object_path.endswith(".glb") or object_path.endswith(".gltf"):
        bpy.ops.import_scene.gltf(filepath=object_path, merge_vertices=False)
    elif object_path.endswith(".fbx"):
        bpy.ops.import_scene.fbx(filepath=object_path)
    elif object_path.endswith(".obj"):
        bpy.ops.import_scene.obj(filepath=object_path)
    elif object_path.endswith(".ply"):
        bpy.ops.import_mesh.ply(filepath=object_path)
    else:
        raise ValueError(f"Unsupported file type: {object_path}")


def load_envmap(envmap_path: str) -> None:
    """Loads an environment map into the scene."""
    world = bpy.context.scene.world
    world.use_nodes = True
    bg = world.node_tree.nodes['Background']
    envmap = world.node_tree.nodes.new('ShaderNodeTexEnvironment')
    envmap.image = bpy.data.images.load(envmap_path)
    world.node_tree.links.new(bg.inputs['Color'], envmap.outputs['Color'])


def turn_off_metallic():
    # Used for albedo rendering: a non-zero metallic value would otherwise
    # darken the diffuse color pass.
    materials = bproc.material.collect_all()
    for mat in materials:
        mat.set_principled_shader_value('Metallic', 0.0)


def camera_pose(azimuth, elevation, radius=1.5):
    """Returns a 4x4 camera-to-world pose on a sphere, looking at the origin."""
    azimuth = np.deg2rad(azimuth)
    elevation = np.deg2rad(elevation)

    x = radius * np.cos(azimuth) * np.cos(elevation)
    y = -radius * np.sin(azimuth) * np.cos(elevation)
    z = radius * np.sin(elevation)
    camera_position = np.array([x, y, z])

    # Blender cameras look along their local -Z axis, so local +Z points away from the origin.
    camera_forward = camera_position / np.linalg.norm(camera_position)
    # Undefined when elevation is exactly +/-90 degrees (cross product is zero).
    camera_right = np.cross(np.array([0.0, 0.0, 1.0]), camera_forward)
    camera_right /= np.linalg.norm(camera_right)
    camera_up = np.cross(camera_forward, camera_right)
    camera_up /= np.linalg.norm(camera_up)

    camera_pose = np.eye(4)
    camera_pose[:3, 0] = camera_right
    camera_pose[:3, 1] = camera_up
    camera_pose[:3, 2] = camera_forward
    camera_pose[:3, 3] = camera_position

    return camera_pose


def scene_root_objects():
    for obj in bpy.context.scene.objects.values():
        if not obj.parent:
            yield obj


def scene_meshes():
    for obj in bpy.context.scene.objects.values():
        if isinstance(obj.data, (bpy.types.Mesh)):
            yield obj


def scene_bbox(single_obj=None, ignore_matrix=False):
    bbox_min = (math.inf,) * 3
    bbox_max = (-math.inf,) * 3
    found = False
    for obj in scene_meshes() if single_obj is None else [single_obj]:
        found = True
        for coord in obj.bound_box:
            coord = Vector(coord)
            if not ignore_matrix:
                coord = obj.matrix_world @ coord
            bbox_min = tuple(min(x, y) for x, y in zip(bbox_min, coord))
            bbox_max = tuple(max(x, y) for x, y in zip(bbox_max, coord))
    if not found:
        raise RuntimeError("no objects in scene to compute bounding box for")
    return Vector(bbox_min), Vector(bbox_max)


def normalize_scene():
    # Scale so the longest bounding-box side is 1, then center the box at the origin.
    bbox_min, bbox_max = scene_bbox()

    scale = 1 / max(bbox_max - bbox_min)
    for obj in scene_root_objects():
        obj.scale = obj.scale * scale
    # Apply scale to matrix_world.
    bpy.context.view_layer.update()
    bbox_min, bbox_max = scene_bbox()
    offset = -(bbox_min + bbox_max) / 2
    for obj in scene_root_objects():
        obj.matrix_world.translation += offset
    bpy.ops.object.select_all(action="DESELECT")

    return scale, offset


def reset_normal():
    """Experimental: reset the normals of every mesh in the scene."""
    for obj in scene_meshes():
        bpy.context.view_layer.objects.active = obj
        bpy.ops.object.mode_set(mode='EDIT')
        bpy.ops.mesh.normals_tools(mode='RESET')
        bpy.ops.object.mode_set(mode='OBJECT')


def change_to_orm():
    # Packs metallic into B and roughness into G of the base color (R stays 0).
    materials = bproc.material.collect_all()
    for mat in materials:
        orm_color = mat.nodes.new(type='ShaderNodeCombineRGB')
        principled_bsdf = mat.get_the_one_node_with_type("BsdfPrincipled")

        # metallic -> Blue
        if principled_bsdf.inputs['Metallic'].links:
            metallic_from_socket = principled_bsdf.inputs['Metallic'].links[0].from_socket
            metallic_link = mat.links.new(metallic_from_socket, orm_color.inputs['B'])
            mat.links.remove(principled_bsdf.inputs['Metallic'].links[0])
        else:
            metallic_value = principled_bsdf.inputs['Metallic'].default_value
            orm_color.inputs['B'].default_value = metallic_value
        principled_bsdf.inputs['Metallic'].default_value = 0.0
        # roughness -> Green
        if principled_bsdf.inputs['Roughness'].links:
            roughness_from_socket = principled_bsdf.inputs['Roughness'].links[0].from_socket
            roughness_link = mat.links.new(roughness_from_socket, orm_color.inputs['G'])
            mat.links.remove(principled_bsdf.inputs['Roughness'].links[0])
        else:
            roughness_value = principled_bsdf.inputs['Roughness'].default_value
            orm_color.inputs['G'].default_value = roughness_value
        principled_bsdf.inputs['Roughness'].default_value = 0.5

        # link orm_color to principled_bsdf as base color
        mat.links.new(orm_color.outputs['Image'], principled_bsdf.inputs['Base Color'])


def is_bsdf():
    # True if every material has exactly one Principled BSDF node.
    for mat in bproc.material.collect_all():
        node = mat.get_nodes_with_type('BsdfPrincipled')
        if node and len(node) == 1:
            pass
        else:
            return False
    return True


def sample_sphere(num_samples):
    radiuss = np.random.uniform(1.5, 2.0, size=num_samples)
    azimuth = np.random.uniform(0, 360, size=num_samples)
    elevation = np.random.uniform(-90, 90, size=num_samples)
    return radiuss, azimuth, elevation


bproc.init()
# bproc.renderer.set_render_devices(use_only_cpu=True)
# bpy.context.scene.view_settings.view_transform = 'Raw' # do not use sRGB

glb_path = args.glb_path
output_dir = args.output_dir
obj_name = os.path.basename(glb_path).split('.')[0]
load_object(glb_path)

scale, offset = normalize_scene()

reset_normal()
turn_off_metallic()

# load camera info
with open(os.path.join(output_dir, obj_name, 'camera.json'), 'r') as f:
    meta_info = json.load(f)
radiuss = np.array(meta_info['raidus'])  # 'raidus' (sic) is the key name the scripts expect in camera.json
azimuths = np.array(meta_info['azimuths'])
elevations = np.array(meta_info['elevations'])
# set camera
bproc.camera.set_resolution(512, 512)
for radius, azimuth, elevation in zip(radiuss, azimuths, elevations):
    cam_pose = camera_pose(azimuth, elevation, radius=radius)
    bproc.camera.add_camera_pose(cam_pose)

# The diffuse color pass serves as the albedo map.
bproc.renderer.enable_diffuse_color_output()

bproc.renderer.set_output_format(enable_transparency=True)

data = bproc.renderer.render()

for i in range(len(azimuths)):
    image = data['diffuse'][i]
    Image.fromarray(image).save(os.path.join(output_dir, obj_name, f'albedo_{i:02d}.png'))
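The rendering scripts first rescale the object with `normalize_scene`, so its longest bounding-box side is 1 and the box is centered at the origin, and then place each camera on a sphere around the origin with `camera_pose`. The Blender-free sketch below restates that math in NumPy so it can be checked in isolation: the pose's third column points from the origin towards the camera, so the camera's local -Z viewing axis (Blender's convention) faces the object. `camera_pose` here copies the scripts' formulas; `normalize_points` is a hypothetical stand-in that works on a point array rather than on Blender objects.

```python
import numpy as np


def camera_pose(azimuth, elevation, radius=1.5):
    """Camera-to-world matrix looking at the origin (same formulas as the scripts)."""
    azimuth, elevation = np.deg2rad(azimuth), np.deg2rad(elevation)
    position = radius * np.array([
        np.cos(azimuth) * np.cos(elevation),
        -np.sin(azimuth) * np.cos(elevation),
        np.sin(elevation),
    ])
    forward = position / np.linalg.norm(position)  # local +Z points away from the origin
    right = np.cross([0.0, 0.0, 1.0], forward)     # degenerate at elevation = +/-90 deg
    right /= np.linalg.norm(right)
    up = np.cross(forward, right)
    pose = np.eye(4)
    pose[:3, 0], pose[:3, 1], pose[:3, 2], pose[:3, 3] = right, up, forward, position
    return pose


def normalize_points(points):
    """Scale so the longest bbox side is 1, then center the bbox on the origin."""
    points = np.asarray(points, dtype=float)
    scale = 1.0 / np.max(points.max(axis=0) - points.min(axis=0))
    scaled = points * scale
    offset = -(scaled.min(axis=0) + scaled.max(axis=0)) / 2
    return scaled + offset, scale, offset


pose = camera_pose(azimuth=30.0, elevation=20.0, radius=1.8)
rot, pos = pose[:3, :3], pose[:3, 3]
print(np.allclose(rot.T @ rot, np.eye(3)))                  # True: orthonormal axes
print(np.isclose(np.linalg.det(rot), 1.0))                  # True: right-handed frame
print(np.allclose(-rot[:, 2], -pos / np.linalg.norm(pos)))  # True: -Z looks at the origin
```

Because the right vector comes from a cross product with world +Z, a camera placed exactly at the poles would produce NaNs; `sample_sphere` draws elevations from `[-90, 90)`, so this edge case is possible but has negligible probability.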

rendering/render_color.py

ADDED


@@ -0,0 +1,279 @@


import blenderproc as bproc
import numpy as np
import os
os.environ["OPENCV_IO_ENABLE_OPENEXR"]="1"
import json
import bpy
import cv2
import glob
import random
from PIL import Image
import math
from mathutils import Vector, Matrix
import argparse

parser = argparse.ArgumentParser()
parser.add_argument('--glb_path', type=str)
parser.add_argument('--hdri_path', type=str, default=None)
parser.add_argument('--output_dir', type=str, default='output')
args = parser.parse_args()

# load the glb model
def load_object(object_path: str) -> None:
    """Loads a 3D model (.glb/.gltf/.fbx/.obj/.ply) into the scene."""
    if object_path.endswith(".glb") or object_path.endswith(".gltf"):
        bpy.ops.import_scene.gltf(filepath=object_path, merge_vertices=False)
    elif object_path.endswith(".fbx"):
        bpy.ops.import_scene.fbx(filepath=object_path)
    elif object_path.endswith(".obj"):
        bpy.ops.import_scene.obj(filepath=object_path)
    elif object_path.endswith(".ply"):
        bpy.ops.import_mesh.ply(filepath=object_path)
    else:
        raise ValueError(f"Unsupported file type: {object_path}")


def load_envmap(envmap_path: str) -> None:
    """Loads an environment map into the scene."""
    world = bpy.context.scene.world
    world.use_nodes = True
    bg = world.node_tree.nodes['Background']
    envmap = world.node_tree.nodes.new('ShaderNodeTexEnvironment')
    envmap.image = bpy.data.images.load(envmap_path)
    world.node_tree.links.new(bg.inputs['Color'], envmap.outputs['Color'])


def turn_off_metallic():
    # Used for albedo rendering: a non-zero metallic value would otherwise
    # darken the diffuse color pass.
    materials = bproc.material.collect_all()
    for mat in materials:
        mat.set_principled_shader_value('Metallic', 0.0)


def camera_pose(azimuth, elevation, radius=1.5):
    """Returns a 4x4 camera-to-world pose on a sphere, looking at the origin."""
    azimuth = np.deg2rad(azimuth)
    elevation = np.deg2rad(elevation)

    x = radius * np.cos(azimuth) * np.cos(elevation)
    y = -radius * np.sin(azimuth) * np.cos(elevation)
    z = radius * np.sin(elevation)
    camera_position = np.array([x, y, z])

    # Blender cameras look along their local -Z axis, so local +Z points away from the origin.
    camera_forward = camera_position / np.linalg.norm(camera_position)
    # Undefined when elevation is exactly +/-90 degrees (cross product is zero).
    camera_right = np.cross(np.array([0.0, 0.0, 1.0]), camera_forward)
    camera_right /= np.linalg.norm(camera_right)
    camera_up = np.cross(camera_forward, camera_right)
    camera_up /= np.linalg.norm(camera_up)

    camera_pose = np.eye(4)
    camera_pose[:3, 0] = camera_right
    camera_pose[:3, 1] = camera_up
    camera_pose[:3, 2] = camera_forward
    camera_pose[:3, 3] = camera_position

    return camera_pose


def scene_root_objects():
    for obj in bpy.context.scene.objects.values():
        if not obj.parent:
            yield obj


def scene_meshes():
    for obj in bpy.context.scene.objects.values():
        if isinstance(obj.data, (bpy.types.Mesh)):
            yield obj


def scene_bbox(single_obj=None, ignore_matrix=False):
    bbox_min = (math.inf,) * 3
    bbox_max = (-math.inf,) * 3
    found = False
    for obj in scene_meshes() if single_obj is None else [single_obj]:
        found = True
        for coord in obj.bound_box:
            coord = Vector(coord)
            if not ignore_matrix:
                coord = obj.matrix_world @ coord
            bbox_min = tuple(min(x, y) for x, y in zip(bbox_min, coord))
            bbox_max = tuple(max(x, y) for x, y in zip(bbox_max, coord))
    if not found:
        raise RuntimeError("no objects in scene to compute bounding box for")
    return Vector(bbox_min), Vector(bbox_max)


def normalize_scene():
    # Scale so the longest bounding-box side is 1, then center the box at the origin.
    bbox_min, bbox_max = scene_bbox()

    scale = 1 / max(bbox_max - bbox_min)
    for obj in scene_root_objects():
        obj.scale = obj.scale * scale
    # Apply scale to matrix_world.
    bpy.context.view_layer.update()
    bbox_min, bbox_max = scene_bbox()
    offset = -(bbox_min + bbox_max) / 2
    for obj in scene_root_objects():
        obj.matrix_world.translation += offset
    bpy.ops.object.select_all(action="DESELECT")

    return scale, offset


def reset_normal():
    """Experimental: reset the normals of every mesh in the scene."""
    for obj in scene_meshes():
        bpy.context.view_layer.objects.active = obj
        bpy.ops.object.mode_set(mode='EDIT')
        bpy.ops.mesh.normals_tools(mode='RESET')
        bpy.ops.object.mode_set(mode='OBJECT')


def change_to_orm():
    # Packs metallic into B and roughness into G of the base color (R stays 0).
    materials = bproc.material.collect_all()
    for mat in materials:
        orm_color = mat.nodes.new(type='ShaderNodeCombineRGB')
        principled_bsdf = mat.get_the_one_node_with_type("BsdfPrincipled")

        # metallic -> Blue
        if principled_bsdf.inputs['Metallic'].links:
            metallic_from_socket = principled_bsdf.inputs['Metallic'].links[0].from_socket
            metallic_link = mat.links.new(metallic_from_socket, orm_color.inputs['B'])
            mat.links.remove(principled_bsdf.inputs['Metallic'].links[0])
        else:
            metallic_value = principled_bsdf.inputs['Metallic'].default_value
            orm_color.inputs['B'].default_value = metallic_value
        principled_bsdf.inputs['Metallic'].default_value = 0.0
        # roughness -> Green
        if principled_bsdf.inputs['Roughness'].links:
            roughness_from_socket = principled_bsdf.inputs['Roughness'].links[0].from_socket
            roughness_link = mat.links.new(roughness_from_socket, orm_color.inputs['G'])
            mat.links.remove(principled_bsdf.inputs['Roughness'].links[0])
        else:
            roughness_value = principled_bsdf.inputs['Roughness'].default_value
            orm_color.inputs['G'].default_value = roughness_value
        principled_bsdf.inputs['Roughness'].default_value = 0.5

        # link orm_color to principled_bsdf as base color
        mat.links.new(orm_color.outputs['Image'], principled_bsdf.inputs['Base Color'])


def is_bsdf():
    # True if every material has exactly one Principled BSDF node.
    for mat in bproc.material.collect_all():
        node = mat.get_nodes_with_type('BsdfPrincipled')
        if node and len(node) == 1:
            pass
        else:
            return False
    return True


def sample_sphere(num_samples):
    radiuss = np.random.uniform(1.5, 2.0, size=num_samples)
    azimuth = np.random.uniform(0, 360, size=num_samples)
    elevation = np.random.uniform(-90, 90, size=num_samples)
    return radiuss, azimuth, elevation


def remove_lighting():
    # remove world background lighting
    world = bpy.context.scene.world
    nodes = world.node_tree.nodes
    # Iterate over a copy: removing nodes from the live collection while
    # iterating over it can skip entries.
    for node in list(nodes):
        if node.type == 'TEX_ENVIRONMENT':
            nodes.remove(node)
    # remove all lights
    for obj in bpy.context.scene.objects.values():
        if obj.type == 'LIGHT':
            bpy.data.objects.remove(obj)


def set_point_light():
    # Point light at a random position on a shell around the origin.
    light = bproc.types.Light()
    light.set_type('POINT')
    light.set_location(bproc.sampler.shell(
        center=[0, 0, 0],
        radius_min=5,
        radius_max=7,
        elevation_min=-35,
        elevation_max=70
    ))
    light.set_energy(500)
    return light


bproc.init()
# bproc.renderer.set_render_devices(use_only_cpu=True)
# bpy.context.scene.view_settings.view_transform = 'Raw' # do not use sRGB

glb_path = args.glb_path
output_dir = args.output_dir
obj_name = os.path.basename(glb_path).split('.')[0]
load_object(glb_path)

scale, offset = normalize_scene()

reset_normal()

# load camera info
with open(os.path.join(output_dir, obj_name, 'camera.json'), 'r') as f:
    meta_info = json.load(f)
radiuss = np.array(meta_info['raidus'])  # 'raidus' (sic) is the key name the scripts expect in camera.json
azimuths = np.array(meta_info['azimuths'])
elevations = np.array(meta_info['elevations'])

# set camera
bproc.camera.set_resolution(512, 512)
for radius, azimuth, elevation in zip(radiuss, azimuths, elevations):
    cam_pose = camera_pose(azimuth, elevation, radius=radius)
    bproc.camera.add_camera_pose(cam_pose)

# Each run adds one lighting condition; its index is the number of entries
# already stored in lighting.json.
lighting_fn = os.path.join(output_dir, obj_name, 'lighting.json')
if os.path.exists(lighting_fn):
    with open(lighting_fn, 'r') as f:
        lighting_info = json.load(f)
else:
    lighting_info = []

hdr_file = args.hdri_path

if hdr_file is not None:
    bproc.world.set_world_background_hdr_img(hdr_file)

    bproc.renderer.set_output_format(enable_transparency=True)
    data = bproc.renderer.render()

    for i in range(len(azimuths)):
        image = data['colors'][i]
        Image.fromarray(image).save(os.path.join(output_dir, obj_name, f'color_{len(lighting_info)}_{i:02d}.png'))

    lighting_info.append(
        {
            'type': 'hdri',
            'path': os.path.basename(hdr_file),
        }
    )

else:
    # Point lighting: one lighting sample made of two random point lights
    remove_lighting()
    light1 = set_point_light()
    light2 = set_point_light()
    bproc.renderer.set_output_format(enable_transparency=True)
    data = bproc.renderer.render()
    for i in range(len(azimuths)):
        image = data['colors'][i]
        Image.fromarray(image).save(os.path.join(output_dir, obj_name, f'color_{len(lighting_info)}_{i:02d}.png'))
    lighting_info.append(
        {
            'type': 'point',
            'locations': [light1.get_location().tolist(), light2.get_location().tolist()],
            'energys': [light1.get_energy(), light2.get_energy()],
        }
    )

with open(lighting_fn, 'w') as f:
    json.dump(lighting_info, f, indent=4)
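`render_color.py` renders one lighting condition per invocation: with `--hdri_path` it uses that HDR image as the world background, otherwise it removes the existing lighting and adds two point lights sampled on a shell of radius 5 to 7 (elevation -35 to 70 degrees) with energy 500. The images of a run are named `color_<n>_<view>.png`, where `n` is the number of entries already in `lighting.json`, and the run then appends its own entry, so entry `n` of `lighting.json` describes the images with light index `n`. The sketch below mimics only that bookkeeping, without Blender; `record_lighting` is a hypothetical helper and `example.hdr` a placeholder file name.

```python
import json
import os
import tempfile


def record_lighting(obj_dir, entry, num_views):
    """Append one lighting entry and return the color filenames it owns.

    Mirrors render_color.py: the light index is the number of entries already
    stored in lighting.json (0 when the file does not exist yet).
    """
    lighting_fn = os.path.join(obj_dir, "lighting.json")
    if os.path.exists(lighting_fn):
        with open(lighting_fn, "r") as f:
            lighting_info = json.load(f)
    else:
        lighting_info = []
    light_idx = len(lighting_info)
    names = [f"color_{light_idx}_{i:02d}.png" for i in range(num_views)]
    lighting_info.append(entry)
    with open(lighting_fn, "w") as f:
        json.dump(lighting_info, f, indent=4)
    return names


with tempfile.TemporaryDirectory() as obj_dir:
    first = record_lighting(obj_dir, {"type": "hdri", "path": "example.hdr"}, num_views=2)
    second = record_lighting(obj_dir, {"type": "point", "locations": [], "energys": []}, num_views=2)
    print(first)   # ['color_0_00.png', 'color_0_01.png']
    print(second)  # ['color_1_00.png', 'color_1_01.png']
```

Because the light index is derived from the current length of `lighting.json`, two lighting passes for the same object should run one after the other; run concurrently, both could read the same count and overwrite each other's images.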

rendering/render_normal_orm.py

ADDED


@@ -0,0 +1,228 @@


import blenderproc as bproc  # BlenderProc expects this to be the first import
import numpy as np
import os
os.environ["OPENCV_IO_ENABLE_OPENEXR"]="1"  # enable OpenEXR I/O in OpenCV; set before importing cv2
import json
import bpy
import cv2
from PIL import Image
import math
from mathutils import Vector, Matrix
import argparse

parser = argparse.ArgumentParser()
parser.add_argument('--glb_path', type=str)
parser.add_argument('--output_dir', type=str, default='output')
args = parser.parse_args()


# Load the 3D model (.glb/.gltf, .fbx, .obj or .ply) into the scene.
def load_object(object_path: str) -> None:
    """Imports a model file into the current Blender scene, dispatching on its extension."""
    if object_path.endswith(".glb") or object_path.endswith(".gltf"):
        bpy.ops.import_scene.gltf(filepath=object_path, merge_vertices=False)
    elif object_path.endswith(".fbx"):
        bpy.ops.import_scene.fbx(filepath=object_path)
    elif object_path.endswith(".obj"):
        bpy.ops.import_scene.obj(filepath=object_path)
    elif object_path.endswith(".ply"):
        bpy.ops.import_mesh.ply(filepath=object_path)
    else:
        raise ValueError(f"Unsupported file type: {object_path}")


def load_envmap(envmap_path: str) -> None:
    """Loads an environment map into the scene (not called in this script)."""
    world = bpy.context.scene.world
    world.use_nodes = True
    bg = world.node_tree.nodes['Background']
    envmap = world.node_tree.nodes.new('ShaderNodeTexEnvironment')
    envmap.image = bpy.data.images.load(envmap_path)
    world.node_tree.links.new(bg.inputs['Color'], envmap.outputs['Color'])


def turn_off_metallic():
    # Used for albedo rendering, where a non-zero Metallic value changes the rendered color.
    # Not called in this script.
    materials = bproc.material.collect_all()
    for mat in materials:
        mat.set_principled_shader_value('Metallic', 0.0)


def camera_pose(azimuth, elevation, radius=1.5):
    """Return a 4x4 camera-to-world matrix for a camera looking at the origin.

    azimuth and elevation are in degrees. The camera's local +Z axis points
    from the origin to the camera, so Blender's viewing direction (-Z) faces
    the origin.
    """
    azimuth = np.deg2rad(azimuth)
    elevation = np.deg2rad(elevation)

    x = radius * np.cos(azimuth) * np.cos(elevation)
    y = -radius * np.sin(azimuth) * np.cos(elevation)
    z = radius * np.sin(elevation)
    camera_position = np.array([x, y, z])

    camera_forward = camera_position / np.linalg.norm(camera_position)
    camera_right = np.cross(np.array([0.0, 0.0, 1.0]), camera_forward)
    camera_right /= np.linalg.norm(camera_right)
    camera_up = np.cross(camera_forward, camera_right)
    camera_up /= np.linalg.norm(camera_up)

    camera_pose = np.eye(4)
    camera_pose[:3, 0] = camera_right
    camera_pose[:3, 1] = camera_up
    camera_pose[:3, 2] = camera_forward
    camera_pose[:3, 3] = camera_position

    return camera_pose


def scene_root_objects():
    for obj in bpy.context.scene.objects.values():
        if not obj.parent:
            yield obj


def scene_meshes():
    for obj in bpy.context.scene.objects.values():
        if isinstance(obj.data, (bpy.types.Mesh)):
            yield obj


def scene_bbox(single_obj=None, ignore_matrix=False):
    bbox_min = (math.inf,) * 3
    bbox_max = (-math.inf,) * 3
    found = False
    for obj in scene_meshes() if single_obj is None else [single_obj]:
        found = True
        for coord in obj.bound_box:
            coord = Vector(coord)
            if not ignore_matrix:
                coord = obj.matrix_world @ coord
            bbox_min = tuple(min(x, y) for x, y in zip(bbox_min, coord))
            bbox_max = tuple(max(x, y) for x, y in zip(bbox_max, coord))
    if not found:
        raise RuntimeError("no objects in scene to compute bounding box for")
    return Vector(bbox_min), Vector(bbox_max)


def normalize_scene():
    bbox_min, bbox_max = scene_bbox()

    scale = 1 / max(bbox_max - bbox_min)
    for obj in scene_root_objects():
        obj.scale = obj.scale * scale
    # Apply scale to matrix_world.
    bpy.context.view_layer.update()
    bbox_min, bbox_max = scene_bbox()
    offset = -(bbox_min + bbox_max) / 2
    for obj in scene_root_objects():
        obj.matrix_world.translation += offset
    bpy.ops.object.select_all(action="DESELECT")

    return scale, offset


def reset_normal():
    """Experimental: reset the normals of every mesh.

    Enters Edit Mode on each mesh, runs normals_tools(mode='RESET'),
    then returns to Object Mode.
    """
    for obj in scene_meshes():
        bpy.context.view_layer.objects.active = obj
        bpy.ops.object.mode_set(mode='EDIT')
        bpy.ops.mesh.normals_tools(mode='RESET')
        bpy.ops.object.mode_set(mode='OBJECT')


def change_to_orm():
    """Pack roughness and metallic into Base Color so the diffuse color pass renders an ORM map.

    A Combine RGB node receives roughness on G and metallic on B; R (occlusion)
    is left unconnected at its default. The node's output then drives Base Color.
    """
    materials = bproc.material.collect_all()
    for mat in materials:
        orm_color = mat.nodes.new(type='ShaderNodeCombineRGB')
        principled_bsdf = mat.get_the_one_node_with_type("BsdfPrincipled")

        # metallic -> Blue
        if principled_bsdf.inputs['Metallic'].links:
            metallic_from_socket = principled_bsdf.inputs['Metallic'].links[0].from_socket
            mat.links.new(metallic_from_socket, orm_color.inputs['B'])
            mat.links.remove(principled_bsdf.inputs['Metallic'].links[0])
        else:
            metallic_value = principled_bsdf.inputs['Metallic'].default_value
            orm_color.inputs['B'].default_value = metallic_value
        # Zero Metallic in both branches: the diffuse color pass is weighted by (1 - Metallic),
        # and an unlinked socket keeps whatever default_value it already had.
        principled_bsdf.inputs['Metallic'].default_value = 0.0
        # roughness -> Green
        if principled_bsdf.inputs['Roughness'].links:
            roughness_from_socket = principled_bsdf.inputs['Roughness'].links[0].from_socket
            mat.links.new(roughness_from_socket, orm_color.inputs['G'])
            mat.links.remove(principled_bsdf.inputs['Roughness'].links[0])
        else:
            roughness_value = principled_bsdf.inputs['Roughness'].default_value
            orm_color.inputs['G'].default_value = roughness_value
            principled_bsdf.inputs['Roughness'].default_value = 0.5

        # link orm_color to principled_bsdf as base color
        mat.links.new(orm_color.outputs['Image'], principled_bsdf.inputs['Base Color'])


def is_bsdf():
    """Return True only if every material contains exactly one Principled BSDF node."""
    for mat in bproc.material.collect_all():
        node = mat.get_nodes_with_type('BsdfPrincipled')
        if len(node) != 1:
            return False
    return True


def sample_sphere(num_samples):
    """Sample camera radii in [1.5, 2.0), azimuths in [0, 360) and elevations in [-90, 90) degrees."""
    radiuss = np.random.uniform(1.5, 2.0, size=num_samples)
    azimuth = np.random.uniform(0, 360, size=num_samples)
    elevation = np.random.uniform(-90, 90, size=num_samples)
    return radiuss, azimuth, elevation


NUM_IMAGES = 12

meta_info = {'transform_matrix': []}

bproc.init()
# bproc.renderer.set_render_devices(use_only_cpu=True)
bpy.context.scene.view_settings.view_transform = 'Raw'  # keep values linear (no sRGB view transform)

glb_path = args.glb_path
obj_name = os.path.basename(glb_path).split('.')[0]
load_object(glb_path)

# Skip objects whose materials do not each contain exactly one Principled BSDF.
if not is_bsdf():
    exit()

scale, offset = normalize_scene()
meta_info['scale'] = scale
meta_info['offset'] = offset.to_tuple()

reset_normal()
change_to_orm()


radiuss, azimuths, elevations = sample_sphere(NUM_IMAGES)
# (sic) the key is spelled 'raidus'; kept so the output matches camera.json files written by this script
meta_info['raidus'] = radiuss.tolist()
meta_info['azimuths'] = azimuths.tolist()
meta_info['elevations'] = elevations.tolist()

# set camera
bproc.camera.set_resolution(512, 512)
for radius, azimuth, elevation in zip(radiuss, azimuths, elevations):
    cam_pose = camera_pose(azimuth, elevation, radius=radius)
    bproc.camera.add_camera_pose(cam_pose)
    meta_info['transform_matrix'].append(cam_pose.tolist())

bproc.renderer.enable_normals_output()
# After change_to_orm(), the diffuse color pass carries the packed ORM values.
bproc.renderer.enable_diffuse_color_output()

bproc.renderer.set_output_format(enable_transparency=True)

data = bproc.renderer.render()


output_dir = args.output_dir
os.makedirs(os.path.join(output_dir, obj_name), exist_ok=True)
for i in range(len(azimuths)):
    image = data['diffuse'][i]
    Image.fromarray(image).save(os.path.join(output_dir, obj_name, f'orm_{i:02d}.png'))
    normal = data['normals'][i]
    # cv2.imwrite treats a 3-channel array as BGR; read it back with cv2.imread to keep the channel order.
    cv2.imwrite(os.path.join(output_dir, obj_name, f'normal_{i:02d}.exr'), normal)

with open(os.path.join(output_dir, obj_name, 'camera.json'), 'w') as f:
    json.dump(meta_info, f, indent=4)
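Each `transform_matrix` entry in `camera.json` is a camera-to-world matrix built by `camera_pose`: columns 0 to 2 are the camera's right, up and +Z (origin-to-camera) axes, and column 3 is the camera position. As a hedged sanity check, the sketch below mirrors that construction with the standard library only. The nested-list `camera_pose` and the helper `camera_positions` are illustrative stand-ins, not part of the original script.

```python
import json
import math


def camera_pose(azimuth_deg, elevation_deg, radius=1.5):
    """Stdlib mirror of the script's camera_pose: 4x4 camera-to-world matrix as nested lists."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    position = [
        radius * math.cos(az) * math.cos(el),
        -radius * math.sin(az) * math.cos(el),
        radius * math.sin(el),
    ]

    def cross(a, b):
        return [a[1] * b[2] - a[2] * b[1],
                a[2] * b[0] - a[0] * b[2],
                a[0] * b[1] - a[1] * b[0]]

    def normalize(v):
        length = math.sqrt(sum(c * c for c in v))
        return [c / length for c in v]

    forward = normalize(position)                        # local +Z: origin -> camera
    right = normalize(cross([0.0, 0.0, 1.0], forward))   # world +Z is the up reference
    up = normalize(cross(forward, right))

    pose = [[0.0, 0.0, 0.0, 0.0] for _ in range(3)] + [[0.0, 0.0, 0.0, 1.0]]
    for row in range(3):
        pose[row][0] = right[row]
        pose[row][1] = up[row]
        pose[row][2] = forward[row]
        pose[row][3] = position[row]
    return pose


def camera_positions(meta):
    """Hypothetical helper: camera centres (translation column) from a camera.json dict."""
    return [tuple(m[row][3] for row in range(3)) for m in meta["transform_matrix"]]


# Round-trip through JSON the way camera.json is written, then recover the radius.
meta = json.loads(json.dumps({"transform_matrix": [camera_pose(30.0, 20.0, radius=1.8)]}))
x, y, z = camera_positions(meta)[0]
print(round(math.sqrt(x * x + y * y + z * z), 6))  # 1.8
```

Because the +Z column points from the origin to the camera, Blender's -Z viewing direction faces the normalized object, and the distance of each camera centre from the origin should match the corresponding `raidus` entry.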

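`change_to_orm` sends roughness to the green channel and metallic to the blue channel of Base Color, leaving red (occlusion) at the Combine RGB default, so each `orm_XX.png` can be split back into material maps. The sketch below assumes 8-bit pixels and that the 'Raw' view transform leaves the values linear, so a channel value v maps to v / 255. `decode_orm_pixel` and `split_orm` are hypothetical helpers, and the image is passed as nested lists to stay dependency-free; an array from `np.asarray(Image.open(path))` supports the same row/pixel indexing.

```python
def decode_orm_pixel(pixel):
    """Map one 8-bit (R, G, B[, A]) pixel of orm_XX.png to material values in [0, 1].

    Assumes the layout built by change_to_orm(): G = roughness, B = metallic.
    R (occlusion) is never connected by that function, so it is ignored here.
    """
    return {"roughness": pixel[1] / 255.0, "metallic": pixel[2] / 255.0}


def split_orm(image):
    """Split an H x W image of pixels into separate roughness and metallic maps."""
    roughness = [[px[1] / 255.0 for px in row] for row in image]
    metallic = [[px[2] / 255.0 for px in row] for row in image]
    return roughness, metallic


# A 1 x 2 toy image: a half-rough metal pixel and a fully rough dielectric pixel.
rough, metal = split_orm([[(0, 128, 255), (0, 255, 0)]])
print(metal)  # [[1.0, 0.0]]
```

For materials without roughness or metallic textures, the script writes a single constant into the Combine RGB node, so every pixel of that material decodes to the same values; textured materials vary per pixel, subject to 8-bit quantization.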