| url (string) | repository_url (string) | labels_url (string) | comments_url (string) | events_url (string) | html_url (string) | id (int64) | node_id (string) | number (int64) | title (string) | user (dict) | labels (list) | state (string) | locked (bool) | assignee (dict) | assignees (list) | milestone (dict) | comments (sequence) | created_at | updated_at | closed_at | author_association (string) | active_lock_reason (null) | body (string) | reactions (dict) | timeline_url (string) | performed_via_github_app (null) | state_reason (string) | draft (bool) | pull_request (dict) | is_pull_request (bool) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/kubeflow/pipelines/issues/7095 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7095/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7095/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7095/events | https://github.com/kubeflow/pipelines/issues/7095 | 1,085,616,611 | I_kwDOB-71UM5AtTHj | 7,095 | test: error: deployment "ml-pipeline" exceeded its progress deadline | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | null | [] | null | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

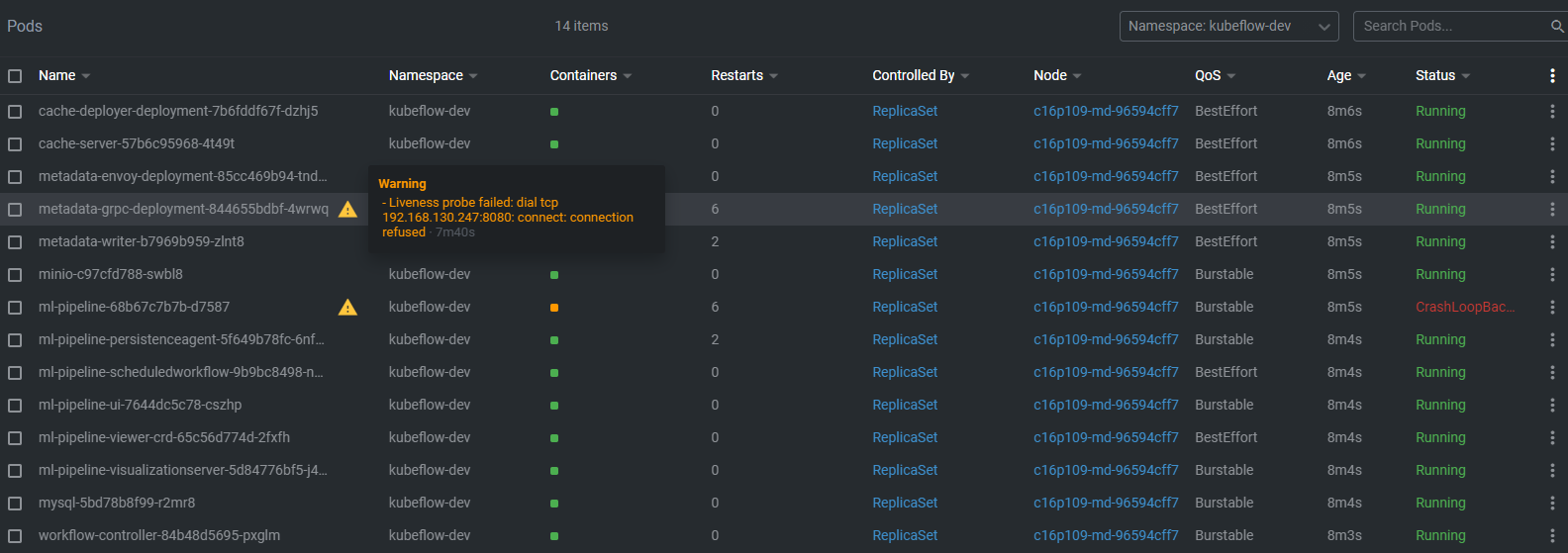

] | "2021-12-21T09:26:06" | "2022-04-17T06:27:49" | null | CONTRIBUTOR | null | All sample/e2e tests are failing with either

* metadata-grpc deployment cannot rollout

* ml-pipeline deployment cannot rollout

because of connection timeout to the in-cluster mysql DB. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7095/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7095/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7093 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7093/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7093/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7093/events | https://github.com/kubeflow/pipelines/issues/7093 | 1,085,591,326 | I_kwDOB-71UM5AtM8e | 7,093 | [backend] cache-deployer generate CSR with wrong usage | {

"login": "jomenxiao",

"id": 4003391,

"node_id": "MDQ6VXNlcjQwMDMzOTE=",

"avatar_url": "https://avatars.githubusercontent.com/u/4003391?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jomenxiao",

"html_url": "https://github.com/jomenxiao",

"followers_url": "https://api.github.com/users/jomenxiao/followers",

"following_url": "https://api.github.com/users/jomenxiao/following{/other_user}",

"gists_url": "https://api.github.com/users/jomenxiao/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jomenxiao/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jomenxiao/subscriptions",

"organizations_url": "https://api.github.com/users/jomenxiao/orgs",

"repos_url": "https://api.github.com/users/jomenxiao/repos",

"events_url": "https://api.github.com/users/jomenxiao/events{/privacy}",

"received_events_url": "https://api.github.com/users/jomenxiao/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [

"cc @chensun ",

"I also bumped into the exact same issue while testing the KF 1.5 RC0 manifests https://github.com/kubeflow/manifests/issues/2099\r\n\r\nI think this has definitely something to do with KinD, but I couldn't get to the bottom of it. For me it was:\r\n* KinD cluster with K8s 1.20.7\r\n* `1.8.0-rc.1` commit\r\n\r\nBUT, when testing this with:\r\n* EKS with K8s 1.19\r\n* KFP 1.7.0\r\n\r\nThen the CertificateSigningRequest would get into `Approved` state, but the cache-deployer would still complain that a certificate would not appear.\r\n```\r\nERROR: After approving csr cache-server.kubeflow, the signed certificate did not appear on the resource. Giving up after 10 attempts.\r\n```"

] | "2021-12-21T08:59:01" | "2022-02-08T08:13:42" | "2022-02-08T08:13:42" | NONE | null | ### Environment

* How did you deploy Kubeflow Pipelines (KFP)?

kind

* KFP version:

branch `master`

### Steps to reproduce

follow README `kustomize`

https://github.com/kubeflow/pipelines/blob/master/manifests/kustomize/README.md

* error message

`"message": "invalid usage for client certificate: server auth",`

### describe csr

```

➜ .kind kubectl get csr cache-server.kubeflow

NAME AGE SIGNERNAME REQUESTOR CONDITION

cache-server.kubeflow 6m38s kubernetes.io/kube-apiserver-client system:serviceaccount:kubeflow:kubeflow-pipelines-cache-deployer-sa Approved,Failed

➜ .kind kubectl get csr cache-server.kubeflow -o json

{

"apiVersion": "certificates.k8s.io/v1",

"kind": "CertificateSigningRequest",

"metadata": {

"creationTimestamp": "2021-12-21T06:48:46Z",

"name": "cache-server.kubeflow",

"resourceVersion": "1485",

"uid": "bece32dd-b0f2-4d31-9e1c-2aafa656945e"

},

"spec": {

"extra": {

"authentication.kubernetes.io/pod-name": [

"cache-deployer-deployment-578ffc9d46-5bjml"

],

"authentication.kubernetes.io/pod-uid": [

"4866ee10-fb48-4034-9c36-bf519e0b81f1"

]

},

"groups": [

"system:serviceaccounts",

"system:serviceaccounts:kubeflow",

"system:authenticated"

],

"request": "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQzlEQ0NBZHdDQVFBd0pERWlNQ0FHQTFVRUF3d1pZMkZqYUdVdGMyVnlkbVZ5TG10MVltVm1iRzkzTG5OMgpZekNDQVNJd0RRWUpLb1pJaHZjTkFRRUJCUUFEZ2dFUEFEQ0NBUW9DZ2dFQkFLajcrZWtTRDA0Tm54YTczeXNLCnF6eVV4T1lnczEvUzJoZU9BMG1TVlVucXJsNm5ZVDRZWjBDWnViaFRvWUp0UEN2b0dSVXdoQ2ZsNE9nMFIzc1YKQ2VBdGpHT0RPR1BFdituUjJoTEgrSkFYZFIyM01sdzduOWhiQ0VhVWVONFNxWVdUK1BWbVhCV0RkdC90WVJPWQpuZnVzOFRqejJNbWgzN1ZUaUhqUGdDVlVoQTBIZHFkTms0VUMzb3UyL21PMHZYNXRLVTF5bWE2cEYyTUhMM1BtCnJzdDhzMmZGbnhkd0xlWFJlTzlPRm5xMlZzTHN2NGR5azE0SG5SWW82dER5eGwvWkpDYWNnTmNaYmVLeWNCVjcKYkhPOGVUbktoN20ydmppR0p2QnRnVG1NTzlpOWNLbzdzVS9YakROdyt0MVFMaFBxcDNHUkZITXVXUzEyMFAwNAovMFVDQXdFQUFhQ0JpakNCaHdZSktvWklodmNOQVFrT01Yb3dlREFKQmdOVkhSTUVBakFBTUFzR0ExVWREd1FFCkF3SUY0REFUQmdOVkhTVUVEREFLQmdnckJnRUZCUWNEQVRCSkJnTlZIUkVFUWpCQWdneGpZV05vWlMxelpYSjIKWlhLQ0ZXTmhZMmhsTFhObGNuWmxjaTVyZFdKbFpteHZkNElaWTJGamFHVXRjMlZ5ZG1WeUxtdDFZbVZtYkc5MwpMbk4yWXpBTkJna3Foa2lHOXcwQkFRc0ZBQU9DQVFFQVRXL1dvdHpQRGtnNE1YbndyNUhxU2wxZ0FWOHBJM1dnCkpZRGdNMXRvdU1pTkZZaWZjVkRkRDZVNGhlblZBR0pTWSt2T1pXQkRoYTIvbVpNemROUXkybEZ1MDlOOHJ5eVYKcFpPTnBIWnRVZXloNTU1ZDdwWjVzNEtiRGFhd0RXV25tejBIWEk0SUJlY3FYSzRNVENsSVgrNXlLL2ZSZFd6RQo1RFNvcE9pWHQvMGxuMTJYNzZNcEV5WG5obDRQenpieG5wdFRJUjFPRU9Gb1pFUzdETkltbTcrQzRMNDVpSDhkCnBFSnhqQ0grcFVueVBWMkZLYUJnTHAzR2JHUDZlaTJ2eFdiRFVGWjhJdHNyNlpKdXNlU3Fib3pGV2lQOHBGYUMKdDhrUXNsRWlKSjFEellKdk5reXE3Wm5iMURRK09ESGZiV1IxdEtOdnJJaW1aSGJ4YVBxV3NBPT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==",

"signerName": "kubernetes.io/kube-apiserver-client",

"uid": "3796d0f3-65b1-443a-9b13-97e9a61e381b",

"usages": [

"digital signature",

"key encipherment",

"server auth"

],

"username": "system:serviceaccount:kubeflow:kubeflow-pipelines-cache-deployer-sa"

},

"status": {

"conditions": [

{

"lastTransitionTime": "2021-12-21T06:48:46Z",

"lastUpdateTime": "2021-12-21T06:48:46Z",

"message": "This CSR was approved by kubectl certificate approve.",

"reason": "KubectlApprove",

"status": "True",

"type": "Approved"

},

{

"lastTransitionTime": "2021-12-21T06:48:46Z",

"lastUpdateTime": "2021-12-21T06:48:46Z",

"message": "invalid usage for client certificate: server auth",

"reason": "SignerValidationFailure",

"status": "True",

"type": "Failed"

}

]

}

}

``` | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7093/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7093/timeline | null | completed | null | null | false |
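
The failure reported in issue 7093 above (`invalid usage for client certificate: server auth`) comes from pairing the `kubernetes.io/kube-apiserver-client` signer with the `server auth` usage. A minimal sketch of that check follows, assuming the permitted-usage sets listed in the Kubernetes CertificateSigningRequest documentation for two built-in signers; the table `PERMITTED_USAGES` and the helper `invalid_usages` are hypothetical names for illustration and are not part of KFP or Kubernetes.

```python
# Permitted key usages for two built-in Kubernetes signers, as we understand
# them from the CertificateSigningRequest documentation (an assumption here;
# real signers also validate subject names and other fields).
PERMITTED_USAGES = {
    "kubernetes.io/kube-apiserver-client": {
        "digital signature", "key encipherment", "client auth",
    },
    "kubernetes.io/kubelet-serving": {
        "digital signature", "key encipherment", "server auth",
    },
}


def invalid_usages(signer_name, usages):
    """Return the requested usages that the given signer does not permit."""
    allowed = PERMITTED_USAGES.get(signer_name)
    if allowed is None:
        raise KeyError(f"no usage table for signer {signer_name!r}")
    return sorted(set(usages) - allowed)


# The spec.usages list from the CSR shown in the issue body.
csr_usages = ["digital signature", "key encipherment", "server auth"]
rejected = invalid_usages("kubernetes.io/kube-apiserver-client", csr_usages)
print(rejected)
```

The sketch only compares usage strings; it does not decide which signer a cache-server certificate should use.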

https://api.github.com/repos/kubeflow/pipelines/issues/7089 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7089/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7089/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7089/events | https://github.com/kubeflow/pipelines/issues/7089 | 1,084,398,941 | I_kwDOB-71UM5Aop1d | 7,089 | test: tests fail with "AccessDeniedException: 403 There is an account problem for the requested project." | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Ohh, my guess was wrong. The project ml-pipeline-test itself is suspended.",

"Recovered"

] | "2021-12-20T06:11:50" | "2021-12-20T07:15:14" | "2021-12-20T07:15:14" | CONTRIBUTOR | null | This includes sample test, postsubmit tests.

However, samples-v2 test is still passing.

https://oss-prow.knative.dev/view/gs/oss-prow/pr-logs/pull/kubeflow_pipelines/7088/kubeflow-pipeline-sample-test/1472757856003428352

Therefore, my initial guess is that the image we use is too outdated, so that it can no longer authenticate with Google Cloud.

Looking at https://github.com/GoogleCloudPlatform/oss-test-infra/blob/18c1b811dfaf8b07d83dccd73120049991424750/prow/prowjobs/kubeflow/pipelines/kubeflow-pipelines-presubmits.yaml, most tests use gcr.io/k8s-testimages/kubekins-e2e:v20210113-cc576af-master image, but samples v2 test is using python:3.7 image. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7089/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7089/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7078 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7078/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7078/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7078/events | https://github.com/kubeflow/pipelines/issues/7078 | 1,082,387,910 | I_kwDOB-71UM5Ag-3G | 7,078 | [feature] Flexible tensorboard images | {

"login": "casassg",

"id": 6912589,

"node_id": "MDQ6VXNlcjY5MTI1ODk=",

"avatar_url": "https://avatars.githubusercontent.com/u/6912589?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/casassg",

"html_url": "https://github.com/casassg",

"followers_url": "https://api.github.com/users/casassg/followers",

"following_url": "https://api.github.com/users/casassg/following{/other_user}",

"gists_url": "https://api.github.com/users/casassg/gists{/gist_id}",

"starred_url": "https://api.github.com/users/casassg/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/casassg/subscriptions",

"organizations_url": "https://api.github.com/users/casassg/orgs",

"repos_url": "https://api.github.com/users/casassg/repos",

"events_url": "https://api.github.com/users/casassg/events{/privacy}",

"received_events_url": "https://api.github.com/users/casassg/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 930476737,

"node_id": "MDU6TGFiZWw5MzA0NzY3Mzc=",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/help%20wanted",

"name": "help wanted",

"color": "db1203",

"default": true,

"description": "The community is welcome to contribute."

},

{

"id": 930619516,

"node_id": "MDU6TGFiZWw5MzA2MTk1MTY=",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/frontend",

"name": "area/frontend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | open | false | null | [] | null | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"Any update on this? I have to include our Artifactory images in our organization."

] | "2021-12-16T16:12:56" | "2022-09-14T15:38:34" | null | CONTRIBUTOR | null | ### Feature Area

/area frontend

### What feature would you like to see?

Allow installations to modify the images available on tensorboard. This would allow us to have images with tftext or tensorboard expansions as needed.

Currently, the tensorboard image list is HTML embedded in the frontend.

### What is the use case or pain point?

Use tensorboard from KFP with different variations/versions and custom images.

### Is there a workaround currently?

Not really; the only option is to manually deploy an instance with kubectl.

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. We prioritize fulfilling features with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7078/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7078/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7072 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7072/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7072/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7072/events | https://github.com/kubeflow/pipelines/issues/7072 | 1,081,883,211 | I_kwDOB-71UM5AfDpL | 7,072 | how to set restartPolicy "Never" to init containers | {

"login": "changhoekim",

"id": 33795112,

"node_id": "MDQ6VXNlcjMzNzk1MTEy",

"avatar_url": "https://avatars.githubusercontent.com/u/33795112?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/changhoekim",

"html_url": "https://github.com/changhoekim",

"followers_url": "https://api.github.com/users/changhoekim/followers",

"following_url": "https://api.github.com/users/changhoekim/following{/other_user}",

"gists_url": "https://api.github.com/users/changhoekim/gists{/gist_id}",

"starred_url": "https://api.github.com/users/changhoekim/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/changhoekim/subscriptions",

"organizations_url": "https://api.github.com/users/changhoekim/orgs",

"repos_url": "https://api.github.com/users/changhoekim/repos",

"events_url": "https://api.github.com/users/changhoekim/events{/privacy}",

"received_events_url": "https://api.github.com/users/changhoekim/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"As far as I'm aware, we don't support setting restartPolicy for either the main container or init containers via the SDK.\r\nBTW, ContainerOp is deprecated; you should have received a warning for the code above.",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | "2021-12-16T08:01:33" | "2022-04-17T06:27:34" | null | NONE | null | ```

kfp.dsl.ContainerOp(

name = 'spark-test',

image = 'blahblah',

init_containers=[ kfp.dsl.UserContainer(name='sdfs', image='sdfs', command=['sh', '-c'], args=['sdfsf'])],

command = ["sh", "-c"])

```

This is my operation.

I tested it with a broken init container, and the pipeline then retried the step many times.

I want it to try just once.

How do I set restartPolicy on my pipeline container? | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7072/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7072/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7064 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7064/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7064/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7064/events | https://github.com/kubeflow/pipelines/issues/7064 | 1,080,775,922 | I_kwDOB-71UM5Aa1Ty | 7,064 | parse pipeline argument string 'None' to None | {

"login": "iuiu34",

"id": 30587996,

"node_id": "MDQ6VXNlcjMwNTg3OTk2",

"avatar_url": "https://avatars.githubusercontent.com/u/30587996?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/iuiu34",

"html_url": "https://github.com/iuiu34",

"followers_url": "https://api.github.com/users/iuiu34/followers",

"following_url": "https://api.github.com/users/iuiu34/following{/other_user}",

"gists_url": "https://api.github.com/users/iuiu34/gists{/gist_id}",

"starred_url": "https://api.github.com/users/iuiu34/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/iuiu34/subscriptions",

"organizations_url": "https://api.github.com/users/iuiu34/orgs",

"repos_url": "https://api.github.com/users/iuiu34/repos",

"events_url": "https://api.github.com/users/iuiu34/events{/privacy}",

"received_events_url": "https://api.github.com/users/iuiu34/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"pr #7065",

"Thank you for raising the issue.\r\n\r\nKFP is a language-agnostic pipeline system. `None` is a Python-specific object that is not present in other languages. I'm not sure it's a good idea to tie KFP to a specific language.\r\n\r\n>the component receive 'None'.\r\n\r\nKFP orchestrates containerized command-line programs. Command-line programs, as defined by the POSIX standard, receive command-line arguments as an array of null-terminated strings. Command-line programs cannot receive \"None\" objects.\r\n\r\nThere is a way for a command-line program to distinguish between an empty string argument and a missing argument. Conditional placeholders in the component definitions help with that (`{if: {cond: {isPresent: Input1}, then: [\"--input-1\", {inputValue: Input1}]}}`).\r\n\r\nThe SDK automatically handles cases where an optional input argument was not specified when instantiating a component.\r\n\r\nHowever, as you've discovered, this might not work as well for pipeline arguments. The reason is that currently the conditional placeholders are evaluated at compile time, but the pipeline arguments can be specified after the compilation.\r\n\r\nThe specification of the compiled pipeline does not allow passing NoneType objects - only strings. So, if you want to be able to pass some value from a pipeline parameter to a component and have the component interpret it as `None`, you have to do that on the component side.",

"but when you say in the component side what do you mean?\r\na) manually inside the python code, adding `arg1 = None if arg1 == 'None' else arg1` in the pertinent functions\r\nb) automatically inside the component; in the container:command of the json. Which for py func would be something like adding a line inside the body as `locals() = {k:None if v == 'None' else v for v in locals()}` , but for components from yaml i don't know\r\nc) inside the inputs:parameters. Like the parsing that you do from str to bool (which i don't understand how it works). \r\n```\r\n\"input1\": { # string\r\n \"runtimeValue\": {\r\n \"constantValue\": {\r\n \"intValue\": \"1\"\r\n }\r\n }\r\n },```",

"Alexey is correct that `None` is not something you can pass via command-line arguments.\r\nThat said, what you get is expected because you are passing string literal `\"None\"` which overrides the default value `None`. The way to receive a Python `None` object is to not pass anything at all, then the default value will be used.\r\n\r\nTry the following:\r\n```python\r\n@component\r\ndef task1(arg1: str = None):\r\n    if arg1 is None:\r\n        print('arg1 is None')\r\n\r\n@pipeline\r\ndef pipeline():\r\n    task1()\r\n```",

"But that's precisely the problem. The \"not passing anything at all\" is not an option in a function, it's only in the entrypoint.\r\n\r\nWhat I want is setting args with default None (not 'None') in the pipeline (not the component)\r\n\r\nBasically\r\n```py\r\n@component\r\ndef task1(arg1: str = None):\r\n    if arg1 is None:\r\n        print('arg1 is None')\r\n    else:\r\n        print(f\"arg1 value = '{arg1}'\")\r\n\r\n@pipeline\r\ndef pipeline(arg1: str = None):\r\n    task1(arg1)\r\n```\r\npipeline() prints \"arg1 value = 'None'\" instead of the desired \"arg1 is None\"",

"> What I want is setting args with default None (not 'None') in the pipeline (not the component)\r\n\r\nThat's not a supported scenario. As Alexey mentioned above, `None` is a Python-specific object, whereas KFP components are language-agnostic. \r\n\r\nIn your code sample, by passing the object `None` to the `task1` component, you're assuming that `task1` is a Python program. But that's not necessarily true. Although you wrote the `task1` implementation in Python above, that doesn't mean all components are Python-based. In fact, we see quite a lot of components written as bash commands.\r\n",

"Yep, so agreed that in the pipeline arg1='None'.\r\n\r\nBut again; then, the parsing from 'None' to None, should be done (only) inside the py component? With something like `locals() = {k:None if v == 'None' else v for v in locals()} ` (option b)\r\n\r\nOr not parsing at all, and that py component should receive 'None' and be treated by the user inside the function? (option a)",

"> the parsing from 'None' to None, should be done (only) inside the py component?\r\n\r\nNo, I don't think we should ever convert `'None'` to `None`. It could be a legit user intention that they may want to pass `'None'` and consume it as a string. We don't want to be \"oversmart\".\r\n\r\n> Or not parsing at all, and that py component should receive 'None' and be treated by the user inside the function? (option a)\r\n\r\nYes, users can choose whatever they want, `'None'`, `'null'`, `'NoValue'`, etc. The system doesn't need to be aware of the user-chosen contract.\r\n\r\n",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | "2021-12-15T09:13:20" | "2022-04-17T06:27:23" | null | NONE | null | When defining a KFP pipeline with a str argument, if the value is 'None', the component receives the string 'None'.

Wouldn't it be better to parse the str 'None' to None (NoneType)?

Now:

```py
@component
def task1(arg1: str = None):
    if arg1 is None or arg1 == 'None':
        print('arg1 is None')

@pipeline
def pipeline(arg1: str = 'None'):
    task1(arg1)
```

expected:

```py
@component
def task1(arg1: str = None):
    if arg1 is None:
        print('arg1 is None')

@pipeline
def pipeline(arg1: str = 'None'):
    task1(arg1)
``` | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7064/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7064/timeline | null | null | null | null | false |
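
The thread for issue 7064 above ends with the suggestion that the component itself should map a user-chosen sentinel string to `None`. A minimal sketch of that idea follows, using plain Python functions rather than KFP decorators so it runs on its own; the helper `parse_optional` and the sentinel set `NONE_SENTINELS` are hypothetical names chosen for illustration, not KFP APIs.

```python
from typing import Optional

# Hypothetical sentinel strings; the thread leaves this contract to the user.
NONE_SENTINELS = frozenset({"None", "null", "NoValue"})


def parse_optional(value: Optional[str],
                   sentinels: frozenset = NONE_SENTINELS) -> Optional[str]:
    """Map a user-chosen sentinel string to None; pass other values through."""
    if value is None or value in sentinels:
        return None
    return value


def task1(arg1: str = "None") -> str:
    # Stand-in for a component body: the conversion happens on the component
    # side because the compiled pipeline only carries strings.
    parsed = parse_optional(arg1)
    if parsed is None:
        return "arg1 is None"
    return f"arg1 value = '{parsed}'"


print(task1("None"))
print(task1("hello"))
```

Keeping the sentinel set explicit means a pipeline that really needs the literal string `'None'` can pass a different set, which matches the concern in the thread about not converting it automatically.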

https://api.github.com/repos/kubeflow/pipelines/issues/7063 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7063/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7063/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7063/events | https://github.com/kubeflow/pipelines/issues/7063 | 1,080,606,943 | I_kwDOB-71UM5AaMDf | 7,063 | [backend] Runs triggered by jobs don't increase the Prometheus run counter | {

"login": "markwinter",

"id": 4998112,

"node_id": "MDQ6VXNlcjQ5OTgxMTI=",

"avatar_url": "https://avatars.githubusercontent.com/u/4998112?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/markwinter",

"html_url": "https://github.com/markwinter",

"followers_url": "https://api.github.com/users/markwinter/followers",

"following_url": "https://api.github.com/users/markwinter/following{/other_user}",

"gists_url": "https://api.github.com/users/markwinter/gists{/gist_id}",

"starred_url": "https://api.github.com/users/markwinter/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/markwinter/subscriptions",

"organizations_url": "https://api.github.com/users/markwinter/orgs",

"repos_url": "https://api.github.com/users/markwinter/repos",

"events_url": "https://api.github.com/users/markwinter/events{/privacy}",

"received_events_url": "https://api.github.com/users/markwinter/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

}

] | closed | false | null | [] | null | [

"Maybe using argo workflows `argo_workflows_count` is a better way to track runs"

] | "2021-12-15T06:05:41" | "2021-12-15T06:55:59" | "2021-12-15T06:39:12" | CONTRIBUTOR | null | ### What steps did you take

1. Create a recurring run (seems to be called a Job in the backend?)

2. Enable the recurring run so that runs are generated

<!-- A clear and concise description of what the bug is.-->

### What happened:

- `job_server_job_count` correctly increases when creating a recurring run

- `run_server_run_count` does not increase when a recurring run starts a run

### What did you expect to happen:

I expected `run_server_run_count` to also increase for each run started by a recurring run so that I can track the total number of runs. Currently that counter only increases for one-off runs.

### Environment:

<!-- Please fill in those that seem relevant. -->

* How do you deploy Kubeflow Pipelines (KFP)? Initially Kubeflow 1.3 but we have since updated individual components

<!-- For more information, see an overview of KFP installation options: https://www.kubeflow.org/docs/pipelines/installation/overview/. -->

* KFP version: 1.7

<!-- Specify the version of Kubeflow Pipelines that you are using. The version number appears in the left side navigation of user interface.

To find the version number, See version number shows on bottom of KFP UI left sidenav. -->

* KFP SDK version: 1.7

<!-- Specify the output of the following shell command: $pip list | grep kfp -->

### Anything else you would like to add:

<!-- Miscellaneous information that will assist in solving the issue.-->

### Labels

<!-- Please include labels below by uncommenting them to help us better triage issues -->

<!-- /area frontend -->

/area backend

<!-- /area sdk -->

<!-- /area testing -->

<!-- /area samples -->

<!-- /area components -->

---

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. We prioritise the issues with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7063/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7063/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7058 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7058/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7058/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7058/events | https://github.com/kubeflow/pipelines/issues/7058 | 1,079,202,685 | I_kwDOB-71UM5AU1N9 | 7,058 | v2 - input parameter type auto conversion | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2186355346,

"node_id": "MDU6TGFiZWwyMTg2MzU1MzQ2",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/good%20first%20issue",

"name": "good first issue",

"color": "fef2c0",

"default": true,

"description": ""

}

] | open | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"hi @Bobgy @zijianjoy, can I take this one? I'm looking for a first issue, to get familiar with the code."

] | "2021-12-14T01:20:58" | "2022-02-15T08:05:04" | null | CONTRIBUTOR | null | When a component gets input parameters, its input parameters may not exactly match the component input spec's requirements.

In such a case, we should consider adding auto type conversions for possible type mismatches.

Right now, string to other type conversions have been implemented at [code link](https://github.com/kubeflow/pipelines/blob/ca6e05591d922f6958a7681827aea25c41b94573/v2/driver/driver.go#L634-L703).
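To make the idea concrete, here is a minimal sketch of such conversions in plain Python. It is not the Go driver code linked above: `convert_param`, its `target` names, and the accepted spellings of booleans are all assumptions chosen for illustration. It covers string-to-other conversions plus one non-string case, bool to int.

```python
from typing import Union

Param = Union[str, bool, int, float]


def convert_param(value: Param, target: str) -> Param:
    """Convert `value` to the type named by `target` ('int', 'float' or 'bool').

    Hypothetical helper; raises ValueError for unsupported conversions.
    """
    if target == 'int':
        # bool must be checked before int, because bool is a subclass of int.
        if isinstance(value, bool):
            return 1 if value else 0
        if isinstance(value, int):
            return value
        if isinstance(value, str):
            return int(value)
    elif target == 'float':
        if isinstance(value, float):
            return value
        if isinstance(value, str):
            return float(value)
    elif target == 'bool':
        if isinstance(value, bool):
            return value
        if isinstance(value, str) and value.strip().lower() in ('true', 'false'):
            return value.strip().lower() == 'true'
    raise ValueError(f'cannot convert {value!r} to {target}')
```

Lossy conversions such as float to int are rejected here on purpose, since silently truncating a parameter is harder to debug than an explicit error.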

This issue tracks whether to implement other type conversions, such as bool to int. | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7058/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 1

} | https://api.github.com/repos/kubeflow/pipelines/issues/7058/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7048 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7048/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7048/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7048/events | https://github.com/kubeflow/pipelines/issues/7048 | 1,078,060,845 | I_kwDOB-71UM5AQect | 7,048 | v2 control flow - iterator (parallel for) advanced features | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | null | [] | null | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | "2021-12-13T04:07:19" | "2022-04-17T06:27:46" | null | CONTRIBUTOR | null | * [ ] P1 https://github.com/kubeflow/pipelines/issues/6161

* [ ] P2 https://github.com/kubeflow/pipelines/tree/master/samples/core/loop_parallelism | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7048/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7048/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7047 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7047/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7047/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7047/events | https://github.com/kubeflow/pipelines/issues/7047 | 1,078,027,893 | I_kwDOB-71UM5AQWZ1 | 7,047 | feat(backend): support resource requests | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2152751095,

"node_id": "MDU6TGFiZWwyMTUyNzUxMDk1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/frozen",

"name": "lifecycle/frozen",

"color": "ededed",

"default": false,

"description": null

}

] | closed | false | {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

},

{

"login": "gkcalat",

"id": 35157096,

"node_id": "MDQ6VXNlcjM1MTU3MDk2",

"avatar_url": "https://avatars.githubusercontent.com/u/35157096?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gkcalat",

"html_url": "https://github.com/gkcalat",

"followers_url": "https://api.github.com/users/gkcalat/followers",

"following_url": "https://api.github.com/users/gkcalat/following{/other_user}",

"gists_url": "https://api.github.com/users/gkcalat/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gkcalat/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gkcalat/subscriptions",

"organizations_url": "https://api.github.com/users/gkcalat/orgs",

"repos_url": "https://api.github.com/users/gkcalat/repos",

"events_url": "https://api.github.com/users/gkcalat/events{/privacy}",

"received_events_url": "https://api.github.com/users/gkcalat/received_events",

"type": "User",

"site_admin": false

}

] | {

"url": "https://api.github.com/repos/kubeflow/pipelines/milestones/11",

"html_url": "https://github.com/kubeflow/pipelines/milestone/11",

"labels_url": "https://api.github.com/repos/kubeflow/pipelines/milestones/11/labels",

"id": 9154677,

"node_id": "MI_kwDOB-71UM4Ai7B1",

"number": 11,

"title": "KFP 2.0.0-beta.3",

"description": null,

"creator": {

"login": "chensun",

"id": 2043310,

"node_id": "MDQ6VXNlcjIwNDMzMTA=",

"avatar_url": "https://avatars.githubusercontent.com/u/2043310?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chensun",

"html_url": "https://github.com/chensun",

"followers_url": "https://api.github.com/users/chensun/followers",

"following_url": "https://api.github.com/users/chensun/following{/other_user}",

"gists_url": "https://api.github.com/users/chensun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chensun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chensun/subscriptions",

"organizations_url": "https://api.github.com/users/chensun/orgs",

"repos_url": "https://api.github.com/users/chensun/repos",

"events_url": "https://api.github.com/users/chensun/events{/privacy}",

"received_events_url": "https://api.github.com/users/chensun/received_events",

"type": "User",

"site_admin": false

},

"open_issues": 0,

"closed_issues": 1,

"state": "open",

"created_at": "2023-03-13T21:27:51",

"updated_at": "2023-04-25T17:48:42",

"due_on": null,

"closed_at": null

} | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"/lifecycle frozen\r\n\r\n"

] | "2021-12-13T03:02:29" | "2023-04-10T00:51:12" | "2023-04-10T00:51:11" | CONTRIBUTOR | null | KFP pipeline spec only has resource limit fields right now, consider also adding support for request fields.

https://github.com/kubeflow/pipelines/blob/1e032f550ce23cd40bfb6827b995248537b07d08/api/v2alpha1/pipeline_spec.proto#L632-L653 | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7047/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7047/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7046 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7046/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7046/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7046/events | https://github.com/kubeflow/pipelines/issues/7046 | 1,078,027,307 | I_kwDOB-71UM5AQWQr | 7,046 | feat(v2): support dynamic resource limits set by parameters | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | null | [] | null | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | "2021-12-13T03:01:31" | "2022-04-17T07:27:22" | null | CONTRIBUTOR | null | These samples should pass:

* [ ] https://github.com/kubeflow/pipelines/blob/master/samples/core/resource_spec/runtime_resource_request.py

* [ ] https://github.com/kubeflow/pipelines/blob/master/samples/core/resource_spec/runtime_resource_request_gpu.py | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7046/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7046/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7043 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7043/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7043/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7043/events | https://github.com/kubeflow/pipelines/issues/7043 | 1,077,821,895 | I_kwDOB-71UM5APkHH | 7,043 | feat(backend): support accelerator resources | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2152751095,

"node_id": "MDU6TGFiZWwyMTUyNzUxMDk1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/frozen",

"name": "lifecycle/frozen",

"color": "ededed",

"default": false,

"description": null

}

] | closed | false | {

"login": "gkcalat",

"id": 35157096,

"node_id": "MDQ6VXNlcjM1MTU3MDk2",

"avatar_url": "https://avatars.githubusercontent.com/u/35157096?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gkcalat",

"html_url": "https://github.com/gkcalat",

"followers_url": "https://api.github.com/users/gkcalat/followers",

"following_url": "https://api.github.com/users/gkcalat/following{/other_user}",

"gists_url": "https://api.github.com/users/gkcalat/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gkcalat/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gkcalat/subscriptions",

"organizations_url": "https://api.github.com/users/gkcalat/orgs",

"repos_url": "https://api.github.com/users/gkcalat/repos",

"events_url": "https://api.github.com/users/gkcalat/events{/privacy}",

"received_events_url": "https://api.github.com/users/gkcalat/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "gkcalat",

"id": 35157096,

"node_id": "MDQ6VXNlcjM1MTU3MDk2",

"avatar_url": "https://avatars.githubusercontent.com/u/35157096?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gkcalat",

"html_url": "https://github.com/gkcalat",

"followers_url": "https://api.github.com/users/gkcalat/followers",

"following_url": "https://api.github.com/users/gkcalat/following{/other_user}",

"gists_url": "https://api.github.com/users/gkcalat/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gkcalat/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gkcalat/subscriptions",

"organizations_url": "https://api.github.com/users/gkcalat/orgs",

"repos_url": "https://api.github.com/users/gkcalat/repos",

"events_url": "https://api.github.com/users/gkcalat/events{/privacy}",

"received_events_url": "https://api.github.com/users/gkcalat/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"/lifecycle frozen"

] | "2021-12-12T14:34:37" | "2023-03-07T01:22:14" | "2023-03-07T01:22:14" | CONTRIBUTOR | null | null | {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7043/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7043/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7040 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7040/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7040/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7040/events | https://github.com/kubeflow/pipelines/issues/7040 | 1,077,273,630 | I_kwDOB-71UM5ANeQe | 7,040 | [bug] Idempotency in kubeflow pipeline sagemaker component. | {

"login": "goswamig",

"id": 3092152,

"node_id": "MDQ6VXNlcjMwOTIxNTI=",

"avatar_url": "https://avatars.githubusercontent.com/u/3092152?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/goswamig",

"html_url": "https://github.com/goswamig",

"followers_url": "https://api.github.com/users/goswamig/followers",

"following_url": "https://api.github.com/users/goswamig/following{/other_user}",

"gists_url": "https://api.github.com/users/goswamig/gists{/gist_id}",

"starred_url": "https://api.github.com/users/goswamig/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/goswamig/subscriptions",

"organizations_url": "https://api.github.com/users/goswamig/orgs",

"repos_url": "https://api.github.com/users/goswamig/repos",

"events_url": "https://api.github.com/users/goswamig/events{/privacy}",

"received_events_url": "https://api.github.com/users/goswamig/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

},

{

"id": 2415263031,

"node_id": "MDU6TGFiZWwyNDE1MjYzMDMx",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/components/aws/sagemaker",

"name": "area/components/aws/sagemaker",

"color": "0263f4",

"default": false,

"description": "AWS SageMaker components"

}

] | open | false | null | [] | null | [

"@akartsky @surajkota @mbaijal @ryansteakley FYI.",

"https://github.com/kubeflow/pipelines/issues/6465",

"/area components/aws/sagemaker",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | "2021-12-10T22:14:18" | "2022-04-28T04:59:41" | null | CONTRIBUTOR | null | ### What steps did you take

If a node scales up or down, the SageMaker component tries to create the same job again, which fails because SageMaker does not allow creating a job with a name that already exists. The component controller should be able to detect this and resume the job from its existing state.

### What happened:

The job hangs or fails.

### What did you expect to happen:

I expect the job to resume from its previous state.
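The expected behavior could look roughly like the sketch below. It uses an in-memory stand-in rather than the real SageMaker API: `FakeJobService`, `create_or_resume`, and the `'InProgress'` status string are hypothetical and only illustrate the "look up before create" pattern.

```python
from typing import Dict, Optional, Tuple


class FakeJobService:
    """In-memory stand-in for a job service that rejects duplicate job names."""

    def __init__(self) -> None:
        self._jobs: Dict[str, str] = {}

    def create_job(self, name: str) -> str:
        # Mirrors the failure described above: the same name cannot be reused.
        if name in self._jobs:
            raise ValueError(f'job {name!r} already exists')
        self._jobs[name] = 'InProgress'
        return self._jobs[name]

    def describe_job(self, name: str) -> Optional[str]:
        # Returns the job status, or None if the job is unknown.
        return self._jobs.get(name)


def create_or_resume(service: FakeJobService, name: str) -> Tuple[str, str]:
    """Create the job if it does not exist; otherwise attach to the existing one."""
    status = service.describe_job(name)
    if status is not None:
        return ('resumed', status)
    return ('created', service.create_job(name))
```

With this shape, a component pod that is rescheduled after a node scale event calls `create_or_resume` again and picks up the existing job instead of failing on the duplicate name.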

### Environment:

kfp-1.6

<!-- Don't delete message below to encourage users to support your issue! -->

Impacted by this bug? Give it a 👍. We prioritise the issues with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7040/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7040/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7029 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7029/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7029/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7029/events | https://github.com/kubeflow/pipelines/issues/7029 | 1,075,415,737 | I_kwDOB-71UM5AGYq5 | 7,029 | [feature] upgrade MLMD to 1.5.0 | {

"login": "Bobgy",

"id": 4957653,

"node_id": "MDQ6VXNlcjQ5NTc2NTM=",

"avatar_url": "https://avatars.githubusercontent.com/u/4957653?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Bobgy",

"html_url": "https://github.com/Bobgy",

"followers_url": "https://api.github.com/users/Bobgy/followers",

"following_url": "https://api.github.com/users/Bobgy/following{/other_user}",

"gists_url": "https://api.github.com/users/Bobgy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Bobgy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Bobgy/subscriptions",

"organizations_url": "https://api.github.com/users/Bobgy/orgs",

"repos_url": "https://api.github.com/users/Bobgy/repos",

"events_url": "https://api.github.com/users/Bobgy/events{/privacy}",

"received_events_url": "https://api.github.com/users/Bobgy/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | closed | false | {

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "zijianjoy",

"id": 37026441,

"node_id": "MDQ6VXNlcjM3MDI2NDQx",

"avatar_url": "https://avatars.githubusercontent.com/u/37026441?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zijianjoy",

"html_url": "https://github.com/zijianjoy",

"followers_url": "https://api.github.com/users/zijianjoy/followers",

"following_url": "https://api.github.com/users/zijianjoy/following{/other_user}",

"gists_url": "https://api.github.com/users/zijianjoy/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zijianjoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zijianjoy/subscriptions",

"organizations_url": "https://api.github.com/users/zijianjoy/orgs",

"repos_url": "https://api.github.com/users/zijianjoy/repos",

"events_url": "https://api.github.com/users/zijianjoy/events{/privacy}",

"received_events_url": "https://api.github.com/users/zijianjoy/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"/assign @zijianjoy \r\n/cc @haoxins ",

"All clients for MLMD have been updated."

] | "2021-12-09T10:36:31" | "2022-01-01T19:26:12" | "2022-01-01T19:26:12" | CONTRIBUTOR | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

<!-- /area frontend -->

/area backend

<!-- /area sdk -->

<!-- /area samples -->

<!-- /area components -->

instructions: https://github.com/kubeflow/pipelines/tree/master/third_party/ml-metadata

Tasks

* [x] #6996

* [x] regenerate golang client

* [x] regenerate frontend client

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7029/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7029/timeline | null | completed | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7028 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7028/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7028/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7028/events | https://github.com/kubeflow/pipelines/issues/7028 | 1,075,363,752 | I_kwDOB-71UM5AGL-o | 7,028 | [feature] Make func_to_container_op functions ready for autogenerated docs | {

"login": "hahamark1",

"id": 12664815,

"node_id": "MDQ6VXNlcjEyNjY0ODE1",

"avatar_url": "https://avatars.githubusercontent.com/u/12664815?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/hahamark1",

"html_url": "https://github.com/hahamark1",

"followers_url": "https://api.github.com/users/hahamark1/followers",

"following_url": "https://api.github.com/users/hahamark1/following{/other_user}",

"gists_url": "https://api.github.com/users/hahamark1/gists{/gist_id}",

"starred_url": "https://api.github.com/users/hahamark1/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/hahamark1/subscriptions",

"organizations_url": "https://api.github.com/users/hahamark1/orgs",

"repos_url": "https://api.github.com/users/hahamark1/repos",

"events_url": "https://api.github.com/users/hahamark1/events{/privacy}",

"received_events_url": "https://api.github.com/users/hahamark1/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1118896905,

"node_id": "MDU6TGFiZWwxMTE4ODk2OTA1",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/backend",

"name": "area/backend",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 1289588140,

"node_id": "MDU6TGFiZWwxMjg5NTg4MTQw",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/feature",

"name": "kind/feature",

"color": "2515fc",

"default": false,

"description": ""

}

] | open | false | null | [] | null | [

"cc @chensun ",

"Hi @hahamark1, \r\n\r\n`func_to_container_op` function is actually deprecated. To generate the docstrings, we need some amount of work to generate kfp-specific docstrings. We will work on this in the future. Thanks!",

"Hi @ji-yaqi , came across this and was wondering whether there is a way users could find out what are the deprecated aspects of v1 SDK from documentation/library itself. Would you be amenable to a PR that adds docstring updates/deprecation warnings to this function/other deprecated functions?"

] | "2021-12-09T09:44:38" | "2022-02-17T20:26:00" | null | NONE | null | ### Feature Area

<!-- Uncomment the labels below which are relevant to this feature: -->

<!-- /area frontend -->

/area backend

<!-- /area sdk -->

<!-- /area samples -->

<!-- /area components -->

### What feature would you like to see?

Currently, the function wrapper (`func_to_container_op`) does not copy the docstring of the wrapped function to the new wrapper object. As a result, autogenerated docs cannot pick up Kubeflow components.
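
To illustrate the problem in isolation, here is a minimal sketch that does not use kfp at all. `make_task_factory` and `make_documented_task_factory` are hypothetical stand-ins for a wrapper such as `func_to_container_op`; they only mimic the "return a new callable" behaviour and are not the SDK's actual implementation. The sketch shows that a plain wrapper loses the docstring, while `functools.update_wrapper` carries `__name__` and `__doc__` over.

```python
import functools


def make_task_factory(func):
    """Hypothetical stand-in for a wrapper that returns a new callable."""

    def task_factory(*args, **kwargs):
        return func(*args, **kwargs)

    # Nothing is copied from func, so the wrapper keeps its own metadata.
    return task_factory


def make_documented_task_factory(func):
    """Same hypothetical wrapper, but preserving the original metadata."""

    def task_factory(*args, **kwargs):
        return func(*args, **kwargs)

    # Copies __name__, __doc__, __module__ and related attributes from func.
    functools.update_wrapper(task_factory, func)
    return task_factory


def add(a: float, b: float) -> float:
    """Return the sum of a and b."""
    return a + b


plain = make_task_factory(add)
documented = make_documented_task_factory(add)

print(plain.__name__, plain.__doc__)
print(documented.__name__, documented.__doc__)
```

Documentation generators that rely on `__doc__` would see nothing for `plain`, but would see the original docstring for `documented`.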

### What is the use case or pain point?

It would drastically reduce the time we spend writing docs.

### Is there a workaround currently?

Currently, we write our docs by hand.

### How to solve it?

By adding the following to the function `func_to_container_op` in `components._python_op.py`:

```

task_factory = _create_task_factory_from_component_spec(component_spec)

task_factory.__name__ = func.__name__

task_factory.__doc__ = func.__doc__

return task_factory

```

---

<!-- Don't delete message below to encourage users to support your feature request! -->

Love this idea? Give it a 👍. We prioritize fulfilling features with the most 👍.

| {

"url": "https://api.github.com/repos/kubeflow/pipelines/issues/7028/reactions",

"total_count": 3,

"+1": 3,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/kubeflow/pipelines/issues/7028/timeline | null | null | null | null | false |

https://api.github.com/repos/kubeflow/pipelines/issues/7023 | https://api.github.com/repos/kubeflow/pipelines | https://api.github.com/repos/kubeflow/pipelines/issues/7023/labels{/name} | https://api.github.com/repos/kubeflow/pipelines/issues/7023/comments | https://api.github.com/repos/kubeflow/pipelines/issues/7023/events | https://github.com/kubeflow/pipelines/issues/7023 | 1,074,912,007 | I_kwDOB-71UM5AEdsH | 7,023 | [sdk] Wrong IPython url links | {

"login": "juliadeclared",

"id": 71798184,

"node_id": "MDQ6VXNlcjcxNzk4MTg0",

"avatar_url": "https://avatars.githubusercontent.com/u/71798184?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/juliadeclared",

"html_url": "https://github.com/juliadeclared",

"followers_url": "https://api.github.com/users/juliadeclared/followers",

"following_url": "https://api.github.com/users/juliadeclared/following{/other_user}",

"gists_url": "https://api.github.com/users/juliadeclared/gists{/gist_id}",

"starred_url": "https://api.github.com/users/juliadeclared/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/juliadeclared/subscriptions",

"organizations_url": "https://api.github.com/users/juliadeclared/orgs",

"repos_url": "https://api.github.com/users/juliadeclared/repos",

"events_url": "https://api.github.com/users/juliadeclared/events{/privacy}",

"received_events_url": "https://api.github.com/users/juliadeclared/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1073153908,

"node_id": "MDU6TGFiZWwxMDczMTUzOTA4",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/kind/bug",

"name": "kind/bug",

"color": "fc2515",

"default": false,

"description": ""

},

{

"id": 1136110037,

"node_id": "MDU6TGFiZWwxMTM2MTEwMDM3",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/area/sdk",

"name": "area/sdk",

"color": "d2b48c",

"default": false,

"description": ""

},

{

"id": 2157634204,

"node_id": "MDU6TGFiZWwyMTU3NjM0MjA0",

"url": "https://api.github.com/repos/kubeflow/pipelines/labels/lifecycle/stale",

"name": "lifecycle/stale",

"color": "bbbbbb",

"default": false,

"description": "The issue / pull request is stale, any activities remove this label."

}

] | open | false | null | [] | null | [

"cc @chensun ",

"Hi @juliadeclared I saw you closed the PR. Is the issue still valid? \r\n",

"@chensun yes, the issue is still valid. For KF installations, the generated URI should include domain/_/pipeline since this is how it's mapped for a full KF installation.\r\n\r\nMaybe this can be a small doc update or something similar. But it was quite tricky for us to debug.",

"> id. For KF installations, the generated URI should include domain/_/pipeline since this is how its mapped for KF full installation.\r\n\r\nI see. yes, I recall the URL for standalone KFP deployment and full fledge KF deployment are different. And currently we don't have a way to tell from the SDK client side, so we can only return one form of the URL. \r\nYou're probably right that this might need a doc update. Any suggestion where the change could be? Please feel free to create doc update PRs as well. Thanks!",

"@chensun here is the doc update PR: https://github.com/kubeflow/website/pull/3105",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | "2021-12-08T22:49:30" | "2022-04-17T06:27:47" | null | NONE | null | ### Environment

Running on a full KF 1.4 installation on top of GKE.

* KFP version: 1.7.1

<!-- For more information, see an overview of KFP installation options: https://www.kubeflow.org/docs/pipelines/installation/overview/. -->

* KFP SDK version: 1.6.0

<!-- Specify the version of Kubeflow Pipelines that you are using. The version number appears in the left side navigation of the user interface.

To find the version number, look at the bottom of the left side navigation in the KFP UI. -->

* All dependencies version:

<!-- Specify the output of the following shell command: $pip list | grep kfp -->

kfp 1.6.0

kfp-pipeline-spec 0.1.7

kfp-server-api 1.6.0

### Steps to reproduce

<!--

Specify how to reproduce the problem.

This may include information such as: a description of the process, code snippets, log output, or screenshots.

-->

Create an experiment with `kfp.Client.create_experiment()` in a notebook.

Click the generated link in IPython.

### Expected result

<!-- What should the correct behavior be? -->