datasetId

stringlengths 2

81

| card

stringlengths 20

977k

|

|---|---|

teragron/reviews | ---

license: mit

language:

- en

tags:

- finance

pretty_name: review_me

size_categories:

- 1M<n<10M

task_categories:

- text-generation

---

Following packages are necessary to compile the model in C:

```bash

sudo apt install gcc-7

```

```bash

sudo apt-get install build-essential

```

```python

for i in range(1,21):

!wget https://huggingface.co/datasets/teragron/reviews/resolve/main/chunk_{i}.bin

```

```bash

git clone https://github.com/karpathy/llama2.c.git

```

```bash

cd llama2.c

```

```bash

pip install -r requirements.txt

```

Path: data/TinyStories_all_data |

tahrirchi/uz-books | ---

configs:

- config_name: default

data_files:

- split: original

path: data/original-*

- split: lat

path: data/lat-*

dataset_info:

features:

- name: text

dtype: string

splits:

- name: original

num_bytes: 19244856855

num_examples: 39712

- name: lat

num_bytes: 13705512346

num_examples: 39712

download_size: 16984559355

dataset_size: 32950369201

annotations_creators:

- no-annotation

task_categories:

- text-generation

- fill-mask

task_ids:

- language-modeling

- masked-language-modeling

multilinguality:

- monolingual

language:

- uz

size_categories:

- 10M<n<100M

pretty_name: UzBooks

license: apache-2.0

tags:

- uz

- books

---

# Dataset Card for BookCorpus

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [https://tahrirchi.uz/grammatika-tekshiruvi](https://tahrirchi.uz/grammatika-tekshiruvi)

- **Repository:** [More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

- **Paper:** [More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

- **Point of Contact:** [More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

- **Size of downloaded dataset files:** 16.98 GB

- **Size of the generated dataset:** 32.95 GB

- **Total amount of disk used:** 49.93 GB

### Dataset Summary

In an effort to democratize research on low-resource languages, we release UzBooks dataset, a cleaned book corpus consisting of nearly 40000 books in Uzbek Language divided into two branches: "original" and "lat," representing the OCRed (Latin and Cyrillic) and fully Latin versions of the texts, respectively.

Please refer to our [blogpost](https://tahrirchi.uz/grammatika-tekshiruvi) and paper (Coming soon!) for further details.

To load and use dataset, run this script:

```python

from datasets import load_dataset

uz_books=load_dataset("tahrirchi/uz-books")

```

## Dataset Structure

### Data Instances

#### plain_text

- **Size of downloaded dataset files:** 16.98 GB

- **Size of the generated dataset:** 32.95 GB

- **Total amount of disk used:** 49.93 GB

An example of 'train' looks as follows.

```

{

"text": "Hamsa\nAlisher Navoiy ..."

}

```

### Data Fields

The data fields are the same among all splits.

#### plain_text

- `text`: a `string` feature that contains text of the books.

### Data Splits

| name | |

|-----------------|--------:|

| original | 39712 |

| lat | 39712 |

## Dataset Creation

The books have been crawled from various internet sources and preprocessed using Optical Character Recognition techniques in [Tesseract OCR Engine](https://github.com/tesseract-ocr/tesseract). The latin version is created by converting the original dataset with highly curated scripts in order to put more emphasis on the research and development of the field.

## Citation

Please cite this model using the following format:

```

@online{Mamasaidov2023UzBooks,

author = {Mukhammadsaid Mamasaidov and Abror Shopulatov},

title = {UzBooks dataset},

year = {2023},

url = {https://huggingface.co/datasets/tahrirchi/uz-books},

note = {Accessed: 2023-10-28}, % change this date

urldate = {2023-10-28} % change this date

}

```

## Gratitude

We are thankful to these awesome organizations and people for helping to make it happen:

- [Ilya Gusev](https://github.com/IlyaGusev/): for advise throughout the process

- [David Dale](https://daviddale.ru): for advise throughout the process

## Contacts

We believe that this work will enable and inspire all enthusiasts around the world to open the hidden beauty of low-resource languages, in particular Uzbek.

For further development and issues about the dataset, please use m.mamasaidov@tahrirchi.uz or a.shopolatov@tahrirchi.uz to contact. |

ubaada/booksum-complete-cleaned | ---

task_categories:

- summarization

- text-generation

language:

- en

pretty_name: BookSum Summarization Dataset Clean

size_categories:

- 1K<n<10K

configs:

- config_name: books

data_files:

- split: train

path: "books/train.jsonl"

- split: test

path: "books/test.jsonl"

- split: validation

path: "books/val.jsonl"

- config_name: chapters

data_files:

- split: train

path: "chapters/train.jsonl"

- split: test

path: "chapters/test.jsonl"

- split: validation

path: "chapters/val.jsonl"

---

# Table of Contents

1. [Description](#description)

2. [Usage](#usage)

3. [Distribution](#distribution)

- [Chapters Dataset](#chapters-dataset)

- [Books Dataset](#books-dataset)

4. [Structure](#structure)

5. [Results and Comparison with kmfoda/booksum](#results-and-comparison-with-kmfodabooksum)

# Description:

This repository contains the Booksum dataset introduced in the paper [BookSum: A Collection of Datasets for Long-form Narrative Summarization

](https://arxiv.org/abs/2105.08209).

This dataset includes both book and chapter summaries from the BookSum dataset (unlike the kmfoda/booksum one which only contains the chapter dataset). Some mismatched summaries have been corrected. Uneccessary columns has been discarded. Contains minimal text-to-summary rows. As there are multiple summaries for a given text, each row contains an array of summaries.

# Usage

Note: Make sure you have [>2.14.0 version of "datasets" library](https://github.com/huggingface/datasets/releases/tag/2.14.0) installed to load the dataset successfully.

```

from datasets import load_dataset

book_data = load_dataset("ubaada/booksum-complete-cleaned", "books")

chapter_data = load_dataset("ubaada/booksum-complete-cleaned", "chapters")

# Print the 1st book

print(book_data["train"][0]['text'])

# Print the summary of the 1st book

print(book_data["train"][0]['summary'][0]['text'])

```

# Distribution

<div style="display: inline-block; vertical-align: top; width: 45%;">

## Chapters Dataset

| Split | Total Sum. | Missing Sum. | Successfully Processed | Chapters |

|---------|------------|--------------|------------------------|------|

| Train | 9712 | 178 | 9534 (98.17%) | 5653 |

| Test | 1432 | 0 | 1432 (100.0%) | 950 |

| Val | 1485 | 0 | 1485 (100.0%) | 854 |

</div>

<div style="display: inline-block; vertical-align: top; width: 45%; margin-left: 5%;">

## Books Dataset

| Split | Total Sum. | Missing Sum. | Successfully Processed | Books |

|---------|------------|--------------|------------------------|------|

| Train | 314 | 0 | 314 (100.0%) | 151 |

| Test | 46 | 0 | 46 (100.0%) | 17 |

| Val | 45 | 0 | 45 (100.0%) | 19 |

</div>

# Structure:

```

Chapters Dataset

0 - bid (book id)

1 - book_title

2 - chapter_id

3 - text (raw chapter text)

4 - summary (list of summaries from different sources)

- {source, text (summary), analysis}

...

5 - is_aggregate (bool) (if true, then the text contains more than one chapter)

Books Dataset:

0 - bid (book id)

1 - title

2 - text (raw text)

4 - summary (list of summaries from different sources)

- {source, text (summary), analysis}

...

```

# Reults and Comparison with kmfoda/booksum

Tested on the 'test' split of chapter sub-dataset. There are slight improvement on R1/R2 scores compared to another BookSum repo likely due to the work done on cleaning the misalignments in the alignment file. In the plot for this dataset, first summary \[0\] is chosen for each chapter. If best reference summary is chosen from the list for each chapter, theere are further improvements but are not shown here for fairness.

|

p1atdev/open2ch | ---

language:

- ja

license: apache-2.0

size_categories:

- 1M<n<10M

task_categories:

- text-generation

- text2text-generation

dataset_info:

- config_name: all-corpus

features:

- name: dialogue

sequence:

- name: speaker

dtype: int8

- name: content

dtype: string

- name: board

dtype: string

splits:

- name: train

num_bytes: 1693355620

num_examples: 8134707

download_size: 868453263

dataset_size: 1693355620

- config_name: all-corpus-cleaned

features:

- name: dialogue

sequence:

- name: speaker

dtype: int8

- name: content

dtype: string

- name: board

dtype: string

splits:

- name: train

num_bytes: 1199092499

num_examples: 6192730

download_size: 615570076

dataset_size: 1199092499

- config_name: livejupiter

features:

- name: dialogue

sequence:

- name: speaker

dtype: int8

- name: content

dtype: string

splits:

- name: train

num_bytes: 1101433134

num_examples: 5943594

download_size: 592924274

dataset_size: 1101433134

- config_name: livejupiter-cleaned

features:

- name: dialogue

sequence:

- name: speaker

dtype: int8

- name: content

dtype: string

splits:

- name: train

num_bytes: 807499499

num_examples: 4650253

download_size: 437414714

dataset_size: 807499499

- config_name: news4vip

features:

- name: dialogue

sequence:

- name: speaker

dtype: int8

- name: content

dtype: string

splits:

- name: train

num_bytes: 420403926

num_examples: 1973817

download_size: 240974172

dataset_size: 420403926

- config_name: news4vip-cleaned

features:

- name: dialogue

sequence:

- name: speaker

dtype: int8

- name: content

dtype: string

splits:

- name: train

num_bytes: 269941607

num_examples: 1402903

download_size: 156934128

dataset_size: 269941607

- config_name: newsplus

features:

- name: dialogue

sequence:

- name: speaker

dtype: int8

- name: content

dtype: string

splits:

- name: train

num_bytes: 56071294

num_examples: 217296

download_size: 32368053

dataset_size: 56071294

- config_name: newsplus-cleaned

features:

- name: dialogue

sequence:

- name: speaker

dtype: int8

- name: content

dtype: string

splits:

- name: train

num_bytes: 33387874

num_examples: 139574

download_size: 19556120

dataset_size: 33387874

- config_name: ranking

features:

- name: dialogue

sequence:

- name: speaker

dtype: int8

- name: content

dtype: string

- name: next

struct:

- name: speaker

dtype: int8

- name: content

dtype: string

- name: random

sequence: string

splits:

- name: train

num_bytes: 1605628

num_examples: 2000

- name: test

num_bytes: 1604356

num_examples: 1953

download_size: 2127033

dataset_size: 3209984

configs:

- config_name: all-corpus

data_files:

- split: train

path: all-corpus/train-*

- config_name: all-corpus-cleaned

data_files:

- split: train

path: all-corpus-cleaned/train-*

- config_name: livejupiter

data_files:

- split: train

path: livejupiter/train-*

- config_name: livejupiter-cleaned

data_files:

- split: train

path: livejupiter-cleaned/train-*

- config_name: news4vip

data_files:

- split: train

path: news4vip/train-*

- config_name: news4vip-cleaned

data_files:

- split: train

path: news4vip-cleaned/train-*

- config_name: newsplus

data_files:

- split: train

path: newsplus/train-*

- config_name: newsplus-cleaned

data_files:

- split: train

path: newsplus-cleaned/train-*

- config_name: ranking

data_files:

- split: train

path: ranking/train-*

- split: test

path: ranking/test-*

tags:

- not-for-all-audiences

---

# おーぷん2ちゃんねる対話コーパス

## Dataset Details

### Dataset Description

[おーぷん2ちゃんねる対話コーパス](https://github.com/1never/open2ch-dialogue-corpus) を Huggingface Datasets 向けに変換したものになります。

- **Curated by:** [More Information Needed]

- **Language:** Japanese

- **License:** Apache-2.0

### Dataset Sources

- **Repository:** https://github.com/1never/open2ch-dialogue-corpus

## Dataset Structure

- `all-corpus`: `livejupiter`, `news4vip`, `newsplus` サブセットを連結したもの

- `dialogue`: 対話データ (`list[dict]`)

- `speaker`: 話者番号。`1` または `2`。

- `content`: 発言内容

- `board`: 連結元のサブセット名

- `livejupiter`: オリジナルのデータセットでの `livejupiter.tsv` から変換されたデータ。

- `dialogue`: 対話データ (`list[dict]`)

- `speaker`: 話者番号。`1` または `2`。

- `content`: 発言内容

- `news4vip`: オリジナルのデータセットでの `news4vip.tsv` から変換されたデータ。

- 構造は同上

- `newsplus`: オリジナルのデータセットでの `newsplus.tsv` から変換されたデータ。

- 構造は同上

- `ranking`: 応答順位付けタスク用データ (オリジナルデータセットでの `ranking.zip`)

- `train` と `test` split があり、それぞれはオリジナルデータセットの `dev.tsv` と `test.tsv` に対応します。

- `dialogue`: 対話データ (`list[dict]`)

- `speaker`: 話者番号。`1` または `2`。

- `content`: 発言内容

- `next`: 対話の次に続く正解の応答 (`dict`)

- `speaker`: 話者番号。`1` または `2`

- `content`: 発言内容

- `random`: ランダムに選ばれた応答 9 個 (`list[str]`)

また、`all-corpus`, `livejupiter`, `news4vip`, `newsplus` にはそれぞれ名前に `-cleaned` が付与されたバージョンがあり、これらのサブセットではオリジナルのデータセットで配布されていた NG ワードリストを利用してフィルタリングされたものです。

オリジナルのデータセットでは各発言内の改行は `__BR__` に置換されていますが、このデータセットではすべて `\n` に置き換えられています。

## Dataset Creation

### Source Data

(オリジナルのデータセットの説明より)

> おーぷん2ちゃんねるの「なんでも実況(ジュピター)」「ニュー速VIP」「ニュース速報+」の3つの掲示板をクロールして作成した対話コーパスです. おーぷん2ちゃんねる開設時から2019年7月20日までのデータを使用して作成しました.

#### Data Collection and Processing

[オリジナルのデータセット](https://github.com/1never/open2ch-dialogue-corpus) を参照。

#### Personal and Sensitive Information

`-cleaned` ではないサブセットでは、非常に不適切な表現が多いため注意が必要です。

## Usage

```py

from datasets import load_dataset

ds = load_dataset(

"p1atdev/open2ch",

name="all-corpus",

)

print(ds)

print(ds["train"][0])

# DatasetDict({

# train: Dataset({

# features: ['dialogue', 'board'],

# num_rows: 8134707

# })

# })

# {'dialogue': {'speaker': [1, 2], 'content': ['実況スレをたてる', 'おんj民の鑑']}, 'board': 'livejupiter'}

``` |

open-llm-leaderboard/details_01-ai__Yi-34B | ---

pretty_name: Evaluation run of 01-ai/Yi-34B

dataset_summary: "Dataset automatically created during the evaluation run of model\

\ [01-ai/Yi-34B](https://huggingface.co/01-ai/Yi-34B) on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard).\n\

\nThe dataset is composed of 3 configuration, each one coresponding to one of the\

\ evaluated task.\n\nThe dataset has been created from 1 run(s). Each run can be\

\ found as a specific split in each configuration, the split being named using the\

\ timestamp of the run.The \"train\" split is always pointing to the latest results.\n\

\nAn additional configuration \"results\" store all the aggregated results of the\

\ run (and is used to compute and display the aggregated metrics on the [Open LLM\

\ Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)).\n\

\nTo load the details from a run, you can for instance do the following:\n```python\n\

from datasets import load_dataset\ndata = load_dataset(\"open-llm-leaderboard/details_01-ai__Yi-34B_public\"\

,\n\t\"harness_winogrande_5\",\n\tsplit=\"train\")\n```\n\n## Latest results\n\n\

These are the [latest results from run 2023-11-08T19:46:38.378007](https://huggingface.co/datasets/open-llm-leaderboard/details_01-ai__Yi-34B_public/blob/main/results_2023-11-08T19-46-38.378007.json)(note\

\ that their might be results for other tasks in the repos if successive evals didn't\

\ cover the same tasks. You find each in the results and the \"latest\" split for\

\ each eval):\n\n```python\n{\n \"all\": {\n \"em\": 0.6081166107382551,\n\

\ \"em_stderr\": 0.004999326629880105,\n \"f1\": 0.6419882550335565,\n\

\ \"f1_stderr\": 0.004748239351156368,\n \"acc\": 0.6683760448499347,\n\

\ \"acc_stderr\": 0.012160441706531726\n },\n \"harness|drop|3\": {\n\

\ \"em\": 0.6081166107382551,\n \"em_stderr\": 0.004999326629880105,\n\

\ \"f1\": 0.6419882550335565,\n \"f1_stderr\": 0.004748239351156368\n\

\ },\n \"harness|gsm8k|5\": {\n \"acc\": 0.5064442759666414,\n \

\ \"acc_stderr\": 0.013771340765699767\n },\n \"harness|winogrande|5\"\

: {\n \"acc\": 0.8303078137332282,\n \"acc_stderr\": 0.010549542647363686\n\

\ }\n}\n```"

repo_url: https://huggingface.co/01-ai/Yi-34B

leaderboard_url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

point_of_contact: clementine@hf.co

configs:

- config_name: harness_drop_3

data_files:

- split: 2023_11_08T19_46_38.378007

path:

- '**/details_harness|drop|3_2023-11-08T19-46-38.378007.parquet'

- split: latest

path:

- '**/details_harness|drop|3_2023-11-08T19-46-38.378007.parquet'

- config_name: harness_gsm8k_5

data_files:

- split: 2023_11_08T19_46_38.378007

path:

- '**/details_harness|gsm8k|5_2023-11-08T19-46-38.378007.parquet'

- split: latest

path:

- '**/details_harness|gsm8k|5_2023-11-08T19-46-38.378007.parquet'

- config_name: harness_winogrande_5

data_files:

- split: 2023_11_08T19_46_38.378007

path:

- '**/details_harness|winogrande|5_2023-11-08T19-46-38.378007.parquet'

- split: latest

path:

- '**/details_harness|winogrande|5_2023-11-08T19-46-38.378007.parquet'

- config_name: results

data_files:

- split: 2023_11_08T19_46_38.378007

path:

- results_2023-11-08T19-46-38.378007.parquet

- split: latest

path:

- results_2023-11-08T19-46-38.378007.parquet

---

# Dataset Card for Evaluation run of 01-ai/Yi-34B

## Dataset Description

- **Homepage:**

- **Repository:** https://huggingface.co/01-ai/Yi-34B

- **Paper:**

- **Leaderboard:** https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

- **Point of Contact:** clementine@hf.co

### Dataset Summary

Dataset automatically created during the evaluation run of model [01-ai/Yi-34B](https://huggingface.co/01-ai/Yi-34B) on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard).

The dataset is composed of 3 configuration, each one coresponding to one of the evaluated task.

The dataset has been created from 1 run(s). Each run can be found as a specific split in each configuration, the split being named using the timestamp of the run.The "train" split is always pointing to the latest results.

An additional configuration "results" store all the aggregated results of the run (and is used to compute and display the aggregated metrics on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)).

To load the details from a run, you can for instance do the following:

```python

from datasets import load_dataset

data = load_dataset("open-llm-leaderboard/details_01-ai__Yi-34B_public",

"harness_winogrande_5",

split="train")

```

## Latest results

These are the [latest results from run 2023-11-08T19:46:38.378007](https://huggingface.co/datasets/open-llm-leaderboard/details_01-ai__Yi-34B_public/blob/main/results_2023-11-08T19-46-38.378007.json)(note that their might be results for other tasks in the repos if successive evals didn't cover the same tasks. You find each in the results and the "latest" split for each eval):

```python

{

"all": {

"em": 0.6081166107382551,

"em_stderr": 0.004999326629880105,

"f1": 0.6419882550335565,

"f1_stderr": 0.004748239351156368,

"acc": 0.6683760448499347,

"acc_stderr": 0.012160441706531726

},

"harness|drop|3": {

"em": 0.6081166107382551,

"em_stderr": 0.004999326629880105,

"f1": 0.6419882550335565,

"f1_stderr": 0.004748239351156368

},

"harness|gsm8k|5": {

"acc": 0.5064442759666414,

"acc_stderr": 0.013771340765699767

},

"harness|winogrande|5": {

"acc": 0.8303078137332282,

"acc_stderr": 0.010549542647363686

}

}

```

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

[More Information Needed]

## Dataset Structure

### Data Instances

[More Information Needed]

### Data Fields

[More Information Needed]

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

[More Information Needed]

### Contributions

[More Information Needed] |

matheusrdgsf/re_dial_ptbr | ---

dataset_info:

features:

- name: conversationId

dtype: int32

- name: messages

list:

- name: messageId

dtype: int64

- name: senderWorkerId

dtype: int64

- name: text

dtype: string

- name: timeOffset

dtype: int64

- name: messages_translated

list:

- name: messageId

dtype: int64

- name: senderWorkerId

dtype: int64

- name: text

dtype: string

- name: timeOffset

dtype: int64

- name: movieMentions

list:

- name: movieId

dtype: string

- name: movieName

dtype: string

- name: respondentQuestions

list:

- name: liked

dtype: int64

- name: movieId

dtype: string

- name: seen

dtype: int64

- name: suggested

dtype: int64

- name: respondentWorkerId

dtype: int32

- name: initiatorWorkerId

dtype: int32

- name: initiatorQuestions

list:

- name: liked

dtype: int64

- name: movieId

dtype: string

- name: seen

dtype: int64

- name: suggested

dtype: int64

splits:

- name: train

num_bytes: 26389658

num_examples: 9005

- name: test

num_bytes: 3755474

num_examples: 1342

download_size: 11072939

dataset_size: 30145132

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

license: mit

task_categories:

- text-classification

- text2text-generation

- conversational

- translation

language:

- pt

- en

tags:

- conversational recommendation

- recommendation

- conversational

pretty_name: ReDial (Recommendation Dialogues) PTBR

size_categories:

- 10K<n<100K

---

# Dataset Card for ReDial - PTBR

- **Original dataset:** [Redial Huggingface](https://huggingface.co/datasets/re_dial)

- **Homepage:** [ReDial Dataset](https://redialdata.github.io/website/)

- **Repository:** [ReDialData](https://github.com/ReDialData/website/tree/data)

- **Paper:** [Towards Deep Conversational Recommendations](https://proceedings.neurips.cc/paper/2018/file/800de15c79c8d840f4e78d3af937d4d4-Paper.pdf)

### Dataset Summary

The ReDial (Recommendation Dialogues) PTBR dataset is an annotated collection of dialogues where users recommend movies to each other translated to brazilian portuguese.

The adapted version of this dataset in Brazilian Portuguese was translated by the [Maritalk](https://www.maritaca.ai/). This translated version opens up opportunities fo research at the intersection of goal-directed dialogue systems (such as restaurant recommendations) and free-form, colloquial dialogue systems.

Some samples from the original dataset have been removed as we've reached the usage limit in Maritalk. Consequently, the training set has been reduced by nearly 10%.

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

English and Portuguese.

## Dataset Structure

### Data Instances

```

{

"conversationId": 391,

"messages": [

{

"messageId": 1021,

"senderWorkerId": 0,

"text": "Hi there, how are you? I\'m looking for movie recommendations",

"timeOffset": 0

},

{

"messageId": 1022,

"senderWorkerId": 1,

"text": "I am doing okay. What kind of movies do you like?",

"timeOffset": 15

},

{

"messageId": 1023,

"senderWorkerId": 0,

"text": "I like animations like @84779 and @191602",

"timeOffset": 66

},

{

"messageId": 1024,

"senderWorkerId": 0,

"text": "I also enjoy @122159",

"timeOffset": 86

},

{

"messageId": 1025,

"senderWorkerId": 0,

"text": "Anything artistic",

"timeOffset": 95

},

{

"messageId": 1026,

"senderWorkerId": 1,

"text": "You might like @165710 that was a good movie.",

"timeOffset": 135

},

{

"messageId": 1027,

"senderWorkerId": 0,

"text": "What\'s it about?",

"timeOffset": 151

},

{

"messageId": 1028,

"senderWorkerId": 1,

"text": "It has Alec Baldwin it is about a baby that works for a company and gets adopted it is very funny",

"timeOffset": 207

},

{

"messageId": 1029,

"senderWorkerId": 0,

"text": "That seems like a nice comedy",

"timeOffset": 238

},

{

"messageId": 1030,

"senderWorkerId": 0,

"text": "Do you have any animated recommendations that are a bit more dramatic? Like @151313 for example",

"timeOffset": 272

},

{

"messageId": 1031,

"senderWorkerId": 0,

"text": "I like comedies but I prefer films with a little more depth",

"timeOffset": 327

},

{

"messageId": 1032,

"senderWorkerId": 1,

"text": "That is a tough one but I will remember something",

"timeOffset": 467

},

{

"messageId": 1033,

"senderWorkerId": 1,

"text": "@203371 was a good one",

"timeOffset": 509

},

{

"messageId": 1034,

"senderWorkerId": 0,

"text": "Ooh that seems cool! Thanks for the input. I\'m ready to submit if you are.",

"timeOffset": 564

},

{

"messageId": 1035,

"senderWorkerId": 1,

"text": "It is animated, sci fi, and has action",

"timeOffset": 571

},

{

"messageId": 1036,

"senderWorkerId": 1,

"text": "Glad I could help",

"timeOffset": 579

},

{

"messageId": 1037,

"senderWorkerId": 0,

"text": "Nice",

"timeOffset": 581

},

{

"messageId": 1038,

"senderWorkerId": 0,

"text": "Take care, cheers!",

"timeOffset": 591

},

{

"messageId": 1039,

"senderWorkerId": 1,

"text": "bye",

"timeOffset": 608

}

],

"messages_translated": [

{

"messageId": 1021,

"senderWorkerId": 0,

"text": "Olá, como você está? Estou procurando recomendações de filmes.",

"timeOffset": 0

},

{

"messageId": 1022,

"senderWorkerId": 1,

"text": "Eu estou indo bem. Qual tipo de filmes você gosta?",

"timeOffset": 15

},

{

"messageId": 1023,

"senderWorkerId": 0,

"text": "Eu gosto de animações como @84779 e @191602.",

"timeOffset": 66

},

{

"messageId": 1024,

"senderWorkerId": 0,

"text": "Eu também gosto de @122159.",

"timeOffset": 86

},

{

"messageId": 1025,

"senderWorkerId": 0,

"text": "Qualquer coisa artística",

"timeOffset": 95

},

{

"messageId": 1026,

"senderWorkerId": 1,

"text": "Você pode gostar de saber que foi um bom filme.",

"timeOffset": 135

},

{

"messageId": 1027,

"senderWorkerId": 0,

"text": "O que é isso?",

"timeOffset": 151

},

{

"messageId": 1028,

"senderWorkerId": 1,

"text": "Tem um bebê que trabalha para uma empresa e é adotado. É muito engraçado.",

"timeOffset": 207

},

{

"messageId": 1029,

"senderWorkerId": 0,

"text": "Isso parece ser uma comédia legal.",

"timeOffset": 238

},

{

"messageId": 1030,

"senderWorkerId": 0,

"text": "Você tem alguma recomendação animada que seja um pouco mais dramática, como por exemplo @151313?",

"timeOffset": 272

},

{

"messageId": 1031,

"senderWorkerId": 0,

"text": "Eu gosto de comédias, mas prefiro filmes com um pouco mais de profundidade.",

"timeOffset": 327

},

{

"messageId": 1032,

"senderWorkerId": 1,

"text": "Isso é um desafio, mas eu me lembrarei de algo.",

"timeOffset": 467

},

{

"messageId": 1033,

"senderWorkerId": 1,

"text": "@203371 Foi um bom dia.",

"timeOffset": 509

},

{

"messageId": 1034,

"senderWorkerId": 0,

"text": "Ah, parece legal! Obrigado pela contribuição. Estou pronto para enviar se você estiver.",

"timeOffset": 564

},

{

"messageId": 1035,

"senderWorkerId": 1,

"text": "É animado, de ficção científica e tem ação.",

"timeOffset": 571

},

{

"messageId": 1036,

"senderWorkerId": 1,

"text": "Fico feliz em poder ajudar.",

"timeOffset": 579

},

{

"messageId": 1037,

"senderWorkerId": 0,

"text": "Legal",

"timeOffset": 581

},

{

"messageId": 1038,

"senderWorkerId": 0,

"text": "Cuide-se, abraços!",

"timeOffset": 591

},

{

"messageId": 1039,

"senderWorkerId": 1,

"text": "Adeus",

"timeOffset": 608

}

],

"movieMentions": [

{

"movieId": "203371",

"movieName": "Final Fantasy: The Spirits Within (2001)"

},

{

"movieId": "84779",

"movieName": "The Triplets of Belleville (2003)"

},

{

"movieId": "122159",

"movieName": "Mary and Max (2009)"

},

{

"movieId": "151313",

"movieName": "A Scanner Darkly (2006)"

},

{

"movieId": "191602",

"movieName": "Waking Life (2001)"

},

{

"movieId": "165710",

"movieName": "The Boss Baby (2017)"

}

],

"respondentQuestions": [

{

"liked": 1,

"movieId": "203371",

"seen": 0,

"suggested": 1

},

{

"liked": 1,

"movieId": "84779",

"seen": 1,

"suggested": 0

},

{

"liked": 1,

"movieId": "122159",

"seen": 1,

"suggested": 0

},

{

"liked": 1,

"movieId": "151313",

"seen": 1,

"suggested": 0

},

{

"liked": 1,

"movieId": "191602",

"seen": 1,

"suggested": 0

},

{

"liked": 1,

"movieId": "165710",

"seen": 0,

"suggested": 1

}

],

"respondentWorkerId": 1,

"initiatorWorkerId": 0,

"initiatorQuestions": [

{

"liked": 1,

"movieId": "203371",

"seen": 0,

"suggested": 1

},

{

"liked": 1,

"movieId": "84779",

"seen": 1,

"suggested": 0

},

{

"liked": 1,

"movieId": "122159",

"seen": 1,

"suggested": 0

},

{

"liked": 1,

"movieId": "151313",

"seen": 1,

"suggested": 0

},

{

"liked": 1,

"movieId": "191602",

"seen": 1,

"suggested": 0

},

{

"liked": 1,

"movieId": "165710",

"seen": 0,

"suggested": 1

}

]

}

```

### Data Fields

The dataset is published in the “jsonl” format, i.e., as a text file where each line corresponds to a Dialogue given as a valid JSON document.

A Dialogue contains these fields:

**conversationId:** an integer

**initiatorWorkerId:** an integer identifying to the worker initiating the conversation (the recommendation seeker)

**respondentWorkerId:** an integer identifying the worker responding to the initiator (the recommender)

**messages:** a list of Message objects

**messages_translated:** a list of Message objects

**movieMentions:** a dict mapping movie IDs mentioned in this dialogue to movie names

**initiatorQuestions:** a dictionary mapping movie IDs to the labels supplied by the initiator. Each label is a bool corresponding to whether the initiator has said he saw the movie, liked it, or suggested it.

**respondentQuestions:** a dictionary mapping movie IDs to the labels supplied by the respondent. Each label is a bool corresponding to whether the initiator has said he saw the movie, liked it, or suggested it.

Each Message of **messages** contains these fields:

**messageId:** a unique ID for this message

**text:** a string with the actual message. The string may contain a token starting with @ followed by an integer. This is a movie ID which can be looked up in the movieMentions field of the Dialogue object.

**timeOffset:** time since start of dialogue in seconds

**senderWorkerId:** the ID of the worker sending the message, either initiatorWorkerId or respondentWorkerId.

Each Message of **messages_translated** contains the same struct with the text translated to portuguese.

The labels in initiatorQuestions and respondentQuestions have the following meaning:

*suggested:* 0 if it was mentioned by the seeker, 1 if it was a suggestion from the recommender

*seen:* 0 if the seeker has not seen the movie, 1 if they have seen it, 2 if they did not say

*liked:* 0 if the seeker did not like the movie, 1 if they liked it, 2 if they did not say

### Data Splits

The original dataset contains a total of 11348 dialogues, 10006 for training and model selection, and 1342 for testing.

This translated version has near values but 10% reduced in train split.

### Contributions

This work have has done by [matheusrdg](https://github.com/matheusrdg) and [wfco](https://github.com/willianfco).

The translation of this dataset was made possible thanks to the Maritalk API.

|

rishiraj/hindichat | ---

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

dataset_info:

features:

- name: prompt

dtype: string

- name: prompt_id

dtype: string

- name: messages

list:

- name: content

dtype: string

- name: role

dtype: string

- name: category

dtype: string

- name: text

dtype: string

splits:

- name: train

num_bytes: 64144365

num_examples: 9500

- name: test

num_bytes: 3455962

num_examples: 500

download_size: 27275492

dataset_size: 67600327

task_categories:

- conversational

- text-generation

language:

- hi

pretty_name: Hindi Chat

license: cc-by-nc-4.0

---

# Dataset Card for Hindi Chat

We know that current English-first LLMs don’t work well for many other languages, both in terms of performance, latency, and speed. Building instruction datasets for non-English languages is an important challenge that needs to be solved.

Dedicated towards addressing this problem, I release 2 new datasets [rishiraj/bengalichat](https://huggingface.co/datasets/rishiraj/bengalichat/) & [rishiraj/hindichat](https://huggingface.co/datasets/rishiraj/hindichat/) of 10,000 instructions and demonstrations each. This data can be used for supervised fine-tuning (SFT) to make language multilingual models follow instructions better.

### Dataset Summary

[rishiraj/hindichat](https://huggingface.co/datasets/rishiraj/hindichat/) was modelled after the instruction dataset described in OpenAI's [InstructGPT paper](https://huggingface.co/papers/2203.02155), and is translated from [HuggingFaceH4/no_robots](https://huggingface.co/datasets/HuggingFaceH4/no_robots/) which comprised mostly of single-turn instructions across the following categories:

| Category | Count |

|:-----------|--------:|

| Generation | 4560 |

| Open QA | 1240 |

| Brainstorm | 1120 |

| Chat | 850 |

| Rewrite | 660 |

| Summarize | 420 |

| Coding | 350 |

| Classify | 350 |

| Closed QA | 260 |

| Extract | 190 |

### Languages

The data in [rishiraj/hindichat](https://huggingface.co/datasets/rishiraj/hindichat/) are in Hindi (BCP-47 hi).

### Data Fields

The data fields are as follows:

* `prompt`: Describes the task the model should perform.

* `prompt_id`: A unique ID for the prompt.

* `messages`: An array of messages, where each message indicates the role (system, user, assistant) and the content.

* `category`: Which category the example belongs to (e.g. `Chat` or `Coding`).

* `text`: Content of `messages` in a format that is compatible with dataset_text_field of SFTTrainer.

### Data Splits

| | train_sft | test_sft |

|---------------|------:| ---: |

| hindichat | 9500 | 500 |

### Licensing Information

The dataset is available under the [Creative Commons NonCommercial (CC BY-NC 4.0)](https://creativecommons.org/licenses/by-nc/4.0/legalcode).

### Citation Information

```

@misc{hindichat,

author = {Rishiraj Acharya},

title = {Hindi Chat},

year = {2023},

publisher = {Hugging Face},

journal = {Hugging Face repository},

howpublished = {\url{https://huggingface.co/datasets/rishiraj/hindichat}}

}

``` |

argilla/ultrafeedback-curated | ---

language:

- en

license: mit

size_categories:

- 10K<n<100K

task_categories:

- text-generation

pretty_name: UltraFeedback Curated

dataset_info:

features:

- name: source

dtype: string

- name: instruction

dtype: string

- name: models

sequence: string

- name: completions

list:

- name: annotations

struct:

- name: helpfulness

struct:

- name: Rating

dtype: string

- name: Rationale

dtype: string

- name: Rationale For Rating

dtype: string

- name: Type

sequence: string

- name: honesty

struct:

- name: Rating

dtype: string

- name: Rationale

dtype: string

- name: instruction_following

struct:

- name: Rating

dtype: string

- name: Rationale

dtype: string

- name: truthfulness

struct:

- name: Rating

dtype: string

- name: Rationale

dtype: string

- name: Rationale For Rating

dtype: string

- name: Type

sequence: string

- name: critique

dtype: string

- name: custom_system_prompt

dtype: string

- name: model

dtype: string

- name: overall_score

dtype: float64

- name: principle

dtype: string

- name: response

dtype: string

- name: correct_answers

sequence: string

- name: incorrect_answers

sequence: string

- name: updated

struct:

- name: completion_idx

dtype: int64

- name: distilabel_rationale

dtype: string

splits:

- name: train

num_bytes: 843221341

num_examples: 63967

download_size: 321698501

dataset_size: 843221341

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

# Ultrafeedback Curated

This dataset is a curated version of [UltraFeedback](https://huggingface.co/datasets/openbmb/UltraFeedback) dataset performed by Argilla (using [distilabel](https://github.com/argilla-io/distilabel)).

## Introduction

You can take a look at [argilla/ultrafeedback-binarized-preferences](https://huggingface.co/datasets/argilla/ultrafeedback-binarized-preferences) for more context on the UltraFeedback error, but the following excerpt sums up the problem found:

*After visually browsing around some examples using the sort and filter feature of Argilla (sort by highest rating for chosen responses), we noticed a strong mismatch between the `overall_score` in the original UF dataset (and the Zephyr train_prefs dataset) and the quality of the chosen response.*

*By adding the critique rationale to our Argilla Dataset, we confirmed the critique rationale was highly negative, whereas the rating was very high (the highest in fact: `10`). See screenshot below for one example of this issue. After some quick investigation, we identified hundreds of examples having the same issue and a potential bug on the UltraFeedback repo.*

## Differences with `openbmb/UltraFeedback`

This version of the dataset has replaced the `overall_score` of the responses identified as "wrong", and a new column `updated` to keep track of the updates.

It contains a dict with the following content `{"completion_idx": "the index of the modified completion in the completion list", "distilabel_rationale": "the distilabel rationale"}`, and `None` if nothing was modified.

Other than that, the dataset can be used just like the original.

## Dataset processing

1. Starting from `argilla/ultrafeedback-binarized-curation` we selected all the records with `score_best_overall` equal to 10, as those were the problematic ones.

2. We created a new dataset using the `instruction` and the response from the model with the `best_overall_score_response` to be used with [distilabel](https://github.com/argilla-io/distilabel).

3. Using `gpt-4` and a task for `instruction_following` we obtained a new *rating* and *rationale* of the model for the 2405 "questionable" responses.

```python

import os

from distilabel.llm import OpenAILLM

from distilabel.pipeline import Pipeline

from distilabel.tasks import UltraFeedbackTask

from datasets import load_dataset

# Create the distilabel Pipeline

pipe = Pipeline(

labeller=OpenAILLM(

model="gpt-4",

task=UltraFeedbackTask.for_instruction_following(),

max_new_tokens=256,

num_threads=8,

openai_api_key=os.getenv("OPENAI_API_KEY") or "sk-...",

temperature=0.3,

),

)

# Download the original dataset:

ds = load_dataset("argilla/ultrafeedback-binarized-curation", split="train")

# Prepare the dataset in the format required by distilabel, will need the columns "input" and "generations"

def set_columns_for_distilabel(example):

input = example["instruction"]

generations = example["best_overall_score_response"]["response"]

return {"input": input, "generations": [generations]}

# Filter and prepare the dataset

ds_to_label = ds.filter(lambda ex: ex["score_best_overall"] == 10).map(set_columns_for_distilabel).select_columns(["input", "generations"])

# Label the dataset

ds_labelled = pipe.generate(ds_to_label, num_generations=1, batch_size=8)

```

4. After visual inspection, we decided to remove those answers that were rated as a 1, plus some extra ones rated as 2 and 3, as those were also not a real 10.

The final dataset has a total of 1968 records updated from a 10 to a 1 in the `overall_score` field of the corresponding model (around 3% of the dataset), and a new column "updated" with the rationale of `gpt-4` for the new rating, as well as the index in which the model can be found in the "models" and "completions" columns.

## Reproduce

<a target="_blank" href="https://colab.research.google.com/drive/10R6uxb-Sviv64SyJG2wuWf9cSn6Z1yow?usp=sharing">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

To reproduce the data processing, feel free to run the attached Colab Notebook or just view it at [notebook](./ultrafeedback_curation_distilabel.ipynb) within this repository.

From Argilla we encourage anyone out there to play around, investigate, and experiment with the data, and we firmly believe on open sourcing what we do, as ourselves, as well as the whole community, benefit a lot from open source and we also want to give back.

|

lowres/anime | ---

size_categories:

- 1K<n<10K

task_categories:

- text-to-image

pretty_name: anime

dataset_info:

features:

- name: image

dtype: image

- name: text

dtype: string

splits:

- name: train

num_bytes: 744102225.832

num_examples: 1454

download_size: 742020583

dataset_size: 744102225.832

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

tags:

- art

---

# anime characters datasets

This is an anime/manga/2D characters dataset, it is intended to be an encyclopedia for anime characters.

The dataset is open source to use without limitations or any restrictions.

## how to use

```python

from datasets import load_dataset

dataset = load_dataset("lowres/anime")

```

## how to contribute

* to add your own dataset, simply join the organization and create a new dataset repo and upload your images there. else you can open a new discussion and we'll check it out |

Query-of-CC/Knowledge_Pile | ---

license: apache-2.0

language:

- en

tags:

- knowledge

- Retrieval

- Reasoning

- Common Crawl

- MATH

size_categories:

- 100B<n<1T

---

Knowledge Pile is a knowledge-related data leveraging [Query of CC](https://arxiv.org/abs/2401.14624).

This dataset is a partial of Knowledge Pile(about 40GB disk size), full datasets have been released in [\[🤗 knowledge_pile_full\]](https://huggingface.co/datasets/Query-of-CC/knowledge_pile_full/), a total of 735GB disk size and 188B tokens (using Llama2 tokenizer).

## *Query of CC*

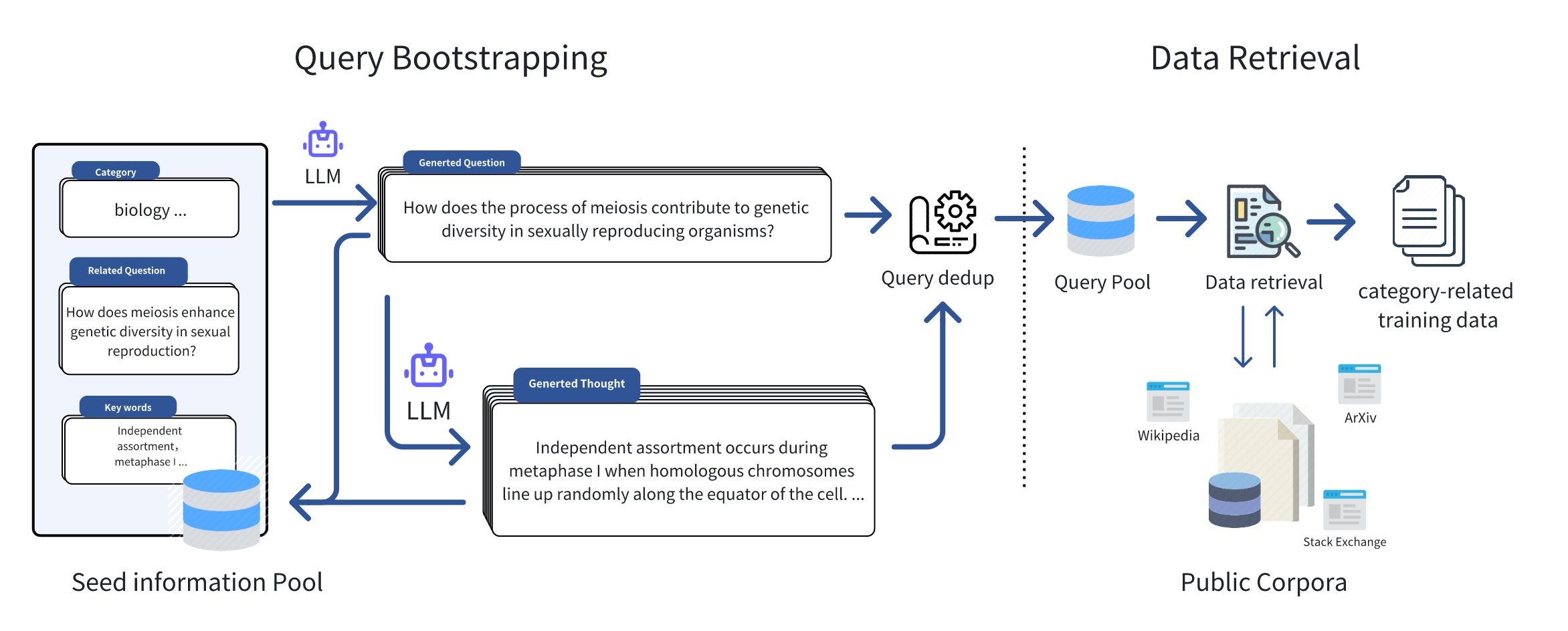

Just like the figure below, we initially collected seed information in some specific domains, such as keywords, frequently asked questions, and textbooks, to serve as inputs for the Query Bootstrapping stage. Leveraging the great generalization capability of large language models, we can effortlessly expand the initial seed information and extend it to an amount of domain-relevant queries. Inspiration from Self-instruct and WizardLM, we encompassed two stages of expansion, namely **Question Extension** and **Thought Generation**, which respectively extend the queries in terms of breadth and depth, for retrieving the domain-related data with a broader scope and deeper thought. Subsequently, based on the queries, we retrieved relevant documents from public corpora, and after performing operations such as duplicate data removal and filtering, we formed the final training dataset.

## **Knowledge Pile** Statistics

Based on *Query of CC* , we have formed a high-quality knowledge dataset **Knowledge Pile**. As shown in Figure below, comparing with other datasets in academic and mathematical reasoning domains, we have acquired a large-scale, high-quality knowledge dataset at a lower cost, without the need for manual intervention. Through automated query bootstrapping, we efficiently capture the information about the seed query. **Knowledge Pile** not only covers mathematical reasoning data but also encompasses rich knowledge-oriented corpora spanning various fields such as biology, physics, etc., enhancing its comprehensive research and application potential.

<img src="https://github.com/ngc7292/query_of_cc/blob/master/images/query_of_cc_timestamp_prop.png?raw=true" width="300px" style="center"/>

This table presents the top 10 web domains with the highest proportion of **Knowledge Pile**, primarily including academic websites, high-quality forums, and some knowledge domain sites. Table provides a breakdown of the data sources' timestamps in **Knowledge Pile**, with statistics conducted on an annual basis. It is evident that a significant portion of **Knowledge Pile** is sourced from recent years, with a decreasing proportion for earlier timestamps. This trend can be attributed to the exponential growth of internet data and the inherent timeliness introduced by the **Knowledge Pile**.

| **Web Domain** | **Count** |

|----------------------------|----------------|

|en.wikipedia.org | 398833 |

|www.semanticscholar.org | 141268 |

|slideplayer.com | 108177 |

|www.ncbi.nlm.nih.gov | 97009 |

|link.springer.com | 85357 |

|www.ipl.org | 84084 |

|pubmed.ncbi.nlm.nih.gov | 68934 |

|www.reference.com | 61658 |

|www.bartleby.com | 60097 |

|quizlet.com | 56752 |

### cite

```

@article{fei2024query,

title={Query of CC: Unearthing Large Scale Domain-Specific Knowledge from Public Corpora},

author={Fei, Zhaoye and Shao, Yunfan and Li, Linyang and Zeng, Zhiyuan and Yan, Hang and Qiu, Xipeng and Lin, Dahua},

journal={arXiv preprint arXiv:2401.14624},

year={2024}

}

``` |

Locutusque/Hercules-v3.0 | ---

license: other

task_categories:

- text-generation

- question-answering

- conversational

language:

- en

tags:

- not-for-all-audiences

- chemistry

- biology

- code

- medical

- synthetic

---

# Hercules-v3.0

- **Dataset Name:** Hercules-v3.0

- **Version:** 3.0

- **Release Date:** 2024-2-14

- **Number of Examples:** 1,637,895

- **Domains:** Math, Science, Biology, Physics, Instruction Following, Conversation, Computer Science, Roleplay, and more

- **Languages:** Mostly English, but others can be detected.

- **Task Types:** Question Answering, Conversational Modeling, Instruction Following, Code Generation, Roleplay

## Data Source Description

Hercules-v3.0 is an extensive and diverse dataset that combines various domains to create a powerful tool for training artificial intelligence models. The data sources include conversations, coding examples, scientific explanations, and more. The dataset is sourced from multiple high-quality repositories, each contributing to the robustness of Hercules-v3.0 in different knowledge domains.

## Included Data Sources

- `cognitivecomputations/dolphin`

- `Evol Instruct 70K & 140K`

- `teknium/GPT4-LLM-Cleaned`

- `jondurbin/airoboros-3.2`

- `AlekseyKorshuk/camel-chatml`

- `CollectiveCognition/chats-data-2023-09-22`

- `Nebulous/lmsys-chat-1m-smortmodelsonly`

- `glaiveai/glaive-code-assistant-v2`

- `glaiveai/glaive-code-assistant`

- `glaiveai/glaive-function-calling-v2`

- `garage-bAInd/Open-Platypus`

- `meta-math/MetaMathQA`

- `teknium/GPTeacher-General-Instruct`

- `GPTeacher roleplay datasets`

- `BI55/MedText`

- `pubmed_qa labeled subset`

- `Unnatural Instructions`

- `M4-ai/LDJnr_combined_inout_format`

- `CollectiveCognition/chats-data-2023-09-27`

- `CollectiveCognition/chats-data-2023-10-16`

- `NobodyExistsOnTheInternet/sharegptPIPPA`

- `yuekai/openchat_sharegpt_v3_vicuna_format`

- `ise-uiuc/Magicoder-Evol-Instruct-110K`

- `Squish42/bluemoon-fandom-1-1-rp-cleaned`

- `sablo/oasst2_curated`

Note: I would recommend filtering out any bluemoon examples because it seems to cause performance degradation.

## Data Characteristics

The dataset amalgamates text from various domains, including structured and unstructured data. It contains dialogues, instructional texts, scientific explanations, coding tasks, and more.

## Intended Use

Hercules-v3.0 is designed for training and evaluating AI models capable of handling complex tasks across multiple domains. It is suitable for researchers and developers in academia and industry working on advanced conversational agents, instruction-following models, and knowledge-intensive applications.

## Data Quality

The data was collected from reputable sources with an emphasis on diversity and quality. It is expected to be relatively clean but may require additional preprocessing for specific tasks.

## Limitations and Bias

- The dataset may have inherent biases from the original data sources.

- Some domains may be overrepresented due to the nature of the source datasets.

## X-rated Content Disclaimer

Hercules-v3.0 contains X-rated content. Users are solely responsible for the use of the dataset and must ensure that their use complies with all applicable laws and regulations. The dataset maintainers are not responsible for the misuse of the dataset.

## Usage Agreement

By using the Hercules-v3.0 dataset, users agree to the following:

- The dataset is used at the user's own risk.

- The dataset maintainers are not liable for any damages arising from the use of the dataset.

- Users will not hold the dataset maintainers responsible for any claims, liabilities, losses, or expenses.

Please make sure to read the license for more information.

## Citation

```

@misc{sebastian_gabarain_2024,

title = {Hercules-v3.0: The "Golden Ratio" for High Quality Instruction Datasets},

author = {Sebastian Gabarain},

publisher = {HuggingFace},

year = {2024},

url = {https://huggingface.co/datasets/Locutusque/Hercules-v3.0}

}

``` |

sanjay920/gemma-function-calling | ---

dataset_info:

features:

- name: messages

list:

- name: content

dtype: string

- name: role

dtype: string

- name: tools

dtype: string

- name: text

dtype: string

splits:

- name: train

num_bytes: 540487055

num_examples: 111944

download_size: 193212415

dataset_size: 540487055

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

alvarobartt/openhermes-preferences-metamath | ---

license: other

task_categories:

- text-generation

language:

- en

source_datasets:

- argilla/OpenHermesPreferences

annotations_creators:

- Argilla

- HuggingFaceH4

tags:

- dpo

- synthetic

- metamath

size_categories:

- 10K<n<100K

dataset_info:

features:

- name: chosen

list:

- name: content

dtype: string

- name: role

dtype: string

- name: rejected

list:

- name: content

dtype: string

- name: role

dtype: string

splits:

- name: train

num_bytes: 169676613.83305642

num_examples: 50799

- name: test

num_bytes: 18855183.863611557

num_examples: 5645

download_size: 44064373

dataset_size: 188531797.69666797

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

# Dataset Card for OpenHermes Preferences - MetaMath

This dataset is a subset from [`argilla/OpenHermesPreferences`](https://hf.co/datasets/argilla/OpenHermesPreferences),

only keeping the preferences of `metamath`, and removing all the columns besides the `chosen` and `rejected` ones, that

come in OpenAI chat formatting, so that's easier to fine-tune a model using tools like: [`huggingface/alignment-handbook`](https://github.com/huggingface/alignment-handbook)

or [`axolotl`](https://github.com/OpenAccess-AI-Collective/axolotl), among others.

## Reference

[`argilla/OpenHermesPreferences`](https://hf.co/datasets/argilla/OpenHermesPreferences) dataset created as a collaborative

effort between Argilla and the HuggingFaceH4 team from HuggingFace. |

gorilla-llm/Berkeley-Function-Calling-Leaderboard | ---

license: apache-2.0

language:

- en

---

# Berkeley Function Calling Leaderboard

<!-- Provide a quick summary of the dataset. -->

The Berkeley function calling leaderboard is a live leaderboard to evaluate the ability of different LLMs to call functions (also referred to as tools).

We built this dataset from our learnings to be representative of most users' function-calling use-cases, for example, in agents, as a part of enterprise workflows, etc.

To this end, our evaluation dataset spans diverse categories, and across multiple programming languages.

Checkout the Leaderboard at [gorilla.cs.berkeley.edu/leaderboard.html](https://gorilla.cs.berkeley.edu/leaderboard.html)

and our [release blog](https://gorilla.cs.berkeley.edu/blogs/8_berkeley_function_calling_leaderboard.html)!

***Latest Version Release Date***: 4/09/2024

***Original Release Date***: 02/26/2024

### Change Log

The Berkeley Function Calling Leaderboard is a continually evolving project. We are committed to regularly updating the dataset and leaderboard by introducing new models and expanding evaluation categories. Below is an overview of the modifications implemented in the most recent version:

* [April 10, 2024] [#339](https://github.com/ShishirPatil/gorilla/pull/339): Introduce REST API sanity check for the executable test category. It ensures that all the API endpoints involved during the execution evaluation process are working properly. If any of them are not behaving as expected, the evaluation process will be stopped by default as the result will be inaccurate. Users can choose to bypass this check by setting the `--skip-api-sanity-check` flag.

* [April 9, 2024] [#338](https://github.com/ShishirPatil/gorilla/pull/338): Bug fix in the evaluation datasets (including both prompts and function docs). Bug fix for possible answers as well.

* [April 8, 2024] [#330](https://github.com/ShishirPatil/gorilla/pull/330): Fixed an oversight that was introduced in [#299](https://github.com/ShishirPatil/gorilla/pull/299). For function-calling (FC) models that cannot take `float` type in input, when the parameter type is a `float`, the evaluation procedure will convert that type to `number` in the model input and mention in the parameter description that `This is a float type value.`. An additional field `format: float` will also be included in the model input to make it clear about the type. Updated the model handler for Claude, Mistral, and OSS to better parse the model output.

* [April 3, 2024] [#309](https://github.com/ShishirPatil/gorilla/pull/309): Bug fix for evaluation dataset possible answers. Implement **string standardization** for the AST evaluation pipeline, i.e. removing white spaces and a subset of punctuations `,./-_*^` to make the AST evaluation more robust and accurate. Fixed AST evaluation issue for type `tuple`. Add 2 new models `meetkai/functionary-small-v2.4 (FC)`, `meetkai/functionary-medium-v2.4 (FC)` to the leaderboard.

* [April 1, 2024] [#299](https://github.com/ShishirPatil/gorilla/pull/299): Leaderboard update with new models (`Claude-3-Haiku`, `Databrick-DBRX-Instruct`), more advanced AST evaluation procedure, and updated evaluation datasets. Cost and latency statistics during evaluation are also measured. We also released the manual that our evaluation procedure is based on, available [here](https://gorilla.cs.berkeley.edu/blogs/8_berkeley_function_calling_leaderboard.html#metrics).

* [Mar 11, 2024] [#254](https://github.com/ShishirPatil/gorilla/pull/254): Leaderboard update with 3 new models: `Claude-3-Opus-20240229 (Prompt)`, `Claude-3-Sonnet-20240229 (Prompt)`, and `meetkai/functionary-medium-v2.2 (FC)`

* [Mar 5, 2024] [#237](https://github.com/ShishirPatil/gorilla/pull/237) and [238](https://github.com/ShishirPatil/gorilla/pull/238): leaderboard update resulting from [#223](https://github.com/ShishirPatil/gorilla/pull/223); 3 new models: `mistral-large-2402`, `gemini-1.0-pro`, and `gemma`.

* [Feb 29, 2024] [#223](https://github.com/ShishirPatil/gorilla/pull/223): Modifications to REST evaluation.

* [Feb 27, 2024] [#215](https://github.com/ShishirPatil/gorilla/pull/215): BFCL first release.

## Dataset Composition

| # | Category |

|---|----------|

|200 | Chatting Capability|

|100 | Simple (Exec)|

|50 | Multiple (Exec)|

|50 | Parallel (Exec)|

|40 | Parallel & Multiple (Exec)|

|400 | Simple (AST)|

|200 | Multiple (AST)|

|200 | Parallel (AST)|

|200 | Parallel & Multiple (AST)|

|240 | Relevance|

|70 | REST|

|100 | Java|

|100 | SQL|

|50 | Javascript|

### Dataset Description

We break down the majority of the evaluation into two categories:

- **Python**: Simple Function, Multiple Function, Parallel Function, Parallel Multiple Function

- **Non-Python**: Chatting Capability, Function Relevance Detection, REST API, SQL, Java, Javascript

#### Python

**Simple**: Single function evaluation contains the simplest but most commonly seen format, where the user supplies a single JSON function document, with one and only one function call being invoked.

**Multiple Function**: Multiple function category contains a user question that only invokes one function call out of 2 to 4 JSON function documentations. The model needs to be capable of selecting the best function to invoke according to user-provided context.

**Parallel Function**: Parallel function is defined as invoking multiple function calls in parallel with one user query. The model needs to digest how many function calls need to be made and the question to model can be a single sentence or multiple sentence.

**Parallel Multiple Function**: Parallel Multiple function is the combination of parallel function and multiple function. In other words, the model is provided with multiple function documentation, and each of the corresponding function calls will be invoked zero or more times.

Each category has both AST and its corresponding executable evaluations. In the executable evaluation data, we manually write Python functions drawing inspiration from free REST API endpoints (e.g. get weather) and functions (e.g. linear regression) that compute directly. The executable category is designed to understand whether the function call generation is able to be stably utilized in applications utilizing function calls in the real world.

#### Non-Python Evaluation

While the previous categories consist of the majority of our evaluations, we include other specific categories, namely Chatting Capability, Function Relevance Detection, REST API, SQL, Java, and JavaScript, to evaluate model performance on diverse scenarios and support of multiple programming languages, and are resilient to irrelevant questions and function documentations.

**Chatting Capability**: In Chatting Capability, we design scenarios where no functions are passed in, and the users ask generic questions - this is similar to using the model as a general-purpose chatbot. We evaluate if the model is able to output chat messages and recognize that it does not need to invoke any functions. Note the difference with “Relevance” where the model is expected to also evaluate if any of the function inputs are relevant or not. We include this category for internal model evaluation and exclude the statistics from the live leaderboard. We currently are working on a better evaluation of chat ability and ensuring the chat is relevant and coherent with users' requests and open to suggestions and feedback from the community.

**Function Relevance Detection**: In function relevance detection, we design scenarios where none of the provided functions are relevant and supposed to be invoked. We expect the model's output to be a non-function-call response. This scenario provides insight into whether a model will hallucinate on its functions and parameters to generate function code despite lacking the function information or instructions from the users to do so.

**REST API**: A majority of the real-world API calls are from REST API calls. Python mainly makes REST API calls through `requests.get()`, `requests.post()`, `requests.delete()`, etc that are included in the Python requests library. `GET` requests are the most common ones used in the real world. As a result, we include real-world `GET` requests to test the model's capabilities to generate executable REST API calls through complex function documentation, using `requests.get()` along with the API's hardcoded URL and description of the purpose of the function and its parameters. Our evaluation includes two variations. The first type requires passing the parameters inside the URL, called path parameters, for example, the `{Year}` and `{CountryCode}` in `GET` `/api/v3/PublicHolidays/{Year}/{CountryCode}`. The second type requires the model to put parameters as key/value pairs into the params and/or headers of `requests.get(.)`. For example, `params={'lang': 'fr'}` in the function call. The model is not given which type of REST API call it is going to make but needs to make a decision on how it's going to be invoked.

For REST API, we use an executable evaluation to check for the executable outputs' effective execution, response type, and response JSON key consistencies. On the AST, we chose not to perform AST evaluation on REST mainly because of the immense number of possible answers; the enumeration of all possible answers is exhaustive for complicated defined APIs.

**SQL**: SQL evaluation data includes our customized `sql.execute` functions that contain sql_keyword, table_name, columns, and conditions. Those four parameters provide the necessary information to construct a simple SQL query like `SELECT column_A from table_B where column_C == D` Through this, we want to see if through function calling, SQL query can be reliably constructed and utilized rather than training a SQL-specific model. In our evaluation dataset, we restricted the scenarios and supported simple keywords, including `SELECT`, `INSERT INTO`, `UPDATE`, `DELETE`, and `CREATE`. We included 100 examples for SQL AST evaluation. Note that SQL AST evaluation will not be shown in our leaderboard calculations. We use SQL evaluation to test the generalization ability of function calling for programming languages that are not included in the training set for Gorilla OpenFunctions-v2. We opted to exclude SQL performance from the AST evaluation in the BFCL due to the multiplicity of methods to construct SQL function calls achieving identical outcomes. We're currently working on a better evaluation of SQL and are open to suggestions and feedback from the community. Therefore, SQL has been omitted from the current leaderboard to pave the way for a more comprehensive evaluation in subsequent iterations.

**Java and Javascript**: Despite function calling formats being the same across most programming languages, each programming language has language-specific types. For example, Java has the `HashMap` type. The goal of this test category is to understand how well the function calling model can be extended to not just Python type but all the language-specific typings. We included 100 examples for Java AST evaluation and 70 examples for Javascript AST evaluation.

The categories outlined above provide insight into the performance of different models across popular API call scenarios, offering valuable perspectives on the potential of function-calling models.

### Evaluation

This dataset serves as the question + function documentation pairs for Berkeley Function-Calling Leaderboard (BFCL) evaluation. The source code for the evaluation process can be found [here](https://github.com/ShishirPatil/gorilla/tree/main/berkeley-function-call-leaderboard) with detailed instructions on how to use this dataset to compare LLM tool use capabilities across different models and categories.

More details on evaluation metrics, i.e. rules for the Abstract Syntax Tree (AST) and executable evaluation can be found in the [release blog](https://gorilla.cs.berkeley.edu/blogs/8_berkeley_function_calling_leaderboard.html#metrics).

### Contributing

All the models, and data used to train the models are released under Apache 2.0.

Gorilla is an open source effort from UC Berkeley and we welcome contributors.

Please email us your comments, criticisms, and questions.

More information about the project can be found at https://gorilla.cs.berkeley.edu/

### BibTex

```bibtex

@misc{berkeley-function-calling-leaderboard,

title={Berkeley Function Calling Leaderboard},

author={Fanjia Yan and Huanzhi Mao and Charlie Cheng-Jie Ji and Tianjun Zhang and Shishir G. Patil and Ion Stoica and Joseph E. Gonzalez},

howpublished={\url{https://gorilla.cs.berkeley.edu/blogs/8_berkeley_function_calling_leaderboard.html}},

year={2024},

}

```

|

0-hero/Matter-0.1 | ---

license: apache-2.0

---

# Matter 0.1

Curated top quality records from 35 other datasets. Extracted from [prompt-perfect](https://huggingface.co/datasets/0-hero/prompt-perfect)

This is just a consolidation of all the score 5s. Fine-tuning models with various subsets and combinations to create a best performing v1 dataset

### ~1.4B Tokens, ~2.5M records

Dataset has been deduped, decontaminated with [bagel script from Jon Durbin](https://github.com/jondurbin/bagel/blob/main/bagel/data_sources/__init__.py)

Download using the below command to avoid unecessary files

```

from huggingface_hub import snapshot_download

snapshot_download(repo_id="0-hero/Matter-0.1",repo_type="dataset", allow_patterns=["final_set_cleaned/*"], local_dir=".", local_dir_use_symlinks=False)

```

|

Superar/Puntuguese | ---

license: cc-by-sa-4.0

task_categories:

- text-classification

- token-classification

language:

- pt

pretty_name: Puntuguese - A Corpus of Puns in Portuguese with Micro-editions

tags:

- humor

- puns

- humor-recognition

- pun-location

---

# Puntuguese - A Corpus of Puns in Portuguese with Micro-editions

Puntuguese is a corpus of Portuguese punning texts, including Brazilian and European Portuguese jokes. The data has been manually gathered and curated according to our [guidelines](https://github.com/Superar/Puntuguese/blob/main/data/GUIDELINES.md). It also contains some layers of annotation:

- Every pun is classified as homophonic, homographic, both, or none according to their specific punning signs;

- The punning and alternative signs were made explicit for every joke;

- We also mark potentially problematic puns from an ethical perspective, so it is easier to filter them out if needed.

Additionally, every joke in the corpus has a non-humorous counterpart, obtained via micro-editing, to enable Machine Learning systems to be trained.

### Dataset Description

- **Curated by:** [Marcio Lima Inácio](https://eden.dei.uc.pt/~mlinacio/)

- **Funded by:** FCT - Foundation for Science and Technology, I.P. (grant number UI/BD/153496/2022) and the Portuguese Recovery and Resilience Plan (project C645008882-00000055, Center for Responsible AI).

- **Languages:** Brazilian Portuguese; European Portuguese

- **License:** CC-BY-SA-4.0

### Dataset Sources

The puns were collected from three sources: the "Maiores e melhores" web blog, the "O Sagrado Caderno das Piadas Secas" Instagram page, and from the "UTC - Ultimate Trocadilho Challenge" by Castro Brothers on Youtube.

- **Repository:** https://github.com/Superar/Puntuguese

- **Paper:** To be announced

## Dataset Structure

The dataset provided via Hugging Face Hub contains two tasks: humor recognition and pun location. The first task uses the `text` and `label` columns. For pun location, the columns to be used are `tokens` and `labels`. An instance example can be seen below:

```json

{

"id": "1.1.H",

"text": "Deve ser difícil ser professor de natação. Você ensina, ensina, e o aluno nada.",

"label": 1,

"tokens": ["Deve", "ser", "difícil", "ser", "professor", "de", "natação", ".", "Você", "ensina", ",", "ensina", ",", "e", "o", "aluno", "nada", "."],

"labels": [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0]

}

```

## Dataset Creation

#### Data Collection and Processing

The data was manually gathered and curated to ensure that all jokes followed our chosen definition of pun by Miller et al. (2017):

> "A pun is a form of wordplay in which one sign (e.g., a word or phrase) suggests two or more meanings by exploiting polysemy, homonymy, or phonological similarity to another sign, for an intended humorous or rhetorical effect."

Every selected pun must satisfy this definition. Gatherers were also provided some hints for this process:

- A sign can be a single word (or token), a phrase (a sequence of tokens), or a part of a word (a subtoken);

- The humorous effect must rely on the ambiguity of said sign;

- The ambiguity must originate from the word's form (written or spoken);

- Every pun must have a "pun word" (the ambiguous sign that is in the text) and an "alternative word" (the sign's ambiguous interpretation) identified. If it is not possible to identify both, the text is not considered a pun and should not be included.

#### Who are the source data producers?

The original data was produced by professional comedians from the mentioned sources.

## Bias, Risks, and Limitations

As in every real-life scenario, the data can contain problematic and insensitive jokes about delicate subjects. In this sense, we provide in out GitHub repository a list of jokes that the gatherers, personally, thought to be problematic.

## Citation

**BibTeX:**

```

@inproceedings{InacioEtAl2024,

title = {Puntuguese: A Corpus of Puns in {{P}}ortuguese with Micro-editions},

author = {In{\'a}cio, Marcio Lima and {Wick-pedro}, Gabriela and Ramisch, Renata and Esp{\'i}rito Santo, Lu{\'i}s and Chacon, Xiomara S. Q. and Santos, Roney and Sousa, Rog{\'e}rio and Anchi{\^e}ta, Rafael and Gon{\c c}alo Oliveira, Hugo},