datasetId

stringlengths 2

81

| card

stringlengths 20

977k

|

|---|---|

amphora/lmsys-finance | ---

dataset_info:

features:

- name: conversation_id

dtype: string

- name: model

dtype: string

- name: conversation

dtype: string

- name: turn

dtype: int64

- name: language

dtype: string

- name: openai_moderation

dtype: string

- name: redacted

dtype: bool

- name: count

dtype: int64

- name: __index_level_0__

dtype: int64

splits:

- name: train

num_bytes: 10328855

num_examples: 735

download_size: 3912614

dataset_size: 10328855

task_categories:

- conversational

language:

- en

tags:

- finance

size_categories:

- n<1K

---

# Dataset Card for "lmsys-finance"

This dataset is a curated version of the [lmsys-chat-1m](https://huggingface.co/datasets/lmsys/lmsys-chat-1m) dataset,

focusing solely on finance-related conversations. The refinement process encompassed:

1. Removing non-English conversations.

2. Selecting conversations from models: "vicuna-33b", "wizardlm-13b", "gpt-4", "gpt-3.5-turbo", "claude-2", "palm-2", and "claude-instant-1".

3. Excluding conversations with responses under 30 characters.

4. Using 100 financial keywords, choosing conversations with at least 10 keywords. |

wenge-research/yayi2_pretrain_data | ---

license: apache-2.0

language:

- zh

- en

size_categories:

- 100B<n<1T

---

## 介绍/Introduction

本数据集源自雅意训练语料,我们精选了约100B数据,数据大小约为500GB。我们期望通过雅意预训练数据的开源推动中文预训练大模型开源社区的发展,并积极为此贡献力量。通过开源,我们与每一位合作伙伴共同构建雅意大模型生态。

We opensource the pre-trained dataset in this release, it should contain more than 100B tokens depending on the tokenizer you use, requiring more than 500GB of local storage. By open-sourcing the pre-trained dataset, we aim to contribute to the development of the Chinese pre-trained large language model open-source community. Through open-source, we aspire to collaborate with every partner in building the YAYI large language model ecosystem.

## 组成

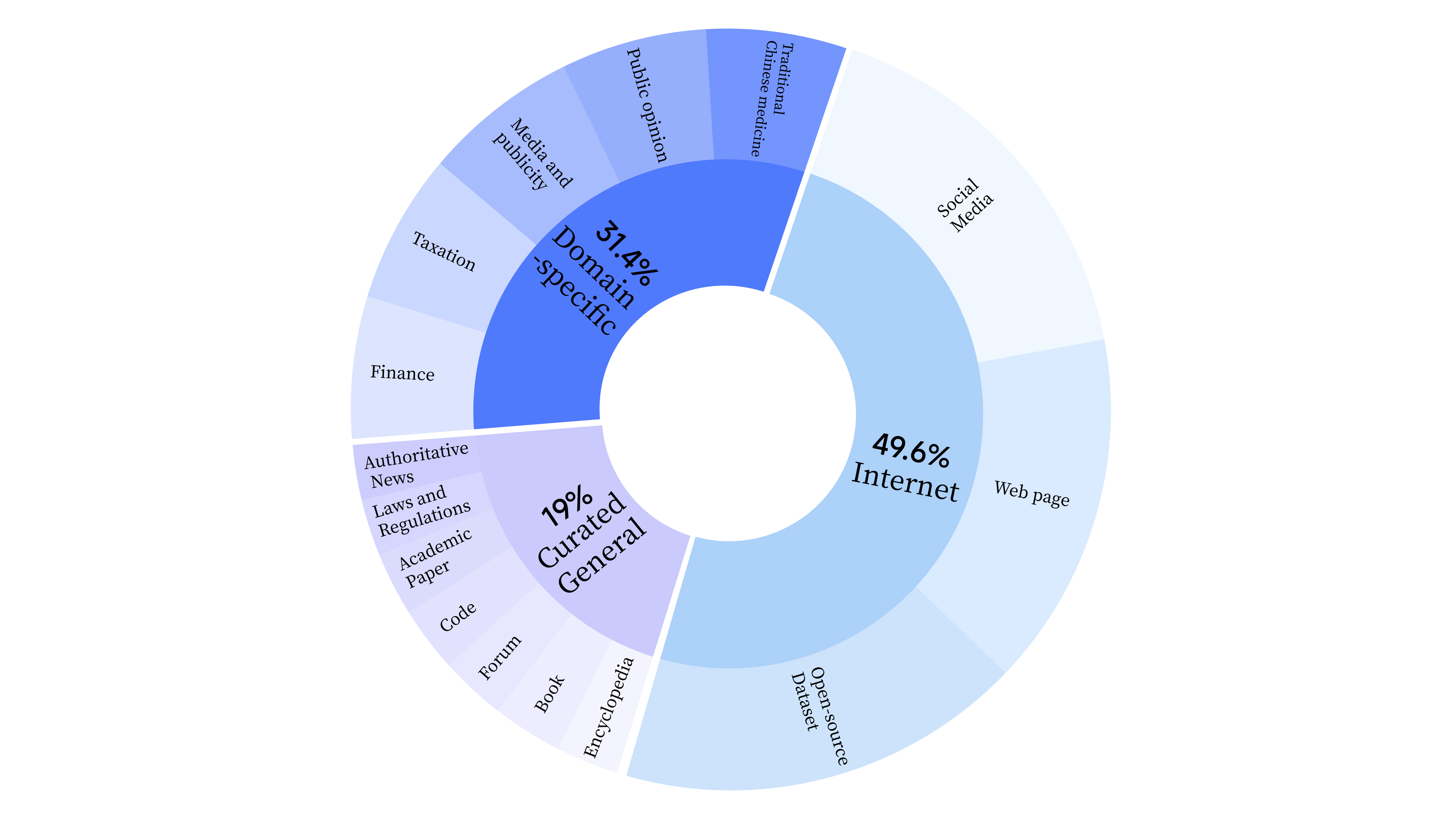

* 在预训练阶段,我们不仅使用了互联网数据来训练模型的语言能力,还添加了通用精选数据和领域数据,以增强模型的专业技能。通用精选数据包含人工收集和整理的高质量数据。涵盖了报纸类数据、文献类数据、APP类数据、代码类数据、书籍类数据、百科类数据。其中,报纸类数据包括广泛的新闻报道和专栏文章,这类数据通常结构化程度高,信息量丰富。文献类数据包括学术论文和研究报告,为我们的数据集注入了专业和深度。代码类数据包括各种编程语言的源码,有助于构建和优化技术类数据的处理模型。书籍类数据涵盖了小说、诗歌、古文、教材等内容,提供丰富的语境和词汇,增强语言模型的理解能力。数据分布情况如下:

* During the pre-training phase, we not only utilized internet data to train the model's language abilities but also incorporated curated general data and domain-specific information to enhance the model's expertise. Curated general data covers a wide range of categories including books (e.g., textbooks, novels), codes, encyclopedias, forums, academic papers, authoritative news, laws and regulations. Details of the data distribution are as follows:

## 数据清洗

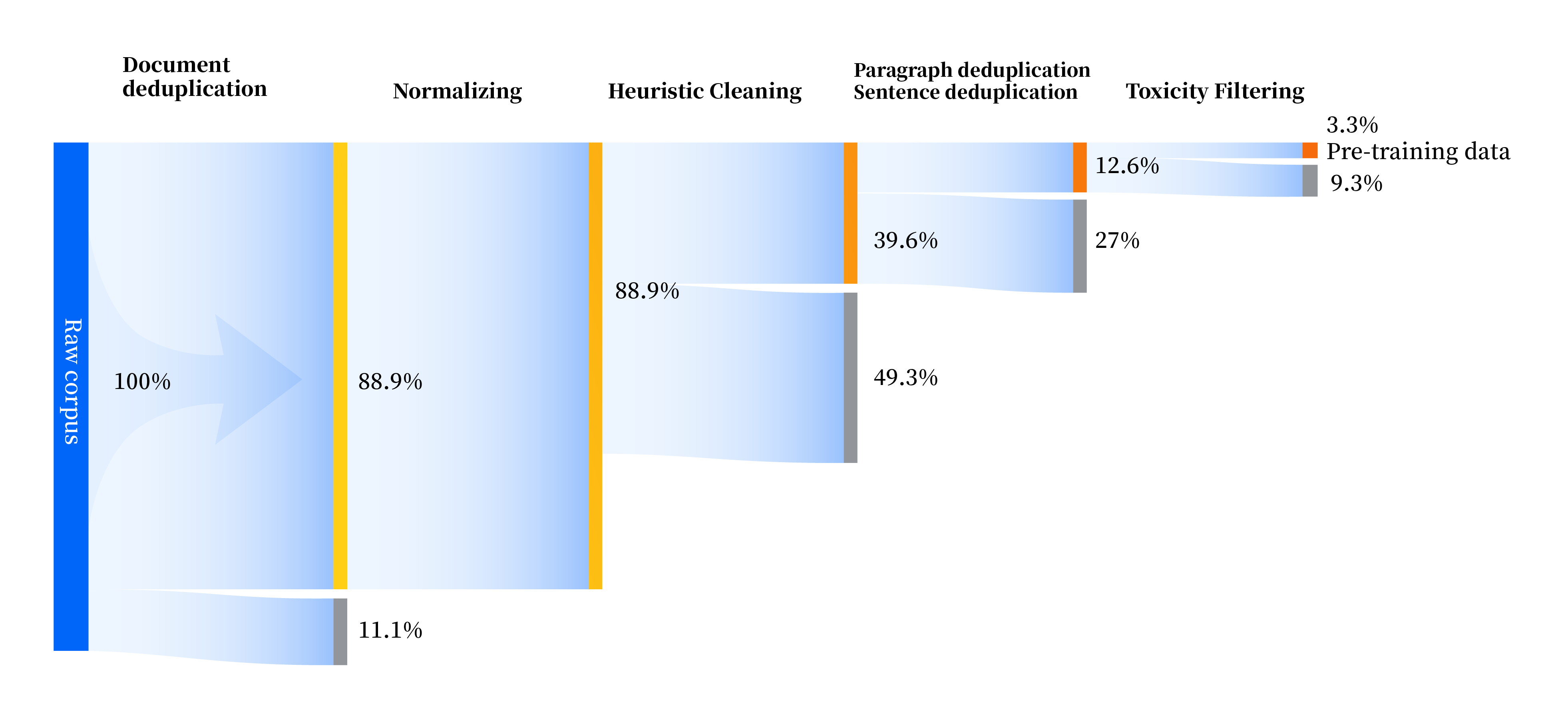

- 我们构建了一套全方位提升数据质量的数据处理流水线,包括标准化、启发式清洗、多级去重、毒性过滤四个模块。我们共收集了 240TB 原始数据,预处理后仅剩 10.6TB 高质量数据。整体流程如下:

- We establish a comprehensive data processing pipeline to enhance data quality in all aspects. This pipeline comprises four modules: normalizing, heuristic cleaning, multi-level deduplication, and toxicity filtering. 240 terabytes of raw data are collected for pre-training, and only 10.6 terabytes of high-quality data remain after preprocessing. Details of the data processing pipeline are as follows:

## 协议/License

本项目中的代码依照 [Apache-2.0](https://github.com/wenge-research/YAYI2/blob/main/LICENSE) 协议开源,社区使用 YAYI 2 模型和数据需要遵循[雅意YAYI 2 模型社区许可协议](https://github.com/wenge-research/YAYI2/blob/main/COMMUNITY_LICENSE)。若您需要将雅意 YAYI 2系列模型或其衍生品用作商业用途,请根据[《雅意 YAYI 2 模型商用许可协议》](https://github.com/wenge-research/YAYI2/blob/main/COMMERCIAL_LICENSE)将商用许可申请登记信息发送至指定邮箱 [yayi@wenge.com](mailto:yayi@wenge.com)。审核通过后,雅意将授予您商用版权许可,请遵循协议中的商业许可限制。

The code in this project is open-sourced under the [Apache-2.0](https://github.com/wenge-research/YAYI2/blob/main/LICENSE) license. The use of YaYi series model weights and data must adhere to the [YAYI 2 Community License](https://github.com/wenge-research/YAYI2/blob/main/COMMUNITY_LICENSE). If you intend to use the YAYI 2 series models or their derivatives for commercial purposes, please submit your commercial license application and registration information to [yayi@wenge.com](mailto:yayi@wenge.com), following the [YAYI 2 Commercial License](https://github.com/wenge-research/YAYI2/blob/main/COMMERCIAL_LICENSE). Upon approval, YAYI will grant you a commercial copyright license, subject to the commercial license restrictions outlined in the agreement.

## 引用/Citation

如果您在工作中使用了我们的模型或者数据,请引用我们的论文。

If you are using the resource for your work, please cite our paper.

```

@article{YAYI 2,

author = {Yin Luo, Qingchao Kong, Nan Xu, et.al.},

title = {YAYI 2: Multilingual Open Source Large Language Models},

journal = {arXiv preprint arXiv:2312.14862},

url = {https://arxiv.org/abs/2312.14862},

year = {2023}

}

``` |

PipableAI/pip-txt-to-sql-spider-bird-dataset | ---

dataset_info:

features:

- name: schema

dtype: string

- name: question

dtype: string

- name: query

dtype: string

splits:

- name: train

num_bytes: 24825271

num_examples: 16428

download_size: 1320142

dataset_size: 24825271

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

# Dataset Card for "spider-bird"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) |

BoyaWu10/Bunny-v1_0-data | ---

license: apache-2.0

task_categories:

- visual-question-answering

- question-answering

language:

- en

pretty_name: Bunny-v1.0 Dataset

size_categories:

- 1M<n<10M

---

# Bunny-v1.0 Dataset Card

📖 [Technical report](https://arxiv.org/abs/2402.11530) | 🤗 [Bunny-v1.0-3B](https://huggingface.co/BAAI/Bunny-v1_0-3B) | 🏠 [Code](https://github.com/BAAI-DCAI/Bunny) | 🐰 [Demo](https://wisemodel.cn/space/baai/Bunny)

Bunny is a family of lightweight multimodal models.

Bunny-v1.0-data is the training dataset for Bunny-v1.0 series, including [Bunny-v1.0-3B](https://huggingface.co/BAAI/Bunny-v1_0-3B).

## Pretrain

We use a high-quality coreset with less duplicates and more informative samples of LAION-2B built by [this work](https://github.com/BAAI-DCAI/Dataset-Pruning/tree/main/LAION).

We randomly sample 2 million image-text pairs from the coreset and convert them to training format.

The pretraining data and images can be found in `pretrain` folder.

## Finetune

We build Bunny-695K by modifying [SVIT-mix-665K](https://arxiv.org/abs/2307.04087) for finetuning.

The finetuning data can be found in `finetune` folder.

## Usage

The images are packed into multiple packages.

After downloading the images, run the following script to merge them into one:

```shell

cat images.tar.gz.part-* > images.tar.gz

```

Then unpack the package with following command:

```shell

tar -xvzf images.tar.gz

```

## License

The content of this project itself is licensed under the Apache license 2.0. |

Doctor-Shotgun/theory-of-mind-dpo | ---

language:

- en

---

This is [grimulkan/theory-of-mind](https://huggingface.co/datasets/grimulkan/theory-of-mind) with "rejected" responses generated using [mistralai/Mistral-7B-Instruct-v0.2](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.2), and the file formatted for use in DPO training.

The code used to generate the dataset can be found in this repository: https://github.com/DocShotgun/LLM-datagen |

somosnlp/es-inclusive-language | ---

language:

- es

size_categories:

- 1K<n<10K

task_categories:

- text2text-generation

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: validation

path: data/validation-*

- split: test

path: data/test-*

dataset_info:

features:

- name: gender_exclusive

dtype: string

- name: gender_inclusive

dtype: string

- name: difficulty

dtype: string

- name: origin

dtype: string

splits:

- name: train

num_bytes: 630817

num_examples: 3212

- name: validation

num_bytes: 139222

num_examples: 721

- name: test

num_bytes: 50611

num_examples: 263

download_size: 397549

dataset_size: 820650

license: cc-by-nc-sa-4.0

---

# Dataset card for es-inclusive-language

Languages are powerful tools to communicate ideas, but their use is not impartial. The selection of words carries inherent biases and reflects subjective perspectives. In some cases, language is wielded to enforce ideologies, marginalize certain groups, or promote specific political agendas.

Spanish is not the exception to that. For instance, when we say “los alumnos” or “los ingenieros”, we are excluding women from those groups. Similarly, expressions such as “los gitanos” o “los musulmanes” perpetuate discrimination against these communities.

In response to these linguistic challenges, this dataset offers neutral alternatives in accordance with official guidelines on inclusive language from various Spanish speaking countries. Its purpose is to provide grammatically correct and inclusive solutions to situations where our language choices might otherwise be exclusive.

## Dataset Structure

This dataset consists of pairs of texts with one entry featuring exclusive language and the other one its corresponding inclusive rewrite. All pairs are tagged with the origin (source) of the data and, in order to account for completeness of inclusive translation, also with labels for translation difficulty.

### Difficulty tag descriptions

We used different labels, most of them gender related, and can be describe like this:

| Tag | Description | Example |

|-----------------------|---------------------------------------------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| no_cambia | No changes are needed | "Los alumnos Carlos y Manuel son muy problemáticos" cannot be translated as "El alumnado Carlos y Manuel son muy problemáticos” |

| plural_complejo | Plural words for which there is not a neutral term. There are different formulas that will vary according to the context. | "Los agricultores" -> "La comunidad agrícola", "Los y las agricultoras". “Las limpiadoras” -> “El equipo de limpieza”. More: "El grupo de...", "El sector de...", "El personal de..." |

| plural_neutro | Change the plural for a generic noun. | "Los alumnos" -> "El alumnado" |

| culturas | People and cultures | "Los andaluces" -> "El pueblo andaluz", "La comunidad andaluza" |

| feminizar_profesiones | Professions with androcentric feminine forms | “La médico” -> "La médica". “La técnico de sonido” -> "La técnica de sonido" |

| nombres_propios | Proper names | "Los alumnos Carlos y Manuel son muy problemáticos" cannot be translated as "El alumnado es muy problemático |

| persona_generica | Reference to a generic person | "Nota al lector" -> "Nota a quien lee", "Nota a la persona que lee" |

| dificultades_variadas | Mix of difficulties (to tag big chunks of diverse data) | |

| plurales | Mix of neutral and complex plurals | |

| falsa_concordancia | Androcentric agreement errors | "Estas siete parejas van a dar lo mejor de sí mismos" -> "Estas siete parejas van a dar lo mejor de sí mismas." |

| omision | The subject or some pronouns are omitted, or the phrase is restructured with verboids. | "los participantes mantendrán un debate" -> "habrá un debate", "Si los científicos trabajan adecuadamente" -> "Trabajando adecuadamente, "los estudiantes" -> "estudiantes |

| terminologia | Correction of terms with ableist, racist, or other types of discrimination bias. | |

| parafrasis | Avoid words with generic connotations by reformulating the phrase | |

| otros | Difficulties that don’t fit in the other labels | |

### Origin tags descriptions

Data quality can depend on their origin, so data are tagged with origin labels according to this table:

| Tag | Description | Link to origin |

|---------------------------|----------------------------------------------------------------------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| neutral_es | Curated and refined version of neutral-es dataset | https://huggingface.co/datasets/hackathon-pln-es/neutral-es |

| GPT-3.5_fewshot | Chat GPT-3.5 generated with few shot technique | |

| GPT-3.5_CaDi <sup>*</sup> | Data created based on the dataset used for developing CaDi project<sup>*</sup> | https://lenguaje-incluyente.ibero.mx/ |

| GPT-3.5_fs_multiplication | Data multiplicated from GPT-3.5_fewshot using GPT-3.5 | |

| guia_CCGG | Examples from Spanish General Courts language inclusive Guide | https://www.congreso.es/docu/igualdad/Recomendaciones_uso_no_sexista_lenguajeCC.GG..pdf |

| guia_TAI | Examples from Trenes Argentinos' Guide to the use of inclusive language | https://www.argentina.gob.ar/sites/default/files/guia_para_uso_de_lenguaje_inclusivo_v1.pdf |

| guia_CONICET | Examples from Guide to inclusive, non-sexist language (CONICET) | https://cenpat.conicet.gov.ar/wp-content/uploads/sites/91/2020/08/Guia-lenguaje-inclusivo-no-sexista-CENPAT_final-1.pdf |

| guia_INAES | Examples of Guidelines for Inclusive Language Recommendations (INAES) | https://www.argentina.gob.ar/sites/default/files/2020/10/lenguaje_inclusivo_inaes_2021.pdf |

| guia_CHRYSALLIS | Examples from Practical Guide to Inclusive Language (Chrysallis) | https://www.lgbtqiahealtheducation.org/wp-content/uploads/2020/04/Guia-practica-de-lenguaje-inclusivo-Chrysallis.pdf |

| guia_ONU | Examples from Guidance for the use of gender-inclusive language (UN) | https://www.unwomen.org/sites/default/files/Headquarters/Attachments/Sections/Library/Gender-inclusive%20language/Guidelines-on-gender-inclusive-language-es.pdf |

| guia_MX | Examples from Manual for the use of inclusive and gender-sensitive language (MX) | https://www.gob.mx/cms/uploads/attachment/file/183695/Manual_Lenguaje_Incluyente_con_perspectiva_de_g_nero-octubre-2016.pdf |

| guia_CL | Examples from Gender Inclusive Language Guide of the Government of Chile | https://www.cultura.gob.cl/wp-content/uploads/2023/01/guia-de-lenguaje-inclusivo-de-genero.pdf |

| guia_IEM | Examples from Uso del Lenguaje Inclusivo de Género | https://secretariagenero.poder-judicial.go.cr/images/Documentos/LenguajeInclusivo/Documentos/Uso-de-lenguaje-inclusivo-de-Genero-IEM-UNA.pdf |

| human_combinatory | Combinatorics of text fragments generated with GPT3.5 | |

| GPT-4_human | Chat GPT-4 generated and human revised | |

| human | Human created | |

<sup>*</sup>©Universidad Iberoamericana, A.C. , Ciudad de México, México

<sup>*</sup>©Capitolina Díaz Martínez, Elvia María Guadalupe González del Pliego Dorantes, Marco Antonio López Hernández, Alberto López Medina, Héctor Celallos Avalos, Laura Mejía Hernández

## Data collection process

The data used for training the model has been sourced from various origins. The first and more important source was a curated and refined version of [es_neutral](https://huggingface.co/datasets/hackathon-pln-es/neutral-es)

In addition, we manually generated data based on Official Guidelines from different Spanish speaking countries. Finally, we augmented this data by experimenting with various prompts and Few-Shot learning techniques. We needed to be as explicit as possible, otherwise we wouldn’t get good results. For example:

We tried to be as inclusive as possible, paying close attention to the classification of difficulties that one could encounter in texts like these.

Moreover, we took care to incorporate numerous counterexamples, recognizing that there are instances where neutrality is not required in a sentence. For instance, “Las arquitectas María Nuñez y Rosa Loria presentaron el proyecto” should not be rewritten as “El equipo de arquitectura María Nuñez y Rosa Loria presentó el proyecto”.

It’s important to highlight that the Traductor Inclusivo not only promotes gender inclusivity but also addresses other forms of discrimination such as ableism, racism, xenophobia, and more.

### Sources

- [Recomendaciones para un uso no sexista del lenguaje en la Administracio n parlamentaria (España)](https://www.congreso.es/docu/igualdad/Recomendaciones_uso_no_sexista_lenguajeCC.GG..pdf)

- [Guía para uso de lenguaje inclusivo (Argentina)](https://www.argentina.gob.ar/sites/default/files/guia_para_uso_de_lenguaje_inclusivo_v1.pdf)

- [Guía de lenguaje inclusivo no sexista CCT CONICET-CENPAT (Argentina)](https://cenpat.conicet.gov.ar/wp-content/uploads/sites/91/2020/08/Guia-lenguaje-inclusivo-no-sexista-CENPAT_final-1.pdf)

- [Guía de recomendaciones para lenguaje inclusivo (Argentina)](https://www.argentina.gob.ar/sites/default/files/2020/10/lenguaje_inclusivo_inaes_2021.pdf)

- [Guía práctica de lenguaje inclusivo (España)](https://www.lgbtqiahealtheducation.org/wp-content/uploads/2020/04/Guia-practica-de-lenguaje-inclusivo-Chrysallis.pdf)

- [Guía para el uso de un lenguaje inclusivo al género (ONU)](https://www.unwomen.org/sites/default/files/Headquarters/Attachments/Sections/Library/Gender-inclusive%20language/Guidelines-on-gender-inclusive-language-es.pdf)

- [Manual para el uso de un lenguaje incluyente y con perspectiva de género (México)](https://www.gob.mx/cms/uploads/attachment/file/183695/Manual_Lenguaje_Incluyente_con_perspectiva_de_g_nero-octubre-2016.pdf)

- [Guía de lenguaje inclusivo de Género (Chile)](https://www.cultura.gob.cl/wp-content/uploads/2023/01/guia-de-lenguaje-inclusivo-de-genero.pdf)

- [Uso del Lenguaje Inclusivo de Género, IEM (Costa Rica)](https://secretariagenero.poder-judicial.go.cr/images/Documentos/LenguajeInclusivo/Documentos/Uso-de-lenguaje-inclusivo-de-Genero-IEM-UNA.pdf)

- [Uso no sexista de la lengua, UOC (España)](https://www.uoc.edu/portal/es/servei-linguistic/redaccio/tractament-generes/index.html)

- https://huggingface.co/datasets/hackathon-pln-es/neutral-es

## Bias

As bias is what we want to tackle, this corpus pays special attention to different types of discrimination, such as sexism, racism and ableism.

## Social Impact

An inclusive translator holds significant social impact by promoting equity and representation within texts. By rectifying biases ingrained in language and fostering inclusivity, it combats discrimination, amplifies the visibility of marginalized groups, and contributes to the cultivation of a more inclusive and respectful society.

## Team members

- **Gaia Quintana Fleitas** (gaiaq)

- **Andrés Martínez Fernández-Salguero** (andresmfs)

- **Imanuel Rozenberg** (manu_20392)

- **Miguel López** (wizmik12)

- **Josué Sauca** (josue_sauca) |

ncduy/mt-en-vi | ---

annotations_creators:

- found

language_creators:

- found

language:

- en

- vi

license:

- mit

multilinguality:

- translation

pretty_name: "Machine Translation Paired English-Vietnamese Sentences"

size_categories:

- 1M<n<10M

source_datasets:

- own

- open_subtitles

- tatoeba

- opus_tedtalks

- qed_amara

- opus_wikipedia

task_categories:

- conditional-text-generation

task_ids:

- machine-translation

---

# Dataset Card for Machine Translation Paired English-Vietnamese Sentences

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:**

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

[More Information Needed]

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

The language of the dataset text sentence is English ('en') and Vietnamese (`vi`).

## Dataset Structure

### Data Instances

An instance example:

```

{

'en': 'And what I think the world needs now is more connections.',

'vi': 'Và tôi nghĩ điều thế giới đang cần bây giờ là nhiều sự kết nối hơn.',

'source': 'TED2020 v1'

}

```

### Data Fields

- `en` (str): English sentence

- `vi` (str): Vietnamese sentence

- `source` (str): Source.

### Data Splits

The dataset is split in train, validation and test.

| | Tain | Validation | Test |

|--------------------|------:|-----------:|-----:|

| Number of examples |2884451| 11316| 11225|

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

[More Information Needed]

### Contributions

Thanks to [@ncduy0303](https://github.com/ncduy0303) for adding this dataset. |

huggan/anime-faces | ---

license: cc0-1.0

---

# Dataset Card for anime-faces

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-instances)

- [Data Splits](#data-instances)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

## Dataset Description

- **Homepage:** https://www.kaggle.com/soumikrakshit/anime-faces

- **Repository:** https://www.kaggle.com/soumikrakshit/anime-faces

- **Paper:** [Needs More Information]

- **Leaderboard:** [Needs More Information]

- **Point of Contact:** https://github.com/Mckinsey666

### Dataset Summary

This is a dataset consisting of 21551 anime faces scraped from www.getchu.com, which are then cropped using the anime face detection algorithm in https://github.com/nagadomi/lbpcascade_animeface. All images are resized to 64 * 64 for the sake of convenience. Please also cite the two sources when using this dataset.

Some outliers are still present in the dataset:

Bad cropping results

Some non-human faces.

Feel free to contribute to this dataset by adding images of similar quality or adding image labels.

### Supported Tasks and Leaderboards

[Needs More Information]

### Languages

[Needs More Information]

## Dataset Structure

### Data Instances

[Needs More Information]

### Data Fields

Has a data folder with png files inside.

### Data Splits

Only training set

## Dataset Creation

### Curation Rationale

[Needs More Information]

### Source Data

#### Initial Data Collection and Normalization

[Needs More Information]

#### Who are the source language producers?

[Needs More Information]

### Annotations

#### Annotation process

[Needs More Information]

#### Who are the annotators?

[Needs More Information]

### Personal and Sensitive Information

[Needs More Information]

## Considerations for Using the Data

### Social Impact of Dataset

[Needs More Information]

### Discussion of Biases

[Needs More Information]

### Other Known Limitations

[Needs More Information]

## Additional Information

### Dataset Curators

[Needs More Information]

### Licensing Information

[Needs More Information]

### Citation Information

[Needs More Information]

---

annotations_creators:

- found

language_creators:

- found

languages:

- unknown

licenses:

- unknown

multilinguality:

- unknown

pretty_name: anime-faces

size_categories:

- unknown

source_datasets:

- original

task_categories:

- image-classification

task_ids: []

--- |

inverse-scaling/NeQA | ---

language:

- en

size_categories:

- 10K<n<100K

license:

- cc-by-sa-4.0

multilinguality:

- monolingual

pretty_name: NeQA - Can Large Language Models Understand Negation in Multi-choice Questions?

source_datasets: []

task_categories:

- multiple-choice

- question-answering

- zero-shot-classification

train-eval-index:

- config: inverse-scaling--NeQA

task: text-generation

task_id: text_zero_shot_classification

splits:

eval_split: train

col_mapping:

prompt: text

classes: classes

answer_index: target

---

## NeQA: Can Large Language Models Understand Negation in Multi-choice Questions? (Zhengping Zhou and Yuhui Zhang)

### General description

This task takes an existing multiple-choice dataset and negates a part of each question to see if language models are sensitive to negation. The authors find that smaller language models display approximately random performance whereas the performance of larger models become significantly worse than random.

Language models failing to follow instructions in the prompt could be a serious issue that only becomes apparent on a task once models are sufficiently capable to perform non-randomly on the task.

### Example

The following are multiple choice questions (with answers) about common sense.

Question: If a cat has a body temp that is below average, it isn't in

A. danger

B. safe ranges

Answer:

(where the model should choose B.)

## Submission details

### Task description

Negation is a common linguistic phenomenon that can completely alter the semantics of a sentence by changing just a few words.

This task evaluates whether language models can understand negation, which is an important step towards true natural language understanding.

Specifically, we focus on negation in open-book multi-choice questions, considering its wide range of applications and the simplicity of evaluation.

We collect a multi-choice question answering dataset, NeQA, that includes questions with negations.

When negation is presented in the question, the original correct answer becomes wrong, and the wrong answer becomes correct.

We use the accuracy metric to examine whether the model can understand negation in the questions and select the correct answer given the presence of negation.

We observe a clear inverse scaling trend on GPT-3, demonstrating that larger language models can answer more complex questions but fail at the last step to understanding negation.

### Dataset generation procedure

The dataset is created by applying rules to transform questions in a publicly available multiple-choice question answering dataset named OpenBookQA. We use a simple rule by filtering questions containing "is" and adding "not" after it. For each question, we sample an incorrect answer as the correct answer and treat the correct answer as the incorrect answer. We randomly sample 300 questions and balance the label distributions (50% label as "A" and 50% label as "B" since there are two choices for each question)..

### Why do you expect to see inverse scaling?

For open-book question answering, larger language models usually achieve better accuracy because more factual and commonsense knowledge is stored in the model parameters and can be used as a knowledge base to answer these questions without context.

A higher accuracy rate means a lower chance of choosing the wrong answer. Can we change the wrong answer to the correct one? A simple solution is to negate the original question. If the model cannot understand negation, it will still predict the same answer and, therefore, will exhibit an inverse scaling trend.

We expect that the model cannot understand negation because negation introduces only a small perturbation to the model input. It is difficult for the model to understand that this small perturbation leads to completely different semantics.

### Why is the task important?

This task is important because it demonstrates that current language models cannot understand negation, a very common linguistic phenomenon and a real-world challenge to natural language understanding.

Why is the task novel or surprising? (1+ sentences)

To the best of our knowledge, no prior work shows that negation can cause inverse scaling. This finding should be surprising to the community, as large language models show an incredible variety of emergent capabilities, but still fail to understand negation, which is a fundamental concept in language.

## Results

[Inverse Scaling Prize: Round 1 Winners announcement](https://www.alignmentforum.org/posts/iznohbCPFkeB9kAJL/inverse-scaling-prize-round-1-winners#Zhengping_Zhou_and_Yuhui_Zhang__for_NeQA__Can_Large_Language_Models_Understand_Negation_in_Multi_choice_Questions_)

|

tasksource/cycic_multiplechoice | ---

license: apache-2.0

task_categories:

- multiple-choice

language:

- en

---

https://colab.research.google.com/drive/16nyxZPS7-ZDFwp7tn_q72Jxyv0dzK1MP?usp=sharing

```

@article{Kejriwal2020DoFC,

title={Do Fine-tuned Commonsense Language Models Really Generalize?},

author={Mayank Kejriwal and Ke Shen},

journal={ArXiv},

year={2020},

volume={abs/2011.09159}

}

```

added for

```

@article{sileo2023tasksource,

title={tasksource: Structured Dataset Preprocessing Annotations for Frictionless Extreme Multi-Task Learning and Evaluation},

author={Sileo, Damien},

url= {https://arxiv.org/abs/2301.05948},

journal={arXiv preprint arXiv:2301.05948},

year={2023}

}

``` |

anon8231489123/Omegle_logs_dataset | ---

license: apache-2.0

language:

- en

---

~10k conversations from Omegle. Scraped using: http://web.archive.org/cdx/search/xd?url=logs.omegle.com/*&fl=timestamp,original,statuscode&output=json. For these logs to have ended up on the cdx, it means the url was posted publicly at some point.

* PII removed by searching for conversations with these words: forbidden_words = ["kik", "telegram", "skype", "wickr", "discord", "dropbox", "insta ", "insta?", "instagram", "snap ", "snapchat"].

* Conversations with racial slurs removed.

* English only.

* Obviously, the dataset still contains a lot of (sometimes extreme) NSFW content. Do not view or use this dataset if you are under 18.

General process for scraping (There are probably other datasets that can be scraped using this method):

1. Go to page in archive.org cdx

2. Check if the page contains a log

3. Download the log image

4. Use OCR to read it

5. Save it to a json file.

This dataset could be useful for training casual conversational AI's but it likely still requires more filtering. Use at your own risk. |

kkcosmos/instagram-images-with-captions | ---

license: unknown

dataset_info:

features:

- name: image

dtype: image

- name: item_id

dtype: int64

- name: image_path

dtype: string

- name: caption

dtype: string

splits:

- name: train

num_bytes: 3516512787.609302

num_examples: 28360

- name: test

num_bytes: 391305063.2386981

num_examples: 3152

download_size: 0

dataset_size: 3907817850.848

---

|

truehealth/medicationqa | ---

dataset_info:

features:

- name: Question

dtype: string

- name: Focus (Drug)

dtype: string

- name: Question Type

dtype: string

- name: Answer

dtype: string

- name: Section Title

dtype: string

- name: URL

dtype: string

splits:

- name: train

num_bytes: 403030

num_examples: 690

download_size: 0

dataset_size: 403030

---

# Dataset Card for "medicationqa"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) |

nampdn-ai/tiny-webtext | ---

license: mit

viewer: true

task_categories:

- text-generation

language:

- en

pretty_name: Tiny WebText

size_categories:

- 1M<n<10M

source_datasets:

- tiiuae/falcon-refinedweb

---

# Tiny WebText

The Tiny WebText dataset is designed to help models learn about perception on web text while neutralizing the bias of the source text using critical thinking methods. By providing a rich and diverse set of texts, I aim to improve the ability of models to understand and analyze information in a more objective and unbiased manner.

This dataset can be used to train and evaluate natural language processing and machine learning models, with the goal of improving their perception and critical thinking skills. It is a valuable resource for researchers and developers, especially those working in the fields of machine learning and data engineering.

The dataset is augmented using subset of [Falcon-RefinedWeb](https://arxiv.org/abs/2306.01116), which provides additional augmented text using [Falcon-7B-Instruct](https://huggingface.co/tiiuae/falcon-7b-instruct) model to improve the quality and diversity of the texts.

I welcome any feedback or contributions. Thank you for your interest in my work! |

Shrey-1329/cxiu_hf_dataset | ---

dataset_info:

features:

- name: image

dtype: image

- name: text

dtype: string

splits:

- name: train

num_bytes: 1108945726.54

num_examples: 6060

download_size: 1108991167

dataset_size: 1108945726.54

task_categories:

- image-to-text

language:

- en

tags:

- medical

size_categories:

- 1K<n<10K

---

# Indiana University Chest Xray Dataset Card

## Data sources:

This is a converted and processed version of the open access pneumonia chest x-ray dataset provided by the indiana university.<br>

You can see its information page [here](https://openi.nlm.nih.gov/faq).<br>

The compressed images in the png format were downloaded from [here](https://openi.nlm.nih.gov/imgs/collections/NLMCXR_png.tgz) and the corresponding reports from [here](https://openi.nlm.nih.gov/imgs/collections/NLMCXR_reports.tgz).

## Data fields:

There are two fields: image and text.

The images are the x-rays and the texts are their associated findings.

## Preprocessing done:

1. **Make all text lowercase**: Convert all text to lowercase to ensure consistent and case-insensitive processing.

2. **Remove all punctuation**: Eliminate any punctuation marks (e.g., periods, commas, exclamation marks) from the text to avoid interference in language analysis.

3. **Remove all numbers**: Eliminate all numeric characters from the text since they might not be relevant for certain natural language processing tasks.

4. **Remove all words with 2 or more Xs in a row**: Remove any words that contain two or more consecutive occurrences of the letter "X" as they may not contribute meaningful information.

5. **Remove the bottom and top 2% of text by length**: Discard the shortest and longest text samples, removing the bottom 2% and top 2% of the text's length, respectively. This step is aimed at reducing the impact of outliers and ensuring a more balanced dataset. |

larryvrh/PIPPA-TavernFormat | ---

dataset_info:

features:

- name: categories

sequence: string

- name: name

dtype: string

- name: description

dtype: string

- name: first_msg

dtype: string

- name: personality

dtype: string

- name: example_dialogues

sequence: string

- name: conversation

list:

- name: is_human

dtype: bool

- name: message

dtype: string

splits:

- name: train

num_bytes: 174673097

num_examples: 11841

download_size: 88204818

dataset_size: 174673097

license: agpl-3.0

task_categories:

- conversational

language:

- en

tags:

- not-for-all-audiences

- roleplay

- conversational

size_categories:

- 10K<n<100K

---

# Dataset Card for "PIPPA_TavernFormat"

Converted from the deduped version (pippa_deduped.jsonl) of [PygmalionAI/PIPPA](https://huggingface.co/datasets/PygmalionAI/PIPPA?not-for-all-audiences=true).

Since the CAI format and the Tavern format does not align exactly, there maybe some mismatches between fields, especially character description and personality. |

CollectiveCognition/chats-data-2023-09-27 | ---

license: mit

---

# Dataset Card for "Collective Cognition ChatGPT Conversations"

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

## Dataset Description

### Dataset Summary

The "Collective Cognition ChatGPT Conversations" dataset is a collection of chat logs between users and the ChatGPT model. These conversations have been shared by users on the "Collective Cognition" website. The dataset provides insights into user interactions with language models and can be utilized for multiple purposes, including training, research, and analysis.

Visit Collective Cognition to browse or contribute chats here: https://collectivecognition.ai

### Supported Tasks

- **Language Model Finetuning**: Training through behavior cloning and knowledge instilling.

- **Dialogue Understanding**: Analyzing the flow and structure of conversations.

- **Sentiment Analysis**: Understanding the sentiment or emotion in the dialogues.

- **Topic Modeling**: Determining the topics discussed within the chats.

### Languages

The primary language of the dataset is English, but any language chat may be present in the dataset as users share more chats.

## Dataset Structure

### Data Instances

A typical data instance includes a chat log with a title, model name, whether the chat used custom instructions (currently not included if so), and the content of the message with the role of the sender (either "human" or "gpt").

The dataset is formatted to conform with ShareGPT standard.

This is an example entry in the dataset, noting that turns are variable and can be many or as few as two:

```json

{

"title": "Interest Formulas Clarification",

"model_name": "GPT-4",

"custom_instruction": false,

"conversations": [

{

"from": "human",

"value": "Is this correct? The formula for calculating simple interest (also known as Compound Interest) is given by I = P * r^n, where \n- I represents the final amount of money, also called principal or capital. This value will keep on increasing at a fixed rate per unit time denoted by 'r' and number of times denoted by 'n'. The initial investment represented by 'P', while the percentage rate expressed as an annual fraction, i.e., p/100 representing the percentage to be paid annually. In other words it\u2019s how much you earn in one year out of your original $100 invested with a 5% annual return compounded monthly."

},

{

"from": "assistant",

"value": "I'm sorry, but there seems to be some confusion in your question. The formula given and the definitions of the variables seem to be mixed up between simple interest and compound interest. Let me clarify:\n\n1. Simple Interest: This type of interest is calculated only on the original amount (principal) that you invested or loaned. The formula for simple interest is I = PRT, where:\n - I is the interest\n - P is the principal amount (initial investment)\n - R is the annual interest rate (in decimal form, so a 5% rate would be 0.05)\n - T is the time the money is invested or borrowed for, in years.\n\n2. Compound Interest: This type of interest is calculated on the initial principal and also on the accumulated interest of previous periods of a deposit or loan. The formula for compound interest is A = P(1 + r/n)^(nt), where:\n - A is the amount of money accumulated after n years, including interest.\n - P is the principal amount (the initial amount of money)\n - r is the annual interest rate (in decimal form, so a 5% rate would be 0.05)\n - n is the number of times that interest is compounded per year\n - t is the number of years the money is invested or borrowed for.\n\nIn both cases, the interest rate is usually expressed as an annual rate, regardless of the length of the term."

}

]

}

```

### Data Splits

Currently, the dataset is not divided into specific splits (train, test, validation).

## Dataset Creation

### Curation Rationale

The dataset was curated to provide insights into how users interact with language models and to contribute to the broader NLP community's resources.

### Source Data

The data originates from user contributions on the "Collective Cognition" website.

### Personal and Sensitive Information

All chats uploaded to the Collective Cognition website are made public, and are uploaded as a new dataset periodically. If you would like to have your chat removed, please email admin@collectivecognition.ai

## Considerations for Using the Data

### Social Impact of Dataset

The dataset offers a glimpse into the interaction dynamics between humans and AI models. It can be instrumental for researchers studying human-AI collaboration.

### Discussion of Biases

There might be biases in the dataset based on the types of users contributing chat logs and the topics they discuss with ChatGPT, particularly centered around what users may utilize ChatGPT for the most.

### Other Known Limitations

The dataset is dependent on the voluntary contributions of users. Hence, it might not represent the entire spectrum of interactions that users have with ChatGPT.

## Additional Information

### Licensing Information

MIT |

alexandrainst/nst-da | ---

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

dataset_info:

features:

- name: audio

dtype:

audio:

sampling_rate: 16000

- name: text

dtype: string

- name: speaker_id

dtype: int64

- name: age

dtype: int64

- name: sex

dtype: string

- name: dialect

dtype: string

- name: recording_datetime

dtype: string

splits:

- name: train

num_bytes: 55199435558.0

num_examples: 182605

- name: test

num_bytes: 8894080220.0

num_examples: 54747

download_size: 5358057252

dataset_size: 64093515778.0

size_categories:

- 100K<n<1M

license: cc0-1.0

task_categories:

- automatic-speech-recognition

- text-to-speech

language:

- da

pretty_name: NST-da

---

# Dataset Card for NST-da

## Dataset Description

- **Repository:** <https://www.nb.no/sprakbanken/en/resource-catalogue/oai-nb-no-sbr-55/>

- **Point of Contact:** [Dan Saattrup Nielsen](mailto:dan.nielsen@alexandra.dk)

- **Size of downloaded dataset files:** 5.36 GB

- **Size of the generated dataset:** 64.09 GB

- **Total amount of disk used:** 69.45 GB

### Dataset Summary

This dataset is an upload of the [NST Danish ASR Database (16 kHz) – reorganized](https://www.nb.no/sprakbanken/en/resource-catalogue/oai-nb-no-sbr-55/).

The training and test splits are the original ones.

### Supported Tasks and Leaderboards

Training automatic speech recognition is the intended task for this dataset. No leaderboard is active at this point.

### Languages

The dataset is available in Danish (`da`).

## Dataset Structure

### Data Instances

- **Size of downloaded dataset files:** 5.36 GB

- **Size of the generated dataset:** 64.09 GB

- **Total amount of disk used:** 69.45 GB

An example from the dataset looks as follows.

```

{

'audio': {

'path': 'dk14x404-05072000-1531_u0008121.wav',

'array': array([ 0.00265503, 0.00248718, 0.00253296, ..., -0.00030518,

-0.00035095, -0.00064087]),

'sampling_rate': 16000

},

'text': 'Desuden er der en svømmeprøve, en fremmedsprogstest samt en afsluttende samtale.',

'speaker_id': 404,

'age': 24,

'sex': 'Female',

'dialect': 'Storkøbenhavn',

'recording_datetime': '2000-07-05T15:31:14'

}

```

### Data Fields

The data fields are the same among all splits.

- `audio`: an `Audio` feature.

- `text`: a `string` feature.

- `speaker_id`: an `int64` feature.

- `age`: an `int64` feature.

- `sex`: a `string` feature.

- `dialect`: a `string` feature.

- `recording_datetime`: a `string` feature.

### Dataset Statistics

There are 183,205 samples in the training split, and 54,747 samples in the test split.

#### Speakers

There are 539 unique speakers in the training dataset and 56 unique speakers in the test dataset, where 54 of them are also present in the training set.

#### Age Distribution

#### Dialect Distribution

#### Sex Distribution

#### Transcription Length Distribution

## Dataset Creation

### Curation Rationale

There are not many large-scale ASR datasets in Danish.

### Source Data

The data originates from the now bankrupt company Nordisk språkteknologi (NST), whose data was transferred to the National Library of Norway, who subsequently released it into the public domain.

## Additional Information

### Dataset Curators

[Dan Saattrup Nielsen](https://saattrupdan.github.io/) from the [The Alexandra

Institute](https://alexandra.dk/) reorganised the dataset and uploaded it to the Hugging Face Hub.

### Licensing Information

The dataset is licensed under the [CC0

license](https://creativecommons.org/share-your-work/public-domain/cc0/). |

abhinand/tamil-alpaca | ---

dataset_info:

features:

- name: instruction

dtype: string

- name: input

dtype: string

- name: output

dtype: string

- name: text

dtype: string

- name: system_prompt

dtype: string

splits:

- name: train

num_bytes: 287556653

num_examples: 51876

download_size: 0

dataset_size: 287556653

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

license: gpl-3.0

task_categories:

- text-generation

language:

- ta

pretty_name: tamil-alpaca

size_categories:

- 10K<n<100K

---

# Dataset Card for "tamil-alpaca"

This repository includes a Tamil-translated version of the [Alpaca dataset](https://huggingface.co/datasets/yahma/alpaca-cleaned).

This dataset is part of the release of Tamil LLaMA family of models – an important step in advancing LLMs for the Tamil language. To dive deep into the development and capabilities of this model, please read the [research paper](https://arxiv.org/abs/2311.05845) and the [introductory blog post (WIP) ]() that outlines our journey and the model's potential impact.

**GitHub Repository:** [https://github.com/abhinand5/tamil-llama](https://github.com/abhinand5/tamil-llama)

## Models trained using this dataset

| Model | Type | Data | Base Model | # Params | Download Links |

|--------------------------|-----------------------------|-------------------|----------------------|------|------------------------------------------------------------------------|

| Tamil LLaMA 7B Instruct | Instruction following model | 145k instructions | Tamil LLaMA 7B Base | 7B | [HF Hub](https://huggingface.co/abhinand/tamil-llama-7b-instruct-v0.1) |

| Tamil LLaMA 13B Instruct | Instruction following model | 145k instructions | Tamil LLaMA 13B Base | 13B | [HF Hub](abhinand/tamil-llama-13b-instruct-v0.1) |

## Meet the Developers

Get to know the creators behind this innovative model and follow their contributions to the field:

- [Abhinand Balachandran](https://www.linkedin.com/in/abhinand-05/)

## Citation

If you use this model or any of the the Tamil-Llama datasets in your research, please cite:

```bibtex

@misc{balachandran2023tamilllama,

title={Tamil-Llama: A New Tamil Language Model Based on Llama 2},

author={Abhinand Balachandran},

year={2023},

eprint={2311.05845},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

ComponentSoft/k8s-kubectl-cot-20k | ---

dataset_info:

features:

- name: objective

dtype: string

- name: command_name

dtype: string

- name: command

dtype: string

- name: description

dtype: string

- name: syntax

dtype: string

- name: flags

list:

- name: default

dtype: string

- name: description

dtype: string

- name: option

dtype: string

- name: short

dtype: string

- name: question

dtype: string

- name: chain_of_thought

dtype: string

splits:

- name: train

num_bytes: 51338358

num_examples: 19661

download_size: 0

dataset_size: 51338358

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

# Dataset Card for "k8s-kubectl-cot-20k"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) |

Djacon/ru-izard-emotions | ---

language:

- ru

license:

- mit

multilinguality:

- russian

task_categories:

- text-classification

task_ids:

- sentiment-classification

- multi-class-classification

- multi-label-classification

pretty_name: RuIzardEmotions

tags:

- emotion

size_categories:

- 10K<n<100K

---

# Dataset Card for RuIzardEmotions

## Table of Contents

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

### Dataset Summary

The RuIzardEmotions dataset is a high-quality translation of the [go-emotions](https://huggingface.co/datasets/go_emotions) dataset and the other [emotion-detection](https://www.kaggle.com/datasets/ishantjuyal/emotions-in-text/data) dataset. It contains 30k Reddit comments labeled for 10 emotion categories (__joy__, __sadness__, __anger__, __enthusiasm__, __surprise__, __disgust__, __fear__, __guilt__, __shame__ and __neutral__).

The datasets were translated using the accurate translator [DeepL](https://www.deepl.com/translator) and additional processing. The idea for the dataset was inspired by the [Izard's model](https://en.wikipedia.org/wiki/Differential_Emotions_Scale) of human emotions.

The dataset already with predefined train/val/test splits.

### Supported Tasks and Leaderboards

This dataset is intended for multi-class, multi-label emotion classification.

### Languages

The data is in Russian.

## Dataset Structure

### Data Instances

Each instance is a reddit comment with one or more emotion annotations (or neutral).

### Data Splits

The simplified data includes a set of train/val/test splits with 24k, 3k, and 3k examples respectively.

## Considerations for Using the Data

### Social Impact of Dataset

Emotion detection is a worthwhile problem which can potentially lead to improvements such as better human/computer

interaction. However, emotion detection algorithms (particularly in computer vision) have been abused in some cases

to make erroneous inferences in human monitoring and assessment applications such as hiring decisions, insurance

pricing, and student attentiveness

## Additional Information

### Licensing Information

The GitHub repository which houses this dataset has an

[Apache License 2.0](https://github.com/Djacon/russian-emotion-detection/blob/main/LICENSE).

### Citation Information

```

@inproceedings{Djacon,

author={Djacon},

title={RuIzardEmotions: A Dataset of Fine-Grained Emotions},

year={2023}

}

``` |

pixparse/cc12m-wds | ---

license: other

license_name: conceptual-12m

license_link: LICENSE

task_categories:

- image-to-text

size_categories:

- 10M<n<100M

---

# Dataset Card for Conceptual Captions 12M (CC12M)

## Dataset Description

- **Repository:** [Conceptual 12M repository](https://github.com/google-research-datasets/conceptual-12m)

- **Paper:** [Conceptual 12M: Pushing Web-Scale Image-Text Pre-Training To Recognize Long-Tail Visual Concepts](https://arxiv.org/abs/2102.08981)

- **Point of Contact:** [Conceptual Captions e-mail](mailto:conceptual-captions@google.com)

### Dataset Summary

Conceptual 12M (CC12M) is a dataset with 12 million image-text pairs specifically meant to be used for visionand-language pre-training.

Its data collection pipeline is a relaxed version of the one used in Conceptual Captions 3M (CC3M).

### Usage

This instance of Conceptual Captions is in [webdataset](https://github.com/webdataset/webdataset/commits/main) .tar format. It can be used with webdataset library or upcoming releases of Hugging Face `datasets`.

...More Detail TBD

### Data Splits

This dataset was downloaded using img2dataset. Images resized on download if shortest edge > 512 to shortest edge = 512.

#### Train

* `cc12m-train-*.tar`

* Downloaded on 2021/18/22

* 2176 shards, 10968539 samples

## Additional Information

### Dataset Curators

Soravit Changpinyo, Piyush Sharma, Nan Ding and Radu Soricut.

### Licensing Information

The dataset may be freely used for any purpose, although acknowledgement of

Google LLC ("Google") as the data source would be appreciated. The dataset is

provided "AS IS" without any warranty, express or implied. Google disclaims all

liability for any damages, direct or indirect, resulting from the use of the

dataset.

### Citation Information

```bibtex

@inproceedings{changpinyo2021cc12m,

title = {{Conceptual 12M}: Pushing Web-Scale Image-Text Pre-Training To Recognize Long-Tail Visual Concepts},

author = {Changpinyo, Soravit and Sharma, Piyush and Ding, Nan and Soricut, Radu},

booktitle = {CVPR},

year = {2021},

}

``` |

dataautogpt3/Dalle3 | ---

license: mit

---

10,000 high-quality captions with image pairs produced by dalle3 with a raw.zip incase i uploaded it wrong. |

lovesnowbest/T-Eval | ---

license: apache-2.0

task_categories:

- question-answering

language:

- en

- zh

tags:

- code

pretty_name: teval

size_categories:

- 100M<n<1B

---

# T-Eval: Evaluating the Tool Utilization Capability of Large Language Models Step by Step

[](https://arxiv.org/abs/2312.14033)

[](./LICENSE)

## ✨ Introduction

This is an evaluation harness for the benchmark described in [T-Eval: Evaluating the Tool Utilization Capability of Large Language Models Step by Step](https://arxiv.org/abs/2312.14033).

[[Paper](https://arxiv.org/abs/2312.14033)]

[[Project Page](https://open-compass.github.io/T-Eval/)]

[[LeaderBoard](https://open-compass.github.io/T-Eval/leaderboard.html)]

[[HuggingFace](https://huggingface.co/datasets/lovesnowbest/T-Eval)]

> Large language models (LLM) have achieved remarkable performance on various NLP tasks and are augmented by tools for broader applications. Yet, how to evaluate and analyze the tool utilization capability of LLMs is still under-explored. In contrast to previous works that evaluate models holistically, we comprehensively decompose the tool utilization into multiple sub-processes, including instruction following, planning, reasoning, retrieval, understanding, and review. Based on that, we further introduce T-Eval to evaluate the tool-utilization capability step by step. T-Eval disentangles the tool utilization evaluation into several sub-domains along model capabilities, facilitating the inner understanding of both holistic and isolated competency of LLMs. We conduct extensive experiments on T-Eval and in-depth analysis of various LLMs. T-Eval not only exhibits consistency with the outcome-oriented evaluation but also provides a more fine-grained analysis of the capabilities of LLMs, providing a new perspective in LLM evaluation on tool-utilization ability.

<!--

[T-Eval: ]()<br>

Zehui Chen<sup>♠</sup>, Weihua Du<sup>♠</sup>, Wenwei Zhang<sup>♠</sup>, Kuikun Liu, Jiangning Liu, Miao Zheng, Jingming Zhuo, Songyang Zhang, Dahua Lin, Kai Chen<sup>♦</sup>, Feng Zhao<sup>♦</sup>

<sup>♠</sup> Equal Contribution<br>

<sup>♦</sup> Corresponding Author -->

<div>

<center>

<img src="figs/teaser.png">

</div>

## 🚀 What's New

- **[2024.02.18]** Release new [data](https://drive.google.com/file/d/1nQ0pn26qd0FGU8UkfSTxNdu6uWI0QXTY/view?usp=sharing) (both Chinese and English) and code for faster inference!🚀🚀🚀 The leaderboard will be updated soon! We also provide template examples for reference.

- **[2024.01.08]** Release [ZH Leaderboard](https://open-compass.github.io/T-Eval/leaderboard_zh.html) and ~~[ZH data](https://drive.google.com/file/d/1z25duwZAnBrPN5jYu9-8RMvfqnwPByKV/view?usp=sharing)~~, where the questions and answer formats are in Chinese. (公布了中文评测数据集和榜单)✨✨✨

- **[2023.12.22]** Paper available on [ArXiv](https://arxiv.org/abs/2312.14033). 🔥🔥🔥

- **[2023.12.21]** Release the test scripts and data for T-Eval. 🎉🎉🎉

## 🧾 TODO

- [x] Change the role of function response from `system` to `function`.

- [x] Merge consecutive same role conversations.

- [x] Provide template configs for open-sourced models.

- [x] Provide dev set for T-Eval, reducing the evaluation time.

- [x] Optimize the inference pipeline of huggingface model provided by Lagent, which will be 3x faster. **(Please upgrade Lagent to v0.2)**

- [ ] Support inference on Opencompass.

~~NOTE: These TODOs will be started after 2024.2.1~~ Thanks for your patience!

## 🛠️ Preparations

```bash

$ git clone https://github.com/open-compass/T-Eval.git

$ cd T-Eval

$ pip install requirements.txt

```

## 🛫️ Get Started

We support both API-based models and HuggingFace models via [Lagent](https://github.com/InternLM/lagent).

### 💾 Test Data

We provide both google drive & huggingface dataset to download test data:

1. Google Drive

~~[[EN data](https://drive.google.com/file/d/1ebR6WCCbS9-u2x7mWpWy8wV_Gb6ltgpi/view?usp=sharing)] (English format) [[ZH data](https://drive.google.com/file/d/1z25duwZAnBrPN5jYu9-8RMvfqnwPByKV/view?usp=sharing)] (Chinese format)~~

[T-Eval Data](https://drive.google.com/file/d/1nQ0pn26qd0FGU8UkfSTxNdu6uWI0QXTY/view?usp=sharing)

2. HuggingFace Datasets

You can also access the dataset through huggingface via this [link](https://huggingface.co/datasets/lovesnowbest/T-Eval).

```python

from datasets import load_dataset

dataset = load_dataset("lovesnowbest/T-Eval")

```

After downloading, please put the data in the `data` folder directly:

```

- data/

- instruct_v2.json

- plan_json_v2.json

...

```

### 🤖 API Models

1. Set your OPENAI key in your environment.

```bash

export OPENAI_API_KEY=xxxxxxxxx

```

2. Run the model with the following scripts

```bash

# test all data at once

sh test_all_en.sh api gpt-4-1106-preview gpt4

# test ZH dataset

sh test_all_zh.sh api gpt-4-1106-preview gpt4

# test for Instruct only

python test.py --model_type api --model_path gpt-4-1106-preview --resume --out_name instruct_gpt4.json --out_dir work_dirs/gpt4/ --dataset_path data/instruct_v2.json --eval instruct --prompt_type json

```

### 🤗 HuggingFace Models

1. Download the huggingface model to your local path.

2. Modify the `meta_template` json according to your tested model.

3. Run the model with the following scripts

```bash

# test all data at once

sh test_all_en.sh hf $HF_PATH $HF_MODEL_NAME $META_TEMPLATE

# test ZH dataset

sh test_all_zh.sh hf $HF_PATH $HF_MODEL_NAME $META_TEMPLATE

# test for Instruct only

python test.py --model_type hf --model_path $HF_PATH --resume --out_name instruct_$HF_MODEL_NAME.json --out_dir data/work_dirs/ --dataset_path data/instruct_v1.json --eval instruct --prompt_type json --model_display_name $HF_MODEL_NAME --meta_template $META_TEMPLATE

```

### 💫 Final Results

Once you finish all tested samples, a detailed evluation results will be logged at `$out_dir/$model_display_name/$model_display_name_-1.json` (For ZH dataset, there is a `_zh` suffix). To obtain your final score, please run the following command:

```bash

python teval/utils/convert_results.py --result_path $out_dir/$model_display_name/$model_display_name_-1.json

```

## 🔌 Protocols

T-Eval adopts multi-conversation style evaluation to gauge the model. The format of our saved prompt is as follows:

```python

[

{

"role": "system",

"content": "You have access to the following API:\n{'name': 'AirbnbSearch.search_property_by_place', 'description': 'This function takes various parameters to search properties on Airbnb.', 'required_parameters': [{'name': 'place', 'type': 'STRING', 'description': 'The name of the destination.'}], 'optional_parameters': [], 'return_data': [{'name': 'property', 'description': 'a list of at most 3 properties, containing id, name, and address.'}]}\nPlease generate the response in the following format:\ngoal: goal to call this action\n\nname: api name to call\n\nargs: JSON format api args in ONLY one line\n"

},

{

"role": "user",

"content": "Call the function AirbnbSearch.search_property_by_place with the parameter as follows: 'place' is 'Berlin'."

}

]

```

where `role` can be ['system', 'user', 'assistant'], and `content` must be in string format. Before infering it by a LLM, we need to construct it into a raw string format via `meta_template`. `meta_template` examples are provided at [meta_template.py](teval/utils/meta_template.py):

```python

[

dict(role='system', begin='<|System|>:', end='\n'),

dict(role='user', begin='<|User|>:', end='\n'),

dict(

role='assistant',

begin='<|Bot|>:',

end='<eoa>\n',

generate=True)

]

```

You need to specify the `begin` and `end` token based on your tested huggingface model at [meta_template.py](teval/utils/meta_template.py) and specify the `meta_template` args in `test.py`, same as the name you set in the `meta_template.py`. As for OpenAI model, we will handle that for you.

## 📊 Benchmark Results

More detailed and comprehensive benchmark results can refer to 🏆 [T-Eval official leaderboard](https://open-compass.github.io/T-Eval/leaderboard.html) !

<div>

<center>

<img src="figs/teval_results.png">

</div>

### ✉️ Submit Your Results

You can submit your inference results (via running test.py) to this [email](lovesnow@mail.ustc.edu.cn). We will run your predictions and update the results in our leaderboard. Please also provide the scale of your tested model. A sample structure of your submission should be like:

```

$model_display_name/

instruct_$model_display_name/

query_0_1_0.json

query_0_1_1.json

...

plan_json_$model_display_name/

plan_str_$model_display_name/

...

```

## ❤️ Acknowledgements

T-Eval is built with [Lagent](https://github.com/InternLM/lagent) and [OpenCompass](https://github.com/open-compass/opencompass). Thanks for their awesome work!

## 🖊️ Citation

If you find this project useful in your research, please consider cite:

```

@article{chen2023t,

title={T-Eval: Evaluating the Tool Utilization Capability Step by Step},

author={Chen, Zehui and Du, Weihua and Zhang, Wenwei and Liu, Kuikun and Liu, Jiangning and Zheng, Miao and Zhuo, Jingming and Zhang, Songyang and Lin, Dahua and Chen, Kai and others},

journal={arXiv preprint arXiv:2312.14033},

year={2023}

}

```

## 💳 License

This project is released under the Apache 2.0 [license](./LICENSE). |

5CD-AI/Vietnamese-meta-math-MetaMathQA-40K-gg-translated | ---

task_categories:

- question-answering

language:

- en

- vi

tags:

- math

- math-qa

size_categories:

- 10K<n<100K

--- |

UrbanSyn/UrbanSyn | ---

license: cc-by-sa-4.0

task_categories:

- object-detection

- image-segmentation

- depth-estimation

language:

- en

tags:

- Urban Scenario

- Autonomous Driving

- Synthethic data

size_categories:

- 1K<n<10K

pretty_name: UrbanSyn

---

<img src="front_urbanSyn.png" width=100% />

# UrbanSyn Dataset

UrbanSyn is an open synthetic dataset featuring photorealistic driving scenes. It contains ground-truth annotations for semantic segmentation, scene depth, panoptic instance segmentation, and 2-D bounding boxes. Website [https://urbansyn.org](https://urbansyn.org)

## Overview

UrbanSyn is a diverse, compact, and photorealistic dataset that provides more than 7.5k synthetic annotated images. It was born to address the synth-to-real domain gap, contributing to unprecedented synthetic-only baselines used by domain adaptation (DA) methods.

**- Reduce the synth-to-real domain gap**

UrbanSyn dataset helps to reduce the domain gap by contributing to unprecedented synthetic-only baselines used by domain adaptation (DA) methods.

**- Ground-truth annotations**

UrbanSyn comes with photorealistic color images, per-pixel semantic segmentation, depth, instance panoptic segmentation, and 2-D bounding boxes.

**- Open for research and commercial purposes**

UrbanSyn may be used for research and commercial purposes. It is released publicly under the Creative Commons Attribution-Commercial-ShareAlike 4.0 license.

**- High-degree of photorealism**

UrbanSyn features highly realistic and curated driving scenarios leveraging procedurally-generated content and high-quality curated assets. To achieve UrbanSyn photorealism we leverage industry-standard unbiased path-tracing and AI-based denoising techniques.

## White Paper

**[[Arxiv]](https://arxiv.org/abs/2312.12176)**

When using or referring to the UrbanSyn dataset in your research, please cite our white paper:

```BibTeX

@misc{gomez2023one,

title={All for One, and One for All: UrbanSyn Dataset, the third Musketeer of Synthetic Driving Scenes},

author={Jose L. Gómez and Manuel Silva and Antonio Seoane and Agnès Borrás and Mario Noriega and Germán Ros and Jose A. Iglesias-Guitian and Antonio M. López},

year={2023},

eprint={2312.12176},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

## Terms of Use

The UrbanSyn Dataset is provided by the Computer Vision Center (UAB) and CITIC (University of A Coruña).

UrbanSyn may be used for research and commercial purposes, and it is subject to the Creative Commons Attribution-Commercial-ShareAlike 4.0. A summary of the CC-BY-SA 4.0 licensing terms can be found **[[here]](https://creativecommons.org/licenses/by-sa/4.0/deed.en)**.

Due to constraints from our asset providers for UrbanSyn, we prohibit the use of generative AI technologies for reverse engineering any assets or creating content for stock media platforms based on the UrbanSyn dataset.

While we strive to generate precise data, all information is presented 'as is' without any express or implied warranties. We explicitly disclaim all representations and warranties regarding the validity, scope, accuracy, completeness, safety, or utility of the licensed content, including any implied warranties of merchantability, fitness for a particular purpose, or otherwise.

## Acknowledgements

Funded by Grant agreement PID2020-115734RB-C21 "SSL-ADA" and Grant agreement PID2020-115734RB-C22 "PGAS-ADA"

<img src="MICINN_Gob_AEI_1.jpg" width="300" />

## For more information about our team members and how to contact us, visit our website [https://urbansyn.org](https://urbansyn.org)

## Folder structure and content

- ```rgb```: contains RGB images with a resolution of 2048x1024 in PNG format.

- ```ss and ss_colour``` : contains the pixel-level semantic segmentation labels in grayscale (value = Class ID) and colour (value = Class RGB) respectively in PNG format. We follow the 19 training classes defined on Cityscapes:

| name | trainId | color |

|----------------------|---------|-----------------|

| 'road' | 0 | (128, 64,128) |

| 'sidewalk' | 1 | (244, 35,232) |

| 'building' | 2 | ( 70, 70, 70) |

| 'wall' | 3 | (102,102,156) |

| 'fence' | 4 | (190,153,153) |

| 'pole' | 5 | (153,153,153) |

| 'traffic light' | 6 | (250,170, 30) |

| 'traffic sign' | 7 | (220,220, 0) |

| 'vegetation' | 8 | (107,142, 35) |

| 'terrain' | 9 | (152,251,152) |

| 'sky' | 10 | ( 70,130,180) |

| 'person' | 11 | (220, 20, 60) |

| 'rider' | 12 | (255, 0, 0) |

| 'car' | 13 | ( 0, 0,142) |

| 'truck' | 14 | ( 0, 0, 70) |

| 'bus' | 15 | ( 0, 60,100) |

| 'train' | 16 | ( 0, 80,100) |

| 'motorcycle' | 17 | ( 0, 0,230) |

| 'bicycle' | 18 | (119, 11, 32) |

| 'unlabeled' | 19 | ( 0, 0, 0) |

- ```panoptic```: contains the instance segmentation of the dynamic objects of the image in PNG format. Each instance is codified using the RGB channels, where RG corresponds to the instance number and B to the class ID. Dynamic objects are Person, Rider, Car, Truck, Bus, Train, Motorcycle and Bicycle.

- ```bbox2D```: contains the 2D bounding boxes and Instances information for all the dynamic objects in the image up to 110 meters of distance from the camera and bigger than 150 pixels. We provide the annotations in a json file with the next structure:

- bbox: provides the bounding box size determined by the top left corner (xMin, yMin) and Bottom right corner (xMax, YMax).

- color: corresponds to the colour of the instance in the panoptic instance segmentation map inside panoptic folder.

- label: defines the class name

- occlusion_percentage: provides the occlusion percentatge of the object. Being 0 not occluded and 100 fully occluded.

- ```depth```: contains the depth map of the image in EXR format.

## Download locally with huggingface_hub library

- [Install huggingface_hub library](https://huggingface.co/docs/huggingface_hub/installation)

- You can download the dataset on Python this way:

``` from huggingface_hub import snapshot_download ```

``` snapshot_download(repo_id="UrbanSyn/UrbanSyn", repo_type="dataset") ```

- More information about how to download and additional options can be found [here](https://huggingface.co/docs/huggingface_hub/guides/download) |

starsnatched/MemGPT-Functions-DPO | ---

license: apache-2.0

task_categories:

- conversational

- text-generation

language:

- en

tags:

- memgpt

- function calling

- function

size_categories:

- 1K<n<10K

---

I hand crafted these. For MemGPT function calling. |

Heng666/Traditional_Chinese-aya_collection | ---

dataset_info:

- config_name: aya_dataset

features:

- name: id

dtype: int64

- name: inputs

dtype: string

- name: targets

dtype: string

- name: dataset_name

dtype: string

- name: sub_dataset_name

dtype: string

- name: task_type

dtype: string

- name: template_id

dtype: string

- name: language_code

dtype: string

- name: split

dtype: string

- name: script

dtype: string

splits:

- name: train

num_bytes: 1703870

num_examples: 4909

download_size: 998499

dataset_size: 1703870

- config_name: templated_ntx_llm

features:

- name: id

dtype: int64

- name: inputs

dtype: string

- name: targets

dtype: string

- name: dataset_name

dtype: string

- name: sub_dataset_name

dtype: string

- name: task_type

dtype: string

- name: template_id

dtype: string