datasetId

stringlengths 2

81

| card

stringlengths 20

977k

|

|---|---|

princeton-nlp/QuRatedPajama-260B | ---

pretty_name: QuRatedPajama-260B

---

## QuRatedPajama

**Paper:** [QuRating: Selecting High-Quality Data for Training Language Models](https://arxiv.org/pdf/2402.09739.pdf)

A 260B token subset of [cerebras/SlimPajama-627B](https://huggingface.co/datasets/cerebras/SlimPajama-627B), annotated by [princeton-nlp/QuRater-1.3B](https://huggingface.co/princeton-nlp/QuRater-1.3B/tree/main) with sequence-level quality ratings across 4 criteria:

- **Educational Value** - e.g. the text includes clear explanations, step-by-step reasoning, or questions and answers

- **Facts & Trivia** - how much factual and trivia knowledge the text contains, where specific facts and obscure trivia are preferred over more common knowledge

- **Writing Style** - how polished and good is the writing style in the text

- **Required Expertise**: - how much required expertise and prerequisite knowledge is necessary to understand the text

In a pre-processing step, we split documents in into chunks of exactly 1024 tokens. We provide tokenization with the Llama-2 tokenizer in the `input_ids` column.

**Guidance on Responsible Use:**

In the paper, we document various types of bias that are present in the quality ratings (biases related to domains, topics, social roles, regions and languages - see Section 6 of the paper).

Hence, be aware that data selection with QuRating could have unintended and harmful effects on the language model that is being trained. We strongly recommend a comprehensive evaluation of the language model for these and other types of bias, particularly before real-world deployment. We hope that releasing the data/models can facilitate future research aimed at uncovering and mitigating such biases.

**Citation:**

```

@article{wettig2024qurating,

title={QuRating: Selecting High-Quality Data for Training Language Models},

author={Alexander Wettig, Aatmik Gupta, Saumya Malik, Danqi Chen},

journal={arXiv preprint 2402.09739},

year={2024}

}

``` |

5CD-AI/Vietnamese-yfcc15m-OpenAICLIP | ---

task_categories:

- image-to-text

- text-to-image

- visual-question-answering

language:

- en

- vi

size_categories:

- 10M<n<100M

--- |

Locutusque/OpenCerebrum-dpo | ---

license: apache-2.0

task_categories:

- text-generation

language:

- en

size_categories:

- 10K<n<100K

---

# OpenCerebrum DPO subset

## Description

OpenCerebrum is my take on creating an open source version of Aether Research's proprietary Cerebrum dataset. This repository contains the DPO subset, which contains about 21,000 examples. Unfortunately, I was unsure about how I would compress this dataset to just a few hundred examples like in the original Cerebrum dataset.

## Curation

This dataset was curated using a simple and logical rationale. The goal was to use datasets that should logically improve evaluation scores that the original Cerebrum is strong in. See the "Data Sources" section for data source information.

## Data Sources

This dataset is an amalgamation including the following sources:

- jondurbin/truthy-dpo-v0.1

- jondurbin/py-dpo-v0.1

- argilla/dpo-mix-7k

- argilla/distilabel-math-preference-dpo

- Locutusque/arc-cot-dpo

- Doctor-Shotgun/theory-of-mind-dpo |

efederici/fisica | ---

dataset_info:

features:

- name: question

dtype: string

- name: answer

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 71518930

num_examples: 27999

download_size: 35743633

dataset_size: 71518930

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

task_categories:

- question-answering

- text-generation

language:

- it

tags:

- physics

- opus

- anthropic

- gpt-4

pretty_name: Fisica

size_categories:

- 10K<n<100K

---

# Dataset Card

Fisica is a comprehensive Italian question-answering dataset focused on physics. It contains approximately 28,000 question-answer pairs, generated using Claude and GPT-4. The dataset is designed to facilitate research and development of LLMs for the Italian language.

### Dataset Description

- **Curated by:** Edoardo Federici

- **Language(s) (NLP):** Italian

- **License:** MIT

### Features

- **Diverse Physics Topics**: The dataset covers a wide range of physics topics, providing a rich resource for physics-related questions and answers.

- **High-Quality Pairs**: The question-answer pairs were generated using Claude Opus / translated using Claude Sonnet.

- **Italian Language**: Fisica is specifically curated for the Italian language, contributing to the development of Italian-specific LLMs.

### Data Sources

The dataset comprises question-answer pairs from two main sources:

1. ~8,000 pairs generated using Claude Opus from a list of seed topics

2. 20,000 pairs translated (using Claude Sonnet) from the [camel-ai/physics](https://huggingface.co/datasets/camel-ai/physics) gpt-4 dataset |

hackathon-pln-es/Axolotl-Spanish-Nahuatl | ---

annotations_creators:

- expert-generated

language_creators:

- expert-generated

language:

- es

license:

- mpl-2.0

multilinguality:

- translation

size_categories:

- unknown

source_datasets:

- original

task_categories:

- text2text-generation

- translation

task_ids: []

pretty_name: "Axolotl Spanish-Nahuatl parallel corpus , is a digital corpus that compiles\

\ several sources with parallel content in these two languages. \n\nA parallel corpus\

\ is a type of corpus that contains texts in a source language with their correspondent\

\ translation in one or more target languages. Gutierrez-Vasques, X., Sierra, G.,\

\ and Pompa, I. H. (2016). Axolotl: a web accessible parallel corpus for spanish-nahuatl.\

\ In Proceedings of the Ninth International Conference on Language Resources and\

\ Evaluation (LREC 2016), Portoro, Slovenia. European Language Resources Association\

\ (ELRA). Grupo de Ingenieria Linguistica (GIL, UNAM). Corpus paralelo español-nahuatl.\

\ http://www.corpus.unam.mx/axolotl."

language_bcp47:

- es-MX

tags:

- conditional-text-generation

---

# Axolotl-Spanish-Nahuatl : Parallel corpus for Spanish-Nahuatl machine translation

## Table of Contents

- [Dataset Card for [Axolotl-Spanish-Nahuatl]](#dataset-card-for-Axolotl-Spanish-Nahuatl)

## Dataset Description

- **Source 1:** http://www.corpus.unam.mx/axolotl

- **Source 2:** http://link.springer.com/article/10.1007/s10579-014-9287-y

- **Repository:1** https://github.com/ElotlMX/py-elotl

- **Repository:2** https://github.com/christos-c/bible-corpus/blob/master/bibles/Nahuatl-NT.xml

- **Paper:** https://aclanthology.org/N15-2021.pdf

## Dataset Collection

In order to get a good translator, we collected and cleaned two of the most complete Nahuatl-Spanish parallel corpora available. Those are Axolotl collected by an expert team at UNAM and Bible UEDIN Nahuatl Spanish crawled by Christos Christodoulopoulos and Mark Steedman from Bible Gateway site.

After this, we ended with 12,207 samples from Axolotl due to misalignments and duplicated texts in Spanish in both original and nahuatl columns and 7,821 samples from Bible UEDIN for a total of 20028 utterances.

## Team members

- Emilio Morales [(milmor)](https://huggingface.co/milmor)

- Rodrigo Martínez Arzate [(rockdrigoma)](https://huggingface.co/rockdrigoma)

- Luis Armando Mercado [(luisarmando)](https://huggingface.co/luisarmando)

- Jacobo del Valle [(jjdv)](https://huggingface.co/jjdv)

## Applications

- MODEL: Spanish Nahuatl Translation Task with a T5 model in ([t5-small-spanish-nahuatl](https://huggingface.co/hackathon-pln-es/t5-small-spanish-nahuatl))

- DEMO: Spanish Nahuatl Translation in ([Spanish-nahuatl](https://huggingface.co/spaces/hackathon-pln-es/Spanish-Nahuatl-Translation)) |

priyank-m/SROIE_2019_text_recognition | ---

annotations_creators: []

language:

- en

language_creators: []

license: []

multilinguality:

- monolingual

pretty_name: SROIE_2019_text_recognition

size_categories:

- 10K<n<100K

source_datasets: []

tags:

- text-recognition

- recognition

task_categories:

- image-to-text

task_ids:

- image-captioning

---

This dataset we prepared using the Scanned receipts OCR and information extraction(SROIE) dataset.

The SROIE dataset contains 973 scanned receipts in English language.

Cropping the bounding boxes from each of the receipts to generate this text-recognition dataset resulted in 33626 images for train set and 18704 images for the test set.

The text annotations for all the images inside a split are stored in a metadata.jsonl file.

usage:

from dataset import load_dataset

data = load_dataset("priyank-m/SROIE_2019_text_recognition")

source of raw SROIE dataset:

https://www.kaggle.com/datasets/urbikn/sroie-datasetv2 |

heegyu/kowiki-sentences | ---

license: cc-by-sa-3.0

language:

- ko

language_creators:

- other

multilinguality:

- monolingual

size_categories:

- 1M<n<10M

task_categories:

- other

---

20221001 한국어 위키를 kss(backend=mecab)을 이용해서 문장 단위로 분리한 데이터

- 549262 articles, 4724064 sentences

- 한국어 비중이 50% 이하거나 한국어 글자가 10자 이하인 경우를 제외 |

bigbio/meddocan |

---

language:

- es

bigbio_language:

- Spanish

license: cc-by-4.0

multilinguality: monolingual

bigbio_license_shortname: CC_BY_4p0

pretty_name: MEDDOCAN

homepage: https://temu.bsc.es/meddocan/

bigbio_pubmed: False

bigbio_public: True

bigbio_tasks:

- NAMED_ENTITY_RECOGNITION

---

# Dataset Card for MEDDOCAN

## Dataset Description

- **Homepage:** https://temu.bsc.es/meddocan/

- **Pubmed:** False

- **Public:** True

- **Tasks:** NER

MEDDOCAN: Medical Document Anonymization Track

This dataset is designed for the MEDDOCAN task, sponsored by Plan de Impulso de las Tecnologías del Lenguaje.

It is a manually classified collection of 1,000 clinical case reports derived from the Spanish Clinical Case Corpus (SPACCC), enriched with PHI expressions.

The annotation of the entire set of entity mentions was carried out by experts annotatorsand it includes 29 entity types relevant for the annonymiation of medical documents.22 of these annotation types are actually present in the corpus: TERRITORIO, FECHAS, EDAD_SUJETO_ASISTENCIA, NOMBRE_SUJETO_ASISTENCIA, NOMBRE_PERSONAL_SANITARIO, SEXO_SUJETO_ASISTENCIA, CALLE, PAIS, ID_SUJETO_ASISTENCIA, CORREO, ID_TITULACION_PERSONAL_SANITARIO,ID_ASEGURAMIENTO, HOSPITAL, FAMILIARES_SUJETO_ASISTENCIA, INSTITUCION, ID_CONTACTO ASISTENCIAL,NUMERO_TELEFONO, PROFESION, NUMERO_FAX, OTROS_SUJETO_ASISTENCIA, CENTRO_SALUD, ID_EMPLEO_PERSONAL_SANITARIO

For further information, please visit https://temu.bsc.es/meddocan/ or send an email to encargo-pln-life@bsc.es

## Citation Information

```

@inproceedings{marimon2019automatic,

title={Automatic De-identification of Medical Texts in Spanish: the MEDDOCAN Track, Corpus, Guidelines, Methods and Evaluation of Results.},

author={Marimon, Montserrat and Gonzalez-Agirre, Aitor and Intxaurrondo, Ander and Rodriguez, Heidy and Martin, Jose Lopez and Villegas, Marta and Krallinger, Martin},

booktitle={IberLEF@ SEPLN},

pages={618--638},

year={2019}

}

```

|

parambharat/malayalam_asr_corpus | ---

annotations_creators:

- found

language:

- ml

language_creators:

- found

license:

- cc-by-4.0

multilinguality:

- monolingual

pretty_name: Malayalam ASR Corpus

size_categories:

- 1K<n<10K

source_datasets:

- extended|common_voice

- extended|openslr

tags: []

task_categories:

- automatic-speech-recognition

task_ids: []

---

# Dataset Card for [Malayalam Asr Corpus]

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:**

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

[More Information Needed]

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

[More Information Needed]

## Dataset Structure

### Data Instances

[More Information Needed]

### Data Fields

[More Information Needed]

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

[More Information Needed]

### Contributions

Thanks to [@parambharat](https://github.com/parambharat) for adding this dataset. |

HuggingFaceM4/LocalizedNarratives | ---

license: cc-by-4.0

---

# Dataset Card for [Dataset Name]

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Data Splits](#data-splits)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [https://google.github.io/localized-narratives/(https://google.github.io/localized-narratives/)

- **Repository:**: [https://github.com/google/localized-narratives](https://github.com/google/localized-narratives)

- **Paper:** [Connecting Vision and Language with Localized Narratives](https://arxiv.org/pdf/1912.03098.pdf)

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

Localized Narratives, a new form of multimodal image annotations connecting vision and language.

We ask annotators to describe an image with their voice while simultaneously hovering their mouse over the region they are describing.

Since the voice and the mouse pointer are synchronized, we can localize every single word in the description.

This dense visual grounding takes the form of a mouse trace segment per word and is unique to our data.

We annotated 849k images with Localized Narratives: the whole COCO, Flickr30k, and ADE20K datasets, and 671k images of Open Images, all of which we make publicly available.

As of now, there is only the `OpenImages` subset, but feel free to contribute the other subset of Localized Narratives!

`OpenImages_captions` is similar to the `OpenImages` subset. The differences are that captions are groupped per image (images can have multiple captions). For this subset, `timed_caption`, `traces` and `voice_recording` are not available.

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

[More Information Needed]

## Dataset Structure

### Data Instances

Each instance has the following structure:

```

{

dataset_id: 'mscoco_val2017',

image_id: '137576',

annotator_id: 93,

caption: 'In this image there are group of cows standing and eating th...',

timed_caption: [{'utterance': 'In this', 'start_time': 0.0, 'end_time': 0.4}, ...],

traces: [[{'x': 0.2086, 'y': -0.0533, 't': 0.022}, ...], ...],

voice_recording: 'coco_val/coco_val_137576_93.ogg'

}

```

### Data Fields

Each line represents one Localized Narrative annotation on one image by one annotator and has the following fields:

- `dataset_id`: String identifying the dataset and split where the image belongs, e.g. mscoco_val2017.

- `image_id` String identifier of the image, as specified on each dataset.

- `annotator_id` Integer number uniquely identifying each annotator.

- `caption` Image caption as a string of characters.

- `timed_caption` List of timed utterances, i.e. {utterance, start_time, end_time} where utterance is a word (or group of words) and (start_time, end_time) is the time during which it was spoken, with respect to the start of the recording.

- `traces` List of trace segments, one between each time the mouse pointer enters the image and goes away from it. Each trace segment is represented as a list of timed points, i.e. {x, y, t}, where x and y are the normalized image coordinates (with origin at the top-left corner of the image) and t is the time in seconds since the start of the recording. Please note that the coordinates can go a bit beyond the image, i.e. <0 or >1, as we recorded the mouse traces including a small band around the image.

- `voice_recording` Relative URL path with respect to https://storage.googleapis.com/localized-narratives/voice-recordings where to find the voice recording (in OGG format) for that particular image.

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

[More Information Needed]

### Contributions

Thanks to [@VictorSanh](https://github.com/VictorSanh) for adding this dataset.

|

keremberke/table-extraction | ---

task_categories:

- object-detection

tags:

- roboflow

- roboflow2huggingface

- Documents

---

<div align="center">

<img width="640" alt="keremberke/table-extraction" src="https://huggingface.co/datasets/keremberke/table-extraction/resolve/main/thumbnail.jpg">

</div>

### Dataset Labels

```

['bordered', 'borderless']

```

### Number of Images

```json

{'test': 34, 'train': 238, 'valid': 70}

```

### How to Use

- Install [datasets](https://pypi.org/project/datasets/):

```bash

pip install datasets

```

- Load the dataset:

```python

from datasets import load_dataset

ds = load_dataset("keremberke/table-extraction", name="full")

example = ds['train'][0]

```

### Roboflow Dataset Page

[https://universe.roboflow.com/mohamed-traore-2ekkp/table-extraction-pdf/dataset/2](https://universe.roboflow.com/mohamed-traore-2ekkp/table-extraction-pdf/dataset/2?ref=roboflow2huggingface)

### Citation

```

```

### License

CC BY 4.0

### Dataset Summary

This dataset was exported via roboflow.com on January 18, 2023 at 9:41 AM GMT

Roboflow is an end-to-end computer vision platform that helps you

* collaborate with your team on computer vision projects

* collect & organize images

* understand and search unstructured image data

* annotate, and create datasets

* export, train, and deploy computer vision models

* use active learning to improve your dataset over time

For state of the art Computer Vision training notebooks you can use with this dataset,

visit https://github.com/roboflow/notebooks

To find over 100k other datasets and pre-trained models, visit https://universe.roboflow.com

The dataset includes 342 images.

Data-table are annotated in COCO format.

The following pre-processing was applied to each image:

* Auto-orientation of pixel data (with EXIF-orientation stripping)

No image augmentation techniques were applied.

|

IlyaGusev/librusec | ---

dataset_info:

features:

- name: id

dtype: uint64

- name: text

dtype: string

splits:

- name: train

num_bytes: 125126513109

num_examples: 223256

download_size: 34905399148

dataset_size: 125126513109

task_categories:

- text-generation

language:

- ru

size_categories:

- 100K<n<1M

---

# Librusec dataset

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Description](#description)

- [Usage](#usage)

## Description

**Summary:** Based on http://panchenko.me/data/russe/librusec_fb2.plain.gz. Uploaded here for convenience. Additional cleaning was performed.

**Script:** [create_librusec.py](https://github.com/IlyaGusev/rulm/blob/master/data_processing/create_librusec.py)

**Point of Contact:** [Ilya Gusev](ilya.gusev@phystech.edu)

**Languages:** Russian.

## Usage

Prerequisites:

```bash

pip install datasets zstandard jsonlines pysimdjson

```

Dataset iteration:

```python

from datasets import load_dataset

dataset = load_dataset('IlyaGusev/librusec', split="train", streaming=True)

for example in dataset:

print(example["text"])

``` |

DFKI-SLT/DWIE | ---

license: other

language:

- en

pretty_name: >-

DWIE (Deutsche Welle corpus for Information Extraction) is a new dataset for

document-level multi-task Information Extraction (IE).

size_categories:

- 10M<n<100M

annotations_creators:

- expert-generated

language_creators:

- found

multilinguality:

- monolingual

paperswithcode_id: acronym-identification

source_datasets:

- original

tags:

- Named Entity Recognition, Coreference Resolution, Relation Extraction, Entity Linking

task_categories:

- feature-extraction

- text-classification

task_ids:

- entity-linking-classification

train-eval-index:

- col_mapping:

labels: tags

tokens: tokens

config: default

splits:

eval_split: test

task_id: entity_extraction

---

# Dataset Card for DWIE

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Supported Tasks and Leaderboards](#supported-tasks-and-leaderboards)

- [Languages](#languages)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Dataset Creation](#dataset-creation)

- [Curation Rationale](#curation-rationale)

- [Source Data](#source-data)

- [Annotations](#annotations)

- [Personal and Sensitive Information](#personal-and-sensitive-information)

- [Considerations for Using the Data](#considerations-for-using-the-data)

- [Social Impact of Dataset](#social-impact-of-dataset)

- [Discussion of Biases](#discussion-of-biases)

- [Other Known Limitations](#other-known-limitations)

- [Additional Information](#additional-information)

- [Dataset Curators](#dataset-curators)

- [Licensing Information](#licensing-information)

- [Citation Information](#citation-information)

- [Contributions](#contributions)

## Dataset Description

- **Homepage:** [https://opendatalab.com/DWIE](https://opendatalab.com/DWIE)

- **Repository:** [https://github.com/klimzaporojets/DWIE](https://github.com/klimzaporojets/DWIE)

- **Paper:** [DWIE: an entity-centric dataset for multi-task document-level information extraction](https://arxiv.org/abs/2009.12626)

- **Leaderboard:** [https://opendatalab.com/DWIE](https://opendatalab.com/DWIE)

- **Size of downloaded dataset files:** 40.8 MB

### Dataset Summary

DWIE (Deutsche Welle corpus for Information Extraction) is a new dataset for document-level multi-task Information Extraction (IE).

It combines four main IE sub-tasks:

1.Named Entity Recognition: 23,130 entities classified in 311 multi-label entity types (tags).

2.Coreference Resolution: 43,373 entity mentions clustered in 23,130 entities.

3.Relation Extraction: 21,749 annotated relations between entities classified in 65 multi-label relation types.

4.Entity Linking: the named entities are linked to Wikipedia (version 20181115).

For details, see the paper https://arxiv.org/pdf/2009.12626v2.pdf.

### Supported Tasks and Leaderboards

- **Tasks:** Named Entity Recognition, Coreference Resolution, Relation extraction and entity linking in scientific papers

- **Leaderboards:** [https://opendatalab.com/DWIE](https://opendatalab.com/DWIE)

### Languages

The language in the dataset is English.

## Dataset Structure

### Data Instances

- **Size of downloaded dataset files:** 40.8 MB

An example of 'train' looks as follows, provided sample of the data:

```json

{'id': 'DW_3980038',

'content': 'Proposed Nabucco Gas Pipeline Gets European Bank Backing\nThe heads of the EU\'s European Investment Bank and the European Bank for Reconstruction and Development (EBRD) said Tuesday, Jan. 27, that they are prepared to provide financial backing for the Nabucco gas pipeline.\nSpurred on by Europe\'s worst-ever gas crisis earlier this month, which left millions of homes across the continent without heat in the depths of winter, Hungarian Prime Minister Ferenc Gyurcsany invited top-ranking officials from both the EU and the countries involved in Nabucco to inject fresh momentum into the slow-moving project. Nabucco, an ambitious but still-unbuilt gas pipeline aimed at reducing Europe\'s energy reliance on Russia, is a 3,300-kilometer (2,050-mile) pipeline between Turkey and Austria. Costing an estimated 7.9 billion euros, the aim is to transport up to 31 billion cubic meters of gas each year from the Caspian Sea to Western Europe, bypassing Russia and Ukraine. Nabucco currently has six shareholders -- OMV of Austria, MOL of Hungary, Transgaz of Romania, Bulgargaz of Bulgaria, Botas of Turkey and RWE of Germany. But for the pipeline to get moving, Nabucco would need an initial cash injection of an estimated 300 million euros. Both the EIB and EBRD said they were willing to invest in the early stages of the project through a series of loans, providing certain conditions are met. "The EIB is ready to finance projects that further EU objectives of increased sustainability and energy security," said Philippe Maystadt, president of the European Investment Bank, during the opening addresses by participants at the "Nabucco summit" in Hungary. The EIB is prepared to finance "up to 25 percent of project cost," provided a secure intergovernmental agreement on the Nabucco pipeline is reached, he said. Maystadt noted that of 48 billion euros of financing it provided last year, a quarter was for energy projects. EBRD President Thomas Mirow also offered financial backing to the Nabucco pipeline, on the condition that it "meets the requirements of solid project financing." The bank would need to see concrete plans and completion guarantees, besides a stable political agreement, said Mirow. EU wary of future gas crises Czech Prime Minister Mirek Topolanek, whose country currently holds the rotating presidency of the EU, spoke about the recent gas crisis caused by a pricing dispute between Russia and Ukraine that affected supplies to Europe. "A new crisis could emerge at any time, and next time it could be even worse," Topolanek said. He added that reaching an agreement on Nabucco is a "test of European solidarity." The latest gas row between Russia and Ukraine has highlighted Europe\'s need to diversify its energy sources and thrown the spotlight on Nabucco. But critics insist that the vast project will remain nothing but a pipe dream because its backers cannot guarantee that they will ever have sufficient gas supplies to make it profitable. EU Energy Commissioner Andris Piebalgs urged political leaders to commit firmly to Nabucco by the end of March, or risk jeopardizing the project. In his opening address as host, Hungarian Prime Minister Ferenc Gyurcsany called on the EU to provide 200 to 300 million euros within the next few weeks to get the construction of the pipeline off the ground. Gyurcsany stressed that he was not hoping for a loan, but rather for starting capital from the EU. US Deputy Assistant Secretary of State Matthew Bryza noted that the Tuesday summit had made it clear that Gyurcsany, who dismissed Nabucco as "a dream" in 2007, was now fully committed to the energy supply diversification project. On the supply side, Turkmenistan and Azerbaijan both indicated they would be willing to supply some of the gas. "Azerbaijan, which is according to current plans is a transit country, could eventually serve as a supplier as well," Azerbaijani President Ilham Aliyev said. Azerbaijan\'s gas reserves of some two or three trillion cubic meters would be sufficient to last "several decades," he said. Austrian Economy Minister Reinhold Mitterlehner suggested that Egypt and Iran could also be brought in as suppliers in the long term. But a deal currently seems unlikely with Iran given the long-running international standoff over its disputed nuclear program. Russia, Ukraine still wrangling Meanwhile, Russia and Ukraine were still wrangling over the details of the deal which ended their gas quarrel earlier this month. Ukrainian President Viktor Yushchenko said on Tuesday he would stand by the terms of the agreement with Russia, even though not all the details are to his liking. But Russian officials questioned his reliability, saying that the political rivalry between Yushchenko and Prime Minister Yulia Timoshenko could still lead Kiev to cancel the contract. "The agreements signed are not easy ones, but Ukraine fully takes up the performance (of its commitments) and guarantees full-fledged transit to European consumers," Yushchenko told journalists in Brussels after a meeting with the head of the European Commission, Jose Manuel Barroso. The assurance that Yushchenko would abide by the terms of the agreement finalized by Timoshenko was "an important step forward in allowing us to focus on our broader relationship," Barroso said. But the spokesman for Russian Prime Minister Vladimir Putin said that Moscow still feared that the growing rivalry between Yushchenko and Timoshenko, who are set to face off in next year\'s presidential election, could torpedo the deal. EU in talks to upgrade Ukraine\'s transit system Yushchenko\'s working breakfast with Barroso was dominated by the energy question, with both men highlighting the need to upgrade Ukraine\'s gas-transit system and build more links between Ukrainian and European energy markets. The commission is set to host an international conference aimed at gathering donations to upgrade Ukraine\'s gas-transit system on March 23 in Brussels. The EU and Ukraine have agreed to form a joint expert group to plan the meeting, the leaders said Tuesday. During the conflict, Barroso had warned that both Russia and Ukraine were damaging their credibility as reliable partners. But on Monday he said that "in bilateral relations, we are not taking any negative consequences from (the gas row) because we believe Ukraine wants to deepen the relationship with the EU, and we also want to deepen the relationship with Ukraine." He also said that "we have to state very clearly that we were disappointed by the problems between Ukraine and Russia," and called for political stability and reform in Ukraine. His diplomatic balancing act is likely to have a frosty reception in Moscow, where Peskov said that Russia "would prefer to hear from the European states a very serious and severe evaluation of who is guilty for interrupting the transit."',

'tags': "['all', 'train']",

'mentions': [{'begin': 9,

'end': 29,

'text': 'Nabucco Gas Pipeline',

'concept': 1,

'candidates': [],

'scores': []},

{'begin': 287,

'end': 293,

'text': 'Europe',

'concept': 2,

'candidates': ['Europe',

'UEFA',

'Europe_(band)',

'UEFA_competitions',

'European_Athletic_Association',

'European_theatre_of_World_War_II',

'European_Union',

'Europe_(dinghy)',

'European_Cricket_Council',

'UEFA_Champions_League',

'Senior_League_World_Series_(Europe–Africa_Region)',

'Big_League_World_Series_(Europe–Africa_Region)',

'Sailing_at_the_2004_Summer_Olympics_–_Europe',

'Neolithic_Europe',

'History_of_Europe',

'Europe_(magazine)'],

'scores': [0.8408304452896118,

0.10987312346696854,

0.01377162616699934,

0.002099192701280117,

0.0015916954725980759,

0.0015686274273321033,

0.001522491336800158,

0.0013148789294064045,

0.0012456747936084867,

0.000991926179267466,

0.0008073817589320242,

0.0007843137136660516,

0.000761245668400079,

0.0006920415326021612,

0.0005536332027986646,

0.000530565157532692]},

0.00554528646171093,

0.004390018526464701,

0.003234750358387828,

0.002772643230855465,

0.001617375179193914]},

{'begin': 6757,

'end': 6765,

'text': 'European',

'concept': 13,

'candidates': None,

'scores': []}],

'concepts': [{'concept': 0,

'text': 'European Investment Bank',

'keyword': True,

'count': 5,

'link': 'European_Investment_Bank',

'tags': ['iptc::11000000',

'slot::keyword',

'topic::politics',

'type::entity',

'type::igo',

'type::organization']},

{'concept': 66,

'text': None,

'keyword': False,

'count': 0,

'link': 'Czech_Republic',

'tags': []}],

'relations': [{'s': 0, 'p': 'institution_of', 'o': 2},

{'s': 0, 'p': 'part_of', 'o': 2},

{'s': 3, 'p': 'institution_of', 'o': 2},

{'s': 3, 'p': 'part_of', 'o': 2},

{'s': 6, 'p': 'head_of', 'o': 0},

{'s': 6, 'p': 'member_of', 'o': 0},

{'s': 7, 'p': 'agent_of', 'o': 4},

{'s': 7, 'p': 'citizen_of', 'o': 4},

{'s': 7, 'p': 'citizen_of-x', 'o': 55},

{'s': 7, 'p': 'head_of_state', 'o': 4},

{'s': 7, 'p': 'head_of_state-x', 'o': 55},

{'s': 8, 'p': 'agent_of', 'o': 4},

{'s': 8, 'p': 'citizen_of', 'o': 4},

{'s': 8, 'p': 'citizen_of-x', 'o': 55},

{'s': 8, 'p': 'head_of_gov', 'o': 4},

{'s': 8, 'p': 'head_of_gov-x', 'o': 55},

{'s': 9, 'p': 'head_of', 'o': 59},

{'s': 9, 'p': 'member_of', 'o': 59},

{'s': 10, 'p': 'head_of', 'o': 3},

{'s': 10, 'p': 'member_of', 'o': 3},

{'s': 11, 'p': 'citizen_of', 'o': 66},

{'s': 11, 'p': 'citizen_of-x', 'o': 36},

{'s': 11, 'p': 'head_of_state', 'o': 66},

{'s': 11, 'p': 'head_of_state-x', 'o': 36},

{'s': 12, 'p': 'agent_of', 'o': 24},

{'s': 12, 'p': 'citizen_of', 'o': 24},

{'s': 12, 'p': 'citizen_of-x', 'o': 15},

{'s': 12, 'p': 'head_of_gov', 'o': 24},

{'s': 12, 'p': 'head_of_gov-x', 'o': 15},

{'s': 15, 'p': 'gpe0', 'o': 24},

{'s': 22, 'p': 'based_in0', 'o': 18},

{'s': 22, 'p': 'based_in0-x', 'o': 50},

{'s': 23, 'p': 'based_in0', 'o': 24},

{'s': 23, 'p': 'based_in0-x', 'o': 15},

{'s': 25, 'p': 'based_in0', 'o': 26},

{'s': 27, 'p': 'based_in0', 'o': 28},

{'s': 29, 'p': 'based_in0', 'o': 17},

{'s': 30, 'p': 'based_in0', 'o': 31},

{'s': 33, 'p': 'event_in0', 'o': 24},

{'s': 36, 'p': 'gpe0', 'o': 66},

{'s': 38, 'p': 'member_of', 'o': 2},

{'s': 43, 'p': 'agent_of', 'o': 41},

{'s': 43, 'p': 'citizen_of', 'o': 41},

{'s': 48, 'p': 'gpe0', 'o': 47},

{'s': 49, 'p': 'agent_of', 'o': 47},

{'s': 49, 'p': 'citizen_of', 'o': 47},

{'s': 49, 'p': 'citizen_of-x', 'o': 48},

{'s': 49, 'p': 'head_of_state', 'o': 47},

{'s': 49, 'p': 'head_of_state-x', 'o': 48},

{'s': 50, 'p': 'gpe0', 'o': 18},

{'s': 52, 'p': 'agent_of', 'o': 18},

{'s': 52, 'p': 'citizen_of', 'o': 18},

{'s': 52, 'p': 'citizen_of-x', 'o': 50},

{'s': 52, 'p': 'minister_of', 'o': 18},

{'s': 52, 'p': 'minister_of-x', 'o': 50},

{'s': 55, 'p': 'gpe0', 'o': 4},

{'s': 56, 'p': 'gpe0', 'o': 5},

{'s': 57, 'p': 'in0', 'o': 4},

{'s': 57, 'p': 'in0-x', 'o': 55},

{'s': 58, 'p': 'in0', 'o': 65},

{'s': 59, 'p': 'institution_of', 'o': 2},

{'s': 59, 'p': 'part_of', 'o': 2},

{'s': 60, 'p': 'agent_of', 'o': 5},

{'s': 60, 'p': 'citizen_of', 'o': 5},

{'s': 60, 'p': 'citizen_of-x', 'o': 56},

{'s': 60, 'p': 'head_of_gov', 'o': 5},

{'s': 60, 'p': 'head_of_gov-x', 'o': 56},

{'s': 61, 'p': 'in0', 'o': 5},

{'s': 61, 'p': 'in0-x', 'o': 56}],

'frames': [{'type': 'none', 'slots': []}],

'iptc': ['04000000',

'11000000',

'20000344',

'20000346',

'20000378',

'20000638']}

```

### Data Fields

- `id` : unique identifier of the article.

- `content` : textual content of the article downloaded with src/dwie_download.py script.

- `tags` : used to differentiate between train and test sets of documents.

- `mentions`: a list of entity mentions in the article each with the following keys:

- `begin` : offset of the first character of the mention (inside content field).

- `end` : offset of the last character of the mention (inside content field).

- `text` : the textual representation of the entity mention.

- `concept` : the id of the entity that represents the entity mention (multiple entity mentions in the article can refer to the same concept).

- `candidates` : the candidate Wikipedia links.

- `scores` : the prior probabilities of the candidates entity links calculated on Wikipedia corpus.

- `concepts` : a list of entities that cluster each of the entity mentions. Each entity is annotated with the following keys:

- `concept` : the unique document-level entity id.

- `text` : the text of the longest mention that belong to the entity.

- `keyword` : indicates whether the entity is a keyword.

- `count` : the number of entity mentions in the document that belong to the entity.

- `link` : the entity link to Wikipedia.

- `tags` : multi-label classification labels associated to the entity.

- `relations` : a list of document-level relations between entities (concepts). Each of the relations is annotated with the following keys:

- `s` : the subject entity id involved in the relation.

- `p` : the predicate that defines the relation name (i.e., "citizen_of", "member_of", etc.).

- `o` : the object entity id involved in the relation.

- `iptc` : multi-label article IPTC classification codes. For detailed meaning of each of the codes, please refer to the official IPTC code list.

## Dataset Creation

### Curation Rationale

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

#### Who are the source language producers?

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Annotations

#### Annotation process

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

#### Who are the annotators?

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Personal and Sensitive Information

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Discussion of Biases

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Other Known Limitations

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

## Additional Information

### Dataset Curators

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Licensing Information

[More Information Needed](https://github.com/huggingface/datasets/blob/master/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards)

### Citation Information

```

@article{zaporojets2021dwie,

title={DWIE: An entity-centric dataset for multi-task document-level information extraction},

author={Zaporojets, Klim and Deleu, Johannes and Develder, Chris and Demeester, Thomas},

journal={Information Processing \& Management},

volume={58},

number={4},

pages={102563},

year={2021},

publisher={Elsevier}

}

```

### Contributions

Thanks to [@basvoju](https://github.com/basvoju) for adding this dataset. |

niizam/4chan-datasets | ---

license: unlicense

task_categories:

- text-generation

language:

- en

tags:

- not-for-all-audiences

---

Please see [repo](https://github.com/niizam/4chan-datasets) to turn the text file into json/csv format

Deleted some boards, since they are already archived by https://archive.4plebs.org/ |

taka-yayoi/databricks-dolly-15k-ja | ---

license: cc-by-sa-3.0

---

こちらのデータセットを活用させていただき、Dollyのトレーニングスクリプトで使えるように列名の変更とJSONLへの変換を行っています。

https://huggingface.co/datasets/kunishou/databricks-dolly-15k-ja

Dolly

https://github.com/databrickslabs/dolly |

renumics/cifar100-enriched | ---

license: mit

task_categories:

- image-classification

pretty_name: CIFAR-100

source_datasets:

- extended|other-80-Million-Tiny-Images

paperswithcode_id: cifar-100

size_categories:

- 10K<n<100K

tags:

- image classification

- cifar-100

- cifar-100-enriched

- embeddings

- enhanced

- spotlight

- renumics

language:

- en

multilinguality:

- monolingual

annotations_creators:

- crowdsourced

language_creators:

- found

---

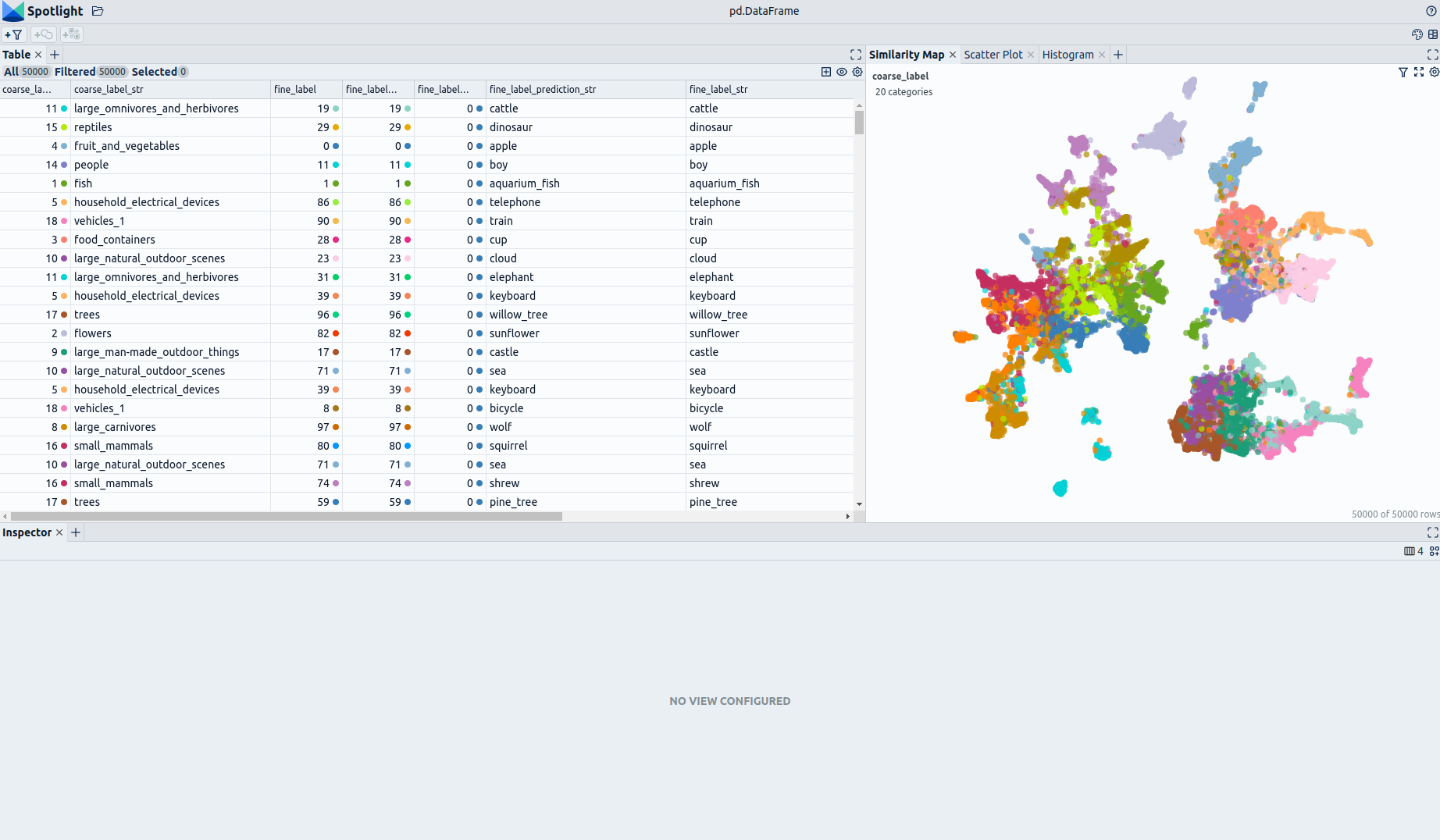

# Dataset Card for CIFAR-100-Enriched (Enhanced by Renumics)

## Dataset Description

- **Homepage:** [Renumics Homepage](https://renumics.com/?hf-dataset-card=cifar100-enriched)

- **GitHub** [Spotlight](https://github.com/Renumics/spotlight)

- **Dataset Homepage** [CS Toronto Homepage](https://www.cs.toronto.edu/~kriz/cifar.html#:~:text=The%20CIFAR%2D100%20dataset)

- **Paper:** [Learning Multiple Layers of Features from Tiny Images](https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf)

### Dataset Summary

📊 [Data-centric AI](https://datacentricai.org) principles have become increasingly important for real-world use cases.

At [Renumics](https://renumics.com/?hf-dataset-card=cifar100-enriched) we believe that classical benchmark datasets and competitions should be extended to reflect this development.

🔍 This is why we are publishing benchmark datasets with application-specific enrichments (e.g. embeddings, baseline results, uncertainties, label error scores). We hope this helps the ML community in the following ways:

1. Enable new researchers to quickly develop a profound understanding of the dataset.

2. Popularize data-centric AI principles and tooling in the ML community.

3. Encourage the sharing of meaningful qualitative insights in addition to traditional quantitative metrics.

📚 This dataset is an enriched version of the [CIFAR-100 Dataset](https://www.cs.toronto.edu/~kriz/cifar.html).

### Explore the Dataset

The enrichments allow you to quickly gain insights into the dataset. The open source data curation tool [Renumics Spotlight](https://github.com/Renumics/spotlight) enables that with just a few lines of code:

Install datasets and Spotlight via [pip](https://packaging.python.org/en/latest/key_projects/#pip):

```python

!pip install renumics-spotlight datasets

```

Load the dataset from huggingface in your notebook:

```python

import datasets

dataset = datasets.load_dataset("renumics/cifar100-enriched", split="train")

```

Start exploring with a simple view that leverages embeddings to identify relevant data segments:

```python

from renumics import spotlight

df = dataset.to_pandas()

df_show = df.drop(columns=['embedding', 'probabilities'])

spotlight.show(df_show, port=8000, dtype={"image": spotlight.Image, "embedding_reduced": spotlight.Embedding})

```

You can use the UI to interactively configure the view on the data. Depending on the concrete tasks (e.g. model comparison, debugging, outlier detection) you might want to leverage different enrichments and metadata.

### CIFAR-100 Dataset

The CIFAR-100 dataset consists of 60000 32x32 colour images in 100 classes, with 600 images per class. There are 50000 training images and 10000 test images.

The 100 classes in the CIFAR-100 are grouped into 20 superclasses. Each image comes with a "fine" label (the class to which it belongs) and a "coarse" label (the superclass to which it belongs).

The classes are completely mutually exclusive.

We have enriched the dataset by adding **image embeddings** generated with a [Vision Transformer](https://huggingface.co/google/vit-base-patch16-224).

Here is the list of classes in the CIFAR-100:

| Superclass | Classes |

|---------------------------------|----------------------------------------------------|

| aquatic mammals | beaver, dolphin, otter, seal, whale |

| fish | aquarium fish, flatfish, ray, shark, trout |

| flowers | orchids, poppies, roses, sunflowers, tulips |

| food containers | bottles, bowls, cans, cups, plates |

| fruit and vegetables | apples, mushrooms, oranges, pears, sweet peppers |

| household electrical devices | clock, computer keyboard, lamp, telephone, television|

| household furniture | bed, chair, couch, table, wardrobe |

| insects | bee, beetle, butterfly, caterpillar, cockroach |

| large carnivores | bear, leopard, lion, tiger, wolf |

| large man-made outdoor things | bridge, castle, house, road, skyscraper |

| large natural outdoor scenes | cloud, forest, mountain, plain, sea |

| large omnivores and herbivores | camel, cattle, chimpanzee, elephant, kangaroo |

| medium-sized mammals | fox, porcupine, possum, raccoon, skunk |

| non-insect invertebrates | crab, lobster, snail, spider, worm |

| people | baby, boy, girl, man, woman |

| reptiles | crocodile, dinosaur, lizard, snake, turtle |

| small mammals | hamster, mouse, rabbit, shrew, squirrel |

| trees | maple, oak, palm, pine, willow |

| vehicles 1 | bicycle, bus, motorcycle, pickup truck, train |

| vehicles 2 | lawn-mower, rocket, streetcar, tank, tractor |

### Supported Tasks and Leaderboards

- `image-classification`: The goal of this task is to classify a given image into one of 100 classes. The leaderboard is available [here](https://paperswithcode.com/sota/image-classification-on-cifar-100).

### Languages

English class labels.

## Dataset Structure

### Data Instances

A sample from the training set is provided below:

```python

{

'image': '/huggingface/datasets/downloads/extracted/f57c1a3fbca36f348d4549e820debf6cc2fe24f5f6b4ec1b0d1308a80f4d7ade/0/0.png',

'full_image': <PIL.PngImagePlugin.PngImageFile image mode=RGB size=32x32 at 0x7F15737C9C50>,

'fine_label': 19,

'coarse_label': 11,

'fine_label_str': 'cattle',

'coarse_label_str': 'large_omnivores_and_herbivores',

'fine_label_prediction': 19,

'fine_label_prediction_str': 'cattle',

'fine_label_prediction_error': 0,

'split': 'train',

'embedding': [-1.2482988834381104,

0.7280710339546204, ...,

0.5312759280204773],

'probabilities': [4.505949982558377e-05,

7.286163599928841e-05, ...,

6.577593012480065e-05],

'embedding_reduced': [1.9439491033554077, -5.35720682144165]

}

```

### Data Fields

| Feature | Data Type |

|---------------------------------|------------------------------------------------|

| image | Value(dtype='string', id=None) |

| full_image | Image(decode=True, id=None) |

| fine_label | ClassLabel(names=[...], id=None) |

| coarse_label | ClassLabel(names=[...], id=None) |

| fine_label_str | Value(dtype='string', id=None) |

| coarse_label_str | Value(dtype='string', id=None) |

| fine_label_prediction | ClassLabel(names=[...], id=None) |

| fine_label_prediction_str | Value(dtype='string', id=None) |

| fine_label_prediction_error | Value(dtype='int32', id=None) |

| split | Value(dtype='string', id=None) |

| embedding | Sequence(feature=Value(dtype='float32', id=None), length=768, id=None) |

| probabilities | Sequence(feature=Value(dtype='float32', id=None), length=100, id=None) |

| embedding_reduced | Sequence(feature=Value(dtype='float32', id=None), length=2, id=None) |

### Data Splits

| Dataset Split | Number of Images in Split | Samples per Class (fine) |

| ------------- |---------------------------| -------------------------|

| Train | 50000 | 500 |

| Test | 10000 | 100 |

## Dataset Creation

### Curation Rationale

The CIFAR-10 and CIFAR-100 are labeled subsets of the [80 million tiny images](http://people.csail.mit.edu/torralba/tinyimages/) dataset.

They were collected by Alex Krizhevsky, Vinod Nair, and Geoffrey Hinton.

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

If you use this dataset, please cite the following paper:

```

@article{krizhevsky2009learning,

added-at = {2021-01-21T03:01:11.000+0100},

author = {Krizhevsky, Alex},

biburl = {https://www.bibsonomy.org/bibtex/2fe5248afe57647d9c85c50a98a12145c/s364315},

interhash = {cc2d42f2b7ef6a4e76e47d1a50c8cd86},

intrahash = {fe5248afe57647d9c85c50a98a12145c},

keywords = {},

pages = {32--33},

timestamp = {2021-01-21T03:01:11.000+0100},

title = {Learning Multiple Layers of Features from Tiny Images},

url = {https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf},

year = 2009

}

```

### Contributions

Alex Krizhevsky, Vinod Nair, Geoffrey Hinton, and Renumics GmbH. |

c3po-ai/edgar-corpus | ---

dataset_info:

- config_name: .

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 40306320885

num_examples: 220375

download_size: 10734208660

dataset_size: 40306320885

- config_name: full

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 32237457024

num_examples: 176289

- name: validation

num_bytes: 4023129683

num_examples: 22050

- name: test

num_bytes: 4045734178

num_examples: 22036

download_size: 40699852536

dataset_size: 40306320885

- config_name: year_1993

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 112714537

num_examples: 1060

- name: validation

num_bytes: 13584432

num_examples: 133

- name: test

num_bytes: 14520566

num_examples: 133

download_size: 141862572

dataset_size: 140819535

- config_name: year_1994

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 198955093

num_examples: 2083

- name: validation

num_bytes: 23432307

num_examples: 261

- name: test

num_bytes: 26115768

num_examples: 260

download_size: 250411041

dataset_size: 248503168

- config_name: year_1995

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 356959049

num_examples: 4110

- name: validation

num_bytes: 42781161

num_examples: 514

- name: test

num_bytes: 45275568

num_examples: 514

download_size: 448617549

dataset_size: 445015778

- config_name: year_1996

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 738506135

num_examples: 7589

- name: validation

num_bytes: 89873905

num_examples: 949

- name: test

num_bytes: 91248882

num_examples: 949

download_size: 926536700

dataset_size: 919628922

- config_name: year_1997

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 854201733

num_examples: 8084

- name: validation

num_bytes: 103167272

num_examples: 1011

- name: test

num_bytes: 106843950

num_examples: 1011

download_size: 1071898139

dataset_size: 1064212955

- config_name: year_1998

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 904075497

num_examples: 8040

- name: validation

num_bytes: 112630658

num_examples: 1006

- name: test

num_bytes: 113308750

num_examples: 1005

download_size: 1137887615

dataset_size: 1130014905

- config_name: year_1999

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 911374885

num_examples: 7864

- name: validation

num_bytes: 118614261

num_examples: 984

- name: test

num_bytes: 116706581

num_examples: 983

download_size: 1154736765

dataset_size: 1146695727

- config_name: year_2000

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 926444625

num_examples: 7589

- name: validation

num_bytes: 113264749

num_examples: 949

- name: test

num_bytes: 114605470

num_examples: 949

download_size: 1162526814

dataset_size: 1154314844

- config_name: year_2001

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 964631161

num_examples: 7181

- name: validation

num_bytes: 117509010

num_examples: 898

- name: test

num_bytes: 116141097

num_examples: 898

download_size: 1207790205

dataset_size: 1198281268

- config_name: year_2002

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 1049271720

num_examples: 6636

- name: validation

num_bytes: 128339491

num_examples: 830

- name: test

num_bytes: 128444184

num_examples: 829

download_size: 1317817728

dataset_size: 1306055395

- config_name: year_2003

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 1027557690

num_examples: 6672

- name: validation

num_bytes: 126684704

num_examples: 834

- name: test

num_bytes: 130672979

num_examples: 834

download_size: 1297227566

dataset_size: 1284915373

- config_name: year_2004

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 1129657843

num_examples: 7111

- name: validation

num_bytes: 147499772

num_examples: 889

- name: test

num_bytes: 147890092

num_examples: 889

download_size: 1439663100

dataset_size: 1425047707

- config_name: year_2005

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 1200714441

num_examples: 7113

- name: validation

num_bytes: 161003977

num_examples: 890

- name: test

num_bytes: 160727195

num_examples: 889

download_size: 1538876195

dataset_size: 1522445613

- config_name: year_2006

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 1286566049

num_examples: 7064

- name: validation

num_bytes: 160843494

num_examples: 883

- name: test

num_bytes: 163270601

num_examples: 883

download_size: 1628452618

dataset_size: 1610680144

- config_name: year_2007

features:

- name: filename

dtype: string

- name: cik

dtype: string

- name: year

dtype: string

- name: section_1

dtype: string

- name: section_1A

dtype: string

- name: section_1B

dtype: string

- name: section_2

dtype: string

- name: section_3

dtype: string

- name: section_4

dtype: string

- name: section_5

dtype: string

- name: section_6

dtype: string

- name: section_7

dtype: string

- name: section_7A

dtype: string

- name: section_8

dtype: string

- name: section_9

dtype: string

- name: section_9A

dtype: string

- name: section_9B

dtype: string

- name: section_10

dtype: string

- name: section_11

dtype: string

- name: section_12

dtype: string

- name: section_13

dtype: string

- name: section_14

dtype: string

- name: section_15

dtype: string

splits:

- name: train

num_bytes: 1296737173

num_examples: 6683

- name: validation