| repo_id | author | model_type | files_per_repo | downloads_30d | library | likes | pipeline | pytorch | tensorflow | jax | license | languages | datasets | co2 | prs_count | prs_open | prs_merged | prs_closed | discussions_count | discussions_open | discussions_closed | tags | has_model_index | has_metadata | has_text | text_length | is_nc | readme | hash |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

gabrieleai/gamindocar-3000-700 | gabrieleai | null | 15 | 31 | diffusers | 0 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['text-to-image', 'stable-diffusion'] | false | true | true | 624 | false | ### Gamindocar-3000-700 Dreambooth model trained by gabrieleai with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)
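The function below is a minimal local sketch of the `diffusers` route. It assumes the repository holds diffusers-format Stable Diffusion weights, which the `library` column suggests. `generate_sample` is a hypothetical helper, not part of the repository. The card does not state the instance token used during DreamBooth training, so the prompt is left to the caller.

```python
def generate_sample(prompt: str, out_path: str = "sample.png", use_cuda: bool = True) -> str:
    """Render one image from the DreamBooth checkpoint and save it to out_path (hypothetical helper)."""
    # Imported lazily so this module can be loaded even where torch/diffusers are not installed.
    import torch
    from diffusers import StableDiffusionPipeline

    device = "cuda" if use_cuda and torch.cuda.is_available() else "cpu"
    # Half precision is only worthwhile on a GPU; fall back to fp32 on CPU.
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionPipeline.from_pretrained(
        "gabrieleai/gamindocar-3000-700", torch_dtype=dtype
    ).to(device)
    image = pipe(prompt).images[0]  # the pipeline returns PIL images
    image.save(out_path)
    return out_path
```

Example call: `generate_sample("a photo of <instance token>")`. Replace the placeholder with the concept token you trained on.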

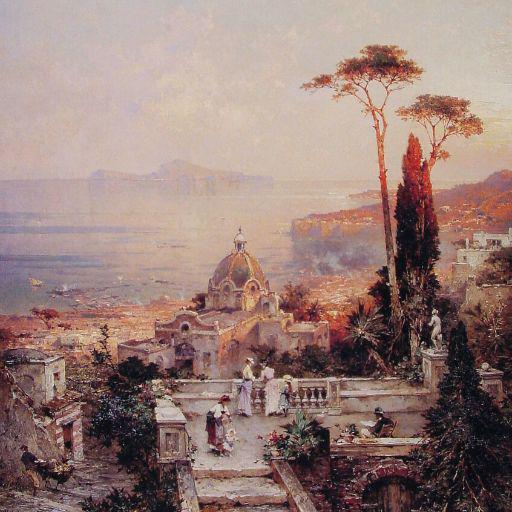

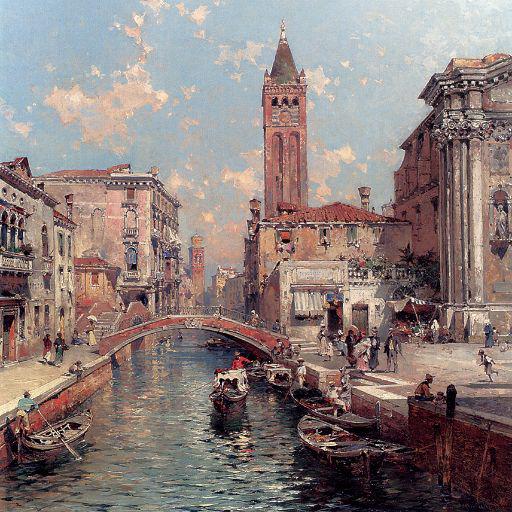

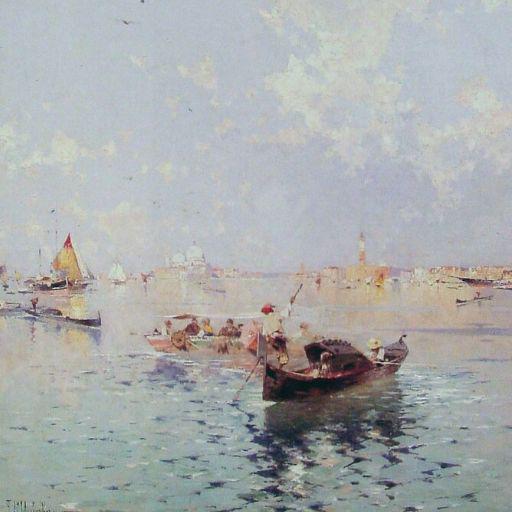

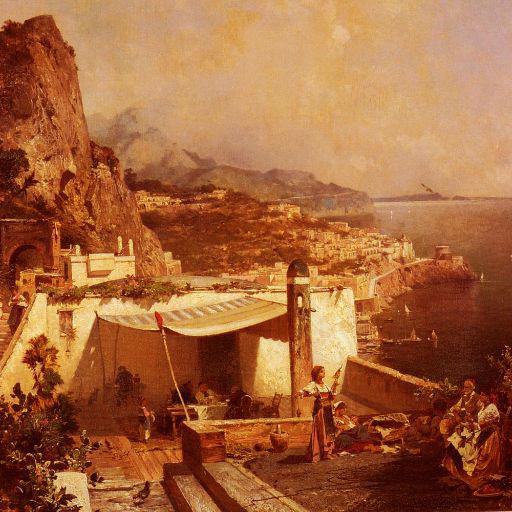

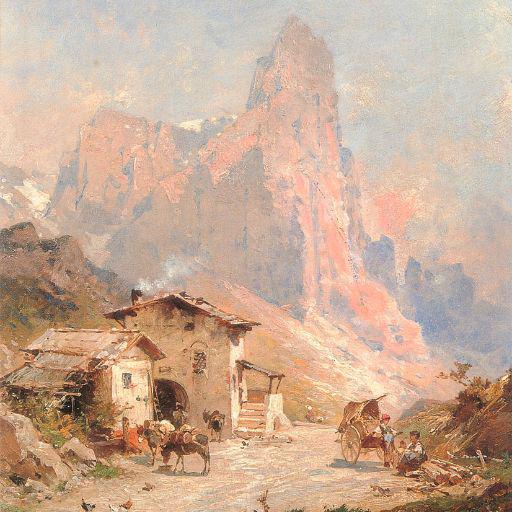

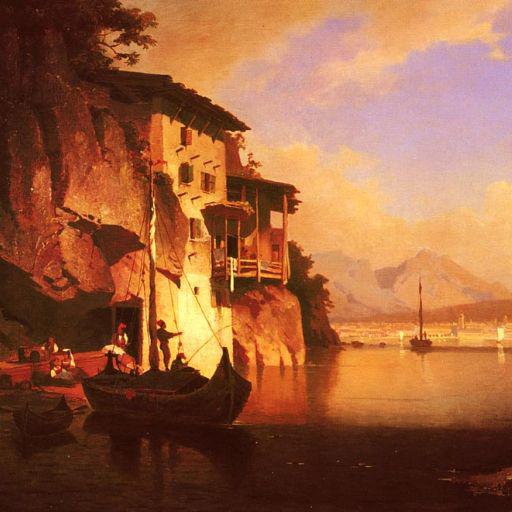

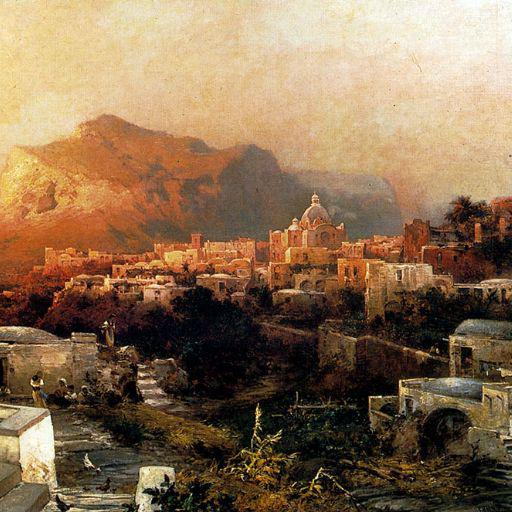

Sample pictures of this concept:

| b1249a1d409e9f73bed623272b4379d7 |

francisco-perez-sorrosal/distilbert-base-uncased-finetuned-with-spanish-tweets-clf | francisco-perez-sorrosal | distilbert | 10 | 171 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['dataset'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,660 | false |


# distilbert-base-uncased-finetuned-with-spanish-tweets-clf

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on a dataset named `dataset`.

It achieves the following results on the evaluation set:

- Loss: 1.0580

- Accuracy: 0.5701

- F1: 0.5652

- Precision: 0.5666

- Recall: 0.5642

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision | Recall |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:---------:|:------:|

| 1.0643 | 1.0 | 543 | 1.0457 | 0.4423 | 0.2761 | 0.5104 | 0.3712 |

| 0.9754 | 2.0 | 1086 | 0.9700 | 0.5155 | 0.4574 | 0.5190 | 0.4712 |

| 0.8145 | 3.0 | 1629 | 0.9691 | 0.5556 | 0.5544 | 0.5616 | 0.5506 |

| 0.6318 | 4.0 | 2172 | 1.0580 | 0.5701 | 0.5652 | 0.5666 | 0.5642 |
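The table shows 2172 optimizer steps over 4 epochs. The sketch below is a small worked example of what `lr_scheduler_type: linear` means for the learning rate at each step. It assumes no warmup, since the card lists none, and mirrors the linear-with-warmup formula used by 🤗 Transformers. `linear_lr` is a hypothetical helper.

```python
def linear_lr(step: int, base_lr: float = 5e-5, total_steps: int = 2172, warmup_steps: int = 0) -> float:
    """Learning rate at `step` under a linear schedule: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        # Warmup phase: ramp from 0 up to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay phase: fall linearly to 0 at total_steps, and stay at 0 afterwards.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the end of each epoch (543 steps per epoch).
for epoch_end in (543, 1086, 1629, 2172):
    print(epoch_end, linear_lr(epoch_end))
```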

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1

- Datasets 2.8.0

- Tokenizers 0.13.2

| 8e576a6e120e41c109192088dbb45963 |

jy46604790/Fake-News-Bert-Detect | jy46604790 | roberta | 8 | 198 | transformers | 2 | text-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 2,108 | false |

# Fake News Recognition

## Overview

This model was trained on over 40,000 news articles from different media outlets, starting from the 'roberta-base' checkpoint. It classifies a news article from its text. Inputs should be under 500 words (any excess is truncated automatically).

LABEL_0: Fake news

LABEL_1: Real news
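The `text-classification` pipeline returns a list of `{'label': ..., 'score': ...}` dictionaries that use the raw label ids above. The sketch below maps those ids to readable names, assuming the LABEL_0/LABEL_1 meanings stated in this card. `LABEL_NAMES` and `readable_predictions` are hypothetical helpers, not part of the model repository.

```python
# Hypothetical mapping based on the label meanings documented in this card.
LABEL_NAMES = {"LABEL_0": "fake", "LABEL_1": "real"}


def readable_predictions(pipeline_output):
    """Replace raw label ids with readable names, keeping the confidence score."""
    return [
        # Unknown labels are passed through unchanged.
        {"label": LABEL_NAMES.get(pred["label"], pred["label"]), "score": pred["score"]}
        for pred in pipeline_output
    ]
```

For example, `readable_predictions(clf(text))` would turn `LABEL_1` into `real`.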

## Quick Tutorial

### Download The Model

```python

from transformers import pipeline

MODEL = "jy46604790/Fake-News-Bert-Detect"

clf = pipeline("text-classification", model=MODEL, tokenizer=MODEL)

```

### Feed Data

```python

text = "Indonesian police have recaptured a U.S. citizen who escaped a week ago from an overcrowded prison on the holiday island of Bali, the jail s second breakout of foreign inmates this year. Cristian Beasley from California was rearrested on Sunday, Badung Police chief Yudith Satria Hananta said, without providing further details. Beasley was a suspect in crimes related to narcotics but had not been sentenced when he escaped from Kerobokan prison in Bali last week. The 32-year-old is believed to have cut through bars in the ceiling of his cell before scaling a perimeter wall of the prison in an area being refurbished. The Kerobokan prison, about 10 km (six miles) from the main tourist beaches in the Kuta area, often holds foreigners facing drug-related charges. Representatives of Beasley could not immediately be reached for comment. In June, an Australian, a Bulgarian, an Indian and a Malaysian tunneled to freedom about 12 meters (13 yards) under Kerobokan prison s walls. The Indian and the Bulgarian were caught soon after in neighboring East Timor, but Australian Shaun Edward Davidson and Malaysian Tee Kok King remain at large. Davidson has taunted authorities by saying he was enjoying life in various parts of the world, in purported posts on Facebook. Kerobokan has housed a number of well-known foreign drug convicts, including Australian Schappelle Corby, whose 12-1/2-year sentence for marijuana smuggling got huge media attention."

```

### Result

```python

result = clf(text)
print(result)

```

Output: `[{'label': 'LABEL_1', 'score': 0.9994995594024658}]` | 8728088caf478817ffda650aae7b9058 |

google/t5-efficient-tiny-nl24 | google | t5 | 12 | 7 | transformers | 0 | text2text-generation | true | true | true | apache-2.0 | ['en'] | ['c4'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['deep-narrow'] | false | true | true | 6,251 | false |

# T5-Efficient-TINY-NL24 (Deep-Narrow version)

T5-Efficient-TINY-NL24 is a variation of [Google's original T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) following the [T5 model architecture](https://huggingface.co/docs/transformers/model_doc/t5).

It is a *pretrained-only* checkpoint and was released with the paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** by *Yi Tay, Mostafa Dehghani, Jinfeng Rao, William Fedus, Samira Abnar, Hyung Won Chung, Sharan Narang, Dani Yogatama, Ashish Vaswani, Donald Metzler*.

In a nutshell, the paper indicates that a **Deep-Narrow** model architecture is favorable for **downstream** performance compared to other model architectures of similar parameter count.

To quote the paper:

> We generally recommend a DeepNarrow strategy where the model’s depth is preferentially increased before considering any other forms of uniform scaling across other dimensions. This is largely due to how much depth influences the Pareto-frontier as shown in earlier sections of the paper. Specifically, a tall small (deep and narrow) model is generally more efficient compared to the base model. Likewise, a tall base model might also generally more efficient compared to a large model. We generally find that, regardless of size, even if absolute performance might increase as we continue to stack layers, the relative gain of Pareto-efficiency diminishes as we increase the layers, converging at 32 to 36 layers. Finally, we note that our notion of efficiency here relates to any one compute dimension, i.e., params, FLOPs or throughput (speed). We report all three key efficiency metrics (number of params, FLOPS and speed) and leave this decision to the practitioner to decide which compute dimension to consider.

To be more precise, *model depth* is defined as the number of transformer blocks that are stacked sequentially.

A sequence of word embeddings is therefore processed sequentially by each transformer block.

## Model architecture details

This model checkpoint - **t5-efficient-tiny-nl24** - is of model type **Tiny** with the following variations:

- **nl** is **24**

It has **52.35** million parameters and thus requires *ca.* **209.41 MB** of memory in full precision (*fp32*) or **104.71 MB** of memory in half precision (*fp16* or *bf16*).
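The memory figures above follow from multiplying the parameter count by the bytes per weight: 4 for fp32, 2 for fp16/bf16. The worked example below assumes 1 MB = 10^6 bytes and counts weights only, not activations or optimizer state. The small gap from the card's 209.41 MB comes from the parameter count being rounded to 52.35 million. `checkpoint_size_mb` is a hypothetical helper.

```python
def checkpoint_size_mb(num_params: float, bytes_per_param: int) -> float:
    """Approximate weight memory in MB (1 MB = 10**6 bytes); ignores activations and optimizer state."""
    return num_params * bytes_per_param / 1e6


params = 52.35e6  # parameter count stated for t5-efficient-tiny-nl24 (rounded)
fp32_mb = checkpoint_size_mb(params, 4)  # 4 bytes per fp32 weight
half_mb = checkpoint_size_mb(params, 2)  # 2 bytes per fp16/bf16 weight
print(f"fp32: {fp32_mb:.1f} MB, fp16/bf16: {half_mb:.1f} MB")
```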

A summary of the *original* T5 model architectures can be seen here:

| Model | nl (el/dl) | ff | dm | kv | nh | #Params|

| ----| ---- | ---- | ---- | ---- | ---- | ----|

| Tiny | 4/4 | 1024 | 256 | 32 | 4 | 16M|

| Mini | 4/4 | 1536 | 384 | 32 | 8 | 31M|

| Small | 6/6 | 2048 | 512 | 32 | 8 | 60M|

| Base | 12/12 | 3072 | 768 | 64 | 12 | 220M|

| Large | 24/24 | 4096 | 1024 | 64 | 16 | 738M|

| Xl | 24/24 | 16384 | 1024 | 128 | 32 | 3B|

| XXl | 24/24 | 65536 | 1024 | 128 | 128 | 11B|

whereas the following abbreviations are used:

| Abbreviation | Definition |

| ----| ---- |

| nl | Number of transformer blocks (depth) |

| dm | Dimension of embedding vector (output vector of transformers block) |

| kv | Dimension of key/value projection matrix |

| nh | Number of attention heads |

| ff | Dimension of intermediate vector within transformer block (size of feed-forward projection matrix) |

| el | Number of transformer blocks in the encoder (encoder depth) |

| dl | Number of transformer blocks in the decoder (decoder depth) |

| sh | Signifies that attention heads are shared |

| skv | Signifies that key-values projection matrices are tied |

If a model checkpoint does not specify *el* or *dl*, then both the number of encoder layers and the number of decoder layers correspond to *nl*.

## Pre-Training

The checkpoint was pretrained on the [Colossal, Cleaned version of Common Crawl (C4)](https://huggingface.co/datasets/c4) for 524288 steps using the span-based masked language modeling (MLM) objective.

## Fine-Tuning

**Note**: This model is a **pretrained** checkpoint and has to be fine-tuned for practical usage.

The checkpoint was pretrained in English and is therefore only useful for English NLP tasks.

You can follow one of the following examples on how to fine-tune the model:

*PyTorch*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/pytorch/summarization)

- [Question Answering](https://github.com/huggingface/transformers/blob/master/examples/pytorch/question-answering/run_seq2seq_qa.py)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/pytorch/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*TensorFlow*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*JAX/Flax*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/flax/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/flax/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

## Downstream Performance

TODO: Add table if available

## Computational Complexity

TODO: Add table if available

## More information

We strongly recommend that readers go carefully through the original paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** to get a more nuanced understanding of this model checkpoint.

As explained in the following [issue](https://github.com/google-research/google-research/issues/986#issuecomment-1035051145), checkpoints including the *sh* or *skv* model architecture variations have *not* been ported to Transformers, as they are probably of limited practical use and lack a more detailed description. Those checkpoints are kept [here](https://huggingface.co/NewT5SharedHeadsSharedKeyValues) as they might be ported in the future. | 62cf5684fb6cdd59e1e9e27854eeb37c |

xander71988/t5-small-finetuned-facet-contract-type-test | xander71988 | t5 | 8 | 2 | transformers | 0 | text2text-generation | false | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_keras_callback'] | true | true | true | 1,360 | false |


# xander71988/t5-small-finetuned-facet-contract-type-test

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 1.3136

- Validation Loss: 0.3881

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5.6e-05, 'decay_steps': 3496, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16
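The optimizer config above uses a `PolynomialDecay` schedule with `power: 1.0` and `end_learning_rate: 0.0`. In effect, the learning rate falls linearly from 5.6e-05 to 0 over 3496 steps. The sketch below re-implements the non-cycling formula documented for `tf.keras.optimizers.schedules.PolynomialDecay` in plain Python so the values can be inspected. `polynomial_decay` is a hypothetical helper, not the Keras class itself.

```python
def polynomial_decay(step, initial_lr=5.6e-05, decay_steps=3496, end_lr=0.0, power=1.0):
    """Non-cycling polynomial decay, with defaults taken from the optimizer config above."""
    step = min(step, decay_steps)  # the rate stays at end_lr after decay_steps
    return (initial_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr


for step in (0, 1748, 3496):
    print(step, polynomial_decay(step))
```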

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 1.3136 | 0.3881 | 0 |

### Framework versions

- Transformers 4.25.1

- TensorFlow 2.5.0

- Datasets 2.3.2

- Tokenizers 0.13.2

| e3ca5ed20b98a162a620332a9892f5ae |

richielo/small-e-czech-finetuned-ner-wikiann | richielo | electra | 14 | 99 | transformers | 1 | token-classification | true | false | false | cc-by-4.0 | null | ['wikiann'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 3,131 | false |


# small-e-czech-finetuned-ner-wikiann

This model is a fine-tuned version of [Seznam/small-e-czech](https://huggingface.co/Seznam/small-e-czech) on the wikiann dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2547

- Precision: 0.8713

- Recall: 0.8970

- F1: 0.8840

- Accuracy: 0.9557
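The reported F1 is consistent with the harmonic mean of the reported precision and recall: 2 · 0.8713 · 0.8970 / (0.8713 + 0.8970) agrees with 0.8840 to four decimal places. The snippet below is a small worked check. `f1_score` is a hypothetical helper written here, not the evaluation code used to produce the card.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


final_f1 = f1_score(0.8713, 0.8970)  # precision and recall from the final evaluation
print(round(final_f1, 4))
```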

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2924 | 1.0 | 2500 | 0.2449 | 0.7686 | 0.8088 | 0.7882 | 0.9320 |

| 0.2042 | 2.0 | 5000 | 0.2137 | 0.8050 | 0.8398 | 0.8220 | 0.9400 |

| 0.1699 | 3.0 | 7500 | 0.1912 | 0.8236 | 0.8593 | 0.8411 | 0.9466 |

| 0.1419 | 4.0 | 10000 | 0.1931 | 0.8349 | 0.8671 | 0.8507 | 0.9488 |

| 0.1316 | 5.0 | 12500 | 0.1892 | 0.8470 | 0.8776 | 0.8620 | 0.9519 |

| 0.1042 | 6.0 | 15000 | 0.2058 | 0.8433 | 0.8811 | 0.8618 | 0.9508 |

| 0.0884 | 7.0 | 17500 | 0.2020 | 0.8602 | 0.8849 | 0.8724 | 0.9531 |

| 0.0902 | 8.0 | 20000 | 0.2118 | 0.8551 | 0.8837 | 0.8692 | 0.9528 |

| 0.0669 | 9.0 | 22500 | 0.2171 | 0.8634 | 0.8906 | 0.8768 | 0.9550 |

| 0.0529 | 10.0 | 25000 | 0.2228 | 0.8638 | 0.8912 | 0.8773 | 0.9545 |

| 0.0613 | 11.0 | 27500 | 0.2293 | 0.8626 | 0.8898 | 0.8760 | 0.9544 |

| 0.0549 | 12.0 | 30000 | 0.2276 | 0.8694 | 0.8958 | 0.8824 | 0.9554 |

| 0.0516 | 13.0 | 32500 | 0.2384 | 0.8717 | 0.8940 | 0.8827 | 0.9552 |

| 0.0412 | 14.0 | 35000 | 0.2443 | 0.8701 | 0.8931 | 0.8815 | 0.9554 |

| 0.0345 | 15.0 | 37500 | 0.2464 | 0.8723 | 0.8958 | 0.8839 | 0.9557 |

| 0.0412 | 16.0 | 40000 | 0.2477 | 0.8705 | 0.8948 | 0.8825 | 0.9552 |

| 0.0363 | 17.0 | 42500 | 0.2525 | 0.8742 | 0.8973 | 0.8856 | 0.9559 |

| 0.0341 | 18.0 | 45000 | 0.2529 | 0.8727 | 0.8962 | 0.8843 | 0.9561 |

| 0.0194 | 19.0 | 47500 | 0.2533 | 0.8699 | 0.8966 | 0.8830 | 0.9557 |

| 0.0247 | 20.0 | 50000 | 0.2547 | 0.8713 | 0.8970 | 0.8840 | 0.9557 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 1.18.4

- Tokenizers 0.11.6

| d971a085071f1f4f6bf8e95f244d43ae |

marcus2000/ru_t5_model_forlegaltext_rouge | marcus2000 | mt5 | 18 | 1 | transformers | 0 | text2text-generation | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 2,337 | false |


# multilingual_t5_model_for_law_simplification

This model is a fine-tuned version of [google/mt5-base](https://huggingface.co/google/mt5-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: nan

- Rouge1: 0.2857

- Rouge2: 0.0

- Rougel: 0.2857

- Rougelsum: 0.2857

- Gen Len: 7.9033

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.002

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|:-------:|

| No log | 1.0 | 157 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

| No log | 2.0 | 314 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

| No log | 3.0 | 471 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

| 0.0 | 4.0 | 628 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

| 0.0 | 5.0 | 785 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

| 0.0 | 6.0 | 942 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

| 0.0 | 7.0 | 1099 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

| 0.0 | 8.0 | 1256 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

| 0.0 | 9.0 | 1413 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

| 0.0 | 10.0 | 1570 | nan | 0.2857 | 0.0 | 0.2857 | 0.2857 | 7.9033 |

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1+cu113

- Datasets 2.5.1

- Tokenizers 0.12.1

| ac428f33e250ce23486d9a6d1a6661a1 |

PlanTL-GOB-ES/roberta-large-bne-te | PlanTL-GOB-ES | roberta | 9 | 214 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['es'] | ['xnli'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['national library of spain', 'spanish', 'bne', 'xnli', 'textual entailment'] | true | true | true | 7,219 | false |

# Spanish RoBERTa-large trained on BNE finetuned for the Spanish Cross-lingual Natural Language Inference (XNLI) dataset.

## Table of contents

<details>

<summary>Click to expand</summary>

- [Model description](#model-description)
- [Intended uses and limitations](#intended-uses-and-limitations)
- [How to use](#how-to-use)
- [Limitations and bias](#limitations-and-bias)
- [Training](#training)
  - [Training data](#training-data)
  - [Training procedure](#training-procedure)
- [Evaluation](#evaluation)
  - [Variable and metrics](#variable-and-metrics)
  - [Evaluation results](#evaluation-results)
- [Additional information](#additional-information)
  - [Author](#author)
  - [Contact information](#contact-information)
  - [Copyright](#copyright)
  - [Licensing information](#licensing-information)
  - [Funding](#funding)
  - [Citing information](#citing-information)
  - [Disclaimer](#disclaimer)

</details>

## Model description

The **roberta-large-bne-te** is a Textual Entailment (TE) model for the Spanish language fine-tuned from the [roberta-large-bne](https://huggingface.co/PlanTL-GOB-ES/roberta-large-bne) model, a [RoBERTa](https://arxiv.org/abs/1907.11692) large model pre-trained using the largest Spanish corpus known to date, with a total of 570GB of clean and deduplicated text, processed for this work, compiled from the web crawlings performed by the [National Library of Spain (Biblioteca Nacional de España)](http://www.bne.es/en/Inicio/index.html) from 2009 to 2019.

## Intended uses and limitations

The **roberta-large-bne-te** model can be used to recognize Textual Entailment (TE). The model is limited by its training dataset and may not generalize well to all use cases.

## How to use

Here is how to use this model:

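```python
from pprint import pprint

from transformers import pipeline

nlp = pipeline("text-classification", model="PlanTL-GOB-ES/roberta-large-bne-te")

# Entailment is a sentence-pair task, so pass premise and hypothesis as a pair.
# Premise: "My birthday is May 27." Hypothesis: "I will have my birthday at the end of May."
example = {"text": "Mi cumpleaños es el 27 de mayo.", "text_pair": "Cumpliré años a finales de mayo."}

te_results = nlp(example)
pprint(te_results)
```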

## Limitations and bias

At the time of submission, no measures have been taken to estimate the bias embedded in the model. However, we are well aware that our models may be biased since the corpora have been collected using crawling techniques on multiple web sources. We intend to conduct research in these areas in the future, and if completed, this model card will be updated.

## Training

We used the TE dataset in Spanish called [XNLI dataset](https://huggingface.co/datasets/xnli) for training and evaluation.

### Training procedure

The model was trained with a batch size of 16 and a learning rate of 1e-5 for 5 epochs. We then selected the best checkpoint using the downstream task metric in the corresponding development set and then evaluated it on the test set.

## Evaluation

### Variable and metrics

This model was fine-tuned to maximize accuracy.

## Evaluation results

We evaluated the *roberta-large-bne-te* on the XNLI test set against standard multilingual and monolingual baselines:

| Model | XNLI (Accuracy) |

| ------------|:----|

| roberta-large-bne | **82.63** |

| roberta-base-bne | 80.16 |

| BETO | 81.30 |

| mBERT | 78.76 |

| BERTIN | 78.90 |

| ELECTRA | 78.78 |

For more details, check the fine-tuning and evaluation scripts in the official [GitHub repository](https://github.com/PlanTL-GOB-ES/lm-spanish).

## Additional information

### Author

Text Mining Unit (TeMU) at the Barcelona Supercomputing Center (bsc-temu@bsc.es)

### Contact information

For further information, send an email to <plantl-gob-es@bsc.es>

### Copyright

Copyright by the Spanish State Secretariat for Digitalization and Artificial Intelligence (SEDIA) (2022)

### Licensing information

[Apache License, Version 2.0](https://www.apache.org/licenses/LICENSE-2.0)

### Funding

This work was funded by the Spanish State Secretariat for Digitalization and Artificial Intelligence (SEDIA) within the framework of the Plan-TL.

## Citing information

If you use this model, please cite our [paper](http://journal.sepln.org/sepln/ojs/ojs/index.php/pln/article/view/6405):

```
@article{,
abstract = {We want to thank the National Library of Spain for such a large effort on the data gathering and the Future of Computing Center, a
Barcelona Supercomputing Center and IBM initiative (2020). This work was funded by the Spanish State Secretariat for Digitalization and Artificial
Intelligence (SEDIA) within the framework of the Plan-TL.},
author = {Asier Gutiérrez Fandiño and Jordi Armengol Estapé and Marc Pàmies and Joan Llop Palao and Joaquin Silveira Ocampo and Casimiro Pio Carrino and Carme Armentano Oller and Carlos Rodriguez Penagos and Aitor Gonzalez Agirre and Marta Villegas},
doi = {10.26342/2022-68-3},
issn = {1135-5948},
journal = {Procesamiento del Lenguaje Natural},
keywords = {Artificial intelligence,Benchmarking,Data processing.,MarIA,Natural language processing,Spanish language modelling,Spanish language resources,Tractament del llenguatge natural (Informàtica),Àrees temàtiques de la UPC::Informàtica::Intel·ligència artificial::Llenguatge natural},
publisher = {Sociedad Española para el Procesamiento del Lenguaje Natural},
title = {MarIA: Spanish Language Models},
volume = {68},
url = {https://upcommons.upc.edu/handle/2117/367156#.YyMTB4X9A-0.mendeley},
year = {2022},
}
```

## Disclaimer

<details>

<summary>Click to expand</summary>

The models published in this repository are intended for a generalist purpose and are available to third parties. These models may have bias and/or any other undesirable distortions.

When third parties deploy or provide systems and/or services to other parties using any of these models (or using systems based on these models) or become users of the models, they should note that it is their responsibility to mitigate the risks arising from their use and, in any event, to comply with applicable regulations, including regulations regarding the use of artificial intelligence.

In no event shall the owner of the models (SEDIA – State Secretariat for digitalization and artificial intelligence) nor the creator (BSC – Barcelona Supercomputing Center) be liable for any results arising from the use made by third parties of these models.


| 6fd388ec0ace553cc491f3f5044f3a1b |

vasudevgupta/bigbird-roberta-natural-questions | vasudevgupta | big_bird | 11 | 7,949 | transformers | 7 | question-answering | true | false | false | apache-2.0 | ['en'] | ['natural_questions'] | null | 0 | 0 | 0 | 0 | 1 | 0 | 1 | [] | false | true | true | 842 | false |

This checkpoint was obtained by training `BigBirdForQuestionAnswering` (with an extra pooler head) on the [`natural_questions`](https://huggingface.co/datasets/natural_questions) dataset for ~2 weeks on 2 K80 GPUs. The training script can be found here: https://github.com/vasudevgupta7/bigbird

| Exact Match | 47.44 |

|-------------|-------|

**Use this model just like any other model from 🤗Transformers**

```python

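# Both classes must be imported; the original snippet used BigBirdTokenizer without importing it.
from transformers import BigBirdForQuestionAnswering, BigBirdTokenizer

model_id = "vasudevgupta/bigbird-roberta-natural-questions"
model = BigBirdForQuestionAnswering.from_pretrained(model_id)
tokenizer = BigBirdTokenizer.from_pretrained(model_id)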

```
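`BigBirdForQuestionAnswering` returns per-token `start_logits` and `end_logits`. A common way to turn them into an answer is to pick the span that maximizes `start + end` score, subject to `start <= end` and a length cap. The sketch below shows that decoding step on plain Python lists. It assumes you have already converted the logits for one example to lists, and `max_answer_len=30` is an illustrative default. It does not mask question or padding tokens, which a real decoder should do. `best_span` is a hypothetical helper, not part of the author's training script.

```python
from typing import List, Tuple


def best_span(start_logits: List[float], end_logits: List[float], max_answer_len: int = 30) -> Tuple[int, int]:
    """Return (start, end) token indices maximizing start_logits[start] + end_logits[end],
    subject to start <= end < start + max_answer_len."""
    best = (0, 0)
    best_score = float("-inf")
    for s, s_score in enumerate(start_logits):
        # Only consider end positions at or after the start, within the length cap.
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = s_score + end_logits[e]
            if score > best_score:
                best_score = score
                best = (s, e)
    return best
```

The returned indices select tokens `input_ids[start:end + 1]`, which can then be passed to `tokenizer.decode` to recover the answer text.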

If you also want to predict the answer category (null, long, short, yes, no), use `BigBirdForNaturalQuestions` (instead of `BigBirdForQuestionAnswering`) from my training script.

| a0dedcd155df00db41b4985036b226c7 |

gchhablani/bert-base-cased-finetuned-wnli | gchhablani | bert | 71 | 29 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['en'] | ['glue'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer', 'fnet-bert-base-comparison'] | true | true | true | 2,334 | false |


# bert-base-cased-finetuned-wnli

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the GLUE WNLI dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6996

- Accuracy: 0.4648

The model was fine-tuned to compare [google/fnet-base](https://huggingface.co/google/fnet-base) as introduced in [this paper](https://arxiv.org/abs/2105.03824) against [bert-base-cased](https://huggingface.co/bert-base-cased).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

This model was trained using the [run_glue](https://github.com/huggingface/transformers/blob/master/examples/pytorch/text-classification/run_glue.py) script. The following command was used:

```bash
#!/usr/bin/bash
python ../run_glue.py \
  --model_name_or_path bert-base-cased \
  --task_name wnli \
  --do_train \
  --do_eval \
  --max_seq_length 512 \
  --per_device_train_batch_size 16 \
  --learning_rate 2e-5 \
  --num_train_epochs 5 \
  --output_dir bert-base-cased-finetuned-wnli \
  --push_to_hub \
  --hub_strategy all_checkpoints \
  --logging_strategy epoch \
  --save_strategy epoch \
  --evaluation_strategy epoch
```

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.7299 | 1.0 | 40 | 0.6923 | 0.5634 |

| 0.6982 | 2.0 | 80 | 0.7027 | 0.3803 |

| 0.6972 | 3.0 | 120 | 0.7005 | 0.4507 |

| 0.6992 | 4.0 | 160 | 0.6977 | 0.5352 |

| 0.699 | 5.0 | 200 | 0.6996 | 0.4648 |
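The `Step` column can be reproduced with a little arithmetic. The worked example below assumes the GLUE WNLI training split has 635 examples and the validation split has 71, and that training ran on a single device (so the per-device batch size of 16 is the effective batch size). None of these figures are stated in the card. Under those assumptions, 40 steps per epoch gives 200 steps after 5 epochs, and the final accuracy of 0.4648 corresponds to 33 correct validation predictions.

```python
import math

# Assumptions (not stated in the card): GLUE WNLI split sizes and single-device training.
TRAIN_EXAMPLES = 635
VALIDATION_EXAMPLES = 71
BATCH_SIZE = 16  # per_device_train_batch_size
EPOCHS = 5

# The last partial batch still counts as one step.
steps_per_epoch = math.ceil(TRAIN_EXAMPLES / BATCH_SIZE)
total_steps = steps_per_epoch * EPOCHS

# Convert the final validation accuracy back into a count of correct predictions.
final_accuracy = 0.4648
correct = round(final_accuracy * VALIDATION_EXAMPLES)

print(steps_per_epoch, total_steps, correct)
```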

### Framework versions

- Transformers 4.11.0.dev0

- Pytorch 1.9.0

- Datasets 1.12.1

- Tokenizers 0.10.3

| 7ef85cce6cf25c73bfa97361fdb36306 |

jonatasgrosman/exp_w2v2r_es_xls-r_accent_surpeninsular-10_nortepeninsular-0_s632 | jonatasgrosman | wav2vec2 | 10 | 3 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['es'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'es'] | false | true | true | 495 | false | # exp_w2v2r_es_xls-r_accent_surpeninsular-10_nortepeninsular-0_s632

Fine-tuned [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) for speech recognition using the train split of [Common Voice 7.0 (es)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
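If your audio was recorded at a different rate, resample it to 16 kHz before inference. As a rough, dependency-free illustration only, the sketch below resamples by linear interpolation. It applies no anti-aliasing filter, so for real use prefer a proper resampler such as `torchaudio.functional.resample` or `librosa.resample`:

```python
from typing import List

TARGET_SR = 16_000  # the model expects 16 kHz input


def resample_linear(samples: List[float], orig_sr: int, target_sr: int = TARGET_SR) -> List[float]:
    """Naive linear-interpolation resampler (no low-pass filtering)."""
    if orig_sr == target_sr or not samples:
        return list(samples)
    n_out = int(round(len(samples) * target_sr / orig_sr))
    step = orig_sr / target_sr  # input samples advanced per output sample
    last = len(samples) - 1
    out: List[float] = []
    for i in range(n_out):
        pos = i * step
        left = min(int(pos), last)
        right = min(left + 1, last)
        frac = pos - left
        # Weighted average of the two neighbouring input samples.
        out.append(samples[left] * (1.0 - frac) + samples[right] * frac)
    return out


# One second of 48 kHz audio becomes 16,000 samples.
print(len(resample_linear([0.0] * 48_000, 48_000)))
```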

This model was fine-tuned with the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 9734ba8789b5850273a8cd6165e889bd |

muhtasham/tiny-mlm-imdb-target-tweet | muhtasham | bert | 10 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['tweet_eval'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,898 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tiny-mlm-imdb-target-tweet

This model is a fine-tuned version of [muhtasham/tiny-mlm-imdb](https://huggingface.co/muhtasham/tiny-mlm-imdb) on the tweet_eval dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5550

- Accuracy: 0.6925

- F1: 0.7004

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 200

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |
|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|
| 1.159 | 4.9 | 500 | 0.9977 | 0.6364 | 0.6013 |
| 0.7514 | 9.8 | 1000 | 0.8549 | 0.7112 | 0.7026 |
| 0.5011 | 14.71 | 1500 | 0.8516 | 0.7032 | 0.6962 |
| 0.34 | 19.61 | 2000 | 0.9019 | 0.7059 | 0.7030 |
| 0.2258 | 24.51 | 2500 | 0.9722 | 0.7166 | 0.7164 |
| 0.1607 | 29.41 | 3000 | 1.0724 | 0.6979 | 0.6999 |
| 0.1127 | 34.31 | 3500 | 1.1435 | 0.7193 | 0.7169 |
| 0.0791 | 39.22 | 4000 | 1.2807 | 0.7059 | 0.7069 |
| 0.0568 | 44.12 | 4500 | 1.3849 | 0.7139 | 0.7159 |
| 0.0478 | 49.02 | 5000 | 1.5550 | 0.6925 | 0.7004 |
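The evaluation results at the top of this card match the last logged row (step 5000), not the best one: validation loss is lowest at step 1500 and rises afterwards, while accuracy and F1 peak at step 3500. A small sketch for picking the best logged checkpoint by a chosen metric, using the rows from the table above:

```python
from typing import Dict, List

# Rows copied from the training-results table above.
ROWS: List[Dict[str, float]] = [
    {"step": 500, "val_loss": 0.9977, "accuracy": 0.6364, "f1": 0.6013},
    {"step": 1000, "val_loss": 0.8549, "accuracy": 0.7112, "f1": 0.7026},
    {"step": 1500, "val_loss": 0.8516, "accuracy": 0.7032, "f1": 0.6962},
    {"step": 2000, "val_loss": 0.9019, "accuracy": 0.7059, "f1": 0.7030},
    {"step": 2500, "val_loss": 0.9722, "accuracy": 0.7166, "f1": 0.7164},
    {"step": 3000, "val_loss": 1.0724, "accuracy": 0.6979, "f1": 0.6999},
    {"step": 3500, "val_loss": 1.1435, "accuracy": 0.7193, "f1": 0.7169},
    {"step": 4000, "val_loss": 1.2807, "accuracy": 0.7059, "f1": 0.7069},
    {"step": 4500, "val_loss": 1.3849, "accuracy": 0.7139, "f1": 0.7159},
    {"step": 5000, "val_loss": 1.5550, "accuracy": 0.6925, "f1": 0.7004},
]


def best_row(rows: List[Dict[str, float]], metric: str, higher_is_better: bool = True) -> Dict[str, float]:
    """Return the logged evaluation row with the best value of `metric`."""
    pick = max if higher_is_better else min
    return pick(rows, key=lambda row: row[metric])


print("best accuracy at step", best_row(ROWS, "accuracy")["step"])
print("lowest val loss at step", best_row(ROWS, "val_loss", higher_is_better=False)["step"])
```

When retraining, setting `load_best_model_at_end=True` and `metric_for_best_model` in `TrainingArguments` asks the Trainer to restore the best checkpoint itself (it requires matching evaluation and save strategies).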

### Framework versions

- Transformers 4.25.1

- Pytorch 1.12.1

- Datasets 2.7.1

- Tokenizers 0.13.2

| 80ebfc551cc686044bdc108be4e21182 |

gokuls/mobilebert_add_GLUE_Experiment_wnli | gokuls | mobilebert | 17 | 4 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['en'] | ['glue'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,580 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mobilebert_add_GLUE_Experiment_wnli

This model is a fine-tuned version of [google/mobilebert-uncased](https://huggingface.co/google/mobilebert-uncased) on the GLUE WNLI dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6896

- Accuracy: 0.5634

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.6956 | 1.0 | 5 | 0.6896 | 0.5634 |
| 0.6945 | 2.0 | 10 | 0.6950 | 0.4366 |
| 0.6938 | 3.0 | 15 | 0.6950 | 0.4366 |
| 0.693 | 4.0 | 20 | 0.6914 | 0.5634 |
| 0.6931 | 5.0 | 25 | 0.6897 | 0.5634 |
| 0.6932 | 6.0 | 30 | 0.6900 | 0.5634 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.8.0

- Tokenizers 0.13.2

| 20576a22a0d98d02474b055b1857c344 |

SongRb/distilbert-base-uncased-finetuned-cola | SongRb | distilbert | 19 | 5 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['glue'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | false | true | true | 1,570 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-cola

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8549

- Matthews Correlation: 0.5332
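CoLA is scored with the Matthews correlation coefficient (MCC), which stays informative when the two classes are imbalanced. For binary labels it can be computed from the confusion matrix. A minimal pure-Python sketch; it returns 0 when any confusion-matrix marginal is empty, the same convention scikit-learn's `matthews_corrcoef` uses:

```python
import math
from typing import Sequence


def matthews_corrcoef(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Binary MCC = (TP*TN - FP*FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN))."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


# 1 = grammatical, 0 = ungrammatical (illustrative labels, not CoLA data)
print(matthews_corrcoef([1, 1, 0, 0], [1, 0, 0, 0]))
```

Unlike accuracy, a classifier that always predicts the majority label scores 0 here.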

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Matthews Correlation |
|:-------------:|:-----:|:----:|:---------------:|:--------------------:|
| 0.5213 | 1.0 | 535 | 0.5163 | 0.4183 |
| 0.3479 | 2.0 | 1070 | 0.5351 | 0.5182 |
| 0.231 | 3.0 | 1605 | 0.6271 | 0.5291 |
| 0.166 | 4.0 | 2140 | 0.7531 | 0.5279 |
| 0.1313 | 5.0 | 2675 | 0.8549 | 0.5332 |

### Framework versions

- Transformers 4.10.0.dev0

- Pytorch 1.8.1

- Datasets 1.11.0

- Tokenizers 0.10.3

| 2957bb5ad02601e489d458c11d261be2 |

benjamin/gpt2-wechsel-german | benjamin | gpt2 | 21 | 65 | transformers | 2 | text-generation | true | false | false | mit | ['de'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 3,644 | false |

# gpt2-wechsel-german

Model trained with WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models.

See the code here: https://github.com/CPJKU/wechsel

And the paper here: https://aclanthology.org/2022.naacl-main.293/

## Performance

### RoBERTa

| Model | NLI Score | NER Score | Avg Score |
|---|---|---|---|
| `roberta-base-wechsel-french` | **82.43** | **90.88** | **86.65** |
| `camembert-base` | 80.88 | 90.26 | 85.57 |

| Model | NLI Score | NER Score | Avg Score |
|---|---|---|---|
| `roberta-base-wechsel-german` | **81.79** | **89.72** | **85.76** |
| `deepset/gbert-base` | 78.64 | 89.46 | 84.05 |

| Model | NLI Score | NER Score | Avg Score |
|---|---|---|---|
| `roberta-base-wechsel-chinese` | **78.32** | 80.55 | **79.44** |
| `bert-base-chinese` | 76.55 | **82.05** | 79.30 |

| Model | NLI Score | NER Score | Avg Score |
|---|---|---|---|
| `roberta-base-wechsel-swahili` | **75.05** | **87.39** | **81.22** |
| `xlm-roberta-base` | 69.18 | 87.37 | 78.28 |
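The Avg Score column appears to be the unweighted mean of the NLI and NER scores. The card does not define it; this is inferred from the numbers. A quick check against the rows above, allowing for two-decimal rounding:

```python
# (model, NLI score, NER score, reported Avg Score) copied from the tables above
ROWS = [
    ("roberta-base-wechsel-french", 82.43, 90.88, 86.65),
    ("camembert-base", 80.88, 90.26, 85.57),
    ("roberta-base-wechsel-german", 81.79, 89.72, 85.76),
    ("deepset/gbert-base", 78.64, 89.46, 84.05),
    ("roberta-base-wechsel-chinese", 78.32, 80.55, 79.44),
    ("bert-base-chinese", 76.55, 82.05, 79.30),
    ("roberta-base-wechsel-swahili", 75.05, 87.39, 81.22),
    ("xlm-roberta-base", 69.18, 87.37, 78.28),
]

for name, nli, ner, avg in ROWS:
    mean = (nli + ner) / 2
    # Reported values are rounded to two decimals: allow half a unit in the last place.
    assert abs(mean - avg) <= 0.005 + 1e-9, name
    print(f"{name}: mean={mean:.3f} reported={avg}")
```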

### GPT2

| Model | PPL |
|---|---|
| `gpt2-wechsel-french` | **19.71** |
| `gpt2` (retrained from scratch) | 20.47 |

| Model | PPL |
|---|---|
| `gpt2-wechsel-german` | **26.8** |
| `gpt2` (retrained from scratch) | 27.63 |

| Model | PPL |
|---|---|
| `gpt2-wechsel-chinese` | **51.97** |
| `gpt2` (retrained from scratch) | 52.98 |

| Model | PPL |
|---|---|
| `gpt2-wechsel-swahili` | **10.14** |
| `gpt2` (retrained from scratch) | 10.58 |
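PPL is perplexity: the exponential of the average negative log-likelihood per token on held-out text, so lower is better. How the evaluation text is tokenized and windowed is not stated in this card; the sketch below only illustrates the definition, given per-token natural-log probabilities:

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood (natural log) over tokens."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(nll)


# A model that spreads probability uniformly over 4 tokens has perplexity 4.
print(perplexity([math.log(0.25)] * 10))
```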

See our paper for details.

## Citation

Please cite WECHSEL as

```

@inproceedings{minixhofer-etal-2022-wechsel,

title = "{WECHSEL}: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models",

author = "Minixhofer, Benjamin and

Paischer, Fabian and

Rekabsaz, Navid",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",

month = jul,

year = "2022",

address = "Seattle, United States",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.naacl-main.293",

pages = "3992--4006",

abstract = "Large pretrained language models (LMs) have become the central building block of many NLP applications. Training these models requires ever more computational resources and most of the existing models are trained on English text only. It is exceedingly expensive to train these models in other languages. To alleviate this problem, we introduce a novel method {--} called WECHSEL {--} to efficiently and effectively transfer pretrained LMs to new languages. WECHSEL can be applied to any model which uses subword-based tokenization and learns an embedding for each subword. The tokenizer of the source model (in English) is replaced with a tokenizer in the target language and token embeddings are initialized such that they are semantically similar to the English tokens by utilizing multilingual static word embeddings covering English and the target language. We use WECHSEL to transfer the English RoBERTa and GPT-2 models to four languages (French, German, Chinese and Swahili). We also study the benefits of our method on very low-resource languages. WECHSEL improves over proposed methods for cross-lingual parameter transfer and outperforms models of comparable size trained from scratch with up to 64x less training effort. Our method makes training large language models for new languages more accessible and less damaging to the environment. We make our code and models publicly available.",

}

```

| e27eafd666e624995df680d14d933b79 |

pig4431/CR_BERT_5E | pig4431 | bert | 10 | 4 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 2,046 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# CR_BERT_5E

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5094

- Accuracy: 0.8733

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.694 | 0.33 | 50 | 0.5894 | 0.6733 |

| 0.5335 | 0.66 | 100 | 0.4150 | 0.84 |

| 0.3446 | 0.99 | 150 | 0.3052 | 0.9 |

| 0.241 | 1.32 | 200 | 0.3409 | 0.8733 |

| 0.2536 | 1.66 | 250 | 0.3101 | 0.88 |

| 0.2318 | 1.99 | 300 | 0.3015 | 0.8867 |

| 0.1527 | 2.32 | 350 | 0.3806 | 0.8733 |

| 0.1026 | 2.65 | 400 | 0.3788 | 0.8733 |

| 0.1675 | 2.98 | 450 | 0.3956 | 0.8933 |

| 0.0699 | 3.31 | 500 | 0.4532 | 0.8867 |

| 0.0848 | 3.64 | 550 | 0.4636 | 0.88 |

| 0.0991 | 3.97 | 600 | 0.4951 | 0.88 |

| 0.0578 | 4.3 | 650 | 0.5073 | 0.88 |

| 0.0636 | 4.64 | 700 | 0.5090 | 0.8733 |

| 0.0531 | 4.97 | 750 | 0.5094 | 0.8733 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0

- Datasets 2.3.2

- Tokenizers 0.13.1

| 0dbe89963aac5ddaa7fa89ddf5e30788 |

Geotrend/bert-base-en-tr-cased | Geotrend | bert | 8 | 2 | transformers | 0 | fill-mask | true | true | true | apache-2.0 | ['multilingual'] | ['wikipedia'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,292 | false |

# bert-base-en-tr-cased

We are sharing smaller versions of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) that handle a custom number of languages.

Unlike [distilbert-base-multilingual-cased](https://huggingface.co/distilbert-base-multilingual-cased), our versions produce exactly the same representations as the original model, which preserves the original accuracy.

For more information, please see our paper: [Load What You Need: Smaller Versions of Multilingual BERT](https://www.aclweb.org/anthology/2020.sustainlp-1.16.pdf).

## How to use

```python

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("Geotrend/bert-base-en-tr-cased")

model = AutoModel.from_pretrained("Geotrend/bert-base-en-tr-cased")

```

To generate other smaller versions of multilingual transformers, please visit [our GitHub repo](https://github.com/Geotrend-research/smaller-transformers).

### How to cite

```bibtex

@inproceedings{smallermbert,

title={Load What You Need: Smaller Versions of Multilingual BERT},

author={Abdaoui, Amine and Pradel, Camille and Sigel, Grégoire},

booktitle={SustaiNLP / EMNLP},

year={2020}

}

```

## Contact

Please contact amine@geotrend.fr for any question, feedback or request.

| 475f8ef5299475b02f764ec47a86b484 |

Davlan/xlm-roberta-base-finetuned-arabic | Davlan | xlm-roberta | 10 | 6 | transformers | 0 | fill-mask | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,015 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ar_xlmr-base

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6612

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 10

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

### Framework versions

- Transformers 4.21.1

- Pytorch 1.7.1+cu110

- Datasets 1.16.1

- Tokenizers 0.12.1

| de4e89b3d578b62a4954cd80bc1be5ee |

ttj/flex-diffusion-2-1 | ttj | null | 28 | 42 | diffusers | 15 | text-to-image | false | false | false | openrail++ | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['stable-diffusion', 'text-to-image'] | false | true | true | 11,348 | false |

# Model Card for flex-diffusion-2-1

<!-- Provide a quick summary of what the model is/does. [Optional] -->

stable-diffusion-2-1 (stabilityai/stable-diffusion-2-1) finetuned with different aspect ratios.

## TLDR:

### There are 2 models in this repo:

- One based on stable-diffusion-2-1 (stabilityai/stable-diffusion-2-1) finetuned for 6k steps.

- One based on stable-diffusion-2-base (stabilityai/stable-diffusion-2-base) finetuned for 6k steps, on the same dataset.

For usage, see [How to Get Started with the Model](#how-to-get-started-with-the-model).

### It aims to solve the following issues:

1. Generated images look like they are cropped from a larger image.

2. Generating non-square images produces odd results, because the model was trained on square images.

Examples:

| resolution | model | stable diffusion | flex diffusion |
|:---------------:|:-------:|:----------------------------:|:-----------------------------:|
| 576x1024 (9:16) | v2-1 |  |  |
| 576x1024 (9:16) | v2-base |  |  |
| 1024x576 (16:9) | v2-1 |  |  |
| 1024x576 (16:9) | v2-base |  |  |

### Limitations:

1. It's trained on a small dataset, so its improvements may be limited.

2. For each aspect ratio, it's trained at only one fixed resolution, so it may not generate images well at other resolutions.

For the 1:1 aspect ratio, it's fine-tuned at 512x512, although flex-diffusion-2-1 was last finetuned at 768x768.

### Potential improvements:

1. Train on a larger dataset.

2. Train on different resolutions even for the same aspect ratio.

3. Train on specific aspect ratios, instead of a range of aspect ratios.

# Table of Contents

- [Model Card for flex-diffusion-2-1](#model-card-for-flex-diffusion-2-1)

- [Table of Contents](#table-of-contents)

- [Model Details](#model-details)

- [Model Description](#model-description)

- [Uses](#uses)

- [Direct Use](#direct-use)

- [Downstream Use [Optional]](#downstream-use-optional)

- [Out-of-Scope Use](#out-of-scope-use)

- [Bias, Risks, and Limitations](#bias-risks-and-limitations)

- [Recommendations](#recommendations)

- [Training Details](#training-details)

- [Training Data](#training-data)

- [Training Procedure](#training-procedure)

- [Preprocessing](#preprocessing)

- [Speeds, Sizes, Times](#speeds-sizes-times)

- [Evaluation](#evaluation)

- [Testing Data, Factors & Metrics](#testing-data-factors--metrics)

- [Testing Data](#testing-data)

- [Factors](#factors)

- [Metrics](#metrics)

- [Results](#results)

- [Model Examination](#model-examination)

- [Environmental Impact](#environmental-impact)

- [Technical Specifications [optional]](#technical-specifications-optional)

- [Model Architecture and Objective](#model-architecture-and-objective)

- [Compute Infrastructure](#compute-infrastructure)

- [Hardware](#hardware)

- [Software](#software)

- [Citation](#citation)

- [Glossary [optional]](#glossary-optional)

- [More Information [optional]](#more-information-optional)

- [Model Card Authors [optional]](#model-card-authors-optional)

- [Model Card Contact](#model-card-contact)

- [How to Get Started with the Model](#how-to-get-started-with-the-model)

# Model Details

## Model Description

<!-- Provide a longer summary of what this model is/does. -->

stable-diffusion-2-1 (stabilityai/stable-diffusion-2-1) finetuned for dynamic aspect ratios.

Fine-tuned resolutions:

| | width | height | aspect ratio |
|---:|--------:|---------:|:---------------|
| 0 | 512 | 1024 | 1:2 |
| 1 | 576 | 1024 | 9:16 |
| 2 | 576 | 960 | 3:5 |
| 3 | 640 | 1024 | 5:8 |
| 4 | 512 | 768 | 2:3 |
| 5 | 640 | 896 | 5:7 |
| 6 | 576 | 768 | 3:4 |
| 7 | 512 | 640 | 4:5 |
| 8 | 640 | 768 | 5:6 |
| 9 | 640 | 704 | 10:11 |
| 10 | 512 | 512 | 1:1 |
| 11 | 704 | 640 | 11:10 |
| 12 | 768 | 640 | 6:5 |
| 13 | 640 | 512 | 5:4 |
| 14 | 768 | 576 | 4:3 |
| 15 | 896 | 640 | 7:5 |
| 16 | 768 | 512 | 3:2 |
| 17 | 1024 | 640 | 8:5 |
| 18 | 960 | 576 | 5:3 |
| 19 | 1024 | 576 | 16:9 |
| 20 | 1024 | 512 | 2:1 |

- **Developed by:** Jonathan Chang

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s)**: English

- **License:** creativeml-openrail-m

- **Parent Model:** https://huggingface.co/stabilityai/stable-diffusion-2-1

- **Resources for more information:** More information needed

# Uses

- see https://huggingface.co/stabilityai/stable-diffusion-2-1

# Training Details

## Training Data

- LAION-Aesthetics dataset, the subset with an aesthetic rating of 6+
  - https://laion.ai/blog/laion-aesthetics/
  - https://huggingface.co/datasets/ChristophSchuhmann/improved_aesthetics_6plus
  - I only used a small portion of it; see [Preprocessing](#preprocessing)

- most common aspect ratios in the dataset (before preprocessing)

| | aspect_ratio | counts |
|---:|:---------------|---------:|
| 0 | 1:1 | 154727 |
| 1 | 3:2 | 119615 |
| 2 | 2:3 | 61197 |
| 3 | 4:3 | 52276 |
| 4 | 16:9 | 38862 |
| 5 | 400:267 | 21893 |
| 6 | 3:4 | 16893 |
| 7 | 8:5 | 16258 |
| 8 | 4:5 | 15684 |
| 9 | 6:5 | 12228 |
| 10 | 1000:667 | 12097 |
| 11 | 2:1 | 11006 |
| 12 | 800:533 | 10259 |
| 13 | 5:4 | 9753 |
| 14 | 500:333 | 9700 |
| 15 | 250:167 | 9114 |
| 16 | 5:3 | 8460 |
| 17 | 200:133 | 7832 |
| 18 | 1024:683 | 7176 |
| 19 | 11:10 | 6470 |

- predefined aspect ratios

| | width | height | aspect ratio |
|---:|--------:|---------:|:---------------|
| 0 | 512 | 1024 | 1:2 |
| 1 | 576 | 1024 | 9:16 |
| 2 | 576 | 960 | 3:5 |
| 3 | 640 | 1024 | 5:8 |
| 4 | 512 | 768 | 2:3 |
| 5 | 640 | 896 | 5:7 |
| 6 | 576 | 768 | 3:4 |
| 7 | 512 | 640 | 4:5 |
| 8 | 640 | 768 | 5:6 |
| 9 | 640 | 704 | 10:11 |
| 10 | 512 | 512 | 1:1 |
| 11 | 704 | 640 | 11:10 |
| 12 | 768 | 640 | 6:5 |
| 13 | 640 | 512 | 5:4 |
| 14 | 768 | 576 | 4:3 |
| 15 | 896 | 640 | 7:5 |
| 16 | 768 | 512 | 3:2 |
| 17 | 1024 | 640 | 8:5 |
| 18 | 960 | 576 | 5:3 |
| 19 | 1024 | 576 | 16:9 |
| 20 | 1024 | 512 | 2:1 |
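The aspect-ratio labels in both tables above look like width:height reduced by their greatest common divisor. That would explain why common photo sizes such as 1200x801 show up as odd-looking ratios like 400:267, which is numerically about 1.498, essentially 3:2. This reading is inferred from the numbers rather than stated in the card; a sketch:

```python
from math import gcd
from typing import Tuple


def reduced_ratio(width: int, height: int) -> Tuple[int, int]:
    """Divide width and height by their greatest common divisor."""
    g = gcd(width, height)
    return width // g, height // g


def ratio_label(width: int, height: int) -> str:
    w, h = reduced_ratio(width, height)
    return f"{w}:{h}"


for size in [(640, 704), (576, 960), (1024, 576), (1200, 801)]:
    print(size, ratio_label(*size), round(size[0] / size[1], 3))
```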

## Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

### Preprocessing

1. Download the files with URLs and captions from https://huggingface.co/datasets/ChristophSchuhmann/improved_aesthetics_6plus
   - I only used the first file, `train-00000-of-00007-29aec9150af50f9f.parquet`.

2. Use img2dataset to convert them to the webdataset format.
   - https://github.com/rom1504/img2dataset
   - I put `train-00000-of-00007-29aec9150af50f9f.parquet` in a folder called `first-file`.
   - The output folder is `/mnt/aesthetics6plus`; change this to your own folder.

```bash
# Set the variables (not `echo` them) so img2dataset receives real paths.
INPUT_FOLDER=first-file
OUTPUT_FOLDER=/mnt/aesthetics6plus

img2dataset --url_list "$INPUT_FOLDER" --input_format "parquet" \
  --url_col "URL" --caption_col "TEXT" --output_format webdataset \
  --output_folder "$OUTPUT_FOLDER" --processes_count 3 --thread_count 6 --image_size 1024 \
  --resize_only_if_bigger --resize_mode=keep_ratio_largest \
  --save_additional_columns '["WIDTH","HEIGHT","punsafe","similarity"]' --enable_wandb True
```

3. The data-loading code does the preprocessing on the fly, so nothing else is needed. However, it is not optimized for speed (GPU utilization fluctuates between 80% and 100%), and it is not written for multi-GPU training, so use it with caution. The code does the following:

   - use webdataset to load the data
   - calculate the aspect ratio of each image
   - find the closest predefined aspect ratio and its associated resolution: `argmin(abs(aspect_ratio - predefined_aspect_ratios))`. E.g. if the aspect ratio is 1:3, the closest predefined aspect ratio is 1:2, and its associated resolution is 512x1024.
   - resize the image, keeping its aspect ratio, so that each side is at least as large as the associated resolution. E.g. resize to 512x(512*3) = 512x1536.
   - randomly crop the image to the associated resolution. E.g. crop to 512x1024.
   - if more than 10% of the image is lost in cropping, discard the example.
   - batch examples by aspect ratio, so that all examples in a batch have the same aspect ratio.
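The bucketing steps above can be sketched in plain Python. This is a hypothetical reimplementation, not the author's data-loading code: the function names are illustrative, the aspect ratio is taken as width / height, and resized sizes are rounded up so the image always covers the target. Under these rules, the 1:3 example above would lose a third of its pixels to cropping and be discarded by the 10% rule.

```python
import random
from collections import defaultdict
from typing import Dict, Iterator, List, Optional, Tuple

# Predefined (width, height) buckets from the table above.
BUCKETS: List[Tuple[int, int]] = [
    (512, 1024), (576, 1024), (576, 960), (640, 1024), (512, 768), (640, 896),
    (576, 768), (512, 640), (640, 768), (640, 704), (512, 512), (704, 640),
    (768, 640), (640, 512), (768, 576), (896, 640), (768, 512), (1024, 640),
    (960, 576), (1024, 576), (1024, 512),
]
MAX_LOST_FRACTION = 0.10


def nearest_bucket(width: int, height: int) -> Tuple[int, int]:
    """argmin |aspect_ratio - bucket_ratio| over the predefined buckets."""
    ratio = width / height
    return min(BUCKETS, key=lambda b: abs(ratio - b[0] / b[1]))


def plan_crop(width: int, height: int, rng: random.Random) -> Optional[dict]:
    """Resize (keeping aspect ratio) to cover the bucket, then pick a random crop."""
    tw, th = nearest_bucket(width, height)
    if tw * height >= th * width:
        # Width is the limiting side: match it exactly; height overshoots (ceil division).
        rw, rh = tw, -(-height * tw // width)
    else:
        rw, rh = -(-width * th // height), th
    lost = 1.0 - (tw * th) / (rw * rh)
    if lost > MAX_LOST_FRACTION:
        return None  # too much of the image would be cropped away
    left, top = rng.randint(0, rw - tw), rng.randint(0, rh - th)
    return {"bucket": (tw, th), "resized": (rw, rh),
            "crop_box": (left, top, left + tw, top + th), "lost": lost}


def group_by_bucket(sizes: List[Tuple[int, int]], seed: int = 0) -> Dict[Tuple[int, int], List[int]]:
    """Map each kept example index to its bucket so batches share one resolution."""
    rng = random.Random(seed)
    groups: Dict[Tuple[int, int], List[int]] = defaultdict(list)
    for idx, (w, h) in enumerate(sizes):
        plan = plan_crop(w, h, rng)
        if plan is not None:
            groups[plan["bucket"]].append(idx)
    return dict(groups)


def batches(groups: Dict[Tuple[int, int], List[int]], batch_size: int) -> Iterator[Tuple[Tuple[int, int], List[int]]]:
    """Yield same-bucket batches of example indices."""
    for bucket, idxs in groups.items():
        for i in range(0, len(idxs), batch_size):
            yield bucket, idxs[i:i + batch_size]


print(group_by_bucket([(1024, 768), (1000, 1000), (1000, 3000), (2048, 1536)]))
```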

### Speeds, Sizes, Times

- Dataset size: 100k image-caption pairs, before filtering.
  - I didn't wait for the whole dataset to download; instead, I copied the first 10 tar files and their index files to a new folder called `aesthetics6plus-small`, with 100k image-caption pairs in total. The full dataset is much larger.

- Hardware: 1 RTX 3090 GPU

- Optimizer: 8-bit Adam

- Batch size: 32
  - actual batch size: 2
  - gradient_accumulation_steps: 16
  - effective batch size: 32

- Learning rate: warmup to 2e-6 over 500 steps, then kept constant at 2e-6

- Training steps: 6k

- Number of epochs (approximate): 32 * 6k / 100k = 1.92 (not accounting for filtering)
  - Each example is seen 1.92 times on average.

- Training time: approximately 1 day
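The schedule and batch arithmetic above can be written out explicitly. The card only says the rate warms up to 2e-6 over 500 steps; the linear warmup shape below is an assumption (it follows the same multiplier as `get_constant_schedule_with_warmup` in transformers):

```python
MICRO_BATCH = 2
GRAD_ACCUM_STEPS = 16
TRAIN_STEPS = 6_000
DATASET_SIZE = 100_000  # image-caption pairs before filtering
PEAK_LR = 2e-6
WARMUP_STEPS = 500


def learning_rate(step: int) -> float:
    """Assumed linear warmup to PEAK_LR over WARMUP_STEPS, then constant."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    return PEAK_LR


# Gradients from 16 micro-batches of 2 are accumulated before each optimizer step.
effective_batch = MICRO_BATCH * GRAD_ACCUM_STEPS
# Examples seen in total divided by dataset size (ignores filtered-out examples).
epochs = effective_batch * TRAIN_STEPS / DATASET_SIZE

print(effective_batch, epochs, learning_rate(250), learning_rate(5_000))
```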

## Results

More information needed

# Model Card Authors

Jonathan Chang

# How to Get Started with the Model

Use the code below to get started with the model.

```python
import torch
from diffusers import StableDiffusionPipeline, DPMSolverMultistepScheduler, UNet2DConditionModel


def use_DPM_solver(pipe):
    # Replace the default scheduler with the multistep DPM-Solver.
    pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)
    return pipe


# Load the base pipeline and swap in the fine-tuned UNet from this repo.
pipe = StableDiffusionPipeline.from_pretrained(
    "stabilityai/stable-diffusion-2-1",
    unet=UNet2DConditionModel.from_pretrained("ttj/flex-diffusion-2-1", subfolder="2-1/unet", torch_dtype=torch.float16),
    torch_dtype=torch.float16,
)

# for v2-base, use the following lines instead
# pipe = StableDiffusionPipeline.from_pretrained(
#     "stabilityai/stable-diffusion-2-base",
#     unet=UNet2DConditionModel.from_pretrained("ttj/flex-diffusion-2-1", subfolder="2-base/unet", torch_dtype=torch.float16),
#     torch_dtype=torch.float16,
# )

pipe = use_DPM_solver(pipe).to("cuda")

prompt = "a professional photograph of an astronaut riding a horse"
image = pipe(prompt).images[0]
image.save("astronaut_rides_horse.png")
``` | 1b92f954f5be96fdc54cb3322ac7d8f4 |

jhaochenz/finetuned_distilgpt2_sst2_negation0.01_pretrainedTrue_epochs1 | jhaochenz | gpt2 | 14 | 0 | transformers | 0 | text-generation | true | false | false | apache-2.0 | null | ['sst2'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,163 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned_distilgpt2_sst2_negation0.01_pretrainedTrue_epochs1

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on the sst2 dataset.

It achieves the following results on the evaluation set:

- Loss: 3.2761
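For a causal language model trained with the Trainer, the evaluation loss is the mean token-level cross-entropy in nats (averaged over evaluation batches), so it converts approximately to perplexity via exp(loss). This card does not report perplexity itself; by this conversion, the loss of 3.2761 corresponds to a perplexity of roughly 26.5:

```python
import math


def loss_to_perplexity(mean_cross_entropy_nats: float) -> float:
    """Perplexity = exp(mean negative log-likelihood per token)."""
    return math.exp(mean_cross_entropy_nats)


print(f"{loss_to_perplexity(3.2761):.2f}")
```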

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 2.7102 | 1.0 | 1323 | 3.2761 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.7.0

- Datasets 2.8.0

- Tokenizers 0.13.2

| 5aa6b3f491cd8fb68ccdec53b5d3dee0 |

Helsinki-NLP/opus-mt-kab-en | Helsinki-NLP | marian | 10 | 11 | transformers | 0 | translation | true | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 778 | false |

### opus-mt-kab-en

* source languages: kab

* target languages: en

* OPUS readme: [kab-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/kab-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2019-12-18.zip](https://object.pouta.csc.fi/OPUS-MT-models/kab-en/opus-2019-12-18.zip)

* test set translations: [opus-2019-12-18.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/kab-en/opus-2019-12-18.test.txt)

* test set scores: [opus-2019-12-18.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/kab-en/opus-2019-12-18.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| Tatoeba.kab.en | 27.5 | 0.408 |

| e3d7cbad96a54b1921b3651e43340788 |

AykeeSalazar/violation-classification-bantai-vit-withES | AykeeSalazar | vit | 9 | 9 | transformers | 0 | image-classification | true | false | false | apache-2.0 | null | ['image_folder'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,274 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# violation-classification-bantai-vit-withES

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the image_folder dataset.

It achieves the following results on the evaluation set:

- eval_loss: 0.2234

- eval_accuracy: 0.9592

- eval_runtime: 64.9173

- eval_samples_per_second: 85.37

- eval_steps_per_second: 2.68

- epoch: 227.72

- step: 23000
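The runtime figures allow a rough consistency check. Multiplying the evaluation runtime by its throughput estimates the evaluation set size, and dividing the step count by the epoch count gives optimizer steps per epoch. These are back-of-the-envelope estimates derived here, not numbers reported by the author; the training-set estimate also assumes the total train batch size of 128 listed in the hyperparameters.

```python
EVAL_RUNTIME_S = 64.9173
EVAL_SAMPLES_PER_S = 85.37
EVAL_STEPS_PER_S = 2.68
EPOCH = 227.72
STEP = 23_000
TOTAL_TRAIN_BATCH = 128  # train_batch_size 32 x gradient_accumulation_steps 4

eval_samples = EVAL_RUNTIME_S * EVAL_SAMPLES_PER_S  # roughly 5.5k images
eval_steps = EVAL_RUNTIME_S * EVAL_STEPS_PER_S      # roughly 174 batches of 32
steps_per_epoch = STEP / EPOCH                      # roughly 101 optimizer steps
# Upper-bound estimate: the last batch of each epoch may be partial.
approx_train_images = steps_per_epoch * TOTAL_TRAIN_BATCH

print(round(eval_samples), round(eval_steps), round(steps_per_epoch, 2), round(approx_train_images))
```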

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 500

### Framework versions

- Transformers 4.18.0

- Pytorch 1.10.0+cu111

- Datasets 2.1.0

- Tokenizers 0.12.1

| 01256acfdf2f94c35f9a0e736daf6d9e |

Helsinki-NLP/opus-mt-zne-fi | Helsinki-NLP | marian | 10 | 9 | transformers | 0 | translation | true | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 776 | false |

### opus-mt-zne-fi

* source languages: zne

* target languages: fi

* OPUS readme: [zne-fi](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/zne-fi/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/zne-fi/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/zne-fi/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/zne-fi/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| JW300.zne.fi | 22.8 | 0.432 |

| 4d1dacd86a268f29cf5c6016cd99d598 |

dminiotas05/distilbert-base-uncased-finetuned-ft750_reg3 | dminiotas05 | distilbert | 10 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,355 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ft750_reg3

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6143

- Mse: 0.6143

- Mae: 0.6022

- R2: 0.4218

- Accuracy: 0.52
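The model is evaluated as a regressor, and the reported Loss equals the Mse, which suggests a mean-squared-error training objective. A dependency-free sketch of MSE, MAE and the coefficient of determination R² = 1 - SS_res / SS_tot. How the card's Accuracy figure is derived from continuous predictions is not stated, so it is left out:

```python
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """1 - residual sum of squares / total sum of squares around the mean."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [2.0, 2.0, 3.0, 3.0]
print(mse(y_true, y_pred), mae(y_true, y_pred), r2(y_true, y_pred))
```

An R² of 0 means the predictions do no better than always predicting the mean label.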

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss | Mse | Mae | R2 | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:--------:|
| 0.5241 | 1.0 | 188 | 0.6143 | 0.6143 | 0.6022 | 0.4218 | 0.52 |

### Framework versions

- Transformers 4.21.0

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

| f12074dbb299e37eb89055e19afd27a8 |

muhtasham/tiny-mlm-glue-rte-target-glue-stsb | muhtasham | bert | 10 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 2,027 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You should probably proofread and complete it, then remove this comment. -->

# tiny-mlm-glue-rte-target-glue-stsb

This model is a fine-tuned version of [muhtasham/tiny-mlm-glue-rte](https://huggingface.co/muhtasham/tiny-mlm-glue-rte) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9754

- Pearson: 0.8093

- Spearmanr: 0.8107
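The model name points to the GLUE STS-B task, which is scored by the correlation between predicted and gold similarity scores. Below is a plain-Python sketch of the two metrics above: Pearson's r and Spearman's rank correlation. The `Spearmanr` label suggests SciPy's `scipy.stats.spearmanr` was used, but the card does not say. Tied values receive average ranks, the usual convention. The function names and toy scores are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation: covariance divided by the product of standard deviations."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical gold similarity scores (0-5 scale) and model predictions.
gold = [0.0, 1.5, 3.0, 4.2, 5.0]
pred = [0.3, 1.0, 3.5, 3.9, 4.8]
print(pearson(gold, pred), spearman(gold, pred))
```

Spearman only rewards getting the ordering right, so here it is 1.0 even though the predictions are not exactly linear in the gold scores.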

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 200
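The training-results table for this model logs every 500 steps with fractional epochs. That lets one back out the number of optimizer steps per epoch: 500 / 2.78 ≈ 180. The sketch below checks that 180 steps per epoch reproduces every epoch value in the table. It then estimates where 200 epochs would end, at about 36,000 steps. The last logged row is step 5500 (epoch 30.56), so the table does not show training reaching the configured 200 epochs, and the card does not say why.

At a batch size of 32, 180 steps per epoch implies between 5,729 and 5,760 training examples. This assumes one device and no gradient accumulation. The range is consistent with the size of the GLUE STS-B training split (5,749 pairs).

```python
steps_per_epoch = 180  # inferred from the table (500 / 2.78), not stated in the card

logged = {  # step -> epoch, copied from the training-results table
    500: 2.78, 1000: 5.56, 1500: 8.33, 2000: 11.11, 2500: 13.89, 3000: 16.67,
    3500: 19.44, 4000: 22.22, 4500: 25.0, 5000: 27.78, 5500: 30.56,
}

# Every logged epoch should equal step / steps_per_epoch rounded to two decimals.
matches = all(round(step / steps_per_epoch, 2) == epoch for step, epoch in logged.items())

planned_steps = 200 * steps_per_epoch  # num_epochs: 200
batch_size = 32
example_range = ((steps_per_epoch - 1) * batch_size + 1, steps_per_epoch * batch_size)
print(matches, planned_steps, example_range)
```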

### Training results

| Training Loss | Epoch | Step | Validation Loss | Pearson | Spearmanr |
|:-------------:|:-----:|:----:|:---------------:|:-------:|:---------:|
| 3.2461 | 2.78 | 500 | 1.1464 | 0.7083 | 0.7348 |
| 1.0093 | 5.56 | 1000 | 1.1455 | 0.7664 | 0.7934 |
| 0.7582 | 8.33 | 1500 | 1.0140 | 0.7980 | 0.8136 |
| 0.6329 | 11.11 | 2000 | 0.8708 | 0.8136 | 0.8184 |
| 0.5285 | 13.89 | 2500 | 0.8894 | 0.8139 | 0.8159 |
| 0.4747 | 16.67 | 3000 | 0.9908 | 0.8116 | 0.8165 |
| 0.4154 | 19.44 | 3500 | 0.9260 | 0.8137 | 0.8145 |
| 0.3792 | 22.22 | 4000 | 0.9264 | 0.8161 | 0.8156 |
| 0.3445 | 25.0 | 4500 | 0.9664 | 0.8155 | 0.8164 |
| 0.3246 | 27.78 | 5000 | 0.9735 | 0.8110 | 0.8121 |
| 0.3033 | 30.56 | 5500 | 0.9754 | 0.8093 | 0.8107 |
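The evaluation results at the top of this card repeat the final row (step 5500), not the best one. The short sketch below scans the table for the best checkpoint under three different criteria; which criterion to prefer is a judgment call. In the Trainer, this kind of selection is typically configured with the `load_best_model_at_end` and `metric_for_best_model` training arguments. The card gives no sign that these were used.

```python
# (step, validation_loss, pearson, spearmanr), copied from the table above
rows = [
    (500, 1.1464, 0.7083, 0.7348),
    (1000, 1.1455, 0.7664, 0.7934),
    (1500, 1.0140, 0.7980, 0.8136),
    (2000, 0.8708, 0.8136, 0.8184),
    (2500, 0.8894, 0.8139, 0.8159),
    (3000, 0.9908, 0.8116, 0.8165),
    (3500, 0.9260, 0.8137, 0.8145),
    (4000, 0.9264, 0.8161, 0.8156),
    (4500, 0.9664, 0.8155, 0.8164),
    (5000, 0.9735, 0.8110, 0.8121),
    (5500, 0.9754, 0.8093, 0.8107),
]

best_by_loss = min(rows, key=lambda r: r[1])      # lowest validation loss
best_by_pearson = max(rows, key=lambda r: r[2])   # highest Pearson
best_by_spearman = max(rows, key=lambda r: r[3])  # highest Spearman
final = rows[-1]                                  # the row the card reports

print("lowest loss:", best_by_loss[0])
print("highest Pearson:", best_by_pearson[0])
print("highest Spearman:", best_by_spearman[0])
print("final (reported):", final[0])
```

The differences are small (Pearson ranges from 0.8093 to 0.8161 after step 2000), so any of these checkpoints may be reasonable.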

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.8.1.dev0

- Tokenizers 0.13.2

| eadd3ff0113ff50849f82f6d70bcca75 |

sahillihas/OntoMedQA | sahillihas | bert | 12 | 4 | transformers | 0 | multiple-choice | true | false | false | apache-2.0 | null | ['medmcqa'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,311 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You should probably proofread and complete it, then remove this comment. -->

# OntoMedQA

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the medmcqa dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2874

- Accuracy: 0.4118

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| No log | 1.0 | 187 | 1.2418 | 0.2941 |
| No log | 2.0 | 374 | 1.1449 | 0.4706 |
| 0.8219 | 3.0 | 561 | 1.2874 | 0.4118 |
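The accuracies in this table are coarse: 0.2941, 0.4706 and 0.4118 are 5/17, 8/17 and 7/17 rounded to four decimals. The smallest evaluation-set size consistent with all three values is 17. That suggests, but does not prove, a very small evaluation set. If the set really has 17 questions, one question moves accuracy by almost 6 points, and the drop from epoch 2 to epoch 3 is a single question.

Likewise, 187 steps per epoch at a batch size of 4 implies between 745 and 748 training examples, far fewer than the full medmcqa training split. This assumes one device and no gradient accumulation.

The sketch below searches for that smallest size. The function name is hypothetical.

```python
def smallest_eval_size(accuracies, places=4, max_n=1000):
    """Smallest n such that every accuracy equals k/n (integer k) after rounding to `places`."""
    for n in range(1, max_n + 1):
        if all(any(round(k / n, places) == acc for k in range(n + 1)) for acc in accuracies):
            return n
    return None


reported = [0.2941, 0.4706, 0.4118]  # validation accuracy after epochs 1, 2 and 3
n = smallest_eval_size(reported)
print(n, [round(acc * n) for acc in reported])
```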

### Framework versions

- Transformers 4.21.2

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

| cdc945e58c1264a88d7bcae3f571389a |

cansen88/turkishReviews_5_topic | cansen88 | gpt2 | 9 | 2 | transformers | 0 | text-generation | false | true | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_keras_callback'] | true | true | true | 1,574 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should probably proofread and complete it, then remove this comment. -->

# turkishReviews_5_topic

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 6.8939

- Validation Loss: 6.8949

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 5e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5e-05, 'decay_steps': 756, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16
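The serialized optimizer above describes a two-phase learning-rate schedule:

- A `WarmUp` wrapper with `warmup_steps: 1000` and `power: 1.0`, which is a linear ramp from 0 to 5e-05.
- A `PolynomialDecay` with `decay_steps: 756`, `end_learning_rate: 0.0` and `power: 1.0`, which is a linear decay to zero.

The sketch below reproduces that shape in plain Python. It assumes the wrapper passes `step - warmup_steps` to the decay function once warmup ends, which is how we understand the Transformers TF `WarmUp` class to behave. It does not model weight decay or mixed precision.

The warmup phase (1000 steps) is longer than the decay phase (756 steps). The card does not report the total number of training steps, so it cannot be read off which phase training ended in. The function names are hypothetical.

```python
def polynomial_decay(step, initial_lr=5e-5, decay_steps=756, end_lr=0.0, power=1.0):
    """Keras-style PolynomialDecay with cycle=False: the step is clamped at decay_steps."""
    step = min(step, decay_steps)
    return (initial_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr


def warmup_then_decay(step, initial_lr=5e-5, warmup_steps=1000, power=1.0):
    """Polynomial warmup from 0 to initial_lr, then hand off to polynomial_decay."""
    if step < warmup_steps:
        return initial_lr * (step / warmup_steps) ** power
    return polynomial_decay(step - warmup_steps, initial_lr=initial_lr)


for step in (0, 500, 1000, 1378, 1756, 2000):
    print(step, warmup_then_decay(step))
```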

### Training results

| Train Loss | Validation Loss | Epoch |
|:----------:|:---------------:|:-----:|
| 8.0049 | 6.8949 | 0 |
| 6.8943 | 6.8949 | 1 |
| 6.8939 | 6.8949 | 2 |
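The losses can be converted to perplexity with exp(loss). This assumes they are mean per-token cross-entropy in nats, the usual convention for causal language-model heads in Transformers; the card does not say. The validation loss stays at 6.8949 in all three epochs, which corresponds to a perplexity of roughly 987. A minimal sketch:

```python
import math

# (epoch, train_loss, val_loss), copied from the table above
history = [(0, 8.0049, 6.8949), (1, 6.8943, 6.8949), (2, 6.8939, 6.8949)]

for epoch, train_loss, val_loss in history:
    # Perplexity is the exponential of the mean per-token cross-entropy (in nats).
    print(epoch, round(math.exp(train_loss), 1), round(math.exp(val_loss), 1))
```

An unchanged validation perplexity across epochs suggests the model stopped improving on held-out data after the first epoch.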

### Framework versions

- Transformers 4.21.0

- TensorFlow 2.8.2

- Datasets 2.4.0

- Tokenizers 0.12.1

| 9ca7dc140af910939259097709520904 |

jonatasgrosman/exp_w2v2t_fa_wavlm_s527 | jonatasgrosman | wavlm | 10 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['fa'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'fa'] | false | true | true | 439 | false | # exp_w2v2t_fa_wavlm_s527

Fine-tuned [microsoft/wavlm-large](https://huggingface.co/microsoft/wavlm-large) for speech recognition using the train split of [Common Voice 7.0 (fa)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model was fine-tuned with the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.
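Because the model expects 16 kHz input, it can help to check audio files before transcribing them. The minimal sketch below uses only Python's standard-library `wave` module, which reads uncompressed PCM WAV files. Compressed formats such as MP3 need a decoding library. Files at other rates should be resampled with a dedicated tool, not relabeled by editing the header. The function name `check_sample_rate` is hypothetical.

```python
import wave

TARGET_RATE = 16_000  # the card asks for 16 kHz input


def check_sample_rate(path: str, expected: int = TARGET_RATE) -> int:
    """Return the WAV file's sample rate; raise ValueError if it differs from `expected`."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
    if rate != expected:
        raise ValueError(f"{path}: sampled at {rate} Hz, expected {expected} Hz; resample first")
    return rate


if __name__ == "__main__":
    import os
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "silence.wav")
        with wave.open(path, "wb") as out:  # 0.1 s of 16-bit mono silence at 16 kHz
            out.setnchannels(1)
            out.setsampwidth(2)
            out.setframerate(16_000)
            out.writeframes(b"\x00\x00" * 1600)
        print(check_sample_rate(path))
```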

| 6740a953c8824985296d13f071fc3513 |

Geotrend/bert-base-en-nl-cased | Geotrend | bert | 8 | 2 | transformers | 0 | fill-mask | true | true | true | apache-2.0 | ['multilingual'] | ['wikipedia'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,292 | false |

# bert-base-en-nl-cased

We are sharing smaller versions of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) that handle a custom number of languages.

Unlike [distilbert-base-multilingual-cased](https://huggingface.co/distilbert-base-multilingual-cased), our versions produce exactly the same representations as the original model, which preserves the original accuracy.

For more information, please see our paper: [Load What You Need: Smaller Versions of Multilingual BERT](https://www.aclweb.org/anthology/2020.sustainlp-1.16.pdf).
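As we understand the idea behind these smaller models, they keep only the vocabulary entries needed for the selected languages and the matching rows of the token-embedding matrix. Every Transformer layer, and the position and token-type embeddings, stay untouched. Any input that tokenizes entirely into retained tokens then produces the same hidden states as in the full model.

The toy sketch below illustrates that vocabulary-slicing step in plain Python. The function name, the vocabulary and the 2-dimensional embeddings are all hypothetical. The actual procedure, including how the tokens to keep are chosen, is described in the paper.

```python
def slice_vocabulary(vocab, embeddings, keep_tokens):
    """Keep only `keep_tokens`, remapping their ids and copying their embedding rows unchanged.

    vocab: dict mapping token -> id in the full model
    embeddings: list of embedding rows, indexed by full-model id
    keep_tokens: tokens needed for the target languages (plus special tokens)
    """
    kept_ids = sorted(vocab[t] for t in set(keep_tokens) if t in vocab)
    id_to_token = {i: t for t, i in vocab.items()}
    new_vocab, new_embeddings = {}, []
    for new_id, old_id in enumerate(kept_ids):
        new_vocab[id_to_token[old_id]] = new_id
        new_embeddings.append(embeddings[old_id])
    return new_vocab, new_embeddings


# Hypothetical toy model: 6 tokens with 2-dimensional embeddings.
full_vocab = {"[CLS]": 0, "[SEP]": 1, "hello": 2, "hallo": 3, "bonjour": 4, "hola": 5}
full_emb = [[0.0, 0.1], [0.2, 0.3], [0.4, 0.5], [0.6, 0.7], [0.8, 0.9], [1.0, 1.1]]

small_vocab, small_emb = slice_vocabulary(full_vocab, full_emb, ["[CLS]", "[SEP]", "hello", "hallo"])

# An English/Dutch input looks up identical vectors in both models.
sentence = ["[CLS]", "hello", "hallo", "[SEP]"]
same = all(full_emb[full_vocab[t]] == small_emb[small_vocab[t]] for t in sentence)
print(len(small_vocab), same)
```

Only the embedding table shrinks. Since the encoder weights are shared unchanged, the hidden states for retained-token inputs match the full model's.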

## How to use

```python
from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("Geotrend/bert-base-en-nl-cased")
model = AutoModel.from_pretrained("Geotrend/bert-base-en-nl-cased")
```

To generate other smaller versions of multilingual transformers, please visit [our GitHub repo](https://github.com/Geotrend-research/smaller-transformers).

### How to cite

```bibtex
@inproceedings{smallermbert,
  title={Load What You Need: Smaller Versions of Mutlilingual BERT},
  author={Abdaoui, Amine and Pradel, Camille and Sigel, Grégoire},
  booktitle={SustaiNLP / EMNLP},
  year={2020}
}
```

## Contact

Please contact amine@geotrend.fr with any questions, feedback, or requests. | f5b472db6d12b18037c97caed276d2c7 |

coreml/coreml-Inkpunk-Diffusion | coreml | null | 4 | 0 | null | 2 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['coreml', 'stable-diffusion', 'text-to-image'] | false | true | true | 1,328 | false |

# Core ML Converted Model

This model was converted to Core ML for use on Apple Silicon devices by following [Apple's conversion instructions](https://github.com/apple/ml-stable-diffusion#-converting-models-to-core-ml).<br>

Load the model into an app such as [Mochi Diffusion](https://github.com/godly-devotion/MochiDiffusion) to generate images.<br>

The `split_einsum` version is compatible with all compute unit options, including the Neural Engine.<br>

The `original` version is only compatible with the CPU & GPU option.
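The compatibility rules above can be encoded as a small lookup table, for example to validate a settings choice before loading a model. The unit names follow the four `ComputeUnit` options in coremltools (`CPU_ONLY`, `CPU_AND_GPU`, `CPU_AND_NE`, `ALL`), but coremltools is not imported here. The mapping only restates this card: "all compute unit options" is taken literally for `split_einsum`, and `original` is restricted to CPU & GPU. The enum, dictionary and function names are hypothetical, and the sketch neither loads nor runs a model.

```python
from enum import Enum


class ComputeUnit(Enum):
    """Option names mirroring coremltools' ComputeUnit (names only; coremltools is not imported)."""
    CPU_ONLY = "CPU_ONLY"
    CPU_AND_GPU = "CPU_AND_GPU"
    CPU_AND_NE = "CPU_AND_NE"
    ALL = "ALL"


# Compatibility as stated on this card.
SUPPORTED_UNITS = {
    "split_einsum": set(ComputeUnit),       # every option, including the Neural Engine
    "original": {ComputeUnit.CPU_AND_GPU},  # CPU & GPU only
}


def check_compute_unit(version: str, unit: ComputeUnit) -> None:
    """Raise ValueError if `unit` is not listed for the given model version."""
    allowed = SUPPORTED_UNITS.get(version)
    if allowed is None:
        raise ValueError(f"unknown version: {version!r}")
    if unit not in allowed:
        names = sorted(u.name for u in allowed)
        raise ValueError(f"{version} supports {names}, not {unit.name}")


check_compute_unit("split_einsum", ComputeUnit.CPU_AND_NE)  # accepted
check_compute_unit("original", ComputeUnit.CPU_AND_GPU)     # accepted
```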

# Inkpunk Diffusion

A Stable Diffusion model fine-tuned with DreamBooth, loosely inspired by Gorillaz, FLCL, and Yoji Shinkawa. Use **_nvinkpunk_** in your prompts.

# Gradio

We support a [Gradio](https://github.com/gradio-app/gradio) Web UI to run Inkpunk-Diffusion:

[Inkpunk-Diffusion on Hugging Face Spaces](https://huggingface.co/spaces/akhaliq/Inkpunk-Diffusion)

# Sample images

| 3f83914dd0beaba0bffba5de8b05204a |

eunyounglee/mBART_translator_kobart_2 | eunyounglee | bart | 11 | 1 | transformers | 0 | text2text-generation | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,935 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You should probably proofread and complete it, then remove this comment. -->

# mBART_translator_kobart_2