| repo_id | author | model_type | files_per_repo | downloads_30d | library | likes | pipeline | pytorch | tensorflow | jax | license | languages | datasets | co2 | prs_count | prs_open | prs_merged | prs_closed | discussions_count | discussions_open | discussions_closed | tags | has_model_index | has_metadata | has_text | text_length | is_nc | readme | hash |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

philschmid/distilbart-cnn-12-6-samsum | philschmid | bart | 13 | 2,437 | transformers | 6 | summarization | true | false | false | apache-2.0 | ['en'] | ['samsum'] | null | 4 | 0 | 4 | 0 | 0 | 0 | 0 | ['sagemaker', 'bart', 'summarization'] | true | true | true | 2,500 | false |

## `distilbart-cnn-12-6-samsum`

This model was trained using Amazon SageMaker and the new Hugging Face Deep Learning container.

For more information look at:

- [🤗 Transformers Documentation: Amazon SageMaker](https://huggingface.co/transformers/sagemaker.html)

- [Example Notebooks](https://github.com/huggingface/notebooks/tree/master/sagemaker)

- [Amazon SageMaker documentation for Hugging Face](https://docs.aws.amazon.com/sagemaker/latest/dg/hugging-face.html)

- [Python SDK SageMaker documentation for Hugging Face](https://sagemaker.readthedocs.io/en/stable/frameworks/huggingface/index.html)

- [Deep Learning Container](https://github.com/aws/deep-learning-containers/blob/master/available_images.md#huggingface-training-containers)

## Hyperparameters

```json

{

"dataset_name": "samsum",

"do_eval": true,

"do_train": true,

"fp16": true,

"learning_rate": 5e-05,

"model_name_or_path": "sshleifer/distilbart-cnn-12-6",

"num_train_epochs": 3,

"output_dir": "/opt/ml/model",

"per_device_eval_batch_size": 8,

"per_device_train_batch_size": 8,

"seed": 7

}

```
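
In SageMaker script mode, hyperparameters like these reach the training script as command-line arguments. The sketch below illustrates that mapping under the assumption that each key becomes a `--key value` pair; the helper name `to_cli_args` is hypothetical and is not part of the SageMaker SDK.

```python
import json

# Hyperparameters copied from the JSON block in this model card.
HYPERPARAMETERS = json.loads("""
{
  "dataset_name": "samsum",
  "do_eval": true,
  "do_train": true,
  "fp16": true,
  "learning_rate": 5e-05,
  "model_name_or_path": "sshleifer/distilbart-cnn-12-6",
  "num_train_epochs": 3,
  "output_dir": "/opt/ml/model",
  "per_device_eval_batch_size": 8,
  "per_device_train_batch_size": 8,
  "seed": 7
}
""")


def to_cli_args(hyperparameters):
    """Flatten a dict into ['--key', 'value', ...] (hypothetical helper)."""
    args = []
    for key, value in hyperparameters.items():
        args.extend([f"--{key}", str(value)])
    return args


print(" ".join(to_cli_args(HYPERPARAMETERS)))
```

The exact string form of booleans and floats that the training script receives may differ from this sketch.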

## Train results

| key | value |

| --- | ----- |

| epoch | 3.0 |

| init_mem_cpu_alloc_delta | 180338 |

| init_mem_cpu_peaked_delta | 18282 |

| init_mem_gpu_alloc_delta | 1222242816 |

| init_mem_gpu_peaked_delta | 0 |

| train_mem_cpu_alloc_delta | 6971403 |

| train_mem_cpu_peaked_delta | 640733 |

| train_mem_gpu_alloc_delta | 4910897664 |

| train_mem_gpu_peaked_delta | 23331969536 |

| train_runtime | 155.2034 |

| train_samples | 14732 |

| train_samples_per_second | 2.242 |
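
The memory deltas above are easier to read in GiB. The sketch below converts three of them, assuming the raw values are byte counts (the card does not state the unit); `bytes_to_gib` is an illustrative helper.

```python
def bytes_to_gib(n_bytes):
    """Convert a byte count to GiB (1 GiB = 2**30 bytes)."""
    return n_bytes / 2**30


# Values copied from the train results table; the unit (bytes) is an assumption.
train_metrics = {
    "init_mem_gpu_alloc_delta": 1222242816,
    "train_mem_gpu_alloc_delta": 4910897664,
    "train_mem_gpu_peaked_delta": 23331969536,
}

for key, value in train_metrics.items():
    print(f"{key}: {bytes_to_gib(value):.2f} GiB")
```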

## Eval results

| key | value |

| --- | ----- |

| epoch | 3.0 |

| eval_loss | 1.4209576845169067 |

| eval_mem_cpu_alloc_delta | 868003 |

| eval_mem_cpu_peaked_delta | 18250 |

| eval_mem_gpu_alloc_delta | 0 |

| eval_mem_gpu_peaked_delta | 328244736 |

| eval_runtime | 0.6088 |

| eval_samples | 818 |

| eval_samples_per_second | 1343.647 |

## Usage

```python

from transformers import pipeline

summarizer = pipeline("summarization", model="philschmid/distilbart-cnn-12-6-samsum")

conversation = '''Jeff: Can I train a 🤗 Transformers model on Amazon SageMaker?

Philipp: Sure you can use the new Hugging Face Deep Learning Container.

Jeff: ok.

Jeff: and how can I get started?

Jeff: where can I find documentation?

Philipp: ok, ok you can find everything here. https://huggingface.co/blog/the-partnership-amazon-sagemaker-and-hugging-face

'''

summarizer(conversation)

```

| fdc7a657e44d7fd8c9c7792249aff687 |

wanko/distilbert-base-uncased-finetuned-emotion | wanko | distilbert | 16 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['emotion'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,344 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2183

- Accuracy: 0.9285

- F1: 0.9285

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| No log | 1.0 | 250 | 0.3165 | 0.908 | 0.9047 |

| No log | 2.0 | 500 | 0.2183 | 0.9285 | 0.9285 |
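
The step counts in the table follow from the batch size. The sketch below reproduces them, assuming a training split of 16,000 examples (a figure not stated in this card) and a single device, so the effective batch size equals `train_batch_size`.

```python
import math


def steps_per_epoch(num_examples, batch_size):
    """Number of optimizer steps needed to see every example once."""
    return math.ceil(num_examples / batch_size)


ASSUMED_TRAIN_EXAMPLES = 16_000  # assumption, not stated in the card
TRAIN_BATCH_SIZE = 64            # from the hyperparameters list
NUM_EPOCHS = 2                   # from the hyperparameters list

per_epoch = steps_per_epoch(ASSUMED_TRAIN_EXAMPLES, TRAIN_BATCH_SIZE)
print(per_epoch, per_epoch * NUM_EPOCHS)
```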

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

| 16300fcf6d73a93232358872c358f5de |

WillHeld/bert-base-cased-rte | WillHeld | bert | 14 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['en'] | ['glue'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,350 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-rte

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the GLUE RTE dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9753

- Accuracy: 0.6534

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.06

- num_epochs: 10.0
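
A linear scheduler with a warmup ratio can be sketched as follows. This illustrates the general technique and is not the Trainer's own code; the total of 1,560 steps is an assumption inferred from the results table (500 steps at epoch 3.21, extrapolated to 10 epochs).

```python
import math


def linear_warmup_lr(step, total_steps, base_lr, warmup_ratio):
    """Learning rate at `step`: linear ramp-up, then linear decay to zero."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


ASSUMED_TOTAL_STEPS = 1560  # assumption: about 156 steps per epoch over 10 epochs
BASE_LR = 2e-05             # learning_rate from the list above
WARMUP_RATIO = 0.06         # lr_scheduler_warmup_ratio from the list above

for step in (0, 47, 94, 500, 1000, 1560):
    lr = linear_warmup_lr(step, ASSUMED_TOTAL_STEPS, BASE_LR, WARMUP_RATIO)
    print(step, f"{lr:.2e}")
```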

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.4837 | 3.21 | 500 | 0.9753 | 0.6534 |

| 0.0827 | 6.41 | 1000 | 1.6693 | 0.6715 |

| 0.0253 | 9.62 | 1500 | 1.7777 | 0.6643 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.7.1

- Datasets 1.18.3

- Tokenizers 0.11.6

| 7c25d40b76b9317efe09b4e2a7da8707 |

Jeffrover/my_donut-base-sroie | Jeffrover | vision-encoder-decoder | 14 | 2 | transformers | 0 | null | true | false | false | mit | null | ['imagefolder'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 979 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_donut-base-sroie

This model is a fine-tuned version of [naver-clova-ix/donut-base](https://huggingface.co/naver-clova-ix/donut-base) on the imagefolder dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu102

- Datasets 2.6.1

- Tokenizers 0.13.1

| 3a0dba0b61af036ba21070a408c05f12 |

bigmorning/whisper_0020 | bigmorning | whisper | 7 | 6 | transformers | 0 | automatic-speech-recognition | false | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_keras_callback'] | true | true | true | 2,849 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# whisper_0020

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.1698

- Train Accuracy: 0.0335

- Validation Loss: 0.5530

- Validation Accuracy: 0.0314

- Epoch: 19

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 1e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Train Accuracy | Validation Loss | Validation Accuracy | Epoch |

|:----------:|:--------------:|:---------------:|:-------------------:|:-----:|

| 5.0856 | 0.0116 | 4.4440 | 0.0123 | 0 |

| 4.3149 | 0.0131 | 4.0521 | 0.0142 | 1 |

| 3.9260 | 0.0146 | 3.7264 | 0.0153 | 2 |

| 3.5418 | 0.0160 | 3.3026 | 0.0174 | 3 |

| 2.7510 | 0.0198 | 2.0157 | 0.0241 | 4 |

| 1.6782 | 0.0250 | 1.3567 | 0.0273 | 5 |

| 1.1705 | 0.0274 | 1.0678 | 0.0286 | 6 |

| 0.9126 | 0.0287 | 0.9152 | 0.0294 | 7 |

| 0.7514 | 0.0296 | 0.8057 | 0.0299 | 8 |

| 0.6371 | 0.0302 | 0.7409 | 0.0302 | 9 |

| 0.5498 | 0.0307 | 0.6854 | 0.0306 | 10 |

| 0.4804 | 0.0312 | 0.6518 | 0.0307 | 11 |

| 0.4214 | 0.0316 | 0.6200 | 0.0310 | 12 |

| 0.3713 | 0.0319 | 0.5947 | 0.0311 | 13 |

| 0.3281 | 0.0322 | 0.5841 | 0.0311 | 14 |

| 0.2891 | 0.0325 | 0.5700 | 0.0313 | 15 |

| 0.2550 | 0.0328 | 0.5614 | 0.0313 | 16 |

| 0.2237 | 0.0331 | 0.5572 | 0.0313 | 17 |

| 0.1959 | 0.0333 | 0.5563 | 0.0314 | 18 |

| 0.1698 | 0.0335 | 0.5530 | 0.0314 | 19 |

### Framework versions

- Transformers 4.25.0.dev0

- TensorFlow 2.9.2

- Tokenizers 0.13.2

| d244699020fd6d8c554fe98070277d63 |

nvidia/nemo-megatron-gpt-1.3B | nvidia | null | 3 | 185 | nemo | 14 | text2text-generation | true | false | false | cc-by-4.0 | ['en'] | ['the_pile'] | null | 1 | 0 | 1 | 0 | 0 | 0 | 0 | ['text2text-generation', 'pytorch', 'causal-lm'] | false | true | true | 4,240 | false | # NeMo Megatron-GPT 1.3B


## Model Description

Megatron-GPT 1.3B is a transformer-based language model. GPT refers to a class of transformer decoder-only models similar to GPT-2 and 3 while 1.3B refers to the total trainable parameter count (1.3 Billion) [1, 2]. It has Tensor Parallelism (TP) of 1, Pipeline Parallelism (PP) of 1 and should fit on a single NVIDIA GPU.

This model was trained with [NeMo Megatron](https://docs.nvidia.com/deeplearning/nemo/user-guide/docs/en/stable/nlp/nemo_megatron/intro.html).

## Getting started

### Step 1: Install NeMo and dependencies

You will need to install NVIDIA Apex and NeMo.

```

git clone https://github.com/ericharper/apex.git

cd apex

git checkout nm_v1.11.0

pip install -v --disable-pip-version-check --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" --global-option="--fast_layer_norm" --global-option="--distributed_adam" --global-option="--deprecated_fused_adam" ./

```

```

pip install nemo_toolkit['nlp']==1.11.0

```

Alternatively, you can use the NeMo Megatron training Docker container, which has all dependencies pre-installed.

### Step 2: Launch eval server

**Note.** The model has been trained with Tensor Parallelism (TP) of 1 and Pipeline Parallelism (PP) of 1 and should fit on a single NVIDIA GPU.

```

git clone https://github.com/NVIDIA/NeMo.git

cd NeMo/examples/nlp/language_modeling

git checkout v1.11.0

python megatron_gpt_eval.py gpt_model_file=nemo_gpt1.3B_fp16.nemo server=True tensor_model_parallel_size=1 trainer.devices=1

```

### Step 3: Send prompts to your model!

```python

import json

import requests

port_num = 5555

headers = {"Content-Type": "application/json"}

def request_data(data):
    resp = requests.put('http://localhost:{}/generate'.format(port_num),
                        data=json.dumps(data),
                        headers=headers)
    sentences = resp.json()['sentences']
    return sentences

data = {

"sentences": ["Tell me an interesting fact about space travel."]*1,

"tokens_to_generate": 50,

"temperature": 1.0,

"add_BOS": True,

"top_k": 0,

"top_p": 0.9,

"greedy": False,

"all_probs": False,

"repetition_penalty": 1.2,

"min_tokens_to_generate": 2,

}

sentences = request_data(data)

print(sentences)

```

## Training Data

The model was trained on ["The Pile" dataset prepared by EleutherAI](https://pile.eleuther.ai/). [4]

## Evaluation results

*Zero-shot performance.* Evaluated using [LM Evaluation Test Suite from AI21](https://github.com/AI21Labs/lm-evaluation)

| ARC-Challenge | ARC-Easy | RACE-middle | RACE-high | Winogrande | RTE | BoolQA | HellaSwag | PiQA |

| ------------- | -------- | ----------- | --------- | ---------- | --- | ------ | --------- | ---- |

| 0.3012 | 0.4596 | 0.459 | 0.3797 | 0.5343 | 0.5451 | 0.5979 | 0.4443 | 0.6834 |

## Limitations

The model was trained on data originally crawled from the Internet. This data contains toxic language and societal biases, so the model may amplify those biases and return toxic responses, especially when given toxic prompts.

## References

[1] [Improving Language Understanding by Generative Pre-Training](https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/language-unsupervised/language_understanding_paper.pdf)

[2] [Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism](https://arxiv.org/pdf/1909.08053.pdf)

[3] [NVIDIA NeMo Toolkit](https://github.com/NVIDIA/NeMo)

[4] [The Pile: An 800GB Dataset of Diverse Text for Language Modeling](https://arxiv.org/abs/2101.00027)

## Licence

Use of this model is covered by the [CC-BY-4.0](https://creativecommons.org/licenses/by/4.0/) license. By downloading the public release version of the model, you accept the terms and conditions of the [CC-BY-4.0](https://creativecommons.org/licenses/by/4.0/) license.

| 54189e0c90b45540c3345aa4f93de9bd |

rkn/distilbert-base-uncased-finetuned-emotion | rkn | distilbert | 12 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,342 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2124

- Accuracy: 0.928

- F1: 0.9279

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| No log | 1.0 | 250 | 0.2991 | 0.911 | 0.9091 |

| No log | 2.0 | 500 | 0.2124 | 0.928 | 0.9279 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.7.1

- Tokenizers 0.13.2

| 3995792b6dda47019e5f7a507699bfd4 |

VishwanathanR/resnet-50 | VishwanathanR | resnet | 5 | 4 | transformers | 0 | image-classification | false | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_keras_callback'] | true | true | true | 834 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# resnet-50

This model is a fine-tuned version of [microsoft/resnet-50](https://huggingface.co/microsoft/resnet-50) on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.25.0.dev0

- TensorFlow 2.6.2

- Datasets 2.7.1

- Tokenizers 0.13.2

| 0f1445d62c905912ef94c6dbdd9715ec |

innocent-charles/Swahili-question-answer-latest-cased | innocent-charles | bert | 12 | 12 | transformers | 2 | question-answering | true | false | false | cc-by-4.0 | ['sw'] | ['kenyacorpus_v2'] | null | 1 | 0 | 1 | 0 | 0 | 0 | 0 | [] | true | true | true | 3,609 | false |

# SWAHILI QUESTION - ANSWER MODEL

This is the [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) model, fine-tuned on the [KenyaCorpus](https://github.com/Neurotech-HQ/Swahili-QA-dataset) dataset. It was trained on question-answer pairs, including unanswerable questions, for question answering in Swahili.

Question answering (QA) is a discipline within information retrieval and NLP concerned with building systems that, given a question in natural language, extract relevant information from the provided data and present it as a natural-language answer.

## Overview

**Language model used:** bert-base-multilingual-cased

**Language:** Kiswahili

**Downstream-task:** Extractive Swahili QA

**Training data:** KenyaCorpus

**Eval data:** KenyaCorpus

**Code:** See [an example QA pipeline on Haystack](https://blog.neurotech.africa/building-swahili-question-and-answering-with-haystack/)

**Infrastructure**: AWS NVIDIA A100 Tensor Core GPU

## Hyperparameters

```

batch_size = 16

n_epochs = 10

base_LM_model = "bert-base-multilingual-cased"

max_seq_len = 386

learning_rate = 3e-5

lr_schedule = LinearWarmup

warmup_proportion = 0.2

doc_stride=128

max_query_length=64

```
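
The `max_seq_len`, `max_query_length`, and `doc_stride` settings govern how a long context is split into overlapping passages. The sketch below illustrates the idea under three assumptions that this card does not confirm: `doc_stride` is the distance between passage starts, the question occupies the full 64 tokens, and three special tokens are reserved per sequence. The function name `split_into_windows` is hypothetical.

```python
def split_into_windows(num_context_tokens, window_len, stride):
    """Return (start, end) token spans that cover the whole context."""
    spans = []
    start = 0
    while True:
        end = min(start + window_len, num_context_tokens)
        spans.append((start, end))
        if end == num_context_tokens:
            break
        start += stride
    return spans


MAX_SEQ_LEN = 386       # from the hyperparameters above
MAX_QUERY_LENGTH = 64   # from the hyperparameters above
DOC_STRIDE = 128        # from the hyperparameters above
SPECIAL_TOKENS = 3      # assumption: [CLS] question [SEP] passage [SEP]

# Tokens left for the passage once the question and special tokens are placed.
window_len = MAX_SEQ_LEN - MAX_QUERY_LENGTH - SPECIAL_TOKENS

# A hypothetical 600-token context is covered by overlapping passages.
print(split_into_windows(600, window_len, DOC_STRIDE))
```

Overlapping passages mean an answer that straddles one passage boundary can still appear whole in a neighbouring passage.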

## Usage

### In Haystack

Haystack is an NLP framework by deepset. You can use this model in a Haystack pipeline to do question answering at scale (over many documents). To load the model in [Haystack](https://github.com/deepset-ai/haystack/):

```python

# Assumes Haystack 1.x, where both readers are exposed by haystack.nodes
from haystack.nodes import FARMReader, TransformersReader

reader = FARMReader(model_name_or_path="innocent-charles/Swahili-question-answer-latest-cased")

# or

reader = TransformersReader(model_name_or_path="innocent-charles/Swahili-question-answer-latest-cased", tokenizer="innocent-charles/Swahili-question-answer-latest-cased")

```

For a complete example of ``Swahili-question-answer-latest-cased`` being used for Swahili Question Answering, check out the [Tutorials in Haystack Documentation](https://haystack.deepset.ai)

### In Transformers

```python

from transformers import AutoModelForQuestionAnswering, AutoTokenizer, pipeline

model_name = "innocent-charles/Swahili-question-answer-latest-cased"

# a) Get predictions

nlp = pipeline('question-answering', model=model_name, tokenizer=model_name)

QA_input = {

'question': 'Asubuhi ilitupata pambajioni pa hospitali gani?',

'context': 'Asubuhi hiyo ilitupata pambajioni pa hospitali ya Uguzwa.'

}

res = nlp(QA_input)

# b) Load model & tokenizer

model = AutoModelForQuestionAnswering.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

```

## Performance

```

"exact": 51.87029394424324,

"f1": 63.91251169582613,

"total": 445,

"HasAns_exact": 50.93522267206478,

"HasAns_f1": 62.02838248389763,

"HasAns_total": 386,

"NoAns_exact": 49.79983179142137,

"NoAns_f1": 60.79983179142137,

"NoAns_total": 59

```

## Special consideration

The project is ongoing, and the model is updated as it is trained on more data. Pull requests that improve the model's performance are welcome.

## Author

**Innocent Charles:** contact@innocentcharles.com

## About Me

<P>

I build good things using artificial intelligence, data, and analytics. I have over 3 years of experience as an applied AI engineer and data scientist, a strong background in software engineering, and a passion for data and business.

</P>

[Linkedin](https://www.linkedin.com/in/innocent-charles/) | [GitHub](https://github.com/innocent-charles) | [Website](innocentcharles.com)

| cdd4bb975564a2fbcc846dbc1f9c84c8 |

novacygni/ddpm-butterflies-128 | novacygni | null | 13 | 0 | diffusers | 0 | null | false | false | false | apache-2.0 | ['en'] | ['huggan/smithsonian_butterflies_subset'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,231 | false |

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None


- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/novacygni/ddpm-butterflies-128/tensorboard?#scalars)

| e9a036a2857f94e81be39d6d27d376f3 |

DOOGLAK/Article_50v4_NER_Model_3Epochs_AUGMENTED | DOOGLAK | bert | 13 | 5 | transformers | 0 | token-classification | true | false | false | apache-2.0 | null | ['article50v4_wikigold_split'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,557 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Article_50v4_NER_Model_3Epochs_AUGMENTED

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the article50v4_wikigold_split dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4148

- Precision: 0.2442

- Recall: 0.1804

- F1: 0.2075

- Accuracy: 0.8392

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 26 | 0.5371 | 0.2683 | 0.0632 | 0.1023 | 0.7953 |

| No log | 2.0 | 52 | 0.4314 | 0.2259 | 0.1575 | 0.1856 | 0.8325 |

| No log | 3.0 | 78 | 0.4148 | 0.2442 | 0.1804 | 0.2075 | 0.8392 |
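
The F1 column is the harmonic mean of precision and recall, so it can be recomputed from the other two columns as a consistency check. A minimal sketch, using the rounded values from the table:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the three table rows above.
rows = [(0.2683, 0.0632), (0.2259, 0.1575), (0.2442, 0.1804)]

for precision, recall in rows:
    print(round(f1_score(precision, recall), 4))
```

Because the inputs are already rounded to four decimals, the recomputed values can differ from the reported ones in the last digit.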

### Framework versions

- Transformers 4.17.0

- Pytorch 1.11.0+cu113

- Datasets 2.4.0

- Tokenizers 0.11.6

| b2f672d8e043358a9a287628b2333507 |

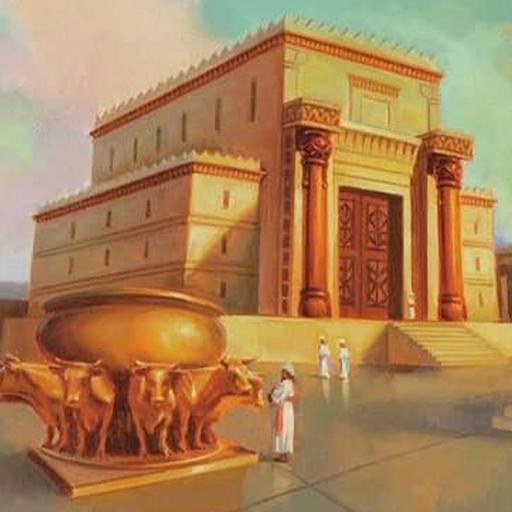

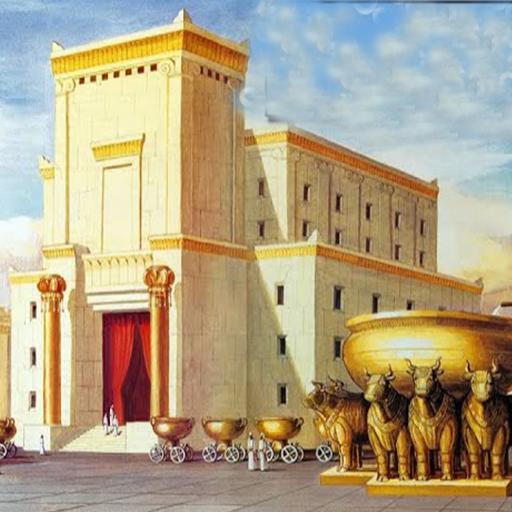

sd-concepts-library/solomon-temple | sd-concepts-library | null | 10 | 0 | null | 0 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,186 | false | ### solomon temple on Stable Diffusion

This is the `<solomon-temple>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

| aaadfb6a07440d3b9a1252df86dcc28d |

amitness/roberta-base-ne | amitness | roberta | 8 | 3 | transformers | 1 | fill-mask | true | false | true | mit | ['ne'] | ['cc100'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['roberta', 'nepali-laguage-model'] | false | true | true | 527 | false |

# nepbert

## Model description

RoBERTa trained from scratch on the Nepali CC-100 dataset with 12 million sentences.

## Intended uses & limitations

#### How to use

```python

from transformers import pipeline

pipe = pipeline(

"fill-mask",

model="amitness/nepbert",

tokenizer="amitness/nepbert"

)

print(pipe(u"तिमीलाई कस्तो <mask>?"))

```

## Training data

The data was taken from the Nepali-language subset of the CC-100 dataset.

## Training procedure

The model was trained on Google Colab using `1x Tesla V100`. | 940cf5aa9e5999943c811a39e7e5b2c8 |

SreyanG-NVIDIA/bert-base-cased-finetuned-ner | SreyanG-NVIDIA | bert | 13 | 6 | transformers | 0 | token-classification | true | false | false | apache-2.0 | null | ['conll2003'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,531 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0650

- Precision: 0.9325

- Recall: 0.9375

- F1: 0.9350

- Accuracy: 0.9840

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2346 | 1.0 | 878 | 0.0722 | 0.9168 | 0.9217 | 0.9192 | 0.9795 |

| 0.0483 | 2.0 | 1756 | 0.0618 | 0.9299 | 0.9370 | 0.9335 | 0.9837 |

| 0.0262 | 3.0 | 2634 | 0.0650 | 0.9325 | 0.9375 | 0.9350 | 0.9840 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu102

- Datasets 2.1.0

- Tokenizers 0.12.1

| 591f1a49eb3256683ae5977480f5be4c |

Habana/stable-diffusion | Habana | null | 3 | 2,462 | null | 1 | null | false | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 2,503 | false |

[Optimum Habana](https://github.com/huggingface/optimum-habana) is the interface between the Hugging Face Transformers and Diffusers libraries and Habana's Gaudi processor (HPU).

It provides a set of tools enabling easy and fast model loading, training and inference on single- and multi-HPU settings for different downstream tasks.

Learn more about how to take advantage of the power of Habana HPUs to train and deploy Transformers and Diffusers models at [hf.co/hardware/habana](https://huggingface.co/hardware/habana).

## Stable Diffusion HPU configuration

This model only contains the `GaudiConfig` file for running **Stable Diffusion 1** (e.g. [CompVis/stable-diffusion-v1-4](https://huggingface.co/CompVis/stable-diffusion-v1-4)) or **Stable Diffusion 2** (e.g. [stabilityai/stable-diffusion-2](https://huggingface.co/stabilityai/stable-diffusion-2)) on Habana's Gaudi processors (HPU).

**This model contains no model weights, only a GaudiConfig.**

The `GaudiConfig` lets you specify:

- `use_habana_mixed_precision`: whether to use Habana Mixed Precision (HMP)

- `hmp_opt_level`: optimization level for HMP, see [here](https://docs.habana.ai/en/latest/PyTorch/PyTorch_Mixed_Precision/PT_Mixed_Precision.html#configuration-options) for a detailed explanation

- `hmp_bf16_ops`: list of operators that should run in bf16

- `hmp_fp32_ops`: list of operators that should run in fp32

- `hmp_is_verbose`: verbosity
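
For illustration, a configuration with these five fields could be laid out as below. All values are hypothetical placeholders, including the operator names and the `"O1"` level, and the file shipped in this repository may differ.

```python
import json

# Illustrative values only; they are assumptions, not the contents of this repository.
gaudi_config = {
    "use_habana_mixed_precision": True,  # turn HMP on or off
    "hmp_opt_level": "O1",               # assumed optimization level
    "hmp_bf16_ops": ["add", "matmul"],   # hypothetical operators to run in bf16
    "hmp_fp32_ops": ["softmax"],         # hypothetical operators to keep in fp32
    "hmp_is_verbose": False,             # verbosity
}

print(json.dumps(gaudi_config, indent=2))
```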

## Usage

The `GaudiStableDiffusionPipeline` (`GaudiDDIMScheduler`) is instantiated the same way as the `StableDiffusionPipeline` (`DDIMScheduler`) in the 🤗 Diffusers library.

The only difference is that there are a few additional arguments specific to HPUs.

Here is an example with one prompt:

```python

from optimum.habana import GaudiConfig

from optimum.habana.diffusers import GaudiDDIMScheduler, GaudiStableDiffusionPipeline

model_name = "stabilityai/stable-diffusion-2"

scheduler = GaudiDDIMScheduler.from_pretrained(model_name, subfolder="scheduler")

pipeline = GaudiStableDiffusionPipeline.from_pretrained(

model_name,

scheduler=scheduler,

use_habana=True,

use_hpu_graphs=True,

gaudi_config="Habana/stable-diffusion",

)

outputs = pipeline(

["An image of a squirrel in Picasso style"],

num_images_per_prompt=16,

batch_size=4,

)

```

Check out the [documentation](https://huggingface.co/docs/optimum/habana/usage_guides/stable_diffusion) and [this example](https://github.com/huggingface/optimum-habana/tree/main/examples/stable-diffusion) for more advanced usage.

| 413e545dc0e2df279da455945618aa84 |

KoenBronstring/finetuning-sentiment-model-3000-samples | KoenBronstring | distilbert | 18 | 11 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['imdb'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,053 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3149

- Accuracy: 0.8733

- F1: 0.8758

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cpu

- Datasets 2.1.0

- Tokenizers 0.12.1

| 897b06137fda2d710a176db43b0fdcb7 |

Ayham/distilgpt2_summarization_cnndm | Ayham | gpt2 | 8 | 63 | transformers | 0 | text-generation | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,215 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilgpt2_summarization_cnndm

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 3.0608

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 1

- mixed_precision_training: Native AMP
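
The `linear` scheduler with 1000 warmup steps listed above can be sketched in plain Python. This is a minimal illustration, not the Trainer's own code: it assumes the usual shape (linear ramp from zero during warmup, then linear decay to zero) and takes the total of 71779 steps from the results table of this card. The function name `linear_warmup_lr` is hypothetical.

```python
def linear_warmup_lr(step, base_lr=5e-05, warmup_steps=1000, total_steps=71779):
    """Learning rate at `step`: linear warmup, then linear decay to zero.

    Defaults mirror the hyperparameters in this card; `total_steps` is an
    assumption taken from the single epoch reported in the results table.
    """
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr over the warmup phase
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr (at the end of warmup) to 0 (at total_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    for s in (0, 500, 1000, 36000, 71779):
        print(s, linear_warmup_lr(s))
```

Under these assumptions the rate peaks at the configured `learning_rate` exactly when warmup ends and reaches zero at the last training step.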

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 3.0416 | 1.0 | 71779 | 3.0608 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

| 33de8f35b3f1a3d16b133d45abe742ef |

bvrtek/KusaMix | bvrtek | null | 5 | 2 | diffusers | 6 | text-to-image | false | false | false | creativeml-openrail-m | ['en'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['stable-diffusion', 'stable-diffusion-diffusers', 'text-to-image', 'diffusers', 'safetensors'] | false | true | true | 1,466 | false |

# 草ミックス

Welcome to KusaMix - a latent diffusion model for weebs. This model is intended to produce high-quality, highly detailed anime style with just a few prompts. Like other anime-style Stable Diffusion models, it also supports danbooru tags to generate images.

e.g. **_1girl, white hair, golden eyes, beautiful eyes, detail, flower meadow, cumulonimbus clouds, lighting, detailed sky, garden_**

Non-cherry-picked example generated from the prompt above:

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce or share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate. You are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license) | 9e551d38faf22873963d60aebf635fd2 |

Ulto/avengers2 | Ulto | gpt2 | 8 | 6 | transformers | 0 | text-generation | true | false | false | apache-2.0 | null | [] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | false | true | true | 1,215 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# avengers2

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 4.0131

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 56 | 3.9588 |

| No log | 2.0 | 112 | 3.9996 |

| No log | 3.0 | 168 | 4.0131 |

### Framework versions

- Transformers 4.10.0

- Pytorch 1.9.0

- Datasets 1.2.1

- Tokenizers 0.10.1

| dad1afaad8364e4c290899176094e638 |

robkayinto/xlm-roberta-base-finetuned-panx-all | robkayinto | xlm-roberta | 10 | 1 | transformers | 0 | token-classification | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,319 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-all

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1739

- F1: 0.8535

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.3067 | 1.0 | 835 | 0.1840 | 0.8085 |

| 0.1566 | 2.0 | 1670 | 0.1727 | 0.8447 |

| 0.1013 | 3.0 | 2505 | 0.1739 | 0.8535 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.2+cu102

- Datasets 1.16.1

- Tokenizers 0.10.3

| 2ba8d2b40b162afd053facf8543c37fb |

Applemoon/bert-finetuned-ner | Applemoon | bert | 10 | 15 | transformers | 0 | token-classification | true | false | false | apache-2.0 | null | ['conll2003'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,512 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0399

- Precision: 0.9513

- Recall: 0.9559

- F1: 0.9536

- Accuracy: 0.9922

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0548 | 1.0 | 1756 | 0.0438 | 0.9368 | 0.9411 | 0.9390 | 0.9900 |

| 0.021 | 2.0 | 3512 | 0.0395 | 0.9446 | 0.9519 | 0.9482 | 0.9914 |

| 0.0108 | 3.0 | 5268 | 0.0399 | 0.9513 | 0.9559 | 0.9536 | 0.9922 |
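
The F1 column is the harmonic mean of precision and recall. The sketch below recomputes it for the final epoch as a sanity check. The helper name `f1_score` is hypothetical, and because the table only shows rounded precision and recall, the recomputed value may differ from the reported one in the last digit for some rows.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Final epoch of the table above: precision 0.9513, recall 0.9559
    print(round(f1_score(0.9513, 0.9559), 4))  # → 0.9536
```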

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.1

- Datasets 2.4.0

- Tokenizers 0.12.1

| 26457e9696f376b19ea4511ac767bb9d |

saattrupdan/wav2vec2-xls-r-300m-ftspeech | saattrupdan | wav2vec2 | 14 | 767 | transformers | 0 | automatic-speech-recognition | true | false | false | other | ['da'] | ['ftspeech'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | true | true | true | 884 | false |

# XLS-R-300m-FTSpeech

## Model description

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the [FTSpeech dataset](https://ftspeech.github.io/), a dataset of 1,800 hours of transcribed speeches from the Danish parliament.

## Performance

The model achieves the following WER scores (lower is better):

| **Dataset** | **WER without LM** | **WER with 5-gram LM** |

| :---: | ---: | ---: |

| [Danish part of Common Voice 8.0](https://huggingface.co/datasets/mozilla-foundation/common_voice_8_0/viewer/da/train) | 20.48 | 17.91 |

| [Alvenir test set](https://huggingface.co/datasets/Alvenir/alvenir_asr_da_eval) | 15.46 | 13.84 |
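
For readers unfamiliar with the metric, word error rate is the word-level edit distance between a reference transcript and the model output, divided by the number of reference words. The following is a minimal sketch in plain Python, not the evaluation code used for this card. The function name `word_error_rate` is hypothetical, and text normalisation (casing, punctuation) is left out. The table above appears to report the value as a percentage, so multiply by 100 to compare.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the processed reference prefix
    # and the first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One of five reference words is missing from the hypothesis
    print(word_error_rate("det er en god dag", "det er god dag"))  # → 0.2
```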

## License

The use of this model needs to adhere to [this license from the Danish Parliament](https://www.ft.dk/da/aktuelt/tv-fra-folketinget/deling-og-rettigheder). | b11eac1e2d6bd242293f1a09fc2e46b6 |

jonatasgrosman/exp_w2v2t_de_wav2vec2_s982 | jonatasgrosman | wav2vec2 | 10 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['de'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'de'] | false | true | true | 456 | false |

# exp_w2v2t_de_wav2vec2_s982

Fine-tuned [facebook/wav2vec2-large-lv60](https://huggingface.co/facebook/wav2vec2-large-lv60) for speech recognition using the train split of [Common Voice 7.0 (de)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
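
The 16kHz requirement can be checked before a file is passed to the model. The sketch below uses only Python's standard `wave` module and therefore assumes uncompressed WAV input. The helper names (`wav_sample_rate`, `is_16khz`, `write_silence`, `demo`) are hypothetical and not part of HuggingSound or Transformers. Resampling itself is out of scope here.

```python
import os
import tempfile
import wave

EXPECTED_RATE = 16_000  # 16 kHz, as required by the model card


def wav_sample_rate(path):
    """Return the sampling rate (in Hz) stored in a WAV file header."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def is_16khz(path):
    """True if the WAV file at `path` is sampled at 16 kHz."""
    return wav_sample_rate(path) == EXPECTED_RATE


def write_silence(path, rate, seconds=0.1):
    """Write a short mono 16-bit silent WAV file (only used by the demo)."""
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(rate)
        wav_file.writeframes(b"\x00\x00" * int(rate * seconds))


def demo():
    """Create one 16 kHz and one 44.1 kHz file and check both."""
    with tempfile.TemporaryDirectory() as tmp:
        ok_path = os.path.join(tmp, "ok.wav")
        bad_path = os.path.join(tmp, "bad.wav")
        write_silence(ok_path, 16_000)
        write_silence(bad_path, 44_100)
        return is_16khz(ok_path), is_16khz(bad_path)


if __name__ == "__main__":
    print(demo())  # → (True, False)
```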

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 67d36ffbe4420b89c8949a9ce4d75f68 |

ReKarma/ddpm-ema-flowers-64 | ReKarma | null | 11 | 3 | diffusers | 0 | null | false | false | false | apache-2.0 | ['en'] | ['huggan/flowers-102-categories'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,225 | false |

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-ema-flowers-64

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library on the `huggan/flowers-102-categories` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(0.95, 0.999), weight_decay=1e-06 and epsilon=1e-08

- lr_scheduler: cosine

- lr_warmup_steps: 500

- ema_inv_gamma: 1.0

- ema_power: 0.75

- ema_max_decay: 0.9999

- mixed_precision: bf16
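
The three EMA values listed above (1.0, 0.75 and 0.9999) can be read as an inverse gamma, a power and a maximum decay. The sketch below shows one commonly used warmup form for the EMA decay under that reading. It is an assumption about how the values are used, not code taken from the training script, and the names `ema_decay` and `ema_update` are hypothetical.

```python
def ema_decay(step, inv_gamma=1.0, power=0.75, max_decay=0.9999):
    """EMA decay factor at `step`, warming up from 0 towards `max_decay`.

    Assumed form: 1 - (1 + step / inv_gamma) ** -power, clamped to
    the range [0, max_decay].
    """
    if step <= 0:
        return 0.0
    value = 1.0 - (1.0 + step / inv_gamma) ** -power
    return min(max_decay, max(0.0, value))


def ema_update(ema_value, new_value, decay):
    """One exponential-moving-average update of a single parameter."""
    return decay * ema_value + (1.0 - decay) * new_value


if __name__ == "__main__":
    for s in (0, 1, 10, 1_000, 1_000_000_000):
        print(s, ema_decay(s))
```

With this form the averaged weights follow the raw weights closely in early steps, and the average becomes progressively smoother until the decay is capped at the maximum value.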

### Training results

📈 [TensorBoard logs](https://huggingface.co/ReKarma/ddpm-ema-flowers-64/tensorboard?#scalars)

| 7a454fd24320938fb671d8e8a3b38fb8 |

AnnaR/literature_summarizer | AnnaR | bart | 9 | 4 | transformers | 0 | text2text-generation | false | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_keras_callback'] | true | true | true | 1,778 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# AnnaR/literature_summarizer

This model is a fine-tuned version of [sshleifer/distilbart-xsum-1-1](https://huggingface.co/sshleifer/distilbart-xsum-1-1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 3.2180

- Validation Loss: 4.7198

- Epoch: 10

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 5.6e-05, 'decay_steps': 5300, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.1}

- training_precision: float32
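
The `PolynomialDecay` entry in the optimizer configuration describes a learning rate that falls from 5.6e-05 to 0.0 over 5300 steps. With `power` 1.0 and `cycle` False, that is a straight line. Here is a minimal plain-Python sketch of the schedule. The function name `polynomial_decay_lr` is hypothetical, and the weight-decay part of `AdamWeightDecay` is not modelled.

```python
def polynomial_decay_lr(step, initial_lr=5.6e-05, decay_steps=5300,
                        end_lr=0.0, power=1.0):
    """Polynomial decay without cycling; defaults mirror the card's config."""
    # Without cycling, the rate stays at end_lr once decay_steps is reached
    step = min(step, decay_steps)
    fraction_left = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * fraction_left ** power + end_lr


if __name__ == "__main__":
    for s in (0, 2650, 5300, 10_000):
        print(s, polynomial_decay_lr(s))
```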

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 5.6694 | 5.0234 | 0 |

| 4.9191 | 4.8161 | 1 |

| 4.5770 | 4.7170 | 2 |

| 4.3268 | 4.6571 | 3 |

| 4.1073 | 4.6296 | 4 |

| 3.9225 | 4.6279 | 5 |

| 3.7564 | 4.6288 | 6 |

| 3.5989 | 4.6731 | 7 |

| 3.4611 | 4.6767 | 8 |

| 3.3356 | 4.6934 | 9 |

| 3.2180 | 4.7198 | 10 |

### Framework versions

- Transformers 4.17.0

- TensorFlow 2.8.0

- Datasets 2.0.0

- Tokenizers 0.11.6

| f121b756af74b1123d33d48c42572aa6 |

HusseinHE/ramy | HusseinHE | null | 29 | 2 | diffusers | 0 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 2 | 2 | 0 | 0 | 0 | 0 | 0 | ['text-to-image'] | false | true | true | 486 | false |

### ramy Dreambooth model trained by HusseinHE with [Hugging Face Dreambooth Training Space](https://huggingface.co/spaces/multimodalart/dreambooth-training) with the v1-5 base model

You can run your new concept via the `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb). Don't forget to use the concept prompts!

Sample pictures of:

e3t (use that in your prompt)

| 6a06d78b6d1bafef7b9e9258d7cb3196 |

jonatasgrosman/exp_w2v2r_de_vp-100k_accent_germany-8_austria-2_s953 | jonatasgrosman | wav2vec2 | 10 | 4 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['de'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'de'] | false | true | true | 502 | false |

# exp_w2v2r_de_vp-100k_accent_germany-8_austria-2_s953

Fine-tuned [facebook/wav2vec2-large-100k-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-100k-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (de)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| bdd88a7bdcb8331b66353bd8e794b0e2 |

sd-concepts-library/willy-hd | sd-concepts-library | null | 10 | 0 | null | 0 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,156 | false |

### Willy-HD on Stable Diffusion

This is the `<willy_character>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

| 2c871dc55a91d1b624a49ebc2cfc2065 |

jonatasgrosman/exp_w2v2r_de_xls-r_age_teens-0_sixties-10_s288 | jonatasgrosman | wav2vec2 | 10 | 0 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['de'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'de'] | false | true | true | 476 | false |

# exp_w2v2r_de_xls-r_age_teens-0_sixties-10_s288

Fine-tuned [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) for speech recognition using the train split of [Common Voice 7.0 (de)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 2f3512ec2c6864b4f7f45c3fe448a652 |

jonatasgrosman/exp_w2v2r_fr_vp-100k_age_teens-10_sixties-0_s732 | jonatasgrosman | wav2vec2 | 10 | 0 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['fr'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'fr'] | false | true | true | 498 | false |

# exp_w2v2r_fr_vp-100k_age_teens-10_sixties-0_s732

Fine-tuned [facebook/wav2vec2-large-100k-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-100k-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (fr)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| ed9d5aeecb632b431f65a822fe77a11f |

Helsinki-NLP/opus-mt-fr-ha | Helsinki-NLP | marian | 10 | 28 | transformers | 0 | translation | true | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 768 | false |

### opus-mt-fr-ha

* source languages: fr

* target languages: ha

* OPUS readme: [fr-ha](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/fr-ha/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-09.zip](https://object.pouta.csc.fi/OPUS-MT-models/fr-ha/opus-2020-01-09.zip)

* test set translations: [opus-2020-01-09.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/fr-ha/opus-2020-01-09.test.txt)

* test set scores: [opus-2020-01-09.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/fr-ha/opus-2020-01-09.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.fr.ha | 24.4 | 0.447 |

| bd57d1773fb4caa5ae47213f1751ed56 |

ghatgetanuj/microsoft-deberta-v3-large_cls_CR | ghatgetanuj | deberta-v2 | 13 | 1 | transformers | 0 | text-classification | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,544 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# microsoft-deberta-v3-large_cls_CR

This model is a fine-tuned version of [microsoft/deberta-v3-large](https://huggingface.co/microsoft/deberta-v3-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3338

- Accuracy: 0.9388

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.2

- num_epochs: 5

- mixed_precision_training: Native AMP
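
The `cosine` scheduler with a warmup ratio of 0.2 can be sketched as follows. This is a hedged illustration, not the Trainer's implementation. It assumes a linear warmup over the first 20% of training (0.2 × 1065 = 213 steps, using the step count from the results table of this card) followed by half a cosine period down to zero. The function name `cosine_warmup_lr` is hypothetical.

```python
import math


def cosine_warmup_lr(step, base_lr=4e-05, warmup_steps=213, total_steps=1065):
    """Learning rate at `step`: linear warmup, then cosine decay to zero."""
    if step < warmup_steps:
        # Linear ramp from 0 to base_lr during warmup
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the post-warmup phase completed, from 0.0 to 1.0
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for s in (0, 213, 639, 1065):
        print(s, cosine_warmup_lr(s))
```

Under these assumptions the peak rate is reached at the end of the first epoch (step 213), and the rate is roughly half the peak at step 639.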

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 213 | 0.3517 | 0.9043 |

| No log | 2.0 | 426 | 0.2648 | 0.9229 |

| 0.3074 | 3.0 | 639 | 0.3421 | 0.9388 |

| 0.3074 | 4.0 | 852 | 0.3039 | 0.9388 |

| 0.0844 | 5.0 | 1065 | 0.3338 | 0.9388 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

| e5d32233a740a9ae996dfb97b576bb60 |

AlexN/xls-r-300m-fr-0 | AlexN | wav2vec2 | 38 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['fr'] | ['mozilla-foundation/common_voice_8_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'mozilla-foundation/common_voice_8_0', 'generated_from_trainer', 'robust-speech-event', 'hf-asr-leaderboard'] | true | true | true | 2,900 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xls-r-300m-fr-0

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the MOZILLA-FOUNDATION/COMMON_VOICE_8_0 - FR dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2388

- Wer: 0.3681

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1500

- num_epochs: 2.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 4.3748 | 0.07 | 500 | 3.8784 | 1.0 |

| 2.8068 | 0.14 | 1000 | 2.8289 | 0.9826 |

| 1.6698 | 0.22 | 1500 | 0.8811 | 0.7127 |

| 1.3488 | 0.29 | 2000 | 0.5166 | 0.5369 |

| 1.2239 | 0.36 | 2500 | 0.4105 | 0.4741 |

| 1.1537 | 0.43 | 3000 | 0.3585 | 0.4448 |

| 1.1184 | 0.51 | 3500 | 0.3336 | 0.4292 |

| 1.0968 | 0.58 | 4000 | 0.3195 | 0.4180 |

| 1.0737 | 0.65 | 4500 | 0.3075 | 0.4141 |

| 1.0677 | 0.72 | 5000 | 0.3015 | 0.4089 |

| 1.0462 | 0.8 | 5500 | 0.2971 | 0.4077 |

| 1.0392 | 0.87 | 6000 | 0.2870 | 0.3997 |

| 1.0178 | 0.94 | 6500 | 0.2805 | 0.3963 |

| 0.992 | 1.01 | 7000 | 0.2748 | 0.3935 |

| 1.0197 | 1.09 | 7500 | 0.2691 | 0.3884 |

| 1.0056 | 1.16 | 8000 | 0.2682 | 0.3889 |

| 0.9826 | 1.23 | 8500 | 0.2647 | 0.3868 |

| 0.9815 | 1.3 | 9000 | 0.2603 | 0.3832 |

| 0.9717 | 1.37 | 9500 | 0.2561 | 0.3807 |

| 0.9605 | 1.45 | 10000 | 0.2523 | 0.3783 |

| 0.96 | 1.52 | 10500 | 0.2494 | 0.3788 |

| 0.9442 | 1.59 | 11000 | 0.2478 | 0.3760 |

| 0.9564 | 1.66 | 11500 | 0.2454 | 0.3733 |

| 0.9436 | 1.74 | 12000 | 0.2439 | 0.3747 |

| 0.938 | 1.81 | 12500 | 0.2411 | 0.3716 |

| 0.9353 | 1.88 | 13000 | 0.2397 | 0.3698 |

| 0.9271 | 1.95 | 13500 | 0.2388 | 0.3681 |

### Framework versions

- Transformers 4.17.0.dev0

- Pytorch 1.10.2+cu102

- Datasets 1.18.2.dev0

- Tokenizers 0.11.0

| 8eb5e12575d89fc3f56ed9af98e41d1d |

aXhyra/presentation_sentiment_31415 | aXhyra | distilbert | 10 | 6 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['tweet_eval'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,402 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# presentation_sentiment_31415

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the tweet_eval dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0860

- F1: 0.7183

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 7.2792011721188e-06

- train_batch_size: 4

- eval_batch_size: 4

- seed: 0

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 0.3747 | 1.0 | 11404 | 0.6515 | 0.7045 |

| 0.6511 | 2.0 | 22808 | 0.7334 | 0.7188 |

| 0.0362 | 3.0 | 34212 | 0.9498 | 0.7195 |

| 1.0576 | 4.0 | 45616 | 1.0860 | 0.7183 |

### Framework versions

- Transformers 4.12.5

- Pytorch 1.9.1

- Datasets 1.16.1

- Tokenizers 0.10.3

| 93225fc58ee19f9c81e304bce7820e98 |

ghadeermobasher/BC4CHEMD-Original-128-PubMedBERT-Trial-latest-general | ghadeermobasher | bert | 15 | 7 | transformers | 0 | token-classification | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,147 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# BC4CHEMD-Original-128-PubMedBERT-Trial-latest-general

This model is a fine-tuned version of [microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0044

- Precision: 0.9678

- Recall: 0.9892

- F1: 0.9784

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 8

- seed: 1

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.0+cu102

- Datasets 2.3.2

- Tokenizers 0.10.3

| e8098883164d8709c0840ebee8d695c8 |

muhtasham/tiny-mlm-glue-stsb-target-glue-mnli | muhtasham | bert | 10 | 2 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 2,511 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tiny-mlm-glue-stsb-target-glue-mnli

This model is a fine-tuned version of [muhtasham/tiny-mlm-glue-stsb](https://huggingface.co/muhtasham/tiny-mlm-glue-stsb) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8112

- Accuracy: 0.6365

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- num_epochs: 200

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 1.0767 | 0.04 | 500 | 1.0354 | 0.4644 |

| 1.0091 | 0.08 | 1000 | 0.9646 | 0.5496 |

| 0.9629 | 0.12 | 1500 | 0.9236 | 0.5798 |

| 0.9384 | 0.16 | 2000 | 0.9054 | 0.5916 |

| 0.9254 | 0.2 | 2500 | 0.8894 | 0.5995 |

| 0.9167 | 0.24 | 3000 | 0.8788 | 0.6028 |

| 0.9013 | 0.29 | 3500 | 0.8707 | 0.6104 |

| 0.8962 | 0.33 | 4000 | 0.8603 | 0.6132 |

| 0.8802 | 0.37 | 4500 | 0.8561 | 0.6185 |

| 0.8834 | 0.41 | 5000 | 0.8490 | 0.6220 |

| 0.8734 | 0.45 | 5500 | 0.8427 | 0.6227 |

| 0.8721 | 0.49 | 6000 | 0.8399 | 0.6278 |

| 0.8739 | 0.53 | 6500 | 0.8336 | 0.6331 |

| 0.8654 | 0.57 | 7000 | 0.8345 | 0.6294 |

| 0.8579 | 0.61 | 7500 | 0.8192 | 0.6375 |

| 0.8567 | 0.65 | 8000 | 0.8191 | 0.6348 |

| 0.8517 | 0.69 | 8500 | 0.8275 | 0.6315 |

| 0.8528 | 0.73 | 9000 | 0.8060 | 0.6433 |

| 0.8448 | 0.77 | 9500 | 0.8152 | 0.6355 |

| 0.8361 | 0.81 | 10000 | 0.8026 | 0.6415 |

| 0.8398 | 0.86 | 10500 | 0.8112 | 0.6365 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.8.1.dev0

- Tokenizers 0.13.2

| 5db70b9ffe4b6d9e8ec9ee835a1bc55f |

jonatasgrosman/exp_w2v2t_nl_wav2vec2_s754 | jonatasgrosman | wav2vec2 | 10 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['nl'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'nl'] | false | true | true | 456 | false |

# exp_w2v2t_nl_wav2vec2_s754

Fine-tuned [facebook/wav2vec2-large-lv60](https://huggingface.co/facebook/wav2vec2-large-lv60) for speech recognition using the train split of [Common Voice 7.0 (nl)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| f9fbd731576f748d9636ab56e333e58c |

gokuls/mobilebert_sa_GLUE_Experiment_data_aug_wnli | gokuls | mobilebert | 17 | 0 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['en'] | ['glue'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,588 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mobilebert_sa_GLUE_Experiment_data_aug_wnli

This model is a fine-tuned version of [google/mobilebert-uncased](https://huggingface.co/google/mobilebert-uncased) on the GLUE WNLI dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5287

- Accuracy: 0.1268

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6415 | 1.0 | 435 | 2.5287 | 0.1268 |

| 0.4894 | 2.0 | 870 | 3.5123 | 0.1268 |

| 0.4427 | 3.0 | 1305 | 4.8804 | 0.0986 |

| 0.4026 | 4.0 | 1740 | 7.2410 | 0.0986 |

| 0.3707 | 5.0 | 2175 | 10.5770 | 0.0845 |

| 0.3376 | 6.0 | 2610 | 7.2101 | 0.0986 |
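
In the table above, training loss falls every epoch while validation loss is lowest at epoch 1, which matches the evaluation results reported at the top of this card (loss 2.5287, accuracy 0.1268). The sketch below picks the epoch with the lowest validation loss from the logged rows. The function names are hypothetical. The card does not say why the log ends at epoch 6 of a planned 50; early stopping is one possible explanation, not a documented fact.

```python
# Rows copied from the training results table:
# (epoch, training_loss, validation_loss, accuracy)
HISTORY = [
    (1, 0.6415, 2.5287, 0.1268),
    (2, 0.4894, 3.5123, 0.1268),
    (3, 0.4427, 4.8804, 0.0986),
    (4, 0.4026, 7.2410, 0.0986),
    (5, 0.3707, 10.5770, 0.0845),
    (6, 0.3376, 7.2101, 0.0986),
]


def best_epoch(history):
    """Return the row with the lowest validation loss."""
    return min(history, key=lambda row: row[2])


def epochs_since_improvement(history):
    """Number of logged epochs after the best validation loss was reached."""
    return history[-1][0] - best_epoch(history)[0]


print(best_epoch(HISTORY))
print(epochs_since_improvement(HISTORY))
```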

### Framework versions

- Transformers 4.26.0

- PyTorch 1.14.0a0+410ce96

- Datasets 2.9.0

- Tokenizers 0.13.2

| 6412ea4a640ba8737e0cf7648c3a0e00 |

Helsinki-NLP/opus-mt-fi-pap | Helsinki-NLP | marian | 10 | 7 | transformers | 0 | translation | true | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 776 | false |

### opus-mt-fi-pap

* source languages: fi

* target languages: pap

* OPUS readme: [fi-pap](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/fi-pap/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-24.zip](https://object.pouta.csc.fi/OPUS-MT-models/fi-pap/opus-2020-01-24.zip)

* test set translations: [opus-2020-01-24.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/fi-pap/opus-2020-01-24.test.txt)

* test set scores: [opus-2020-01-24.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/fi-pap/opus-2020-01-24.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.fi.pap | 27.3 | 0.478 |

| 34df180b5bee94119fe42261d581f665 |

jonatasgrosman/exp_w2v2t_nl_hubert_s319 | jonatasgrosman | hubert | 10 | 4 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['nl'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'nl'] | false | true | true | 452 | false |

# exp_w2v2t_nl_hubert_s319

Fine-tuned [facebook/hubert-large-ll60k](https://huggingface.co/facebook/hubert-large-ll60k) for speech recognition using the train split of [Common Voice 7.0 (nl)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16 kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.
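
The 16 kHz requirement can be checked before any audio reaches the model. The sketch below uses only Python's standard `wave` module and assumes the input is an uncompressed WAV file. The function names and `EXPECTED_RATE` are hypothetical, and resampling itself is left to whatever audio library the pipeline already uses.

```python
import wave

EXPECTED_RATE = 16_000  # Hz, the sampling rate the model card asks for


def wav_sample_rate(path: str) -> int:
    """Read the sampling rate from a WAV file header."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def needs_resampling(path: str, expected_rate: int = EXPECTED_RATE) -> bool:
    """True when the file's sampling rate differs from the expected rate."""
    return wav_sample_rate(path) != expected_rate


def write_silence(path: str, rate: int, seconds: float = 0.1) -> None:
    """Demo helper only: write a short silent mono 16-bit WAV file."""
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(rate)
        wav_file.writeframes(b"\x00\x00" * int(rate * seconds))
```

A file that reports any other rate should be resampled before transcription.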

| c274a8988c8642dc5744297076ada686 |

pritoms/distilgpt2-finetuned-wikitext2 | pritoms | gpt2 | 11 | 4 | transformers | 0 | text-generation | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,243 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilgpt2-finetuned-wikitext2

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unspecified dataset (the auto-generated card records the dataset as `None`).

It achieves the following results on the evaluation set:

- Loss: 3.0540
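
Language-model losses like this are often easier to read as perplexity. The sketch below assumes the reported loss is the mean per-token cross-entropy in nats, which the card does not state explicitly. Under that assumption perplexity is the exponential of the loss, so 3.0540 corresponds to roughly 21.2. The per-epoch values are copied from this card's training results table.

```python
import math


def perplexity(mean_cross_entropy: float) -> float:
    """Perplexity for a mean per-token cross-entropy given in nats."""
    return math.exp(mean_cross_entropy)


# Validation losses per epoch, copied from the training results table.
for epoch, val_loss in [(1, 3.1733), (2, 3.0756), (3, 3.0540)]:
    print(f"epoch {epoch}: loss={val_loss:.4f} perplexity={perplexity(val_loss):.2f}")
```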

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 130 | 3.1733 |

| No log | 2.0 | 260 | 3.0756 |

| No log | 3.0 | 390 | 3.0540 |

### Framework versions

- Transformers 4.11.3

- PyTorch 1.9.0+cu111

- Datasets 1.14.0

- Tokenizers 0.10.3

| 9adce18eb81da60e0bbd631c7ce3a1ef |

ali2066/finetuned_token_2e-05_16_02_2022-14_18_19 | ali2066 | distilbert | 13 | 10 | transformers | 0 | token-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,787 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuned_token_2e-05_16_02_2022-14_18_19

This model is a fine-tuned version of [distilbert-base-uncased-finetuned-sst-2-english](https://huggingface.co/distilbert-base-uncased-finetuned-sst-2-english) on an unspecified dataset (the auto-generated card records the dataset as `None`).

It achieves the following results on the evaluation set:

- Loss: 0.1722

- Precision: 0.3378

- Recall: 0.3615

- F1: 0.3492

- Accuracy: 0.9448
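
The F1 value above is consistent with the harmonic mean of the reported precision and recall, as the short check below shows. The function name is hypothetical. The gap between accuracy (0.9448) and F1 (0.3492) may reflect a label distribution dominated by one class, which is common in token classification, but the card does not describe the data.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported for the evaluation set.
print(round(f1_score(0.3378, 0.3615), 4))
```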

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 38 | 0.3781 | 0.1512 | 0.2671 | 0.1931 | 0.8216 |

| No log | 2.0 | 76 | 0.3020 | 0.1748 | 0.2938 | 0.2192 | 0.8551 |

| No log | 3.0 | 114 | 0.2723 | 0.1938 | 0.3339 | 0.2452 | 0.8663 |

| No log | 4.0 | 152 | 0.2574 | 0.2119 | 0.3506 | 0.2642 | 0.8727 |

| No log | 5.0 | 190 | 0.2521 | 0.2121 | 0.3623 | 0.2676 | 0.8756 |

### Framework versions

- Transformers 4.15.0

- PyTorch 1.10.1+cu113

- Datasets 1.18.0

- Tokenizers 0.10.3

| 3ef047dc6472c566204d0b2657da6421 |

tszocinski/bart-base-squad-question-generation | tszocinski | bart | 9 | 2 | transformers | 0 | text2text-generation | false | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_keras_callback'] | true | true | true | 1,357 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# tszocinski/bart-base-squad-question-generation

This model is a fine-tuned version of [tszocinski/bart-base-squad-question-generation](https://huggingface.co/tszocinski/bart-base-squad-question-generation) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 6.5656

- Validation Loss: 11.1958

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'RMSprop', 'config': {'name': 'RMSprop', 'learning_rate': 0.001, 'decay': 0.0, 'rho': 0.9, 'momentum': 0.0, 'epsilon': 1e-07, 'centered': False}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16
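
The optimizer entry above describes RMSprop wrapped for mixed-precision training with dynamic loss scaling (`initial_scale` 32768, `dynamic_growth_steps` 2000). The sketch below simulates that scaling rule in plain Python and is not the Keras implementation. It assumes the usual behaviour: the scale doubles after `growth_steps` consecutive steps with finite gradients, and it halves (the step being skipped) when gradients overflow. The class name and the floor of 1.0 are choices made for this illustration.

```python
class DynamicLossScale:
    """Toy model of dynamic loss scaling for float16 training."""

    def __init__(self, initial_scale: float = 32768.0, growth_steps: int = 2000):
        self.scale = initial_scale
        self.growth_steps = growth_steps
        self.good_steps = 0  # consecutive steps with finite gradients

    def update(self, grads_finite: bool) -> bool:
        """Update the scale; return True if the optimizer step should be applied."""
        if grads_finite:
            self.good_steps += 1
            if self.good_steps >= self.growth_steps:
                # A long run of finite gradients: try a larger scale.
                self.scale *= 2.0
                self.good_steps = 0
            return True
        # Overflow: shrink the scale and skip this step.
        self.scale = max(1.0, self.scale / 2.0)
        self.good_steps = 0
        return False
```

The loss is multiplied by the scale before backpropagation and the gradients are divided by it afterwards, so small float16 gradients are less likely to underflow to zero.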

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 6.5656 | 11.1958 | 0 |

### Framework versions

- Transformers 4.22.1

- TensorFlow 2.8.2

- Datasets 2.5.1

- Tokenizers 0.12.1

| 336d7179212f5232a8bfb50b83c77fc0 |

Palak/google_electra-base-discriminator_squad | Palak | electra | 13 | 7 | transformers | 0 | question-answering | true | false | false | apache-2.0 | null | ['squad'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,069 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# google_electra-base-discriminator_squad

This model is a fine-tuned version of [google/electra-base-discriminator](https://huggingface.co/google/electra-base-discriminator) on the **squadV1** dataset.

Evaluation results:

- eval_exact_match: 85.33585619678335

- eval_f1: 91.97363450387108

- eval_samples: 10784
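
The exact-match and F1 figures above are the standard extractive question-answering metrics. The sketch below shows how they are commonly computed for a single prediction and reference pair, following the usual SQuAD-style normalization (lowercase, strip punctuation and English articles, collapse whitespace). It is an illustration with hypothetical function names, not the evaluation script behind this card. Reported scores are normally percentages averaged over the evaluation set, taking the best match when a question has several reference answers.

```python
import collections
import re
import string


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between the normalized prediction and reference."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    common = collections.Counter(pred_tokens) & collections.Counter(ref_tokens)
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower.", "eiffel tower"))
print(token_f1("in Paris France", "Paris"))
```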

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 16

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

### Framework versions

- Transformers 4.14.1

- PyTorch 1.9.0

- Datasets 1.16.1

- Tokenizers 0.10.3