| repo_id (string, length 4–110) | author (string, length 2–27, nullable) | model_type (string, length 2–29, nullable) | files_per_repo (int64, 2–15.4k) | downloads_30d (int64, 0–19.9M) | library (string, length 2–37, nullable) | likes (int64, 0–4.34k) | pipeline (string, length 5–30, nullable) | pytorch (bool, 2 classes) | tensorflow (bool, 2 classes) | jax (bool, 2 classes) | license (string, length 2–30) | languages (string, length 4–1.63k, nullable) | datasets (string, length 2–2.58k, nullable) | co2 (string, 29 classes) | prs_count (int64, 0–125) | prs_open (int64, 0–120) | prs_merged (int64, 0–15) | prs_closed (int64, 0–28) | discussions_count (int64, 0–218) | discussions_open (int64, 0–148) | discussions_closed (int64, 0–70) | tags (string, length 2–513) | has_model_index (bool, 2 classes) | has_metadata (bool, 1 class) | has_text (bool, 1 class) | text_length (int64, 401–598k) | is_nc (bool, 1 class) | readme (string, length 0–598k) | hash (string, length 32) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

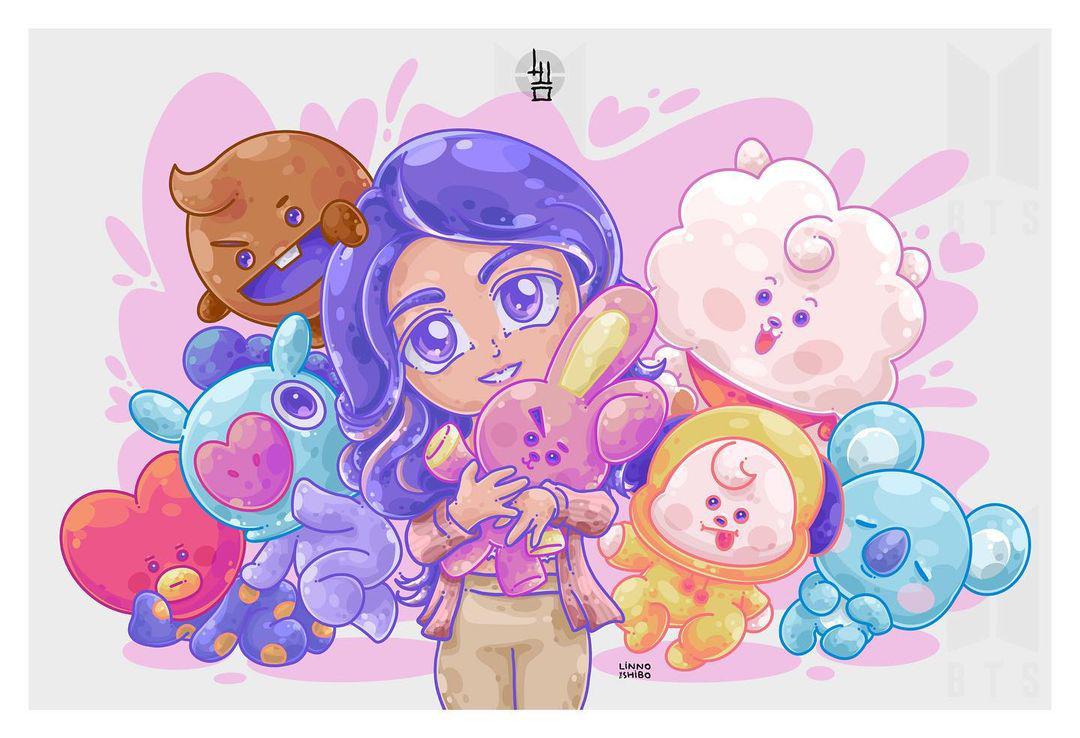

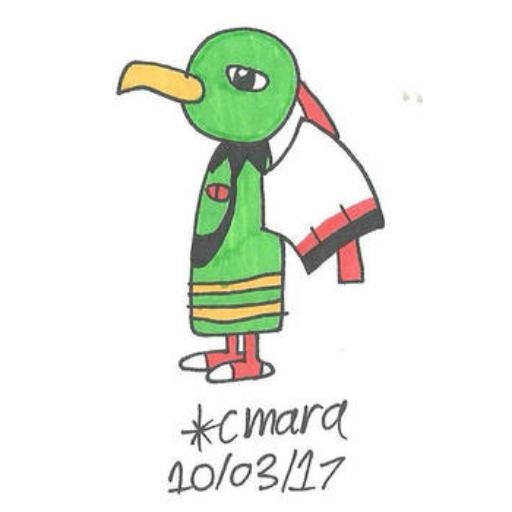

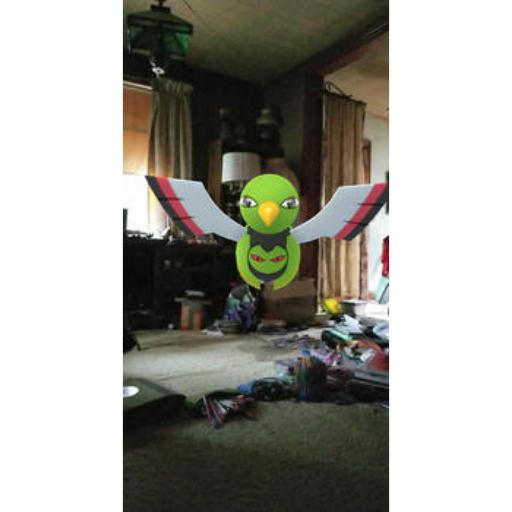

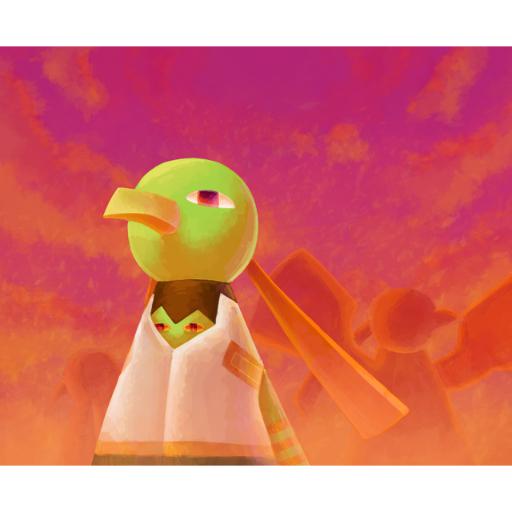

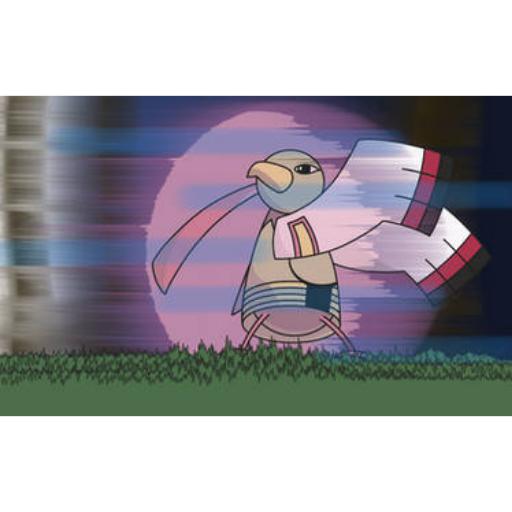

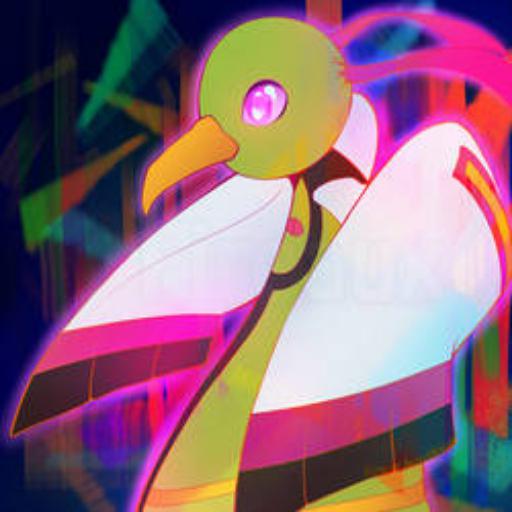

sd-concepts-library/linnopoke | sd-concepts-library | null | 17 | 0 | null | 4 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,870 | false | ### linnopoke on Stable Diffusion

This is the `<linnopoke>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

| 4aee5b3ca30b660bda3a1748968cc5b7 |

tkubotake/xlm-roberta-base-finetuned-panx-de | tkubotake | xlm-roberta | 11 | 6 | transformers | 0 | token-classification | true | false | false | mit | null | ['xtreme'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,319 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1365

- F1: 0.8649

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2553 | 1.0 | 525 | 0.1575 | 0.8279 |

| 0.1284 | 2.0 | 1050 | 0.1386 | 0.8463 |

| 0.0813 | 3.0 | 1575 | 0.1365 | 0.8649 |
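The `Step` column counts optimizer steps. Without gradient accumulation on a single device, one epoch takes ceil(N / train_batch_size) steps. The 525 steps per epoch at batch size 24 therefore bound the training-set size N. The sketch below inverts that relation. It assumes the Trainer keeps the last partial batch (its default) and that training ran on one device. The function name is illustrative.

```python
def train_size_bounds(steps_per_epoch: int, batch_size: int) -> tuple[int, int]:
    """Return the (min, max) number of training examples N consistent with
    steps_per_epoch == ceil(N / batch_size)."""
    if steps_per_epoch < 1 or batch_size < 1:
        raise ValueError("steps_per_epoch and batch_size must be positive")
    # The last batch of an epoch holds between 1 and batch_size examples.
    low = (steps_per_epoch - 1) * batch_size + 1
    high = steps_per_epoch * batch_size
    return low, high


# 525 steps per epoch at train_batch_size 24 (values from the table above).
print(train_size_bounds(525, 24))  # (12577, 12600)
```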

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

| f1b418f791ea0208631d2adffd298267 |

zhiyil/roberta-base-finetuned-intent | zhiyil | roberta | 12 | 1,968 | transformers | 0 | text-classification | true | false | false | mit | null | ['snips_built_in_intents'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,919 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-finetuned-intent

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the snips_built_in_intents dataset.

It achieves the following results on the evaluation set (these match the epoch 6 row of the training results below):

- Loss: 0.2720

- Accuracy: 0.9333

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- distributed_type: IPU

- gradient_accumulation_steps: 8

- total_train_batch_size: 8

- total_eval_batch_size: 5

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- training precision: Mixed Precision
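The listed `total_train_batch_size` is the per-device batch size multiplied by the number of gradient-accumulation steps. If several data-parallel replicas are used, it is also multiplied by the replica count. Below is a minimal sketch of that bookkeeping, assuming a single replica unless stated otherwise. The function name is illustrative, and IPU-specific factors are not modelled.

```python
def effective_batch_size(per_device_batch: int, grad_accum_steps: int = 1, replicas: int = 1) -> int:
    """Number of examples that contribute to one optimizer update."""
    # Gradients from grad_accum_steps micro-batches on each replica are combined
    # before a single optimizer step is taken.
    return per_device_batch * grad_accum_steps * replicas


# train_batch_size 1 with gradient_accumulation_steps 8, one replica:
print(effective_batch_size(1, 8))  # 8
```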

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.9568 | 1.0 | 37 | 1.7598 | 0.4333 |

| 1.2238 | 2.0 | 74 | 0.8130 | 0.7667 |

| 0.4536 | 3.0 | 111 | 0.4985 | 0.8 |

| 0.2478 | 4.0 | 148 | 0.3535 | 0.8667 |

| 0.0903 | 5.0 | 185 | 0.3110 | 0.8667 |

| 0.0849 | 6.0 | 222 | 0.2720 | 0.9333 |

| 0.0708 | 7.0 | 259 | 0.2742 | 0.8667 |

| 0.0796 | 8.0 | 296 | 0.2839 | 0.8667 |

| 0.0638 | 9.0 | 333 | 0.2949 | 0.8667 |

| 0.0566 | 10.0 | 370 | 0.2925 | 0.8667 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.0+cpu

- Datasets 2.7.1

- Tokenizers 0.12.0

| 2190a94bb3e8c5309e54f0bc00725965 |

hassnain/wav2vec2-base-timit-demo-colab6 | hassnain | wav2vec2 | 12 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,701 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-colab6

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9394

- Wer: 0.5282
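Word error rate (WER) is the word-level edit distance (substitutions + deletions + insertions) between hypothesis and reference, divided by the number of reference words. Here it is reported as a fraction (0.5282, about 52.8%). The following is a plain-Python sketch of the metric, not the exact implementation that produced these numbers. Whitespace tokenization is an assumption.

```python
def word_edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Levenshtein distance over words (substitution, deletion, insertion cost 1)."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # delete reference word r
                curr[j - 1] + 1,         # insert hypothesis word h
                prev[j - 1] + (r != h),  # substitute (cost 0 if the words match)
            )
        prev = curr
    return prev[-1]


def wer(references: list[str], hypotheses: list[str]) -> float:
    """Corpus-level WER: total word edits divided by total reference words."""
    if len(references) != len(hypotheses):
        raise ValueError("references and hypotheses must have the same length")
    edits = ref_words = 0
    for ref, hyp in zip(references, hypotheses):
        ref_tokens, hyp_tokens = ref.split(), hyp.split()
        edits += word_edit_distance(ref_tokens, hyp_tokens)
        ref_words += len(ref_tokens)
    if ref_words == 0:
        raise ValueError("references contain no words")
    return edits / ref_words


# One substitution (sat -> sit) and one deletion (the) over 6 reference words.
print(wer(["the cat sat on the mat"], ["the cat sit on mat"]))
```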

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 60

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 5.3117 | 7.35 | 500 | 3.1548 | 1.0 |

| 1.6732 | 14.71 | 1000 | 0.8857 | 0.6561 |

| 0.5267 | 22.06 | 1500 | 0.7931 | 0.6018 |

| 0.2951 | 29.41 | 2000 | 0.8152 | 0.5816 |

| 0.2013 | 36.76 | 2500 | 0.9060 | 0.5655 |

| 0.1487 | 44.12 | 3000 | 0.9201 | 0.5624 |

| 0.1189 | 51.47 | 3500 | 0.9394 | 0.5412 |

| 0.1004 | 58.82 | 4000 | 0.9394 | 0.5282 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

| 0e843dc98519c39a6cb01eeea713be98 |

ConvLab/t5-small-nlu-tm1_tm2_tm3 | ConvLab | t5 | 7 | 3 | transformers | 0 | text2text-generation | true | false | false | apache-2.0 | ['en'] | ['ConvLab/tm1', 'ConvLab/tm2', 'ConvLab/tm3'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['t5-small', 'text2text-generation', 'natural language understanding', 'conversational system', 'task-oriented dialog'] | true | true | true | 826 | false |

# t5-small-nlu-tm1_tm2_tm3

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on [Taskmaster-1](https://huggingface.co/datasets/ConvLab/tm1), [Taskmaster-2](https://huggingface.co/datasets/ConvLab/tm2), and [Taskmaster-3](https://huggingface.co/datasets/ConvLab/tm3).

Refer to [ConvLab-3](https://github.com/ConvLab/ConvLab-3) for model description and usage.

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 128

- eval_batch_size: 64

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 256

- optimizer: Adafactor

- lr_scheduler_type: linear

- num_epochs: 10.0

### Framework versions

- Transformers 4.18.0

- Pytorch 1.10.2+cu102

- Datasets 1.18.3

- Tokenizers 0.11.0

| 74469152274484337e1dead1702c327d |

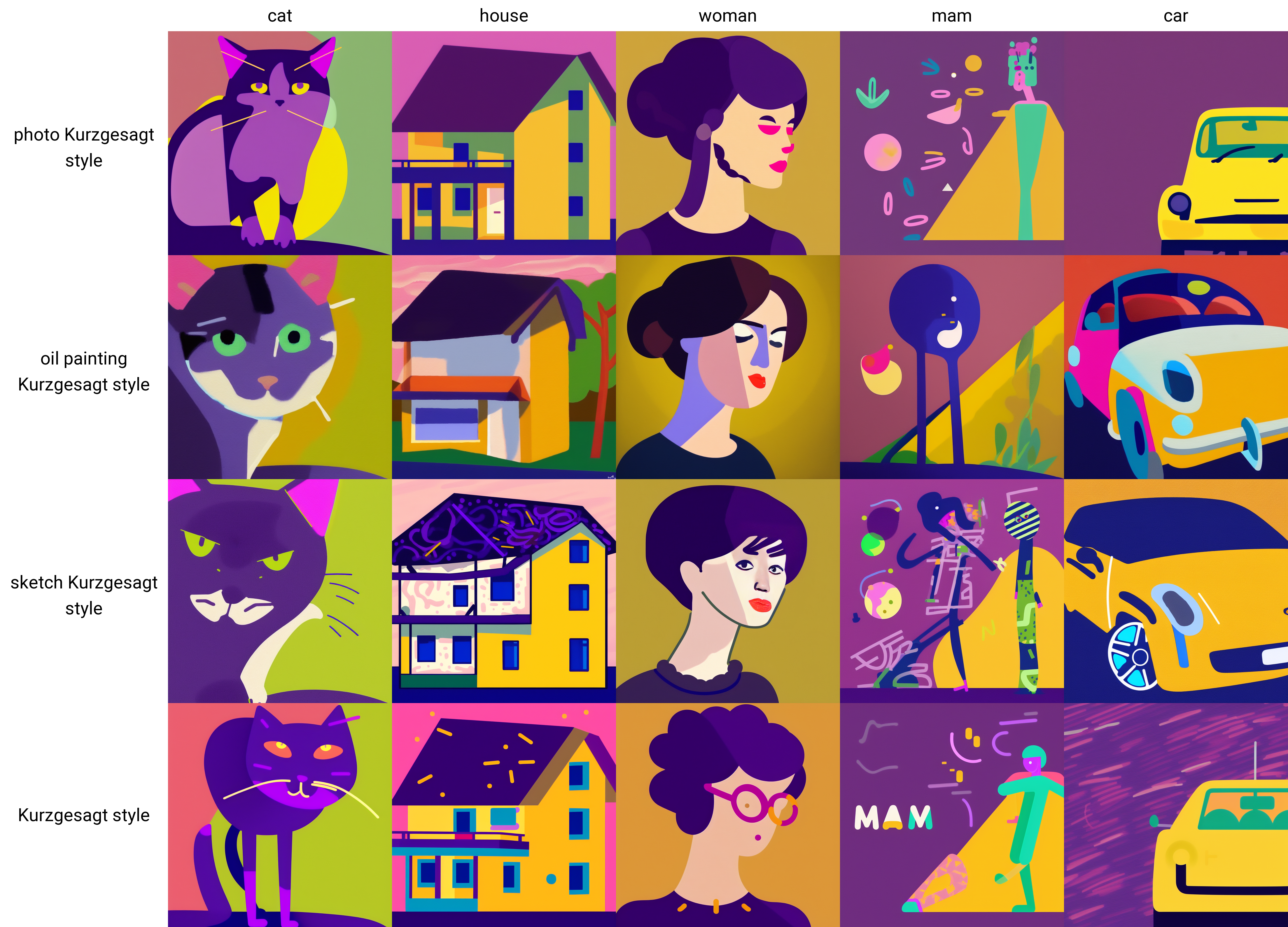

Fireman4740/kurzgesagt-style-v2-768 | Fireman4740 | null | 43 | 16 | diffusers | 5 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 1 | 0 | 1 | 0 | 0 | 0 | 0 | ['text-to-image'] | false | true | true | 566 | false | ### Kurzgesagt-style-v2-768 Dreambooth model trained on the v2-768 base model

You can run your new concept via the `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb). Don't forget to use the concept prompts!

Sample pictures of:

Kurzgesagt style (use this in your prompt)

| ce1f750a7542facf8b1077b0e9a862a8 |

Alireza1044/albert-base-v2-rte | Alireza1044 | albert | 16 | 2 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['en'] | ['glue'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | false | true | true | 992 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# rte

This model is a fine-tuned version of [albert-base-v2](https://huggingface.co/albert-base-v2) on the GLUE RTE dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7994

- Accuracy: 0.6859

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4.0

### Training results

### Framework versions

- Transformers 4.9.0

- Pytorch 1.9.0+cu102

- Datasets 1.10.2

- Tokenizers 0.10.3

| 92b8fa9ae09f0585cbefe95de589a6aa |

rudzinskimaciej/crystalpunk | rudzinskimaciej | null | 16 | 0 | diffusers | 0 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['text-to-image', 'stable-diffusion'] | false | true | true | 428 | false | ### crystalpunk Dreambooth model trained by rudzinskimaciej with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

| 00bd8be4595cb44447753d33d29bafc7 |

bnriiitb/whisper-small-te | bnriiitb | whisper | 17 | 4 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['te'] | ['Chai_Bisket_Stories_16-08-2021_14-17'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['hf-asr-leaderboard', 'generated_from_trainer'] | true | true | true | 1,869 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Telugu - Naga Budigam

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) on the Chai_Bisket_Stories_16-08-2021_14-17 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7063

- Wer: 77.4871

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000

- mixed_precision_training: Native AMP
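With `lr_scheduler_type: linear`, `lr_scheduler_warmup_steps: 500` and `training_steps: 5000`, the learning rate rises linearly from 0 to the peak of 1e-05 over the first 500 steps. It then decays linearly to 0 at step 5000. The sketch below reproduces that shape as a plain function. It mirrors the multiplier of 🤗 Transformers' `get_linear_schedule_with_warmup` but is not the library code itself.

```python
def linear_warmup_lr(step: int, peak_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to 0."""
    if step < warmup_steps:
        # Warmup: scale linearly from 0 up to peak_lr.
        return peak_lr * step / max(1, warmup_steps)
    # Decay: scale linearly from peak_lr at warmup_steps down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


# Values from the hyperparameters above.
for s in (0, 250, 500, 2750, 5000):
    print(s, linear_warmup_lr(s, 1e-5, 500, 5000))
```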

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.2933 | 2.62 | 500 | 0.3849 | 86.6429 |

| 0.0692 | 5.24 | 1000 | 0.3943 | 82.7190 |

| 0.0251 | 7.85 | 1500 | 0.4720 | 82.4415 |

| 0.0098 | 10.47 | 2000 | 0.5359 | 81.6092 |

| 0.0061 | 13.09 | 2500 | 0.5868 | 75.9413 |

| 0.0025 | 15.71 | 3000 | 0.6235 | 76.6944 |

| 0.0009 | 18.32 | 3500 | 0.6634 | 78.3987 |

| 0.0005 | 20.94 | 4000 | 0.6776 | 77.1700 |

| 0.0002 | 23.56 | 4500 | 0.6995 | 78.2798 |

| 0.0001 | 26.18 | 5000 | 0.7063 | 77.4871 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0

- Datasets 2.7.1

- Tokenizers 0.13.2

| e6fbd57e298590cbbbfbf16373d2f8a1 |

moredeal/distilbert-base-uncased-finetuned-category-classification | moredeal | distilbert | 16 | 3 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,665 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-category-classification

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0377

- F1: 0.9943

- Roc Auc: 0.9943

- Accuracy: 0.9943
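The card reports ROC AUC without saying how it was averaged across classes. For a single binary label, ROC AUC equals the probability that a randomly chosen positive example scores above a randomly chosen negative one, with ties counting as half. The pairwise sketch below illustrates that definition only. It is O(P·N) and meant for understanding, not for large evaluation sets.

```python
def roc_auc(scores: list[float], labels: list[int]) -> float:
    """Binary ROC AUC via pairwise comparison of positive and negative scores."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ordering
    return wins / (len(pos) * len(neg))


print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```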

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Roc Auc | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:-------:|:--------:|

| 0.0374 | 1.0 | 7612 | 0.0373 | 0.9916 | 0.9916 | 0.9915 |

| 0.0255 | 2.0 | 15224 | 0.0409 | 0.9922 | 0.9922 | 0.9921 |

| 0.0281 | 3.0 | 22836 | 0.0332 | 0.9934 | 0.9934 | 0.9934 |

| 0.0189 | 4.0 | 30448 | 0.0359 | 0.9941 | 0.9941 | 0.9940 |

| 0.005 | 5.0 | 38060 | 0.0377 | 0.9943 | 0.9943 | 0.9943 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.5.1

- Tokenizers 0.12.1

| e111b97db53868381bc898cc58194dff |

Helsinki-NLP/opus-mt-tl-de | Helsinki-NLP | marian | 11 | 7 | transformers | 0 | translation | true | true | false | apache-2.0 | ['tl', 'de'] | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 2,006 | false |

### tgl-deu

* source group: Tagalog

* target group: German

* OPUS readme: [tgl-deu](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/tgl-deu/README.md)

* model: transformer-align

* source language(s): tgl_Latn

* target language(s): deu

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* download original weights: [opus-2020-06-17.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/tgl-deu/opus-2020-06-17.zip)

* test set translations: [opus-2020-06-17.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/tgl-deu/opus-2020-06-17.test.txt)

* test set scores: [opus-2020-06-17.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/tgl-deu/opus-2020-06-17.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.tgl.deu | 22.7 | 0.473 |
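BLEU multiplies its n-gram precision score by a brevity penalty. BP = exp(1 − r/c) when the total hypothesis length c is shorter than the reference length r, and BP = 1 otherwise. The system info below lists `ref_len: 2453.0` and `brevity_penalty: 0.969`. The sketch inverts the formula to estimate the hypothesis length implied by those two values. This is only a back-of-the-envelope check, and the result is approximate because the reported penalty is rounded.

```python
import math


def brevity_penalty(hyp_len: float, ref_len: float) -> float:
    """BLEU brevity penalty: 1 if the hypothesis is at least as long as the reference."""
    if hyp_len >= ref_len:
        return 1.0
    return math.exp(1.0 - ref_len / hyp_len)


def implied_hyp_len(bp: float, ref_len: float) -> float:
    """Invert BP = exp(1 - r/c) for c (only defined when 0 < bp < 1)."""
    if not 0.0 < bp < 1.0:
        raise ValueError("inversion is only defined for 0 < bp < 1")
    return ref_len / (1.0 - math.log(bp))


c = implied_hyp_len(0.969, 2453.0)
print(round(c))                               # 2378
print(round(brevity_penalty(c, 2453.0), 3))   # 0.969
```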

### System Info:

- hf_name: tgl-deu

- source_languages: tgl

- target_languages: deu

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/tgl-deu/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['tl', 'de']

- src_constituents: {'tgl_Latn'}

- tgt_constituents: {'deu'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/tgl-deu/opus-2020-06-17.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/tgl-deu/opus-2020-06-17.test.txt

- src_alpha3: tgl

- tgt_alpha3: deu

- short_pair: tl-de

- chrF2_score: 0.473

- bleu: 22.7

- brevity_penalty: 0.969

- ref_len: 2453.0

- src_name: Tagalog

- tgt_name: German

- train_date: 2020-06-17

- src_alpha2: tl

- tgt_alpha2: de

- prefer_old: False

- long_pair: tgl-deu

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 | b0f65e9a5108972b5e2b9426b4a5793c |

alexlopitz/ner_kaggle_class_prediction_model | alexlopitz | bert | 16 | 3 | transformers | 0 | token-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,530 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ner_kaggle_class_prediction_model

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0191

- Precision: 0.9850

- Recall: 0.9830

- F1: 0.9840

- Accuracy: 0.9950
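F1 is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Plugging in the reported precision (0.9850) and recall (0.9830) reproduces the reported F1 of 0.9840, which makes a quick consistency check for metric lists like this one. For token classification, these scores are often entity-level while accuracy is token-level. The card does not say so, however, so treat that as an assumption.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.9850, 0.9830), 4))  # 0.984
```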

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.1304 | 1.0 | 806 | 0.0202 | 0.9823 | 0.9794 | 0.9808 | 0.9940 |

| 0.0142 | 2.0 | 1612 | 0.0178 | 0.9819 | 0.9826 | 0.9823 | 0.9945 |

| 0.0081 | 3.0 | 2418 | 0.0191 | 0.9850 | 0.9830 | 0.9840 | 0.9950 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.0+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

| cffdbea42a3bf7e73a4076b3efeeaa4e |

DenilsenAxel/nlp-text-classification | DenilsenAxel | bert | 6 | 3 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['amazon_us_reviews'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,331 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# test_trainer

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the amazon_us_reviews dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9348

- Accuracy: 0.7441

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.6471 | 1.0 | 7500 | 0.6596 | 0.7376 |

| 0.5235 | 2.0 | 15000 | 0.6997 | 0.7423 |

| 0.3955 | 3.0 | 22500 | 0.9348 | 0.7441 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

| 01a91f44b412b75fad17732e296afbf3 |

Voyager1/asr-wav2vec2-commonvoice-es-finetuned-rtve | Voyager1 | wav2vec2 | 9 | 10 | speechbrain | 0 | automatic-speech-recognition | true | false | false | afl-3.0 | ['es'] | ['commonvoice'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['CTC', 'pytorch', 'speechbrain', 'Transformer', 'hf-asr-leaderboard'] | true | true | true | 3,791 | false |


# wav2vec 2.0 with CTC trained on data aligned from RTVE databases (No LM)

This repository provides all the necessary tools to perform automatic speech recognition from an end-to-end system pretrained on CommonVoice (Spanish Language) within SpeechBrain. For a better experience, we encourage you to learn more about [SpeechBrain](https://speechbrain.github.io).

The performance of the model is the following:

| Release | RTVE 2022 Test WER | GPUs |

|:-------------:|:--------------:| :--------:|

| 16-01-23 | 23.45 | 3xRTX2080Ti 12GB |

## Pipeline description

This ASR system is composed of 2 different but linked blocks:

- A character-level tokenizer that transforms words into characters, trained on the train transcriptions (train.tsv) of CommonVoice (ES).

- Acoustic model (wav2vec2.0 + CTC). A pretrained wav2vec 2.0 model ([wav2vec2-large-xlsr-53-spanish](https://huggingface.co/facebook/wav2vec2-large-xlsr-53-spanish)) is combined with two DNN layers and finetuned on CommonVoice ES.

The obtained final acoustic representation is given to the CTC decoder.
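Because this system uses no language model, decoding reduces to reading the CTC output frame by frame. Below is a minimal sketch of greedy (best-path) CTC decoding over character labels. It takes the most likely label per frame, merges consecutive repeats, then drops the blank symbol. The blank name `"<blank>"` and the toy frame labels are illustrative assumptions. SpeechBrain's own decoder may differ in detail.

```python
from itertools import groupby

BLANK = "<blank>"  # hypothetical name for the CTC blank label


def argmax_labels(frame_scores: list[list[float]], vocab: list[str]) -> list[str]:
    """Pick the highest-scoring label for each frame (the best path)."""
    return [vocab[max(range(len(row)), key=row.__getitem__)] for row in frame_scores]


def ctc_greedy_collapse(frame_labels: list[str], blank: str = BLANK) -> str:
    """Turn per-frame labels into text: merge repeats, then drop blanks."""
    merged = [label for label, _ in groupby(frame_labels)]  # "l", "l" -> "l"
    # A blank between two identical characters keeps them as separate letters.
    return "".join(label for label in merged if label != blank)


# Toy per-frame outputs (not real model output).
print(ctc_greedy_collapse(["h", "h", BLANK, "o", "l", "l", BLANK, "a", "a"]))  # hola
print(ctc_greedy_collapse(["p", "e", "e", "r", BLANK, "r", "o"]))  # perro
```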

The system is trained with recordings sampled at 16kHz (single channel).

The code will automatically normalize your audio (i.e., resampling + mono channel selection) when calling *transcribe_file* if needed.

## Install SpeechBrain

First of all, please install transformers and SpeechBrain with the following command:

```

pip install speechbrain transformers

```

Please note that we encourage you to read tutorials and learn more about [SpeechBrain](https://speechbrain.github.io).

### Transcribing your own audio files (in Spanish)

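```python
from speechbrain.pretrained import EncoderASR

# Fetch the model and its hyperparameters, caching them locally in `savedir`.
asr_model = EncoderASR.from_hparams(source="Voyager1/asr-wav2vec2-commonvoice-es", savedir="pretrained_models/asr-wav2vec2-commonvoice-es")

# Transcribe an audio file and print the resulting text.
print(asr_model.transcribe_file("Voyager1/asr-wav2vec2-commonvoice-es/example-es.wav"))
```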

### Inference on GPU

To perform inference on the GPU, add `run_opts={"device":"cuda"}` when calling the `from_hparams` method.

### Limitations

We do not provide any warranty on the performance achieved by this model when used on other datasets.

# **Citations**

```bibtex

@article{lopez2022tid,

title={TID Spanish ASR system for the Albayzin 2022 Speech-to-Text Transcription Challenge},

author={L{\'o}pez, Fernando and Luque, Jordi},

journal={Proc. IberSPEECH 2022},

pages={271--275},

year={2022}

}

@misc{https://doi.org/10.48550/arxiv.2210.15226,

doi = {10.48550/ARXIV.2210.15226},

url = {https://arxiv.org/abs/2210.15226},

author = {López, Fernando and Luque, Jordi},

title = {Iterative pseudo-forced alignment by acoustic CTC loss for self-supervised ASR domain adaptation},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

@misc{lleidartve,

title={Rtve 2018, 2020 and 2022 database description},

author={Lleida, E and Ortega, A and Miguel, A and Baz{\'a}n, V and P{\'e}rez, C and G{\'o}mez, M and de Prada, A}

}

@misc{speechbrain,

title={{SpeechBrain}: A General-Purpose Speech Toolkit},

author={Mirco Ravanelli and Titouan Parcollet and Peter Plantinga and Aku Rouhe and Samuele Cornell and Loren Lugosch and Cem Subakan and Nauman Dawalatabad and Abdelwahab Heba and Jianyuan Zhong and Ju-Chieh Chou and Sung-Lin Yeh and Szu-Wei Fu and Chien-Feng Liao and Elena Rastorgueva and François Grondin and William Aris and Hwidong Na and Yan Gao and Renato De Mori and Yoshua Bengio},

year={2021},

eprint={2106.04624},

archivePrefix={arXiv},

primaryClass={eess.AS},

note={arXiv:2106.04624}

}

```

| 80d267b59d9d1de23eb2fc6e29fff910 |

riffusion/riffusion-model-v1 | riffusion | null | 61 | 11,493 | diffusers | 355 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 4 | 4 | 0 | 0 | 14 | 4 | 10 | ['stable-diffusion', 'stable-diffusion-diffusers', 'text-to-image', 'text-to-audio'] | false | true | true | 3,865 | false |

# Riffusion

Riffusion is an app for real-time music generation with Stable Diffusion.

Read about it at https://www.riffusion.com/about and try it at https://www.riffusion.com/.

* Code: https://github.com/riffusion/riffusion

* Web app: https://github.com/hmartiro/riffusion-app

* Model checkpoint: https://huggingface.co/riffusion/riffusion-model-v1

* Discord: https://discord.gg/yu6SRwvX4v

This repository contains the model files, including:

* a diffusers-formatted library

* a compiled checkpoint file

* a traced unet for improved inference speed

* a seed image library for use with riffusion-app

## Riffusion v1 Model

Riffusion is a latent text-to-image diffusion model capable of generating spectrogram images given any text input. These spectrograms can be converted into audio clips.

The model was created by [Seth Forsgren](https://sethforsgren.com/) and [Hayk Martiros](https://haykmartiros.com/) as a hobby project.

You can use the Riffusion model directly, or try the [Riffusion web app](https://www.riffusion.com/).

The Riffusion model was created by fine-tuning the **Stable-Diffusion-v1-5** checkpoint. Read about Stable Diffusion in [🤗's Stable Diffusion blog](https://huggingface.co/blog/stable_diffusion).

### Model Details

- **Developed by:** Seth Forsgren, Hayk Martiros

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [The CreativeML OpenRAIL M license](https://huggingface.co/spaces/CompVis/stable-diffusion-license) is an [Open RAIL M license](https://www.licenses.ai/blog/2022/8/18/naming-convention-of-responsible-ai-licenses), adapted from the work that [BigScience](https://bigscience.huggingface.co/) and [the RAIL Initiative](https://www.licenses.ai/) are jointly carrying out in the area of responsible AI licensing. See also [the article about the BLOOM Open RAIL license](https://bigscience.huggingface.co/blog/the-bigscience-rail-license) on which our license is based.

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([CLIP ViT-L/14](https://arxiv.org/abs/2103.00020)) as suggested in the [Imagen paper](https://arxiv.org/abs/2205.11487).

### Direct Use

The model is intended for research purposes only. Possible research areas and tasks include:

- Generation of artworks, audio, and use in creative processes.

- Applications in educational or creative tools.

- Research on generative models.

### Datasets

The original Stable Diffusion v1.5 was trained on the [LAION-5B](https://arxiv.org/abs/2210.08402) dataset using the [CLIP text encoder](https://openai.com/blog/clip/), which provided an amazing starting point with an in-depth understanding of language, including musical concepts. The team at LAION also compiled a fantastic audio dataset from many general, speech, and music sources that we recommend at [LAION-AI/audio-dataset](https://github.com/LAION-AI/audio-dataset/blob/main/data_collection/README.md).

### Fine Tuning

Check out the [diffusers training examples](https://huggingface.co/docs/diffusers/training/overview) from Hugging Face. Fine-tuning requires a dataset of spectrogram images of short audio clips, with associated text describing them. Note that the CLIP encoder is able to understand and connect many words even if they never appear in the dataset. It is also possible to use the [DreamBooth](https://huggingface.co/blog/dreambooth) method to get custom styles.

## Citation

If you build on this work, please cite it as follows:

```

@article{Forsgren_Martiros_2022,

author = {Forsgren, Seth* and Martiros, Hayk*},

title = {{Riffusion - Stable diffusion for real-time music generation}},

url = {https://riffusion.com/about},

year = {2022}

}

```

| a7f2eb893601ac4af077d518bc7aed16 |

migueladarlo/distilbert-depression-base | migueladarlo | distilbert | 5 | 2 | transformers | 2 | text-classification | true | false | false | mit | ['en'] | ['CLPsych 2015'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['text', 'Twitter'] | true | true | true | 2,507 | false |

# distilbert-depression-base

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased), trained on CLPsych 2015 and evaluated on a dataset scraped from Twitter, to detect Twitter users who may be depressed.

It achieves the following results on the evaluation set:

- Evaluation Loss: 0.64

- Accuracy: 0.65

- F1: 0.70

- Precision: 0.61

- Recall: 0.83

- AUC: 0.65

## Intended uses & limitations

Feed a corpus of tweets to the model to generate a label indicating whether the input is indicative of a depressed user. Label 1 means depressed; label 0 means not depressed.

Limitation: token sequences longer than 512 tokens are automatically truncated. Also, the training and test data may be contaminated with mislabeled users.

### How to use

You can use this model directly with a pipeline for sentiment analysis:

```python
>>> from transformers import AutoTokenizer, DistilBertForSequenceClassification, pipeline
>>> # This card uses the tokenizer of the base model.
>>> tokenizer = AutoTokenizer.from_pretrained('distilbert-base-uncased')
>>> model = DistilBertForSequenceClassification.from_pretrained('migueladarlo/distilbert-depression-base')
>>> classifier = pipeline("sentiment-analysis", model=model, tokenizer=tokenizer)
>>> # Tokenizer settings passed at call time so that truncation to 512 tokens applies in the pipeline.
>>> tokenizer_kwargs = {'padding': True, 'truncation': True, 'max_length': 512}
>>> # The input string can be a corpus of tweets concatenated into one document.
>>> classifier('pain peko', **tokenizer_kwargs)
[{'label': 'LABEL_1', 'score': 0.5048992037773132}]
```

Otherwise, download the files and specify the path to the local folder that contains `config.json`, `pytorch_model.bin`, and `training_args.bin` when loading the model.
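The model returns the generic labels `LABEL_0`/`LABEL_1`, and inputs longer than 512 tokens are truncated. The sketch below shows one way to prepare a user's tweets as a single document and to map the pipeline output to readable names. The function names, the newline separator, and the "depressed"/"not depressed" strings are illustrative choices, not part of the released model.

```python
# Label meaning as stated on this card: 1 = depressed, 0 = not depressed.
LABEL_NAMES = {"LABEL_0": "not depressed", "LABEL_1": "depressed"}


def build_document(tweets: list[str]) -> str:
    """Concatenate a user's non-empty tweets into one input string (hypothetical helper)."""
    return "\n".join(t.strip() for t in tweets if t.strip())


def readable(predictions: list[dict]) -> list[tuple[str, float]]:
    """Map pipeline output like [{'label': 'LABEL_1', 'score': 0.50}] to label names."""
    return [(LABEL_NAMES[p["label"]], p["score"]) for p in predictions]


doc = build_document(["first tweet ", "", "second tweet"])
print(repr(doc))  # 'first tweet\nsecond tweet'
print(readable([{"label": "LABEL_1", "score": 0.5048992037773132}]))
```

The resulting `doc` string can be passed to the classifier in place of the single example input.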

## Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3.39e-05

- train_batch_size: 16

- eval_batch_size: 16

- weight_decay: 0.13

- num_epochs: 3.0

## Training results

| Epoch | Training Loss | Validation Loss | Accuracy | F1 | Precision | Recall | AUC |
|:-----:|:-------------:|:---------------:|:--------:|:--------:|:---------:|:--------:|:--------:|
| 1.0 | 0.68 | 0.66 | 0.59 | 0.63 | 0.56 | 0.73 | 0.59 |
| 2.0 | 0.60 | 0.68 | 0.63 | 0.69 | 0.59 | 0.83 | 0.63 |
| 3.0 | 0.52 | 0.67 | 0.64 | 0.66 | 0.62 | 0.72 | 0.65 | | de4c96b9851f95b0b53f51f970abe283 |

StonyBrookNLP/t5-3b-tatqa | StonyBrookNLP | t5 | 10 | 3 | transformers | 0 | text2text-generation | true | false | false | cc-by-4.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['question-answering, multi-step-reasoning, multi-hop-reasoning'] | false | true | true | 2,615 | false |

# What's this?

This is one of the models reported in the paper ["Teaching Broad Reasoning Skills for Multi-Step QA by Generating Hard Contexts"](https://arxiv.org/abs/2205.12496).

This paper proposes a procedure to synthetically generate a QA dataset, TeaBReaC, for pretraining language models for robust multi-step reasoning. Pretraining plain LMs like BART and T5, and numerate LMs like NT5, PReasM and POET, on TeaBReaC leads to improved downstream performance on several multi-step QA datasets. Please check out the paper for the details.

We release the following models:

- **A:** Base Models finetuned on target datasets: `{base_model}-{target_dataset}`

- **B:** Base models pretrained on TeaBReaC: `teabreac-{base_model}`

- **C:** Base models pretrained on TeaBReaC and then finetuned on target datasets: `teabreac-{base_model}-{target_dataset}`

The `base_model` above can be from: `bart-large`, `t5-large`, `t5-3b`, `nt5-small`, `preasm-large`.

The `target_dataset` above can be from: `drop`, `tatqa`, `iirc-gold`, `iirc-retrieved`, `numglue`.

The **A** models are only released for completeness / reproducibility. In your end application you probably just want to use either **B** or **C**.
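The naming scheme above can be captured in a small helper. This is a hypothetical sketch: the `StonyBrookNLP/` namespace is taken from the usage example in the next section, and whether every combination is actually published on the Hub is not guaranteed.

```python
from typing import Optional

# Values listed in the card.
BASE_MODELS = {"bart-large", "t5-large", "t5-3b", "nt5-small", "preasm-large"}
TARGET_DATASETS = {"drop", "tatqa", "iirc-gold", "iirc-retrieved", "numglue"}


def teabreac_model_id(base_model: str, target_dataset: Optional[str] = None,
                      teabreac_pretrained: bool = True) -> str:
    """Build a model id for variant A, B, or C (hypothetical helper)."""
    if base_model not in BASE_MODELS:
        raise ValueError(f"unknown base model: {base_model}")
    if target_dataset is not None and target_dataset not in TARGET_DATASETS:
        raise ValueError(f"unknown target dataset: {target_dataset}")
    if not teabreac_pretrained and target_dataset is None:
        raise ValueError("A models are always finetuned on a target dataset")
    # A: {base}-{dataset}; B: teabreac-{base}; C: teabreac-{base}-{dataset}
    parts = (["teabreac"] if teabreac_pretrained else []) + [base_model]
    if target_dataset is not None:
        parts.append(target_dataset)
    # Assumption: all models live under the StonyBrookNLP namespace.
    return "StonyBrookNLP/" + "-".join(parts)


print(teabreac_model_id("t5-3b", "tatqa", teabreac_pretrained=False))  # StonyBrookNLP/t5-3b-tatqa
print(teabreac_model_id("t5-large"))  # StonyBrookNLP/teabreac-t5-large
print(teabreac_model_id("bart-large", "drop"))  # StonyBrookNLP/teabreac-bart-large-drop
```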

# How to use it?

Please check out the details in our [GitHub repository](https://github.com/stonybrooknlp/teabreac), but in a nutshell:

```python
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM
from digit_tokenization import enable_digit_tokenization  # digit_tokenization.py from https://github.com/stonybrooknlp/teabreac

model_name = "StonyBrookNLP/t5-3b-tatqa"
tokenizer = AutoTokenizer.from_pretrained(model_name, use_fast=False)  # Fast doesn't work with digit tokenization
model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
enable_digit_tokenization(tokenizer)

input_texts = [
    "answer_me: Who scored the first touchdown of the game?" +
    "context: ... Oakland would get the early lead in the first quarter as quarterback JaMarcus Russell completed a 20-yard touchdown pass to rookie wide receiver Chaz Schilens..."
    # Note: some models have slightly different qn/ctxt format. See the github repo.
]

input_ids = tokenizer(
    input_texts, return_tensors="pt",
    truncation=True, max_length=800,
    add_special_tokens=True, padding=True,
)["input_ids"]

generated_ids = model.generate(input_ids, min_length=1, max_length=50)
generated_predictions = tokenizer.batch_decode(generated_ids, skip_special_tokens=False)
generated_predictions = [
    tokenizer.fix_decoded_text(generated_prediction) for generated_prediction in generated_predictions
]
# => ["Chaz Schilens"]
``` | 66e7370f41728e2c13a8747e71ba5b74 |

sd-concepts-library/alicebeta | sd-concepts-library | null | 10 | 0 | null | 2 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,136 | false | ### AliceBeta on Stable Diffusion

This is the `<Alice-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

| fea9fc0b516ad49be0e08a3c1b7a86fb |

ericntay/clinical_bio_bert_ft | ericntay | bert | 14 | 25 | transformers | 0 | token-classification | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,754 | false |


# clinical_bio_bert_ft

This model is a fine-tuned version of [emilyalsentzer/Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2570

- F1: 0.8160

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 0.6327 | 1.0 | 95 | 0.2442 | 0.7096 |
| 0.1692 | 2.0 | 190 | 0.2050 | 0.7701 |
| 0.0878 | 3.0 | 285 | 0.1923 | 0.8002 |
| 0.0493 | 4.0 | 380 | 0.2234 | 0.8079 |
| 0.0302 | 5.0 | 475 | 0.2250 | 0.8090 |
| 0.0191 | 6.0 | 570 | 0.2363 | 0.8145 |
| 0.0132 | 7.0 | 665 | 0.2489 | 0.8178 |
| 0.0102 | 8.0 | 760 | 0.2494 | 0.8152 |
| 0.008 | 9.0 | 855 | 0.2542 | 0.8191 |
| 0.0068 | 10.0 | 950 | 0.2570 | 0.8160 |
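The step counts in the table allow a rough estimate of the undocumented training-set size. This sketch assumes a single device, no gradient accumulation, and that the last partial batch is kept (the Trainer default). Under those assumptions, 95 steps per epoch at a batch size of 32 imply between 3,009 and 3,040 training examples:

```python
import math
from typing import Tuple


def dataset_size_range(steps_per_epoch: int, batch_size: int) -> Tuple[int, int]:
    """All dataset sizes N for which ceil(N / batch_size) == steps_per_epoch."""
    low = (steps_per_epoch - 1) * batch_size + 1
    high = steps_per_epoch * batch_size
    return low, high


# 950 steps over 10 epochs -> 95 steps per epoch; train_batch_size is 32.
low, high = dataset_size_range(steps_per_epoch=95, batch_size=32)
print(low, high)  # 3009 3040
assert math.ceil(low / 32) == 95 and math.ceil(high / 32) == 95
```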

### Framework versions

- Transformers 4.21.1

- Pytorch 1.12.0+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

| 1894388ed8018904901c8074b88d6a9d |

devtanumisra/finetuning-sentiment-model-deberta-smote | devtanumisra | deberta-v2 | 14 | 8 | transformers | 0 | text-classification | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,116 | false |


# finetuning-sentiment-model-deberta-smote

This model is a fine-tuned version of [yangheng/deberta-v3-base-absa-v1.1](https://huggingface.co/yangheng/deberta-v3-base-absa-v1.1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4852

- Accuracy: 0.7215

- F1: 0.7215

- Precision: 0.7215

- Recall: 0.7215
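Accuracy, F1, precision, and recall are all exactly 0.7215. Micro-averaging produces this pattern for single-label classification: every wrong prediction counts as one false positive (for the predicted class) and one false negative (for the true class), so micro precision, recall, and F1 all collapse to accuracy. The card does not say which averaging was used, so this is an inference. Here is a minimal sketch with made-up labels:

```python
from typing import Sequence


def micro_scores(y_true: Sequence[str], y_pred: Sequence[str]) -> dict:
    """Accuracy plus micro-averaged precision, recall and F1 for single-label predictions."""
    tp = sum(t == p for t, p in zip(y_true, y_pred))
    # Each mistake is one false positive for the predicted class
    # and one false negative for the true class.
    fp = fn = len(y_true) - tp
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    return {"accuracy": tp / len(y_true), "precision": precision, "recall": recall, "f1": f1}


# Hypothetical sentiment labels, for illustration only.
y_true = ["positive", "negative", "neutral", "positive", "negative"]
y_pred = ["positive", "neutral", "neutral", "positive", "positive"]
print(micro_scores(y_true, y_pred))
```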

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.2

| a4b682feaa80b9829b4bb1e195c54833 |

egumasa/bert-base-uncased-finetuned-academic | egumasa | bert | 15 | 4 | transformers | 0 | fill-mask | true | false | false | apache-2.0 | null | ['elsevier-oa-cc-by'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,779 | false |


# bert-base-uncased-finetuned-academic

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the elsevier-oa-cc-by dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5893
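This sketch assumes the standard masked-language-modeling setup, where the evaluation loss is the mean cross-entropy (natural log) over the masked tokens. Under that assumption, exponentiating the loss gives a perplexity, as the Transformers `run_mlm.py` example script does. A quick computation for the loss reported above:

```python
import math

eval_loss = 2.5893  # evaluation loss reported above (mean cross-entropy in nats)
perplexity = math.exp(eval_loss)
print(f"{perplexity:.2f}")  # 13.32
```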

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 40

- eval_batch_size: 40

- seed: 42

- optimizer: Adam with betas=(0.9,0.97) and epsilon=0.0001

- lr_scheduler_type: linear

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 2.9591 | 0.25 | 820 | 2.6567 |
| 2.7993 | 0.5 | 1640 | 2.6006 |
| 2.7519 | 0.75 | 2460 | 2.5707 |
| 2.7319 | 1.0 | 3280 | 2.5763 |
| 2.7359 | 1.25 | 4100 | 2.5866 |
| 2.7451 | 1.5 | 4920 | 2.5855 |
| 2.7421 | 1.75 | 5740 | 2.5770 |
| 2.7319 | 2.0 | 6560 | 2.5762 |
| 2.7356 | 2.25 | 7380 | 2.5807 |
| 2.7376 | 2.5 | 8200 | 2.5813 |
| 2.7386 | 2.75 | 9020 | 2.5841 |
| 2.7378 | 3.0 | 9840 | 2.5737 |

### Framework versions

- Transformers 4.19.0.dev0

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

| bf79477a68e2e877f0a887d499f698d2 |

ehcalabres/distilgpt2-abc-irish-music-generation | ehcalabres | gpt2 | 8 | 2 | transformers | 0 | text-generation | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 957 | false |


# distilgpt2-abc-irish-music-generation

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 10
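With `lr_scheduler_type: linear` and 500 warmup steps, the learning rate rises linearly from 0 to 5e-05 over the first 500 steps. It then decays linearly to 0 at the last training step. The sketch below mirrors the formula used by `transformers.get_linear_schedule_with_warmup`. The card does not report the total number of training steps, so `TOTAL_STEPS` is a hypothetical value:

```python
def linear_warmup_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at `step` for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = (total_steps - step) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, remaining)


TOTAL_STEPS = 10_000  # hypothetical: the card does not report the number of training steps
for step in (0, 250, 500, 5_250, 10_000):
    print(step, linear_warmup_lr(step, base_lr=5e-05, warmup_steps=500, total_steps=TOTAL_STEPS))
```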

### Training results

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

| 3d87e56014cc45606d4ec0a578849fab |

patrickvonplaten/wav2vec2-xls-r-100m-common_voice-tr-ft | patrickvonplaten | wav2vec2 | 21 | 15 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['tr'] | ['common_voice'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'common_voice', 'generated_from_trainer', 'xls_r_repro_common_voice_tr'] | true | true | true | 1,696 | false |


# wav2vec2-xls-r-100m-common_voice-tr-ft

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-100m](https://huggingface.co/facebook/wav2vec2-xls-r-100m) on the COMMON_VOICE - TR dataset.

It achieves the following results on the evaluation set:

- Loss: 3.4113

- Wer: 1.0

- Cer: 1.0
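WER is the word-level edit distance (substitutions + deletions + insertions) between hypothesis and reference, divided by the number of reference words. CER is the same measure at the character level. A WER and CER of exactly 1.0 throughout training can occur, for example, when the model emits empty transcriptions. That pattern suggests this run did not learn to transcribe, although the card does not say why. Here is a minimal pure-Python sketch with a hypothetical Turkish sentence:

```python
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (free if tokens match)
            )
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    return edit_distance(list(reference), list(hypothesis)) / len(reference)


# Hypothetical reference: "bugün hava çok güzel" ("the weather is very nice today").
print(wer("bugün hava çok güzel", "bugün hava güzel"))  # 0.25
print(wer("bugün hava çok güzel", ""))  # 1.0
```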

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 64

- total_eval_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 50.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer | Cer |
|:-------------:|:-----:|:----:|:---------------:|:---:|:---:|
| 3.1315 | 9.09 | 500 | 3.3832 | 1.0 | 1.0 |
| 3.1163 | 18.18 | 1000 | 3.4252 | 1.0 | 1.0 |
| 3.121 | 27.27 | 1500 | 3.4051 | 1.0 | 1.0 |
| 3.1273 | 36.36 | 2000 | 3.4345 | 1.0 | 1.0 |
| 3.2257 | 45.45 | 2500 | 3.4097 | 1.0 | 1.0 |

### Framework versions

- Transformers 4.13.0.dev0

- Pytorch 1.9.0+cu111

- Datasets 1.15.2.dev0

- Tokenizers 0.10.3

| 900bfb950215c058631f2889371496ac |

m3hrdadfi/albert-fa-base-v2-ner-peyma | m3hrdadfi | albert | 13 | 13 | transformers | 1 | token-classification | true | true | false | apache-2.0 | ['fa'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 3,272 | false |

# ALBERT Persian

A Lite BERT for Self-supervised Learning of Language Representations for the Persian Language

> You can call it "Little BERT" (برت_کوچولو).

[ALBERT-Persian](https://github.com/m3hrdadfi/albert-persian) is the first attempt at ALBERT for the Persian language. The model was trained based on Google's ALBERT BASE Version 2.0 over various writing styles from numerous subjects (e.g., scientific, novels, news) with more than 3.9M documents, 73M sentences, and 1.3B words, following the same approach as for ParsBERT.

Please follow the [ALBERT-Persian](https://github.com/m3hrdadfi/albert-persian) repo for the latest information about previous and current models.

## Persian NER [ARMAN, PEYMA]

This task aims to extract named entities from text, such as names, and label them with appropriate `NER` classes such as locations, organizations, etc. The datasets used for this task contain sentences that are marked in `IOB` format. In this format, tokens that are not part of an entity are tagged as `O`, the `B` tag corresponds to the first word of an entity, and the `I` tag corresponds to the remaining words of the same entity. Both `B` and `I` tags are followed by a hyphen (or underscore), followed by the entity category. Therefore, the NER task is a multi-class token classification problem that labels the tokens of a raw input text. There are two primary datasets used in Persian NER: `ARMAN` and `PEYMA`.
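To make the IOB scheme concrete, here is a minimal sketch that groups IOB-tagged tokens into entity spans. The tokens and tag spellings (`B-PER`, `I_ORG`, ...) are hypothetical. Because tags may use either a hyphen or an underscore, the sketch accepts both separators:

```python
from typing import List, Tuple


def iob_to_entities(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group IOB-tagged tokens into (entity_type, text) spans."""
    entities: List[Tuple[str, List[str]]] = []
    inside = False  # True while the previous token belonged to an entity
    for token, tag in zip(tokens, tags):
        if tag == "O":
            inside = False
            continue
        # "B-ORG" and "B_ORG" both split into prefix "B" and type "ORG".
        prefix, entity_type = tag[0], tag[2:]
        if prefix == "I" and inside and entities[-1][0] == entity_type:
            entities[-1][1].append(token)  # continue the current entity
        else:
            entities.append((entity_type, [token]))  # B tag, or an I tag that cannot continue
        inside = True
    return [(entity_type, " ".join(words)) for entity_type, words in entities]


# Hypothetical English tokens and tags, for illustration only.
tokens = ["Ali", "Rezaei", "visited", "Tehran", "University"]
tags = ["B-PER", "I-PER", "O", "B_ORG", "I_ORG"]
print(iob_to_entities(tokens, tags))  # [('PER', 'Ali Rezaei'), ('ORG', 'Tehran University')]
```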

### PEYMA

The PEYMA dataset includes 7,145 sentences with a total of 302,530 tokens, of which 41,148 tokens are tagged with seven different classes.

1. Organization

2. Money

3. Location

4. Date

5. Time

6. Person

7. Percent

| Label | # Tagged tokens |
|:------------:|:-----:|
| Organization | 16964 |
| Money | 2037 |
| Location | 8782 |
| Date | 4259 |
| Time | 732 |
| Person | 7675 |
| Percent | 699 |
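The per-class counts in the table add up to the 41,148 tagged tokens. They also show a strong class imbalance: Organization alone accounts for about 41% of the entity tokens, while Time and Percent each account for less than 2%. A quick check:

```python
# Tagged-token counts per class, copied from the PEYMA table.
peyma_counts = {
    "Organization": 16964,
    "Money": 2037,
    "Location": 8782,
    "Date": 4259,
    "Time": 732,
    "Person": 7675,
    "Percent": 699,
}

total = sum(peyma_counts.values())
print(total)  # 41148
for label, count in sorted(peyma_counts.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{label:<12} {count:>6} {count / total:6.1%}")
```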

**Download**

You can download the dataset from [here](http://nsurl.org/tasks/task-7-named-entity-recognition-ner-for-farsi/).

## Results

The following table compares the F1 score of ALBERT-fa-base-v2 with that of other models and architectures.

| Dataset | ALBERT-fa-base-v2 | ParsBERT-v1 | mBERT | MorphoBERT | Beheshti-NER | LSTM-CRF | Rule-Based CRF | BiLSTM-CRF |
|:-------:|:-----------------:|:-----------:|:-----:|:----------:|:------------:|:--------:|:--------------:|:----------:|
| PEYMA | 88.99 | 93.10 | 86.64 | - | 90.59 | - | 84.00 | - |

### BibTeX entry and citation info

Please cite this work in publications as follows:

```bibtex
@misc{ALBERTPersian,
  author = {Mehrdad Farahani},
  title = {ALBERT-Persian: A Lite BERT for Self-supervised Learning of Language Representations for the Persian Language},
  year = {2020},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/m3hrdadfi/albert-persian}},
}

@article{ParsBERT,
  title={ParsBERT: Transformer-based Model for Persian Language Understanding},
  author={Mehrdad Farahani and Mohammad Gharachorloo and Marzieh Farahani and Mohammad Manthouri},
  journal={ArXiv},
  year={2020},
  volume={abs/2005.12515}
}
```

## Questions?

Post a GitHub issue on the [ALBERT-Persian](https://github.com/m3hrdadfi/albert-persian) repo. | b30b2a19aa14486da9b3835c5782ec2f |

yhavinga/t5-v1_1-base-dutch-english-cased | yhavinga | t5 | 13 | 10 | transformers | 0 | text2text-generation | false | false | true | apache-2.0 | ['nl', 'en'] | ['yhavinga/mc4_nl_cleaned'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['t5', 'seq2seq'] | false | true | true | 26,879 | false |

# t5-v1_1-base-dutch-english-cased

A [T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) sequence-to-sequence model pre-trained from scratch on [cleaned Dutch 🇳🇱🇧🇪 mC4 and cleaned English 🇬🇧 C4](https://huggingface.co/datasets/yhavinga/mc4_nl_cleaned).

This **t5-v1.1** model has **247M** parameters.

It was pre-trained with a masked language modeling (denoising token span corruption) objective on the dataset `mc4_nl_cleaned` config `small_en_nl` for **10** epochs and a duration of **11d18h**, with a sequence length of **512**, batch size **128** and **2839630** total steps (**186B** tokens).
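The token count follows from the other pre-training figures: each step processes one batch of 128 sequences of 512 tokens. If every sequence is packed to the full length (an assumption, since padding would lower the real count), the total is steps × batch size × sequence length:

```python
total_steps = 2_839_630
batch_size = 128
seq_len = 512  # assumes every sequence is packed to the full 512 tokens

tokens = total_steps * batch_size * seq_len
print(f"{tokens:,}")  # 186,097,991,680
print(f"{tokens / 1e9:.0f}B")  # 186B
```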

Pre-training evaluation loss and accuracy are **1,11** and **0,75**.

Refer to the evaluation section below for a comparison of the pre-trained models on summarization and translation.

* Pre-trained T5 models need to be fine-tuned before they can be used for downstream tasks; therefore, the inference widget on the right has been turned off.
* For a demo of the Dutch CNN summarization models, head over to the Hugging Face Spaces for the **[Netherformer 📰](https://huggingface.co/spaces/flax-community/netherformer)** example application!

Please refer to the original T5 and Scale Efficiently papers for more information about the T5 architecture and configs. Note, however, that this model (t5-v1_1-base-dutch-english-cased) is unrelated to these projects and is not an 'official' checkpoint.

* **[Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/pdf/1910.10683.pdf)** by *Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, Peter J. Liu*.

* **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** by *Yi Tay, Mostafa Dehghani, Jinfeng Rao, William Fedus, Samira Abnar, Hyung Won Chung, Sharan Narang, Dani Yogatama, Ashish Vaswani, Donald Metzler*.

## Tokenizer

The model uses a cased SentencePiece tokenizer configured with the `Nmt, NFKC, Replace multi-space to single-space` normalizers and has 32003 tokens.

It was trained on Dutch and English with scripts from the Huggingface Transformers [Flax examples](https://github.com/huggingface/transformers/tree/master/examples/flax/language-modeling).

See [./raw/main/tokenizer.json](tokenizer.json) for details.

## Dataset(s)

All models listed below are pre-trained on [cleaned Dutch mC4](https://huggingface.co/datasets/yhavinga/mc4_nl_cleaned), which is the original mC4, except that:

* Documents that contained words from a selection of the Dutch and English [List of Dirty Naughty Obscene and Otherwise Bad Words](https://github.com/LDNOOBW/List-of-Dirty-Naughty-Obscene-and-Otherwise-Bad-Words) are removed
* Sentences with fewer than 3 words are removed
* Sentences with a word of more than 1000 characters are removed
* Documents with fewer than 5 sentences are removed
* Documents with "javascript", "lorum ipsum", "terms of use", "privacy policy", "cookie policy", "uses cookies", "use of cookies", "use cookies", "elementen ontbreken", "deze printversie" are removed.

The Dutch and English models are pre-trained on a 50/50 mix of Dutch mC4 and English C4.

The translation models are fine-tuned on [CCMatrix](https://huggingface.co/datasets/yhavinga/ccmatrix).

## Dutch T5 Models

Three types of [Dutch T5 models have been trained (blog)](https://huggingface.co/spaces/yhavinga/pre-training-dutch-t5-models).

`t5-base-dutch` is the only model with an original T5 config.

The other model types, t5-v1.1 and t5-eff, use `gated-gelu` instead of `relu` as activation function, and were trained with a dropout of `0.0` unless training would diverge (`t5-v1.1-large-dutch-cased`).

The t5-eff models differ in their number of layers. The table below lists the dimensions of these models. Not all t5-eff models are efficient; the best example is the inefficient `t5-xl-4L-dutch-english-cased`.

| | [t5-base-dutch](https://huggingface.co/yhavinga/t5-base-dutch) | [t5-v1.1-base-dutch-uncased](https://huggingface.co/yhavinga/t5-v1.1-base-dutch-uncased) | [t5-v1.1-base-dutch-cased](https://huggingface.co/yhavinga/t5-v1.1-base-dutch-cased) | [t5-v1.1-large-dutch-cased](https://huggingface.co/yhavinga/t5-v1.1-large-dutch-cased) | [t5-v1_1-base-dutch-english-cased](https://huggingface.co/yhavinga/t5-v1_1-base-dutch-english-cased) | [t5-v1_1-base-dutch-english-cased-1024](https://huggingface.co/yhavinga/t5-v1_1-base-dutch-english-cased-1024) | [t5-small-24L-dutch-english](https://huggingface.co/yhavinga/t5-small-24L-dutch-english) | [t5-xl-4L-dutch-english-cased](https://huggingface.co/yhavinga/t5-xl-4L-dutch-english-cased) | [t5-base-36L-dutch-english-cased](https://huggingface.co/yhavinga/t5-base-36L-dutch-english-cased) | [t5-eff-xl-8l-dutch-english-cased](https://huggingface.co/yhavinga/t5-eff-xl-8l-dutch-english-cased) | [t5-eff-large-8l-dutch-english-cased](https://huggingface.co/yhavinga/t5-eff-large-8l-dutch-english-cased) |
|:------------------|:----------------|:-----------------------------|:---------------------------|:----------------------------|:-----------------------------------|:----------------------------------------|:-----------------------------|:-------------------------------|:----------------------------------|:-----------------------------------|:--------------------------------------|
| *type* | t5 | t5-v1.1 | t5-v1.1 | t5-v1.1 | t5-v1.1 | t5-v1.1 | t5 eff | t5 eff | t5 eff | t5 eff | t5 eff |
| *d_model* | 768 | 768 | 768 | 1024 | 768 | 768 | 512 | 2048 | 768 | 1024 | 1024 |
| *d_ff* | 3072 | 2048 | 2048 | 2816 | 2048 | 2048 | 1920 | 5120 | 2560 | 16384 | 4096 |
| *num_heads* | 12 | 12 | 12 | 16 | 12 | 12 | 8 | 32 | 12 | 32 | 16 |
| *d_kv* | 64 | 64 | 64 | 64 | 64 | 64 | 64 | 64 | 64 | 128 | 64 |
| *num_layers* | 12 | 12 | 12 | 24 | 12 | 12 | 24 | 4 | 36 | 8 | 8 |
| *num parameters* | 223M | 248M | 248M | 783M | 248M | 248M | 250M | 585M | 729M | 1241M | 335M |
| *feed_forward_proj* | relu | gated-gelu | gated-gelu | gated-gelu | gated-gelu | gated-gelu | gated-gelu | gated-gelu | gated-gelu | gated-gelu | gated-gelu |
| *dropout* | 0.1 | 0.0 | 0.0 | 0.1 | 0.0 | 0.0 | 0.0 | 0.1 | 0.0 | 0.0 | 0.0 |
| *dataset* | mc4_nl_cleaned | mc4_nl_cleaned full | mc4_nl_cleaned full | mc4_nl_cleaned | mc4_nl_cleaned small_en_nl | mc4_nl_cleaned large_en_nl | mc4_nl_cleaned large_en_nl | mc4_nl_cleaned large_en_nl | mc4_nl_cleaned large_en_nl | mc4_nl_cleaned large_en_nl | mc4_nl_cleaned large_en_nl |
| *tr. seq len* | 512 | 1024 | 1024 | 512 | 512 | 1024 | 512 | 512 | 512 | 512 | 512 |
| *batch size* | 128 | 64 | 64 | 64 | 128 | 64 | 128 | 512 | 512 | 64 | 128 |
| *total steps* | 527500 | 1014525 | 1210154 | 1120k/2427498 | 2839630 | 1520k/3397024 | 851852 | 212963 | 212963 | 538k/1703705 | 851850 |
| *epochs* | 1 | 2 | 2 | 2 | 10 | 4 | 1 | 1 | 1 | 1 | 1 |
| *duration* | 2d9h | 5d5h | 6d6h | 8d13h | 11d18h | 9d1h | 4d10h | 6d1h | 17d15h | 4d 19h | 3d 23h |
| *optimizer* | adafactor | adafactor | adafactor | adafactor | adafactor | adafactor | adafactor | adafactor | adafactor | adafactor | adafactor |
| *lr* | 0.005 | 0.005 | 0.005 | 0.005 | 0.005 | 0.005 | 0.005 | 0.005 | 0.009 | 0.005 | 0.005 |
| *warmup* | 10000.0 | 10000.0 | 10000.0 | 10000.0 | 10000.0 | 5000.0 | 20000.0 | 2500.0 | 1000.0 | 1500.0 | 1500.0 |
| *eval loss* | 1,38 | 1,20 | 0,96 | 1,07 | 1,11 | 1,13 | 1,18 | 1,27 | 1,05 | 1,3019 | 1,15 |
| *eval acc* | 0,70 | 0,73 | 0,78 | 0,76 | 0,75 | 0,74 | 0,74 | 0,72 | 0,76 | 0,71 | 0,74 |

## Evaluation

Most models from the list above have been fine-tuned for summarization and translation.

The figure below shows the evaluation scores, where the x-axis shows the translation Bleu score (higher is better) and the y-axis the summarization Rouge1 score (higher is better). Point size is proportional to model size. Models with faster inference speed are plotted in green, slower ones in blue.

Evaluation was run on fine-tuned models trained with the following settings:

| | Summarization | Translation |
|---------------:|------------------|-------------------|
| Dataset | CNN Dailymail NL | CCMatrix en -> nl |
| #train samples | 50K | 50K |
| Optimizer | Adam | Adam |
| learning rate | 0.001 | 0.0005 |
| source length | 1024 | 128 |
| target length | 142 | 128 |
| label smoothing | 0.05 | 0.1 |
| #eval samples | 1000 | 1000 |

Note that the amount of training data is limited to a fraction of the total dataset sizes; therefore, the scores below can only be used to compare the 'transfer-learning' strength. The fine-tuned checkpoints for this evaluation are not saved, since they were trained only to compare the pre-trained models.

The numbers for summarization are the Rouge scores on 1000 documents from the test split.

| | [t5-base-dutch](https://huggingface.co/yhavinga/t5-base-dutch) | [t5-v1.1-base-dutch-uncased](https://huggingface.co/yhavinga/t5-v1.1-base-dutch-uncased) | [t5-v1.1-base-dutch-cased](https://huggingface.co/yhavinga/t5-v1.1-base-dutch-cased) | [t5-v1_1-base-dutch-english-cased](https://huggingface.co/yhavinga/t5-v1_1-base-dutch-english-cased) | [t5-v1_1-base-dutch-english-cased-1024](https://huggingface.co/yhavinga/t5-v1_1-base-dutch-english-cased-1024) | [t5-small-24L-dutch-english](https://huggingface.co/yhavinga/t5-small-24L-dutch-english) | [t5-xl-4L-dutch-english-cased](https://huggingface.co/yhavinga/t5-xl-4L-dutch-english-cased) | [t5-base-36L-dutch-english-cased](https://huggingface.co/yhavinga/t5-base-36L-dutch-english-cased) | [t5-eff-large-8l-dutch-english-cased](https://huggingface.co/yhavinga/t5-eff-large-8l-dutch-english-cased) | mt5-base |
|:------------------------|----------------:|-----------------------------:|---------------------------:|-----------------------------------:|----------------------------------------:|-----------------------------:|-------------------------------:|----------------------------------:|--------------------------------------:|-----------:|
| *rouge1* | 33.38 | 33.97 | 34.39 | 33.38 | 34.97 | 34.38 | 30.35 | **35.04** | 34.04 | 33.25 |
| *rouge2* | 13.32 | 13.85 | 13.98 | 13.47 | 14.01 | 13.89 | 11.57 | **14.23** | 13.76 | 12.74 |
| *rougeL* | 24.22 | 24.72 | 25.1 | 24.34 | 24.99 | **25.25** | 22.69 | 25.05 | 24.75 | 23.5 |
| *rougeLsum* | 30.23 | 30.9 | 31.44 | 30.51 | 32.01 | 31.38 | 27.5 | **32.12** | 31.12 | 30.15 |
| *samples_per_second* | 3.18 | 3.02 | 2.99 | 3.22 | 2.97 | 1.57 | 2.8 | 0.61 | **3.27** | 1.22 |

The models below have been evaluated for English-to-Dutch translation. Note that the first four models are pre-trained on Dutch only. That they still perform adequately is probably because the translation direction is English to Dutch.

The numbers reported are the Bleu scores on 1000 documents from the test split.

| | [t5-base-dutch](https://huggingface.co/yhavinga/t5-base-dutch) | [t5-v1.1-base-dutch-uncased](https://huggingface.co/yhavinga/t5-v1.1-base-dutch-uncased) | [t5-v1.1-base-dutch-cased](https://huggingface.co/yhavinga/t5-v1.1-base-dutch-cased) | [t5-v1.1-large-dutch-cased](https://huggingface.co/yhavinga/t5-v1.1-large-dutch-cased) | [t5-v1_1-base-dutch-english-cased](https://huggingface.co/yhavinga/t5-v1_1-base-dutch-english-cased) | [t5-v1_1-base-dutch-english-cased-1024](https://huggingface.co/yhavinga/t5-v1_1-base-dutch-english-cased-1024) | [t5-small-24L-dutch-english](https://huggingface.co/yhavinga/t5-small-24L-dutch-english) | [t5-xl-4L-dutch-english-cased](https://huggingface.co/yhavinga/t5-xl-4L-dutch-english-cased) | [t5-base-36L-dutch-english-cased](https://huggingface.co/yhavinga/t5-base-36L-dutch-english-cased) | [t5-eff-large-8l-dutch-english-cased](https://huggingface.co/yhavinga/t5-eff-large-8l-dutch-english-cased) | mt5-base |
|:-------------------------------|----------------:|-----------------------------:|---------------------------:|----------------------------:|-----------------------------------:|----------------------------------------:|-----------------------------:|-------------------------------:|----------------------------------:|--------------------------------------:|-----------:|
| *precision_ng1* | 74.17 | 78.09 | 77.08 | 72.12 | 77.19 | 78.76 | 78.59 | 77.3 | **79.75** | 78.88 | 73.47 |
| *precision_ng2* | 52.42 | 57.52 | 55.31 | 48.7 | 55.39 | 58.01 | 57.83 | 55.27 | **59.89** | 58.27 | 50.12 |
| *precision_ng3* | 39.55 | 45.2 | 42.54 | 35.54 | 42.25 | 45.13 | 45.02 | 42.06 | **47.4** | 45.95 | 36.59 |
| *precision_ng4* | 30.23 | 36.04 | 33.26 | 26.27 | 32.74 | 35.72 | 35.41 | 32.61 | **38.1** | 36.91 | 27.26 |
| *bp* | 0.99 | 0.98 | 0.97 | 0.98 | 0.98 | 0.98 | 0.98 | 0.97 | 0.98 | 0.98 | 0.98 |
| *score* | 45.88 | 51.21 | 48.31 | 41.59 | 48.17 | 51.31 | 50.82 | 47.83 | **53** | 51.79 | 42.74 |
| *samples_per_second* | **45.19** | 45.05 | 38.67 | 10.12 | 42.19 | 42.61 | 12.85 | 33.74 | 9.07 | 37.86 | 9.03 |
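The `score` row combines the four n-gram precisions and the brevity penalty in the standard BLEU formula, BLEU = bp × exp((log p1 + log p2 + log p3 + log p4) / 4). As a sanity check, plugging the `t5-base-dutch` column into the formula reproduces its score to within rounding. The small difference comes from `bp` being shown with only two decimals:

```python
import math
from typing import Sequence


def bleu_from_precisions(precisions: Sequence[float], brevity_penalty: float) -> float:
    """Corpus BLEU (in %) from n-gram precisions given in % and a brevity penalty."""
    # Geometric mean of the n-gram precisions, computed in log space.
    log_mean = sum(math.log(p / 100) for p in precisions) / len(precisions)
    return 100 * brevity_penalty * math.exp(log_mean)


# t5-base-dutch column: precision_ng1..ng4 and bp (bp is rounded in the table)
score = bleu_from_precisions([74.17, 52.42, 39.55, 30.23], brevity_penalty=0.99)
print(round(score, 2))  # close to the reported 45.88
```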

## Translation models

The models `t5-small-24L-dutch-english` and `t5-base-36L-dutch-english` have been fine-tuned for both language directions on the first 25M samples from CCMatrix, giving a total of 50M training samples.

Evaluation is performed on out-of-sample CCMatrix and also on Tatoeba and Opus Books.

The `_bp` rows list the *brevity penalty*. The `avg_bleu` score is the Bleu score averaged over all three evaluation datasets. The best scores are displayed in bold for both translation directions.

| | [t5-base-36L-ccmatrix-multi](https://huggingface.co/yhavinga/t5-base-36L-ccmatrix-multi) | [t5-base-36L-ccmatrix-multi](https://huggingface.co/yhavinga/t5-base-36L-ccmatrix-multi) | [t5-small-24L-ccmatrix-multi](https://huggingface.co/yhavinga/t5-small-24L-ccmatrix-multi) | [t5-small-24L-ccmatrix-multi](https://huggingface.co/yhavinga/t5-small-24L-ccmatrix-multi) |
|:-----------------------|:-----------------------------|:-----------------------------|:------------------------------|:------------------------------|
| *source_lang* | en | nl | en | nl |
| *target_lang* | nl | en | nl | en |
| *source_prefix* | translate English to Dutch: | translate Dutch to English: | translate English to Dutch: | translate Dutch to English: |
| *ccmatrix_bleu* | 56.8 | 62.8 | **57.4** | **63.1** |
| *tatoeba_bleu* | **46.6** | **52.8** | 46.4 | 51.7 |
| *opus_books_bleu* | **13.5** | **24.9** | 12.9 | 23.4 |
| *ccmatrix_bp* | 0.95 | 0.96 | 0.95 | 0.96 |
| *tatoeba_bp* | 0.97 | 0.94 | 0.98 | 0.94 |
| *opus_books_bp* | 0.8 | 0.94 | 0.77 | 0.89 |
| *avg_bleu* | **38.96** | **46.86** | 38.92 | 46.06 |
| *max_source_length* | 128 | 128 | 128 | 128 |
| *max_target_length* | 128 | 128 | 128 | 128 |
| *adam_beta1* | 0.9 | 0.9 | 0.9 | 0.9 |
| *adam_beta2* | 0.997 | 0.997 | 0.997 | 0.997 |
| *weight_decay* | 0.05 | 0.05 | 0.002 | 0.002 |
| *lr* | 5e-05 | 5e-05 | 0.0005 | 0.0005 |
| *label_smoothing_factor* | 0.15 | 0.15 | 0.1 | 0.1 |
| *train_batch_size* | 128 | 128 | 128 | 128 |
| *warmup_steps* | 2000 | 2000 | 2000 | 2000 |
| *total steps* | 390625 | 390625 | 390625 | 390625 |
| *duration* | 4d 5h | 4d 5h | 3d 2h | 3d 2h |
| *num parameters* | 729M | 729M | 250M | 250M |

## Acknowledgements

This project would not have been possible without compute generously provided by Google through the [TPU Research Cloud](https://sites.research.google/trc/). The HuggingFace 🤗 ecosystem was instrumental in all parts of the training. Weights & Biases made it possible to keep track of many training sessions and orchestrate hyper-parameter sweeps with insightful visualizations.

The following repositories were helpful in setting up the TPU-VM and in getting an idea of what sensible hyper-parameters are for training gpt2 from scratch:

* [Gsarti's Pretrain and Fine-tune a T5 model with Flax on GCP](https://github.com/gsarti/t5-flax-gcp)

* [Flax/Jax Community week t5-base-dutch](https://huggingface.co/flax-community/t5-base-dutch)

Created by [Yeb Havinga](https://www.linkedin.com/in/yeb-havinga-86530825/)

| ede0870192964f17c120c8a2e7b5c615 |

Sercan/wav2vec2-large-xls-r-300m-tr | Sercan | wav2vec2 | 13 | 10 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | null | ['common_voice'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 2,826 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-tr

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2891

- Wer: 0.4741
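*Wer* is the word error rate: the word-level edit distance between the model's transcription and the reference, divided by the number of reference words. The sketch below is a minimal pure-Python version of corpus-level WER. It sums errors over all utterances and then divides by the total reference word count, which is the usual aggregation. It is an illustration, not necessarily the exact metric code used by the training script, and it ignores text normalization. The helper names are hypothetical.

```python
from typing import List, Sequence


def word_edit_distance(ref: List[str], hyp: List[str]) -> int:
    """Levenshtein distance over words (substitutions + deletions + insertions)."""
    prev = list(range(len(hyp) + 1))  # distances for the empty reference prefix
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of ref[i - 1]
                curr[j - 1] + 1,     # insertion of hyp[j - 1]
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[len(hyp)]


def corpus_wer(references: Sequence[str], hypotheses: Sequence[str]) -> float:
    """Total word edits divided by total reference words."""
    if len(references) != len(hypotheses):
        raise ValueError("references and hypotheses must have the same length")
    errors = 0
    words = 0
    for ref, hyp in zip(references, hypotheses):
        ref_words, hyp_words = ref.split(), hyp.split()
        errors += word_edit_distance(ref_words, hyp_words)
        words += len(ref_words)
    if words == 0:
        raise ValueError("references contain no words")
    return errors / words
```

Under this definition, a WER of 0.4741 means roughly 47 word errors per 100 reference words.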

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 10

- mixed_precision_training: Native AMP
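The list above reads like arguments to the 🤗 Transformers `Trainer`. The sketch below shows one plausible way to express them as `TrainingArguments`. It assumes that *mixed_precision_training: Native AMP* corresponds to `fp16=True` and that training ran on a single device. `HYPERPARAMS`, `effective_batch_size` and `build_training_args` are hypothetical names.

The sketch also spells out the arithmetic behind *total_train_batch_size*: 16 examples per device × 2 gradient-accumulation steps = 32 examples per optimizer update.

```python
from typing import Any, Dict

# Values copied from the hyperparameter list above.
HYPERPARAMS: Dict[str, Any] = {
    "learning_rate": 3e-4,
    "per_device_train_batch_size": 16,
    "per_device_eval_batch_size": 8,
    "seed": 42,
    "gradient_accumulation_steps": 2,
    "adam_beta1": 0.9,
    "adam_beta2": 0.999,
    "adam_epsilon": 1e-8,
    "lr_scheduler_type": "linear",
    "warmup_steps": 500,
    "num_train_epochs": 10,
    "fp16": True,  # assumed mapping of "Native AMP"
}


def effective_batch_size(per_device: int, grad_accum: int, num_devices: int = 1) -> int:
    """Number of examples contributing to one optimizer update."""
    return per_device * grad_accum * num_devices


def build_training_args(output_dir: str = "wav2vec2-large-xls-r-300m-tr"):
    # Lazy import: transformers is only needed when the arguments are built.
    # fp16=True may raise an error on machines without a supported GPU.
    from transformers import TrainingArguments

    return TrainingArguments(output_dir=output_dir, **HYPERPARAMS)
```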

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |
|:-------------:|:-----:|:-----:|:---------------:|:------:|
| 5.4933 | 0.39 | 400 | 1.0543 | 0.9316 |
| 0.7039 | 0.78 | 800 | 0.6927 | 0.7702 |
| 0.4768 | 1.17 | 1200 | 0.4779 | 0.6774 |
| 0.4004 | 1.57 | 1600 | 0.4462 | 0.6450 |
| 0.3739 | 1.96 | 2000 | 0.4287 | 0.6296 |
| 0.317 | 2.35 | 2400 | 0.4395 | 0.6248 |
| 0.3027 | 2.74 | 2800 | 0.4052 | 0.6027 |
| 0.2633 | 3.13 | 3200 | 0.4026 | 0.5938 |
| 0.245 | 3.52 | 3600 | 0.3814 | 0.5902 |
| 0.2415 | 3.91 | 4000 | 0.3691 | 0.5708 |
| 0.2193 | 4.31 | 4400 | 0.3626 | 0.5623 |
| 0.2057 | 4.7 | 4800 | 0.3591 | 0.5551 |
| 0.1874 | 5.09 | 5200 | 0.3670 | 0.5512 |
| 0.1782 | 5.48 | 5600 | 0.3483 | 0.5406 |
| 0.1706 | 5.87 | 6000 | 0.3392 | 0.5338 |
| 0.153 | 6.26 | 6400 | 0.3189 | 0.5207 |
| 0.1493 | 6.65 | 6800 | 0.3185 | 0.5164 |
| 0.1381 | 7.05 | 7200 | 0.3199 | 0.5185 |
| 0.1244 | 7.44 | 7600 | 0.3082 | 0.4993 |
| 0.1182 | 7.83 | 8000 | 0.3122 | 0.4998 |
| 0.1136 | 8.22 | 8400 | 0.3003 | 0.4936 |
| 0.1047 | 8.61 | 8800 | 0.2945 | 0.4858 |
| 0.0986 | 9.0 | 9200 | 0.2827 | 0.4809 |
| 0.0925 | 9.39 | 9600 | 0.2894 | 0.4786 |
| 0.0885 | 9.78 | 10000 | 0.2891 | 0.4741 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.12.1+cu116

- Datasets 2.1.0

- Tokenizers 0.12.1

| 5332205fdd4d619957b1fcaa85769258 |

nepalprabin/xlm-roberta-base-finetuned-marc-en | nepalprabin | xlm-roberta | 12 | 3 | transformers | 0 | text-classification | true | false | false | mit | null | ['amazon_reviews_multi'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,274 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-marc-en

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the amazon_reviews_multi dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0442

- Mae: 0.5385
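*Mae* is the mean absolute error between predicted and reference labels. The card does not describe the metric further. For this review-rating task it is presumably computed on the star-rating class indices, which would mean predictions are off by roughly half a star on average; that interpretation is an assumption. A minimal sketch with a hypothetical helper name:

```python
from typing import Sequence


def mean_absolute_error(predictions: Sequence[int], references: Sequence[int]) -> float:
    """Average of |prediction - reference| over all examples."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        raise ValueError("need at least one example")
    total = sum(abs(p - r) for p, r in zip(predictions, references))
    return total / len(predictions)
```

Unlike accuracy, MAE penalizes a prediction two stars away twice as much as one a single star away, which suits ordinal labels such as ratings.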

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Mae |
|:-------------:|:-----:|:----:|:---------------:|:------:|
| 1.0371 | 1.0 | 1105 | 1.0522 | 0.5256 |
| 0.8925 | 2.0 | 2210 | 1.0442 | 0.5385 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.9.0+cu111

- Datasets 1.14.0

- Tokenizers 0.10.3

| 7b443cd392008f7884c434d87eef8335 |

nvidia/stt_rw_conformer_transducer_large | nvidia | null | 3 | 1 | nemo | 0 | automatic-speech-recognition | true | false | false | cc-by-4.0 | ['rw'] | ['mozilla-foundation/common_voice_9_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'speech', 'audio', 'Transducer', 'Conformer', 'Transformer', 'pytorch', 'NeMo', 'hf-asr-leaderboard'] | true | true | true | 5,579 | false |

# NVIDIA Conformer-Transducer Large (Kinyarwanda)

<style>

img {

display: inline;

}

</style>


This model transcribes speech into the lowercase Latin alphabet, including spaces and apostrophes, and is trained on around 2000 hours of Kinyarwanda speech data.

It is an autoregressive "large" variant of Conformer, with around 120 million parameters.

See the [model architecture](#model-architecture) section and [NeMo documentation](https://docs.nvidia.com/deeplearning/nemo/user-guide/docs/en/main/asr/models.html#conformer-transducer) for complete architecture details.

## Usage

The model is available for use in the NeMo toolkit [3], and can be used as a pre-trained checkpoint for inference or for fine-tuning on another dataset.

To train, fine-tune or play with the model, you will need to install [NVIDIA NeMo](https://github.com/NVIDIA/NeMo). We recommend installing it after you have installed the latest PyTorch version.

```shell
pip install nemo_toolkit['all']
```

### Automatically instantiate the model

```python
import nemo.collections.asr as nemo_asr

asr_model = nemo_asr.models.EncDecRNNTBPEModel.from_pretrained("nvidia/stt_rw_conformer_transducer_large")
```

### Transcribing using Python

Simply do:

```python
asr_model.transcribe(['<your_audio>.wav'])
```

### Transcribing many audio files

```shell
python [NEMO_GIT_FOLDER]/examples/asr/transcribe_speech.py \
  pretrained_name="nvidia/stt_rw_conformer_transducer_large" \
  audio_dir="<DIRECTORY CONTAINING AUDIO FILES>"
```

### Input

This model accepts 16 kHz mono-channel audio (WAV files) as input.
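Before calling `transcribe`, it can help to confirm that a file matches this format. The sketch below uses only Python's standard-library `wave` module, which reads uncompressed PCM WAV only. `check_wav_format` is a hypothetical helper. Resampling or down-mixing other audio is assumed to happen elsewhere, for example with a separate audio tool.

```python
import wave


def check_wav_format(path: str, expected_rate: int = 16000) -> None:
    """Raise ValueError unless `path` is a mono WAV file at `expected_rate` Hz."""
    with wave.open(path, "rb") as wav:
        channels = wav.getnchannels()
        rate = wav.getframerate()
    if channels != 1:
        raise ValueError(f"{path}: expected mono audio, got {channels} channels")
    if rate != expected_rate:
        raise ValueError(f"{path}: expected {expected_rate} Hz, got {rate} Hz")
```

Running this check over a directory before batch transcription surfaces format problems as explicit errors rather than as silently degraded transcripts.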

### Output

This model provides transcribed speech as a string for a given audio sample.

## Model Architecture

The Conformer-Transducer model is an autoregressive variant of the Conformer model [1] for automatic speech recognition that uses Transducer loss/decoding. More details on this model can be found here: [Conformer-Transducer Model](https://docs.nvidia.com/deeplearning/nemo/user-guide/docs/en/main/asr/models.html).
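In a transducer, each output token is predicted from the current acoustic frame *and* the tokens emitted so far; this is what makes it autoregressive. A special blank symbol advances decoding to the next frame. The toy sketch below shows greedy decoding under that scheme. It is a simplified illustration, not NeMo's implementation.

The following are assumptions made for the toy:

- The `joint` callable stands in for the prediction and joint networks.
- It receives the full token history rather than a recurrent state.
- The blank index is 0.
- Each frame emits at most `max_symbols_per_frame` tokens.

```python
from typing import Callable, List, Sequence

BLANK = 0  # assumed index of the blank symbol in this toy example

# joint(frame, history) -> one score per vocabulary entry (blank included)
JointFn = Callable[[object, List[int]], Sequence[float]]


def greedy_transducer_decode(
    encoder_frames: Sequence[object],
    joint: JointFn,
    max_symbols_per_frame: int = 10,
) -> List[int]:
    """Greedy transducer decoding over a sequence of encoder frames."""
    tokens: List[int] = []
    for frame in encoder_frames:
        # Emit tokens for this frame until blank wins (or the cap is hit).
        for _ in range(max_symbols_per_frame):
            scores = joint(frame, tokens)
            best = max(range(len(scores)), key=lambda k: scores[k])
            if best == BLANK:
                break  # blank: move on to the next encoder frame
            tokens.append(best)  # non-blank: emit it and condition on it next step
    return tokens


def toy_joint(frame: int, history: List[int]) -> List[float]:
    """Toy joint: each frame 'contains' one token id (0 means silence)."""
    scores = [0.0] * 6
    if frame == 0 or (history and history[-1] == frame):
        scores[BLANK] = 1.0
    else:
        scores[frame] = 1.0
    return scores
```

For example, `greedy_transducer_decode([3, 0, 5, 5, 2], toy_joint)` returns `[3, 5, 2]`. The second frame `5` yields blank only because the emitted history already ends in `5`, so the output depends on earlier predictions and not just on the current frame.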

## Training

The NeMo toolkit [3] was used to train the models for several hundred epochs. These models were trained with this [example script](https://github.com/NVIDIA/NeMo/blob/main/examples/asr/asr_transducer/speech_to_text_rnnt_bpe.py) and this [base config](https://github.com/NVIDIA/NeMo/blob/main/examples/asr/conf/conformer/conformer_transducer_bpe.yaml).

The vocabulary we use contains 28 characters:

```python
[' ', "'", 'a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j', 'k', 'l', 'm', 'n', 'o', 'p', 'q', 'r', 's', 't', 'u', 'v', 'w', 'x', 'y', 'z']
```

Rare symbols with diacritics were replaced during preprocessing.
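The card does not say how diacritics and other rare symbols were replaced. The sketch below shows one plausible way to map arbitrary text onto the 28-symbol vocabulary. It assumes that:

- Unicode NFKD decomposition is an acceptable way to strip accents.
- Typographic apostrophes (’) should become `'`.
- Any other whitespace becomes a space.
- Every other out-of-vocabulary symbol is simply dropped.

`normalize_transcript` is a hypothetical helper, not part of NeMo.

```python
import unicodedata

# The 28-symbol vocabulary listed above: space, apostrophe, and a-z.
VOCAB = set(" '" + "abcdefghijklmnopqrstuvwxyz")


def normalize_transcript(text: str) -> str:
    """Lowercase, strip diacritics, drop out-of-vocabulary symbols, squeeze spaces."""
    text = text.replace("\u2019", "'").lower()
    # NFKD splits e.g. "é" into "e" plus a combining accent, which is then dropped.
    decomposed = unicodedata.normalize("NFKD", text)
    chars = []
    for ch in decomposed:
        if ch.isspace():
            chars.append(" ")  # tabs/newlines become plain spaces
        elif ch in VOCAB:
            chars.append(ch)
    return " ".join("".join(chars).split())
```

For example, `normalize_transcript("Muraho, Mwiriwe!")` returns `"muraho mwiriwe"`.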

The tokenizers for these models were built using the text transcripts of the train set with this [script](https://github.com/NVIDIA/NeMo/blob/main/scripts/tokenizers/process_asr_text_tokenizer.py).

For the vocabulary of size 1024, we restrict the maximum subtoken length to 4 symbols to avoid populating the vocabulary with specific frequent words from the dataset. This does not affect model performance and potentially helps the model adapt to other domains without retraining the tokenizer.

The full config can be found inside the .nemo files.

### Datasets

All the models in this collection are trained on the MCV-9.0 Kinyarwanda dataset, which contains around 2000 hours of training, 32 hours of development and 32 hours of test speech audio.

## Performance

The available models in this collection are listed in the following table. The performance of the ASR models is reported in terms of Word Error Rate (WER%) with greedy decoding.

| Version | Tokenizer | Vocabulary Size | Dev WER | Test WER | Train Dataset |
|---------|-----------------------|-----------------|---------|----------|-------------------|
| 1.11.0 | SentencePiece BPE, maxlen=4 | 1024 | 13.82 | 16.19 | MCV-9.0 Train set |

## Limitations

Since this model was trained on publicly available speech datasets, the performance of this model might degrade for speech which includes technical terms, or vernacular that the model has not been trained on. The model might also perform worse for accented speech.

## Deployment with NVIDIA Riva

[NVIDIA Riva](https://developer.nvidia.com/riva) is an accelerated speech AI SDK deployable on-prem, in all clouds, multi-cloud, hybrid, on edge, and embedded.

Additionally, Riva provides:

* World-class out-of-the-box accuracy for the most common languages with model checkpoints trained on proprietary data with hundreds of thousands of GPU-compute hours