repo_id

stringlengths 4

110

| author

stringlengths 2

27

⌀ | model_type

stringlengths 2

29

⌀ | files_per_repo

int64 2

15.4k

| downloads_30d

int64 0

19.9M

| library

stringlengths 2

37

⌀ | likes

int64 0

4.34k

| pipeline

stringlengths 5

30

⌀ | pytorch

bool 2

classes | tensorflow

bool 2

classes | jax

bool 2

classes | license

stringlengths 2

30

| languages

stringlengths 4

1.63k

⌀ | datasets

stringlengths 2

2.58k

⌀ | co2

stringclasses 29

values | prs_count

int64 0

125

| prs_open

int64 0

120

| prs_merged

int64 0

15

| prs_closed

int64 0

28

| discussions_count

int64 0

218

| discussions_open

int64 0

148

| discussions_closed

int64 0

70

| tags

stringlengths 2

513

| has_model_index

bool 2

classes | has_metadata

bool 1

class | has_text

bool 1

class | text_length

int64 401

598k

| is_nc

bool 1

class | readme

stringlengths 0

598k

| hash

stringlengths 32

32

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

othrif/wav2vec2-large-xlsr-arabic | othrif | wav2vec2 | 11 | 49 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['ar'] | ['common_voice'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['audio', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week'] | true | true | true | 3,781 | false |

# Wav2Vec2-Large-XLSR-53-Arabic

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on Arabic using the [Common Voice](https://huggingface.co/datasets/common_voice).

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "ar", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("othrif/wav2vec2-large-xlsr-arabic")

model = Wav2Vec2ForCTC.from_pretrained("othrif/wav2vec2-large-xlsr-arabic")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the audio files as arrays

def speech_file_to_array_fn(batch):

speech_array, sampling_rate = torchaudio.load(batch["path"])

batch["speech"] = resampler(speech_array).squeeze().numpy()

return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():

logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))

print("Reference:", test_dataset["sentence"][:2])

```

## Evaluation

The model can be evaluated as follows on the Arabic test data of Common Voice.

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import re

test_dataset = load_dataset("common_voice", "ar", split="test")

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("othrif/wav2vec2-large-xlsr-arabic")

model = Wav2Vec2ForCTC.from_pretrained("othrif/wav2vec2-large-xlsr-arabic")

model.to("cuda")

chars_to_ignore_regex = '[\\\\\\\\\\\\\\\\؛\\\\\\\\\\\\\\\\—\\\\\\\\\\\\\\\\_get\\\\\\\\\\\\\\\\«\\\\\\\\\\\\\\\\»\\\\\\\\\\\\\\\\ـ\\\\\\\\\\\\\\\\ـ\\\\\\\\\\\\\\\\,\\\\\\\\\\\\\\\\?\\\\\\\\\\\\\\\\.\\\\\\\\\\\\\\\\!\\\\\\\\\\\\\\\\-\\\\\\\\\\\\\\\\;\\\\\\\\\\\\\\\\:\\\\\\\\\\\\\\\\"\\\\\\\\\\\\\\\\“\\\\\\\\\\\\\\\\%\\\\\\\\\\\\\\\\‘\\\\\\\\\\\\\\\\”\\\\\\\\\\\\\\\\�\\\\\\\\\\\\\\\\#\\\\\\\\\\\\\\\\،\\\\\\\\\\\\\\\\☭,\\\\\\\\\\\\\\\\؟]'

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the audio files as arrays

def speech_file_to_array_fn(batch):

batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()

speech_array, sampling_rate = torchaudio.load(batch["path"])

batch["speech"] = resampler(speech_array).squeeze().numpy()

return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Preprocessing the datasets.

# We need to read the audio files as arrays

def evaluate(batch):

inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():

logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits

pred_ids = torch.argmax(logits, dim=-1)

batch["pred_strings"] = processor.batch_decode(pred_ids)

return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 46.77

## Training

The Common Voice `train`, `validation` datasets were used for training.

The script used for training can be found [here](https://huggingface.co/othrif/wav2vec2-large-xlsr-arabic/tree/main) | 76c3ffc1cdc540af88204e45603b4913 |

jonatasgrosman/exp_w2v2t_ar_vp-es_s601 | jonatasgrosman | wav2vec2 | 10 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['ar'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'ar'] | false | true | true | 469 | false | # exp_w2v2t_ar_vp-es_s601

Fine-tuned [facebook/wav2vec2-large-es-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-es-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (ar)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 77ce0563d4dfa7fb1517aaa232359395 |

muhtasham/tiny-mlm-glue-wnli-target-glue-wnli | muhtasham | bert | 10 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,439 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tiny-mlm-glue-wnli-target-glue-wnli

This model is a fine-tuned version of [muhtasham/tiny-mlm-glue-wnli](https://huggingface.co/muhtasham/tiny-mlm-glue-wnli) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 2.1020

- Accuracy: 0.1127

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant

- training_steps: 5000

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6885 | 25.0 | 500 | 0.7726 | 0.2394 |

| 0.658 | 50.0 | 1000 | 1.1609 | 0.0986 |

| 0.6084 | 75.0 | 1500 | 1.6344 | 0.1127 |

| 0.5481 | 100.0 | 2000 | 2.1020 | 0.1127 |

### Framework versions

- Transformers 4.26.0.dev0

- Pytorch 1.13.0+cu116

- Datasets 2.8.1.dev0

- Tokenizers 0.13.2

| 898aeba87e63faa466ca6aba338358b5 |

underactuated/opt-350m_mle | underactuated | opt | 10 | 0 | transformers | 0 | text-generation | true | false | false | other | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 884 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# opt-350m_mle

This model is a fine-tuned version of [facebook/opt-350m](https://huggingface.co/facebook/opt-350m) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Framework versions

- Transformers 4.25.1

- Pytorch 1.12.1

- Datasets 2.8.0

- Tokenizers 0.13.2

| 5990be6bc8a9c76224f11a7319011f6b |

NouRed/distilbert_ner_wnut17 | NouRed | distilbert | 10 | 12 | transformers | 0 | token-classification | true | false | false | apache-2.0 | null | ['wnut_17'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 987 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert_ner_wnut17

This model is a fine-tuned version of [distilbert-base-cased](https://huggingface.co/distilbert-base-cased) on [WNUT-17](https://huggingface.co/datasets/wnut_17) dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 3

### Training results

### Framework versions

- Transformers 4.23.0

- Pytorch 1.12.1+cu113

- Tokenizers 0.13.1

| 10bd956bbb9c3d049caec848d5ac9693 |

href/gpt2-schiappa | href | gpt2 | 9 | 8 | transformers | 0 | text-generation | true | false | false | unknown | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 586 | false |

# Schiappa-Minelli GPT-2

Pourquoi pas ?

## Dataset

- Marianne est déchainée, de Marlène Schiappa

- Osez les sexfriends, Marie Minelli

- Osez réussir votre divorce, Marie Minelli

- Sexe, mensonge et banlieues chaudes, Marie Minelli

## Versions

V1:

- Fine-tunée avec [Max Woolf's "aitextgen — Train a GPT-2 (or GPT Neo)" colab](https://colab.research.google.com/drive/15qBZx5y9rdaQSyWpsreMDnTiZ5IlN0zD?usp=sharing)

- Depuis le modèle gpt-2 124M [aquadzn/gpt2-french](https://github.com/aquadzn/gpt2-french/), version romans.

- ~50 minutes on Colab Pro, P100 GPU, 3 batchs, 500 steps | f2ff0b139f0f8b7166b00c504a397cdf |

salesken/natural_rephrase | salesken | gpt2 | 10 | 63 | transformers | 1 | text-generation | true | false | true | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,926 | false |

NLG model trained on the rephrase generation dataset published by Fb

Paper : https://research.fb.com/wp-content/uploads/2020/12/Sound-Natural-Content-Rephrasing-in-Dialog-Systems.pdf

Paper Abstract :

" We introduce a new task of rephrasing for a more natural virtual assistant. Currently, vir- tual assistants work in the paradigm of intent- slot tagging and the slot values are directly passed as-is to the execution engine. However, this setup fails in some scenarios such as mes- saging when the query given by the user needs to be changed before repeating it or sending it to another user. For example, for queries like ‘ask my wife if she can pick up the kids’ or ‘re- mind me to take my pills’, we need to rephrase the content to ‘can you pick up the kids’and

‘take your pills’. In this paper, we study the problem of rephrasing with messaging as a use case and release a dataset of 3000 pairs of original query and rephrased query.. "

Training data :

http://dl.fbaipublicfiles.com/rephrasing/rephrasing_dataset.tar.gz

```python

from transformers import AutoTokenizer, AutoModelWithLMHead

tokenizer = AutoTokenizer.from_pretrained("salesken/natural_rephrase")

model = AutoModelWithLMHead.from_pretrained("salesken/natural_rephrase")

Input_query="Hey Siri, Send message to mom to say thank you for the delicious dinner yesterday"

query= Input_query + " ~~ "

input_ids = tokenizer.encode(query.lower(), return_tensors='pt')

sample_outputs = model.generate(input_ids,

do_sample=True,

num_beams=1,

max_length=len(Input_query),

temperature=0.2,

top_k = 10,

num_return_sequences=1)

for i in range(len(sample_outputs)):

result = tokenizer.decode(sample_outputs[i], skip_special_tokens=True).split('||')[0].split('~~')[1]

print(result)

```

| f616747f57a3d119cd4c56b969c068d4 |

SirVeggie/cutesexyrobutts | SirVeggie | null | 7 | 0 | null | 10 | null | false | false | false | creativeml-openrail-m | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,554 | false |

# Cutesexyrobutts stable diffusion model

Original artist: Cutesexyrobutts\

Patreon: https://www.patreon.com/cutesexyrobutts

## Basic explanation

Token and Class words are what guide the AI to produce images similar to the trained style/object/character.

Include any mix of these words in the prompt to produce verying results, or exclude them to have a less pronounced effect.

There is usually at least a slight stylistic effect even without the words, but it is recommended to include at least one.

Adding token word/phrase class word/phrase at the start of the prompt in that order produces results most similar to the trained concept, but they can be included elsewhere as well. Some models produce better results when not including all token/class words.

3k models are are more flexible, while 5k models produce images closer to the trained concept.

I recommend 2k/3k models for normal use, and 5k/6k models for model merging and use without token/class words.

However it can be also very prompt specific. I highly recommend self-experimentation.

These models are subject to the same legal concerns as their base models.

## Comparison

Epoch 5 version was earlier in the waifu diffusion 1.3 training process, so it is easier to produce more varied, non anime, results.

Robutts-any is the newest and best model.

## robutts-any

```

token: m_robutts

class: illustration style

base: anything v3

```

## robutts

```

token: §

class: robutts

base: waifu diffusion 1.3

```

## robutts_e5

```

token: §

class: robutts

base: waifu diffusion 1.3-e5

```

| 5d27b91fd03fc2e98d20901d9a206409 |

Helsinki-NLP/opus-mt-ss-en | Helsinki-NLP | marian | 10 | 22 | transformers | 0 | translation | true | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 768 | false |

### opus-mt-ss-en

* source languages: ss

* target languages: en

* OPUS readme: [ss-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/ss-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/ss-en/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/ss-en/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/ss-en/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.ss.en | 30.9 | 0.478 |

| 154d295745ff1e4054b05cd531184a00 |

google/t5-efficient-base-dl4 | google | t5 | 12 | 32 | transformers | 0 | text2text-generation | true | true | true | apache-2.0 | ['en'] | ['c4'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['deep-narrow'] | false | true | true | 6,247 | false |

# T5-Efficient-BASE-DL4 (Deep-Narrow version)

T5-Efficient-BASE-DL4 is a variation of [Google's original T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) following the [T5 model architecture](https://huggingface.co/docs/transformers/model_doc/t5).

It is a *pretrained-only* checkpoint and was released with the

paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)**

by *Yi Tay, Mostafa Dehghani, Jinfeng Rao, William Fedus, Samira Abnar, Hyung Won Chung, Sharan Narang, Dani Yogatama, Ashish Vaswani, Donald Metzler*.

In a nutshell, the paper indicates that a **Deep-Narrow** model architecture is favorable for **downstream** performance compared to other model architectures

of similar parameter count.

To quote the paper:

> We generally recommend a DeepNarrow strategy where the model’s depth is preferentially increased

> before considering any other forms of uniform scaling across other dimensions. This is largely due to

> how much depth influences the Pareto-frontier as shown in earlier sections of the paper. Specifically, a

> tall small (deep and narrow) model is generally more efficient compared to the base model. Likewise,

> a tall base model might also generally more efficient compared to a large model. We generally find

> that, regardless of size, even if absolute performance might increase as we continue to stack layers,

> the relative gain of Pareto-efficiency diminishes as we increase the layers, converging at 32 to 36

> layers. Finally, we note that our notion of efficiency here relates to any one compute dimension, i.e.,

> params, FLOPs or throughput (speed). We report all three key efficiency metrics (number of params,

> FLOPS and speed) and leave this decision to the practitioner to decide which compute dimension to

> consider.

To be more precise, *model depth* is defined as the number of transformer blocks that are stacked sequentially.

A sequence of word embeddings is therefore processed sequentially by each transformer block.

## Details model architecture

This model checkpoint - **t5-efficient-base-dl4** - is of model type **Base** with the following variations:

- **dl** is **4**

It has **147.4** million parameters and thus requires *ca.* **589.62 MB** of memory in full precision (*fp32*)

or **294.81 MB** of memory in half precision (*fp16* or *bf16*).

A summary of the *original* T5 model architectures can be seen here:

| Model | nl (el/dl) | ff | dm | kv | nh | #Params|

| ----| ---- | ---- | ---- | ---- | ---- | ----|

| Tiny | 4/4 | 1024 | 256 | 32 | 4 | 16M|

| Mini | 4/4 | 1536 | 384 | 32 | 8 | 31M|

| Small | 6/6 | 2048 | 512 | 32 | 8 | 60M|

| Base | 12/12 | 3072 | 768 | 64 | 12 | 220M|

| Large | 24/24 | 4096 | 1024 | 64 | 16 | 738M|

| Xl | 24/24 | 16384 | 1024 | 128 | 32 | 3B|

| XXl | 24/24 | 65536 | 1024 | 128 | 128 | 11B|

whereas the following abbreviations are used:

| Abbreviation | Definition |

| ----| ---- |

| nl | Number of transformer blocks (depth) |

| dm | Dimension of embedding vector (output vector of transformers block) |

| kv | Dimension of key/value projection matrix |

| nh | Number of attention heads |

| ff | Dimension of intermediate vector within transformer block (size of feed-forward projection matrix) |

| el | Number of transformer blocks in the encoder (encoder depth) |

| dl | Number of transformer blocks in the decoder (decoder depth) |

| sh | Signifies that attention heads are shared |

| skv | Signifies that key-values projection matrices are tied |

If a model checkpoint has no specific, *el* or *dl* than both the number of encoder- and decoder layers correspond to *nl*.

## Pre-Training

The checkpoint was pretrained on the [Colossal, Cleaned version of Common Crawl (C4)](https://huggingface.co/datasets/c4) for 524288 steps using

the span-based masked language modeling (MLM) objective.

## Fine-Tuning

**Note**: This model is a **pretrained** checkpoint and has to be fine-tuned for practical usage.

The checkpoint was pretrained in English and is therefore only useful for English NLP tasks.

You can follow on of the following examples on how to fine-tune the model:

*PyTorch*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/pytorch/summarization)

- [Question Answering](https://github.com/huggingface/transformers/blob/master/examples/pytorch/question-answering/run_seq2seq_qa.py)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/pytorch/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*Tensorflow*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/tensorflow/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

*JAX/Flax*:

- [Summarization](https://github.com/huggingface/transformers/tree/master/examples/flax/summarization)

- [Text Classification](https://github.com/huggingface/transformers/tree/master/examples/flax/text-classification) - *Note*: You will have to slightly adapt the training example here to make it work with an encoder-decoder model.

## Downstream Performance

TODO: Add table if available

## Computational Complexity

TODO: Add table if available

## More information

We strongly recommend the reader to go carefully through the original paper **[Scale Efficiently: Insights from Pre-training and Fine-tuning Transformers](https://arxiv.org/abs/2109.10686)** to get a more nuanced understanding of this model checkpoint.

As explained in the following [issue](https://github.com/google-research/google-research/issues/986#issuecomment-1035051145), checkpoints including the *sh* or *skv*

model architecture variations have *not* been ported to Transformers as they are probably of limited practical usage and are lacking a more detailed description. Those checkpoints are kept [here](https://huggingface.co/NewT5SharedHeadsSharedKeyValues) as they might be ported potentially in the future. | 38a7b78982c1bf80a179bf31d999988c |

FredZhang7/distilgpt2-stable-diffusion | FredZhang7 | gpt2 | 8 | 25 | transformers | 4 | text-generation | true | false | false | creativeml-openrail-m | null | ['FredZhang7/krea-ai-prompts', 'Gustavosta/Stable-Diffusion-Prompts', 'bartman081523/stable-diffusion-discord-prompts'] | null | 0 | 0 | 0 | 0 | 2 | 0 | 2 | ['stable-diffusion', 'prompt-generator', 'distilgpt2'] | false | true | true | 1,834 | false | # DistilGPT2 Stable Diffusion Model Card

<a href="https://huggingface.co/FredZhang7/distilgpt2-stable-diffusion-v2"> <font size="4"> <bold> Version 2 is here! </bold> </font> </a>

DistilGPT2 Stable Diffusion is a text generation model used to generate creative and coherent prompts for text-to-image models, given any text.

This model was finetuned on 2.03 million descriptive stable diffusion prompts from [Stable Diffusion discord](https://huggingface.co/datasets/bartman081523/stable-diffusion-discord-prompts), [Lexica.art](https://huggingface.co/datasets/Gustavosta/Stable-Diffusion-Prompts), and (my hand-picked) [Krea.ai](https://huggingface.co/datasets/FredZhang7/krea-ai-prompts). I filtered the hand-picked prompts based on the output results from Stable Diffusion v1.4.

Compared to other prompt generation models using GPT2, this one runs with 50% faster forwardpropagation and 40% less disk space & RAM.

### PyTorch

```bash

pip install --upgrade transformers

```

```python

from transformers import GPT2Tokenizer, GPT2LMHeadModel

# load the pretrained tokenizer

tokenizer = GPT2Tokenizer.from_pretrained('distilgpt2')

tokenizer.add_special_tokens({'pad_token': '[PAD]'})

tokenizer.max_len = 512

# load the fine-tuned model

model = GPT2LMHeadModel.from_pretrained('FredZhang7/distilgpt2-stable-diffusion')

# generate text using fine-tuned model

from transformers import pipeline

nlp = pipeline('text-generation', model=model, tokenizer=tokenizer)

ins = "a beautiful city"

# generate 10 samples

outs = nlp(ins, max_length=80, num_return_sequences=10)

# print the 10 samples

for i in range(len(outs)):

outs[i] = str(outs[i]['generated_text']).replace(' ', '')

print('\033[96m' + ins + '\033[0m')

print('\033[93m' + '\n\n'.join(outs) + '\033[0m')

```

Example Output:

| a70eb09d9bd77c64413f4e00ff618a04 |

nsaghatelyan/blue-back-pack | nsaghatelyan | null | 19 | 1 | diffusers | 0 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['text-to-image', 'stable-diffusion'] | false | true | true | 428 | false | ### blue_back_pack Dreambooth model trained by nsaghatelyan with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

| a8a69981e5b839a84565bd25dd6ec1e4 |

harmonai/jmann-small-190k | harmonai | null | 6 | 1,071 | diffusers | 0 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['audio-generation'] | false | true | true | 1,319 | false |

[Dance Diffusion](https://github.com/Harmonai-org/sample-generator) is now available in 🧨 Diffusers.

## FP32

```python

# !pip install diffusers[torch] accelerate scipy

from diffusers import DiffusionPipeline

from scipy.io.wavfile import write

model_id = "harmonai/jmann-small-190k"

pipe = DiffusionPipeline.from_pretrained(model_id)

pipe = pipe.to("cuda")

audios = pipe(audio_length_in_s=4.0).audios

# To save locally

for i, audio in enumerate(audios):

write(f"test_{i}.wav", pipe.unet.sample_rate, audio.transpose())

# To dislay in google colab

import IPython.display as ipd

for audio in audios:

display(ipd.Audio(audio, rate=pipe.unet.sample_rate))

```

## FP16

Faster at a small loss of quality

```python

# !pip install diffusers[torch] accelerate scipy

from diffusers import DiffusionPipeline

from scipy.io.wavfile import write

import torch

model_id = "harmonai/jmann-small-190k"

pipe = DiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

audios = pipeline(audio_length_in_s=4.0).audios

# To save locally

for i, audio in enumerate(audios):

write(f"{i}.wav", pipe.unet.sample_rate, audio.transpose())

# To dislay in google colab

import IPython.display as ipd

for audio in audios:

display(ipd.Audio(audio, rate=pipe.unet.sample_rate))

``` | 6e04fa21ba23c1a6da49c4a97f2dbb4b |

prompthero/openjourney-v2 | prompthero | null | 19 | 41,272 | diffusers | 452 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 13 | 4 | 6 | 2 | 19 | 16 | 3 | ['stable-diffusion', 'text-to-image'] | false | true | true | 552 | false |

# Openjourney v2 is an open source Stable Diffusion fine tuned model on +60k Midjourney images, by [PromptHero](https://prompthero.com/?utm_source=huggingface&utm_medium=referral)

This repo is for testing the first Openjourney fine tuned model.

It was trained over Stable Diffusion 1.5 with +60000 images, 4500 steps and 3 epochs.

So "mdjrny-v4 style" is not necessary anymore (yay!)

# Openjourney Links

- [Lora version](https://huggingface.co/prompthero/openjourney-lora)

- [Openjourney Dreambooth](https://huggingface.co/prompthero/openjourney) | 36cb5dba81a7a7f5fc83e90c2cc5ec68 |

Helsinki-NLP/opus-mt-vi-it | Helsinki-NLP | marian | 11 | 17 | transformers | 0 | translation | true | true | false | apache-2.0 | ['vi', 'it'] | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 2,016 | false |

### vie-ita

* source group: Vietnamese

* target group: Italian

* OPUS readme: [vie-ita](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/vie-ita/README.md)

* model: transformer-align

* source language(s): vie

* target language(s): ita

* model: transformer-align

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* download original weights: [opus-2020-06-17.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/vie-ita/opus-2020-06-17.zip)

* test set translations: [opus-2020-06-17.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/vie-ita/opus-2020-06-17.test.txt)

* test set scores: [opus-2020-06-17.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/vie-ita/opus-2020-06-17.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.vie.ita | 31.2 | 0.548 |

### System Info:

- hf_name: vie-ita

- source_languages: vie

- target_languages: ita

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/vie-ita/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['vi', 'it']

- src_constituents: {'vie', 'vie_Hani'}

- tgt_constituents: {'ita'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/vie-ita/opus-2020-06-17.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/vie-ita/opus-2020-06-17.test.txt

- src_alpha3: vie

- tgt_alpha3: ita

- short_pair: vi-it

- chrF2_score: 0.5479999999999999

- bleu: 31.2

- brevity_penalty: 0.932

- ref_len: 1774.0

- src_name: Vietnamese

- tgt_name: Italian

- train_date: 2020-06-17

- src_alpha2: vi

- tgt_alpha2: it

- prefer_old: False

- long_pair: vie-ita

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 | bde7131f54d21defc536d02d1b8f8f4c |

nimrah/wav2vec2-large-xls-r-300m-turkish-colab | nimrah | wav2vec2 | 15 | 7 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | null | ['common_voice'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,413 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-large-xls-r-300m-turkish-colab

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the common_voice dataset.

It achieves the following results on the evaluation set:

- Loss: 3.2970

- Wer: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.1

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:---:|

| 6.1837 | 3.67 | 400 | 3.2970 | 1.0 |

| 0.0 | 7.34 | 800 | 3.2970 | 1.0 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.18.3

- Tokenizers 0.10.3

| e589ce77b8310d22241093db1aab087a |

NimaBoscarino/IS-Net_DIS-general-use | NimaBoscarino | null | 3 | 0 | null | 0 | image-segmentation | false | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['background-removal', 'computer-vision', 'image-segmentation'] | false | true | true | 1,099 | false |

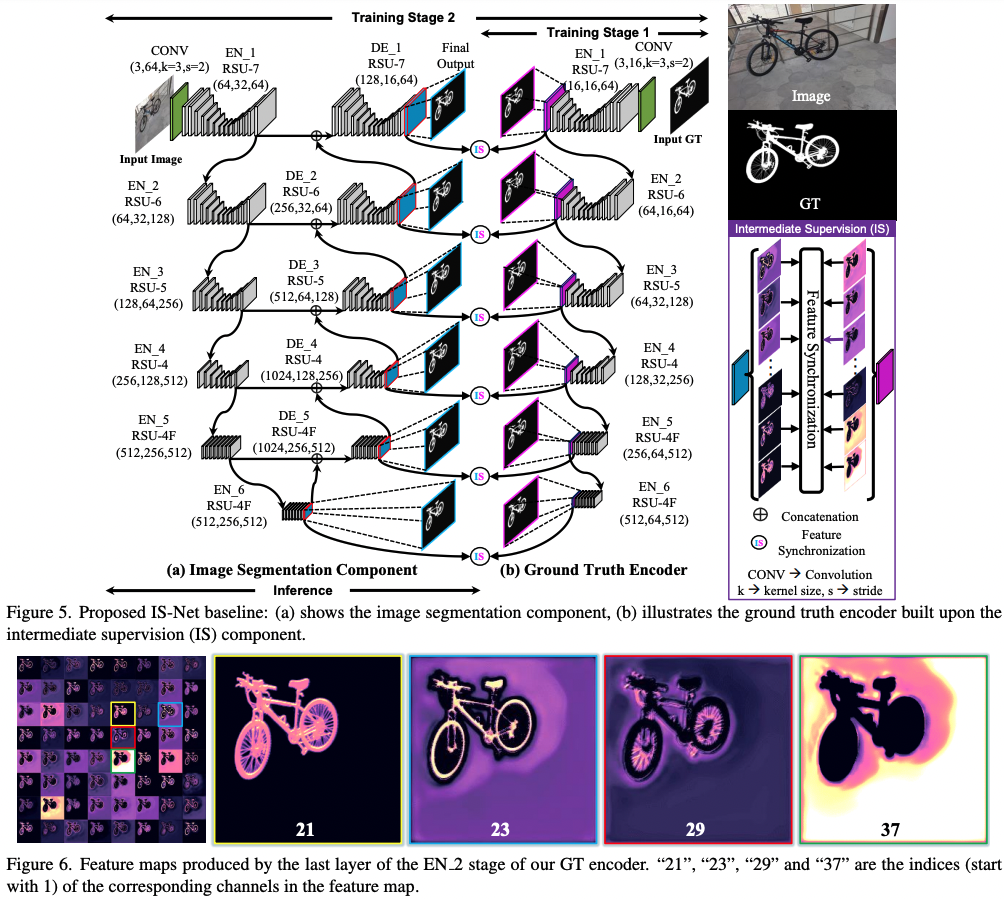

# IS-Net_DIS-general-use

* Model Authors: Xuebin Qin, Hang Dai, Xiaobin Hu, Deng-Ping Fan*, Ling Shao, Luc Van Gool

* Paper: Highly Accurate Dichotomous Image Segmentation (ECCV 2022 - https://arxiv.org/pdf/2203.03041.pdf

* Code Repo: https://github.com/xuebinqin/DIS

* Project Homepage: https://xuebinqin.github.io/dis/index.html

Note that this is an _optimized_ version of the IS-NET model.

From the paper abstract:

> [...] we introduce a simple intermediate supervision baseline (IS- Net) using both feature-level and mask-level guidance for DIS model training. Without tricks, IS-Net outperforms var- ious cutting-edge baselines on the proposed DIS5K, mak- ing it a general self-learned supervision network that can help facilitate future research in DIS.

# Citation

```

@InProceedings{qin2022,

author={Xuebin Qin and Hang Dai and Xiaobin Hu and Deng-Ping Fan and Ling Shao and Luc Van Gool},

title={Highly Accurate Dichotomous Image Segmentation},

booktitle={ECCV},

year={2022}

}

```

| 52aed6c16ad091aa07cef676fda80e70 |

Minerster/Text_process | Minerster | null | 2 | 0 | null | 0 | null | false | false | false | openrail | null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 515 | false | # Initialize the pipeline with the "text-davinci-002" model

segmenter = pipeline("text-segmentation", model="text-davinci-002", tokenizer='text-davinci-002')

# Segment the text

segmented_text = segmenter("This is a longer text that we want to segment into smaller chunks. Each chunk should correspond to a coherent piece of text.")

# Process each segment with ChatGPT

nlp = pipeline("text-generation", model="text-davinci-002", tokenizer='text-davinci-002')

for segment in segmented_text:

print(nlp(segment))

| bb9071eddfc73f6767a218d772b08998 |

mriggs/mt5-small-finetuned-1epoch-opus_books-en-to-it | mriggs | mt5 | 11 | 4 | transformers | 0 | text2text-generation | true | false | false | apache-2.0 | null | ['opus_books'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,173 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-small-finetuned-1epoch-opus_books-en-to-it

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the opus_books dataset.

It achieves the following results on the evaluation set:

- Loss: 3.3717

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 4.5201 | 1.0 | 3638 | 3.3717 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.0

- Tokenizers 0.13.1

| 492e7f690ffdc7aa1dafa81d273a362d |

jonatasgrosman/exp_w2v2r_de_vp-100k_gender_male-8_female-2_s874 | jonatasgrosman | wav2vec2 | 10 | 1 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['de'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'de'] | false | true | true | 498 | false | # exp_w2v2r_de_vp-100k_gender_male-8_female-2_s874

Fine-tuned [facebook/wav2vec2-large-100k-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-100k-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (de)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 513e20bd439a05f825a68d7a1acc291c |

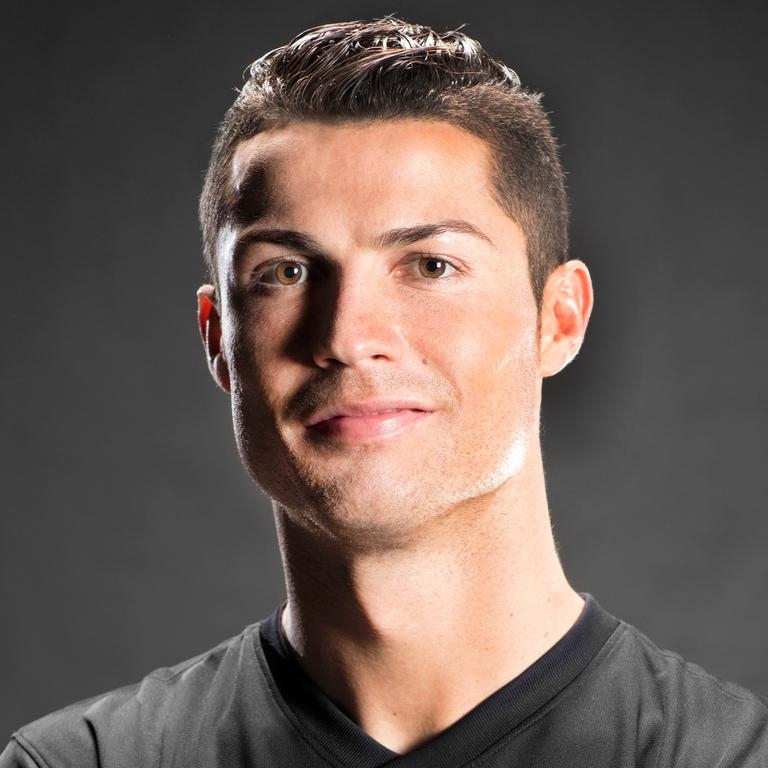

Gumibit/cr7-v2-768 | Gumibit | null | 28 | 7 | diffusers | 0 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['text-to-image'] | false | true | true | 1,555 | false | ### CR7_v2_768 Dreambooth model trained by Gumibit with [Hugging Face Dreambooth Training Space](https://huggingface.co/spaces/multimodalart/dreambooth-training) with the v2-768 base model

You run your new concept via `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb). Don't forget to use the concept prompts!

Sample pictures of:

CrisRo07 (use that on your prompt)

| 67a8241aaef708efbdbf7be00ee6819a |

Das282000Prit/bert-base-uncased-finetuned-wikitext2 | Das282000Prit | bert | 9 | 2 | transformers | 0 | fill-mask | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,264 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-finetuned-wikitext2

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7295

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.9288 | 1.0 | 2319 | 1.7729 |

| 1.8208 | 2.0 | 4638 | 1.7398 |

| 1.7888 | 3.0 | 6957 | 1.7523 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

| ec5ade9d862ff3e96e60edb4034194f7 |

sanjeev498/vit-base-beans | sanjeev498 | vit | 14 | 6 | transformers | 0 | image-classification | true | false | false | apache-2.0 | null | ['beans'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['image-classification', 'generated_from_trainer'] | true | true | true | 1,322 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# vit-base-beans

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the beans dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0189

- Accuracy: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.0568 | 1.54 | 100 | 0.0299 | 1.0 |

| 0.0135 | 3.08 | 200 | 0.0189 | 1.0 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.5.2

- Tokenizers 0.13.1

| 134a6da5513e541eb965ce825f952951 |

Helsinki-NLP/opus-mt-tc-big-zls-de | Helsinki-NLP | marian | 13 | 5 | transformers | 0 | translation | true | true | false | cc-by-4.0 | ['bg', 'de', 'hr', 'mk', 'sh', 'sl', 'sr'] | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['translation', 'opus-mt-tc'] | true | true | true | 7,862 | false | # opus-mt-tc-big-zls-de

## Table of Contents

- [Model Details](#model-details)

- [Uses](#uses)

- [Risks, Limitations and Biases](#risks-limitations-and-biases)

- [How to Get Started With the Model](#how-to-get-started-with-the-model)

- [Training](#training)

- [Evaluation](#evaluation)

- [Citation Information](#citation-information)

- [Acknowledgements](#acknowledgements)

## Model Details

Neural machine translation model for translating from South Slavic languages (zls) to German (de).

This model is part of the [OPUS-MT project](https://github.com/Helsinki-NLP/Opus-MT), an effort to make neural machine translation models widely available and accessible for many languages in the world. All models are originally trained using the amazing framework of [Marian NMT](https://marian-nmt.github.io/), an efficient NMT implementation written in pure C++. The models have been converted to pyTorch using the transformers library by huggingface. Training data is taken from [OPUS](https://opus.nlpl.eu/) and training pipelines use the procedures of [OPUS-MT-train](https://github.com/Helsinki-NLP/Opus-MT-train).

**Model Description:**

- **Developed by:** Language Technology Research Group at the University of Helsinki

- **Model Type:** Translation (transformer-big)

- **Release**: 2022-07-26

- **License:** CC-BY-4.0

- **Language(s):**

- Source Language(s): bos_Latn bul hbs hrv mkd slv srp_Cyrl srp_Latn

- Target Language(s): deu

- Language Pair(s): bul-deu hbs-deu hrv-deu mkd-deu slv-deu srp_Cyrl-deu srp_Latn-deu

- Valid Target Language Labels:

- **Original Model**: [opusTCv20210807_transformer-big_2022-07-26.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/zls-deu/opusTCv20210807_transformer-big_2022-07-26.zip)

- **Resources for more information:**

- [OPUS-MT-train GitHub Repo](https://github.com/Helsinki-NLP/OPUS-MT-train)

- More information about released models for this language pair: [OPUS-MT zls-deu README](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/zls-deu/README.md)

- [More information about MarianNMT models in the transformers library](https://huggingface.co/docs/transformers/model_doc/marian)

- [Tatoeba Translation Challenge](https://github.com/Helsinki-NLP/Tatoeba-Challenge/

## Uses

This model can be used for translation and text-to-text generation.

## Risks, Limitations and Biases

**CONTENT WARNING: Readers should be aware that the model is trained on various public data sets that may contain content that is disturbing, offensive, and can propagate historical and current stereotypes.**

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)).

## How to Get Started With the Model

A short example code:

```python

from transformers import MarianMTModel, MarianTokenizer

src_text = [

"Jesi li ti student?",

"Dve stvari deca treba da dobiju od svojih roditelja: korene i krila."

]

model_name = "pytorch-models/opus-mt-tc-big-zls-de"

tokenizer = MarianTokenizer.from_pretrained(model_name)

model = MarianMTModel.from_pretrained(model_name)

translated = model.generate(**tokenizer(src_text, return_tensors="pt", padding=True))

for t in translated:

print( tokenizer.decode(t, skip_special_tokens=True) )

# expected output:

# Sind Sie Student?

# Zwei Dinge sollten Kinder von ihren Eltern bekommen: Wurzeln und Flügel.

```

You can also use OPUS-MT models with the transformers pipelines, for example:

```python

from transformers import pipeline

pipe = pipeline("translation", model="Helsinki-NLP/opus-mt-tc-big-zls-de")

print(pipe("Jesi li ti student?"))

# expected output: Sind Sie Student?

```

## Training

- **Data**: opusTCv20210807 ([source](https://github.com/Helsinki-NLP/Tatoeba-Challenge))

- **Pre-processing**: SentencePiece (spm32k,spm32k)

- **Model Type:** transformer-big

- **Original MarianNMT Model**: [opusTCv20210807_transformer-big_2022-07-26.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/zls-deu/opusTCv20210807_transformer-big_2022-07-26.zip)

- **Training Scripts**: [GitHub Repo](https://github.com/Helsinki-NLP/OPUS-MT-train)

## Evaluation

* test set translations: [opusTCv20210807_transformer-big_2022-07-26.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/zls-deu/opusTCv20210807_transformer-big_2022-07-26.test.txt)

* test set scores: [opusTCv20210807_transformer-big_2022-07-26.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/zls-deu/opusTCv20210807_transformer-big_2022-07-26.eval.txt)

* benchmark results: [benchmark_results.txt](benchmark_results.txt)

* benchmark output: [benchmark_translations.zip](benchmark_translations.zip)

| langpair | testset | chr-F | BLEU | #sent | #words |

|----------|---------|-------|-------|-------|--------|

| bul-deu | tatoeba-test-v2021-08-07 | 0.71220 | 54.5 | 314 | 2224 |

| hbs-deu | tatoeba-test-v2021-08-07 | 0.71283 | 54.8 | 1959 | 15559 |

| hrv-deu | tatoeba-test-v2021-08-07 | 0.69448 | 53.1 | 782 | 5734 |

| slv-deu | tatoeba-test-v2021-08-07 | 0.36339 | 21.1 | 492 | 3003 |

| srp_Latn-deu | tatoeba-test-v2021-08-07 | 0.72489 | 56.0 | 986 | 8500 |

| bul-deu | flores101-devtest | 0.57688 | 28.4 | 1012 | 25094 |

| hrv-deu | flores101-devtest | 0.56674 | 27.4 | 1012 | 25094 |

| mkd-deu | flores101-devtest | 0.57688 | 29.3 | 1012 | 25094 |

| slv-deu | flores101-devtest | 0.56258 | 26.7 | 1012 | 25094 |

| srp_Cyrl-deu | flores101-devtest | 0.59271 | 30.7 | 1012 | 25094 |

## Citation Information

* Publications: [OPUS-MT – Building open translation services for the World](https://aclanthology.org/2020.eamt-1.61/) and [The Tatoeba Translation Challenge – Realistic Data Sets for Low Resource and Multilingual MT](https://aclanthology.org/2020.wmt-1.139/) (Please, cite if you use this model.)

```

@inproceedings{tiedemann-thottingal-2020-opus,

title = "{OPUS}-{MT} {--} Building open translation services for the World",

author = {Tiedemann, J{\"o}rg and Thottingal, Santhosh},

booktitle = "Proceedings of the 22nd Annual Conference of the European Association for Machine Translation",

month = nov,

year = "2020",

address = "Lisboa, Portugal",

publisher = "European Association for Machine Translation",

url = "https://aclanthology.org/2020.eamt-1.61",

pages = "479--480",

}

@inproceedings{tiedemann-2020-tatoeba,

title = "The Tatoeba Translation Challenge {--} Realistic Data Sets for Low Resource and Multilingual {MT}",

author = {Tiedemann, J{\"o}rg},

booktitle = "Proceedings of the Fifth Conference on Machine Translation",

month = nov,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2020.wmt-1.139",

pages = "1174--1182",

}

```

## Acknowledgements

The work is supported by the [European Language Grid](https://www.european-language-grid.eu/) as [pilot project 2866](https://live.european-language-grid.eu/catalogue/#/resource/projects/2866), by the [FoTran project](https://www.helsinki.fi/en/researchgroups/natural-language-understanding-with-cross-lingual-grounding), funded by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No 771113), and the [MeMAD project](https://memad.eu/), funded by the European Union’s Horizon 2020 Research and Innovation Programme under grant agreement No 780069. We are also grateful for the generous computational resources and IT infrastructure provided by [CSC -- IT Center for Science](https://www.csc.fi/), Finland.

## Model conversion info

* transformers version: 4.16.2

* OPUS-MT git hash: 8b9f0b0

* port time: Sat Aug 13 00:05:30 EEST 2022

* port machine: LM0-400-22516.local

| 0e86657be1671ca1841fa94b8d3f05f2 |

tlapusan/distilbert-base-uncased-finetuned-imdb | tlapusan | distilbert | 21 | 5 | transformers | 0 | fill-mask | true | false | false | apache-2.0 | null | ['imdb'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,318 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-imdb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 2.1639

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.7695 | 1.0 | 90 | 2.3614 |

| 2.3627 | 2.0 | 180 | 2.1959 |

| 2.227 | 3.0 | 270 | 2.1313 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

| 975378ba8746e62cb7c8f476bf4d8cba |

abdelkader/distilbert-base-uncased-distilled-clinc | abdelkader | distilbert | 10 | 6 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['clinc_oos'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,793 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-distilled-clinc

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the clinc_oos dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3038

- Accuracy: 0.9465

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 318 | 2.8460 | 0.7506 |

| 3.322 | 2.0 | 636 | 1.4301 | 0.8532 |

| 3.322 | 3.0 | 954 | 0.7377 | 0.9152 |

| 1.2296 | 4.0 | 1272 | 0.4784 | 0.9316 |

| 0.449 | 5.0 | 1590 | 0.3730 | 0.9390 |

| 0.449 | 6.0 | 1908 | 0.3367 | 0.9429 |

| 0.2424 | 7.0 | 2226 | 0.3163 | 0.9468 |

| 0.1741 | 8.0 | 2544 | 0.3074 | 0.9452 |

| 0.1741 | 9.0 | 2862 | 0.3054 | 0.9458 |

| 0.1501 | 10.0 | 3180 | 0.3038 | 0.9465 |

### Framework versions

- Transformers 4.15.0

- Pytorch 1.10.0+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

| 5568279f835927c37ca01c2eb812c7e5 |

MoHai/wav2vec2-base-timit-demo-colab | MoHai | wav2vec2 | 12 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,341 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-colab

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4701

- Wer: 0.4537

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 32

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.5672 | 4.0 | 500 | 1.6669 | 1.0323 |

| 0.6226 | 8.0 | 1000 | 0.4701 | 0.4537 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu111

- Datasets 1.18.3

- Tokenizers 0.10.3

| 0e9bcf4bf575d451ce8c7bf5415fc9d6 |

CarpetCleaningPlanoTX/UpholsteryCleaningPlanoTX | CarpetCleaningPlanoTX | null | 2 | 0 | null | 0 | null | false | false | false | other | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 479 | false | Upholstery Cleaning Plano TX

https://carpetcleaningplanotx.com/upholstery-cleaning.html

(469) 444-1903

We remove stains from sofas.When you have a nice, comfortable sofa in your home, spills are common.On that new couch, game day weekends can be difficult.When they are excited about who is winning on the playing field, friends, family, and pets can cause havoc.After a party, upholstery cleaning is not a problem.We can arrive with our mobile unit, which simplifies the task. | b2d52762f8ee1a851ceeba27de5749ea |

CAMeL-Lab/bert-base-arabic-camelbert-mix-did-madar-corpus26 | CAMeL-Lab | bert | 12 | 20 | transformers | 1 | text-classification | true | true | false | apache-2.0 | ['ar'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 2,879 | false | # CAMeLBERT-Mix DID Madar Corpus26 Model

## Model description

**CAMeLBERT-Mix DID Madar Corpus26 Model** is a dialect identification (DID) model that was built by fine-tuning the [CAMeLBERT-Mix](https://huggingface.co/CAMeL-Lab/bert-base-arabic-camelbert-mix/) model.

For the fine-tuning, we used the [MADAR Corpus 26](https://camel.abudhabi.nyu.edu/madar-shared-task-2019/) dataset, which includes 26 labels.

Our fine-tuning procedure and the hyperparameters we used can be found in our paper *"[The Interplay of Variant, Size, and Task Type in Arabic Pre-trained Language Models](https://arxiv.org/abs/2103.06678)."* Our fine-tuning code can be found [here](https://github.com/CAMeL-Lab/CAMeLBERT).

## Intended uses

You can use the CAMeLBERT-Mix DID Madar Corpus26 model as part of the transformers pipeline.

This model will also be available in [CAMeL Tools](https://github.com/CAMeL-Lab/camel_tools) soon.

#### How to use

To use the model with a transformers pipeline:

```python

>>> from transformers import pipeline

>>> did = pipeline('text-classification', model='CAMeL-Lab/bert-base-arabic-camelbert-mix-did-madar26')

>>> sentences = ['عامل ايه ؟', 'شلونك ؟ شخبارك ؟']

>>> did(sentences)

[{'label': 'CAI', 'score': 0.8751305937767029},

{'label': 'DOH', 'score': 0.9867215156555176}]

```

*Note*: to download our models, you would need `transformers>=3.5.0`.

Otherwise, you could download the models manually.

## Citation

```bibtex

@inproceedings{inoue-etal-2021-interplay,

title = "The Interplay of Variant, Size, and Task Type in {A}rabic Pre-trained Language Models",

author = "Inoue, Go and

Alhafni, Bashar and

Baimukan, Nurpeiis and

Bouamor, Houda and

Habash, Nizar",

booktitle = "Proceedings of the Sixth Arabic Natural Language Processing Workshop",

month = apr,

year = "2021",

address = "Kyiv, Ukraine (Online)",

publisher = "Association for Computational Linguistics",

abstract = "In this paper, we explore the effects of language variants, data sizes, and fine-tuning task types in Arabic pre-trained language models. To do so, we build three pre-trained language models across three variants of Arabic: Modern Standard Arabic (MSA), dialectal Arabic, and classical Arabic, in addition to a fourth language model which is pre-trained on a mix of the three. We also examine the importance of pre-training data size by building additional models that are pre-trained on a scaled-down set of the MSA variant. We compare our different models to each other, as well as to eight publicly available models by fine-tuning them on five NLP tasks spanning 12 datasets. Our results suggest that the variant proximity of pre-training data to fine-tuning data is more important than the pre-training data size. We exploit this insight in defining an optimized system selection model for the studied tasks.",

}

``` | 7672980558767e17d40eb43ad827b8f8 |

benjamin/gerpt2-large | benjamin | gpt2 | 9 | 4,524 | transformers | 7 | text-generation | true | false | true | mit | ['de'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 4,834 | false |

# GerPT2

German large and small versions of GPT2:

- https://huggingface.co/benjamin/gerpt2

- https://huggingface.co/benjamin/gerpt2-large

See the [GPT2 model card](https://huggingface.co/gpt2) for considerations on limitations and bias. See the [GPT2 documentation](https://huggingface.co/transformers/model_doc/gpt2.html) for details on GPT2.

## Comparison to [dbmdz/german-gpt2](https://huggingface.co/dbmdz/german-gpt2)

I evaluated both GerPT2-large and the other German GPT2, [dbmdz/german-gpt2](https://huggingface.co/dbmdz/german-gpt2) on the [CC-100](http://data.statmt.org/cc-100/) dataset and on the German Wikipedia:

| | CC-100 (PPL) | Wikipedia (PPL) |

|-------------------|--------------|-----------------|

| dbmdz/german-gpt2 | 49.47 | 62.92 |

| GerPT2 | 24.78 | 35.33 |

| GerPT2-large | __16.08__ | __23.26__ |

| | | |

See the script `evaluate.py` in the [GerPT2 Github repository](https://github.com/bminixhofer/gerpt2) for the code.

## Usage

```python

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

tokenizer = AutoTokenizer.from_pretrained("benjamin/gerpt2-large")

model = AutoModelForCausalLM.from_pretrained("benjamin/gerpt2-large")

prompt = "<your prompt>"

pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)

print(pipe(prompt)[0]["generated_text"])

```

Also, two tricks might improve the generated text:

```python

output = model.generate(

# during training an EOS token was used to mark the beginning of each text

# so it can help to insert it at the start

torch.tensor(

[tokenizer.eos_token_id] + tokenizer.encode(prompt)

).unsqueeze(0),

do_sample=True,

# try setting bad_words_ids=[[0]] to disallow generating an EOS token, without this the model is

# prone to ending generation early because a significant number of texts from the training corpus

# is quite short

bad_words_ids=[[0]],

max_length=max_length,

)[0]

print(tokenizer.decode(output))

```

## Training details

GerPT2-large is trained on the entire German data from the [CC-100 Corpus](http://data.statmt.org/cc-100/) and weights were initialized from the [English GPT2 model](https://huggingface.co/gpt2-large).

GerPT2-large was trained with:

- a batch size of 256

- using OneCycle learning rate with a maximum of 5e-3

- with AdamW with a weight decay of 0.01

- for 2 epochs

Training took roughly 12 days on 8 TPUv3 cores.

To train GerPT2-large, follow these steps. Scripts are located in the [Github repository](https://github.com/bminixhofer/gerpt2):

0. Download and unzip training data from http://data.statmt.org/cc-100/.

1. Train a tokenizer using `prepare/train_tokenizer.py`. As training data for the tokenizer I used a random subset of 5% of the CC-100 data.

2. (optionally) generate a German input embedding matrix with `prepare/generate_aligned_wte.py`. This uses a neat trick to semantically map tokens from the English tokenizer to tokens from the German tokenizer using aligned word embeddings. E. g.:

```

ĠMinde -> Ġleast

Ġjed -> Ġwhatsoever

flughafen -> Air

vermittlung -> employment

teilung -> ignment

ĠInterpretation -> Ġinterpretation

Ġimport -> Ġimported

hansa -> irl

genehmigungen -> exempt

ĠAuflist -> Ġlists

Ġverschwunden -> Ġdisappeared

ĠFlyers -> ĠFlyers

Kanal -> Channel

Ġlehr -> Ġteachers

Ġnahelie -> Ġconvenient

gener -> Generally

mitarbeiter -> staff

```

This helps a lot on a trial run I did, although I wasn't able to do a full comparison due to budget and time constraints. To use this WTE matrix it can be passed via the `wte_path` to the training script. Credit to [this blogpost](https://medium.com/@pierre_guillou/faster-than-training-from-scratch-fine-tuning-the-english-gpt-2-in-any-language-with-hugging-f2ec05c98787) for the idea of initializing GPT2 from English weights.

3. Tokenize the corpus using `prepare/tokenize_text.py`. This generates files for train and validation tokens in JSON Lines format.

4. Run the training script `train.py`! `run.sh` shows how this was executed for the full run with config `configs/tpu_large.json`.

## License

GerPT2 is licensed under the MIT License.

## Citing

Please cite GerPT2 as follows:

```

@misc{Minixhofer_GerPT2_German_large_2020,

author = {Minixhofer, Benjamin},

doi = {10.5281/zenodo.5509984},

month = {12},

title = {{GerPT2: German large and small versions of GPT2}},

url = {https://github.com/bminixhofer/gerpt2},

year = {2020}

}

```

## Acknowledgements

Thanks to [Hugging Face](https://huggingface.co) for awesome tools and infrastructure.

Huge thanks to [Artus Krohn-Grimberghe](https://twitter.com/artuskg) at [LYTiQ](https://www.lytiq.de/) for making this possible by sponsoring the resources used for training.

| bebbd2a3f29d6d20c328a37fde0414c7 |

fghjfbrtb/wr1 | fghjfbrtb | null | 16 | 4 | diffusers | 0 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['text-to-image', 'stable-diffusion'] | false | true | true | 607 | false | ### wr1 Dreambooth model trained by fghjfbrtb with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Or you can run your new concept via `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)

Sample pictures of this concept:

| 21ac9e18da6f3e336296e9f0b02b7a6b |

facebook/hubert-xlarge-ls960-ft | facebook | hubert | 9 | 1,724 | transformers | 9 | automatic-speech-recognition | true | true | false | apache-2.0 | ['en'] | ['libri-light', 'librispeech_asr'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['speech', 'audio', 'automatic-speech-recognition', 'hf-asr-leaderboard'] | true | true | true | 2,825 | false |

# Hubert-Extra-Large-Finetuned

[Facebook's Hubert](https://ai.facebook.com/blog/hubert-self-supervised-representation-learning-for-speech-recognition-generation-and-compression)

The extra large model fine-tuned on 960h of Librispeech on 16kHz sampled speech audio. When using the model make sure that your speech input is also sampled at 16Khz.

The model is a fine-tuned version of [hubert-xlarge-ll60k](https://huggingface.co/facebook/hubert-xlarge-ll60k).

[Paper](https://arxiv.org/abs/2106.07447)

Authors: Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, Abdelrahman Mohamed

**Abstract**

Self-supervised approaches for speech representation learning are challenged by three unique problems: (1) there are multiple sound units in each input utterance, (2) there is no lexicon of input sound units during the pre-training phase, and (3) sound units have variable lengths with no explicit segmentation. To deal with these three problems, we propose the Hidden-Unit BERT (HuBERT) approach for self-supervised speech representation learning, which utilizes an offline clustering step to provide aligned target labels for a BERT-like prediction loss. A key ingredient of our approach is applying the prediction loss over the masked regions only, which forces the model to learn a combined acoustic and language model over the continuous inputs. HuBERT relies primarily on the consistency of the unsupervised clustering step rather than the intrinsic quality of the assigned cluster labels. Starting with a simple k-means teacher of 100 clusters, and using two iterations of clustering, the HuBERT model either matches or improves upon the state-of-the-art wav2vec 2.0 performance on the Librispeech (960h) and Libri-light (60,000h) benchmarks with 10min, 1h, 10h, 100h, and 960h fine-tuning subsets. Using a 1B parameter model, HuBERT shows up to 19% and 13% relative WER reduction on the more challenging dev-other and test-other evaluation subsets.

The original model can be found under https://github.com/pytorch/fairseq/tree/master/examples/hubert .

# Usage

The model can be used for automatic-speech-recognition as follows:

```python

import torch

from transformers import Wav2Vec2Processor, HubertForCTC

from datasets import load_dataset

processor = Wav2Vec2Processor.from_pretrained("facebook/hubert-xlarge-ls960-ft")

model = HubertForCTC.from_pretrained("facebook/hubert-xlarge-ls960-ft")

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

input_values = processor(ds[0]["audio"]["array"], return_tensors="pt").input_values # Batch size 1

logits = model(input_values).logits

predicted_ids = torch.argmax(logits, dim=-1)

transcription = processor.decode(predicted_ids[0])

# ->"A MAN SAID TO THE UNIVERSE SIR I EXIST"

``` | d27e0dda9b1c136fccfc1998caec173f |

cdefghijkl/ap | cdefghijkl | null | 18 | 0 | diffusers | 0 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['text-to-image', 'stable-diffusion'] | false | true | true | 414 | false | ### ap Dreambooth model trained by cdefghijkl with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

| a171499801f31ec319c510752a9b2362 |

sd-concepts-library/dreamy-painting | sd-concepts-library | null | 10 | 0 | null | 0 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 1,575 | false | ### Dreamy Painting on Stable Diffusion

This is the `<dreamy-painting>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

Here are images generated in this style:

| 875e6c86daecb83a7ae78a1fbff9e78f |

hr16/any-ely-wd-ira-olympus-3000 | hr16 | null | 17 | 2 | diffusers | 0 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['text-to-image', 'stable-diffusion'] | false | true | true | 542 | false | ### Model Dreambooth concept any_ely_wd-Ira_Olympus-3000 được train bởi hr16 bằng [Shinja Zero SoTA DreamBooth_Stable_Diffusion](https://colab.research.google.com/drive/1G7qx6M_S1PDDlsWIMdbZXwdZik6sUlEh) notebook <br>

Test concept bằng [Shinja Zero no Notebook](https://colab.research.google.com/drive/1Hp1ZIjPbsZKlCtomJVmt2oX7733W44b0) <br>

Hoặc test bằng `diffusers` [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb)

Ảnh mẫu của concept: WIP

| 5cb45850c4d39c40ded658ec02a7a04d |

sd-concepts-library/yf21 | sd-concepts-library | null | 9 | 0 | null | 0 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 970 | false | ### YF21 on Stable Diffusion

This is the `<YF21>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

| fbcc174a56a63c2898b9c32e7553b559 |

Helsinki-NLP/opus-mt-en-trk | Helsinki-NLP | marian | 11 | 425 | transformers | 0 | translation | true | true | false | apache-2.0 | ['en', 'tt', 'cv', 'tk', 'tr', 'ba', 'trk'] | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 3,537 | false |

### eng-trk

* source group: English

* target group: Turkic languages

* OPUS readme: [eng-trk](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/eng-trk/README.md)

* model: transformer

* source language(s): eng

* target language(s): aze_Latn bak chv crh crh_Latn kaz_Cyrl kaz_Latn kir_Cyrl kjh kum ota_Arab ota_Latn sah tat tat_Arab tat_Latn tuk tuk_Latn tur tyv uig_Arab uig_Cyrl uzb_Cyrl uzb_Latn

* model: transformer

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* a sentence initial language token is required in the form of `>>id<<` (id = valid target language ID)

* download original weights: [opus2m-2020-08-01.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/eng-trk/opus2m-2020-08-01.zip)

* test set translations: [opus2m-2020-08-01.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/eng-trk/opus2m-2020-08-01.test.txt)

* test set scores: [opus2m-2020-08-01.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/eng-trk/opus2m-2020-08-01.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| newsdev2016-entr-engtur.eng.tur | 10.1 | 0.437 |

| newstest2016-entr-engtur.eng.tur | 9.2 | 0.410 |

| newstest2017-entr-engtur.eng.tur | 9.0 | 0.410 |

| newstest2018-entr-engtur.eng.tur | 9.2 | 0.413 |

| Tatoeba-test.eng-aze.eng.aze | 26.8 | 0.577 |

| Tatoeba-test.eng-bak.eng.bak | 7.6 | 0.308 |

| Tatoeba-test.eng-chv.eng.chv | 4.3 | 0.270 |

| Tatoeba-test.eng-crh.eng.crh | 8.1 | 0.330 |

| Tatoeba-test.eng-kaz.eng.kaz | 11.1 | 0.359 |

| Tatoeba-test.eng-kir.eng.kir | 28.6 | 0.524 |

| Tatoeba-test.eng-kjh.eng.kjh | 1.0 | 0.041 |

| Tatoeba-test.eng-kum.eng.kum | 2.2 | 0.075 |

| Tatoeba-test.eng.multi | 19.9 | 0.455 |

| Tatoeba-test.eng-ota.eng.ota | 0.5 | 0.065 |

| Tatoeba-test.eng-sah.eng.sah | 0.7 | 0.030 |

| Tatoeba-test.eng-tat.eng.tat | 9.7 | 0.316 |

| Tatoeba-test.eng-tuk.eng.tuk | 5.9 | 0.317 |

| Tatoeba-test.eng-tur.eng.tur | 34.6 | 0.623 |

| Tatoeba-test.eng-tyv.eng.tyv | 5.4 | 0.210 |

| Tatoeba-test.eng-uig.eng.uig | 0.1 | 0.155 |

| Tatoeba-test.eng-uzb.eng.uzb | 3.4 | 0.275 |

### System Info:

- hf_name: eng-trk

- source_languages: eng

- target_languages: trk

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/eng-trk/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['en', 'tt', 'cv', 'tk', 'tr', 'ba', 'trk']

- src_constituents: {'eng'}

- tgt_constituents: {'kir_Cyrl', 'tat_Latn', 'tat', 'chv', 'uzb_Cyrl', 'kaz_Latn', 'aze_Latn', 'crh', 'kjh', 'uzb_Latn', 'ota_Arab', 'tuk_Latn', 'tuk', 'tat_Arab', 'sah', 'tyv', 'tur', 'uig_Arab', 'crh_Latn', 'kaz_Cyrl', 'uig_Cyrl', 'kum', 'ota_Latn', 'bak'}

- src_multilingual: False

- tgt_multilingual: True

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/eng-trk/opus2m-2020-08-01.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/eng-trk/opus2m-2020-08-01.test.txt

- src_alpha3: eng

- tgt_alpha3: trk

- short_pair: en-trk

- chrF2_score: 0.455

- bleu: 19.9

- brevity_penalty: 1.0

- ref_len: 57072.0

- src_name: English

- tgt_name: Turkic languages

- train_date: 2020-08-01

- src_alpha2: en

- tgt_alpha2: trk

- prefer_old: False

- long_pair: eng-trk

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 | 0822a9ec16216342129307712f368c1a |