repo_id string (lengths 4-110) | author string (lengths 2-27, nullable) | model_type string (lengths 2-29, nullable) | files_per_repo int64 (2-15.4k) | downloads_30d int64 (0-19.9M) | library string (lengths 2-37, nullable) | likes int64 (0-4.34k) | pipeline string (lengths 5-30, nullable) | pytorch bool (2 classes) | tensorflow bool (2 classes) | jax bool (2 classes) | license string (lengths 2-30) | languages string (lengths 4-1.63k, nullable) | datasets string (lengths 2-2.58k, nullable) | co2 string (29 classes) | prs_count int64 (0-125) | prs_open int64 (0-120) | prs_merged int64 (0-15) | prs_closed int64 (0-28) | discussions_count int64 (0-218) | discussions_open int64 (0-148) | discussions_closed int64 (0-70) | tags string (lengths 2-513) | has_model_index bool (2 classes) | has_metadata bool (1 class) | has_text bool (1 class) | text_length int64 (401-598k) | is_nc bool (1 class) | readme string (lengths 0-598k) | hash string (lengths 32-32) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

priyankav/distilbert-base-uncased-finetuned-squad | priyankav | distilbert | 10 | 3 | transformers | 0 | question-answering | false | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_keras_callback'] | true | true | true | 2,049 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# priyankavalappil/distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.9684

- Train End Logits Accuracy: 0.7305

- Train Start Logits Accuracy: 0.6893

- Validation Loss: 1.1278

- Validation End Logits Accuracy: 0.6999

- Validation Start Logits Accuracy: 0.6635

- Epoch: 1

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 11064, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32
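
The `PolynomialDecay` entry above can be read as a simple formula. The following is a minimal pure-Python sketch of that schedule, assuming the usual non-cycling definition (`cycle: False`) and using the values from the optimizer config; the function name `polynomial_decay` is hypothetical and this is not the Keras implementation itself.

```python
def polynomial_decay(step, initial_lr=2e-05, end_lr=0.0, decay_steps=11064, power=1.0):
    """Learning rate after `step` optimizer steps, without cycling."""
    # Clamp the step so the rate stays at end_lr once decay_steps is reached.
    step = min(step, decay_steps)
    remaining = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * remaining ** power + end_lr


if __name__ == "__main__":
    # Start, midpoint, and end of the schedule.
    for s in (0, 5532, 11064):
        print(s, polynomial_decay(s))
```

With `power: 1.0` the decay is linear, so the rate halves at the midpoint and reaches `end_learning_rate` at step 11064.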

### Training results

| Train Loss | Train End Logits Accuracy | Train Start Logits Accuracy | Validation Loss | Validation End Logits Accuracy | Validation Start Logits Accuracy | Epoch |

|:----------:|:-------------------------:|:---------------------------:|:---------------:|:------------------------------:|:--------------------------------:|:-----:|

| 1.5059 | 0.6070 | 0.5685 | 1.1518 | 0.6816 | 0.6482 | 0 |

| 0.9684 | 0.7305 | 0.6893 | 1.1278 | 0.6999 | 0.6635 | 1 |

### Framework versions

- Transformers 4.22.2

- TensorFlow 2.8.2

- Datasets 2.5.2

- Tokenizers 0.12.1

| f3a3cb874cea332c9a2c9049839bb772 |

tomekkorbak/competent_payne | tomekkorbak | null | 2 | 0 | null | 0 | null | false | false | false | mit | ['en'] | ['tomekkorbak/detoxify-pile-chunk3-0-50000', 'tomekkorbak/detoxify-pile-chunk3-50000-100000', 'tomekkorbak/detoxify-pile-chunk3-100000-150000', 'tomekkorbak/detoxify-pile-chunk3-150000-200000', 'tomekkorbak/detoxify-pile-chunk3-200000-250000', 'tomekkorbak/detoxify-pile-chunk3-250000-300000', 'tomekkorbak/detoxify-pile-chunk3-300000-350000', 'tomekkorbak/detoxify-pile-chunk3-350000-400000', 'tomekkorbak/detoxify-pile-chunk3-400000-450000', 'tomekkorbak/detoxify-pile-chunk3-450000-500000', 'tomekkorbak/detoxify-pile-chunk3-500000-550000', 'tomekkorbak/detoxify-pile-chunk3-550000-600000', 'tomekkorbak/detoxify-pile-chunk3-600000-650000', 'tomekkorbak/detoxify-pile-chunk3-650000-700000', 'tomekkorbak/detoxify-pile-chunk3-700000-750000', 'tomekkorbak/detoxify-pile-chunk3-750000-800000', 'tomekkorbak/detoxify-pile-chunk3-800000-850000', 'tomekkorbak/detoxify-pile-chunk3-850000-900000', 'tomekkorbak/detoxify-pile-chunk3-900000-950000', 'tomekkorbak/detoxify-pile-chunk3-950000-1000000', 'tomekkorbak/detoxify-pile-chunk3-1000000-1050000', 'tomekkorbak/detoxify-pile-chunk3-1050000-1100000', 'tomekkorbak/detoxify-pile-chunk3-1100000-1150000', 'tomekkorbak/detoxify-pile-chunk3-1150000-1200000', 'tomekkorbak/detoxify-pile-chunk3-1200000-1250000', 'tomekkorbak/detoxify-pile-chunk3-1250000-1300000', 'tomekkorbak/detoxify-pile-chunk3-1300000-1350000', 'tomekkorbak/detoxify-pile-chunk3-1350000-1400000', 'tomekkorbak/detoxify-pile-chunk3-1400000-1450000', 'tomekkorbak/detoxify-pile-chunk3-1450000-1500000', 'tomekkorbak/detoxify-pile-chunk3-1500000-1550000', 'tomekkorbak/detoxify-pile-chunk3-1550000-1600000', 'tomekkorbak/detoxify-pile-chunk3-1600000-1650000', 'tomekkorbak/detoxify-pile-chunk3-1650000-1700000', 'tomekkorbak/detoxify-pile-chunk3-1700000-1750000', 'tomekkorbak/detoxify-pile-chunk3-1750000-1800000', 'tomekkorbak/detoxify-pile-chunk3-1800000-1850000', 
'tomekkorbak/detoxify-pile-chunk3-1850000-1900000', 'tomekkorbak/detoxify-pile-chunk3-1900000-1950000'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 8,841 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# competent_payne

This model was trained from scratch on the tomekkorbak/detoxify-pile-chunk3-0-50000, the tomekkorbak/detoxify-pile-chunk3-50000-100000, the tomekkorbak/detoxify-pile-chunk3-100000-150000, the tomekkorbak/detoxify-pile-chunk3-150000-200000, the tomekkorbak/detoxify-pile-chunk3-200000-250000, the tomekkorbak/detoxify-pile-chunk3-250000-300000, the tomekkorbak/detoxify-pile-chunk3-300000-350000, the tomekkorbak/detoxify-pile-chunk3-350000-400000, the tomekkorbak/detoxify-pile-chunk3-400000-450000, the tomekkorbak/detoxify-pile-chunk3-450000-500000, the tomekkorbak/detoxify-pile-chunk3-500000-550000, the tomekkorbak/detoxify-pile-chunk3-550000-600000, the tomekkorbak/detoxify-pile-chunk3-600000-650000, the tomekkorbak/detoxify-pile-chunk3-650000-700000, the tomekkorbak/detoxify-pile-chunk3-700000-750000, the tomekkorbak/detoxify-pile-chunk3-750000-800000, the tomekkorbak/detoxify-pile-chunk3-800000-850000, the tomekkorbak/detoxify-pile-chunk3-850000-900000, the tomekkorbak/detoxify-pile-chunk3-900000-950000, the tomekkorbak/detoxify-pile-chunk3-950000-1000000, the tomekkorbak/detoxify-pile-chunk3-1000000-1050000, the tomekkorbak/detoxify-pile-chunk3-1050000-1100000, the tomekkorbak/detoxify-pile-chunk3-1100000-1150000, the tomekkorbak/detoxify-pile-chunk3-1150000-1200000, the tomekkorbak/detoxify-pile-chunk3-1200000-1250000, the tomekkorbak/detoxify-pile-chunk3-1250000-1300000, the tomekkorbak/detoxify-pile-chunk3-1300000-1350000, the tomekkorbak/detoxify-pile-chunk3-1350000-1400000, the tomekkorbak/detoxify-pile-chunk3-1400000-1450000, the tomekkorbak/detoxify-pile-chunk3-1450000-1500000, the tomekkorbak/detoxify-pile-chunk3-1500000-1550000, the tomekkorbak/detoxify-pile-chunk3-1550000-1600000, the tomekkorbak/detoxify-pile-chunk3-1600000-1650000, the tomekkorbak/detoxify-pile-chunk3-1650000-1700000, the tomekkorbak/detoxify-pile-chunk3-1700000-1750000, the tomekkorbak/detoxify-pile-chunk3-1750000-1800000, the tomekkorbak/detoxify-pile-chunk3-1800000-1850000, the 
tomekkorbak/detoxify-pile-chunk3-1850000-1900000 and the tomekkorbak/detoxify-pile-chunk3-1900000-1950000 datasets.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- training_steps: 25000

- mixed_precision_training: Native AMP
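
To make the relationship between these values concrete, here is a small sketch of the batch-size arithmetic and of a linear schedule with warmup. It assumes a single device (consistent with 16 x 4 = 64) and the common "linear warmup, then linear decay to zero" shape; the function name and the rounding of the warmup step count are assumptions, not taken from the Trainer source.

```python
TRAIN_BATCH_SIZE = 16
GRADIENT_ACCUMULATION_STEPS = 4
NUM_DEVICES = 1  # assumption: implied by total_train_batch_size = 64

total_train_batch_size = TRAIN_BATCH_SIZE * GRADIENT_ACCUMULATION_STEPS * NUM_DEVICES


def linear_warmup_decay(step, base_lr=0.0005, total_steps=25000, warmup_ratio=0.01):
    """Learning rate at `step`: linear warmup followed by linear decay to zero."""
    warmup_steps = round(total_steps * warmup_ratio)  # 250 steps here
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


if __name__ == "__main__":
    print(total_train_batch_size)
    for s in (0, 125, 250, 12625, 25000):
        print(s, linear_warmup_decay(s))
```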

### Framework versions

- Transformers 4.24.0

- Pytorch 1.11.0+cu113

- Datasets 2.5.1

- Tokenizers 0.11.6

# Full config

{'dataset': {'datasets': ['tomekkorbak/detoxify-pile-chunk3-0-50000',

'tomekkorbak/detoxify-pile-chunk3-50000-100000',

'tomekkorbak/detoxify-pile-chunk3-100000-150000',

'tomekkorbak/detoxify-pile-chunk3-150000-200000',

'tomekkorbak/detoxify-pile-chunk3-200000-250000',

'tomekkorbak/detoxify-pile-chunk3-250000-300000',

'tomekkorbak/detoxify-pile-chunk3-300000-350000',

'tomekkorbak/detoxify-pile-chunk3-350000-400000',

'tomekkorbak/detoxify-pile-chunk3-400000-450000',

'tomekkorbak/detoxify-pile-chunk3-450000-500000',

'tomekkorbak/detoxify-pile-chunk3-500000-550000',

'tomekkorbak/detoxify-pile-chunk3-550000-600000',

'tomekkorbak/detoxify-pile-chunk3-600000-650000',

'tomekkorbak/detoxify-pile-chunk3-650000-700000',

'tomekkorbak/detoxify-pile-chunk3-700000-750000',

'tomekkorbak/detoxify-pile-chunk3-750000-800000',

'tomekkorbak/detoxify-pile-chunk3-800000-850000',

'tomekkorbak/detoxify-pile-chunk3-850000-900000',

'tomekkorbak/detoxify-pile-chunk3-900000-950000',

'tomekkorbak/detoxify-pile-chunk3-950000-1000000',

'tomekkorbak/detoxify-pile-chunk3-1000000-1050000',

'tomekkorbak/detoxify-pile-chunk3-1050000-1100000',

'tomekkorbak/detoxify-pile-chunk3-1100000-1150000',

'tomekkorbak/detoxify-pile-chunk3-1150000-1200000',

'tomekkorbak/detoxify-pile-chunk3-1200000-1250000',

'tomekkorbak/detoxify-pile-chunk3-1250000-1300000',

'tomekkorbak/detoxify-pile-chunk3-1300000-1350000',

'tomekkorbak/detoxify-pile-chunk3-1350000-1400000',

'tomekkorbak/detoxify-pile-chunk3-1400000-1450000',

'tomekkorbak/detoxify-pile-chunk3-1450000-1500000',

'tomekkorbak/detoxify-pile-chunk3-1500000-1550000',

'tomekkorbak/detoxify-pile-chunk3-1550000-1600000',

'tomekkorbak/detoxify-pile-chunk3-1600000-1650000',

'tomekkorbak/detoxify-pile-chunk3-1650000-1700000',

'tomekkorbak/detoxify-pile-chunk3-1700000-1750000',

'tomekkorbak/detoxify-pile-chunk3-1750000-1800000',

'tomekkorbak/detoxify-pile-chunk3-1800000-1850000',

'tomekkorbak/detoxify-pile-chunk3-1850000-1900000',

'tomekkorbak/detoxify-pile-chunk3-1900000-1950000'],

'filter_threshold': 0.00078,

'is_split_by_sentences': True,

'skip_tokens': 1661599744},

'generation': {'metrics_configs': [{}, {'n': 1}, {'n': 2}, {'n': 5}],

'scenario_configs': [{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'unconditional',

'num_samples': 2048},

{'generate_kwargs': {'do_sample': True,

'max_length': 128,

'min_length': 10,

'temperature': 0.7,

'top_k': 0,

'top_p': 0.9},

'name': 'challenging_rtp',

'num_samples': 2048,

'prompts_path': 'resources/challenging_rtp.jsonl'}],

'scorer_config': {'device': 'cuda:0'}},

'kl_gpt3_callback': {'max_tokens': 64, 'num_samples': 4096},

'model': {'from_scratch': False,

'gpt2_config_kwargs': {'reorder_and_upcast_attn': True,

'scale_attn_by': True},

'model_kwargs': {'revision': 'f9cb81e577effccc64697016af1e8eaf2bf5dcd2'},

'path_or_name': 'tomekkorbak/nervous_wozniak'},

'objective': {'name': 'MLE'},

'tokenizer': {'path_or_name': 'gpt2'},

'training': {'dataloader_num_workers': 0,

'effective_batch_size': 64,

'evaluation_strategy': 'no',

'fp16': True,

'hub_model_id': 'competent_payne',

'hub_strategy': 'all_checkpoints',

'learning_rate': 0.0005,

'logging_first_step': True,

'logging_steps': 1,

'num_tokens': 3300000000,

'output_dir': 'training_output104340',

'per_device_train_batch_size': 16,

'push_to_hub': True,

'remove_unused_columns': False,

'save_steps': 25354,

'save_strategy': 'steps',

'seed': 42,

'tokens_already_seen': 1661599744,

'warmup_ratio': 0.01,

'weight_decay': 0.1}}
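
The token counts in the config line up with the step counts reported above. The sketch below checks that arithmetic; the sequence length of 1024 tokens is an assumption (the GPT-2 context size), since the config does not state it.

```python
SEQ_LEN = 1024  # assumption: GPT-2 context length, not stated in the config
EFFECTIVE_BATCH_SIZE = 64
NUM_TOKENS = 3_300_000_000
TOKENS_ALREADY_SEEN = 1_661_599_744

tokens_per_step = EFFECTIVE_BATCH_SIZE * SEQ_LEN

# Steps left in this run, and steps implied by the tokens seen before it.
remaining_steps = (NUM_TOKENS - TOKENS_ALREADY_SEEN) // tokens_per_step
steps_already_done = TOKENS_ALREADY_SEEN // tokens_per_step

print(remaining_steps, steps_already_done)
```

Under that assumption, the remaining budget corresponds to the 25000 `training_steps` listed in the hyperparameters, and the tokens already seen correspond to 25354 steps, which matches `save_steps`.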

# Wandb URL:

https://wandb.ai/tomekkorbak/apo/runs/2q5t671f | ed00b9106741e5ad8660d05d37de6a24 |

Helsinki-NLP/opus-mt-en-pag | Helsinki-NLP | marian | 10 | 10 | transformers | 0 | translation | true | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 776 | false |

### opus-mt-en-pag

* source languages: en

* target languages: pag

* OPUS readme: [en-pag](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/en-pag/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-20.zip](https://object.pouta.csc.fi/OPUS-MT-models/en-pag/opus-2020-01-20.zip)

* test set translations: [opus-2020-01-20.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-pag/opus-2020-01-20.test.txt)

* test set scores: [opus-2020-01-20.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/en-pag/opus-2020-01-20.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| JW300.en.pag | 37.9 | 0.598 |

| 9ae798d149f22bf8b01357cfc3a95ea6 |

jonatasgrosman/exp_w2v2r_fr_xls-r_accent_france-5_belgium-5_s452 | jonatasgrosman | wav2vec2 | 10 | 3 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['fr'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'fr'] | false | true | true | 479 | false |

# exp_w2v2r_fr_xls-r_accent_france-5_belgium-5_s452

Fine-tuned [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) for speech recognition using the train split of [Common Voice 7.0 (fr)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.
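
The 16kHz requirement above can be checked before running inference. This is a minimal sketch using only the Python standard library; the helper names are hypothetical, and the in-memory silent WAV exists only to give the example something to read. It reports whether a file needs resampling and does not resample it.

```python
import io
import wave

TARGET_RATE = 16_000  # sampling rate the model card asks for


def wav_sample_rate(wav_bytes):
    """Return the sampling rate (Hz) stored in a WAV file's header."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        return wav.getframerate()


def make_silent_wav(rate, seconds=0.1):
    """Build a tiny mono 16-bit WAV in memory, used here only as demo input."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * int(rate * seconds))
    return buffer.getvalue()


def needs_resampling(wav_bytes, target=TARGET_RATE):
    """True when the audio is not already sampled at the target rate."""
    return wav_sample_rate(wav_bytes) != target


if __name__ == "__main__":
    print(needs_resampling(make_silent_wav(16_000)))
    print(needs_resampling(make_silent_wav(44_100)))
```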

| b3d98d644ab8812c3343b509df02a9f9 |

lmqg/mbart-large-cc25-frquad-qg | lmqg | mbart | 20 | 63 | transformers | 0 | text2text-generation | true | false | false | cc-by-4.0 | ['fr'] | ['lmqg/qg_frquad'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['question generation'] | true | true | true | 6,905 | false |

# Model Card of `lmqg/mbart-large-cc25-frquad-qg`

This model is a fine-tuned version of [facebook/mbart-large-cc25](https://huggingface.co/facebook/mbart-large-cc25) for the question generation task on the [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) dataset (dataset_name: default) via [`lmqg`](https://github.com/asahi417/lm-question-generation).

### Overview

- **Language model:** [facebook/mbart-large-cc25](https://huggingface.co/facebook/mbart-large-cc25)

- **Language:** fr

- **Training data:** [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) (default)

- **Online Demo:** [https://autoqg.net/](https://autoqg.net/)

- **Repository:** [https://github.com/asahi417/lm-question-generation](https://github.com/asahi417/lm-question-generation)

- **Paper:** [https://arxiv.org/abs/2210.03992](https://arxiv.org/abs/2210.03992)

### Usage

- With [`lmqg`](https://github.com/asahi417/lm-question-generation#lmqg-language-model-for-question-generation-)

```python

from lmqg import TransformersQG

# initialize model

model = TransformersQG(language="fr", model="lmqg/mbart-large-cc25-frquad-qg")

# model prediction

questions = model.generate_q(list_context="Créateur » (Maker), lui aussi au singulier, « le Suprême Berger » (The Great Shepherd) ; de l'autre, des réminiscences de la théologie de l'Antiquité : le tonnerre, voix de Jupiter, « Et souvent ta voix gronde en un tonnerre terrifiant », etc.", list_answer="le Suprême Berger")

```

- With `transformers`

```python

from transformers import pipeline

pipe = pipeline("text2text-generation", "lmqg/mbart-large-cc25-frquad-qg")

output = pipe("Créateur » (Maker), lui aussi au singulier, « <hl> le Suprême Berger <hl> » (The Great Shepherd) ; de l'autre, des réminiscences de la théologie de l'Antiquité : le tonnerre, voix de Jupiter, « Et souvent ta voix gronde en un tonnerre terrifiant », etc.")

```
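
In the `transformers` example, the answer span is wrapped in `<hl>` tokens inside the context. The helper below is a small sketch of how such an input string could be built from a context and an answer; the function name `highlight_answer` is hypothetical and is not part of `lmqg` or `transformers`.

```python
def highlight_answer(context, answer, hl_token="<hl>"):
    """Wrap the first occurrence of `answer` in `context` with highlight tokens."""
    start = context.find(answer)
    if start == -1:
        raise ValueError("answer not found in context")
    end = start + len(answer)
    return f"{context[:start]}{hl_token} {answer} {hl_token}{context[end:]}"


if __name__ == "__main__":
    text = "« le Suprême Berger » (The Great Shepherd)"
    print(highlight_answer(text, "le Suprême Berger"))
```

The resulting string follows the same highlighting pattern as the input passed to `pipe` above.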

## Evaluation

- ***Metric (Question Generation)***: [raw metric file](https://huggingface.co/lmqg/mbart-large-cc25-frquad-qg/raw/main/eval/metric.first.sentence.paragraph_answer.question.lmqg_qg_frquad.default.json)

| | Score | Type | Dataset |

|:-----------|--------:|:--------|:-----------------------------------------------------------------|

| BERTScore | 71.48 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| Bleu_1 | 14.36 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| Bleu_2 | 3.58 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| Bleu_3 | 1.45 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| Bleu_4 | 0.72 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| METEOR | 7.78 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| MoverScore | 50.35 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| ROUGE_L | 16.4 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

- ***Metric (Question & Answer Generation, Reference Answer)***: Each question is generated from *the gold answer*. [raw metric file](https://huggingface.co/lmqg/mbart-large-cc25-frquad-qg/raw/main/eval/metric.first.answer.paragraph.questions_answers.lmqg_qg_frquad.default.json)

| | Score | Type | Dataset |

|:--------------------------------|--------:|:--------|:-----------------------------------------------------------------|

| QAAlignedF1Score (BERTScore) | 81.27 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedF1Score (MoverScore) | 55.61 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedPrecision (BERTScore) | 81.29 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedPrecision (MoverScore) | 55.61 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedRecall (BERTScore) | 81.25 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedRecall (MoverScore) | 55.6 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

- ***Metric (Question & Answer Generation, Pipeline Approach)***: Each question is generated on the answer generated by [`lmqg/mbart-large-cc25-frquad-ae`](https://huggingface.co/lmqg/mbart-large-cc25-frquad-ae). [raw metric file](https://huggingface.co/lmqg/mbart-large-cc25-frquad-qg/raw/main/eval_pipeline/metric.first.answer.paragraph.questions_answers.lmqg_qg_frquad.default.lmqg_mbart-large-cc25-frquad-ae.json)

| | Score | Type | Dataset |

|:--------------------------------|--------:|:--------|:-----------------------------------------------------------------|

| QAAlignedF1Score (BERTScore) | 75.55 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedF1Score (MoverScore) | 51.75 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedPrecision (BERTScore) | 74.04 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedPrecision (MoverScore) | 51.03 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedRecall (BERTScore) | 77.16 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

| QAAlignedRecall (MoverScore) | 52.52 | default | [lmqg/qg_frquad](https://huggingface.co/datasets/lmqg/qg_frquad) |

## Training hyperparameters

The following hyperparameters were used during fine-tuning:

- dataset_path: lmqg/qg_frquad

- dataset_name: default

- input_types: ['paragraph_answer']

- output_types: ['question']

- prefix_types: None

- model: facebook/mbart-large-cc25

- max_length: 512

- max_length_output: 32

- epoch: 8

- batch: 4

- lr: 0.001

- fp16: False

- random_seed: 1

- gradient_accumulation_steps: 16

- label_smoothing: 0.15

The full configuration can be found at [fine-tuning config file](https://huggingface.co/lmqg/mbart-large-cc25-frquad-qg/raw/main/trainer_config.json).
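
Two of the values above lend themselves to a short worked example: `batch` with `gradient_accumulation_steps` gives the number of examples per optimizer step, and `label_smoothing` changes the training targets. The sketch below assumes a single device and one common label-smoothing formulation (the smoothing mass spread uniformly over all classes); whether `lmqg` uses exactly this variant is not confirmed by the card.

```python
import math

BATCH = 4
GRADIENT_ACCUMULATION_STEPS = 16
examples_per_update = BATCH * GRADIENT_ACCUMULATION_STEPS  # single device assumed


def smoothed_targets(true_index, num_classes, smoothing=0.15):
    """Target distribution with `smoothing` mass spread uniformly over all classes."""
    targets = [smoothing / num_classes] * num_classes
    targets[true_index] += 1.0 - smoothing
    return targets


def cross_entropy(targets, probs):
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(targets, probs))


if __name__ == "__main__":
    print(examples_per_update)
    targets = smoothed_targets(true_index=1, num_classes=4)
    print(targets)
    print(cross_entropy(targets, [0.1, 0.7, 0.1, 0.1]))
```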

## Citation

```

@inproceedings{ushio-etal-2022-generative,

title = "{G}enerative {L}anguage {M}odels for {P}aragraph-{L}evel {Q}uestion {G}eneration",

author = "Ushio, Asahi and

Alva-Manchego, Fernando and

Camacho-Collados, Jose",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, U.A.E.",

publisher = "Association for Computational Linguistics",

}

```

| 3ee1a395562e0b1e82f9abf439a5c516 |

jg/distilbert-base-uncased-finetuned-emotion | jg | distilbert | 12 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['emotion'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,344 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2199

- F1: 0.9236

- Accuracy: 0.9235

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:------:|:--------:|

| 0.8072 | 1.0 | 250 | 0.3153 | 0.9023 | 0.905 |

| 0.2442 | 2.0 | 500 | 0.2199 | 0.9236 | 0.9235 |
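
The step counts in the table follow from the batch size and the number of epochs. As a rough sketch, assuming one device and no gradient accumulation (neither is stated in the card), the table implies a training split of at most 16,000 examples:

```python
import math

TRAIN_BATCH_SIZE = 64
STEPS_PER_EPOCH = 250  # from the results table (step 250 at epoch 1.0)
NUM_EPOCHS = 2

total_steps = STEPS_PER_EPOCH * NUM_EPOCHS

# With the last batch possibly partial, the split size lies in this range.
max_examples = STEPS_PER_EPOCH * TRAIN_BATCH_SIZE
min_examples = (STEPS_PER_EPOCH - 1) * TRAIN_BATCH_SIZE + 1

print(total_steps, min_examples, max_examples)
print(math.ceil(max_examples / TRAIN_BATCH_SIZE))
```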

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.1.0

- Tokenizers 0.12.1

| d13bff8d6b7152e704292d1f86863bbd |

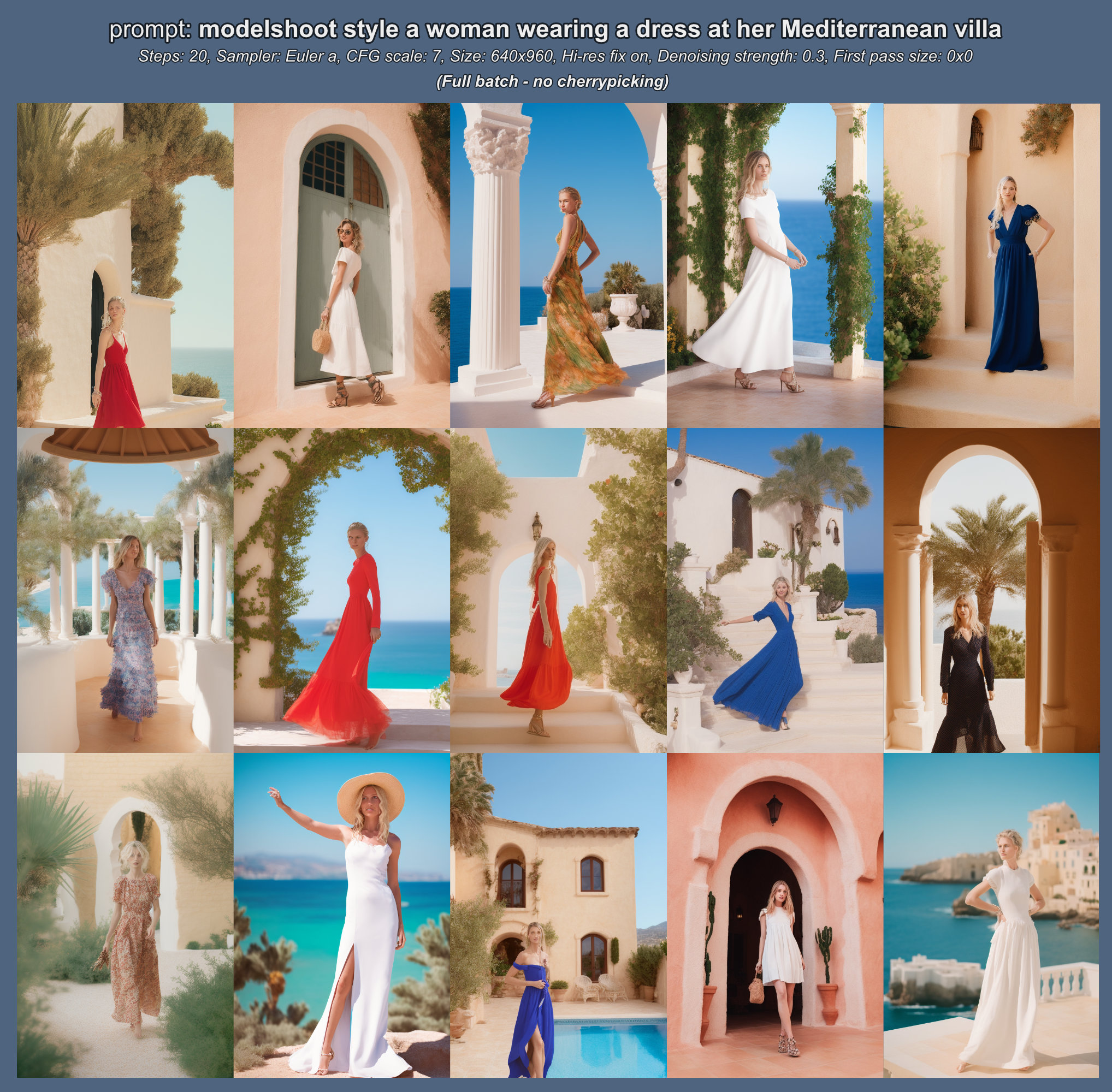

Cosk/sketchstyle-cutesexyrobutts | Cosk | null | 28 | 533 | diffusers | 29 | text-to-image | false | false | false | creativeml-openrail-m | ['en'] | ['Cosk/cutesexyrobutts'] | null | 1 | 0 | 1 | 0 | 0 | 0 | 0 | ['stable-diffusion', 'art', 'cutesexyrobutts', 'style', 'dreambooth'] | false | true | true | 33,766 | false |

# 'Sketchstyle' (cutesexyrobutts style)

Base model: https://huggingface.co/Linaqruf/anything-v3.0.<br />

Used 'fast-DreamBooth' on Google Colab and 768x768 images for all versions.

## NEW: Merges

*Merging sketchstyle models with other models helps improve anatomy and other elements while keeping as much of the intended style as possible.<br />

I will upload new merges from time to time if they improve on the previous ones.<br />

A 'weak' model gives more weight to the cutesexyrobutts style, and a 'strong' model puts a little more focus on the other model or models.<br />

Weak models might maintain a little more of the style but could have some anatomy problems, while strong models keep better anatomy though the style might be slightly affected. A low CFG Scale (5-9) and the "sketchstyle" token in the prompt might help keep the style on strong models.<br />*

**List of merges:**

- Pastelmix 0.2 + sketchstyle_v4-42k 0.8 weak (weighted sum, fp16)

- Pastelmix 0.4 + sketchstyle_v4-42k 0.6 strong (weighted sum, fp16)
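
A "weighted sum" merge combines two checkpoints parameter by parameter. The sketch below illustrates the idea on toy data, with plain Python lists standing in for weight tensors; the function name and the toy checkpoints are hypothetical, and real merging tools operate on full model state dicts.

```python
def weighted_sum_merge(state_a, state_b, weight_b):
    """Per-parameter merge: (1 - weight_b) * a + weight_b * b."""
    if state_a.keys() != state_b.keys():
        raise ValueError("both checkpoints must contain the same parameter names")
    merged = {}
    for name in state_a:
        merged[name] = [
            (1.0 - weight_b) * a + weight_b * b
            for a, b in zip(state_a[name], state_b[name])
        ]
    return merged


if __name__ == "__main__":
    # Toy stand-ins for the two checkpoints.
    pastelmix = {"layer.weight": [1.0, 0.0]}
    sketchstyle = {"layer.weight": [0.0, 1.0]}
    # 'weak' merge: Pastelmix 0.2 + sketchstyle 0.8
    print(weighted_sum_merge(pastelmix, sketchstyle, weight_b=0.8))
    # 'strong' merge: Pastelmix 0.4 + sketchstyle 0.6
    print(weighted_sum_merge(pastelmix, sketchstyle, weight_b=0.6))
```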

**Versions:**

- V1: Trained with around 1300 images (from danbooru), automatically cropped.

- V2: Trained with 400 handpicked and handcropped images.

- V3: Trained with the same images as V2, but with 'style training' enabled.

- V4: Trained with 407 images, including 'captions' for each image.

**Recommended to use:**

- V4-42k (pretty good style and decent anatomy, might be the best)

- V3-40k (decent style and anatomy)

- V4-10k (best anatomy, meh style)

- V4-100k (good style, bad anatomy/hard to use, useful with img2img)

**Usage recommendations:**

- For V4, don't use a CFG Scale over 11-12, as it will generate an overcooked image. Try between 6 and 9 at first. 9 seems to be the best if you're using the 'sketchstyle' token in the prompt; if not, go lower.

- Generating specific characters might be hard, result in bad anatomy, or not work at all. If you want a specific character, it is best to use img2img with an image generated by another model.

- Going over a certain resolution will generate incoherent results, so try staying close to 768x768 (examples: 640x896, 768x960, 640x1024, 832x640, and similar). Maybe Hires fix could help.

- Make sure to add nsfw/nipples/huge or large breasts in the negative prompts if you don't want any of those.

- Skin tone tends to be 'tan'. If that's the case, add dark skin/tan to the negative prompt and/or pale skin to the prompt.

- Using img2img to change the style of another image generally gives the best results, examples below.

Pay attention to this number. Going below 75 normally generates bad results, especially with models with high steps like V4-100k. Best with 100+.

Token: 'sketchstyle' (if used, anatomy may get affected, but it can be useful for models with low steps to get a better style)<br />

**Limitations and known errors:**

- Not very good anatomy

- Sometimes it generates artifacts, especially on the eyes and lips

- Tends to generate skimpy clothes, open clothes, cutouts, and similar

- Might generate unclear outlines

Try using inpainting and/or img2img to fix these.

# Comparison between different versions and models

As you can see, robutts tends to give less coherent results and might need more prompting/steps to get good results (I tried it on other things as well, with similar results).

V2 with 10k steps or fewer tends to give better anatomy, while above that the style becomes more apparent, so 10k is the 'sweet spot'.

Around 40 steps seems to be the best, but you should start with 20 steps and, if you get an image you like, increase the step count to 40 or 50.

Comparison between not completing that negative prompt and increasing the strength too much.

Comparison (using V3-5k) of token strength.

Another comparison of token strength using V3-15k.

Comparison, from 1 to 30 steps, between NovelAI - Sketchstyle V3-27500 (img2img with NovelAI image) - Sketchstyle V3-27500. Using Euler sampler.

# Examples:

```bibtex

Prompt: (masterpiece,best quality,ultra-detailed),((((face close-up)))),((profile)),((lips,pink_eyes)),((pink_hair,hair_slicked_back,hair_strand)),(serious),portrait,frown,arms_up,adjusting_hair,eyelashes,parted_lips,(sportswear,crop_top),toned,collarbone,ponytail,1girl,solo,highres<br />

Negative prompt: (deformed,disfigured),(sitting,fat,thick,thick_thighs,nsfw),open_clothes,open_shirt,(jewelry,earrings,hair_ornament),((sagging_breasts,huge_breasts,shiny,shiny_hair,shiny_skin,realistic,3D,3D game)),((extra_limbs,extra_arms)),(loli,shota),(giant nipples),long body,(lowres),(((poorly drawn fingers, poorly drawn hands))),((anatomic nonsense)),(extra fingers),(fused fingers),(((one hand with more than 5 fingers))),(((one hand with less than 5 fingers))),(bad eyes),(separated eyes),(long neck),((bad proportions)),long body,((poorly drawn eyes)),((poorly drawn)),((bad drawing)),blurry,((mutation)),((bad anatomy)),(multiple arms),((bad face)),((bad eyes)),bad tail,((more than 2 ears)),((poorly drawn face)), (extra limb), ((deformed hands)), (poorly drawn feet), (mutated hands and fingers), extra legs, extra ears, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face maskissing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face mask<br />

Steps: 70, Sampler: Euler, CFG scale: 12, Seed: 1365838486, Size: 768x768, Model: Sketchstyle V3-5k

```

_Eyes fixed with inpainting_:

```bibtex

Prompt: (masterpiece,best quality,ultra-detailed),((((face close-up)))),((profile)),((lips,pink_eyes)),((pink_hair,hair_slicked_back,hair_strand)),(serious),portrait,frown,arms_up,adjusting_hair,eyelashes,parted_lips,(sportswear,crop_top),toned,collarbone,ponytail,1girl,solo,highres<br />

Negative prompt: (deformed,disfigured),(sitting,fat,thick,thick_thighs,nsfw),open_clothes,open_shirt,(jewelry,earrings,hair_ornament),((sagging_breasts,huge_breasts,shiny,shiny_hair,shiny_skin,realistic,3D,3D game)),((extra_limbs,extra_arms)),(loli,shota),(giant nipples),long body,(lowres),(((poorly drawn fingers, poorly drawn hands))),((anatomic nonsense)),(extra fingers),(fused fingers),(((one hand with more than 5 fingers))),(((one hand with less than 5 fingers))),(bad eyes),(separated eyes),(long neck),((bad proportions)),long body,((poorly drawn eyes)),((poorly drawn)),((bad drawing)),blurry,((mutation)),((bad anatomy)),(multiple arms),((bad face)),((bad eyes)),bad tail,((more than 2 ears)),((poorly drawn face)), (extra limb), ((deformed hands)), (poorly drawn feet), (mutated hands and fingers), extra legs, extra ears, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face maskissing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face mask<br />

Steps: 34, Sampler: Euler, CFG scale: 12, Seed: 996011741, Size: 768x768, Denoising strength: 0.6, Mask blur: 8, Model: Sketchstyle V2-10k

```

```bibtex

Prompt: sketchstyle,(masterpiece, best quality,beautiful lighting,stunning,ultra-detailed),(portrait,upper_body,parted_lips),1girl, (nipples), (fox ears,animal_ear_fluff), (bare_shoulders,eyelashes,lips,orange eyes,blush),orange_hair,((onsen,indoors)),(toned),medium_breasts,navel,cleavage,looking at viewer,collarbone,hair bun, solo, highres,(nsfw)<br />

Negative prompt: (dark-skin,dark_nipples,extra_nipples),deformed,disfigured,(sitting,fat,thick,thick_thighs,nsfw),open_clothes,open_shirt,(jewelry,earrings,hair_ornament),((sagging_breasts,huge_breasts,shiny,shiny_hair,shiny_skin,realistic,3D,3D game)),((extra_limbs,extra_arms)),(loli,shota),(giant nipples),long body,(lowres),(((poorly drawn fingers, poorly drawn hands))),((anatomic nonsense)),(extra fingers),(fused fingers),(((one hand with more than 5 fingers))),(((one hand with less than 5 fingers))),(bad eyes),(separated eyes),(long neck),((bad proportions)),long body,((poorly drawn eyes)),((poorly drawn)),((bad drawing)),blurry,((mutation)),((bad anatomy)),(multiple arms),((bad face)),((bad eyes)),bad tail,((more than 2 ears)),((poorly drawn face)), (extra limb), ((deformed hands)), (poorly drawn feet), (mutated hands and fingers), extra legs, extra ears, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face maskissing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face mask<br />

Steps: 30, Sampler: Euler, CFG scale: 12, Seed: 4172541433, Size: 640x832, Model: Sketchstyle V3-5k

```

```bibtex

Prompt: sketchstyle,(masterpiece, best quality,beautiful lighting,stunning,ultra-detailed),(portrait,upper_body),1girl, (nipples), (fox ears,animal_ear_fluff), (bare_shoulders,eyelashes,lips,orange eyes,ringed_eyes,shy,blush),onsen,indoors,medium_breasts, cleavage,looking at viewer,collarbone,hair bun, solo, highres,(nsfw)<br />

Negative prompt: (huge_breasts,large_breasts),realistic,3D,3D Game,nsfw,lowres, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, bad mouth<br />

Steps: 40, Sampler: Euler, CFG scale: 14, Seed: 4268937236, Size: 704x896, Model: Sketchstyle V3-5k

```

```bibtex

Prompt: (masterpiece,best quality,ultra detailed),(((facing_away,sitting,arm_support,thighs,legs))),(((from_behind,toned,ass,bare back,breasts))),((thong,garter_belt,garter_straps,lingerie)),(hair_flower,bed_sheet),(black_hair,braid,braided_ponytail,long_hair),1girl,grey_background,thighs,solo,highres<br />

Negative prompt: ((deformed)),((looking_back,looking_at_viewer,face)),((out_of_frame,cropped)),(fat,thick,thick_thighs),((sagging_breasts,huge_breasts,shiny,shiny_hair,shiny_skin,3D,3D game)),((extra_limbs,extra_arms)),(loli,shota),(giant nipples),long body,(lowres),(((poorly drawn fingers, poorly drawn hands))),((anatomic nonsense)),(extra fingers),(fused fingers),(((one hand with more than 5 fingers))),(((one hand with less than 5 fingers))),(bad eyes),(separated eyes),(long neck),((bad proportions)),long body,((poorly drawn eyes)),((poorly drawn)),((bad drawing)),blurry,((mutation)),((bad anatomy)),(multiple arms),((bad face)),((bad eyes)),bad tail,((more than 2 ears)),((poorly drawn face)), (extra limb), ((deformed hands)), (poorly drawn feet), (mutated hands and fingers), extra legs, extra ears, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, patreon_logo, patreon_username, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face maskissing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face mask<br />

Steps: 50, Sampler: Euler, CFG scale: 12, Seed: 3765393440, Size: 640x832, Model: Sketchstyle V3-5k

```

```bibtex

Prompt: (masterpiece,best quality,ultra detailed),(((facing_away,sitting,arm_support,thighs,legs))),(((from_behind,toned,ass,bare back))),((thong,garter_belt,garter_straps,lingerie)),(hair_flower,bed_sheet),(black_hair,braid,braided_ponytail,long_hair),1girl,grey_background,thighs,solo,highres<br />

Negative prompt: backboob,((deformed)),((looking_back,looking_at_viewer,face)),((out_of_frame,cropped)),(fat,thick,thick_thighs),((sagging_breasts,huge_breasts,shiny,shiny_hair,shiny_skin,3D,3D game)),((extra_limbs,extra_arms)),(loli,shota),(giant nipples),long body,(lowres),(((poorly drawn fingers, poorly drawn hands))),((anatomic nonsense)),(extra fingers),(fused fingers),(((one hand with more than 5 fingers))),(((one hand with less than 5 fingers))),(bad eyes),(separated eyes),(long neck),((bad proportions)),long body,((poorly drawn eyes)),((poorly drawn)),((bad drawing)),blurry,((mutation)),((bad anatomy)),(multiple arms),((bad face)),((bad eyes)),bad tail,((more than 2 ears)),((poorly drawn face)), (extra limb), ((deformed hands)), (poorly drawn feet), (mutated hands and fingers), extra legs, extra ears, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, patreon_logo, patreon_username, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face maskissing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face mask<br />

Steps: 50, Sampler: Euler, CFG scale: 12, Seed: 2346086519, Size: 640x832, Model: Sketchstyle V3-5k

```

```bibtex

Prompt: (masterpiece,best quality,ultra-detailed),(sketchstyle),(arms_up,tying_hair),(large_breasts,nipples),(long_hair,blonde_hair,tied_hair,ponytail,collarbone,navel,stomach,midriff,completely_nude,nude,toned),((cleft_of_venus,pussy)),cloudy_sky,1girl,solo,highres,(nsfw)<br />

Negative prompt: (deformed,disfigured,bad proportions,exaggerated),from_behind,(jewelry,earrings,hair_ornament),((sagging_breasts,huge_breasts,shiny,shiny_hair,shiny_skin,realistic,3D,3D game)),((extra_limbs,extra_arms)),(loli,shota),(giant nipples),((fat,thick,thick_thighs)),long body,(lowres),(((poorly drawn fingers, poorly drawn hands))),((anatomic nonsense)),(extra fingers),(fused fingers),(((one hand with more than 5 fingers))),(((one hand with less than 5 fingers))),(bad eyes),(separated eyes),(long neck),((bad proportions)),long body,((poorly drawn eyes)),((poorly drawn)),((bad drawing)),blurry,((mutation)),((bad anatomy)),(multiple arms),((bad face)),((bad eyes)),bad tail,((more than 2 ears)),((poorly drawn face)), (extra limb), ((deformed hands)), (poorly drawn feet), (mutated hands and fingers), extra legs, extra ears, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face maskissing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face mask<br />

Steps: 40, Sampler: Euler, CFG scale: 12, Seed: 4024165718, Size: 704x960, Model: Sketchstyle V3-5k

```

```bibtex

Prompt: (masterpiece,best quality),(sketchstyle),((1boy,male_focus)),((close-up,portrait)),((black_shirt)),((((red collared_coat)))),((dante_\(devil_may_cry\),devil may cry)),((medium_hair,parted_hair,parted_bangs,forehead,white_hair)),((stubble)),(facial_hair),(popped_collar,open_coat),(closed_mouth,smile),blue_eyes,looking_at_viewer,solo,highres<br />

Negative prompt: ((deformed)),(nsfw),(long_hair,short_hair,young,genderswap,1girl,female,breasts,androgynous),((choker)),(shiny,shiny_hair,shiny_skin,3D,3D game),((extra_limbs,extra_arms)),(loli,shota),(giant nipples),((fat,thick,thick_thighs)),long body,(lowres),(((poorly drawn fingers, poorly drawn hands))),((anatomic nonsense)),(extra fingers),(fused fingers),(((one hand with more than 5 fingers))),(((one hand with less than 5 fingers))),(bad eyes),(separated eyes),(long neck),((bad proportions)),long body,((poorly drawn eyes)),((poorly drawn)),((bad drawing)),blurry,((mutation)),((bad anatomy)),(multiple arms),((bad face)),((bad eyes)),bad tail,((more than 2 ears)),((poorly drawn face)), (extra limb), ((deformed hands)), (poorly drawn feet), (mutated hands and fingers), extra legs, extra ears, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face maskissing fingers, bad hands, extra digit, fewer digits, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled,monochrome, greyscale,face mask<br />

Steps: 50, Sampler: Euler, CFG scale: 12, Seed: 4166887955, Size: 768x768, Model: Sketchstyle V3-5k

```
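The generation settings in the examples above follow a `Key: value, Key: value` layout, so they can be read back into a dictionary when reproducing a result. The following is a minimal sketch, assuming no value itself contains `", "`; the helper name `parse_settings` is hypothetical and not part of any tool mentioned here.

```python
def parse_settings(line: str) -> dict[str, str]:
    """Split a 'Key: value, Key: value' settings line into a dict of strings."""
    settings = {}
    for part in line.split(", "):
        # partition keeps everything after the first ': ' as the value
        key, _, value = part.partition(": ")
        settings[key.strip()] = value.strip()
    return settings


# Settings line copied from the last example above
example = "Steps: 50, Sampler: Euler, CFG scale: 12, Seed: 4166887955, Size: 768x768, Model: Sketchstyle V3-5k"
parsed = parse_settings(example)

# 'Size' is stored as WIDTHxHEIGHT
width, height = (int(n) for n in parsed["Size"].split("x"))
print(parsed["Steps"], parsed["Sampler"], width, height)
```

Settings lines that carry a free-text prefix or slash-separated values, such as some of the img2img entries below, would need extra handling.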

# img2img style change examples:

```bibtex

Original settings: Model: NovelAI, Steps: 30, Sampler: Euler a, CFG scale: 16, Seed: 3633297035, Size: 640x960<br />

Original prompt: masterpiece, best quality, 1girl, naked towel, fox ears, orange eyes, wet, ringed eyes, shy, medium breasts, cleavage, looking at viewer, hair bun, blush, solo, highres<br />

Original negative prompt: lowres, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, bad mouth<br />

New settings: Model: Sketchstyle V3 5k steps, Steps: 33, CFG scale: 12, Seed: 3311014108, Size: 640x960, Denoising strength: 0.6, Mask blur: 4<br />

New prompt: ((sketchstyle)),(masterpiece, best quality,beautiful lighting,stunning,ultra-detailed),(portrait,upper_body),1girl, (((naked_towel,towel))), (fox ears,animal_ear_fluff), (bare_shoulders,eyelashes,lips,orange eyes,ringed_eyes,shy,blush),onsen,indoors,medium_breasts, cleavage,looking at viewer,collarbone,hair bun, solo, highres<br />

New negative prompt: (nipples,huge_breasts,large_breasts),realistic,3D,3D Game,nsfw,lowres, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, bad mouth<br />

```

```bibtex

Original settings: Model: NovelAI, Steps: 30, Sampler: Euler a, CFG scale: 16, Seed: 764529639, Size: 640x960<br />

Prompt: masterpiece, highest quality, (1girl), (looking at viewer), ((pov)), fox ears, ((leaning forward)), [light smile], ((camisole)), short shorts, (cleavage), (((medium breasts))), blonde, (high ponytail), (highres)<br />

Negative prompt: ((deformed)), (duplicated), lowres, ((missing animal ears)), ((poorly drawn face)), ((poorly drawn eyes)), (extra limb), (mutation), ((deformed hands)), (((poorly drawn hands))), (poorly drawn feet), (fused toes), (fused fingers), (mutated hands and fingers), (one hand with more than 5 fingers), (one hand with less than 5 fingers), extra toes, missing toes, extra feet, extra legs, extra ears, missing ear, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled, huge breasts, black and white, monochrome, 3D Game, 3D, realistic, realism, huge breasts<br />

New settings: Model: Sketchstyle V3 5k steps, Steps: 28, CFG scale: 12, Seed: 1866024520, Size: 640x960, Denoising strength: 0.7, Mask blur: 8

```

```bibtex

Original settings: Model: NovelAI, Steps: 25, Sampler: Euler a, CFG scale: 11, Seed: 2604970030, Size: 640x896<br />

Original prompt: (masterpiece),(best quality),((sketch)),(ultra detailed),(1girl, teenage),((white hair, messy hair)),((expressionless)),(black jacket, long sleeves),((grey scarf)),((squatting)), (hands on own knees),((plaid_skirt, pleated skirt, miniskirt)),(fox ears, extra ears, white fox tail, fox girl, animal ear fluff),black ((boots)),full body,bangs,ahoge,(grey eyes),solo,absurdres<br />

Negative prompt: ((deformed)),((loli, young)),(kneehighs,thighhighs),long body, long legs),lowres,((((poorly drawn fingers, poorly drawn hands)))),((anatomic nonsense)),(extra fingers),((fused fingers)),(plaid scarf),(spread legs),((one hand with more than 5 fingers)), ((one hand with less than 5 fingers)),((bad eyes)),(twin, multiple girls, 2girls),(separated eyes),(long neck),((bad proportions)),(bad lips),((thick lips)),loli,long body,(((poorly drawn eyes))),((poorly drawn)),((bad drawing)),(blurry),(((mutation))),(((bad anatomy))),(((multiple arms))),(((bad face))),(((bad eyes))),bad tail,(((more than 2 ears)), (((poorly drawn face))), (extra limb), ((deformed hands)), (poorly drawn feet), (fused toes), (mutated hands and fingers), extra toes, missing toes, extra feet, extra legs, extra ears, missing ear, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled, huge breasts, black and white, monochrome, 3D Game, 3D, (realistic), face mask<br />

New settings: Model: Sketchstyle V3 5k steps, Steps: 45, CFG scale: 12, Seed: 1073378414, Size: 640x896, Denoising strength: 0.6, Mask blur: 8<br />

New prompt: (masterpiece),(best quality),(sketchstyle),(ultra detailed),(1girl, teenage),((white hair, messy hair)),((expressionless)),(black jacket, long sleeves),((grey scarf)),((squatting)), (hands on own knees),((plaid_skirt, pleated skirt, miniskirt)),(fox ears, extra ears, white fox tail, fox girl, animal ear fluff),black ((boots)),full body,bangs,ahoge,(grey eyes),solo,absurdres<br />

```

```bibtex

Original settings: Model: NovelAI, Steps: 30, Sampler: Euler a, CFG scale: 12, Seed: 3659534337, Size: 768x832<br />

Original prompt: ((masterpiece)), ((highest quality)),(((ultra-detailed))),(illustration),(1girl), portrait,((wolf ears)),(beautiful eyes),looking at viewer,dress shirt,shadows,((ponytail)), (white hair), ((sidelocks)),outdoors,bangs, solo, highres<br />

Original negative prompt: ((deformed)), lowres,loli,((monochrome)),(black and white),((lips)),long body,(((poorly drawn eyes))),((out of frame)),((poorly drawn)),((bad drawing)),(blurry),depth of field,(fused fingers),(((mutation))),((bad anatomy)),(((multiple arms))),(((bad face))),(((bad eyes))),bad tail,(((more than 2 ears)), (((poorly drawn face))), (extra limb), ((deformed hands)), (((poorly drawn hands))), (poorly drawn feet), (fused toes), (mutated hands and fingers), (one hand with more than 5 fingers), (one hand with less than 5 fingers), extra toes, missing toes, extra feet, extra legs, extra ears, missing ear, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled, huge breasts, black and white, monochrome, 3D Game, 3D, realism, face mask<br />

New settings: Model: Sketchstyle V3-20k 2000steps text encoder, Steps: 80, CFG scale: 12, Seed: 3001145714, Size: 768x832, Denoising strength: 0.5, Mask blur: 4<br />

New prompt: ((sketchstyle)),(masterpiece,best quality,highest quality,illustration),((ultra-detailed)),1girl,(portrait,close-up),((wolf_girl,wolf_ears)),(eyelashes,detailed eyes,beautiful eyes),looking at viewer,(collared-shirt,white_shirt),((ponytail)), (white hair), ((sidelocks)),(blue eyes),closed_mouth,(shadows,outdoors,sunlight,grass,trees),hair_between_eyes,bangs,solo,highres<br />

New negative prompt: ((deformed)),(less than 5 fingers, more than 5 fingers,bad hands,bad hand anatomy,missing fingers, extra fingers, mutated hands, disfigured hands, deformed hands),lowres,loli,((monochrome)),(black and white),((lips)),long body,(((poorly drawn eyes))),((out of frame)),((poorly drawn)),((bad drawing)),(blurry),depth of field,(fused fingers),(((mutation))),((bad anatomy)),(((multiple arms))),(((bad face))),(((bad eyes))),bad tail,(((more than 2 ears)), (((poorly drawn face))), (extra limb), ((deformed hands)), (((poorly drawn hands))), (poorly drawn feet), (fused toes), (mutated hands and fingers), (one hand with more than 5 fingers), (one hand with less than 5 fingers), extra toes, missing toes, extra feet, extra legs, extra ears, missing ear, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, bad mouth, animal hands, censored, blurry lines, wacky outlines, unclear outlines, doubled, huge breasts, black and white, monochrome, 3D Game, 3D, realism, face mask<br />

```

```bibtex

Original settings: Model: NovelAI, Steps: 20, Sampler: Euler, CFG scale: 11, Seed: 2413712316, Size: 768x768<br />

Original prompt: (masterpiece,best quality,ultra-detailed,detailed_eyes),(sketch),((portrait,face focus)),(((shaded eyes))),(wavy hair),(((ringed eyes,red_hair))),((black hair ribbon)),((hair behind ear)),(((short ponytail))),(blush lines),(good anatomy),(((hair strands))),(bangs),((lips)),[teeth, tongue],yellow eyes,(eyelashes),shirt, v-neck,collarbone,cleavage,breasts,(medium hair),(sidelocks),looking at viewer,(shiny hair),1girl,solo,highres<br />

Original negative prompt: ((deformed)),lowres,(black hair),(formal),earrings,(twin, multiple girls, 2girls),(braided bangs),((big eyes)),((close up, eye focus)),(separated eyes),(multiple eyebrows),((eyebrows visible through hair)),(long neck),(bad lips),(tongue out),((thick lips)),(from below),loli,long body,(((poorly drawn eyes))),((poorly drawn)),((bad drawing)),((blurry)),depth of field,(fused fingers),(((mutation))),(((bad anatomy))),(((multiple arms))),(((bad face))),(((bad eyes))),bad tail,(((more than 2 ears)), (((poorly drawn face))), (extra limb), ((deformed hands)), (((poorly drawn hands))), (poorly drawn feet), (fused toes), (mutated hands and fingers), (one hand with more than 5 fingers), (one hand with less than 5 fingers), extra toes, missing toes, extra feet, extra legs, extra ears, missing ear, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, bad mouth, animal hands, censored,doubled, huge breasts, black and white, monochrome, 3D Game, 3D, (realistic), face mask<br />

New settings: (img2img with original image, then again with the new generated image, inpainted to fix the neck) Model: Sketchstyle V3-27.5k 2000steps text encoder, Steps: 80, CFG scale: 12, Seed: 1237755461 / 1353966202, Size: 832x832, Denoising strength: 0.5 / 0.3, Mask blur: 4<br />

New prompt: sketchstyle,(masterpiece,best quality,ultra-detailed,detailed_eyes),(((portrait,face focus,close-up))),(((shaded eyes))),(wavy hair),(((ringed eyes,red_hair))),((black hair ribbon)),((hair behind ear)),(((short ponytail))),(blush lines),(good anatomy),(((hair strands))),(bangs),((lips)),[teeth, tongue],(yellow eyes,eyelashes,tsurime,slanted_eyes),shirt, v-neck,collarbone,breasts,(medium hair),(sidelocks),looking at viewer,(shiny hair),1girl,solo,highres<br />

New negative prompt: ((deformed)),((loli,young)),lowres,(black hair),(formal),earrings,(twin, multiple girls, 2girls),(braided bangs),((big eyes)),((close up, eye focus)),(separated eyes),(multiple eyebrows),((eyebrows visible through hair)),(long neck),(bad lips),(tongue out),((thick lips)),(from below),loli,long body,(((poorly drawn eyes))),((poorly drawn)),((bad drawing)),((blurry)),depth of field,(fused fingers),(((mutation))),(((bad anatomy))),(((multiple arms))),(((bad face))),(((bad eyes))),bad tail,(((more than 2 ears)), (((poorly drawn face))), (extra limb), ((deformed hands)), (((poorly drawn hands))), (poorly drawn feet), (fused toes), (mutated hands and fingers), (one hand with more than 5 fingers), (one hand with less than 5 fingers), extra toes, missing toes, extra feet, extra legs, extra ears, missing ear, extra hands, bad feet, bad anatomy, bad hands, text, error, missing fingers, bad hands, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts,signature, watermark, username, blurry, artist name, bad face, bad mouth, animal hands, censored,doubled, huge breasts, black and white, monochrome, 3D Game, 3D, (realistic), face mask<br />

```

| dc337dec4b1704b0d68424945b50efc2 |

sania-nawaz/finetuning-sentiment-model-3000-samples | sania-nawaz | distilbert | 13 | 12 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['imdb'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,055 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3286

- Accuracy: 0.8667

- F1: 0.8667
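For reference, accuracy and F1 for a binary classifier can be computed as in the sketch below. The labels are toy values chosen for illustration, not outputs of this model, and the function name is hypothetical. The toy case also shows how accuracy and F1 can coincide, as they do in the reported results, when false positives and false negatives balance out.

```python
def accuracy_and_f1(y_true, y_pred, positive=1):
    """Return (accuracy, F1 of the positive class) for two equal-length label lists."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1


# Toy sentiment labels: 1 = positive review, 0 = negative review
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1]
acc, f1 = accuracy_and_f1(y_true, y_pred)
print(f"accuracy={acc:.4f} f1={f1:.4f}")
```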

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

| 41729641147facf0eb8fb402e6b9305e |

pkachhad/t5-base-finetuned-parth | pkachhad | t5 | 13 | 3 | transformers | 0 | text2text-generation | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,791 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-finetuned-parth

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.3764

- Rouge1: 27.5144

- Rouge2: 22.6391

- Rougel: 25.9369

- Rougelsum: 27.1193

- Gen Len: 17.5

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| No log | 1.0 | 4 | 2.7016 | 27.6196 | 22.7595 | 25.9443 | 27.2369 | 17.5 |

| No log | 2.0 | 8 | 2.5425 | 27.6196 | 22.7595 | 25.9443 | 27.2369 | 17.5 |

| No log | 3.0 | 12 | 2.4526 | 27.6196 | 22.7595 | 25.9443 | 27.2369 | 17.5 |

| No log | 4.0 | 16 | 2.3977 | 27.6196 | 22.7595 | 25.9443 | 27.2369 | 17.5 |

| No log | 5.0 | 20 | 2.3764 | 27.5144 | 22.6391 | 25.9369 | 27.1193 | 17.5 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

| 2a2f6e3e6b27090868e8daef9b03f68e |

RASMUS/wav2vec2-xlsr-fi-lm-1B | RASMUS | wav2vec2 | 18 | 5 | transformers | 1 | automatic-speech-recognition | true | false | false | apache-2.0 | ['fi'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer', 'automatic-speech-recognition', 'robust-speech-event', 'hf-asr-leaderboard'] | true | true | true | 2,276 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-xlsr-fi-lm-1B

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-1b](https://huggingface.co/facebook/wav2vec2-xls-r-1b) on the Common Voice train/dev/other datasets.

It achieves the following results on the evaluation set without language model:

- Loss: 0.1853

- Wer: 0.2205

With language model:

- Wer: 0.1026
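Wer is the word error rate: the word-level edit distance between the reference transcript and the model output, divided by the number of reference words. A minimal sketch of that computation follows, assuming whitespace tokenization and no text normalization; the evaluation behind the numbers above may have normalized text differently.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed reference prefix and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# One missing word out of six reference words
wer = word_error_rate("the cat sat on the mat", "the cat sat on mat")
print(round(wer, 4))
```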

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 10

- mixed_precision_training: Native AMP
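The linear scheduler with warmup listed above can be sketched as a plain function of the step number. This is an illustration rather than the Trainer's own code: it assumes the rate ramps linearly from 0 to the peak over the 500 warmup steps and then decays linearly to 0 at the last step, and it takes the total of 6000 steps from the final row of the training results table.

```python
def linear_schedule_lr(step, base_lr=3e-4, warmup_steps=500, total_steps=6000):
    """Learning rate at a given optimizer step for linear warmup plus linear decay."""
    if step < warmup_steps:
        # Warmup: ramp from 0 up to base_lr
        return base_lr * step / warmup_steps
    # Decay: fall from base_lr at the end of warmup to 0 at total_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / (total_steps - warmup_steps)


for step in (0, 250, 500, 3250, 6000):
    print(step, linear_schedule_lr(step))
```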

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.8158 | 0.67 | 400 | 0.4835 | 0.6310 |

| 0.5679 | 1.33 | 800 | 0.4806 | 0.5538 |

| 0.6055 | 2.0 | 1200 | 0.3888 | 0.5083 |

| 0.5353 | 2.67 | 1600 | 0.3258 | 0.4365 |

| 0.4883 | 3.33 | 2000 | 0.3313 | 0.4204 |

| 0.4513 | 4.0 | 2400 | 0.2924 | 0.3904 |

| 0.3753 | 4.67 | 2800 | 0.2593 | 0.3608 |

| 0.3478 | 5.33 | 3200 | 0.2832 | 0.3551 |

| 0.3796 | 6.0 | 3600 | 0.2495 | 0.3402 |

| 0.2556 | 6.67 | 4000 | 0.2342 | 0.3106 |

| 0.229 | 7.33 | 4400 | 0.2181 | 0.2812 |

| 0.205 | 8.0 | 4800 | 0.2041 | 0.2523 |

| 0.1654 | 8.67 | 5200 | 0.2015 | 0.2416 |

| 0.152 | 9.33 | 5600 | 0.1942 | 0.2294 |

| 0.1569 | 10.0 | 6000 | 0.1853 | 0.2205 |

### Framework versions

- Transformers 4.16.0.dev0

- Pytorch 1.10.1+cu102

- Datasets 1.17.1.dev0

- Tokenizers 0.11.0

| 56db68be00fa9d3a682990b7fca040bb |

Tristan/gpt2-summarization_reward_model | Tristan | null | 5 | 0 | null | 0 | null | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,533 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-summarization_reward_model

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7473

- Accuracy: 0.6006

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- distributed_type: multi-GPU

- num_devices: 16

- total_train_batch_size: 64

- total_eval_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5
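The total batch sizes in the list above follow from the per-device batch size, the number of devices, and any gradient accumulation. A small sketch of that arithmetic, with a hypothetical helper name:

```python
def total_batch_size(per_device, num_devices=1, grad_accum_steps=1):
    """Number of examples contributing to one optimizer step."""
    return per_device * num_devices * grad_accum_steps


# This card: batch size 4 on each of 16 devices, no gradient accumulation listed
print(total_batch_size(4, num_devices=16))

# The wav2vec2 card earlier in this dump: batch size 8 with 4 accumulation steps
print(total_batch_size(8, grad_accum_steps=4))
```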

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6421 | 1.0 | 1451 | 0.6815 | 0.6036 |

| 0.5893 | 2.0 | 2902 | 0.6764 | 0.6048 |

| 0.5488 | 3.0 | 4353 | 0.7074 | 0.6012 |

| 0.5187 | 4.0 | 5804 | 0.7254 | 0.6009 |

| 0.5034 | 5.0 | 7255 | 0.7473 | 0.6006 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1+cu117

- Datasets 2.8.0

- Tokenizers 0.13.2

| ce6b6a8d7014d4b2f3ed60fee98348a3 |

deepset/deberta-v3-large-squad2 | deepset | deberta-v2 | 9 | 4,107 | transformers | 15 | question-answering | true | false | false | cc-by-4.0 | ['en'] | ['squad_v2'] | null | 6 | 0 | 4 | 2 | 2 | 1 | 1 | ['deberta', 'deberta-v3', 'deberta-v3-large'] | true | true | true | 4,273 | false |

# deberta-v3-large for QA

This is the [deberta-v3-large](https://huggingface.co/microsoft/deberta-v3-large) model, fine-tuned using the [SQuAD2.0](https://huggingface.co/datasets/squad_v2) dataset. It's been trained on question-answer pairs, including unanswerable questions, for the task of Question Answering.

## Overview

**Language model:** deberta-v3-large

**Language:** English

**Downstream-task:** Extractive QA

**Training data:** SQuAD 2.0

**Eval data:** SQuAD 2.0

**Code:** See [an example QA pipeline on Haystack](https://haystack.deepset.ai/tutorials/first-qa-system)

**Infrastructure:** 1x NVIDIA A10G

## Hyperparameters

```

batch_size = 2

grad_acc_steps = 32

n_epochs = 6

base_LM_model = "microsoft/deberta-v3-large"

max_seq_len = 512

learning_rate = 7e-6

lr_schedule = LinearWarmup

warmup_proportion = 0.2

doc_stride=128

max_query_length=64

```
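The `max_seq_len`, `max_query_length`, and `doc_stride` values interact when a passage is too long for one input: the passage is split into overlapping windows. The sketch below shows one way this can work, under two assumptions that the card does not confirm: `doc_stride` is the step between consecutive window starts, and three special tokens are reserved per input. The function name is hypothetical.

```python
def split_into_windows(num_doc_tokens, max_doc_len, doc_stride):
    """Return (start, end) token offsets of overlapping passage windows."""
    windows = []
    start = 0
    while start < num_doc_tokens:
        end = min(start + max_doc_len, num_doc_tokens)
        windows.append((start, end))
        if end == num_doc_tokens:
            break
        start += doc_stride
    return windows


max_seq_len = 512
max_query_length = 64
special_tokens = 3  # assumption: one leading token and two separators
# Worst case: the question uses its full budget
max_doc_len = max_seq_len - max_query_length - special_tokens

windows = split_into_windows(1000, max_doc_len, doc_stride=128)
print(max_doc_len, windows)
```

With a 1000-token passage this yields six windows, each starting 128 tokens after the previous one, so neighbouring windows share most of their tokens.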

## Usage

### In Haystack

Haystack is an NLP framework by deepset. You can use this model in a Haystack pipeline to do question answering at scale (over many documents). To load the model in [Haystack](https://github.com/deepset-ai/haystack/):

```python

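# Assumes Haystack 1.x, where both reader classes are exported from haystack.nodes

from haystack.nodes import FARMReader, TransformersReader

reader = FARMReader(model_name_or_path="deepset/deberta-v3-large-squad2")

# or

reader = TransformersReader(model_name_or_path="deepset/deberta-v3-large-squad2", tokenizer="deepset/deberta-v3-large-squad2")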

```

### In Transformers

```python

from transformers import AutoModelForQuestionAnswering, AutoTokenizer, pipeline

model_name = "deepset/deberta-v3-large-squad2"

# a) Get predictions

nlp = pipeline('question-answering', model=model_name, tokenizer=model_name)

QA_input = {

    'question': 'Why is model conversion important?',

    'context': 'The option to convert models between FARM and transformers gives freedom to the user and let people easily switch between frameworks.'

}

res = nlp(QA_input)

# b) Load model & tokenizer

model = AutoModelForQuestionAnswering.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

```
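When the model and tokenizer are loaded directly (option b), an extractive QA model produces one start score and one end score per token, and the answer is the span with the best combined score. The sketch below illustrates that selection step in plain Python with made-up logits; it is not the pipeline's own post-processing, which also handles unanswerable questions and maps tokens back to character offsets.

```python
def best_span(start_logits, end_logits, max_answer_len=30):
    """Return ((start, end), score) for the highest-scoring valid token span."""
    best = (0, 0)
    best_score = float("-inf")
    for i, start_score in enumerate(start_logits):
        # The end token may not precede the start token
        for j in range(i, min(i + max_answer_len, len(end_logits))):
            score = start_score + end_logits[j]
            if score > best_score:
                best_score = score
                best = (i, j)
    return best, best_score


# Hypothetical tokens and logits, for illustration only
tokens = ["gives", "freedom", "to", "the", "user"]
start_logits = [0.1, 2.5, 0.3, 0.2, 0.4]
end_logits = [0.0, 0.5, 0.1, 0.3, 3.0]

(span_start, span_end), score = best_span(start_logits, end_logits)
answer = " ".join(tokens[span_start:span_end + 1])
print(answer, score)
```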

## Performance

Evaluated on the SQuAD 2.0 dev set with the [official eval script](https://worksheets.codalab.org/rest/bundles/0x6b567e1cf2e041ec80d7098f031c5c9e/contents/blob/).

```

"exact": 87.6105449338836,

"f1": 90.75307008866517,

"total": 11873,

"HasAns_exact": 84.37921727395411,

"HasAns_f1": 90.6732795483674,

"HasAns_total": 5928,

"NoAns_exact": 90.83263246425568,

"NoAns_f1": 90.83263246425568,

"NoAns_total": 5945

```
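The overall `exact` and `f1` scores are the count-weighted averages of the answerable (`HasAns`) and unanswerable (`NoAns`) subsets. The short check below recomputes them from the figures reported above.

```python
# Figures copied from the evaluation output above
has_ans = {"exact": 84.37921727395411, "f1": 90.6732795483674, "total": 5928}
no_ans = {"exact": 90.83263246425568, "f1": 90.83263246425568, "total": 5945}


def combine(metric):
    """Weight each subset's score by its number of questions."""
    total = has_ans["total"] + no_ans["total"]
    weighted = (has_ans[metric] * has_ans["total"]
                + no_ans[metric] * no_ans["total"])
    return weighted / total


overall_exact = combine("exact")
overall_f1 = combine("f1")
print(has_ans["total"] + no_ans["total"], overall_exact, overall_f1)
```

For unanswerable questions exact match and F1 are identical, since a prediction is either the empty answer or it is not.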

## About us

<div class="grid lg:grid-cols-2 gap-x-4 gap-y-3">

<div class="w-full h-40 object-cover mb-2 rounded-lg flex items-center justify-center">

<img alt="" src="https://huggingface.co/spaces/deepset/README/resolve/main/haystack-logo-colored.svg" class="w-40"/>

</div>

<div class="w-full h-40 object-cover mb-2 rounded-lg flex items-center justify-center">

<img alt="" src="https://huggingface.co/spaces/deepset/README/resolve/main/deepset-logo-colored.svg" class="w-40"/>

</div>

</div>

[deepset](http://deepset.ai/) is the company behind the open-source NLP framework [Haystack](https://haystack.deepset.ai/), which is designed to help you build production-ready NLP systems for question answering, summarization, ranking, etc.

Some of our other work:

- [Distilled roberta-base-squad2 (aka "tinyroberta-squad2")](https://huggingface.co/deepset/tinyroberta-squad2)

- [German BERT (aka "bert-base-german-cased")](https://deepset.ai/german-bert)

- [GermanQuAD and GermanDPR datasets and models (aka "gelectra-base-germanquad", "gbert-base-germandpr")](https://deepset.ai/germanquad)

## Get in touch and join the Haystack community

<p>For more info on Haystack, visit our <strong><a href="https://github.com/deepset-ai/haystack">GitHub</a></strong> repo and <strong><a href="https://haystack.deepset.ai">Documentation</a></strong>.

We also have a <strong><a class="h-7" href="https://haystack.deepset.ai/community/join">Discord community open to everyone!</a></strong></p>

[Twitter](https://twitter.com/deepset_ai) | [LinkedIn](https://www.linkedin.com/company/deepset-ai/) | [Discord](https://haystack.deepset.ai/community/join) | [GitHub Discussions](https://github.com/deepset-ai/haystack/discussions) | [Website](https://deepset.ai)

By the way: [we're hiring!](http://www.deepset.ai/jobs)

| 368c912f4fcdfaea2940d24c924d3103 |

gchhablani/fnet-base-finetuned-rte | gchhablani | fnet | 45 | 5 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['en'] | ['glue'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer', 'fnet-bert-base-comparison'] | true | true | true | 2,198 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# fnet-base-finetuned-rte

This model is a fine-tuned version of [google/fnet-base](https://huggingface.co/google/fnet-base) on the GLUE RTE dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6978

- Accuracy: 0.6282

The model was fine-tuned to compare [google/fnet-base](https://huggingface.co/google/fnet-base) as introduced in [this paper](https://arxiv.org/abs/2105.03824) against [bert-base-cased](https://huggingface.co/bert-base-cased).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

This model was trained using the [run_glue](https://github.com/huggingface/transformers/blob/master/examples/pytorch/text-classification/run_glue.py) script. The following command was used:

```bash

#!/usr/bin/bash

python ../run_glue.py \
  --model_name_or_path google/fnet-base \
  --task_name rte \
  --do_train \
  --do_eval \
  --max_seq_length 512 \
  --per_device_train_batch_size 16 \
  --learning_rate 2e-5 \
  --num_train_epochs 3 \
  --output_dir fnet-base-finetuned-rte \
  --push_to_hub \
  --hub_strategy all_checkpoints \
  --logging_strategy epoch \
  --save_strategy epoch \
  --evaluation_strategy epoch
```

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6829 | 1.0 | 156 | 0.6657 | 0.5704 |

| 0.6174 | 2.0 | 312 | 0.6784 | 0.6101 |

| 0.5141 | 3.0 | 468 | 0.6978 | 0.6282 |
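
The step counts in the table above follow from the batch size and the size of the training split. The sketch below reproduces them, assuming the GLUE RTE training split holds 2,490 examples and that training ran on a single device; both are assumptions that the card does not state.

```python
import math


def steps_per_epoch(num_examples, batch_size):
    """Number of optimizer steps needed to see every example once."""
    return math.ceil(num_examples / batch_size)


RTE_TRAIN_EXAMPLES = 2490  # assumption: size of the GLUE RTE training split
TRAIN_BATCH_SIZE = 16      # from the hyperparameters listed above
NUM_EPOCHS = 3

per_epoch = steps_per_epoch(RTE_TRAIN_EXAMPLES, TRAIN_BATCH_SIZE)
total_steps = per_epoch * NUM_EPOCHS

print(per_epoch, total_steps)
```

Under these assumptions the last batch of each epoch is only partially filled, which is why the count is rounded up.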

### Framework versions

- Transformers 4.11.0.dev0

- Pytorch 1.9.0

- Datasets 1.12.1

- Tokenizers 0.10.3

| c5e18835dae5d80019789c5da98f74da |

Amir13/xlm-roberta-base-fa-base-ner | Amir13 | xlm-roberta | 12 | 6 | transformers | 0 | token-classification | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,713 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-fa-base-ner

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2856

- Precision: 0.5353

- Recall: 0.5704

- F1: 0.5523

- Accuracy: 0.9168

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5
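
To make the `linear` scheduler concrete, the sketch below computes the learning rate at a given step, assuming no warmup (the card lists no warmup setting) and the 2,555 total steps reported in the training results. The function `linear_lr` is a hypothetical helper written for illustration, not the Trainer's own code.

```python
def linear_lr(step, total_steps, base_lr=2e-5, warmup_steps=0):
    """Linear warmup (if any) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


TOTAL_STEPS = 2555  # 5 epochs x 511 steps, as reported in the results table

# Learning rate at the end of each epoch (assumes warmup_steps=0).
for epoch in range(6):
    step = epoch * 511
    print(epoch, linear_lr(step, TOTAL_STEPS))
```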

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.673 | 1.0 | 511 | 0.4067 | 0.4767 | 0.3981 | 0.4339 | 0.8956 |

| 0.3673 | 2.0 | 1022 | 0.3279 | 0.4611 | 0.5138 | 0.4860 | 0.9031 |

| 0.2998 | 3.0 | 1533 | 0.2977 | 0.5265 | 0.4976 | 0.5116 | 0.9132 |

| 0.2616 | 4.0 | 2044 | 0.2860 | 0.5365 | 0.5477 | 0.5420 | 0.9151 |

| 0.2394 | 5.0 | 2555 | 0.2856 | 0.5353 | 0.5704 | 0.5523 | 0.9168 |
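
The F1 column is the harmonic mean of precision and recall. The sketch below recomputes it from the rounded values in the table above; because the inputs are rounded to four decimals, the results may differ from the reported F1 in the last digit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) per epoch, copied from the table above.
rows = [
    (0.4767, 0.3981, 0.4339),
    (0.4611, 0.5138, 0.4860),
    (0.5265, 0.4976, 0.5116),
    (0.5365, 0.5477, 0.5420),
    (0.5353, 0.5704, 0.5523),
]

recomputed = [f1_score(p, r) for p, r, _ in rows]
for (p, r, reported), value in zip(rows, recomputed):
    print(f"P={p} R={r} reported={reported} recomputed={value:.4f}")
```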

### Framework versions

- Transformers 4.25.1

- Pytorch 1.13.1+cu116

- Datasets 2.8.0

- Tokenizers 0.13.2

| 8cda5835de7932af3aaa6c3e2ea70e5b |

PlanTL-GOB-ES/roberta-base-bne-capitel-ner-plus | PlanTL-GOB-ES | roberta | 9 | 239 | transformers | 4 | token-classification | true | false | false | apache-2.0 | ['es'] | ['bne', 'capitel'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['national library of spain', 'spanish', 'bne', 'capitel', 'ner'] | true | true | true | 7,545 | false |

# Spanish RoBERTa-base trained on BNE and fine-tuned on the CAPITEL Named Entity Recognition (NER) dataset

## Table of contents

<details>

<summary>Click to expand</summary>

- [Model description](#model-description)

- [Intended uses and limitations](#intended-uses-and-limitations)

- [How to use](#how-to-use)

- [Limitations and bias](#limitations-and-bias)

- [Training](#training)

- [Training data](#training-data)

- [Training procedure](#training-procedure)

- [Evaluation](#evaluation)

- [Variable and metrics](#variable-and-metrics)

- [Evaluation results](#evaluation-results)

- [Additional information](#additional-information)

- [Author](#author)

- [Contact information](#contact-information)

- [Copyright](#copyright)

- [Licensing information](#licensing-information)

- [Funding](#funding)

- [Citing information](#citing-information)

- [Disclaimer](#disclaimer)

</details>

## Model description

**roberta-base-bne-capitel-ner-plus** is a Named Entity Recognition (NER) model for the Spanish language fine-tuned from the [roberta-base-bne](https://huggingface.co/PlanTL-GOB-ES/roberta-base-bne) model, a [RoBERTa](https://arxiv.org/abs/1907.11692) base model pre-trained using the largest Spanish corpus known to date, with a total of 570GB of clean and deduplicated text, processed for this work, compiled from the web crawlings performed by the [National Library of Spain (Biblioteca Nacional de España)](http://www.bne.es/en/Inicio/index.html) from 2009 to 2019. This model is a more robust version of the [roberta-base-bne-capitel-ner](https://huggingface.co/PlanTL-GOB-ES/roberta-base-bne-capitel-ner) model that is better at recognizing lowercased Named Entities (NE).

## Intended uses and limitations

The **roberta-base-bne-capitel-ner-plus** model can be used to recognize Named Entities (NE). The model is limited by its training dataset and may not generalize well for all use cases.

## How to use

```python

from transformers import pipeline

from pprint import pprint

nlp = pipeline("ner", model="PlanTL-GOB-ES/roberta-base-bne-capitel-ner-plus")

example = "Me llamo francisco javier y vivo en madrid."

ner_results = nlp(example)

pprint(ner_results)

```

## Limitations and bias

At the time of submission, no measures have been taken to estimate the bias embedded in the model. However, we are well aware that our models may be biased since the corpora have been collected using crawling techniques on multiple web sources. We intend to conduct research in these areas in the future, and if completed, this model card will be updated.

## Training

The dataset used for training and evaluation is the one from the [CAPITEL competition at IberLEF 2020](https://sites.google.com/view/capitel2020) (sub-task 1). We created lowercased and uppercased copies of the dataset and added the resulting sentences to the training set.
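
The case-based augmentation can be pictured with the minimal sketch below. It is an illustration under stated assumptions, not the authors' preprocessing script: the function name `augment_with_case_variants`, the `(tokens, tags)` sentence layout, and the tag labels are all hypothetical.

```python
def augment_with_case_variants(sentences):
    """Return the original sentences plus lowercased and uppercased copies.

    Each sentence is a (tokens, tags) pair; the tags are copied unchanged
    because changing the casing does not move entity boundaries.
    """
    augmented = list(sentences)
    for tokens, tags in sentences:
        augmented.append(([t.lower() for t in tokens], list(tags)))
        augmented.append(([t.upper() for t in tokens], list(tags)))
    return augmented


# Hypothetical training example; the tag scheme is illustrative only.
train = [
    (
        ["Me", "llamo", "Francisco", "Javier", "y", "vivo", "en", "Madrid", "."],
        ["O", "O", "B-PER", "I-PER", "O", "O", "O", "B-LOC", "O"],
    )
]

augmented = augment_with_case_variants(train)
for tokens, tags in augmented:
    print(" ".join(tokens))
```

Each original sentence yields two extra training sentences, so the model sees the same entities with and without capitalization cues.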

### Training procedure

The model was trained with a batch size of 16 and a learning rate of 5e-5 for 5 epochs. We then selected the best checkpoint using the downstream task metric in the corresponding development set and then evaluated it on the test set.

## Evaluation

### Variable and metrics

This model was fine-tuned to maximize the F1 score.

### Evaluation results

We evaluated the **roberta-base-bne-capitel-ner-plus** on the CAPITEL-NERC test set against standard multilingual and monolingual baselines:

| Model | CAPITEL-NERC (F1) |

| ------------|:----|

| roberta-large-bne-capitel-ner | **90.51** |

| roberta-base-bne-capitel-ner | 89.60|

| roberta-base-bne-capitel-ner-plus | 89.60|

| BETO | 87.72 |

| mBERT | 88.10 |

| BERTIN | 88.56 |

| ELECTRA | 80.35 |

For more details, check the fine-tuning and evaluation scripts in the official [GitHub repository](https://github.com/PlanTL-GOB-ES/lm-spanish).

## Additional information

### Author

Text Mining Unit (TeMU) at the Barcelona Supercomputing Center (bsc-temu@bsc.es)

### Contact information

For further information, send an email to <plantl-gob-es@bsc.es>

### Copyright

Copyright by the Spanish State Secretariat for Digitalization and Artificial Intelligence (SEDIA) (2022)

### Licensing information

[Apache License, Version 2.0](https://www.apache.org/licenses/LICENSE-2.0)

### Funding

This work was funded by the Spanish State Secretariat for Digitalization and Artificial Intelligence (SEDIA) within the framework of the Plan-TL.

### Citing information

If you use this model, please cite our [paper](http://journal.sepln.org/sepln/ojs/ojs/index.php/pln/article/view/6405):

```

@article{,

abstract = {We want to thank the National Library of Spain for such a large effort on the data gathering and the Future of Computing Center, a

Barcelona Supercomputing Center and IBM initiative (2020). This work was funded by the Spanish State Secretariat for Digitalization and Artificial

Intelligence (SEDIA) within the framework of the Plan-TL.},

author = {Asier Gutiérrez Fandiño and Jordi Armengol Estapé and Marc Pàmies and Joan Llop Palao and Joaquin Silveira Ocampo and Casimiro Pio Carrino and Carme Armentano Oller and Carlos Rodriguez Penagos and Aitor Gonzalez Agirre and Marta Villegas},

doi = {10.26342/2022-68-3},

issn = {1135-5948},

journal = {Procesamiento del Lenguaje Natural},