repo

stringclasses 147

values | number

int64 1

172k

| title

stringlengths 2

476

| body

stringlengths 0

5k

| url

stringlengths 39

70

| state

stringclasses 2

values | labels

listlengths 0

9

| created_at

timestamp[ns, tz=UTC]date 2017-01-18 18:50:08

2026-01-06 07:33:18

| updated_at

timestamp[ns, tz=UTC]date 2017-01-18 19:20:07

2026-01-06 08:03:39

| comments

int64 0

58

⌀ | user

stringlengths 2

28

|

|---|---|---|---|---|---|---|---|---|---|---|

pytorch/vision

| 4,104

|

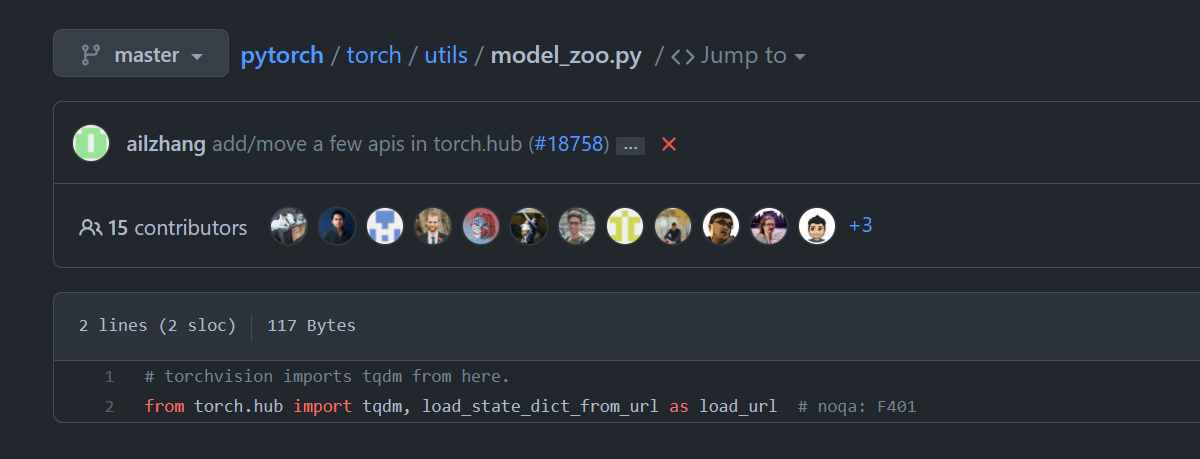

whats diff in this code ```try....except```

|

https://github.com/pytorch/vision/blob/d1ab583d0d2df73208e2fc9c4d3a84e969c69b70/torchvision/_internally_replaced_utils.py#L13

except code also use torch.hub,its same as try code!!!

why do like this?

|

https://github.com/pytorch/vision/issues/4104

|

closed

|

[

"question"

] | 2021-06-24T06:37:49Z

| 2021-06-24T11:46:28Z

| null |

jaffe-fly

|

pytorch/pytorch

| 60,625

|

How to checkout 1.8.1 release

|

I am trying to compile pytorch 1.8.1 release from source but not sure which branch to checkout, as there is no 1.8.1 and the 1.8.0 branches seem to be rc1 or rc2.

so for example

```

git checkout -b remotes/origin/lts/release/1.8

git describe --tags

```

returns

v1.8.0-rc1-4570-g80f40b172f

So how to get 1.8.1? I know the release tarballs don't work

|

https://github.com/pytorch/pytorch/issues/60625

|

closed

|

[] | 2021-06-24T04:17:58Z

| 2021-06-24T19:06:58Z

| null |

beew

|

pytorch/serve

| 1,135

|

how to serve model converted by hummingbird from sklearn?

|

if i have a sklearn model, and then use hummingbird (https://github.com/microsoft/hummingbird) to transfer as pytorch tensor model

so the model structure is from hummingbird, but not by my own such as :

hummingbird.ml.containers.sklearn.pytorch_containers.PyTorchSklearnContainerClassification

so i dont have the model.py

how to use torch serve to serve this model?

i tried below:

torch-model-archiver --model-name aa --version 1.0 --handler text_classifier --serialized-file torch_hm_model_cuda.pth

torchserve --start --ncs --model-store model_store --models tt=aa.mar

it juse show :

Removing orphan pid file.

java.lang.NoSuchMethodError: java.nio.file.Files.readString(Ljava/nio/file/Path;)Ljava/lang/String;

no other messages. thanks in advance.

|

https://github.com/pytorch/serve/issues/1135

|

closed

|

[] | 2021-06-23T07:25:56Z

| 2021-06-24T10:03:57Z

| null |

aohan237

|

pytorch/pytorch

| 60,433

|

Libtorch JIT : Does enabling profiling mode increase CPU memory usage ? How to disable profiling mode properly ?

|

Hi, I am trying to deploying an Attention-based Encoder Decoder (AED) model with libtorch C++ frontend, when model's decoder loops at output sequence ( the decoder jit module 's forward method is repeatedly called at each label time step ), the CPU memory usage is very high (~ 20 GB), and I think it's far too high compared to it should be ( at each decoder step, the internal state tensors should occupy about < 400 MB in total, and state tensors at previous steps is released correctly with management of smart pointers).

I call torch::jit::getProfilingMode() at begining of inference, and it's true; I try to set it false, but the memory usage is still high.

I would like to know :

1) whether the high CPU memory usage is related to torch JIT 's profiling mode ?

2) is there any other way to profile CPU memory usage ?

The libtorch version used is 1.9.0

Thanks a lot.

- [Discussion Forum](https://discuss.pytorch.org/)

cc @gmagogsfm

|

https://github.com/pytorch/pytorch/issues/60433

|

closed

|

[

"oncall: jit"

] | 2021-06-22T04:13:05Z

| 2021-06-28T03:36:08Z

| null |

w1d2s

|

pytorch/vision

| 4,091

|

Unnecessary call .clone() in box_convert function

|

https://github.com/pytorch/vision/blob/d391a0e992a35d7fb01e11110e2ccf8e445ad8a0/torchvision/ops/boxes.py#L183-L184

We can just return boxes without .clone().

What's the purpose?

|

https://github.com/pytorch/vision/issues/4091

|

closed

|

[

"question"

] | 2021-06-22T02:03:37Z

| 2021-06-22T14:14:56Z

| null |

developer0hye

|

pytorch/android-demo-app

| 156

|

How to add Model Inference Time to yolov5 demo when using live function? Like the iOS demo?

|

Dear developer, I watched this repository (for Android) yolov5 application test video and I compared another repository (for iOS) yolov5 application test video.

I found that the Android application is missing the provision of " Model Inference Time" for real time detection, could you please add it? If not, could you please tell me how to add it? Thank you.

|

https://github.com/pytorch/android-demo-app/issues/156

|

closed

|

[] | 2021-06-20T18:43:18Z

| 2022-05-08T15:41:52Z

| null |

zxsitu

|

pytorch/android-demo-app

| 154

|

where is yolov5 model

|

Does anyone know how to download yolov5s.torchscript.ptl, I don't have this file

|

https://github.com/pytorch/android-demo-app/issues/154

|

open

|

[] | 2021-06-19T14:38:56Z

| 2021-06-19T15:18:46Z

| null |

GuoQuanhao

|

pytorch/pytorch

| 60,266

|

UserWarning: The epoch parameter in `scheduler.step()` was not necessary and is being deprecated where possible. Please use `scheduler.step()` to step the scheduler.

|

## 🐛 Bug

<!-- A clear and concise description of what the bug is. -->

## To Reproduce

Steps to reproduce the behavior:

1.

1.

1.

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

## Environment

Please copy and paste the output from our

[environment collection script](https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py)

(or fill out the checklist below manually).

You can get the script and run it with:

```

wget https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

# For security purposes, please check the contents of collect_env.py before running it.

python collect_env.py

```

- PyTorch Version (e.g., 1.0):

- OS (e.g., Linux):

- How you installed PyTorch (`conda`, `pip`, source):

- Build command you used (if compiling from source):

- Python version:

- CUDA/cuDNN version:

- GPU models and configuration:

- Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

|

https://github.com/pytorch/pytorch/issues/60266

|

closed

|

[] | 2021-06-18T13:09:33Z

| 2021-06-18T15:53:56Z

| null |

wanyne-yyds

|

pytorch/pytorch

| 60,253

|

How to export SPP-NET to onnx ?

|

Here's my code:

----------------------------------------------------------start----------------------------------------------------------------

import torch

import torch.nn as nn

import math

import torch.nn.functional as F

class Spp(nn.Module):

def __init__(self, level, pooling_type="max_pool"):

super().__init__()

self.num = level

self.pooling_type = pooling_type

def forward(self, x):

h, w = x.shape[2:]

kernel_size = (math.ceil(h / self.num), math.ceil(w / self.num))

stride = kernel_size

pooling = (math.ceil((kernel_size[0] * self.num - h) / 2), math.ceil((kernel_size[1] * self.num - w) / 2))

if self.pooling_type == 'max_pool' or self.pooling_type == "max":

tensor = F.max_pool2d(x, kernel_size=kernel_size, stride=stride, padding=pooling)

else:

tensor = F.avg_pool2d(x, kernel_size=kernel_size, stride=stride, padding=pooling)

return tensor

class SppNet(nn.Module):

def __init__(self, pooling_type="max_pool", level=1):

super(SppNet, self).__init__()

self.spps = []

for i in range(level):

self.spps.append(Spp(pooling_type=pooling_type, level=i+1))

pass

def forward(self, x):

n, c = input.shape[0:2]

out = []

for spp in self.spps:

y = spp(x).reshape(n, c, -1)

out.append(y)

out = torch.cat(out, dim=2)

return out

if __name__ == '__main__':

input = torch.randn(3, 45, 100, 120)

sppNet = SppNet(level=7)

y0 = sppNet(input)

print(y0.shape)

sppNet.eval()

torch.onnx.export(sppNet, # model being run

input, # model input (or a tuple for multiple inputs)

'spp-net.onnx',

# where to save the model (can be a file or file-like object)

export_params=True, # store the trained parameter weights inside the model file

opset_version=11, # the ONNX version to export the model to

do_constant_folding=True, # whether to execute constant folding for optimization

input_names=["input"], # the model's input names

output_names=["output"], # the model's output names

dynamic_axes={

"input": {0: "batch_size", 1: "channel", 2:"height", 3:"width"},

"output": {0: "batch_size", 1: "channel", 2:"length"}

},

enable_onnx_checker=True)

------------------------------------------------------end----------------------------------------------------------------

Exported model “kernel_ size、pooling ” parameter is fixed.

But I need to set it to a fixed parameter.

That is to say, the kernel is calculated automatically according to the input size and other parameters, so how to do?

Ask for advice,Thank you!

cc @garymm @BowenBao @neginraoof

|

https://github.com/pytorch/pytorch/issues/60253

|

closed

|

[

"module: onnx",

"triaged",

"onnx-triaged"

] | 2021-06-18T06:56:59Z

| 2022-11-01T22:16:36Z

| null |

yongxin3344520

|

pytorch/TensorRT

| 502

|

❓ [Question] failed to build docker image

|

## ❓ Question

failed to build docker image

## What you have already tried

`docker build -t trtorch -f notebooks/Dockerfile.notebook .`

## Additional context

```

Step 13/21 : WORKDIR /workspace/TRTorch

---> Running in 6043f6a80286

Removing intermediate container 6043f6a80286

---> 18eaa4134512

Step 14/21 : RUN bazel build //:libtrtorch --compilation_mode opt

---> Running in e5ae54ec3c1e

Extracting Bazel installation...

Starting local Bazel server and connecting to it...

Loading:

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Loading: 0 packages loaded

Analyzing: target //:libtrtorch (1 packages loaded, 0 targets configured)

Analyzing: target //:libtrtorch (39 packages loaded, 155 targets configured)

INFO: Analyzed target //:libtrtorch (42 packages loaded, 2697 targets configured).

INFO: Found 1 target...

[0 / 112] [Prepa] BazelWorkspaceStatusAction stable-status.txt ... (6 actions, 0 running)

ERROR: /workspace/TRTorch/cpp/api/BUILD:3:11: C++ compilation of rule '//cpp/api:trtorch' failed (Exit 1): gcc failed: error executing command /usr/bin/gcc -U_FORTIFY_SOURCE -fstack-protector -Wall -Wunused-but-set-parameter -Wno-free-nonheap-object -fno-omit-frame-pointer -g0 -O2 '-D_FORTIFY_SOURCE=1' -DNDEBUG -ffunction-sections ... (remaining 61 argument(s) skipped)

Use --sandbox_debug to see verbose messages from the sandbox gcc failed: error executing command /usr/bin/gcc -U_FORTIFY_SOURCE -fstack-protector -Wall -Wunused-but-set-parameter -Wno-free-nonheap-object -fno-omit-frame-pointer -g0 -O2 '-D_FORTIFY_SOURCE=1' -DNDEBUG -ffunction-sections ... (remaining 61 argument(s) skipped)

Use --sandbox_debug to see verbose messages from the sandbox

In file included from cpp/api/src/compile_spec.cpp:6:0:

bazel-out/k8-opt/bin/cpp/api/_virtual_includes/trtorch/trtorch/trtorch.h:26:25: error: different underlying type in enum 'enum class c10::DeviceType'

enum class DeviceType : int8_t;

^~~~~~

In file included from bazel-out/k8-opt/bin/external/libtorch/_virtual_includes/c10_cuda/c10/core/Device.h:3:0,

from bazel-out/k8-opt/bin/external/libtorch/_virtual_includes/ATen/ATen/core/TensorBody.h:3,

from bazel-out/k8-opt/bin/external/libtorch/_virtual_includes/ATen/ATen/Tensor.h:3,

from external/libtorch/include/torch/csrc/autograd/function_hook.h:5,

from external/libtorch/include/torch/csrc/autograd/variable.h:7,

from external/libtorch/include/torch/csrc/jit/api/module.h:3,

from cpp/api/src/compile_spec.cpp:1:

bazel-out/k8-opt/bin/external/libtorch/_virtual_includes/c10_cuda/c10/core/DeviceType.h:15:12: note: previous definition here

enum class DeviceType : int16_t {

^~~~~~~~~~

Target //:libtrtorch failed to build

Use --verbose_failures to see the command lines of failed build steps.

INFO: Elapsed time: 490.394s, Critical Path: 28.98s

INFO: 1030 processes: 1024 internal, 6 processwrapper-sandbox.

FAILED: Build did NOT complete successfully

FAILED: Build did NOT complete successfully

```

|

https://github.com/pytorch/TensorRT/issues/502

|

closed

|

[

"question",

"No Activity"

] | 2021-06-17T10:22:25Z

| 2021-09-27T00:01:13Z

| null |

chrjxj

|

pytorch/pytorch

| 60,122

|

what version of python is suggested with pytorch 1.9

|

I know pytorch support a variety of python version, but I wonder what version is suggested? python 3.6.6? 3.7.7? etc?

Thanks

|

https://github.com/pytorch/pytorch/issues/60122

|

closed

|

[] | 2021-06-16T19:05:52Z

| 2021-06-16T20:54:15Z

| null |

seyeeet

|

pytorch/pytorch

| 60,115

|

How to install torchaudio on Mac M1 ARM?

|

`torchaudio` doesn't seem to be available for Mac M1.

If I run `conda install pytorch torchvision torchaudio -c pytorch` (as described on pytorch's main page) I get this error message:

```

PackagesNotFoundError: The following packages are not available from current channels:

- torchaudio

```

If I run the command without `torchaudio` everything installs fine.

How can I fix this and install torchaudio too?

If it isn't available (yet) – do you have any plans to release it too?

Thanks in advance for your help! And I apologize in advance if I don't see the forest for the trees and overlooked sth. obvious.

|

https://github.com/pytorch/pytorch/issues/60115

|

closed

|

[] | 2021-06-16T18:24:04Z

| 2021-06-16T20:42:03Z

| null |

suissemaxx

|

pytorch/pytorch

| 59,933

|

If I only have the model of PyTorch and don't know the dimension of the input, how to convert it to onnx?

|

## ❓ If I only have the model of PyTorch and don't know the dimension of the input, how to convert it to onnx?

### Question

I have a series of PyTorch trained models, such as "model.pth", but I don't know the input dimensions of the model.

For instance, in the following function: torch.onnx.export(model, args, f, export_params=True, verbose=False, training=False, input_names=None, output_names=None).

I don't know the "args" of the function. How do I define it by just having the model file such as "model.pth"?

|

https://github.com/pytorch/pytorch/issues/59933

|

closed

|

[] | 2021-06-14T08:38:29Z

| 2021-06-14T15:31:26Z

| null |

Wendy-liu17

|

pytorch/pytorch

| 59,870

|

How to export a model with nn.Module in for loop to onnx?

|

Bellow is a demo code:

```

class Demo(nn.Module):

def __init__(self, hidden_size, max_span_len):

super().__init__()

self.max_span_len = max_span_len

self.fc = nn.Linear(hidden_size * 2, hidden_size)

def forward(self, seq_hiddens):

'''

seq_hiddens: (batch_size, seq_len, hidden_size)

'''

seq_len = seq_hiddens.size()[1]

hiddens_list = []

for ind in range(seq_len):

hidden_each_step = seq_hiddens[:, ind, :]

a = seq_hiddens[:, ind:ind + self.max_span_len, :]

b = hidden_each_step[:, None, :].repeat(1, a.shape[1], 1)

tmp = torch.cat([a, b], dim=-1)

tmp = torch.tanh(self.fc(tmp))

hiddens_list.append(tmp)

output = torch.cat(hiddens_list, dim = 1)

return output

```

How to expot it to onnx? I need the fc Layer in for loop. Script function seems not work.

Thanks!!!

cc @garymm @BowenBao @neginraoof

|

https://github.com/pytorch/pytorch/issues/59870

|

closed

|

[

"module: onnx"

] | 2021-06-11T12:25:15Z

| 2021-06-15T18:34:50Z

| null |

JaheimLee

|

huggingface/transformers

| 12,105

|

What is the correct way to pass labels to DetrForSegmentation?

|

The [current documentation](https://huggingface.co/transformers/master/model_doc/detr.html#transformers.DetrForSegmentation.forward) for `DetrModelForSegmentation.forward` says the following about `labels` kwarg:

> The class labels themselves should be a torch.LongTensor of len (number of bounding boxes in the image,), the boxes a torch.FloatTensor of shape (number of bounding boxes in the image, 4) and the **masks a torch.FloatTensor of shape (number of bounding boxes in the image, 4).**

But when I looked at the tests, it seems the shape of `masks` is `torch.rand(self.n_targets, self.min_size, self.max_size)` .

https://github.com/huggingface/transformers/blob/d2753dcbec7123500c1a84a7c2143a79e74df48f/tests/test_modeling_detr.py#L87-L103

---

I'm guessing this is a documentation mixup!

Anyways, it would be super helpful to include a snippet in the DETR docs that shows how to correctly pass masks/other labels + get the loss/loss dict. 😄

CC: @NielsRogge

|

https://github.com/huggingface/transformers/issues/12105

|

closed

|

[] | 2021-06-10T22:15:23Z

| 2021-06-17T14:37:54Z

| null |

nateraw

|

pytorch/vision

| 4,001

|

Unable to build torchvision on Windows (installed torch from source and it is running)

|

## ❓ Questions and Help

I have installed torch successfully in my PC via source, but I am facing this issue while installing the torchvison. I don't think I can install torchvision via pip as it is re-downloading the torch.

Please help me to install it

TIA

i used `python setup.py install`

```

Building wheel torchvision-0.9.0a0+01dfa8e

PNG found: True

Running build on conda-build: False

Running build on conda: True

JPEG found: True

Building torchvision with JPEG image support

FFmpeg found: True

Traceback (most recent call last):

File "C:\Users\dhawals\repos\build_binaries\vision\setup.py", line 472, in <module>

ext_modules=get_extensions(),

File "C:\Users\dhawals\repos\build_binaries\vision\setup.py", line 352, in get_extensions

platform_tag = subprocess.run(

File "C:\Users\dhawals\miniconda3\lib\subprocess.py", line 501, in run

with Popen(*popenargs, **kwargs) as process:

File "C:\Users\dhawals\miniconda3\lib\subprocess.py", line 947, in __init__

self._execute_child(args, executable, preexec_fn, close_fds,

File "C:\Users\dhawals\miniconda3\lib\subprocess.py", line 1356, in _execute_child

args = list2cmdline(args)

File "C:\Users\dhawals\miniconda3\lib\subprocess.py", line 561, in list2cmdline

for arg in map(os.fsdecode, seq):

File "C:\Users\dhawals\miniconda3\lib\os.py", line 822, in fsdecode

filename = fspath(filename) # Does type-checking of `filename`.

TypeError: expected str, bytes or os.PathLike object, not NoneType

```

|

https://github.com/pytorch/vision/issues/4001

|

closed

|

[

"question"

] | 2021-06-08T09:48:25Z

| 2021-06-14T11:01:21Z

| null |

dhawals1939

|

pytorch/pytorch

| 59,607

|

Where is libtorch archive???

|

Where is libtorch archive???

I can't find libtorch 1.6.0..

|

https://github.com/pytorch/pytorch/issues/59607

|

closed

|

[] | 2021-06-08T01:03:23Z

| 2023-04-07T13:29:34Z

| null |

hi-one-gg

|

pytorch/xla

| 2,981

|

Where is torch_xla/csrc/XLANativeFunctions.h?

|

## 🐛 Bug

Trying to compile master found that there is no https://github.com/pytorch/xla/blob/master/torch_xla/csrc/XLANativeFunctions.h after updating to latest master.

How this file is generated? (aka which step Im missing?)

```

$ time pip install -e . --verbose

...............

[23/101] clang++-8 -MMD -MF /home/tyoc213/Documents/github/pytorch/xla/build/temp.linux-x86_64-3.8/torch_xla/csrc/init_python_bindings.o.d -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/tyoc213/Documents/github/pytorch/xla -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-bin -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/protobuf_archive/src -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_protobuf/src -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/eigen_archive -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_absl -I/home/tyoc213/Documents/github/pytorch -I/home/tyoc213/Documents/github/pytorch/torch/csrc -I/home/tyoc213/Documents/github/pytorch/torch/lib/tmp_install/include -I/home/tyoc213/Documents/github/pytorch/torch/include -I/home/tyoc213/Documents/github/pytorch/torch/include/torch/csrc/api/include -I/home/tyoc213/Documents/github/pytorch/torch/include/TH -I/home/tyoc213/Documents/github/pytorch/torch/include/THC -I/home/tyoc213/miniconda3/envs/xla/include/python3.8 -c -c /home/tyoc213/Documents/github/pytorch/xla/torch_xla/csrc/init_python_bindings.cpp -o /home/tyoc213/Documents/github/pytorch/xla/build/temp.linux-x86_64-3.8/torch_xla/csrc/init_python_bindings.o -std=c++14 -Wno-sign-compare -Wno-deprecated-declarations -Wno-return-type -Wno-macro-redefined -Wno-return-std-move -DNDEBUG -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_clang"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1002"' -DTORCH_EXTENSION_NAME=_XLAC -D_GLIBCXX_USE_CXX11_ABI=1

FAILED: /home/tyoc213/Documents/github/pytorch/xla/build/temp.linux-x86_64-3.8/torch_xla/csrc/init_python_bindings.o

clang++-8 -MMD -MF /home/tyoc213/Documents/github/pytorch/xla/build/temp.linux-x86_64-3.8/torch_xla/csrc/init_python_bindings.o.d -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/tyoc213/Documents/github/pytorch/xla -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-bin -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/protobuf_archive/src -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_protobuf/src -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/eigen_archive -I/home/tyoc213/Documents/github/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_absl -I/home/tyoc213/Documents/github/pytorch -I/home/tyoc213/Documents/github/pytorch/torch/csrc -I/home/tyoc213/Documents/github/pytorch/torch/lib/tmp_install/include -I/home/tyoc213/Documents/github/pytorch/torch/include -I/home/tyoc213/Documents/github/pytorch/torch/include/torch/csrc/api/include -I/home/tyoc213/Documents/github/pytorch/torch/include/TH -I/home/tyoc213/Documents/github/pytorch/torch/include/THC -I/home/tyoc213/miniconda3/envs/xla/include/python3.8 -c -c /home/tyoc213/Documents/github/pytorch/xla/torch_xla/csrc/init_python_bindings.cpp -o /home/tyoc213/Documents/github/pytorch/xla/build/temp.linux-x86_64-3.8/torch_xla/csrc/init_python_bindings.o -std=c++14 -Wno-sign-compare -Wno-deprecated-declarations -Wno-return-type -Wno-macro-redefined -Wno-return-std-move -DNDEBUG -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_clang"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1002"' -DTORCH_EXTENSION_NAME=_XLAC -D_GLIBCXX_USE_CXX11_ABI=1

/home/tyoc213/Documents/github/pytorch/xla/torch_xla/csrc/init_python_bindings.cpp:36:10: fatal error: 'torch_xla/csrc/XLANativeFunctions.h' file not found

#include "torch_xla/csrc/XLANativeFunctions.h"

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

1 error generated.

```

## Environment

- Installing from source on Linux/CUDA:

- torch_xla version: master

|

https://github.com/pytorch/xla/issues/2981

|

closed

|

[

"stale"

] | 2021-06-08T00:42:14Z

| 2021-07-21T13:22:46Z

| null |

tyoc213

|

pytorch/TensorRT

| 495

|

❓ [Question] How does the compiler uses the optimal input shape ?

|

When compiling the model we have to specify an optimal input shape as well as a minimal and maximal one.

I tested various optimal sizes to evaluate the impact of this parameter but found little to no difference for the inference time.

How is this parameter used by the compiler ?

Thank you for your time and consideration,

|

https://github.com/pytorch/TensorRT/issues/495

|

closed

|

[

"question"

] | 2021-06-07T13:21:20Z

| 2021-06-09T09:52:53Z

| null |

MatthieuToulemont

|

pytorch/text

| 1,323

|

How to use pretrained embeddings (`Vectors`) in the new API?

|

From what is see in the `experimental` module is that we pass a vocab object, which transforms the token into an unique integer.

https://github.com/pytorch/text/blob/e189c260e959ab966b1eaa986177549a6445858c/torchtext/experimental/datasets/text_classification.py#L50-L55

Thus something like `['hello', 'word']` might turn into `[42, 43]`, this can then be fed into an `nn.Embedding` layer to get the corresponding embedding vector and so on.

What i dont't understand is how do i use

https://github.com/pytorch/text/blob/e189c260e959ab966b1eaa986177549a6445858c/torchtext/vocab.py#L475-L487

`GloVe` is a `Vectors` but it transforms `['hello', 'world']` into its corresponding `Embedding` tensor representation, this doesn't allow me to pad the sentences beforehand.

Also its weird that now i don't need a `Vocab` object, but in most of the modules i see that `Vocab` is built if its set to `None`.

https://github.com/pytorch/text/blob/e189c260e959ab966b1eaa986177549a6445858c/torchtext/experimental/datasets/text_classification.py#L85-L89

I don't really understand how am i supposed to interpret `Vocab` and `Vectors` and where should i use them? In `nn.Module` i.e. my model, or in `data.Dataset`, i.e. my dataset ? What if i want to fine tune the pretrained embeddings as well ?

Should both of them be used, or just either one ?

I couldn't even find good examples in https://github.com/pytorch/text/tree/master/examples/text_classification

I'm coming from the traditional torch vision library guy, so kudos to dumping the old legacy style torchtext, i really hated it, the new api's seem promising, but just a little confusing as of now.

|

https://github.com/pytorch/text/issues/1323

|

open

|

[] | 2021-06-05T10:58:38Z

| 2021-07-01T03:26:20Z

| null |

satyajitghana

|

huggingface/transformers

| 12,005

|

where is the code for DetrFeatureExtractor, DetrForObjectDetection

|

Hello my dear friend.

i am long for the model of https://huggingface.co/facebook/detr-resnet-50

i cannot find the code of it in transformers==4.7.0.dev0 and 4.6.1 pleae help me . appreciated.

## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version:

- Platform:

- Python version:

- PyTorch version (GPU?):

- Tensorflow version (GPU?):

- Using GPU in script?:

- Using distributed or parallel set-up in script?:

### Who can help

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @Rocketknight1

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

Model hub:

- for issues with a model report at https://discuss.huggingface.co/ and tag the model's creator.

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

## Information

Model I am using (Bert, XLNet ...):

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1.

2.

3.

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

<!-- A clear and concise description of what you would expect to happen. -->

|

https://github.com/huggingface/transformers/issues/12005

|

closed

|

[] | 2021-06-03T09:28:27Z

| 2021-06-10T07:06:59Z

| null |

zhangbo2008

|

pytorch/pytorch

| 59,368

|

How to remap RNNs hidden tensor to other device in torch.jit.load?

|

Model: CRNN (used in OCR)

1. When I trace model in cpu device, and use torch.jit.load(f, map_location="cuda:0"), I got an error as below

Input and hidden tensor are not at same device, found input tensor at cuda:0 and hidden tensor at cpu.

2. When I trace model in cuda:0 device, and use torch.jit.load(f, map_location="cuda:1"), I got an error as below

Input and hidden tensor are not at same device, found input tensor at cuda:1 and hidden tensor at cuda:0.

Is there a way to remap RNNs hidden tensor to other device in loaded module by jit?

PyTorch Version: 1.8.1

cc @gmagogsfm

|

https://github.com/pytorch/pytorch/issues/59368

|

closed

|

[

"oncall: jit"

] | 2021-06-03T09:24:23Z

| 2021-10-21T06:19:02Z

| null |

shihaoyin

|

pytorch/vision

| 3,949

|

Meaning of Assertion of infer_scale function in torchvision/ops/poolers.py

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

I want to know the meaning of the assert at infer_scale function in torchvision/ops/poolers.py.

It makes assertion error

File "/home/ubuntu/.jupyter/engine.py", line 199, in evaluate_one_image

output = model(loader)

File "/home/ubuntu/.venv/jupyter/lib/python3.6/site-packages/torch/nn/modules/module.py", line 889, in _call_impl

result = self.forward(*input, **kwargs)

File "/home/ubuntu/.venv/jupyter/lib/python3.6/site-packages/torchvision/models/detection/generalized_rcnn.py", line 98, in forward

detections, detector_losses = self.roi_heads(features, proposals, images.image_sizes, targets)

File "/home/ubuntu/.venv/jupyter/lib/python3.6/site-packages/torch/nn/modules/module.py", line 889, in _call_impl

result = self.forward(*input, **kwargs)

File "/home/ubuntu/.venv/jupyter/lib/python3.6/site-packages/torchvision/models/detection/roi_heads.py", line 752, in forward

box_features = self.box_roi_pool(features, proposals, image_shapes)

File "/home/ubuntu/.venv/jupyter/lib/python3.6/site-packages/torch/nn/modules/module.py", line 889, in _call_impl

result = self.forward(*input, **kwargs)

File "/home/ubuntu/.venv/jupyter/lib/python3.6/site-packages/torchvision/ops/poolers.py", line 221, in forward

self.setup_scales(x_filtered, image_shapes)

File "/home/ubuntu/.venv/jupyter/lib/python3.6/site-packages/torchvision/ops/poolers.py", line 182, in setup_scales

scales = [self.infer_scale(feat, original_input_shape) for feat in features]

File "/home/ubuntu/.venv/jupyter/lib/python3.6/site-packages/torchvision/ops/poolers.py", line 182, in <listcomp>

scales = [self.infer_scale(feat, original_input_shape) for feat in features]

File "/home/ubuntu/.venv/jupyter/lib/python3.6/site-packages/torchvision/ops/poolers.py", line 166, in infer_scale

assert possible_scales[0] == possible_scales[1]

AssertionError

like this, and without assertion it makes correct results.

what's the meaning of that assertion?

|

https://github.com/pytorch/vision/issues/3949

|

closed

|

[

"question",

"module: ops"

] | 2021-06-03T04:38:03Z

| 2021-06-09T11:59:52Z

| null |

teang1995

|

pytorch/pytorch

| 59,231

|

How to solve the AssertionError: Torch not compiled with CUDA enabled

|

For the usage of the repo based on PyTorch(Person_reID_baseline_pytorch), I followed the guidance on its readme.md. However, I've got an error on the training step below: (I used --gpu_ids -1 as I use CPU only option in my MacOS)

`python train.py --gpu_ids -1 --name ft_ResNet50 --train_all --batchsize 32 --data_dir /Users/455832/Person_reID_baseline_pytorch/Market-1501-v15.09.15/pytorch`

The error I got is below:

```

Downloading: "https://download.pytorch.org/models/resnet50-19c8e357.pth" to /Users/455832/.cache/torch/checkpoints/resnet50-19c8e357.pth

100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 102502400/102502400 [00:14<00:00, 7210518.23it/s]

ft_net(

(model): ResNet(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace)

)

(2): Bottleneck

.......

.......

)

Traceback (most recent call last):

File "train.py", line 386, in <module>

model = model.cuda()

File "/Users/455832/opt/anaconda3/envs/reid_conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 265, in cuda

return self._apply(lambda t: t.cuda(device))

File "/Users/455832/opt/anaconda3/envs/reid_conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 193, in _apply

module._apply(fn)

File "/Users/455832/opt/anaconda3/envs/reid_conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 193, in _apply

module._apply(fn)

File "/Users/455832/opt/anaconda3/envs/reid_conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 199, in _apply

param.data = fn(param.data)

File "/Users/455832/opt/anaconda3/envs/reid_conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 265, in <lambda>

return self._apply(lambda t: t.cuda(device))

File "/Users/455832/opt/anaconda3/envs/reid_conda/lib/python3.6/site-packages/torch/cuda/__init__.py", line 162, in _lazy_init

_check_driver()

File "/Users/455832/opt/anaconda3/envs/reid_conda/lib/python3.6/site-packages/torch/cuda/__init__.py", line 75, in _check_driver

raise AssertionError("Torch not compiled with CUDA enabled")

AssertionError: Torch not compiled with CUDA enabled

```

As suggested in its readme.md, I installed pytorch=1.1.0 and torchvision=0.3.0 and numpy=1.13.1, which are requirements, into my virtual environment using 3.6.12 python requirement over the instructions in PyTorch official website (https://pytorch.org/get-started/previous-versions/#wheel-10)

`conda install pytorch==1.1.0 torchvision==0.3.0 -c pytorch`

Can you please guide me to solve this issue?

|

https://github.com/pytorch/pytorch/issues/59231

|

closed

|

[] | 2021-05-31T20:45:33Z

| 2023-06-04T06:22:56Z

| null |

aktaseren

|

pytorch/TensorRT

| 493

|

❓ [Question] How to set three input tensor shape in input_shape?

|

## ❓ Question

<!-- How to set three input tensor shape in input_shape?-->

I have three input tensor:src_tokens, dummy_embeded_x, dummy_encoder_embedding

In this case, I don't konw how to set input_shape in compile_settings's "input_shape"

Who can help me? Thank you!

`encoder_out = model.forward_encoder([src_tokens, dummy_embeded_x, dummy_encoder_embedding])`

`...`

`script_encoder = torch.jit.script(encoder)...`

`compile_settings = {

"input_shapes": [[2, 16]],

"op_precision": torch.float32

}`

|

https://github.com/pytorch/TensorRT/issues/493

|

closed

|

[

"question"

] | 2021-05-31T02:48:53Z

| 2021-06-23T19:56:33Z

| null |

wxyhv

|

pytorch/vision

| 3,938

|

Batch size of the training recipes on multiple GPUs

|

## ❓ Questions and Help

In the README file that describes the recipes of training the classification models, under the references directory, it is stated that the models are trained with batch-size=32 on 8 GPUs.

Does it mean that:

- the whole batch-size is 32 and each GPU gets only 4 images to process at a time?

- OR each GPU gets 32 images to process at a time, meaning that the global batch-size is actually 256?

Thanks.

|

https://github.com/pytorch/vision/issues/3938

|

closed

|

[

"question"

] | 2021-05-30T13:16:07Z

| 2021-05-30T14:52:38Z

| null |

talcs

|

pytorch/pytorch

| 59,186

|

Document on how to use ATEN_CPU_CAPABILITY

|

## 🚀 Feature

<!-- A clear and concise description of the feature proposal -->

It would be great if ATEN_CPU_CAPABILITY would be documented with an example on how to use it.

## Motivation

<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too -->

I am currently trying to build PyTorch without AVX instructions, because I am deploying my docker image to a lot of different systems. While trying to understand how to remove AVX instructions I found ATEN_CPU_CAPABILITY. It is not clear on how to use it.

My unanswered questions are: Does it work on runtime? Do I have build PyTorch myself and set ATEN_CPU_CAPABILITY before building? Can I pass ATEN_CPU_CAPABILITY to setup.py? How do I know if I set it the right way? Are there any wheels without AVX instructions available?

|

https://github.com/pytorch/pytorch/issues/59186

|

closed

|

[] | 2021-05-30T11:40:07Z

| 2021-05-30T21:31:30Z

| null |

derneuere

|

pytorch/serve

| 1,103

|

Can two workflows share the same model with each other?

|

Continuing my previous post: [How i do models chain processing and batch processing for analyzing text data?](https://github.com/pytorch/serve/issues/1055)

Can I create two workflows using the same RoBERTa base model to perform two different tasks, let's say the classifier_model and summarizer_model? I would like to be able to share the base model with two workflows.

I am trying to register two workflows: wf_classifier.war and wf_summarizer.war. The first one is registered and the second one is not.

[log.log](https://github.com/pytorch/serve/files/6562664/log.log)

**wf_classifier.war**

```

models:

min-workers: 1

max-workers: 1

batch-size: 1

max-batch-delay: 1000

retry-attempts: 5

timeout-ms: 300000

roberta:

url: roberta_base.mar

classifier:

url: classifier.mar

dag:

roberta: [classifier]

```

**wf_summarizer.war**

```

models:

min-workers: 1

max-workers: 1

batch-size: 1

max-batch-delay: 1000

retry-attempts: 5

timeout-ms: 300000

roberta:

url: roberta_base.mar

summarizer:

url: summarizer.mar

dag:

roberta_base: [summarizer]

```

|

https://github.com/pytorch/serve/issues/1103

|

open

|

[

"question",

"triaged_wait",

"workflowx"

] | 2021-05-28T18:04:57Z

| 2022-09-08T12:27:30Z

| null |

yurkoff-mv

|

pytorch/TensorRT

| 490

|

❓ [Question] How could I integrate TensorRT's Group Normalization plugin into a TRTorch model ?

|

## ❓ Question

What would be the steps to be able to use TensorRT's Group Normalization plugin into a TRTorch model ?

The plugin is defined [here](https://github.com/NVIDIA/TensorRT/tree/master/plugin/groupNormalizationPlugin)

## Context

Being new to this, the Readme from core/conversion/converters didn't really clarify the steps I should follow to make the converter for a TensorRt plugin

## Environment

As an environment I use the `docker/Dockerfile.20.10 -t trtorch:pytorch1.7-cuda11.1-trt7.2.1` from the commit 6bb9fbf561c9cc3f0f1c4c7dde3d61c88e687efc

Thank you for your time and consideration

|

https://github.com/pytorch/TensorRT/issues/490

|

closed

|

[

"question"

] | 2021-05-27T12:53:09Z

| 2021-06-09T09:53:11Z

| null |

MatthieuToulemont

|

pytorch/cpuinfo

| 55

|

Compilation for freeRTOS

|

Hi all,

We are staring to look into using cpuinfo in a freeRTOS / ZedBoard setup.

Do you know if any attempts to port this code to freeRTOS before?

If not, do you have any tips / advise on how to start this porting?

Thanks,

Pablo.

|

https://github.com/pytorch/cpuinfo/issues/55

|

open

|

[

"question"

] | 2021-05-26T09:57:18Z

| 2024-01-11T00:56:44Z

| null |

pablogh-2000

|

huggingface/notebooks

| 42

|

what is the ' token classification head'?

|

https://github.com/huggingface/notebooks/issues/42

|

closed

|

[] | 2021-05-25T09:17:49Z

| 2021-05-29T11:36:11Z

| null |

zingxy

|

|

pytorch/pytorch

| 58,894

|

ease use `scheduler.step()` to step the scheduler. During the deprecation, if epoch is different from None, the closed form is used instead of the new chainable form, where available. Please open an issue if you are unable to replicate your use case: https://github.com/pytorch/pytorch/issues/new/choose. warnings.warn(EPOCH_DEPRECATION_WARNING, UserWarning)

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

We have a set of [listed resources available on the website](https://pytorch.org/resources). Our primary means of support is our discussion forum:

- [Discussion Forum](https://discuss.pytorch.org/)

|

https://github.com/pytorch/pytorch/issues/58894

|

closed

|

[] | 2021-05-25T02:41:52Z

| 2021-05-25T21:30:41Z

| null |

umie0128

|

pytorch/tutorials

| 1,539

|

(Libtorch)How to use packed_accessor64 to access tensor elements in CUDA?

|

The [tutorial ](https://pytorch.org/cppdocs/notes/tensor_basics.html#cuda-accessors) gives an example about using _packed_accessor64_ to access tensor elements efficiently as follows. However, I still do not know how to use _packed_accessor64_. Can anyone give me a more specific example? Thanks.

```

__global__ void packed_accessor_kernel(

PackedTensorAccessor64<float, 2> foo,

float* trace) {

int i=threadIdx.x

gpuAtomicAdd(trace, foo[i][i])

}

torch::Tensor foo = torch::rand({12, 12});

// assert foo is 2-dimensional and holds floats.

auto foo_a = foo.packed_accessor64<float,2>();

float trace = 0;

packed_accessor_kernel<<<1, 12>>>(foo_a, &trace);

```

cc @sekyondaMeta @svekars @carljparker @NicolasHug @kit1980 @subramen

|

https://github.com/pytorch/tutorials/issues/1539

|

open

|

[

"CUDA",

"medium",

"docathon-h2-2023"

] | 2021-05-24T15:56:26Z

| 2023-11-14T06:41:03Z

| null |

tangyipeng100

|

pytorch/text

| 1,316

|

How to load AG_NEWS data from local files

|

## How to load AG_NEWS data from local files

I can't get ag news data with `train_iter, test_iter = AG_NEWS(split=('train', 'test'))` online because of my bad connection. So I download the the `train.csv` and `test.csv` manually to my local folder `AG_NEWS` from url `'train': "https://raw.githubusercontent.com/mhjabreel/CharCnn_Keras/master/data/ag_news_csv/train.csv",

'test': "https://raw.githubusercontent.com/mhjabreel/CharCnn_Keras/master/data/ag_news_csv/test.csv"`

After that I tried to load ag news data with `train_iter, test_iter = AG_NEWS(root = './AG_NEWS', split=('train', 'test'))`, throw a exception `RuntimeError: The hash of /myfolder/AG_NEWS/train.csv does not match. Delete the file manually and retry.`

My file content is

```

myfolder

│

└───AG_NEWS

│ └─── train.csv

│ └─── test.csv

```

|

https://github.com/pytorch/text/issues/1316

|

open

|

[] | 2021-05-24T06:23:55Z

| 2021-05-24T14:54:04Z

| null |

robbenplus

|

pytorch/tutorials

| 1,534

|

Why libtorch tensor value assignment takes so much time?

|

I just assign 10000 values to a tensor:

```

clock_t start = clock();

torch::Tensor transform_tensor = torch::zeros({ 10000 });

for (size_t m = 0; m < 10000 m++)

transform_tensor[m] = int(m);

clock_t finish = clock();

```

And it takes 0.317s. If I assign 10,000 to an array or a vector, the time cost will be less.

Why tensor takes so much time? Can the time cost be decreased?

|

https://github.com/pytorch/tutorials/issues/1534

|

open

|

[

"question",

"Tensors"

] | 2021-05-24T01:59:42Z

| 2023-03-08T16:31:16Z

| null |

tangyipeng100

|

pytorch/pytorch

| 58,554

|

How to install pytorch1.8.1 with cuda 11.3?

|

How to install pytorch1.8.1 with cuda 11.3?

|

https://github.com/pytorch/pytorch/issues/58554

|

closed

|

[] | 2021-05-19T13:24:42Z

| 2021-05-20T03:42:17Z

| null |

Bonsen

|

pytorch/xla

| 2,957

|

How to compile xla_ltc_plugin

|

I was following https://github.com/pytorch/xla/tree/asuhan/xla_ltc_plugin to build ltc-based torch/xla. I compiled ltc successfully but encountered errors when compiling xla. I guess I must have missed something here. Help is greatly appreciated :) cc @asuhan

<details>

<summary>Error log</summary>

```

[1/14] clang++-8 -MMD -MF /home/ubuntu/pytorch/xla/build/temp.linux-x86_64-3.7/lazy_xla/csrc/version.o.d -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/ubuntu/pytorch/xla -I/home/ubuntu/pytorch/xla/../lazy_tensor_core -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-bin -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/protobuf_archive/src -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_protobuf/src -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/eigen_archive -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_absl -I/home/ubuntu/pytorch -I/home/ubuntu/pytorch/torch/csrc -I/home/ubuntu/pytorch/torch/lib/tmp_install/include -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/include -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/include/torch/csrc/api/include -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/include/TH -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/include/THC -I/home/ubuntu/anaconda3/envs/torch-dev/include/python3.7m -c -c /home/ubuntu/pytorch/xla/lazy_xla/csrc/version.cpp -o /home/ubuntu/pytorch/xla/build/temp.linux-x86_64-3.7/lazy_xla/csrc/version.o -std=c++14 -Wno-sign-compare -Wno-unknown-pragmas -Wno-deprecated-declarations -Wno-return-type -Wno-macro-redefined -Wno-return-std-move -DNDEBUG -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=_LAZYXLAC -D_GLIBCXX_USE_CXX11_ABI=1

[2/14] clang++-8 -MMD -MF /home/ubuntu/pytorch/xla/build/temp.linux-x86_64-3.7/lazy_xla/csrc/compiler/data_ops.o.d -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/ubuntu/pytorch/xla -I/home/ubuntu/pytorch/xla/../lazy_tensor_core -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-bin -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/protobuf_archive/src -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_protobuf/src -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/eigen_archive -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_absl -I/home/ubuntu/pytorch -I/home/ubuntu/pytorch/torch/csrc -I/home/ubuntu/pytorch/torch/lib/tmp_install/include -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/include -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/include/torch/csrc/api/include -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/include/TH -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/include/THC -I/home/ubuntu/anaconda3/envs/torch-dev/include/python3.7m -c -c /home/ubuntu/pytorch/xla/lazy_xla/csrc/compiler/data_ops.cpp -o /home/ubuntu/pytorch/xla/build/temp.linux-x86_64-3.7/lazy_xla/csrc/compiler/data_ops.o -std=c++14 -Wno-sign-compare -Wno-unknown-pragmas -Wno-deprecated-declarations -Wno-return-type -Wno-macro-redefined -Wno-return-std-move -DNDEBUG -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=_LAZYXLAC -D_GLIBCXX_USE_CXX11_ABI=1

FAILED: /home/ubuntu/pytorch/xla/build/temp.linux-x86_64-3.7/lazy_xla/csrc/compiler/data_ops.o

clang++-8 -MMD -MF /home/ubuntu/pytorch/xla/build/temp.linux-x86_64-3.7/lazy_xla/csrc/compiler/data_ops.o.d -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/ubuntu/pytorch/xla -I/home/ubuntu/pytorch/xla/../lazy_tensor_core -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-bin -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/protobuf_archive/src -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_protobuf/src -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/eigen_archive -I/home/ubuntu/pytorch/xla/third_party/tensorflow/bazel-tensorflow/external/com_google_absl -I/home/ubuntu/pytorch -I/home/ubuntu/pytorch/torch/csrc -I/home/ubuntu/pytorch/torch/lib/tmp_install/include -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/include -I/home/ubuntu/anaconda3/envs/torch-dev/lib/python3.7/site-packages/torch/incl

|

https://github.com/pytorch/xla/issues/2957

|

closed

|

[

"stale"

] | 2021-05-19T09:31:12Z

| 2021-07-08T09:11:02Z

| null |

hzfan

|

pytorch/pytorch

| 58,530

|

How to remove layer use parent name

|

Hi, I am a new user of pytorch. I try to load trained model and want to remove the last layer named 'fc'

```

model = models.alexnet()

model.fc = nn.Linear(4096, 4)

ckpt = torch.load('net_epoch_24.pth')

model.load_state_dict(ckpt)

model.classifier = nn.Sequential(nn.Linear(9216, 1024),

nn.ReLU(),

nn.Dropout(0.5),

nn.Linear(1024, 8),

nn.LogSoftmax(dim=1))

print(model)

```

print out :

```

AlexNet(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(11, 11), stride=(4, 4), padding=(2, 2))

(1): ReLU(inplace=True)

(2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)

(3): Conv2d(64, 192, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(4): ReLU(inplace=True)

(5): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)

(6): Conv2d(192, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(7): ReLU(inplace=True)

(8): Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(9): ReLU(inplace=True)

(10): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(6, 6))

(classifier): Sequential(

(0): Linear(in_features=9216, out_features=1024, bias=True)

(1): ReLU()

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=1024, out_features=8, bias=True)

(4): LogSoftmax(dim=1)

)

(fc): Linear(in_features=4096, out_features=4, bias=True)

)

```

is there any simple way to remove the last layer ('fc') ?

thanks

|

https://github.com/pytorch/pytorch/issues/58530

|

closed

|

[] | 2021-05-19T03:54:29Z

| 2021-05-20T05:29:28Z

| null |

ramdhan1989

|

pytorch/pytorch

| 58,460

|

how to convert scriptmodel to onnx?

|

how to convert scriptmodel to onnx?

D:\Python\Python37\lib\site-packages\torch\onnx\utils.py:348: UserWarning: Model has no forward function

warnings.warn("Model has no forward function")

Exception occurred when processing textline: 1

cc @houseroad @spandantiwari @lara-hdr @BowenBao @neginraoof @SplitInfinity

|

https://github.com/pytorch/pytorch/issues/58460

|

closed

|

[

"module: onnx",

"triaged"

] | 2021-05-18T03:15:29Z

| 2022-02-24T08:22:22Z

| null |

williamlzw

|

pytorch/TensorRT

| 473

|

❓ Is it possible to use TRTorch with batchedNMSPlugin for TensorRT?

|

## ❓ Question

<!-- Your question -->

## What you have already tried

Hi, I am trying to convert detectron2 traced keypoint-rcnn model that contains ops from torchvision like torchvision::nms. I get the following error:

>

> terminate called after throwing an instance of 'torch::jit::ErrorReport'

> what():

> Unknown builtin op: torchvision::nms.

> Could not find any similar ops to torchvision::nms. This op may not exist or may not be currently supported in TorchScript.

> :

> File "/usr/local/lib/python3.7/dist-packages/torchvision/ops/boxes.py", line 36

> """

> _assert_has_ops()

> return torch.ops.torchvision.nms(boxes, scores, iou_threshold)

> ~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

> Serialized File "code/__torch__/torchvision/ops/boxes.py", line 26

> _8 = __torch__.torchvision.extension._assert_has_ops

> _9 = _8()

> _10 = ops.torchvision.nms(boxes, scores, iou_threshold)

> ~~~~~~~~~~~~~~~~~~~ <--- HERE

> return _10

> 'nms' is being compiled since it was called from 'batched_nms'

> File "/usr/local/lib/python3.7/dist-packages/torchvision/ops/boxes.py", line 75

> offsets = idxs.to(boxes) * (max_coordinate + torch.tensor(1).to(boxes))

> boxes_for_nms = boxes + offsets[:, None]

> keep = nms(boxes_for_nms, scores, iou_threshold)

> ~~~ <--- HERE

> return keep

> Serialized File "code/__torch__/torchvision/ops/boxes.py", line 18

> _7 = torch.slice(offsets, 0, 0, 9223372036854775807, 1)

> boxes_for_nms = torch.add(boxes, torch.unsqueeze(_7, 1), alpha=1)

> keep = __torch__.torchvision.ops.boxes.nms(boxes_for_nms, scores, iou_threshold, )

> ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

> _0 = keep

> return _0

> 'batched_nms' is being compiled since it was called from 'RPN.forward'

> Serialized File "code/__torch__/detectron2/modeling/proposal_generator/rpn.py", line 19

> argument_9: Tensor,

> image_size: Tensor) -> Tensor:

> _0 = __torch__.torchvision.ops.boxes.batched_nms

> ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

> _1 = self.rpn_head

> _2 = (self.anchor_generator).forward(argument_1, argument_2, argument_3, argument_4, argument_5, argument_6, argument_7, argument_8, )

>

<!-- A clear and concise description of what you have already done. -->

## Environment

- PyTorch Version: 1.8.0

- CPU Architecture: arm64

- OS (e.g., Linux): Ubuntu 18.04

- How you installed PyTorch (`conda`, `pip`, `libtorch`, source): .whl file for jetson

- Build command you used (if compiling from source): `bazel build //:libtrtorch`

- Are you using local sources or building from archives: Local

- Python version: 3.7

- CUDA version: 10.2

- GPU models and configuration: Nvidia Jetson Xavier nx

- Any other relevant information: torchvision C++ API compiled locally

## Additional context

I know that there is [batchedNMSPlugin](https://www.ccoderun.ca/programming/doxygen/tensorrt/md_TensorRT_plugin_batchedNMSPlugin_README.html) for TensorRT, but I have no idea how to include it for conversion. I'd appreciate any advice.

<!-- Add any other context about the problem here. -->

|

https://github.com/pytorch/TensorRT/issues/473

|

closed

|

[

"question"

] | 2021-05-16T11:28:14Z

| 2022-08-20T07:31:37Z

| null |

VRSEN

|

pytorch/extension-cpp

| 72

|

How does the layer of C++ extensions translate to TorchScript or onnx?

|

https://github.com/pytorch/extension-cpp/issues/72

|

open

|

[] | 2021-05-14T09:50:12Z

| 2025-08-26T03:36:50Z

| null |

yanglinxiabuaaa

|

|

pytorch/vision

| 3,832

|

Error converting to onnx: forward function contains for loop

|

Hello, there is a for loop in my forward function. When I turned to onnx, the following error occurred:

`[ONNXRuntimeError] : 1 : FAIL : Non-zero status code returned while running Split node. Name:'Split_ 1277' Status Message: Cannot split using values in 'split' attribute. Axis=0 Input shape={59} NumOutputs=17 Num entries in 'split' (must equal number of outputs) was 17 Sum of sizes in 'split' (must equal size of selected axis) was 17`

Part of my forward code:

```

y, ey, x, ex = pad(boxes, w, h)

if len(boxes) > 0:

im_data = []

indx_y = torch.where(ey > y-1)[0]

for ind in indx_y:

img_k = imgs[image_inds[ind],:, (y[ind] - 1).type(torch.int64):ey[ind].type(torch.int64), (x[ind]-1).type(torch.int64):ex[ind].type(torch.int64)].unsqueeze(0)

im_data.append(imresample(img_k, (24, 24)))

im_data = torch.cat(im_data, dim=0)

return im_data

```

I found that during the first onnx conversion, the for loop was executed 17 times, but when I tested it, the for loop required 59 times, so there was an error. In the forward function, indx_y is dynamic, so the number of for loops is also dynamic. Is there any way to solve this problem?

cc @neginraoof

|

https://github.com/pytorch/vision/issues/3832

|

open

|

[

"question",

"awaiting response",

"module: onnx"

] | 2021-05-14T03:52:58Z

| 2021-05-18T09:42:32Z

| null |

wytcsuch

|

pytorch/vision

| 3,825

|

Why does RandomErasing transform aspect ratio use log scale

|

See from https://github.com/pytorch/vision/commit/06a5858b3b73d62351456886f0a9f725fddbb3fe the aspect ratio is chosen randomly from a log scale

I didn't see this in the original paper? And in the reference implementation.

https://github.com/zhunzhong07/Random-Erasing/blob/c699ae481219334755de93e9c870151f256013e4/transforms.py#L38

cc @vfdev-5

|

https://github.com/pytorch/vision/issues/3825

|

closed

|

[

"question",

"module: transforms"

] | 2021-05-13T11:43:04Z

| 2021-05-13T12:05:11Z

| null |

jxu

|

pytorch/vision

| 3,822

|

torchvision C++ compiling

|

1. quesion:

When I trying to compile torchvision from source in c++ language, the terminal thow erros:

In file included from /home/pc/anaconda3/include/python3.8/pytime.h:6:0,

from /home/pc/anaconda3/include/python3.8/Python.h:85,

from /media/pc/data/software/vision-0.9.0/torchvision/csrc/vision.cpp:4:

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:647:30: error: stray ‘\343’ in program

const std::vector<IValue>& slots() const {

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:647:30: error: stray ‘\200’ in program

const std::vector<IValue>& slots() const {

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:647:30: error: stray ‘\200’ in program

const std::vector<IValue>& slots() const {

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:647:30: error: stray ‘\343’ in program

const std::vector<IValue>& slots() const {

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:647:30: error: stray ‘\200’ in program

const std::vector<IValue>& slots() const {

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:647:30: error: stray ‘\200’ in program

const std::vector<IValue>& slots() const {

^

In file included from /media/pc/data/software/libtorch/include/c10/core/DispatchKey.h:6:0,

from /media/pc/data/software/libtorch/include/torch/library.h:61,

from /media/pc/data/software/vision-0.9.0/torchvision/csrc/vision.cpp:6:

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:835:12: error: stray ‘\343’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:835:12: error: stray ‘\200’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:835:12: error: stray ‘\200’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:835:12: error: stray ‘\343’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:835:12: error: stray ‘\200’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:835:12: error: stray ‘\200’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:853:12: error: stray ‘\343’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:853:12: error: stray ‘\200’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:853:12: error: stray ‘\200’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:853:12: error: stray ‘\343’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:853:12: error: stray ‘\200’ in program

obj->slots().size() == 1,

^

/media/pc/data/software/libtorch/include/ATen/core/ivalue_inl.h:853:12: error: stray ‘\200’ in program

obj->slots().size() == 1,

^

2. enviroment:

libtorch: 1.8.1

vision: 0.9.1

cmake: 3.19.6

gcc: 7.5.0

python: 3.8.5

system: Ubuntu 18.04

3. compile code

cmake -DCMAKE_PREFIX_PATH=/media/pc/data/software/libtorch -DCMAKE_INSTALL_PREFIX=/media/pc/data/software/torchvision/install -DCMAKE_BUILD_TYPE=Release -DWITH_CUDA=ON ..

Thanks~!

|

https://github.com/pytorch/vision/issues/3822

|

closed

|

[

"question"

] | 2021-05-13T10:29:13Z

| 2021-05-13T12:02:06Z

| null |

swordnosword

|

pytorch/text

| 1,305

|

On Vocab Factory functions behavior

|

Related discussion #1016

Related PRs #1304, #1302

---------

torchtext provides several factory functions to construct [Vocab class](https://github.com/pytorch/text/blob/f7a6fbd3a910c4066b9a748545df388ae5933a6a/torchtext/vocab.py#L19) object. The primary ways to construct vocabulary are:

1. Reading raw text from file followed by tokenization to get token entries.

2. Reading token entries directly from file

3. Through iterators that yields iterator or list of tokens

3. Through user supplied ordered dictionary that maps tokens to their corresponding occurrence frequencies

Typically a vocabulary not only serve the purpose of numericalizing supplied tokens, but they also provide index for special occasions for example when the queried token is out of vocabulary (OOV) or when we need indices for special places like padding, masking, sentence beginning and end etc.

As the NLP is fast evolving, research and applied community alike will find novel and creative ways to push the frontiers of the field. Hence as a platform provider for NLP research and application, it is best not to make assumptions on special symbols including unknown token. We shall provide the aforementioned factory functions with minimal API requirements. We would expect the user to set the special symbols and fallback index through low level APIs of Vocab class.

Below are the examples of few scenarios and use cases:

Note that querying OOV token through Vocab object without setting default index would raise RuntimeError. Hence it is necessary to explicitly set this through API unless user wants to explicitly handle the runtime error as and when it happens. In below examples we set the default index to be same as index of `<unk>` token.

Example 1: Creating Vocab through text file and explicitly handling special symbols and fallback scenario

```

from torchtext.vocab import build_vocab_from_text_file

vocab = build_vocab_from_text_file("path/to/raw_text.txt", min_freq = 1)

special_symbols = {'<unk>':0,'<pad>':1,'<s>':2,'</s>':3}

default_index = special_symbols['<unk>']

for token, index in special_symbols.items():

if token in vocab:

vocab.reassign_token(token, index)

else:

vocab.insert_token(token, index)

vocab.set_default_index(default_index)

```

Example 2: Reading vocab directly from file with all the special symbols and setting fallback index to unknown token

```

from torchtext.vocab import build_vocab_from_file

unk_token = '<unk>'

vocab = build_vocab_from_text_file("path/to/tokens.txt", min_freq = 1)

assert unk_token in vocab

vocab.set_default_index(vocab[unk_token])

```

Example 3: Building Vocab using Iterators and explicitly adding special symbols and fallback index

```

from torchtext.vocab import build_vocab_from_iterator

special_symbols = {'<unk>':0,'<pad>':1,'<s>':2,'</s>':3}

vocab = build_vocab_from_iterator(iter_obj, min_freq = 1)

for token, index in special_symbols.items():

if token in vocab:

vocab.reassign_token(token, index)

else:

vocab.insert_token(token, index)

vocab.set_default_index(vocab[unk_token])

```

Example 4: Creating vocab through user supplied ordered dictionary that also contains all the special symbols

```

from torchtext.vocab import vocab as vocab_factory

unk_token = '<unk>'

vocab = vocab_factory(ordered_dict, min_freq = 1)

assert unk_token in vocab

vocab.set_default_index(vocab[unk_token])

```

Furthermore, legacy [Vocab class constructor](https://github.com/pytorch/text/blob/f7a6fbd3a910c4066b9a748545df388ae5933a6a/torchtext/legacy/vocab.py#L28) provide additional arguments to build Vocab using [Counters](https://docs.python.org/3/library/collections.html#collections.Counter). Here it provide support to add special symbols directly through input arguments rather than calling any low-level API.

We would love to hear from our users and community if the factory functions above is a good trade-off between flexibility and abstraction or if users would like to handle special symbols and default index through API arguments instead of explicitly calling the low level APIs of Vocab class.

with @cpuhrsch

cc: @hudeven, @snisarg, @dongreenberg

|

https://github.com/pytorch/text/issues/1305

|

open

|

[

"enhancement",

"question",

"need discussions"

] | 2021-05-13T02:52:19Z

| 2021-05-13T04:07:13Z

| null |

parmeet

|

pytorch/functorch

| 23

|

Figure out how to transform over optimizers

|

One way to transform over training loops (e.g. to do model ensembling or the inner step of a MAML) is to use a function that represents the optimizer step instead of an actual PyTorch optimizer. Right now I think we have the following requirements

- There should be a function version of each optimizer (e.g. `F.sgd`)

- The function should have an option to not mutate (e.g. `F.sgd(..., inplace=False)`)

- The function should be differentiable

PyTorch already has some here (in Prototype stage): https://github.com/pytorch/pytorch/blob/master/torch/optim/_functional.py, so we should check if these fit the requirements, and, if not, decide if we should influence the design

|

https://github.com/pytorch/functorch/issues/23

|

open

|

[] | 2021-05-11T13:13:39Z

| 2021-05-11T13:13:39Z

| null |

zou3519

|

pytorch/vision

| 3,811

|

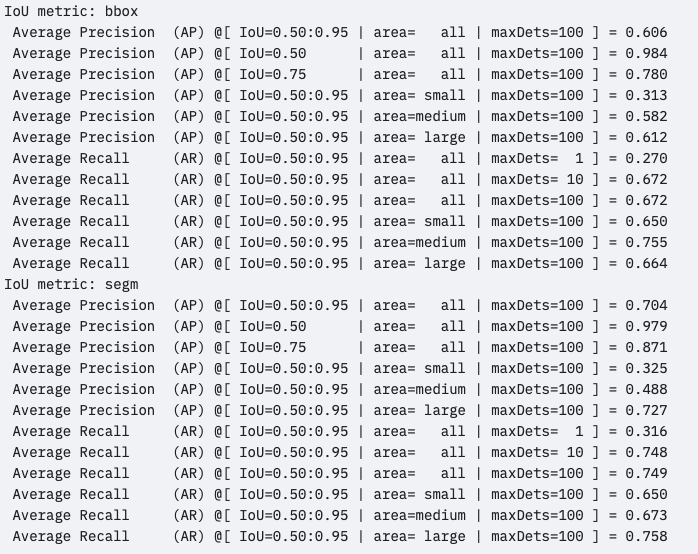

Mask-rcnn training - all AP and Recall scores in “IoU Metric: segm” remain 0

|

With torchvision’s pre-trained mask-rcnn model, trying to train on a custom dataset prepared in COCO format.

Using torch/vision/detection/engine’s `train_one_epoch` and `evaluate` methods for training and evaluation, respectively.

The loss_mask metric is reducing as can be seen here:

```

Epoch: [5] [ 0/20] eta: 0:00:54 lr: 0.005000 loss: 0.5001 (0.5001) loss_classifier: 0.2200 (0.2200) loss_box_reg: 0.2616 (0.2616) loss_mask: 0.0014 (0.0014) loss_objectness: 0.0051 (0.0051) loss_rpn_box_reg: 0.0120 (0.0120) time: 2.7308 data: 1.2866 max mem: 9887

Epoch: [5] [10/20] eta: 0:00:26 lr: 0.005000 loss: 0.4734 (0.4982) loss_classifier: 0.2055 (0.2208) loss_box_reg: 0.2515 (0.2595) loss_mask: 0.0012 (0.0013) loss_objectness: 0.0038 (0.0054) loss_rpn_box_reg: 0.0094 (0.0113) time: 2.6218 data: 1.1780 max mem: 9887

Epoch: [5] [19/20] eta: 0:00:02 lr: 0.005000 loss: 0.5162 (0.5406) loss_classifier: 0.2200 (0.2384) loss_box_reg: 0.2616 (0.2820) loss_mask: 0.0014 (0.0013) loss_objectness: 0.0051 (0.0062) loss_rpn_box_reg: 0.0120 (0.0127) time: 2.6099 data: 1.1755 max mem: 9887

```

But the `evaluate` output shows absolutely no improvement from zero for IoU segm metric:

IoU metric: bbox

```

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.653

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.843

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.723

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.788

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.325

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.701

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.738

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.739

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.832

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.456

IoU metric: segm

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.000

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000