Commit

•

42472b3

0

Parent(s):

Duplicate from Weyaxi/commit-trash-huggingface-spaces-codes

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +63 -0

- README.md +202 -0

- errors.txt +24 -0

- names.txt +0 -0

- spaces.csv +0 -0

- spaces.zip +3 -0

- spaces/0019c/NewBing/Dockerfile +34 -0

- spaces/0019c/NewBing/README.md +12 -0

- spaces/01zhangclare/bingai/Dockerfile +34 -0

- spaces/01zhangclare/bingai/README.md +12 -0

- spaces/07jeancms/minima/README.md +13 -0

- spaces/07jeancms/minima/app.py +7 -0

- spaces/0x1337/vector-inference/README.md +12 -0

- spaces/0x1337/vector-inference/app.py +5 -0

- spaces/0x7194633/mbrat-ru-sum/README.md +12 -0

- spaces/0x7194633/mbrat-ru-sum/app.py +13 -0

- spaces/0x7194633/nllb-1.3B-demo/README.md +12 -0

- spaces/0x7194633/nllb-1.3B-demo/app.py +83 -0

- spaces/0x7194633/nllb-1.3B-demo/flores200_codes.py +211 -0

- spaces/0x876/Yotta_Mix/README.md +12 -0

- spaces/0x876/Yotta_Mix/app.py +3 -0

- spaces/0x90e/ESRGAN-MANGA/ESRGAN/architecture.py +37 -0

- spaces/0x90e/ESRGAN-MANGA/ESRGAN/block.py +261 -0

- spaces/0x90e/ESRGAN-MANGA/ESRGAN_plus/architecture.py +38 -0

- spaces/0x90e/ESRGAN-MANGA/ESRGAN_plus/block.py +287 -0

- spaces/0x90e/ESRGAN-MANGA/ESRGANer.py +156 -0

- spaces/0x90e/ESRGAN-MANGA/README.md +10 -0

- spaces/0x90e/ESRGAN-MANGA/app.py +86 -0

- spaces/0x90e/ESRGAN-MANGA/inference.py +59 -0

- spaces/0x90e/ESRGAN-MANGA/inference_manga_v2.py +46 -0

- spaces/0x90e/ESRGAN-MANGA/process_image.py +31 -0

- spaces/0x90e/ESRGAN-MANGA/run_cmd.py +9 -0

- spaces/0x90e/ESRGAN-MANGA/util.py +6 -0

- spaces/0xAnders/ama-bot/README.md +13 -0

- spaces/0xAnders/ama-bot/app.py +70 -0

- spaces/0xHacked/zkProver/Dockerfile +21 -0

- spaces/0xHacked/zkProver/README.md +11 -0

- spaces/0xHacked/zkProver/app.py +77 -0

- spaces/0xJustin/0xJustin-Dungeons-and-Diffusion/README.md +13 -0

- spaces/0xJustin/0xJustin-Dungeons-and-Diffusion/app.py +3 -0

- spaces/0xSpleef/openchat-openchat_8192/README.md +12 -0

- spaces/0xSpleef/openchat-openchat_8192/app.py +3 -0

- spaces/0xSynapse/Image_captioner/README.md +13 -0

- spaces/0xSynapse/Image_captioner/app.py +62 -0

- spaces/0xSynapse/LlamaGPT/README.md +13 -0

- spaces/0xSynapse/LlamaGPT/app.py +408 -0

- spaces/0xSynapse/PixelFusion/README.md +13 -0

- spaces/0xSynapse/PixelFusion/app.py +85 -0

- spaces/0xSynapse/Segmagine/README.md +13 -0

- spaces/0xSynapse/Segmagine/app.py +97 -0

.gitattributes

ADDED

|

@@ -0,0 +1,63 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.lz4 filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 24 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 29 |

+

*.tar filter=lfs diff=lfs merge=lfs -text

|

| 30 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

# Audio files - uncompressed

|

| 38 |

+

*.pcm filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

*.sam filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

*.raw filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

# Audio files - compressed

|

| 42 |

+

*.aac filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

*.flac filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

*.mp3 filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

*.ogg filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

*.wav filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

# Image files - uncompressed

|

| 48 |

+

*.bmp filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

*.gif filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

*.png filter=lfs diff=lfs merge=lfs -text

|

| 51 |

+

*.tiff filter=lfs diff=lfs merge=lfs -text

|

| 52 |

+

# Image files - compressed

|

| 53 |

+

*.jpg filter=lfs diff=lfs merge=lfs -text

|

| 54 |

+

*.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 55 |

+

*.webp filter=lfs diff=lfs merge=lfs -text

|

| 56 |

+

spaces/Pattr/DrumClassification/lilypond-2.24.2/lib/guile/2.2/ccache/system/vm/assembler.go filter=lfs diff=lfs merge=lfs -text

|

| 57 |

+

spaces/Pattr/DrumClassification/lilypond-2.24.2/lib/guile/2.2/ccache/system/vm/dwarf.go filter=lfs diff=lfs merge=lfs -text

|

| 58 |

+

spaces/bigscience-data/bloom-tokenizer-multilinguality/index.html filter=lfs diff=lfs merge=lfs -text

|

| 59 |

+

spaces/bigscience-data/bloom-tokens/index.html filter=lfs diff=lfs merge=lfs -text

|

| 60 |

+

spaces/ghuron/artist/dataset/astro.sql filter=lfs diff=lfs merge=lfs -text

|

| 61 |

+

spaces/pdjewell/sommeli_ai/images/px.html filter=lfs diff=lfs merge=lfs -text

|

| 62 |

+

spaces/pdjewell/sommeli_ai/images/px_2d.html filter=lfs diff=lfs merge=lfs -text

|

| 63 |

+

spaces/pdjewell/sommeli_ai/images/px_3d.html filter=lfs diff=lfs merge=lfs -text

|

README.md

ADDED

|

@@ -0,0 +1,202 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

configs:

|

| 3 |

+

- config_name: default

|

| 4 |

+

data_files:

|

| 5 |

+

spaces.csv

|

| 6 |

+

|

| 7 |

+

license: other

|

| 8 |

+

language:

|

| 9 |

+

- code

|

| 10 |

+

size_categories:

|

| 11 |

+

- 100K<n<1M

|

| 12 |

+

---

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

# 📊 Dataset Description

|

| 16 |

+

|

| 17 |

+

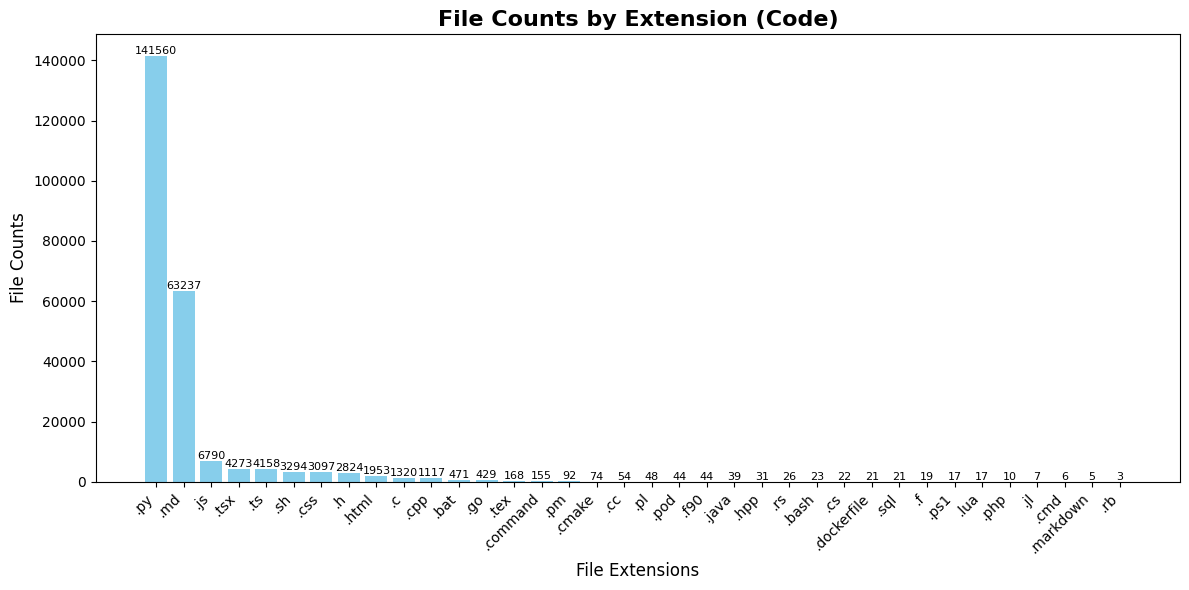

This dataset comprises code files of Huggingface Spaces that have more than 0 likes as of November 10, 2023. This dataset contains various programming languages totaling in 672 MB of compressed and 2.05 GB of uncompressed data.

|

| 18 |

+

|

| 19 |

+

# 📝 Data Fields

|

| 20 |

+

|

| 21 |

+

| Field | Type | Description |

|

| 22 |

+

|------------|--------|------------------------------------------|

|

| 23 |

+

| repository | string | Huggingface Spaces repository names. |

|

| 24 |

+

| sdk | string | Software Development Kit of the space. |

|

| 25 |

+

| license | string | License type of the space. |

|

| 26 |

+

|

| 27 |

+

## 🧩 Data Structure

|

| 28 |

+

|

| 29 |

+

Data structure of the data.

|

| 30 |

+

|

| 31 |

+

```

|

| 32 |

+

spaces/

|

| 33 |

+

├─ author1/

|

| 34 |

+

│ ├─ space1

|

| 35 |

+

│ ├─ space2

|

| 36 |

+

├─ author2/

|

| 37 |

+

│ ├─ space1

|

| 38 |

+

│ ├─ space2

|

| 39 |

+

│ ├─ space3

|

| 40 |

+

```

|

| 41 |

+

|

| 42 |

+

# 🏛️ Licenses

|

| 43 |

+

|

| 44 |

+

Huggingface Spaces contains a variety of licenses. Here is the list of the licenses that this dataset contains:

|

| 45 |

+

|

| 46 |

+

```python

|

| 47 |

+

[

|

| 48 |

+

'None',

|

| 49 |

+

'mit',

|

| 50 |

+

'apache-2.0',

|

| 51 |

+

'openrail',

|

| 52 |

+

'gpl-3.0',

|

| 53 |

+

'other',

|

| 54 |

+

'afl-3.0',

|

| 55 |

+

'unknown',

|

| 56 |

+

'creativeml-openrail-m',

|

| 57 |

+

'cc-by-nc-4.0',

|

| 58 |

+

'cc-by-4.0',

|

| 59 |

+

'cc',

|

| 60 |

+

'cc-by-nc-sa-4.0',

|

| 61 |

+

'bigscience-openrail-m',

|

| 62 |

+

'bsd-3-clause',

|

| 63 |

+

'agpl-3.0',

|

| 64 |

+

'wtfpl',

|

| 65 |

+

'gpl',

|

| 66 |

+

'artistic-2.0',

|

| 67 |

+

'lgpl-3.0',

|

| 68 |

+

'cc-by-sa-4.0',

|

| 69 |

+

'Configuration error',

|

| 70 |

+

'bsd',

|

| 71 |

+

'cc-by-nc-nd-4.0',

|

| 72 |

+

'cc0-1.0',

|

| 73 |

+

'unlicense',

|

| 74 |

+

'llama2',

|

| 75 |

+

'bigscience-bloom-rail-1.0',

|

| 76 |

+

'gpl-2.0',

|

| 77 |

+

'bsd-2-clause',

|

| 78 |

+

'osl-3.0',

|

| 79 |

+

'cc-by-2.0',

|

| 80 |

+

'cc-by-3.0',

|

| 81 |

+

'cc-by-nc-3.0',

|

| 82 |

+

'cc-by-nc-2.0',

|

| 83 |

+

'cc-by-nd-4.0',

|

| 84 |

+

'openrail++',

|

| 85 |

+

'bigcode-openrail-m',

|

| 86 |

+

'bsd-3-clause-clear',

|

| 87 |

+

'eupl-1.1',

|

| 88 |

+

'cc-by-sa-3.0',

|

| 89 |

+

'mpl-2.0',

|

| 90 |

+

'c-uda',

|

| 91 |

+

'gfdl',

|

| 92 |

+

'cc-by-nc-sa-2.0',

|

| 93 |

+

'cc-by-2.5',

|

| 94 |

+

'bsl-1.0',

|

| 95 |

+

'odc-by',

|

| 96 |

+

'deepfloyd-if-license',

|

| 97 |

+

'ms-pl',

|

| 98 |

+

'ecl-2.0',

|

| 99 |

+

'pddl',

|

| 100 |

+

'ofl-1.1',

|

| 101 |

+

'lgpl-2.1',

|

| 102 |

+

'postgresql',

|

| 103 |

+

'lppl-1.3c',

|

| 104 |

+

'ncsa',

|

| 105 |

+

'cc-by-nc-sa-3.0'

|

| 106 |

+

]

|

| 107 |

+

```

|

| 108 |

+

|

| 109 |

+

# 📊 Dataset Statistics

|

| 110 |

+

|

| 111 |

+

| Language | File Extension | File Counts | File Size (MB) | Line Counts |

|

| 112 |

+

|------------|-----------------|-------------|----------------|-------------|

|

| 113 |

+

| Python | .py | 141,560 | 1079.0 | 28,653,744 |

|

| 114 |

+

| SQL | .sql | 21 | 523.6 | 645 |

|

| 115 |

+

| JavaScript | .js | 6,790 | 369.8 | 2,137,054 |

|

| 116 |

+

| Markdown | .md | 63,237 | 273.4 | 3,110,443 |

|

| 117 |

+

| HTML | .html | 1,953 | 265.8 | 516,020 |

|

| 118 |

+

| C | .c | 1,320 | 132.2 | 3,558,826 |

|

| 119 |

+

| Go | .go | 429 | 46.3 | 6,331 |

|

| 120 |

+

| CSS | .css | 3,097 | 25.6 | 386,334 |

|

| 121 |

+

| C Header | .h | 2,824 | 20.4 | 570,948 |

|

| 122 |

+

| C++ | .cpp | 1,117 | 15.3 | 494,939 |

|

| 123 |

+

| TypeScript | .ts | 4,158 | 14.8 | 439,551 |

|

| 124 |

+

| TSX | .tsx | 4,273 | 9.4 | 306,416 |

|

| 125 |

+

| Shell | .sh | 3,294 | 5.5 | 171,943 |

|

| 126 |

+

| Perl | .pm | 92 | 4.2 | 128,594 |

|

| 127 |

+

| C# | .cs | 22 | 3.9 | 41,265 |

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

## 🖥️ Language

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

## 📁 Size

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

## 📝 Line Count

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

|

| 142 |

+

# 🤗 Huggingface Spaces Statistics

|

| 143 |

+

|

| 144 |

+

## 🛠️ Software Development Kit (SDK)

|

| 145 |

+

|

| 146 |

+

Software Development Kit pie chart.

|

| 147 |

+

|

| 148 |

+

|

| 149 |

+

|

| 150 |

+

## 🏛️ License

|

| 151 |

+

|

| 152 |

+

License chart.

|

| 153 |

+

|

| 154 |

+

|

| 155 |

+

|

| 156 |

+

# 📅 Dataset Creation

|

| 157 |

+

|

| 158 |

+

This dataset was created in these steps:

|

| 159 |

+

|

| 160 |

+

1. Scraped all spaces using the Huggingface Hub API.

|

| 161 |

+

|

| 162 |

+

```python

|

| 163 |

+

from huggingface_hub import HfApi

|

| 164 |

+

api = HfApi()

|

| 165 |

+

|

| 166 |

+

spaces = api.list_spaces(sort="likes", full=1, direction=-1)

|

| 167 |

+

```

|

| 168 |

+

|

| 169 |

+

2. Filtered spaces with more than 0 likes.

|

| 170 |

+

|

| 171 |

+

```python

|

| 172 |

+

a = {}

|

| 173 |

+

|

| 174 |

+

for i in tqdm(spaces):

|

| 175 |

+

i = i.__dict__

|

| 176 |

+

if i['likes'] > 0:

|

| 177 |

+

try:

|

| 178 |

+

try:

|

| 179 |

+

a[i['id']] = {'sdk': i['sdk'], 'license': i['cardData']['license'], 'likes': i['likes']}

|

| 180 |

+

except KeyError:

|

| 181 |

+

a[i['id']] = {'sdk': i['sdk'], 'license': None, 'likes': i['likes']}

|

| 182 |

+

except:

|

| 183 |

+

a[i['id']] = {'sdk': "Configuration error", 'license': "Configuration error", 'likes': i['likes']}

|

| 184 |

+

|

| 185 |

+

data_list = [{'repository': key, 'sdk': value['sdk'], 'license': value['license'], 'likes': value['likes']} for key, value in a.items()]

|

| 186 |

+

|

| 187 |

+

df = pd.DataFrame(data_list)

|

| 188 |

+

```

|

| 189 |

+

|

| 190 |

+

3. Cloned spaces locally.

|

| 191 |

+

|

| 192 |

+

```python

|

| 193 |

+

from huggingface_hub import snapshot_download

|

| 194 |

+

|

| 195 |

+

programming = ['.asm', '.bat', '.cmd', '.c', '.h', '.cs', '.cpp', '.hpp', '.c++', '.h++', '.cc', '.hh', '.C', '.H', '.cmake', '.css', '.dockerfile', 'Dockerfile', '.f90', '.f', '.f03', '.f08', '.f77', '.f95', '.for', '.fpp', '.go', '.hs', '.html', '.java', '.js', '.jl', '.lua', 'Makefile', '.md', '.markdown', '.php', '.php3', '.php4', '.php5', '.phps', '.phpt', '.pl', '.pm', '.pod', '.perl', '.ps1', '.psd1', '.psm1', '.py', '.rb', '.rs', '.sql', '.scala', '.sh', '.bash', '.command', '.zsh', '.ts', '.tsx', '.tex', '.vb']

|

| 196 |

+

pattern = [f"*{i}" for i in programming]

|

| 197 |

+

|

| 198 |

+

for i in repos:

|

| 199 |

+

snapshot_download(i, repo_type="space", local_dir=f"spaces/{i}", allow_patterns=pattern)

|

| 200 |

+

````

|

| 201 |

+

|

| 202 |

+

4. Processed the data to derive statistics.

|

errors.txt

ADDED

|

@@ -0,0 +1,24 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

ky2k/Toxicity_Classifier_POC

|

| 2 |

+

tialenAdioni/chat-gpt-api

|

| 3 |

+

Narsil/myspace

|

| 4 |

+

arxify/RVC-beta-v2-0618

|

| 5 |

+

WitchHuntTV/WinnieThePoohSVC_sovits4

|

| 6 |

+

yizhangliu/Grounded-Segment-Anything

|

| 7 |

+

Robert001/UniControl-Demo

|

| 8 |

+

internetsignal/audioLDM

|

| 9 |

+

inamXcontru/PoeticTTS

|

| 10 |

+

dcarpintero/nlp-summarizer-pegasus

|

| 11 |

+

SungBeom/chatwine-korean

|

| 12 |

+

x6/BingAi

|

| 13 |

+

1gistliPinn/ChatGPT4

|

| 14 |

+

colakin/video-generater

|

| 15 |

+

stomexserde/gpt4-ui

|

| 16 |

+

quidiaMuxgu/Expedit-SAM

|

| 17 |

+

NasirKhalid24/Dalle2-Diffusion-Prior

|

| 18 |

+

joaopereirajp/livvieChatBot

|

| 19 |

+

diacanFperku/AutoGPT

|

| 20 |

+

tioseFevbu/cartoon-converter

|

| 21 |

+

chuan-hd/law-assistant-chatbot

|

| 22 |

+

mshukor/UnIVAL

|

| 23 |

+

xuyingliKepler/openai_play_tts

|

| 24 |

+

TNR-5/lib111

|

names.txt

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

spaces.csv

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

spaces.zip

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:fbb4b253de8e51bfa330e5c7cf31f7841e64ef30c1718d4a05c75e21c8ccf729

|

| 3 |

+

size 671941275

|

spaces/0019c/NewBing/Dockerfile

ADDED

|

@@ -0,0 +1,34 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Build Stage

|

| 2 |

+

# 使用 golang:alpine 作为构建阶段的基础镜像

|

| 3 |

+

FROM golang:alpine AS builder

|

| 4 |

+

|

| 5 |

+

# 添加 git,以便之后能从GitHub克隆项目

|

| 6 |

+

RUN apk --no-cache add git

|

| 7 |

+

|

| 8 |

+

# 从 GitHub 克隆 go-proxy-bingai 项目到 /workspace/app 目录下

|

| 9 |

+

RUN git clone https://github.com/Harry-zklcdc/go-proxy-bingai.git /workspace/app

|

| 10 |

+

|

| 11 |

+

# 设置工作目录为之前克隆的项目目录

|

| 12 |

+

WORKDIR /workspace/app

|

| 13 |

+

|

| 14 |

+

# 编译 go 项目。-ldflags="-s -w" 是为了减少编译后的二进制大小

|

| 15 |

+

RUN go build -ldflags="-s -w" -tags netgo -trimpath -o go-proxy-bingai main.go

|

| 16 |

+

|

| 17 |

+

# Runtime Stage

|

| 18 |

+

# 使用轻量级的 alpine 镜像作为运行时的基础镜像

|

| 19 |

+

FROM alpine

|

| 20 |

+

|

| 21 |

+

# 设置工作目录

|

| 22 |

+

WORKDIR /workspace/app

|

| 23 |

+

|

| 24 |

+

# 从构建阶段复制编译后的二进制文件到运行时镜像中

|

| 25 |

+

COPY --from=builder /workspace/app/go-proxy-bingai .

|

| 26 |

+

|

| 27 |

+

# 设置环境变量,此处为随机字符

|

| 28 |

+

ENV Go_Proxy_BingAI_USER_TOKEN_1="1h_21qf8tNmRtDy5a4fZ05RFgkZeZ9akmnW9NtSo5s6aJilplld4X4Lj7BkJ3EQSNbu7tu-z_-OAHqeELJqlpF-bvOCMo5lWGjyCTcJcqIHnYiu_vlgrdDyo99wQHgsvNR5pKASGikeDgAVSN7CN6YM74n7glWgJ7hGpd33s9zcgdCea94XcsO5AmoPIoxA02O6zGkpTnIdc61W7D1WQUflqxgaSHCGWlrhw7aoPs-io"

|

| 29 |

+

|

| 30 |

+

# 暴露8080端口

|

| 31 |

+

EXPOSE 8080

|

| 32 |

+

|

| 33 |

+

# 容器启动时运行的命令

|

| 34 |

+

CMD ["/workspace/app/go-proxy-bingai"]

|

spaces/0019c/NewBing/README.md

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: NewBing

|

| 3 |

+

emoji: 🏢

|

| 4 |

+

colorFrom: green

|

| 5 |

+

colorTo: red

|

| 6 |

+

sdk: docker

|

| 7 |

+

pinned: false

|

| 8 |

+

license: mit

|

| 9 |

+

app_port: 8080

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

spaces/01zhangclare/bingai/Dockerfile

ADDED

|

@@ -0,0 +1,34 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Build Stage

|

| 2 |

+

# 使用 golang:alpine 作为构建阶段的基础镜像

|

| 3 |

+

FROM golang:alpine AS builder

|

| 4 |

+

|

| 5 |

+

# 添加 git,以便之后能从GitHub克隆项目

|

| 6 |

+

RUN apk --no-cache add git

|

| 7 |

+

|

| 8 |

+

# 从 GitHub 克隆 go-proxy-bingai 项目到 /workspace/app 目录下

|

| 9 |

+

RUN git clone https://github.com/Harry-zklcdc/go-proxy-bingai.git /workspace/app

|

| 10 |

+

|

| 11 |

+

# 设置工作目录为之前克隆的项目目录

|

| 12 |

+

WORKDIR /workspace/app

|

| 13 |

+

|

| 14 |

+

# 编译 go 项目。-ldflags="-s -w" 是为了减少编译后的二进制大小

|

| 15 |

+

RUN go build -ldflags="-s -w" -tags netgo -trimpath -o go-proxy-bingai main.go

|

| 16 |

+

|

| 17 |

+

# Runtime Stage

|

| 18 |

+

# 使用轻量级的 alpine 镜像作为运行时的基础镜像

|

| 19 |

+

FROM alpine

|

| 20 |

+

|

| 21 |

+

# 设置工作目录

|

| 22 |

+

WORKDIR /workspace/app

|

| 23 |

+

|

| 24 |

+

# 从构建阶段复制编译后的二进制文件到运行时镜像中

|

| 25 |

+

COPY --from=builder /workspace/app/go-proxy-bingai .

|

| 26 |

+

|

| 27 |

+

# 设置环境变量,此处为随机字符

|

| 28 |

+

ENV Go_Proxy_BingAI_USER_TOKEN_1="kJs8hD92ncMzLaoQWYtX5rG6bE3fZ4iO"

|

| 29 |

+

|

| 30 |

+

# 暴露8080端口

|

| 31 |

+

EXPOSE 8080

|

| 32 |

+

|

| 33 |

+

# 容器启动时运行的命令

|

| 34 |

+

CMD ["/workspace/app/go-proxy-bingai"]

|

spaces/01zhangclare/bingai/README.md

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: Bingai

|

| 3 |

+

emoji: 🏃

|

| 4 |

+

colorFrom: indigo

|

| 5 |

+

colorTo: purple

|

| 6 |

+

sdk: docker

|

| 7 |

+

pinned: false

|

| 8 |

+

license: mit

|

| 9 |

+

app_port: 8080

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

spaces/07jeancms/minima/README.md

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: Minima

|

| 3 |

+

emoji: 🔥

|

| 4 |

+

colorFrom: yellow

|

| 5 |

+

colorTo: gray

|

| 6 |

+

sdk: gradio

|

| 7 |

+

sdk_version: 3.35.2

|

| 8 |

+

app_file: app.py

|

| 9 |

+

pinned: false

|

| 10 |

+

license: apache-2.0

|

| 11 |

+

---

|

| 12 |

+

|

| 13 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

spaces/07jeancms/minima/app.py

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

|

| 3 |

+

def greet(name):

|

| 4 |

+

return "Hello " + name + "!!"

|

| 5 |

+

|

| 6 |

+

iface = gr.Interface(fn=greet, inputs="text", outputs="text")

|

| 7 |

+

iface.launch()

|

spaces/0x1337/vector-inference/README.md

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: Vector Inference

|

| 3 |

+

emoji: 🏃

|

| 4 |

+

colorFrom: pink

|

| 5 |

+

colorTo: purple

|

| 6 |

+

sdk: gradio

|

| 7 |

+

app_file: app.py

|

| 8 |

+

pinned: false

|

| 9 |

+

license: wtfpl

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

spaces/0x1337/vector-inference/app.py

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

|

| 3 |

+

gr.Interface.load("models/coder119/Vectorartz_Diffusion").launch()\

|

| 4 |

+

|

| 5 |

+

iface.launch()

|

spaces/0x7194633/mbrat-ru-sum/README.md

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: Mbrat Ru Sum

|

| 3 |

+

emoji: 🦀

|

| 4 |

+

colorFrom: purple

|

| 5 |

+

colorTo: green

|

| 6 |

+

sdk: gradio

|

| 7 |

+

sdk_version: 3.1.3

|

| 8 |

+

app_file: app.py

|

| 9 |

+

pinned: false

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

spaces/0x7194633/mbrat-ru-sum/app.py

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

from transformers import MBartTokenizer, MBartForConditionalGeneration

|

| 3 |

+

|

| 4 |

+

model_name = "IlyaGusev/mbart_ru_sum_gazeta"

|

| 5 |

+

tokenizer = MBartTokenizer.from_pretrained(model_name)

|

| 6 |

+

model = MBartForConditionalGeneration.from_pretrained(model_name)

|

| 7 |

+

|

| 8 |

+

def summarize(text):

|

| 9 |

+

input_ids = tokenizer.batch_encode_plus([text], return_tensors="pt", max_length=1024)["input_ids"].to(model.device)

|

| 10 |

+

summary_ids = model.generate(input_ids=input_ids, no_repeat_ngram_size=4)

|

| 11 |

+

return tokenizer.decode(summary_ids[0], skip_special_tokens=True)

|

| 12 |

+

|

| 13 |

+

gr.Interface(fn=summarize, inputs="text", outputs="text", description="Russian Summarizer").launch()

|

spaces/0x7194633/nllb-1.3B-demo/README.md

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: Nllb Translation Demo

|

| 3 |

+

emoji: 👀

|

| 4 |

+

colorFrom: indigo

|

| 5 |

+

colorTo: green

|

| 6 |

+

sdk: gradio

|

| 7 |

+

sdk_version: 3.0.26

|

| 8 |

+

app_file: app.py

|

| 9 |

+

pinned: false

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

spaces/0x7194633/nllb-1.3B-demo/app.py

ADDED

|

@@ -0,0 +1,83 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import torch

|

| 3 |

+

import gradio as gr

|

| 4 |

+

import time

|

| 5 |

+

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM, pipeline

|

| 6 |

+

from flores200_codes import flores_codes

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

def load_models():

|

| 10 |

+

# build model and tokenizer

|

| 11 |

+

model_name_dict = {'nllb-distilled-1.3B': 'facebook/nllb-200-distilled-1.3B'}

|

| 12 |

+

|

| 13 |

+

model_dict = {}

|

| 14 |

+

|

| 15 |

+

for call_name, real_name in model_name_dict.items():

|

| 16 |

+

print('\tLoading model: %s' % call_name)

|

| 17 |

+

model = AutoModelForSeq2SeqLM.from_pretrained(real_name)

|

| 18 |

+

tokenizer = AutoTokenizer.from_pretrained(real_name)

|

| 19 |

+

model_dict[call_name+'_model'] = model

|

| 20 |

+

model_dict[call_name+'_tokenizer'] = tokenizer

|

| 21 |

+

|

| 22 |

+

return model_dict

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

def translation(source, target, text):

|

| 26 |

+

if len(model_dict) == 2:

|

| 27 |

+

model_name = 'nllb-distilled-1.3B'

|

| 28 |

+

|

| 29 |

+

start_time = time.time()

|

| 30 |

+

source = flores_codes[source]

|

| 31 |

+

target = flores_codes[target]

|

| 32 |

+

|

| 33 |

+

model = model_dict[model_name + '_model']

|

| 34 |

+

tokenizer = model_dict[model_name + '_tokenizer']

|

| 35 |

+

|

| 36 |

+

translator = pipeline('translation', model=model, tokenizer=tokenizer, src_lang=source, tgt_lang=target)

|

| 37 |

+

output = translator(text, max_length=400)

|

| 38 |

+

|

| 39 |

+

end_time = time.time()

|

| 40 |

+

|

| 41 |

+

output = output[0]['translation_text']

|

| 42 |

+

result = {'inference_time': end_time - start_time,

|

| 43 |

+

'source': source,

|

| 44 |

+

'target': target,

|

| 45 |

+

'result': output}

|

| 46 |

+

return result

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

if __name__ == '__main__':

|

| 50 |

+

print('\tinit models')

|

| 51 |

+

|

| 52 |

+

global model_dict

|

| 53 |

+

|

| 54 |

+

model_dict = load_models()

|

| 55 |

+

|

| 56 |

+

# define gradio demo

|

| 57 |

+

lang_codes = list(flores_codes.keys())

|

| 58 |

+

#inputs = [gr.inputs.Radio(['nllb-distilled-600M', 'nllb-1.3B', 'nllb-distilled-1.3B'], label='NLLB Model'),

|

| 59 |

+

inputs = [gr.inputs.Dropdown(lang_codes, default='English', label='Source'),

|

| 60 |

+

gr.inputs.Dropdown(lang_codes, default='Korean', label='Target'),

|

| 61 |

+

gr.inputs.Textbox(lines=5, label="Input text"),

|

| 62 |

+

]

|

| 63 |

+

|

| 64 |

+

outputs = gr.outputs.JSON()

|

| 65 |

+

|

| 66 |

+

title = "NLLB distilled 1.3B demo"

|

| 67 |

+

|

| 68 |

+

demo_status = "Demo is running on CPU"

|

| 69 |

+

description = f"Details: https://github.com/facebookresearch/fairseq/tree/nllb. {demo_status}"

|

| 70 |

+

examples = [

|

| 71 |

+

['English', 'Korean', 'Hi. nice to meet you']

|

| 72 |

+

]

|

| 73 |

+

|

| 74 |

+

gr.Interface(translation,

|

| 75 |

+

inputs,

|

| 76 |

+

outputs,

|

| 77 |

+

title=title,

|

| 78 |

+

description=description,

|

| 79 |

+

examples=examples,

|

| 80 |

+

examples_per_page=50,

|

| 81 |

+

).launch()

|

| 82 |

+

|

| 83 |

+

|

spaces/0x7194633/nllb-1.3B-demo/flores200_codes.py

ADDED

|

@@ -0,0 +1,211 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

codes_as_string = '''Acehnese (Arabic script) ace_Arab

|

| 2 |

+

Acehnese (Latin script) ace_Latn

|

| 3 |

+

Mesopotamian Arabic acm_Arab

|

| 4 |

+

Ta’izzi-Adeni Arabic acq_Arab

|

| 5 |

+

Tunisian Arabic aeb_Arab

|

| 6 |

+

Afrikaans afr_Latn

|

| 7 |

+

South Levantine Arabic ajp_Arab

|

| 8 |

+

Akan aka_Latn

|

| 9 |

+

Amharic amh_Ethi

|

| 10 |

+

North Levantine Arabic apc_Arab

|

| 11 |

+

Modern Standard Arabic arb_Arab

|

| 12 |

+

Modern Standard Arabic (Romanized) arb_Latn

|

| 13 |

+

Najdi Arabic ars_Arab

|

| 14 |

+

Moroccan Arabic ary_Arab

|

| 15 |

+

Egyptian Arabic arz_Arab

|

| 16 |

+

Assamese asm_Beng

|

| 17 |

+

Asturian ast_Latn

|

| 18 |

+

Awadhi awa_Deva

|

| 19 |

+

Central Aymara ayr_Latn

|

| 20 |

+

South Azerbaijani azb_Arab

|

| 21 |

+

North Azerbaijani azj_Latn

|

| 22 |

+

Bashkir bak_Cyrl

|

| 23 |

+

Bambara bam_Latn

|

| 24 |

+

Balinese ban_Latn

|

| 25 |

+

Belarusian bel_Cyrl

|

| 26 |

+

Bemba bem_Latn

|

| 27 |

+

Bengali ben_Beng

|

| 28 |

+

Bhojpuri bho_Deva

|

| 29 |

+

Banjar (Arabic script) bjn_Arab

|

| 30 |

+

Banjar (Latin script) bjn_Latn

|

| 31 |

+

Standard Tibetan bod_Tibt

|

| 32 |

+

Bosnian bos_Latn

|

| 33 |

+

Buginese bug_Latn

|

| 34 |

+

Bulgarian bul_Cyrl

|

| 35 |

+

Catalan cat_Latn

|

| 36 |

+

Cebuano ceb_Latn

|

| 37 |

+

Czech ces_Latn

|

| 38 |

+

Chokwe cjk_Latn

|

| 39 |

+

Central Kurdish ckb_Arab

|

| 40 |

+

Crimean Tatar crh_Latn

|

| 41 |

+

Welsh cym_Latn

|

| 42 |

+

Danish dan_Latn

|

| 43 |

+

German deu_Latn

|

| 44 |

+

Southwestern Dinka dik_Latn

|

| 45 |

+

Dyula dyu_Latn

|

| 46 |

+

Dzongkha dzo_Tibt

|

| 47 |

+

Greek ell_Grek

|

| 48 |

+

English eng_Latn

|

| 49 |

+

Esperanto epo_Latn

|

| 50 |

+

Estonian est_Latn

|

| 51 |

+

Basque eus_Latn

|

| 52 |

+

Ewe ewe_Latn

|

| 53 |

+

Faroese fao_Latn

|

| 54 |

+

Fijian fij_Latn

|

| 55 |

+

Finnish fin_Latn

|

| 56 |

+

Fon fon_Latn

|

| 57 |

+

French fra_Latn

|

| 58 |

+

Friulian fur_Latn

|

| 59 |

+

Nigerian Fulfulde fuv_Latn

|

| 60 |

+

Scottish Gaelic gla_Latn

|

| 61 |

+

Irish gle_Latn

|

| 62 |

+

Galician glg_Latn

|

| 63 |

+

Guarani grn_Latn

|

| 64 |

+

Gujarati guj_Gujr

|

| 65 |

+

Haitian Creole hat_Latn

|

| 66 |

+

Hausa hau_Latn

|

| 67 |

+

Hebrew heb_Hebr

|

| 68 |

+

Hindi hin_Deva

|

| 69 |

+

Chhattisgarhi hne_Deva

|

| 70 |

+

Croatian hrv_Latn

|

| 71 |

+

Hungarian hun_Latn

|

| 72 |

+

Armenian hye_Armn

|

| 73 |

+

Igbo ibo_Latn

|

| 74 |

+

Ilocano ilo_Latn

|

| 75 |

+

Indonesian ind_Latn

|

| 76 |

+

Icelandic isl_Latn

|

| 77 |

+

Italian ita_Latn

|

| 78 |

+

Javanese jav_Latn

|

| 79 |

+

Japanese jpn_Jpan

|

| 80 |

+

Kabyle kab_Latn

|

| 81 |

+

Jingpho kac_Latn

|

| 82 |

+

Kamba kam_Latn

|

| 83 |

+

Kannada kan_Knda

|

| 84 |

+

Kashmiri (Arabic script) kas_Arab

|

| 85 |

+

Kashmiri (Devanagari script) kas_Deva

|

| 86 |

+

Georgian kat_Geor

|

| 87 |

+

Central Kanuri (Arabic script) knc_Arab

|

| 88 |

+

Central Kanuri (Latin script) knc_Latn

|

| 89 |

+

Kazakh kaz_Cyrl

|

| 90 |

+

Kabiyè kbp_Latn

|

| 91 |

+

Kabuverdianu kea_Latn

|

| 92 |

+

Khmer khm_Khmr

|

| 93 |

+

Kikuyu kik_Latn

|

| 94 |

+

Kinyarwanda kin_Latn

|

| 95 |

+

Kyrgyz kir_Cyrl

|

| 96 |

+

Kimbundu kmb_Latn

|

| 97 |

+

Northern Kurdish kmr_Latn

|

| 98 |

+

Kikongo kon_Latn

|

| 99 |

+

Korean kor_Hang

|

| 100 |

+

Lao lao_Laoo

|

| 101 |

+

Ligurian lij_Latn

|

| 102 |

+

Limburgish lim_Latn

|

| 103 |

+

Lingala lin_Latn

|

| 104 |

+

Lithuanian lit_Latn

|

| 105 |

+

Lombard lmo_Latn

|

| 106 |

+

Latgalian ltg_Latn

|

| 107 |

+

Luxembourgish ltz_Latn

|

| 108 |

+

Luba-Kasai lua_Latn

|

| 109 |

+

Ganda lug_Latn

|

| 110 |

+

Luo luo_Latn

|

| 111 |

+

Mizo lus_Latn

|

| 112 |

+

Standard Latvian lvs_Latn

|

| 113 |

+

Magahi mag_Deva

|

| 114 |

+

Maithili mai_Deva

|

| 115 |

+

Malayalam mal_Mlym

|

| 116 |

+

Marathi mar_Deva

|

| 117 |

+

Minangkabau (Arabic script) min_Arab

|

| 118 |

+

Minangkabau (Latin script) min_Latn

|

| 119 |

+

Macedonian mkd_Cyrl

|

| 120 |

+

Plateau Malagasy plt_Latn

|

| 121 |

+

Maltese mlt_Latn

|

| 122 |

+

Meitei (Bengali script) mni_Beng

|

| 123 |

+

Halh Mongolian khk_Cyrl

|

| 124 |

+

Mossi mos_Latn

|

| 125 |

+

Maori mri_Latn

|

| 126 |

+

Burmese mya_Mymr

|

| 127 |

+

Dutch nld_Latn

|

| 128 |

+

Norwegian Nynorsk nno_Latn

|

| 129 |

+

Norwegian Bokmål nob_Latn

|

| 130 |

+

Nepali npi_Deva

|

| 131 |

+

Northern Sotho nso_Latn

|

| 132 |

+

Nuer nus_Latn

|

| 133 |

+

Nyanja nya_Latn

|

| 134 |

+

Occitan oci_Latn

|

| 135 |

+

West Central Oromo gaz_Latn

|

| 136 |

+

Odia ory_Orya

|

| 137 |

+

Pangasinan pag_Latn

|

| 138 |

+

Eastern Panjabi pan_Guru

|

| 139 |

+

Papiamento pap_Latn

|

| 140 |

+

Western Persian pes_Arab

|

| 141 |

+

Polish pol_Latn

|

| 142 |

+

Portuguese por_Latn

|

| 143 |

+

Dari prs_Arab

|

| 144 |

+

Southern Pashto pbt_Arab

|

| 145 |

+

Ayacucho Quechua quy_Latn

|

| 146 |

+

Romanian ron_Latn

|

| 147 |

+

Rundi run_Latn

|

| 148 |

+

Russian rus_Cyrl

|

| 149 |

+

Sango sag_Latn

|

| 150 |

+

Sanskrit san_Deva

|

| 151 |

+

Santali sat_Olck

|

| 152 |

+

Sicilian scn_Latn

|

| 153 |

+

Shan shn_Mymr

|

| 154 |

+

Sinhala sin_Sinh

|

| 155 |

+

Slovak slk_Latn

|

| 156 |

+

Slovenian slv_Latn

|

| 157 |

+

Samoan smo_Latn

|

| 158 |

+

Shona sna_Latn

|

| 159 |

+

Sindhi snd_Arab

|

| 160 |

+

Somali som_Latn

|

| 161 |

+

Southern Sotho sot_Latn

|

| 162 |

+

Spanish spa_Latn

|

| 163 |

+

Tosk Albanian als_Latn

|

| 164 |

+

Sardinian srd_Latn

|

| 165 |

+

Serbian srp_Cyrl

|

| 166 |

+

Swati ssw_Latn

|

| 167 |

+

Sundanese sun_Latn

|

| 168 |

+

Swedish swe_Latn

|

| 169 |

+

Swahili swh_Latn

|

| 170 |

+

Silesian szl_Latn

|

| 171 |

+

Tamil tam_Taml

|

| 172 |

+

Tatar tat_Cyrl

|

| 173 |

+

Telugu tel_Telu

|

| 174 |

+

Tajik tgk_Cyrl

|

| 175 |

+

Tagalog tgl_Latn

|

| 176 |

+

Thai tha_Thai

|

| 177 |

+

Tigrinya tir_Ethi

|

| 178 |

+

Tamasheq (Latin script) taq_Latn

|

| 179 |

+

Tamasheq (Tifinagh script) taq_Tfng

|

| 180 |

+

Tok Pisin tpi_Latn

|

| 181 |

+

Tswana tsn_Latn

|

| 182 |

+

Tsonga tso_Latn

|

| 183 |

+

Turkmen tuk_Latn

|

| 184 |

+

Tumbuka tum_Latn

|

| 185 |

+

Turkish tur_Latn

|

| 186 |

+

Twi twi_Latn

|

| 187 |

+

Central Atlas Tamazight tzm_Tfng

|

| 188 |

+

Uyghur uig_Arab

|

| 189 |

+

Ukrainian ukr_Cyrl

|

| 190 |

+

Umbundu umb_Latn

|

| 191 |

+

Urdu urd_Arab

|

| 192 |

+

Northern Uzbek uzn_Latn

|

| 193 |

+

Venetian vec_Latn

|

| 194 |

+

Vietnamese vie_Latn

|

| 195 |

+

Waray war_Latn

|

| 196 |

+

Wolof wol_Latn

|

| 197 |

+

Xhosa xho_Latn

|

| 198 |

+

Eastern Yiddish ydd_Hebr

|

| 199 |

+

Yoruba yor_Latn

|

| 200 |

+

Yue Chinese yue_Hant

|

| 201 |

+

Chinese (Simplified) zho_Hans

|

| 202 |

+

Chinese (Traditional) zho_Hant

|

| 203 |

+

Standard Malay zsm_Latn

|

| 204 |

+

Zulu zul_Latn'''

|

| 205 |

+

|

| 206 |

+

codes_as_string = codes_as_string.split('\n')

|

| 207 |

+

|

| 208 |

+

flores_codes = {}

|

| 209 |

+

for code in codes_as_string:

|

| 210 |

+

lang, lang_code = code.split('\t')

|

| 211 |

+

flores_codes[lang] = lang_code

|

spaces/0x876/Yotta_Mix/README.md

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: CompVis Stable Diffusion V1 4

|

| 3 |

+

emoji: 📉

|

| 4 |

+

colorFrom: pink

|

| 5 |

+

colorTo: red

|

| 6 |

+

sdk: gradio

|

| 7 |

+

sdk_version: 3.39.0

|

| 8 |

+

app_file: app.py

|

| 9 |

+

pinned: false

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

spaces/0x876/Yotta_Mix/app.py

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

|

| 3 |

+

gr.Interface.load("models/CompVis/stable-diffusion-v1-4").launch()

|

spaces/0x90e/ESRGAN-MANGA/ESRGAN/architecture.py

ADDED

|

@@ -0,0 +1,37 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import math

|

| 2 |

+

import torch

|

| 3 |

+

import torch.nn as nn

|

| 4 |

+

import ESRGAN.block as B

|

| 5 |

+

|

| 6 |

+

class RRDB_Net(nn.Module):

|

| 7 |

+

def __init__(self, in_nc, out_nc, nf, nb, gc=32, upscale=4, norm_type=None, act_type='leakyrelu', \

|

| 8 |

+

mode='CNA', res_scale=1, upsample_mode='upconv'):

|

| 9 |

+

super(RRDB_Net, self).__init__()

|

| 10 |

+

n_upscale = int(math.log(upscale, 2))

|

| 11 |

+

if upscale == 3:

|

| 12 |

+

n_upscale = 1

|

| 13 |

+

|

| 14 |

+

fea_conv = B.conv_block(in_nc, nf, kernel_size=3, norm_type=None, act_type=None)

|

| 15 |

+

rb_blocks = [B.RRDB(nf, kernel_size=3, gc=32, stride=1, bias=True, pad_type='zero', \

|

| 16 |

+

norm_type=norm_type, act_type=act_type, mode='CNA') for _ in range(nb)]

|

| 17 |

+

LR_conv = B.conv_block(nf, nf, kernel_size=3, norm_type=norm_type, act_type=None, mode=mode)

|

| 18 |

+

|

| 19 |

+

if upsample_mode == 'upconv':

|

| 20 |

+

upsample_block = B.upconv_blcok

|

| 21 |

+

elif upsample_mode == 'pixelshuffle':

|

| 22 |

+

upsample_block = B.pixelshuffle_block

|

| 23 |

+

else:

|

| 24 |

+

raise NotImplementedError('upsample mode [%s] is not found' % upsample_mode)

|

| 25 |

+

if upscale == 3:

|

| 26 |

+

upsampler = upsample_block(nf, nf, 3, act_type=act_type)

|

| 27 |

+

else:

|

| 28 |

+

upsampler = [upsample_block(nf, nf, act_type=act_type) for _ in range(n_upscale)]

|

| 29 |

+

HR_conv0 = B.conv_block(nf, nf, kernel_size=3, norm_type=None, act_type=act_type)

|

| 30 |

+

HR_conv1 = B.conv_block(nf, out_nc, kernel_size=3, norm_type=None, act_type=None)

|

| 31 |

+

|

| 32 |

+

self.model = B.sequential(fea_conv, B.ShortcutBlock(B.sequential(*rb_blocks, LR_conv)),\

|

| 33 |

+

*upsampler, HR_conv0, HR_conv1)

|

| 34 |

+

|

| 35 |

+

def forward(self, x):

|

| 36 |

+

x = self.model(x)

|

| 37 |

+

return x

|

spaces/0x90e/ESRGAN-MANGA/ESRGAN/block.py

ADDED

|

@@ -0,0 +1,261 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|