title

stringlengths 2

169

| diff

stringlengths 235

19.5k

| body

stringlengths 0

30.5k

| url

stringlengths 48

84

| created_at

stringlengths 20

20

| closed_at

stringlengths 20

20

| merged_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| diff_len

float64 101

3.99k

| repo_name

stringclasses 83

values | __index_level_0__

int64 15

52.7k

|

|---|---|---|---|---|---|---|---|---|---|---|

Added CodeLlama 70b model | diff --git a/g4f/models.py b/g4f/models.py

index e58ccef2ed..dd8e175d57 100644

--- a/g4f/models.py

+++ b/g4f/models.py

@@ -123,6 +123,12 @@ def __all__() -> list[str]:

best_provider = RetryProvider([HuggingChat, PerplexityLabs, DeepInfra])

)

+codellama_70b_instruct = Model(

+ name = "codellama/CodeLlama-70b-Instruct-hf",

+ base_provider = "huggingface",

+ best_provider = DeepInfra

+)

+

# Mistral

mixtral_8x7b = Model(

name = "mistralai/Mixtral-8x7B-Instruct-v0.1",

@@ -256,6 +262,7 @@ class ModelUtils:

'llama2-13b': llama2_13b,

'llama2-70b': llama2_70b,

'codellama-34b-instruct': codellama_34b_instruct,

+ 'codellama-70b-instruct': codellama_70b_instruct,

'mixtral-8x7b': mixtral_8x7b,

'mistral-7b': mistral_7b,

@@ -270,4 +277,4 @@ class ModelUtils:

'pi': pi

}

-_all_models = list(ModelUtils.convert.keys())

\ No newline at end of file

+_all_models = list(ModelUtils.convert.keys())

| https://api.github.com/repos/xtekky/gpt4free/pulls/1547 | 2024-02-04T18:51:10Z | 2024-02-05T13:47:24Z | 2024-02-05T13:47:24Z | 2024-02-14T01:28:01Z | 357 | xtekky/gpt4free | 38,171 |

|

Fixed #18110 -- Improve template cache tag documentation | diff --git a/docs/topics/cache.txt b/docs/topics/cache.txt

index 03afa86647632..d0bd9f699294d 100644

--- a/docs/topics/cache.txt

+++ b/docs/topics/cache.txt

@@ -595,7 +595,8 @@ the ``cache`` template tag. To give your template access to this tag, put

The ``{% cache %}`` template tag caches the contents of the block for a given

amount of time. It takes at least two arguments: the cache timeout, in seconds,

-and the name to give the cache fragment. For example:

+and the name to give the cache fragment. The name will be taken as is, do not

+use a variable. For example:

.. code-block:: html+django

| For me it was not clear that the fragment name cannot be a variable. I just found out by wondering about errors and having a quick look into Django's code. It should be made more clear that the second argument will not be resolved even though all the others will be (even the cache time gets resolved).

"It takes at least two arguments: the cache timeout, in seconds, and the name to give the cache fragment. For example:"

should at least be something like

"It takes at least two arguments: the cache timeout, in seconds, and the name to give the cache fragment. The name will be taken as is, do not use a variable. For example:"

https://docs.djangoproject.com/en/dev/topics/cache/#template-fragment-caching

https://code.djangoproject.com/ticket/18110

| https://api.github.com/repos/django/django/pulls/153 | 2012-06-14T14:43:06Z | 2012-08-04T19:50:42Z | 2012-08-04T19:50:42Z | 2014-06-26T08:30:28Z | 164 | django/django | 50,876 |

Fix TFDWConv() `c1 == c2` check | diff --git a/models/tf.py b/models/tf.py

index b70e3748800..3428032a0aa 100644

--- a/models/tf.py

+++ b/models/tf.py

@@ -88,10 +88,10 @@ def call(self, inputs):

class TFDWConv(keras.layers.Layer):

# Depthwise convolution

- def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True, w=None):

+ def __init__(self, c1, c2, k=1, s=1, p=None, act=True, w=None):

# ch_in, ch_out, weights, kernel, stride, padding, groups

super().__init__()

- assert g == c1 == c2, f'TFDWConv() groups={g} must equal input={c1} and output={c2} channels'

+ assert c1 == c2, f'TFDWConv() input={c1} must equal output={c2} channels'

conv = keras.layers.DepthwiseConv2D(

kernel_size=k,

strides=s,

|

## 🛠️ PR Summary

<sub>Made with ❤️ by [Ultralytics Actions](https://github.com/ultralytics/actions)<sub>

### 🌟 Summary

Enhancement of Depthwise Convolution Layer in TensorFlow Models.

### 📊 Key Changes

- Removed the `g` parameter (number of groups) from `TFDWConv` layer initializer arguments.

- Modified the assertion check to only require input channels (`c1`) to be equal to the output channels (`c2`), instead of also checking against the `g` parameter.

### 🎯 Purpose & Impact

- 🧹 Simplifies the initialization of the depthwise convolution layer by removing an unnecessary parameter.

- 🛠️ Ensures the integrity of the model by checking that the number of input channels matches the number of output channels, a requirement for depthwise convolution.

- 🔧 Reduces potential for configuration errors by removing the group check, which is not needed in depthwise convolutions as the input and output channels are naturally equal.

- 👩💻 Helps developers to work with a cleaner interface when utilizing the TFDWConv layer in their machine learning models, potentially increasing development productivity and model stability. | https://api.github.com/repos/ultralytics/yolov5/pulls/7842 | 2022-05-16T16:06:17Z | 2022-05-16T16:06:46Z | 2022-05-16T16:06:46Z | 2024-01-19T10:27:17Z | 254 | ultralytics/yolov5 | 25,521 |

Fix for #13740 | diff --git a/youtube_dl/extractor/mlb.py b/youtube_dl/extractor/mlb.py

index 59cd4b8389f..4d45f960eea 100644

--- a/youtube_dl/extractor/mlb.py

+++ b/youtube_dl/extractor/mlb.py

@@ -15,7 +15,7 @@ class MLBIE(InfoExtractor):

(?:[\da-z_-]+\.)*mlb\.com/

(?:

(?:

- (?:.*?/)?video/(?:topic/[\da-z_-]+/)?v|

+ (?:.*?/)?video/(?:topic/[\da-z_-]+/)?(?:v|.*?/c-)|

(?:

shared/video/embed/(?:embed|m-internal-embed)\.html|

(?:[^/]+/)+(?:play|index)\.jsp|

@@ -94,6 +94,10 @@ class MLBIE(InfoExtractor):

'upload_date': '20150415',

}

},

+ {

+ 'url': 'https://www.mlb.com/video/hargrove-homers-off-caldwell/c-1352023483?tid=67793694',

+ 'only_matching': True,

+ },

{

'url': 'http://m.mlb.com/shared/video/embed/embed.html?content_id=35692085&topic_id=6479266&width=400&height=224&property=mlb',

'only_matching': True,

| ## Please follow the guide below

- You will be asked some questions, please read them **carefully** and answer honestly

- Put an `x` into all the boxes [ ] relevant to your *pull request* (like that [x])

- Use *Preview* tab to see how your *pull request* will actually look like

---

### Before submitting a *pull request* make sure you have:

- [x] At least skimmed through [adding new extractor tutorial](https://github.com/rg3/youtube-dl#adding-support-for-a-new-site) and [youtube-dl coding conventions](https://github.com/rg3/youtube-dl#youtube-dl-coding-conventions) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?q=is%3Apr&type=Issues) the bugtracker for similar pull requests

### In order to be accepted and merged into youtube-dl each piece of code must be in public domain or released under [Unlicense](http://unlicense.org/). Check one of the following options:

- [x] I am the original author of this code and I am willing to release it under [Unlicense](http://unlicense.org/)

- [ ] I am not the original author of this code but it is in public domain or released under [Unlicense](http://unlicense.org/) (provide reliable evidence)

### What is the purpose of your *pull request*?

- [x] Bug fix

- [ ] Improvement

- [ ] New extractor

- [ ] New feature

---

### Description of your *pull request* and other information

Adjust `_VALID_URL` in mlb.py to fix #13740

| https://api.github.com/repos/ytdl-org/youtube-dl/pulls/13773 | 2017-07-29T23:32:08Z | 2017-08-04T15:46:55Z | 2017-08-04T15:46:55Z | 2017-08-04T15:46:55Z | 326 | ytdl-org/youtube-dl | 50,090 |

Create model card for spanbert-finetuned-squadv2 | diff --git a/model_cards/mrm8488/spanbert-finetuned-squadv2/README.md b/model_cards/mrm8488/spanbert-finetuned-squadv2/README.md

new file mode 100644

index 0000000000000..47a4cc42d8c89

--- /dev/null

+++ b/model_cards/mrm8488/spanbert-finetuned-squadv2/README.md

@@ -0,0 +1,86 @@

+---

+language: english

+thumbnail:

+---

+

+# SpanBERT (spanbert-base-cased) fine-tuned on SQuAD v2

+

+[SpanBERT](https://github.com/facebookresearch/SpanBERT) created by [Facebook Research](https://github.com/facebookresearch) and fine-tuned on [SQuAD 2.0](https://rajpurkar.github.io/SQuAD-explorer/) for **Q&A** downstream task.

+

+## Details of SpanBERT

+

+[SpanBERT: Improving Pre-training by Representing and Predicting Spans](https://arxiv.org/abs/1907.10529)

+

+## Details of the downstream task (Q&A) - Dataset

+

+[SQuAD2.0](https://rajpurkar.github.io/SQuAD-explorer/) combines the 100,000 questions in SQuAD1.1 with over 50,000 unanswerable questions written adversarially by crowdworkers to look similar to answerable ones. To do well on SQuAD2.0, systems must not only answer questions when possible, but also determine when no answer is supported by the paragraph and abstain from answering.

+

+| Dataset | Split | # samples |

+| -------- | ----- | --------- |

+| SQuAD2.0 | train | 130k |

+| SQuAD2.0 | eval | 12.3k |

+

+## Model training

+

+The model was trained on a Tesla P100 GPU and 25GB of RAM.

+The script for fine tuning can be found [here](https://github.com/huggingface/transformers/blob/master/examples/run_squad.py)

+

+## Results:

+

+| Metric | # Value |

+| ------ | --------- |

+| **EM** | **78.80** |

+| **F1** | **82.22** |

+

+### Raw metrics:

+

+```json

+{

+ "exact": 78.80064010780762,

+ "f1": 82.22801347271162,

+ "total": 11873,

+ "HasAns_exact": 78.74493927125506,

+ "HasAns_f1": 85.60951483831069,

+ "HasAns_total": 5928,

+ "NoAns_exact": 78.85618166526493,

+ "NoAns_f1": 78.85618166526493,

+ "NoAns_total": 5945,

+ "best_exact": 78.80064010780762,

+ "best_exact_thresh": 0.0,

+ "best_f1": 82.2280134727116,

+ "best_f1_thresh": 0.0

+}

+```

+

+## Comparison:

+

+| Model | EM | F1 score |

+| ----------------------------------------------------------------------------------------- | --------- | --------- |

+| [SpanBert official repo](https://github.com/facebookresearch/SpanBERT#pre-trained-models) | - | 83.6\* |

+| [spanbert-finetuned-squadv2](https://huggingface.co/mrm8488/spanbert-finetuned-squadv2) | **78.80** | **82.22** |

+

+## Model in action

+

+Fast usage with **pipelines**:

+

+```python

+from transformers import pipeline

+

+qa_pipeline = pipeline(

+ "question-answering",

+ model="mrm8488/spanbert-finetuned-squadv2",

+ tokenizer="mrm8488/spanbert-finetuned-squadv2"

+)

+

+qa_pipeline({

+ 'context': "Manuel Romero has been working hardly in the repository hugginface/transformers lately",

+ 'question': "Who has been working hard for hugginface/transformers lately?"

+

+})

+

+# Output: {'answer': 'Manuel Romero','end': 13,'score': 6.836378586818937e-09, 'start': 0}

+```

+

+> Created by [Manuel Romero/@mrm8488](https://twitter.com/mrm8488)

+

+> Made with <span style="color: #e25555;">♥</span> in Spain

| https://api.github.com/repos/huggingface/transformers/pulls/3293 | 2020-03-16T08:02:08Z | 2020-03-16T16:32:47Z | 2020-03-16T16:32:47Z | 2020-03-16T16:32:48Z | 1,044 | huggingface/transformers | 12,822 |

|

downloader.webclient: make reactor import local | diff --git a/scrapy/core/downloader/webclient.py b/scrapy/core/downloader/webclient.py

index 915cb5fe332..06cb9648978 100644

--- a/scrapy/core/downloader/webclient.py

+++ b/scrapy/core/downloader/webclient.py

@@ -3,7 +3,7 @@

from urllib.parse import urlparse, urlunparse, urldefrag

from twisted.web.http import HTTPClient

-from twisted.internet import defer, reactor

+from twisted.internet import defer

from twisted.internet.protocol import ClientFactory

from scrapy.http import Headers

@@ -170,6 +170,7 @@ def buildProtocol(self, addr):

p.followRedirect = self.followRedirect

p.afterFoundGet = self.afterFoundGet

if self.timeout:

+ from twisted.internet import reactor

timeoutCall = reactor.callLater(self.timeout, p.timeout)

self.deferred.addBoth(self._cancelTimeout, timeoutCall)

return p

| All reactor imports must be local not top level because twisted.internet.reactor import installs default reactor, and if you want to use non-default reactor like asyncio top level import will break things for you.

In my case I had a middleware imported in spider

in spider

```python

from project.middlewares.retry import CustomRetryMiddleware

```

this was importing

```

from scrapy.downloadermiddlewares import retry

```

then retry was importing

```

from scrapy.core.downloader.handlers.http11 import TunnelError

```

and http11 was importing

```

from scrapy.core.downloader.webclient import _parse

```

and webclient was importing and installing reactor. I wonder how this was not detected earlier? It should break things for people trying to be using asyncio reactor in more complex projects.

This import of reactor may be annoying for many projects because it is easy to forget and do import reactor somewhere in your scrapy middleware or somewhere else. Then you'll get an error when using asyncio, and some people will be confused they may not know where to look for source of the problem. | https://api.github.com/repos/scrapy/scrapy/pulls/5357 | 2021-12-30T12:15:31Z | 2021-12-31T10:57:12Z | 2021-12-31T10:57:12Z | 2021-12-31T10:57:26Z | 204 | scrapy/scrapy | 35,059 |

Update interpreter.py for a typo error | diff --git a/interpreter/interpreter.py b/interpreter/interpreter.py

index ea34fdf68..d7db07dbc 100644

--- a/interpreter/interpreter.py

+++ b/interpreter/interpreter.py

@@ -132,7 +132,7 @@ def cli(self):

def get_info_for_system_message(self):

"""

- Gets relevent information for the system message.

+ Gets relevant information for the system message.

"""

info = ""

| ### Describe the changes you have made:

I have fixed a typo error in interpreter.py file

### Reference any relevant issue (actually none for current PR)

None

- [x] I have performed a self-review of my code:

### I have tested the code on the following OS:

- [x] Windows

- [x] MacOS

- [x] Linux

| https://api.github.com/repos/OpenInterpreter/open-interpreter/pulls/397 | 2023-09-16T04:13:58Z | 2023-09-16T06:25:43Z | 2023-09-16T06:25:43Z | 2023-09-16T06:25:44Z | 102 | OpenInterpreter/open-interpreter | 40,810 |

Minor CF refactoring; enhance error logging for SSL cert errors | diff --git a/localstack/services/cloudformation/cloudformation_starter.py b/localstack/services/cloudformation/cloudformation_starter.py

index c4f8bfbbb21a9..cdadc7312c167 100644

--- a/localstack/services/cloudformation/cloudformation_starter.py

+++ b/localstack/services/cloudformation/cloudformation_starter.py

@@ -174,6 +174,14 @@ def update_physical_resource_id(resource):

LOG.warning('Unable to determine physical_resource_id for resource %s' % type(resource))

+def update_resource_name(resource, resource_json):

+ """ Some resources require minor fixes in their CF resource definition

+ before we can pass them on to deployment. """

+ props = resource_json['Properties'] = resource_json.get('Properties') or {}

+ if isinstance(resource, sfn_models.StateMachine) and not props.get('StateMachineName'):

+ props['StateMachineName'] = resource.name

+

+

def apply_patches():

""" Apply patches to make LocalStack seamlessly interact with the moto backend.

TODO: Eventually, these patches should be contributed to the upstream repo! """

@@ -354,13 +362,6 @@ def find_id(resource):

return resource

- def update_resource_name(resource, resource_json):

- """ Some resources require minor fixes in their CF resource definition

- before we can pass them on to deployment. """

- props = resource_json['Properties'] = resource_json.get('Properties') or {}

- if isinstance(resource, sfn_models.StateMachine) and not props.get('StateMachineName'):

- props['StateMachineName'] = resource.name

-

def update_resource_id(resource, new_id, props, region_name, stack_name, resource_map):

""" Update and fix the ID(s) of the given resource. """

diff --git a/localstack/utils/server/http2_server.py b/localstack/utils/server/http2_server.py

index 988ffe12299d6..60343a42b4555 100644

--- a/localstack/utils/server/http2_server.py

+++ b/localstack/utils/server/http2_server.py

@@ -1,5 +1,4 @@

import os

-import ssl

import asyncio

import logging

import traceback

@@ -113,13 +112,14 @@ def run_app_sync(*args, loop=None, shutdown_event=None):

try:

try:

return loop.run_until_complete(serve(app, config, **run_kwargs))

- except ssl.SSLError:

- c_exists = os.path.exists(cert_file_name)

- k_exists = os.path.exists(key_file_name)

- c_size = len(load_file(cert_file_name)) if c_exists else 0

- k_size = len(load_file(key_file_name)) if k_exists else 0

- LOG.warning('Unable to create SSL context. Cert files exist: %s %s (%sB), %s %s (%sB)' %

- (cert_file_name, c_exists, c_size, key_file_name, k_exists, k_size))

+ except Exception as e:

+ if 'SSLError' in str(e):

+ c_exists = os.path.exists(cert_file_name)

+ k_exists = os.path.exists(key_file_name)

+ c_size = len(load_file(cert_file_name)) if c_exists else 0

+ k_size = len(load_file(key_file_name)) if k_exists else 0

+ LOG.warning('Unable to create SSL context. Cert files exist: %s %s (%sB), %s %s (%sB)' %

+ (cert_file_name, c_exists, c_size, key_file_name, k_exists, k_size))

raise

finally:

try:

diff --git a/requirements.txt b/requirements.txt

index ced77277e46d4..3dae44630ef9f 100644

--- a/requirements.txt

+++ b/requirements.txt

@@ -41,7 +41,7 @@ pympler>=0.6

pyopenssl==17.5.0

python-coveralls>=2.9.1

pyyaml>=3.13,<=5.1

-quart>=0.12.0

+Quart>=0.12.0

requests>=2.20.0 #basic-lib

requests-aws4auth==0.9

sasl>=0.2.1

| Minor CF refactoring; enhance error logging for SSL cert errors | https://api.github.com/repos/localstack/localstack/pulls/2549 | 2020-06-11T23:05:17Z | 2020-06-11T23:47:14Z | 2020-06-11T23:47:14Z | 2020-06-11T23:47:20Z | 924 | localstack/localstack | 28,443 |

Contentview scripts | diff --git a/examples/custom_contentviews.py b/examples/custom_contentviews.py

new file mode 100644

index 0000000000..1a2bcb1e8d

--- /dev/null

+++ b/examples/custom_contentviews.py

@@ -0,0 +1,66 @@

+import string

+from libmproxy import script, flow, utils

+import libmproxy.contentviews as cv

+from netlib.http import Headers

+import lxml.html

+import lxml.etree

+

+

+class ViewPigLatin(cv.View):

+ name = "pig_latin_HTML"

+ prompt = ("pig latin HTML", "l")

+ content_types = ["text/html"]

+

+ def __call__(self, data, **metadata):

+ if utils.isXML(data):

+ parser = lxml.etree.HTMLParser(

+ strip_cdata=True,

+ remove_blank_text=True

+ )

+ d = lxml.html.fromstring(data, parser=parser)

+ docinfo = d.getroottree().docinfo

+

+ def piglify(src):

+ words = string.split(src)

+ ret = ''

+ for word in words:

+ idx = -1

+ while word[idx] in string.punctuation and (idx * -1) != len(word): idx -= 1

+ if word[0].lower() in 'aeiou':

+ if idx == -1: ret += word[0:] + "hay"

+ else: ret += word[0:len(word)+idx+1] + "hay" + word[idx+1:]

+ else:

+ if idx == -1: ret += word[1:] + word[0] + "ay"

+ else: ret += word[1:len(word)+idx+1] + word[0] + "ay" + word[idx+1:]

+ ret += ' '

+ return ret.strip()

+

+ def recurse(root):

+ if hasattr(root, 'text') and root.text:

+ root.text = piglify(root.text)

+ if hasattr(root, 'tail') and root.tail:

+ root.tail = piglify(root.tail)

+

+ if len(root):

+ for child in root:

+ recurse(child)

+

+ recurse(d)

+

+ s = lxml.etree.tostring(

+ d,

+ pretty_print=True,

+ doctype=docinfo.doctype

+ )

+ return "HTML", cv.format_text(s)

+

+

+pig_view = ViewPigLatin()

+

+

+def start(context, argv):

+ context.add_contentview(pig_view)

+

+

+def stop(context):

+ context.remove_contentview(pig_view)

diff --git a/libmproxy/contentviews.py b/libmproxy/contentviews.py

index 9af0803353..2f46cccafc 100644

--- a/libmproxy/contentviews.py

+++ b/libmproxy/contentviews.py

@@ -479,34 +479,9 @@ def __call__(self, data, **metadata):

return None

-views = [

- ViewAuto(),

- ViewRaw(),

- ViewHex(),

- ViewJSON(),

- ViewXML(),

- ViewWBXML(),

- ViewHTML(),

- ViewHTMLOutline(),

- ViewJavaScript(),

- ViewCSS(),

- ViewURLEncoded(),

- ViewMultipart(),

- ViewImage(),

-]

-if pyamf:

- views.append(ViewAMF())

-

-if ViewProtobuf.is_available():

- views.append(ViewProtobuf())

-

+views = []

content_types_map = {}

-for i in views:

- for ct in i.content_types:

- l = content_types_map.setdefault(ct, [])

- l.append(i)

-

-view_prompts = [i.prompt for i in views]

+view_prompts = []

def get_by_shortcut(c):

@@ -515,6 +490,58 @@ def get_by_shortcut(c):

return i

+def add(view):

+ # TODO: auto-select a different name (append an integer?)

+ for i in views:

+ if i.name == view.name:

+ raise ContentViewException("Duplicate view: " + view.name)

+

+ # TODO: the UI should auto-prompt for a replacement shortcut

+ for prompt in view_prompts:

+ if prompt[1] == view.prompt[1]:

+ raise ContentViewException("Duplicate view shortcut: " + view.prompt[1])

+

+ views.append(view)

+

+ for ct in view.content_types:

+ l = content_types_map.setdefault(ct, [])

+ l.append(view)

+

+ view_prompts.append(view.prompt)

+

+

+def remove(view):

+ for ct in view.content_types:

+ l = content_types_map.setdefault(ct, [])

+ l.remove(view)

+

+ if not len(l):

+ del content_types_map[ct]

+

+ view_prompts.remove(view.prompt)

+ views.remove(view)

+

+

+add(ViewAuto())

+add(ViewRaw())

+add(ViewHex())

+add(ViewJSON())

+add(ViewXML())

+add(ViewWBXML())

+add(ViewHTML())

+add(ViewHTMLOutline())

+add(ViewJavaScript())

+add(ViewCSS())

+add(ViewURLEncoded())

+add(ViewMultipart())

+add(ViewImage())

+

+if pyamf:

+ add(ViewAMF())

+

+if ViewProtobuf.is_available():

+ add(ViewProtobuf())

+

def get(name):

for i in views:

if i.name == name:

diff --git a/libmproxy/flow.py b/libmproxy/flow.py

index 55a4dbcfb3..3343e694f1 100644

--- a/libmproxy/flow.py

+++ b/libmproxy/flow.py

@@ -9,7 +9,7 @@

import os

import re

import urlparse

-

+import inspect

from netlib import wsgi

from netlib.exceptions import HttpException

@@ -21,6 +21,7 @@

from .protocol.http_replay import RequestReplayThread

from .protocol import Kill

from .models import ClientConnection, ServerConnection, HTTPResponse, HTTPFlow, HTTPRequest

+from . import contentviews as cv

class AppRegistry:

diff --git a/libmproxy/script.py b/libmproxy/script.py

index 9d051c129c..4da40c52f6 100644

--- a/libmproxy/script.py

+++ b/libmproxy/script.py

@@ -5,6 +5,8 @@

import shlex

import sys

+from . import contentviews as cv

+

class ScriptError(Exception):

pass

@@ -56,6 +58,12 @@ def replay_request(self, f):

def app_registry(self):

return self._master.apps

+ def add_contentview(self, view_obj):

+ cv.add(view_obj)

+

+ def remove_contentview(self, view_obj):

+ cv.remove(view_obj)

+

class Script:

"""

diff --git a/test/test_contentview.py b/test/test_contentview.py

index 9760852094..eba624a269 100644

--- a/test/test_contentview.py

+++ b/test/test_contentview.py

@@ -210,6 +210,21 @@ def test_get_content_view(self):

assert "decoded gzip" in r[0]

assert "Raw" in r[0]

+ def test_add_cv(self):

+ class TestContentView(cv.View):

+ name = "test"

+ prompt = ("t", "test")

+

+ tcv = TestContentView()

+ cv.add(tcv)

+

+ # repeated addition causes exception

+ tutils.raises(

+ ContentViewException,

+ cv.add,

+ tcv

+ )

+

if pyamf:

def test_view_amf_request():

diff --git a/test/test_custom_contentview.py b/test/test_custom_contentview.py

new file mode 100644

index 0000000000..4b5a3e53f6

--- /dev/null

+++ b/test/test_custom_contentview.py

@@ -0,0 +1,52 @@

+from libmproxy import script, flow

+import libmproxy.contentviews as cv

+from netlib.http import Headers

+

+

+def test_custom_views():

+ class ViewNoop(cv.View):

+ name = "noop"

+ prompt = ("noop", "n")

+ content_types = ["text/none"]

+

+ def __call__(self, data, **metadata):

+ return "noop", cv.format_text(data)

+

+

+ view_obj = ViewNoop()

+

+ cv.add(view_obj)

+

+ assert cv.get("noop")

+

+ r = cv.get_content_view(

+ cv.get("noop"),

+ "[1, 2, 3]",

+ headers=Headers(

+ content_type="text/plain"

+ )

+ )

+ assert "noop" in r[0]

+

+ # now try content-type matching

+ r = cv.get_content_view(

+ cv.get("Auto"),

+ "[1, 2, 3]",

+ headers=Headers(

+ content_type="text/none"

+ )

+ )

+ assert "noop" in r[0]

+

+ # now try removing the custom view

+ cv.remove(view_obj)

+ r = cv.get_content_view(

+ cv.get("Auto"),

+ "[1, 2, 3]",

+ headers=Headers(

+ content_type="text/none"

+ )

+ )

+ assert "noop" not in r[0]

+

+

diff --git a/test/test_script.py b/test/test_script.py

index 1b0e5a5b4c..8612d5f344 100644

--- a/test/test_script.py

+++ b/test/test_script.py

@@ -127,3 +127,4 @@ def test_command_parsing():

absfilepath = os.path.normcase(tutils.test_data.path("scripts/a.py"))

s = script.Script(absfilepath, fm)

assert os.path.isfile(s.args[0])

+

| Based on feedback from PR #832, simple custom content view support in scripts for mitmproxy/mitmdump.

| https://api.github.com/repos/mitmproxy/mitmproxy/pulls/833 | 2015-11-13T21:56:30Z | 2015-11-14T02:41:05Z | 2015-11-14T02:41:05Z | 2015-11-14T02:41:06Z | 2,213 | mitmproxy/mitmproxy | 27,422 |

PERF: add shortcut to Timestamp constructor | diff --git a/asv_bench/benchmarks/tslibs/timestamp.py b/asv_bench/benchmarks/tslibs/timestamp.py

index 8ebb2d8d2f35d..3ef9b814dd79e 100644

--- a/asv_bench/benchmarks/tslibs/timestamp.py

+++ b/asv_bench/benchmarks/tslibs/timestamp.py

@@ -1,12 +1,19 @@

import datetime

import dateutil

+import numpy as np

import pytz

from pandas import Timestamp

class TimestampConstruction:

+ def setup(self):

+ self.npdatetime64 = np.datetime64("2020-01-01 00:00:00")

+ self.dttime_unaware = datetime.datetime(2020, 1, 1, 0, 0, 0)

+ self.dttime_aware = datetime.datetime(2020, 1, 1, 0, 0, 0, 0, pytz.UTC)

+ self.ts = Timestamp("2020-01-01 00:00:00")

+

def time_parse_iso8601_no_tz(self):

Timestamp("2017-08-25 08:16:14")

@@ -28,6 +35,18 @@ def time_fromordinal(self):

def time_fromtimestamp(self):

Timestamp.fromtimestamp(1515448538)

+ def time_from_npdatetime64(self):

+ Timestamp(self.npdatetime64)

+

+ def time_from_datetime_unaware(self):

+ Timestamp(self.dttime_unaware)

+

+ def time_from_datetime_aware(self):

+ Timestamp(self.dttime_aware)

+

+ def time_from_pd_timestamp(self):

+ Timestamp(self.ts)

+

class TimestampProperties:

_tzs = [None, pytz.timezone("Europe/Amsterdam"), pytz.UTC, dateutil.tz.tzutc()]

diff --git a/doc/source/whatsnew/v1.1.0.rst b/doc/source/whatsnew/v1.1.0.rst

index d0cf92b60fe0d..a0e1c964dd365 100644

--- a/doc/source/whatsnew/v1.1.0.rst

+++ b/doc/source/whatsnew/v1.1.0.rst

@@ -109,7 +109,9 @@ Deprecations

Performance improvements

~~~~~~~~~~~~~~~~~~~~~~~~

+

- Performance improvement in :class:`Timedelta` constructor (:issue:`30543`)

+- Performance improvement in :class:`Timestamp` constructor (:issue:`30543`)

-

-

diff --git a/pandas/_libs/tslibs/timestamps.pyx b/pandas/_libs/tslibs/timestamps.pyx

index 36566b55e74ad..4915671aa6512 100644

--- a/pandas/_libs/tslibs/timestamps.pyx

+++ b/pandas/_libs/tslibs/timestamps.pyx

@@ -391,7 +391,18 @@ class Timestamp(_Timestamp):

# User passed tzinfo instead of tz; avoid silently ignoring

tz, tzinfo = tzinfo, None

- if isinstance(ts_input, str):

+ # GH 30543 if pd.Timestamp already passed, return it

+ # check that only ts_input is passed

+ # checking verbosely, because cython doesn't optimize

+ # list comprehensions (as of cython 0.29.x)

+ if (isinstance(ts_input, Timestamp) and freq is None and

+ tz is None and unit is None and year is None and

+ month is None and day is None and hour is None and

+ minute is None and second is None and

+ microsecond is None and nanosecond is None and

+ tzinfo is None):

+ return ts_input

+ elif isinstance(ts_input, str):

# User passed a date string to parse.

# Check that the user didn't also pass a date attribute kwarg.

if any(arg is not None for arg in _date_attributes):

diff --git a/pandas/tests/indexes/datetimes/test_constructors.py b/pandas/tests/indexes/datetimes/test_constructors.py

index b6013c3939793..68285d41bda70 100644

--- a/pandas/tests/indexes/datetimes/test_constructors.py

+++ b/pandas/tests/indexes/datetimes/test_constructors.py

@@ -957,3 +957,10 @@ def test_timedelta_constructor_identity():

expected = pd.Timedelta(np.timedelta64(1, "s"))

result = pd.Timedelta(expected)

assert result is expected

+

+

+def test_timestamp_constructor_identity():

+ # Test for #30543

+ expected = pd.Timestamp("2017-01-01T12")

+ result = pd.Timestamp(expected)

+ assert result is expected

diff --git a/pandas/tests/indexes/datetimes/test_timezones.py b/pandas/tests/indexes/datetimes/test_timezones.py

index c785eb67e5184..cd8e8c3542cce 100644

--- a/pandas/tests/indexes/datetimes/test_timezones.py

+++ b/pandas/tests/indexes/datetimes/test_timezones.py

@@ -2,7 +2,6 @@

Tests for DatetimeIndex timezone-related methods

"""

from datetime import date, datetime, time, timedelta, tzinfo

-from distutils.version import LooseVersion

import dateutil

from dateutil.tz import gettz, tzlocal

@@ -11,7 +10,6 @@

import pytz

from pandas._libs.tslibs import conversion, timezones

-from pandas.compat._optional import _get_version

import pandas.util._test_decorators as td

import pandas as pd

@@ -583,15 +581,7 @@ def test_dti_construction_ambiguous_endpoint(self, tz):

["US/Pacific", "shift_forward", "2019-03-10 03:00"],

["dateutil/US/Pacific", "shift_forward", "2019-03-10 03:00"],

["US/Pacific", "shift_backward", "2019-03-10 01:00"],

- pytest.param(

- "dateutil/US/Pacific",

- "shift_backward",

- "2019-03-10 01:00",

- marks=pytest.mark.xfail(

- LooseVersion(_get_version(dateutil)) < LooseVersion("2.7.0"),

- reason="GH 31043",

- ),

- ),

+ ["dateutil/US/Pacific", "shift_backward", "2019-03-10 01:00"],

["US/Pacific", timedelta(hours=1), "2019-03-10 03:00"],

],

)

| - [X] closes #30543

- [X] tests added 1 / passed 1

- [X] passes `black pandas`

- [X] passes `git diff upstream/master -u -- "*.py" | flake8 --diff`

- [X] whatsnew entry

This implements a shortcut in the Timestamp constructor to cut down on processing if Timestamp is passed. We still need to check if the timezone was passed correctly. Then, if a Timestamp was passed, and there is no timezone, we just return that same Timestamp.

A test is added to check that the Timestamp is still the same object.

PR for timedelta to be added once I confirm that this is the approach we want to go with.

| https://api.github.com/repos/pandas-dev/pandas/pulls/30676 | 2020-01-04T07:19:38Z | 2020-01-26T01:03:37Z | 2020-01-26T01:03:37Z | 2020-01-27T06:55:10Z | 1,464 | pandas-dev/pandas | 45,157 |

BUG: pivot/unstack leading to too many items should raise exception | diff --git a/doc/source/whatsnew/v0.24.0.rst b/doc/source/whatsnew/v0.24.0.rst

index a84fd118061bc..5f40ca2ad3b36 100644

--- a/doc/source/whatsnew/v0.24.0.rst

+++ b/doc/source/whatsnew/v0.24.0.rst

@@ -1646,6 +1646,7 @@ Reshaping

- :meth:`DataFrame.nlargest` and :meth:`DataFrame.nsmallest` now returns the correct n values when keep != 'all' also when tied on the first columns (:issue:`22752`)

- Constructing a DataFrame with an index argument that wasn't already an instance of :class:`~pandas.core.Index` was broken (:issue:`22227`).

- Bug in :class:`DataFrame` prevented list subclasses to be used to construction (:issue:`21226`)

+- Bug in :func:`DataFrame.unstack` and :func:`DataFrame.pivot_table` returning a missleading error message when the resulting DataFrame has more elements than int32 can handle. Now, the error message is improved, pointing towards the actual problem (:issue:`20601`)

.. _whatsnew_0240.bug_fixes.sparse:

diff --git a/pandas/core/reshape/pivot.py b/pandas/core/reshape/pivot.py

index 61ac5d9ed6a2e..c7c447d18b6b1 100644

--- a/pandas/core/reshape/pivot.py

+++ b/pandas/core/reshape/pivot.py

@@ -78,8 +78,6 @@ def pivot_table(data, values=None, index=None, columns=None, aggfunc='mean',

pass

values = list(values)

- # group by the cartesian product of the grouper

- # if we have a categorical

grouped = data.groupby(keys, observed=False)

agged = grouped.agg(aggfunc)

if dropna and isinstance(agged, ABCDataFrame) and len(agged.columns):

diff --git a/pandas/core/reshape/reshape.py b/pandas/core/reshape/reshape.py

index 70161826696c5..f436b3b92a359 100644

--- a/pandas/core/reshape/reshape.py

+++ b/pandas/core/reshape/reshape.py

@@ -109,6 +109,21 @@ def __init__(self, values, index, level=-1, value_columns=None,

self.removed_level = self.new_index_levels.pop(self.level)

self.removed_level_full = index.levels[self.level]

+ # Bug fix GH 20601

+ # If the data frame is too big, the number of unique index combination

+ # will cause int32 overflow on windows environments.

+ # We want to check and raise an error before this happens

+ num_rows = np.max([index_level.size for index_level

+ in self.new_index_levels])

+ num_columns = self.removed_level.size

+

+ # GH20601: This forces an overflow if the number of cells is too high.

+ num_cells = np.multiply(num_rows, num_columns, dtype=np.int32)

+

+ if num_rows > 0 and num_columns > 0 and num_cells <= 0:

+ raise ValueError('Unstacked DataFrame is too big, '

+ 'causing int32 overflow')

+

self._make_sorted_values_labels()

self._make_selectors()

diff --git a/pandas/tests/reshape/test_pivot.py b/pandas/tests/reshape/test_pivot.py

index e32e1999836ec..a2b5eacd873bb 100644

--- a/pandas/tests/reshape/test_pivot.py

+++ b/pandas/tests/reshape/test_pivot.py

@@ -1272,6 +1272,17 @@ def test_pivot_string_func_vs_func(self, f, f_numpy):

aggfunc=f_numpy)

tm.assert_frame_equal(result, expected)

+ @pytest.mark.slow

+ def test_pivot_number_of_levels_larger_than_int32(self):

+ # GH 20601

+ df = DataFrame({'ind1': np.arange(2 ** 16),

+ 'ind2': np.arange(2 ** 16),

+ 'count': 0})

+

+ with pytest.raises(ValueError, match='int32 overflow'):

+ df.pivot_table(index='ind1', columns='ind2',

+ values='count', aggfunc='count')

+

class TestCrosstab(object):

diff --git a/pandas/tests/test_multilevel.py b/pandas/tests/test_multilevel.py

index 6c1a2490ea76e..ce95f0f86ef7b 100644

--- a/pandas/tests/test_multilevel.py

+++ b/pandas/tests/test_multilevel.py

@@ -3,6 +3,7 @@

from warnings import catch_warnings, simplefilter

import datetime

import itertools

+

import pytest

import pytz

@@ -720,6 +721,14 @@ def test_unstack_unobserved_keys(self):

recons = result.stack()

tm.assert_frame_equal(recons, df)

+ @pytest.mark.slow

+ def test_unstack_number_of_levels_larger_than_int32(self):

+ # GH 20601

+ df = DataFrame(np.random.randn(2 ** 16, 2),

+ index=[np.arange(2 ** 16), np.arange(2 ** 16)])

+ with pytest.raises(ValueError, match='int32 overflow'):

+ df.unstack()

+

def test_stack_order_with_unsorted_levels(self):

# GH 16323

|

- [x] closes #20601

- [x] tests added / passed

- [x] passes `git diff upstream/master -u -- "*.py" | flake8 --diff`

- [x] whatsnew entry | https://api.github.com/repos/pandas-dev/pandas/pulls/23512 | 2018-11-05T16:56:10Z | 2018-12-31T13:16:44Z | 2018-12-31T13:16:44Z | 2018-12-31T15:51:55Z | 1,237 | pandas-dev/pandas | 45,175 |

Address #1980 | diff --git a/docs/patterns/packages.rst b/docs/patterns/packages.rst

index af51717d92..1cd7797420 100644

--- a/docs/patterns/packages.rst

+++ b/docs/patterns/packages.rst

@@ -8,9 +8,9 @@ module. That is quite simple. Imagine a small application looks like

this::

/yourapplication

- /yourapplication.py

+ yourapplication.py

/static

- /style.css

+ style.css

/templates

layout.html

index.html

@@ -29,9 +29,9 @@ You should then end up with something like that::

/yourapplication

/yourapplication

- /__init__.py

+ __init__.py

/static

- /style.css

+ style.css

/templates

layout.html

index.html

@@ -41,11 +41,36 @@ You should then end up with something like that::

But how do you run your application now? The naive ``python

yourapplication/__init__.py`` will not work. Let's just say that Python

does not want modules in packages to be the startup file. But that is not

-a big problem, just add a new file called :file:`runserver.py` next to the inner

+a big problem, just add a new file called :file:`setup.py` next to the inner

:file:`yourapplication` folder with the following contents::

- from yourapplication import app

- app.run(debug=True)

+ from setuptools import setup

+

+ setup(

+ name='yourapplication',

+ packages=['yourapplication'],

+ include_package_data=True,

+ install_requires=[

+ 'flask',

+ ],

+ )

+

+In order to run the application you need to export an environment variable

+that tells Flask where to find the application instance::

+

+ export FLASK_APP=yourapplication

+

+If you are outside of the project directory make sure to provide the exact

+path to your application directory. Similiarly you can turn on "debug

+mode" with this environment variable::

+

+ export FLASK_DEBUG=true

+

+In order to install and run the application you need to issue the following

+commands::

+

+ pip install -e .

+ flask run

What did we gain from this? Now we can restructure the application a bit

into multiple modules. The only thing you have to remember is the

@@ -77,12 +102,12 @@ And this is what :file:`views.py` would look like::

You should then end up with something like that::

/yourapplication

- /runserver.py

+ setup.py

/yourapplication

- /__init__.py

- /views.py

+ __init__.py

+ views.py

/static

- /style.css

+ style.css

/templates

layout.html

index.html

| - Large apps play nicer with recommended project structure #1980

- runserver.py --> setup.py

- Run as:

pip install -e.

export FLASK_APP=yourapplication

flask run

I did not change much, one new paragraph.

<!-- Reviewable:start -->

---

This change is [<img src="https://reviewable.io/review_button.svg" height="34" align="absmiddle" alt="Reviewable"/>](https://reviewable.io/reviews/pallets/flask/2021)

<!-- Reviewable:end -->

| https://api.github.com/repos/pallets/flask/pulls/2021 | 2016-09-11T15:38:18Z | 2016-09-11T15:53:35Z | 2016-09-11T15:53:35Z | 2020-11-14T04:33:22Z | 665 | pallets/flask | 20,216 |

Fix superbooga when using regenerate | diff --git a/extensions/superbooga/script.py b/extensions/superbooga/script.py

index 5ef14d9d82..475cf1e061 100644

--- a/extensions/superbooga/script.py

+++ b/extensions/superbooga/script.py

@@ -96,7 +96,8 @@ def apply_settings(chunk_count, chunk_count_initial, time_weight):

def custom_generate_chat_prompt(user_input, state, **kwargs):

global chat_collector

- history = state['history']

+ # get history as being modified when using regenerate.

+ history = kwargs['history']

if state['mode'] == 'instruct':

results = collector.get_sorted(user_input, n_results=params['chunk_count'])

| When superbooga is active and you use regenerate, superbooga uses the history that still includes the output of the last query. This little change fixes it. I do not think that there are other side effects. | https://api.github.com/repos/oobabooga/text-generation-webui/pulls/3362 | 2023-07-30T00:51:21Z | 2023-08-09T02:26:28Z | 2023-08-09T02:26:28Z | 2023-08-09T02:51:18Z | 158 | oobabooga/text-generation-webui | 26,598 |

Fix leaderboard.json file in Hebrew translation, Resolves #3159. | diff --git a/website/public/locales/he/leaderboard.json b/website/public/locales/he/leaderboard.json

index 117c7b2296..fc8f45535a 100644

--- a/website/public/locales/he/leaderboard.json

+++ b/website/public/locales/he/leaderboard.json

@@ -9,6 +9,7 @@

"labels_simple": "תוויות (פשוטות)",

"last_updated_at": "עודכן לאחרונה ב: {{val, datetime}}",

"leaderboard": "לוח ניהול",

+ "level_progress_message": "עם סך נקודות של {{score}}, אתה הגעת לרמה {{level,number,integer}}!",

"month": "חודש",

"monthly": "חודשי",

"next": "הבא",

@@ -19,6 +20,7 @@

"prompt": "קלטים",

"rank": "דרגה",

"rankings": "דירוגים",

+ "reached_max_level": "הגעת לרמה במקסימלית, תודה על העבודה הקשה!",

"replies_assistant": "תשובות כעוזר",

"replies_prompter": "תשובות כמזין",

"reply": "תשובות",

@@ -31,6 +33,7 @@

"view_all": "הצג הכל",

"week": "שבוע",

"weekly": "שבועי",

+ "xp_progress_message": "אתה צריך עוד {{need, number, integer}} נקודות כדי להגיע לרמה הבאה!",

"your_account": "החשבון שלך",

"your_stats": "הסטטיסטיקה שלך"

}

| Added `level_progress_message`, `reached_max_level`, and `xp_progress_message` to the leaderboard.json file in the Hebrew translation. | https://api.github.com/repos/LAION-AI/Open-Assistant/pulls/3160 | 2023-05-14T16:36:40Z | 2023-05-14T18:07:20Z | 2023-05-14T18:07:20Z | 2023-05-14T18:30:37Z | 522 | LAION-AI/Open-Assistant | 36,878 |

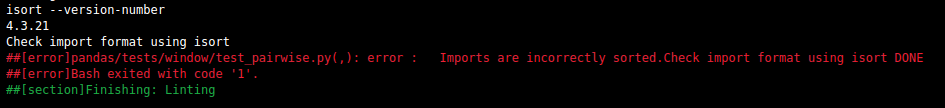

CI: Format isort output for azure | diff --git a/ci/code_checks.sh b/ci/code_checks.sh

index ceb13c52ded9c..cfe55f1e05f71 100755

--- a/ci/code_checks.sh

+++ b/ci/code_checks.sh

@@ -105,7 +105,12 @@ if [[ -z "$CHECK" || "$CHECK" == "lint" ]]; then

# Imports - Check formatting using isort see setup.cfg for settings

MSG='Check import format using isort ' ; echo $MSG

- isort --recursive --check-only pandas asv_bench

+ ISORT_CMD="isort --recursive --check-only pandas asv_bench"

+ if [[ "$GITHUB_ACTIONS" == "true" ]]; then

+ eval $ISORT_CMD | awk '{print "##[error]" $0}'; RET=$(($RET + ${PIPESTATUS[0]}))

+ else

+ eval $ISORT_CMD

+ fi

RET=$(($RET + $?)) ; echo $MSG "DONE"

fi

| - [x] closes #27179

- [x] tests added / passed

- [x] passes `black pandas`

- [x] passes `git diff upstream/master -u -- "*.py" | flake8 --diff`

Current behaviour on master of isort formatting:

Finishing up stale PR @https://github.com/pandas-dev/pandas/pull/27334

| https://api.github.com/repos/pandas-dev/pandas/pulls/29654 | 2019-11-16T02:59:26Z | 2019-12-06T01:10:29Z | 2019-12-06T01:10:28Z | 2019-12-25T20:27:02Z | 230 | pandas-dev/pandas | 45,550 |

Slight refactoring to allow customizing DynamoDB server startup | diff --git a/localstack/services/dynamodb/server.py b/localstack/services/dynamodb/server.py

index 2752e309d1a08..59aff5c8dc57a 100644

--- a/localstack/services/dynamodb/server.py

+++ b/localstack/services/dynamodb/server.py

@@ -100,11 +100,13 @@ def create_dynamodb_server(port=None) -> DynamodbServer:

Creates a dynamodb server from the LocalStack configuration.

"""

port = port or get_free_tcp_port()

+ ddb_data_dir = f"{config.dirs.data}/dynamodb" if config.dirs.data else None

+ return do_create_dynamodb_server(port, ddb_data_dir)

- server = DynamodbServer(port)

- if config.dirs.data:

- ddb_data_dir = "%s/dynamodb" % config.dirs.data

+def do_create_dynamodb_server(port: int, ddb_data_dir: Optional[str]) -> DynamodbServer:

+ server = DynamodbServer(port)

+ if ddb_data_dir:

mkdir(ddb_data_dir)

absolute_path = os.path.abspath(ddb_data_dir)

server.db_path = absolute_path

| DynamoDBLocal can start in [two different modes](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/DynamoDBLocal.UsageNotes.html). If a `dbPath` is set, DynamoDB writes its database files in that directory.

If not, it runs in `inMemory`mode with a transient state (which is what we currently do if persistence is not enabled).

Before, we were interested in having files on disk only when `DATA_DIR` was set. However, now that Pods are independent from persistence, we have a similar need. Therefore, we would just set a temporary directory to dump the database files.

As a future optimization we can investigate whether is possible to run start DynamoDBLocal `inMemory` and only ask for its content on the fly.

@whummer this is basically what we briefly discussed before. Could there be any implications that I do not see? | https://api.github.com/repos/localstack/localstack/pulls/6109 | 2022-05-20T16:00:59Z | 2022-05-21T15:29:05Z | 2022-05-21T15:29:05Z | 2022-05-21T15:29:22Z | 256 | localstack/localstack | 28,639 |

cli[patch]: copyright 2024 default | diff --git a/libs/cli/langchain_cli/integration_template/LICENSE b/libs/cli/langchain_cli/integration_template/LICENSE

index 426b65090341f3..fc0602feecdd67 100644

--- a/libs/cli/langchain_cli/integration_template/LICENSE

+++ b/libs/cli/langchain_cli/integration_template/LICENSE

@@ -1,6 +1,6 @@

MIT License

-Copyright (c) 2023 LangChain, Inc.

+Copyright (c) 2024 LangChain, Inc.

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

diff --git a/libs/cli/langchain_cli/package_template/LICENSE b/libs/cli/langchain_cli/package_template/LICENSE

index 426b65090341f3..fc0602feecdd67 100644

--- a/libs/cli/langchain_cli/package_template/LICENSE

+++ b/libs/cli/langchain_cli/package_template/LICENSE

@@ -1,6 +1,6 @@

MIT License

-Copyright (c) 2023 LangChain, Inc.

+Copyright (c) 2024 LangChain, Inc.

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

diff --git a/templates/retrieval-agent-fireworks/LICENSE b/templates/retrieval-agent-fireworks/LICENSE

index 426b65090341f3..fc0602feecdd67 100644

--- a/templates/retrieval-agent-fireworks/LICENSE

+++ b/templates/retrieval-agent-fireworks/LICENSE

@@ -1,6 +1,6 @@

MIT License

-Copyright (c) 2023 LangChain, Inc.

+Copyright (c) 2024 LangChain, Inc.

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

| https://api.github.com/repos/langchain-ai/langchain/pulls/17204 | 2024-02-07T22:51:51Z | 2024-02-07T22:52:37Z | 2024-02-07T22:52:37Z | 2024-02-07T22:56:26Z | 413 | langchain-ai/langchain | 42,832 |

|

Fix race condition when running mssql tests | diff --git a/scripts/ci/docker-compose/backend-mssql.yml b/scripts/ci/docker-compose/backend-mssql.yml

index 69b4aa5fb6e5a..7fc5540e467a0 100644

--- a/scripts/ci/docker-compose/backend-mssql.yml

+++ b/scripts/ci/docker-compose/backend-mssql.yml

@@ -49,6 +49,12 @@ services:

entrypoint:

- bash

- -c

- - opt/mssql-tools/bin/sqlcmd -S mssql -U sa -P Airflow123 -i /mssql_create_airflow_db.sql || true

+ - >

+ for i in {1..10};

+ do

+ /opt/mssql-tools/bin/sqlcmd -S mssql -U sa -P Airflow123 -i /mssql_create_airflow_db.sql &&

+ exit 0;

+ sleep 1;

+ done

volumes:

- ./mssql_create_airflow_db.sql:/mssql_create_airflow_db.sql:ro

| There is a race condition where initialization of Airlfow DB

for mssql might be executed when the server is started but it is

not yet initialized with a model db needed to create airflow db.

In such case mssql database intialization will fail as it will

not be able to obtain a locl on the `model` database. The error

in the mssqlsetup container will be similar to:

```

Msg 1807, Level 16, State 3, Server d2888dd467fe, Line 20

Could not obtain exclusive lock on database 'model'. Retry the operation later.

Msg 1802, Level 16, State 4, Server d2888dd467fe, Line 20

CREATE DATABASE failed. Some file names listed could not be created. Check related errors.

Msg 5011, Level 14, State 5, Server d2888dd467fe, Line 21

User does not have permission to alter database 'airflow', the database does not exist, or the database is not in a state that allows access checks.

Msg 5069, Level 16, State 1, Server d2888dd467fe, Line 21

ALTER DATABASE statement failed.

```

This PR alters the setup job to try to create airflow db

several times and wait a second before every retry.

<!--

Thank you for contributing! Please make sure that your code changes

are covered with tests. And in case of new features or big changes

remember to adjust the documentation.

Feel free to ping committers for the review!

In case of existing issue, reference it using one of the following:

closes: #ISSUE

related: #ISSUE

How to write a good git commit message:

http://chris.beams.io/posts/git-commit/

-->

---

**^ Add meaningful description above**

Read the **[Pull Request Guidelines](https://github.com/apache/airflow/blob/main/CONTRIBUTING.rst#pull-request-guidelines)** for more information.

In case of fundamental code change, Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed.

In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x).

In case of backwards incompatible changes please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/main/UPDATING.md).

| https://api.github.com/repos/apache/airflow/pulls/19863 | 2021-11-28T16:59:25Z | 2021-11-29T11:13:41Z | 2021-11-29T11:13:41Z | 2022-07-29T20:10:11Z | 225 | apache/airflow | 14,261 |

De-Multiprocess Convert | diff --git a/plugins/convert/writer/opencv.py b/plugins/convert/writer/opencv.py

index cabd0ab652..17cafdc591 100644

--- a/plugins/convert/writer/opencv.py

+++ b/plugins/convert/writer/opencv.py

@@ -47,6 +47,7 @@ def write(self, filename, image):

logger.error("Failed to save image '%s'. Original Error: %s", filename, err)

def pre_encode(self, image):

+ """ Pre_encode the image in lib/convert.py threads as it is a LOT quicker """

logger.trace("Pre-encoding image")

image = cv2.imencode(self.extension, image, self.args)[1] # pylint: disable=no-member

return image

diff --git a/plugins/convert/writer/pillow.py b/plugins/convert/writer/pillow.py

index e207183c22..ef440f2b6c 100644

--- a/plugins/convert/writer/pillow.py

+++ b/plugins/convert/writer/pillow.py

@@ -50,6 +50,7 @@ def write(self, filename, image):

logger.error("Failed to save image '%s'. Original Error: %s", filename, err)

def pre_encode(self, image):

+ """ Pre_encode the image in lib/convert.py threads as it is a LOT quicker """

logger.trace("Pre-encoding image")

fmt = self.format_dict.get(self.config["format"], None)

fmt = self.config["format"].upper() if fmt is None else fmt

diff --git a/scripts/convert.py b/scripts/convert.py

index bd3a1632cf..cf51e73902 100644

--- a/scripts/convert.py

+++ b/scripts/convert.py

@@ -6,6 +6,7 @@

import os

import sys

from threading import Event

+from time import sleep

from cv2 import imwrite # pylint:disable=no-name-in-module

import numpy as np

@@ -16,8 +17,8 @@

from lib.convert import Converter

from lib.faces_detect import DetectedFace

from lib.gpu_stats import GPUStats

-from lib.multithreading import MultiThread, PoolProcess, total_cpus

-from lib.queue_manager import queue_manager, QueueEmpty

+from lib.multithreading import MultiThread, total_cpus

+from lib.queue_manager import queue_manager

from lib.utils import FaceswapError, get_folder, get_image_paths, hash_image_file

from plugins.extract.pipeline import Extractor

from plugins.plugin_loader import PluginLoader

@@ -32,6 +33,7 @@ def __init__(self, arguments):

self.args = arguments

Utils.set_verbosity(self.args.loglevel)

+ self.patch_threads = None

self.images = Images(self.args)

self.validate()

self.alignments = Alignments(self.args, False, self.images.is_video)

@@ -83,9 +85,8 @@ def validate(self):

if (self.args.writer == "ffmpeg" and

not self.images.is_video and

self.args.reference_video is None):

- logger.error("Output as video selected, but using frames as input. You must provide a "

- "reference video ('-ref', '--reference-video').")

- exit(1)

+ raise FaceswapError("Output as video selected, but using frames as input. You must "

+ "provide a reference video ('-ref', '--reference-video').")

output_dir = get_folder(self.args.output_dir)

logger.info("Output Directory: %s", output_dir)

@@ -93,7 +94,7 @@ def add_queues(self):

""" Add the queues for convert """

logger.debug("Adding queues. Queue size: %s", self.queue_size)

for qname in ("convert_in", "convert_out", "patch"):

- queue_manager.add_queue(qname, self.queue_size)

+ queue_manager.add_queue(qname, self.queue_size, multiprocessing_queue=False)

def process(self):

""" Process the conversion """

@@ -121,27 +122,17 @@ def convert_images(self):

logger.debug("Converting images")

save_queue = queue_manager.get_queue("convert_out")

patch_queue = queue_manager.get_queue("patch")

- completion_queue = queue_manager.get_queue("patch_completed")

- pool = PoolProcess(self.converter.process, patch_queue, save_queue,

- completion_queue=completion_queue,

- processes=self.pool_processes)

- pool.start()

- completed_count = 0

+ self.patch_threads = MultiThread(self.converter.process, patch_queue, save_queue,

+ thread_count=self.pool_processes, name="patch")

+

+ self.patch_threads.start()

while True:

self.check_thread_error()

if self.disk_io.completion_event.is_set():

logger.debug("DiskIO completion event set. Joining Pool")

break

- try:

- completed = completion_queue.get(True, 1)

- except QueueEmpty:

- continue

- completed_count += completed

- logger.debug("Total process pools completed: %s of %s", completed_count, pool.procs)

- if completed_count == pool.procs:

- logger.debug("All processes completed. Joining Pool")

- break

- pool.join()

+ sleep(1)

+ self.patch_threads.join()

logger.debug("Putting EOF")

save_queue.put("EOF")

@@ -149,7 +140,10 @@ def convert_images(self):

def check_thread_error(self):

""" Check and raise thread errors """

- for thread in (self.predictor.thread, self.disk_io.load_thread, self.disk_io.save_thread):

+ for thread in (self.predictor.thread,

+ self.disk_io.load_thread,

+ self.disk_io.save_thread,

+ self.patch_threads):

thread.check_and_raise_error()

@@ -238,15 +232,13 @@ def get_frame_ranges(self):

logger.debug("minframe: %s, maxframe: %s", minframe, maxframe)

if minframe is None or maxframe is None:

- logger.error("Frame Ranges specified, but could not determine frame numbering "

- "from filenames")

- exit(1)

+ raise FaceswapError("Frame Ranges specified, but could not determine frame numbering "

+ "from filenames")

retval = list()

for rng in self.args.frame_ranges:

if "-" not in rng:

- logger.error("Frame Ranges not specified in the correct format")

- exit(1)

+ raise FaceswapError("Frame Ranges not specified in the correct format")

start, end = rng.split("-")

retval.append((max(int(start), minframe), min(int(end), maxframe)))

logger.debug("frame ranges: %s", retval)

@@ -289,7 +281,9 @@ def add_queue(self, task):

q_name = "convert_out"

else:

q_name = task

- setattr(self, "{}_queue".format(task), queue_manager.get_queue(q_name))

+ setattr(self,

+ "{}_queue".format(task),

+ queue_manager.get_queue(q_name, multiprocessing_queue=False))

logger.debug("Added queue for task: '%s'", task)

def start_thread(self, task):

@@ -312,7 +306,7 @@ def load(self, *args): # pylint: disable=unused-argument

if self.load_queue.shutdown.is_set():

logger.debug("Load Queue: Stop signal received. Terminating")

break

- if image is None or (not image.any() and image.ndim not in ((2, 3))):

+ if image is None or (not image.any() and image.ndim not in (2, 3)):

# All black frames will return not np.any() so check dims too

logger.warning("Unable to open image. Skipping: '%s'", filename)

continue

@@ -488,8 +482,7 @@ def load_model(self):

logger.debug("Loading Model")

model_dir = get_folder(self.args.model_dir, make_folder=False)

if not model_dir:

- logger.error("%s does not exist.", self.args.model_dir)

- exit(1)

+ raise FaceswapError("{} does not exist.".format(self.args.model_dir))

trainer = self.get_trainer(model_dir)

gpus = 1 if not hasattr(self.args, "gpus") else self.args.gpus

model = PluginLoader.get_model(trainer)(model_dir, gpus, predict=True)

@@ -505,9 +498,9 @@ def get_trainer(self, model_dir):

statefile = [fname for fname in os.listdir(str(model_dir))

if fname.endswith("_state.json")]

if len(statefile) != 1:

- logger.error("There should be 1 state file in your model folder. %s were found. "

- "Specify a trainer with the '-t', '--trainer' option.", len(statefile))

- exit(1)

+ raise FaceswapError("There should be 1 state file in your model folder. {} were "

+ "found. Specify a trainer with the '-t', '--trainer' "

+ "option.".format(len(statefile)))

statefile = os.path.join(str(model_dir), statefile[0])

with open(statefile, "rb") as inp:

@@ -515,9 +508,8 @@ def get_trainer(self, model_dir):

trainer = state.get("name", None)

if not trainer:

- logger.error("Trainer name could not be read from state file. "

- "Specify a trainer with the '-t', '--trainer' option.")

- exit(1)

+ raise FaceswapError("Trainer name could not be read from state file. "

+ "Specify a trainer with the '-t', '--trainer' option.")

logger.debug("Trainer from state file: '%s'", trainer)

return trainer

@@ -702,9 +694,8 @@ def get_face_hashes(self):

face_hashes.append(hash_image_file(face))

logger.debug("Face Hashes: %s", (len(face_hashes)))

if not face_hashes:

- logger.error("Aligned directory is empty, no faces will be converted!")

- exit(1)

- elif len(face_hashes) <= len(self.input_images) / 3:

+ raise FaceswapError("Aligned directory is empty, no faces will be converted!")

+ if len(face_hashes) <= len(self.input_images) / 3:

logger.warning("Aligned directory contains far fewer images than the input "

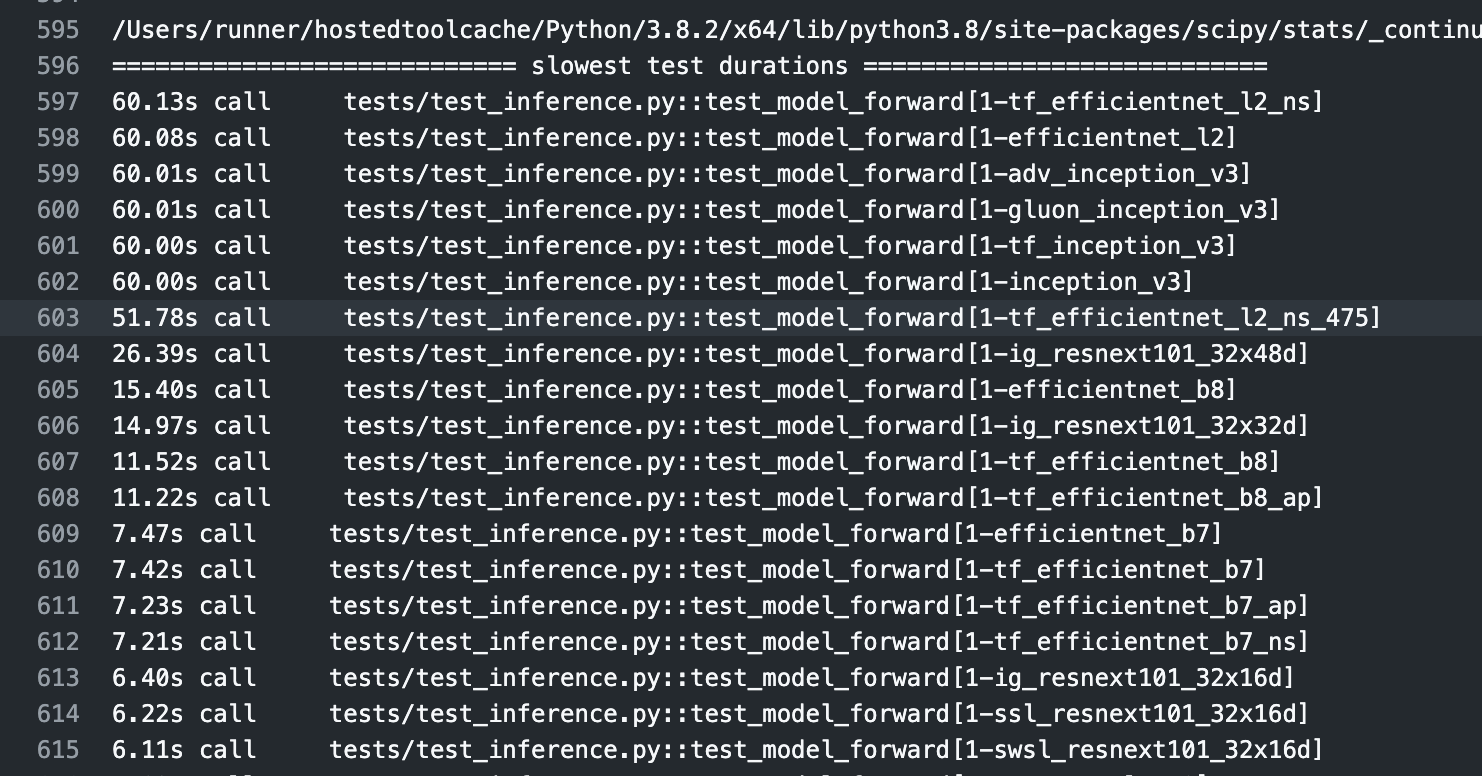

"directory, are you sure this is the right folder?")

return face_hashes

| OpenCV and Numpy release the GIL, so it is actually faster, in many cases, to use threading rather than Multiprocess. This has the added bonus of simplifying the code.

In tests, this PR improves conversion speeds by up to 30% for OpenCV writer and up to 40% for FFMPEG writer. Other writers remain unchanged.

```

|WRITER | MP | THREADS | DIFF |

|-------|-------|---------|------|

|OpenCV | 45 | 32 | 29% |

|FFMPEG | 49 | 29 | 41% |

|Pillow | 47.5 | 47.5 | 0% |

Time in seconds for 550 images

Tested on 8 cores. Average over 3 runs

``` | https://api.github.com/repos/deepfakes/faceswap/pulls/857 | 2019-09-02T21:35:54Z | 2019-09-02T21:55:37Z | 2019-09-02T21:55:37Z | 2019-09-02T21:55:37Z | 2,281 | deepfakes/faceswap | 18,826 |

Control ds_report output | diff --git a/bin/ds_report b/bin/ds_report

index 4a31a75c2eab..e6f7b50a78b2 100755

--- a/bin/ds_report

+++ b/bin/ds_report

@@ -1,6 +1,6 @@

#!/usr/bin/env python3

-from deepspeed.env_report import main

+from deepspeed.env_report import cli_main

if __name__ == '__main__':

- main()

+ cli_main()

diff --git a/deepspeed/env_report.py b/deepspeed/env_report.py

index b14ac4464835..5ede10a678c4 100644

--- a/deepspeed/env_report.py

+++ b/deepspeed/env_report.py

@@ -1,6 +1,7 @@

import torch

import deepspeed

import subprocess

+import argparse

from .ops.op_builder import ALL_OPS

from .git_version_info import installed_ops, torch_info

from .ops import __compatible_ops__ as compatible_ops

@@ -20,7 +21,7 @@

warning = f"{YELLOW}[WARNING]{END}"

-def op_report():

+def op_report(verbose=True):

max_dots = 23

max_dots2 = 11

h = ["op name", "installed", "compatible"]

@@ -43,7 +44,7 @@ def op_report():

no = f"{YELLOW}[NO]{END}"

for op_name, builder in ALL_OPS.items():

dots = "." * (max_dots - len(op_name))

- is_compatible = OKAY if builder.is_compatible() else no

+ is_compatible = OKAY if builder.is_compatible(verbose) else no

is_installed = installed if installed_ops[op_name] else no

dots2 = '.' * ((len(h[1]) + (max_dots2 - len(h[1]))) -

(len(is_installed) - color_len))

@@ -100,10 +101,32 @@ def debug_report():

print(name, "." * (max_dots - len(name)), value)

-def main():

- op_report()

+def parse_arguments():

+ parser = argparse.ArgumentParser()

+ parser.add_argument(

+ '--hide_operator_status',

+ action='store_true',

+ help=

+ 'Suppress display of installation and compatiblity statuses of DeepSpeed operators. '

+ )

+ parser.add_argument('--hide_errors_and_warnings',

+ action='store_true',

+ help='Suppress warning and error messages.')

+ args = parser.parse_args()

+ return args

+

+

+def main(hide_operator_status=False, hide_errors_and_warnings=False):

+ if not hide_operator_status:

+ op_report(verbose=not hide_errors_and_warnings)

debug_report()

+def cli_main():

+ args = parse_arguments()

+ main(hide_operator_status=args.hide_operator_status,

+ hide_errors_and_warnings=args.hide_errors_and_warnings)

+

+

if __name__ == "__main__":

main()

diff --git a/op_builder/async_io.py b/op_builder/async_io.py

index 3860f3376106..aec7911ce96b 100644

--- a/op_builder/async_io.py

+++ b/op_builder/async_io.py

@@ -84,14 +84,14 @@ def check_for_libaio_pkg(self):

break

return found

- def is_compatible(self):

+ def is_compatible(self, verbose=True):

# Check for the existence of libaio by using distutils

# to compile and link a test program that calls io_submit,

# which is a function provided by libaio that is used in the async_io op.

# If needed, one can define -I and -L entries in CFLAGS and LDFLAGS

# respectively to specify the directories for libaio.h and libaio.so.

aio_compatible = self.has_function('io_submit', ('aio', ))

- if not aio_compatible:

+ if verbose and not aio_compatible:

self.warning(

f"{self.NAME} requires the dev libaio .so object and headers but these were not found."

)

@@ -103,4 +103,4 @@ def is_compatible(self):

self.warning(

"If libaio is already installed (perhaps from source), try setting the CFLAGS and LDFLAGS environment variables to where it can be found."

)

- return super().is_compatible() and aio_compatible

+ return super().is_compatible(verbose) and aio_compatible

diff --git a/op_builder/builder.py b/op_builder/builder.py

index 5b0da34a3456..1a98e8d7b110 100644

--- a/op_builder/builder.py

+++ b/op_builder/builder.py

@@ -153,7 +153,7 @@ def cxx_args(self):

'''

return []

- def is_compatible(self):

+ def is_compatible(self, verbose=True):

'''

Check if all non-python dependencies are satisfied to build this op

'''

@@ -370,7 +370,7 @@ def load(self, verbose=True):

return self.jit_load(verbose)

def jit_load(self, verbose=True):

- if not self.is_compatible():

+ if not self.is_compatible(verbose):

raise RuntimeError(

f"Unable to JIT load the {self.name} op due to it not being compatible due to hardware/software issue."

)

@@ -482,8 +482,8 @@ def version_dependent_macros(self):

version_ge_1_5 = ['-DVERSION_GE_1_5']

return version_ge_1_1 + version_ge_1_3 + version_ge_1_5

- def is_compatible(self):

- return super().is_compatible()

+ def is_compatible(self, verbose=True):

+ return super().is_compatible(verbose)

def builder(self):

from torch.utils.cpp_extension import CUDAExtension

diff --git a/op_builder/cpu_adagrad.py b/op_builder/cpu_adagrad.py

index 6558477c094d..68fc78583960 100644

--- a/op_builder/cpu_adagrad.py

+++ b/op_builder/cpu_adagrad.py

@@ -14,7 +14,7 @@ class CPUAdagradBuilder(CUDAOpBuilder):

def __init__(self):

super().__init__(name=self.NAME)

- def is_compatible(self):

+ def is_compatible(self, verbose=True):

# Disable on Windows.

return sys.platform != "win32"

diff --git a/op_builder/cpu_adam.py b/op_builder/cpu_adam.py

index 640e244aad4c..8a58756d1fcd 100644

--- a/op_builder/cpu_adam.py

+++ b/op_builder/cpu_adam.py

@@ -14,7 +14,7 @@ class CPUAdamBuilder(CUDAOpBuilder):

def __init__(self):

super().__init__(name=self.NAME)

- def is_compatible(self):

+ def is_compatible(self, verbose=True):

# Disable on Windows.

return sys.platform != "win32"

diff --git a/op_builder/sparse_attn.py b/op_builder/sparse_attn.py

index 6f30cc84da23..a4acff6cd767 100644

--- a/op_builder/sparse_attn.py

+++ b/op_builder/sparse_attn.py

@@ -21,7 +21,7 @@ def sources(self):

def cxx_args(self):

return ['-O2', '-fopenmp']

- def is_compatible(self):

+ def is_compatible(self, verbose=True):

# Check to see if llvm and cmake are installed since they are dependencies

#required_commands = ['llvm-config|llvm-config-9', 'cmake']

#command_status = list(map(self.command_exists, required_commands))

@@ -52,4 +52,4 @@ def is_compatible(self):

f'{self.NAME} requires a torch version >= 1.5 but detected {TORCH_MAJOR}.{TORCH_MINOR}'

)

- return super().is_compatible() and torch_compatible and cuda_compatible

+ return super().is_compatible(verbose) and torch_compatible and cuda_compatible

| Add two flags to help control ds_report out:

1. --hide_operator_status

2. --hide_errors_and_warnings

Separate cli and function entry points into ds_report

Should fix #1541. | https://api.github.com/repos/microsoft/DeepSpeed/pulls/1622 | 2021-12-08T20:10:25Z | 2021-12-09T00:29:13Z | 2021-12-09T00:29:13Z | 2023-07-07T02:40:01Z | 1,798 | microsoft/DeepSpeed | 10,188 |

[Bandcamp] Added track number for metadata (fixes issue #17266) | diff --git a/youtube_dl/extractor/bandcamp.py b/youtube_dl/extractor/bandcamp.py

index b8514734d57..bc4c5165af7 100644

--- a/youtube_dl/extractor/bandcamp.py

+++ b/youtube_dl/extractor/bandcamp.py

@@ -44,6 +44,17 @@ class BandcampIE(InfoExtractor):

'title': 'Ben Prunty - Lanius (Battle)',

'uploader': 'Ben Prunty',

},

+ }, {

+ 'url': 'https://relapsealumni.bandcamp.com/track/hail-to-fire',

+ 'info_dict': {

+ 'id': '2584466013',

+ 'ext': 'mp3',

+ 'title': 'Hail to Fire',

+ 'track_number': 5,

+ },

+ 'params': {

+ 'skip_download': True,

+ },

}]

def _real_extract(self, url):

@@ -82,6 +93,7 @@ def _real_extract(self, url):

'thumbnail': thumbnail,

'formats': formats,

'duration': float_or_none(data.get('duration')),

+ 'track_number': int_or_none(data.get('track_num')),

}

else:

raise ExtractorError('No free songs found')

| ## Please follow the guide below

- You will be asked some questions, please read them **carefully** and answer honestly

- Put an `x` into all the boxes [ ] relevant to your *pull request* (like that [x])

- Use *Preview* tab to see how your *pull request* will actually look like

---

### Before submitting a *pull request* make sure you have:

- [x] At least skimmed through [adding new extractor tutorial](https://github.com/rg3/youtube-dl#adding-support-for-a-new-site) and [youtube-dl coding conventions](https://github.com/rg3/youtube-dl#youtube-dl-coding-conventions) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?q=is%3Apr&type=Issues) the bugtracker for similar pull requests

- [x] Checked the code with [flake8](https://pypi.python.org/pypi/flake8)

### In order to be accepted and merged into youtube-dl each piece of code must be in public domain or released under [Unlicense](http://unlicense.org/). Check one of the following options:

- [x] I am the original author of this code and I am willing to release it under [Unlicense](http://unlicense.org/)

- [ ] I am not the original author of this code but it is in public domain or released under [Unlicense](http://unlicense.org/) (provide reliable evidence)

### What is the purpose of your *pull request*?

- [ ] Bug fix

- [x] Improvement

- [ ] New extractor

- [ ] New feature

---

Updated the extractor for Bandcamp.com to also include the track number as part of the metadata - issue #17266.

Cheers and thank you!

Parmjit V. | https://api.github.com/repos/ytdl-org/youtube-dl/pulls/17366 | 2018-08-27T22:03:56Z | 2018-08-30T19:32:36Z | 2018-08-30T19:32:36Z | 2018-08-30T21:52:41Z | 296 | ytdl-org/youtube-dl | 50,093 |

Fix an exception when auto-casting dicts to fp16 | diff --git a/deepspeed/runtime/engine.py b/deepspeed/runtime/engine.py

index 1fd7d7e964e8..59e1b9030431 100644

--- a/deepspeed/runtime/engine.py

+++ b/deepspeed/runtime/engine.py

@@ -1694,7 +1694,7 @@ def _cast_inputs_half(self, inputs):

return inputs.__class__(new_inputs)

elif isinstance(inputs, dict):

new_inputs = {}

- for k, v in inputs:

+ for k, v in inputs.items():

new_inputs[k] = self._cast_inputs_half(v)

return new_inputs

elif hasattr(inputs, 'half'):

| Deepspeed raises an exception when using fp16 auto_cast mode with a model that takes a dictionary as input. Quick test on `0.7.3` to display what I'm talking about:

```

$ cat test.py

import torch

import deepspeed

import argparse

parser = argparse.ArgumentParser(description='Test model')

parser.add_argument('--local_rank', type=int, default=-1,

help='local rank passed from distributed launcher')

parser = deepspeed.add_config_arguments(parser)

cmd_args = parser.parse_args()

class ValueDoubler(torch.nn.Module):

def forward(self, d):

return d["value"] * 2

m = ValueDoubler()

m, _, _, _ = deepspeed.initialize(args=cmd_args, model=m)

print("Torch: ", torch.__version__)

print("DeepSpeed: ", deepspeed.__version__)

print("New value: ", m({"value": 1.0}))

$ cat ds.json

{"fp16": {"auto_cast": true, "enabled": true}, "train_batch_size": 1}

$ deepspeed test.py --deepspeed --deepspeed_config ds.json

...

Torch: 1.12.1+cu102

DeepSpeed: 0.7.3

Traceback (most recent call last):

File "test.py", line 20, in <module>

print(m({"value": 1.0}))

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl

return forward_call(*input, **kwargs)

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/deepspeed/utils/nvtx.py", line 11, in wrapped_fn

return func(*args, **kwargs)

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/deepspeed/runtime/engine.py", line 1664, in forward

inputs = self._cast_inputs_half(inputs)

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/deepspeed/runtime/engine.py", line 1693, in _cast_inputs_half

new_inputs.append(self._cast_inputs_half(v))

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/deepspeed/runtime/engine.py", line 1697, in _cast_inputs_half

for k, v in inputs:

ValueError: too many values to unpack (expected 2)

```

And testing on master (I had to fuss it a little to get master to work):

```

$ deepspeed test.py --deepspeed --deepspeed_config ds.json

...

Torch: 1.12.1+cu102

DeepSpeed: 0.7.4+eed4032

Traceback (most recent call last):

File "test.py", line 20, in <module>

print(m({"value": 1.0}))

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1130, in _call_impl

return forward_call(*input, **kwargs)

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/deepspeed/utils/nvtx.py", line 11, in wrapped_fn

return func(*args, **kwargs)

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/deepspeed/runtime/engine.py", line 1664, in forward

inputs = self._cast_inputs_half(inputs)

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/deepspeed/runtime/engine.py", line 1693, in _cast_inputs_half

new_inputs.append(self._cast_inputs_half(v))

File "/data/home/mattks/.local/share/virtualenvs/test-t-h-loqS/lib/python3.8/site-packages/deepspeed/runtime/engine.py", line 1697, in _cast_inputs_half

for k, v in inputs:

ValueError: too many values to unpack (expected 2)

```

This PR fixes this:

```

$ deepspeed test.py --deepspeed --deepspeed_config ds.json

Torch: 1.12.1+cu102