Upload 47 files

- backups/db_config_0.json +129 -0

- backups/db_config_17.json +129 -0

- bucket_counts.json +11 -0

- db_config.json +129 -0

- logging/dreambooth/events.out.tfevents.1711643279.cristianpamu.1044.0 +3 -0

- logging/dreambooth/events.out.tfevents.1711790062.cristianpamu.23572.0 +3 -0

- logging/dreambooth/events.out.tfevents.1711790661.cristianpamu.23572.1 +3 -0

- logging/dreambooth/events.out.tfevents.1711793198.cristianpamu.1684.0 +3 -0

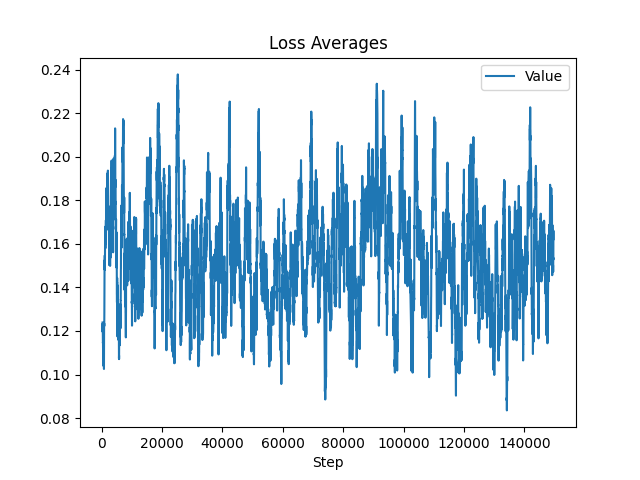

- logging/loss_plot_0.png +0 -0

- logging/loss_plot_17.png +0 -0

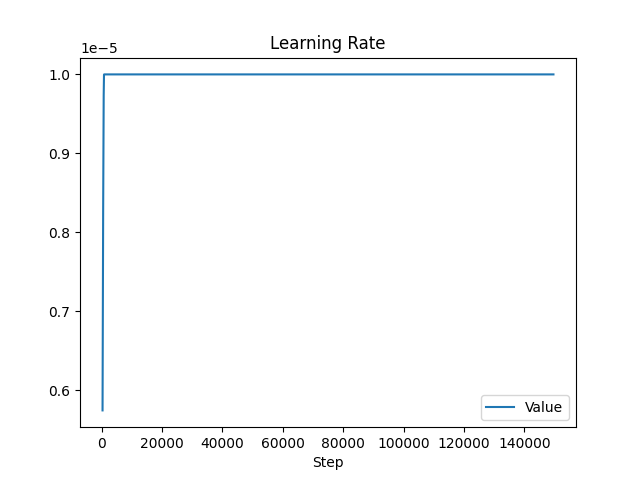

- logging/lr_plot_0.png +0 -0

- logging/lr_plot_17.png +0 -0

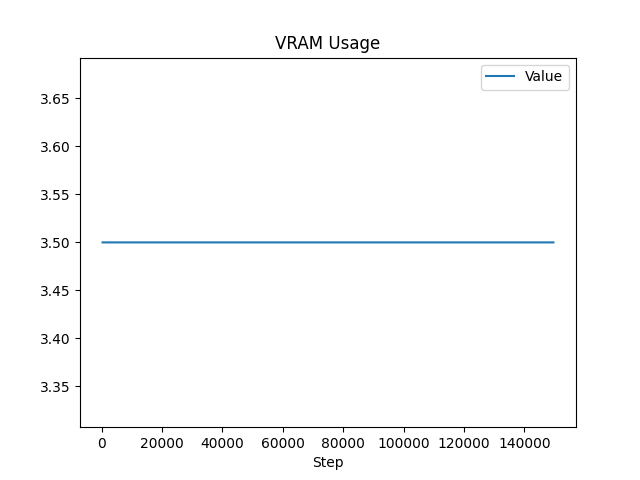

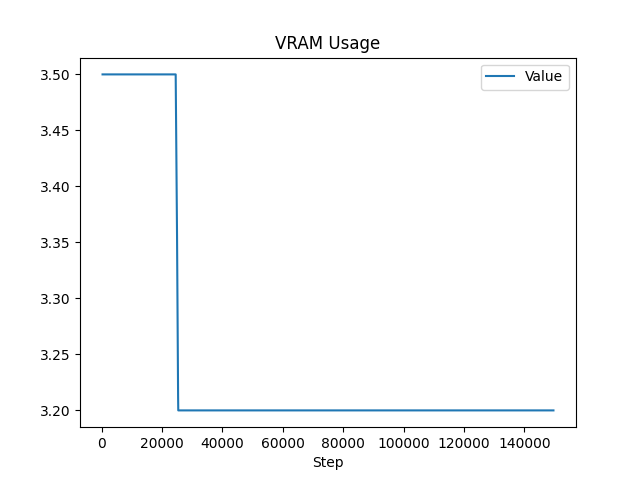

- logging/ram_plot_0.png +0 -0

- logging/ram_plot_17.png +0 -0

- model_28_mar.yaml +68 -0

- samples/sample_100017-0.png +0 -0

- samples/sample_100017-0.txt +1 -0

- samples/sample_125017-0.png +0 -0

- samples/sample_125017-0.txt +1 -0

- samples/sample_150000-0.png +0 -0

- samples/sample_150000-0.txt +1 -0

- samples/sample_150017-0.png +0 -0

- samples/sample_150017-0.txt +1 -0

- samples/sample_17-0.png +0 -0

- samples/sample_17-0.txt +1 -0

- samples/sample_25017-0.png +0 -0

- samples/sample_25017-0.txt +1 -0

- samples/sample_50017-0.png +0 -0

- samples/sample_50017-0.txt +1 -0

- samples/sample_75017-0.png +0 -0

- samples/sample_75017-0.txt +1 -0

- token_counts.json +5 -0

- working/feature_extractor/preprocessor_config.json +28 -0

- working/model_index.json +38 -0

- working/safety_checker/config.json +168 -0

- working/safety_checker/pytorch_model.bin +3 -0

- working/scheduler/scheduler_config.json +15 -0

- working/text_encoder/config.json +25 -0

- working/text_encoder/pytorch_model.bin +3 -0

- working/tokenizer/merges.txt +0 -0

- working/tokenizer/special_tokens_map.json +24 -0

- working/tokenizer/tokenizer_config.json +33 -0

- working/tokenizer/vocab.json +0 -0

- working/unet/config.json +68 -0

- working/unet/diffusion_pytorch_model.bin +3 -0

- working/vae/config.json +32 -0

- working/vae/diffusion_pytorch_model.bin +3 -0

backups/db_config_0.json

ADDED

@@ -0,0 +1,129 @@

```json
{
    "weight_decay": 0.01,
    "attention": "default",
    "cache_latents": true,
    "clip_skip": 2,
    "concepts_list": [
        {
            "class_data_dir": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar\\classifiers_0",
            "class_guidance_scale": 7.5,
            "class_infer_steps": 40,
            "class_negative_prompt": "",
            "class_prompt": "[filewords]",
            "class_token": "movie",
            "instance_data_dir": "C:\\ProyectoDL\\data\\test_5k",
            "instance_prompt": "",
            "instance_token": "ohwx",
            "is_valid": true,
            "n_save_sample": 1,
            "num_class_images_per": 0,
            "sample_seed": -1,
            "save_guidance_scale": 7.5,
            "save_infer_steps": 20,
            "save_sample_negative_prompt": "",
            "save_sample_prompt": "[filewords]",
            "save_sample_template": ""
        }
    ],
    "concepts_path": "",
    "custom_model_name": "",
    "deterministic": false,
    "disable_class_matching": false,
    "disable_logging": false,
    "ema_predict": false,
    "epoch": 0,
    "epoch_pause_frequency": 0,
    "epoch_pause_time": 0,
    "freeze_clip_normalization": false,
    "full_mixed_precision": true,
    "gradient_accumulation_steps": 1,
    "gradient_checkpointing": true,
    "gradient_set_to_none": true,
    "graph_smoothing": 50,
    "half_model": false,
    "has_ema": false,
    "hflip": false,
    "infer_ema": false,
    "initial_revision": 0,
    "input_pertubation": true,
    "learning_rate": 2e-06,
    "learning_rate_min": 1e-06,
    "lifetime_revision": 0,
    "lora_learning_rate": 0.0001,
    "lora_model_name": "",
    "lora_txt_learning_rate": 5e-05,
    "lora_txt_rank": 4,
    "lora_unet_rank": 4,
    "lora_weight": 0.8,
    "lora_use_buggy_requires_grad": false,
    "lr_cycles": 1,
    "lr_factor": 0.5,
    "lr_power": 1,
    "lr_scale_pos": 0.5,
    "lr_scheduler": "constant_with_warmup",
    "lr_warmup_steps": 500,
    "max_token_length": 75,
    "min_snr_gamma": 0,
    "use_dream": false,
    "dream_detail_preservation": 0.5,
    "freeze_spectral_norm": false,
    "mixed_precision": "bf16",
    "model_dir": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar",
    "model_name": "model_28_mar",
    "model_path": "model_28_mar",
    "model_type": "v2x-512",
    "noise_scheduler": "DDPM",
    "num_train_epochs": 100,
    "offset_noise": 0,
    "optimizer": "8bit AdamW",
    "pad_tokens": true,
    "pretrained_model_name_or_path": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar\\working",
    "pretrained_vae_name_or_path": null,
    "prior_loss_scale": false,
    "prior_loss_target": 100.0,
    "prior_loss_weight": 0.75,
    "prior_loss_weight_min": 0.1,
    "resolution": 512,
    "revision": 0,
    "sample_batch_size": 1,
    "sanity_prompt": "",
    "sanity_seed": 420420.0,
    "save_ckpt_after": true,
    "save_ckpt_cancel": false,
    "save_ckpt_during": false,
    "save_ema": true,
    "save_embedding_every": 25,
    "save_lora_after": true,
    "save_lora_cancel": false,
    "save_lora_during": false,
    "save_lora_for_extra_net": false,
    "save_preview_every": 5,
    "save_safetensors": true,
    "save_state_after": false,
    "save_state_cancel": false,
    "save_state_during": false,
    "scheduler": "DEISMultistep",
    "shared_diffusers_path": "",
    "shuffle_tags": true,
    "snapshot": "",
    "split_loss": true,
    "src": "realisticVisionV51_v51VAE",
    "stop_text_encoder": 1,
    "strict_tokens": false,
    "dynamic_img_norm": false,
    "tenc_weight_decay": 0.01,
    "tenc_grad_clip_norm": 0,
    "tomesd": 0,
    "train_batch_size": 1,
    "train_imagic": false,
    "train_unet": true,
    "train_unfrozen": true,
    "txt_learning_rate": 1e-06,
    "use_concepts": false,
    "use_ema": false,
    "use_lora": false,
    "use_lora_extended": false,
    "use_shared_src": false,
    "use_subdir": true,
    "v2": false
}
```
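The `lr_scheduler`, `lr_warmup_steps` and `learning_rate` fields above describe a constant-with-warmup schedule. A minimal sketch of the learning rate that combination implies, assuming the usual rule of a linear ramp from 0 to the base rate over the warmup steps followed by a constant rate (the behaviour of `get_constant_schedule_with_warmup` in Hugging Face libraries); the function name `lr_at_step` is hypothetical and not taken from the training extension:

```python
def lr_at_step(step: int, base_lr: float = 2e-06, warmup_steps: int = 500) -> float:
    """Learning rate at an optimizer step under a constant-with-warmup schedule.

    Assumption: linear warmup from 0 to base_lr over warmup_steps, then constant.
    """
    if step < warmup_steps:
        # Linear ramp: fraction of warmup completed, times the base rate.
        return base_lr * step / max(1, warmup_steps)
    return base_lr


for step in (0, 250, 500, 10_000):
    print(step, lr_at_step(step))
```

With `lr_warmup_steps: 500`, the base rate of `2e-06` is only reached after 500 steps; every later step uses it unchanged.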

backups/db_config_17.json

ADDED

@@ -0,0 +1,129 @@

```json
{
    "weight_decay": 0.01,
    "attention": "default",
    "cache_latents": false,
    "clip_skip": 2,
    "concepts_list": [
        {
            "class_data_dir": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar\\classifiers_0",
            "class_guidance_scale": 7.5,
            "class_infer_steps": 40,
            "class_negative_prompt": "",
            "class_prompt": "[filewords]",
            "class_token": "movie",
            "instance_data_dir": "C:\\ProyectoDL\\data\\test_5k",
            "instance_prompt": "",
            "instance_token": "ohwx",
            "is_valid": true,
            "n_save_sample": 1,
            "num_class_images_per": 0,
            "sample_seed": -1,
            "save_guidance_scale": 7.5,
            "save_infer_steps": 20,
            "save_sample_negative_prompt": "",
            "save_sample_prompt": "[filewords]",
            "save_sample_template": ""
        }
    ],
    "concepts_path": "",
    "custom_model_name": "",
    "deterministic": false,
    "disable_class_matching": false,
    "disable_logging": false,
    "ema_predict": false,
    "epoch": 1,
    "epoch_pause_frequency": 0,
    "epoch_pause_time": 0,
    "freeze_clip_normalization": false,
    "full_mixed_precision": true,
    "gradient_accumulation_steps": 1,
    "gradient_checkpointing": true,
    "gradient_set_to_none": false,
    "graph_smoothing": 50,
    "half_model": false,
    "has_ema": false,
    "hflip": false,
    "infer_ema": false,
    "initial_revision": 0,
    "input_pertubation": true,
    "learning_rate": 2e-06,
    "learning_rate_min": 1e-06,
    "lifetime_revision": 0,
    "lora_learning_rate": 1e-05,
    "lora_model_name": "",
    "lora_txt_learning_rate": 5e-05,
    "lora_txt_rank": 4,
    "lora_unet_rank": 4,
    "lora_weight": 0.8,
    "lora_use_buggy_requires_grad": false,
    "lr_cycles": 1,
    "lr_factor": 0.5,
    "lr_power": 1,
    "lr_scale_pos": 0.5,
    "lr_scheduler": "constant_with_warmup",
    "lr_warmup_steps": 500,
    "max_token_length": 75,
    "min_snr_gamma": 0,
    "use_dream": false,
    "dream_detail_preservation": 0.5,
    "freeze_spectral_norm": false,
    "mixed_precision": "bf16",
    "model_dir": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar",
    "model_name": "model_28_mar",
    "model_path": "model_28_mar",
    "model_type": "v2x-512",
    "noise_scheduler": "DDPM",
    "num_train_epochs": 30,
    "offset_noise": 0,
    "optimizer": "8bit AdamW",
    "pad_tokens": true,
    "pretrained_model_name_or_path": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar\\working",
    "pretrained_vae_name_or_path": null,
    "prior_loss_scale": false,
    "prior_loss_target": 100.0,
    "prior_loss_weight": 0.75,
    "prior_loss_weight_min": 0.1,
    "resolution": 512,
    "revision": 17,
    "sample_batch_size": 1,
    "sanity_prompt": "",
    "sanity_seed": 420420.0,
    "save_ckpt_after": true,
    "save_ckpt_cancel": false,
    "save_ckpt_during": false,
    "save_ema": true,
    "save_embedding_every": 20,
    "save_lora_after": true,
    "save_lora_cancel": false,
    "save_lora_during": false,
    "save_lora_for_extra_net": false,
    "save_preview_every": 5,
    "save_safetensors": true,
    "save_state_after": false,
    "save_state_cancel": false,
    "save_state_during": false,
    "scheduler": "DEISMultistep",
    "shared_diffusers_path": "",
    "shuffle_tags": true,
    "snapshot": "",
    "split_loss": true,
    "src": "realisticVisionV51_v51VAE",
    "stop_text_encoder": 1,
    "strict_tokens": false,
    "dynamic_img_norm": false,
    "tenc_weight_decay": 0.01,
    "tenc_grad_clip_norm": 0,
    "tomesd": 0,
    "train_batch_size": 1,
    "train_imagic": false,
    "train_unet": true,
    "train_unfrozen": true,
    "txt_learning_rate": 1e-06,
    "use_concepts": false,
    "use_ema": false,
    "use_lora": true,
    "use_lora_extended": false,
    "use_shared_src": false,
    "use_subdir": true,
    "v2": false
}
```
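`backups/db_config_0.json` and `backups/db_config_17.json` differ in only eight top-level keys: `cache_latents`, `epoch`, `gradient_set_to_none`, `lora_learning_rate`, `num_train_epochs`, `revision`, `save_embedding_every` and `use_lora`. A small sketch for producing that kind of comparison; in practice both files would be read with `json.load`, but here only the differing keys plus one shared key (`learning_rate`) are inlined so the example runs on its own. The helper name `diff_configs` is hypothetical:

```python
import json


def diff_configs(old: dict, new: dict) -> dict:
    """Return {key: (old_value, new_value)} for top-level keys whose values differ.

    A key missing from one side is treated as None on that side.
    """
    changes = {}
    for key in sorted(old.keys() | new.keys()):
        a, b = old.get(key), new.get(key)
        if a != b:
            changes[key] = (a, b)
    return changes


# Excerpts of backups/db_config_0.json and backups/db_config_17.json.
config_0 = json.loads("""{"cache_latents": true, "epoch": 0, "gradient_set_to_none": true,
  "lora_learning_rate": 0.0001, "num_train_epochs": 100, "revision": 0,
  "save_embedding_every": 25, "use_lora": false, "learning_rate": 2e-06}""")
config_17 = json.loads("""{"cache_latents": false, "epoch": 1, "gradient_set_to_none": false,
  "lora_learning_rate": 1e-05, "num_train_epochs": 30, "revision": 17,
  "save_embedding_every": 20, "use_lora": true, "learning_rate": 2e-06}""")

for key, (before, after) in diff_configs(config_0, config_17).items():
    print(f"{key}: {before!r} -> {after!r}")
```

Run against `backups/db_config_17.json` and the final `db_config.json`, the same helper would report only `epoch` (1 to 31) and `pretrained_vae_name_or_path` (`null` to `""`).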

bucket_counts.json

ADDED

@@ -0,0 +1,11 @@

```json
{
    "buckets": {
        "(512, 512, 0)": {
            "resolution": [
                512,
                512
            ],
            "count": 5000
        }
    }
}
```
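`bucket_counts.json` stores one entry per resolution bucket, keyed by a stringified tuple. A sketch that parses the keys back into tuples and totals the image count; with the single 512×512 bucket recorded here the total is 5000, consistent with the `test_5k` instance directory named in the configs. This commit does not document the third tuple element, so the sketch leaves it uninterpreted, and `summarize_buckets` is a hypothetical helper:

```python
import ast
import json

BUCKET_COUNTS = """{"buckets": {"(512, 512, 0)": {"resolution": [512, 512], "count": 5000}}}"""


def summarize_buckets(raw: str) -> tuple[int, dict]:
    """Parse bucket_counts.json content; return (total count, {key tuple: count})."""
    buckets = json.loads(raw)["buckets"]
    per_bucket = {}
    for key, info in buckets.items():
        # Keys look like "(512, 512, 0)"; literal_eval safely turns them back into tuples.
        per_bucket[ast.literal_eval(key)] = info["count"]
    return sum(per_bucket.values()), per_bucket


total, per_bucket = summarize_buckets(BUCKET_COUNTS)
print(total, per_bucket)
```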

db_config.json

ADDED

@@ -0,0 +1,129 @@

```json
{
    "weight_decay": 0.01,
    "attention": "default",
    "cache_latents": false,
    "clip_skip": 2,
    "concepts_list": [
        {
            "class_data_dir": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar\\classifiers_0",
            "class_guidance_scale": 7.5,
            "class_infer_steps": 40,
            "class_negative_prompt": "",
            "class_prompt": "[filewords]",
            "class_token": "movie",
            "instance_data_dir": "C:\\ProyectoDL\\data\\test_5k",
            "instance_prompt": "",
            "instance_token": "ohwx",
            "is_valid": true,
            "n_save_sample": 1,
            "num_class_images_per": 0,
            "sample_seed": -1,
            "save_guidance_scale": 7.5,
            "save_infer_steps": 20,
            "save_sample_negative_prompt": "",
            "save_sample_prompt": "[filewords]",
            "save_sample_template": ""
        }
    ],
    "concepts_path": "",
    "custom_model_name": "",
    "deterministic": false,
    "disable_class_matching": false,
    "disable_logging": false,
    "ema_predict": false,
    "epoch": 31,
    "epoch_pause_frequency": 0,
    "epoch_pause_time": 0,
    "freeze_clip_normalization": false,
    "full_mixed_precision": true,
    "gradient_accumulation_steps": 1,
    "gradient_checkpointing": true,
    "gradient_set_to_none": false,
    "graph_smoothing": 50,
    "half_model": false,
    "has_ema": false,
    "hflip": false,
    "infer_ema": false,
    "initial_revision": 0,
    "input_pertubation": true,
    "learning_rate": 2e-06,
    "learning_rate_min": 1e-06,
    "lifetime_revision": 0,
    "lora_learning_rate": 1e-05,
    "lora_model_name": "",
    "lora_txt_learning_rate": 5e-05,
    "lora_txt_rank": 4,
    "lora_unet_rank": 4,
    "lora_weight": 0.8,
    "lora_use_buggy_requires_grad": false,
    "lr_cycles": 1,
    "lr_factor": 0.5,
    "lr_power": 1,
    "lr_scale_pos": 0.5,
    "lr_scheduler": "constant_with_warmup",
    "lr_warmup_steps": 500,
    "max_token_length": 75,
    "min_snr_gamma": 0,
    "use_dream": false,
    "dream_detail_preservation": 0.5,
    "freeze_spectral_norm": false,
    "mixed_precision": "bf16",
    "model_dir": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar",
    "model_name": "model_28_mar",
    "model_path": "model_28_mar",
    "model_type": "v2x-512",
    "noise_scheduler": "DDPM",
    "num_train_epochs": 30,
    "offset_noise": 0,
    "optimizer": "8bit AdamW",
    "pad_tokens": true,
    "pretrained_model_name_or_path": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar\\working",
    "pretrained_vae_name_or_path": "",
    "prior_loss_scale": false,
    "prior_loss_target": 100.0,
    "prior_loss_weight": 0.75,
    "prior_loss_weight_min": 0.1,
    "resolution": 512,
    "revision": 17,
    "sample_batch_size": 1,
    "sanity_prompt": "",
    "sanity_seed": 420420.0,
    "save_ckpt_after": true,
    "save_ckpt_cancel": false,
    "save_ckpt_during": false,
    "save_ema": true,
    "save_embedding_every": 20,
    "save_lora_after": true,
    "save_lora_cancel": false,
    "save_lora_during": false,
    "save_lora_for_extra_net": false,
    "save_preview_every": 5,
    "save_safetensors": true,
    "save_state_after": false,
    "save_state_cancel": false,
    "save_state_during": false,
    "scheduler": "DEISMultistep",
    "shared_diffusers_path": "",
    "shuffle_tags": true,
    "snapshot": "",
    "split_loss": true,
    "src": "realisticVisionV51_v51VAE",
    "stop_text_encoder": 1,
    "strict_tokens": false,
    "dynamic_img_norm": false,
    "tenc_weight_decay": 0.01,
    "tenc_grad_clip_norm": 0,
    "tomesd": 0,
    "train_batch_size": 1,
    "train_imagic": false,
    "train_unet": true,
    "train_unfrozen": true,
    "txt_learning_rate": 1e-06,
    "use_concepts": false,
    "use_ema": false,
    "use_lora": true,
    "use_lora_extended": false,
    "use_shared_src": false,
    "use_subdir": true,
    "v2": false
}
```

logging/dreambooth/events.out.tfevents.1711643279.cristianpamu.1044.0

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:21d283d7bf52aa05bae12a856525115879564f3838e626813bb4e405893c1048
size 28792548
```

logging/dreambooth/events.out.tfevents.1711790062.cristianpamu.23572.0

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:05d5d9a03ccd27bcf8f5e71292edfc0e73f720b3dfffbeb6d0a8d55436bf1e6a
size 3012
```

logging/dreambooth/events.out.tfevents.1711790661.cristianpamu.23572.1

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:e117fb6c625cbaeca840aee67d472ccb56f2431f026b3edd6f5bc63fb1db6134
size 24799
```

logging/dreambooth/events.out.tfevents.1711793198.cristianpamu.1684.0

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:a2b40e2bd914e174c9431ada4808f688b03d3f2f4c0ebf9f07816cfef24e2313
size 26934150
```
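The four TensorBoard event files are stored through Git LFS, so the diff shows only a pointer (spec version, SHA-256 object id, byte size) rather than the event data itself. A minimal parser for that line-oriented `key value` pointer format, following the Git LFS pointer specification; `parse_lfs_pointer` is a hypothetical helper name:

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer file into 'version', 'oid_algo', 'oid' and 'size'."""
    fields = {}
    for line in text.strip().splitlines():
        # Each pointer line is "<key> <value>", separated by a single space.
        key, _, value = line.partition(" ")
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "oid_algo": algo,
        "oid": digest,
        "size": int(fields["size"]),
    }


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:05d5d9a03ccd27bcf8f5e71292edfc0e73f720b3dfffbeb6d0a8d55436bf1e6a
size 3012
"""
info = parse_lfs_pointer(pointer)
print(info["oid_algo"], info["size"])
```

The recorded sizes range from 3012 bytes to 28792548 bytes, so inspecting the actual logs requires fetching the LFS objects (for example with `git lfs pull`), not reading the pointer text.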

logging/loss_plot_0.png

ADDED

logging/loss_plot_17.png

ADDED

logging/lr_plot_0.png

ADDED

logging/lr_plot_17.png

ADDED

logging/ram_plot_0.png

ADDED

logging/ram_plot_17.png

ADDED

model_28_mar.yaml

ADDED

@@ -0,0 +1,68 @@

```yaml
model:
  base_learning_rate: 1.0e-4
  target: ldm.models.diffusion.ddpm.LatentDiffusion
  params:
    linear_start: 0.00085
    linear_end: 0.0120
    num_timesteps_cond: 1
    log_every_t: 200
    timesteps: 1000
    first_stage_key: "images"
    cond_stage_key: "input_ids"
    image_size: 64
    channels: 4
    cond_stage_trainable: true
    conditioning_key: crossattn
    monitor: val/loss_simple_ema
    scale_factor: 0.18215
    use_ema: False # we set this to false because this is an inference only config
    unfreeze_model: true

    unet_config:
      target: ldm.modules.diffusionmodules.openaimodel.UNetModel
      params:
        use_checkpoint: True
        use_fp16: True
        image_size: 32 # unused
        in_channels: 4
        out_channels: 4
        model_channels: 320
        attention_resolutions: [ 4, 2, 1 ]
        num_res_blocks: 2
        channel_mult: [ 1, 2, 4, 4 ]
        num_head_channels: 64 # need to fix for flash-attn
        use_spatial_transformer: True
        use_linear_in_transformer: True
        transformer_depth: 1
        context_dim: 1024
        legacy: False

    first_stage_config:
      target: ldm.models.autoencoder.AutoencoderKL
      params:
        embed_dim: 4
        monitor: val/rec_loss
        ddconfig:
          #attn_type: "vanilla-xformers"
          double_z: true
          z_channels: 4
          resolution: 256
          in_channels: 3
          out_ch: 3
          ch: 128
          ch_mult:
          - 1
          - 2
          - 4
          - 4
          num_res_blocks: 2
          attn_resolutions: [ ]
          dropout: 0.0
        lossconfig:
          target: torch.nn.Identity

    cond_stage_config:
      target: ldm.modules.encoders.modules.FrozenOpenCLIPEmbedder
      params:
        freeze: True
        layer: "penultimate"
```
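`linear_start`, `linear_end` and `timesteps` define the DDPM noise schedule for this model. To my understanding, the default "linear" schedule in the LDM codebase interpolates linearly in sqrt(beta) and squares the result, which is what diffusers calls `scaled_linear`. A NumPy sketch under that assumption (`ldm_linear_betas` is a hypothetical name), also computing the cumulative signal fraction:

```python
import numpy as np


def ldm_linear_betas(linear_start: float = 0.00085, linear_end: float = 0.0120,
                     timesteps: int = 1000) -> np.ndarray:
    """Betas linear in sqrt(beta), then squared (assumed LDM 'linear' schedule)."""
    return np.linspace(linear_start ** 0.5, linear_end ** 0.5, timesteps, dtype=np.float64) ** 2


betas = ldm_linear_betas()
# alpha_bar_t = prod_{s<=t} (1 - beta_s): fraction of the original signal variance kept at step t.
alphas_cumprod = np.cumprod(1.0 - betas)
print(betas[0], betas[-1], alphas_cumprod[-1])
```

The endpoints reproduce `linear_start` and `linear_end` exactly (up to float rounding), and `alphas_cumprod` decreases monotonically toward a small positive value at the last of the 1000 steps.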

samples/sample_100017-0.png

ADDED

samples/sample_100017-0.txt

ADDED

@@ -0,0 +1 @@

```text
ohwx, county fair begins nasty rich guy threatening turn old lady onto streetunless niece lives marries mans son shes dead set niece sweet thing would anything help aunteven marry rich jerk however possible way presented poor young man taken fed turns naturally jockey thinks win prize fair save farm
```

samples/sample_125017-0.png

ADDED

samples/sample_125017-0.txt

ADDED

@@ -0,0 +1 @@

```text
ohwx, american millionaire wants reform parisian cabaret singer moonlights jewel thief
```

samples/sample_150000-0.png

ADDED

samples/sample_150000-0.txt

ADDED

@@ -0,0 +1 @@

```text
ohwx, british engineer india takes simple native girl bride act defies social strictures leads tragedy
```

samples/sample_150017-0.png

ADDED

samples/sample_150017-0.txt

ADDED

@@ -0,0 +1 @@

```text
ohwx, myra pendleton sent away raised mother died childbirth shes young woman father wants return instead simply welcoming back pretends pendleton family attorney presents lovely home house actually belongs kirk waring warner baxter abroad waring returns find hes swindled house fortune
```

samples/sample_17-0.png

ADDED

samples/sample_17-0.txt

ADDED

@@ -0,0 +1 @@

```text
ohwx, drama two girls married different layers society
```

samples/sample_25017-0.png

ADDED

samples/sample_25017-0.txt

ADDED

@@ -0,0 +1 @@

```text
ohwx, runaway becomes thief sentenced reformatory
```

samples/sample_50017-0.png

ADDED

samples/sample_50017-0.txt

ADDED

@@ -0,0 +1 @@

```text
ohwx, smartalec street kids father policeman killed line duty boy turns new leaf goes work support mother brothers sisters gets job usher theater really wants become policeman avenge death father soon finds involved fake kidnapping real gangsters tip identity man killed dad
```

samples/sample_75017-0.png

ADDED

samples/sample_75017-0.txt

ADDED

@@ -0,0 +1 @@

```text
ohwx, backdrop new york city early young woman naively seeking win love reads romance novels devours finds one prospect earnest denizen bowery another elegant young aristocrat focusing bygone eras fashions novelty bicyclebuiltfortwo inventors quest horseless carriage film gently stirs audiences nostalgia simpler times
```
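The sample files follow a `sample_<N>-<i>.png` / `.txt` naming pattern, and each `.txt` begins with the `ohwx` instance token followed by a caption. A sketch that orders the samples numerically by `<N>`; reading `<N>` as the training step and `<i>` as the sample index is an assumption not confirmed by this commit, and `sample_key` is a hypothetical helper:

```python
import re

SAMPLE_RE = re.compile(r"^sample_(\d+)-(\d+)\.(png|txt)$")


def sample_key(filename: str) -> tuple[int, int]:
    """Return (N, i) from a name like 'sample_100017-0.png'; raise ValueError otherwise."""
    match = SAMPLE_RE.match(filename)
    if match is None:
        raise ValueError(f"not a sample file: {filename}")
    return int(match.group(1)), int(match.group(2))


names = ["sample_100017-0.png", "sample_17-0.png", "sample_150000-0.png",
         "sample_25017-0.png", "sample_150017-0.png"]
# Numeric sort: a plain string sort would place "sample_100017" before "sample_17".
print(sorted(names, key=sample_key))
```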

token_counts.json

ADDED

@@ -0,0 +1,5 @@

```json
{
    "model_28_mar": {
        "ohwx": 15000
    }
}
```

working/feature_extractor/preprocessor_config.json

ADDED

@@ -0,0 +1,28 @@

```json
{
    "crop_size": {
        "height": 224,
        "width": 224
    },
    "do_center_crop": true,
    "do_convert_rgb": true,
    "do_normalize": true,
    "do_rescale": true,
    "do_resize": true,
    "feature_extractor_type": "CLIPFeatureExtractor",
    "image_mean": [
        0.48145466,
        0.4578275,
        0.40821073
    ],
    "image_processor_type": "CLIPFeatureExtractor",
    "image_std": [
        0.26862954,
        0.26130258,
        0.27577711
    ],
    "resample": 3,
    "rescale_factor": 0.00392156862745098,
    "size": {
        "shortest_edge": 224
    }
}
```
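These fields describe CLIP-style image preprocessing: resize the shortest edge to 224 using `resample: 3` (PIL's bicubic filter), center-crop to 224×224, multiply by `rescale_factor` (which is 1/255) and normalize with `image_mean` / `image_std`. A NumPy sketch of the crop, rescale and normalize steps only, assuming the input is already an RGB `uint8` array whose shorter side is 224 (the resize is omitted); `clip_normalize` is a hypothetical name, and the exact crop rounding of the real processor may differ:

```python
import numpy as np

MEAN = np.array([0.48145466, 0.4578275, 0.40821073])
STD = np.array([0.26862954, 0.26130258, 0.27577711])


def clip_normalize(image: np.ndarray, crop: int = 224,
                   rescale_factor: float = 0.00392156862745098) -> np.ndarray:
    """Center-crop an HxWx3 uint8 RGB array, rescale to [0, 1], normalize; return 3xHxW."""
    h, w, _ = image.shape
    top, left = (h - crop) // 2, (w - crop) // 2
    cropped = image[top:top + crop, left:left + crop].astype(np.float64)
    scaled = cropped * rescale_factor        # 0..255 -> 0..1
    normalized = (scaled - MEAN) / STD       # per-channel standardization
    return normalized.transpose(2, 0, 1)     # channels-first layout


dummy = np.full((224, 300, 3), 255, dtype=np.uint8)  # white image, shorter side already 224
pixels = clip_normalize(dummy)
print(pixels.shape, pixels[:, 0, 0])
```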

working/model_index.json

ADDED

@@ -0,0 +1,38 @@

{
  "_class_name": "StableDiffusionPipeline",
  "_diffusers_version": "0.25.0",
  "_name_or_path": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar\\working",
  "feature_extractor": [
    "transformers",
    "CLIPFeatureExtractor"
  ],
  "image_encoder": [
    null,
    null
  ],
  "requires_safety_checker": false,
  "safety_checker": [
    null,
    null
  ],
  "scheduler": [
    "diffusers",
    "PNDMScheduler"
  ],
  "text_encoder": [
    "transformers",
    "CLIPTextModel"
  ],
  "tokenizer": [
    "transformers",
    "CLIPTokenizer"
  ],
  "unet": [
    "diffusers",
    "UNet2DConditionModel"
  ],
  "vae": [
    "diffusers",
    "AutoencoderKL"
  ]
}
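In `model_index.json`, each pipeline slot maps to a `[library, class]` pair. A `[null, null]` entry marks an empty slot. As we understand diffusers' loader, this pipeline is therefore assembled without a safety checker or image encoder, even though a `safety_checker/` folder is part of this upload (`requires_safety_checker` is also `false`). The following sketch lists which component folders would actually be loaded. `loadable_components` is a hypothetical helper, and the embedded JSON is this file with `_diffusers_version` and `_name_or_path` omitted.

```python
import json

# working/model_index.json from this upload (two metadata keys omitted).
MODEL_INDEX = json.loads("""
{
  "_class_name": "StableDiffusionPipeline",
  "feature_extractor": ["transformers", "CLIPFeatureExtractor"],
  "image_encoder": [null, null],
  "requires_safety_checker": false,
  "safety_checker": [null, null],
  "scheduler": ["diffusers", "PNDMScheduler"],
  "text_encoder": ["transformers", "CLIPTextModel"],
  "tokenizer": ["transformers", "CLIPTokenizer"],
  "unet": ["diffusers", "UNet2DConditionModel"],
  "vae": ["diffusers", "AutoencoderKL"]
}
""")


def loadable_components(model_index: dict) -> dict:
    """Return {slot: (library, class_name)} for every non-empty pipeline slot."""
    components = {}
    for name, value in model_index.items():
        # Keys starting with "_" are metadata; non-list values are plain flags.
        if name.startswith("_") or not isinstance(value, list):
            continue
        library, class_name = value
        if library is None or class_name is None:
            continue  # [null, null] means the slot is intentionally empty
        components[name] = (library, class_name)
    return components


if __name__ == "__main__":
    for slot, (library, class_name) in sorted(loadable_components(MODEL_INDEX).items()):
        print(f"{slot}/ -> {library}.{class_name}")
```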

working/safety_checker/config.json

ADDED

@@ -0,0 +1,168 @@

{
  "_commit_hash": "cb41f3a270d63d454d385fc2e4f571c487c253c5",
  "_name_or_path": "CompVis/stable-diffusion-safety-checker",
  "architectures": [
    "StableDiffusionSafetyChecker"
  ],
  "initializer_factor": 1.0,
  "logit_scale_init_value": 2.6592,
  "model_type": "clip",
  "projection_dim": 768,
  "text_config": {
    "_name_or_path": "",
    "add_cross_attention": false,
    "architectures": null,
    "attention_dropout": 0.0,
    "bad_words_ids": null,
    "begin_suppress_tokens": null,
    "bos_token_id": 0,
    "chunk_size_feed_forward": 0,
    "cross_attention_hidden_size": null,
    "decoder_start_token_id": null,
    "diversity_penalty": 0.0,
    "do_sample": false,
    "dropout": 0.0,
    "early_stopping": false,
    "encoder_no_repeat_ngram_size": 0,
    "eos_token_id": 2,
    "exponential_decay_length_penalty": null,
    "finetuning_task": null,
    "forced_bos_token_id": null,
    "forced_eos_token_id": null,
    "hidden_act": "quick_gelu",
    "hidden_size": 768,
    "id2label": {
      "0": "LABEL_0",
      "1": "LABEL_1"
    },
    "initializer_factor": 1.0,
    "initializer_range": 0.02,
    "intermediate_size": 3072,
    "is_decoder": false,
    "is_encoder_decoder": false,
    "label2id": {
      "LABEL_0": 0,
      "LABEL_1": 1
    },
    "layer_norm_eps": 1e-05,
    "length_penalty": 1.0,
    "max_length": 20,
    "max_position_embeddings": 77,
    "min_length": 0,
    "model_type": "clip_text_model",
    "no_repeat_ngram_size": 0,
    "num_attention_heads": 12,
    "num_beam_groups": 1,
    "num_beams": 1,
    "num_hidden_layers": 12,
    "num_return_sequences": 1,
    "output_attentions": false,
    "output_hidden_states": false,
    "output_scores": false,
    "pad_token_id": 1,
    "prefix": null,
    "problem_type": null,
    "projection_dim": 512,
    "pruned_heads": {},
    "remove_invalid_values": false,
    "repetition_penalty": 1.0,
    "return_dict": true,
    "return_dict_in_generate": false,
    "sep_token_id": null,
    "suppress_tokens": null,
    "task_specific_params": null,
    "temperature": 1.0,
    "tf_legacy_loss": false,
    "tie_encoder_decoder": false,
    "tie_word_embeddings": true,
    "tokenizer_class": null,
    "top_k": 50,
    "top_p": 1.0,
    "torch_dtype": null,
    "torchscript": false,
    "transformers_version": "4.30.2",
    "typical_p": 1.0,
    "use_bfloat16": false,
    "vocab_size": 49408
  },
  "torch_dtype": "float32",
  "transformers_version": null,
  "vision_config": {
    "_name_or_path": "",
    "add_cross_attention": false,
    "architectures": null,
    "attention_dropout": 0.0,
    "bad_words_ids": null,
    "begin_suppress_tokens": null,
    "bos_token_id": null,
    "chunk_size_feed_forward": 0,
    "cross_attention_hidden_size": null,
    "decoder_start_token_id": null,
    "diversity_penalty": 0.0,
    "do_sample": false,
    "dropout": 0.0,
    "early_stopping": false,
    "encoder_no_repeat_ngram_size": 0,
    "eos_token_id": null,
    "exponential_decay_length_penalty": null,
    "finetuning_task": null,
    "forced_bos_token_id": null,
    "forced_eos_token_id": null,
    "hidden_act": "quick_gelu",
    "hidden_size": 1024,
    "id2label": {
      "0": "LABEL_0",
      "1": "LABEL_1"
    },
    "image_size": 224,
    "initializer_factor": 1.0,
    "initializer_range": 0.02,
    "intermediate_size": 4096,
    "is_decoder": false,
    "is_encoder_decoder": false,
    "label2id": {
      "LABEL_0": 0,
      "LABEL_1": 1
    },
    "layer_norm_eps": 1e-05,
    "length_penalty": 1.0,
    "max_length": 20,
    "min_length": 0,
    "model_type": "clip_vision_model",
    "no_repeat_ngram_size": 0,
    "num_attention_heads": 16,
    "num_beam_groups": 1,
    "num_beams": 1,
    "num_channels": 3,
    "num_hidden_layers": 24,
    "num_return_sequences": 1,
    "output_attentions": false,
    "output_hidden_states": false,
    "output_scores": false,
    "pad_token_id": null,
    "patch_size": 14,
    "prefix": null,
    "problem_type": null,
    "projection_dim": 512,
    "pruned_heads": {},
    "remove_invalid_values": false,
    "repetition_penalty": 1.0,
    "return_dict": true,
    "return_dict_in_generate": false,
    "sep_token_id": null,
    "suppress_tokens": null,
    "task_specific_params": null,
    "temperature": 1.0,
    "tf_legacy_loss": false,
    "tie_encoder_decoder": false,
    "tie_word_embeddings": true,
    "tokenizer_class": null,
    "top_k": 50,
    "top_p": 1.0,
    "torch_dtype": null,
    "torchscript": false,
    "transformers_version": "4.30.2",
    "typical_p": 1.0,
    "use_bfloat16": false
  }
}
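The safety checker's `vision_config` describes a CLIP vision transformer with 24 layers, hidden size 1024, 16 attention heads and 14-pixel patches. Its `image_size` of 224 matches the feature extractor's `shortest_edge: 224`. The arithmetic below gives the sequence length and per-head width these values imply. It assumes the standard ViT layout, in which one class token is prepended to the patch grid. Both helpers are hypothetical illustrations.

```python
def vit_token_count(image_size: int, patch_size: int) -> int:
    """Positions a CLIP-style ViT attends over: the patch grid plus one class token."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    per_side = image_size // patch_size
    return per_side * per_side + 1


def head_dim(hidden_size: int, num_heads: int) -> int:
    """Width of each attention head when hidden_size is split evenly across heads."""
    if hidden_size % num_heads != 0:
        raise ValueError("hidden_size must divide evenly across heads")
    return hidden_size // num_heads


if __name__ == "__main__":
    # vision_config values from the safety checker config above
    print(vit_token_count(224, 14))  # 16 x 16 patches + 1 class token = 257
    print(head_dim(1024, 16))        # 64
```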

working/safety_checker/pytorch_model.bin

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:756983ee73e0a2443fcaa7f41680b258a417ac1c5b1dca2d6520bf3f0f50a9e4
size 1216065214
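The `.bin` entry is a Git LFS pointer, not the weights themselves. It has three `key value` lines: the spec version, the object id (here a SHA-256 digest), and the size in bytes, which is about 1.22 GB for this checkpoint. The following sketch parses such a pointer and checks a downloaded file against it by streaming the file through SHA-256. `parse_lfs_pointer` and `file_matches_pointer` are hypothetical helpers, not part of the git-lfs tooling.

```python
import hashlib

# Pointer text copied from working/safety_checker/pytorch_model.bin above.
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:756983ee73e0a2443fcaa7f41680b258a417ac1c5b1dca2d6520bf3f0f50a9e4
size 1216065214
"""


def parse_lfs_pointer(text: str) -> dict:
    """Split a Git LFS pointer into version, hash algorithm, digest and byte size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    algorithm, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "algorithm": algorithm,
        "digest": digest,
        "size": int(fields["size"]),
    }


def file_matches_pointer(path: str, pointer: dict, chunk_size: int = 1 << 20) -> bool:
    """Stream a downloaded file and compare its size and SHA-256 digest to the pointer."""
    if pointer["algorithm"] != "sha256":
        raise ValueError(f"unsupported oid algorithm: {pointer['algorithm']}")
    digest = hashlib.sha256()
    size = 0
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
            size += len(chunk)
    return size == pointer["size"] and digest.hexdigest() == pointer["digest"]


if __name__ == "__main__":
    info = parse_lfs_pointer(POINTER)
    print(info["algorithm"], info["size"] / 1e9, "GB")
```

Reading in 1 MiB chunks keeps memory flat even for multi-gigabyte checkpoints.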

working/scheduler/scheduler_config.json

ADDED

@@ -0,0 +1,15 @@

{
  "_class_name": "PNDMScheduler",
  "_diffusers_version": "0.25.0",
  "beta_end": 0.012,
  "beta_schedule": "scaled_linear",
  "beta_start": 0.00085,
  "clip_sample": false,
  "num_train_timesteps": 1000,
  "prediction_type": "epsilon",
  "set_alpha_to_one": false,
  "skip_prk_steps": true,
  "steps_offset": 1,
  "timestep_spacing": "leading",
  "trained_betas": null
}
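As we read the diffusers source, `beta_schedule: "scaled_linear"` spaces the square roots of beta linearly between `beta_start` and `beta_end` and then squares them. With `timestep_spacing: "leading"`, inference timesteps are taken at a fixed stride starting from 0 and shifted by `steps_offset`. The following is a pure-Python sketch of both, plus the cumulative product of alphas that the noise level is derived from. It covers only the generic "leading" grid. With `skip_prk_steps: true`, PNDM's PLMS path may repeat one step, so its exact list can differ slightly. All three functions are hypothetical helpers.

```python
def scaled_linear_betas(beta_start: float, beta_end: float, num_train_timesteps: int) -> list:
    """'scaled_linear': space sqrt(beta) linearly, then square each value."""
    if num_train_timesteps < 2:
        raise ValueError("need at least two timesteps")
    lo, hi = beta_start ** 0.5, beta_end ** 0.5
    step = (hi - lo) / (num_train_timesteps - 1)
    return [(lo + i * step) ** 2 for i in range(num_train_timesteps)]


def cumulative_alphas(betas) -> list:
    """alpha_bar_t = product over s <= t of (1 - beta_s): signal fraction kept at step t."""
    result, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        result.append(running)
    return result


def leading_timesteps(num_train_timesteps: int, num_inference_steps: int, steps_offset: int) -> list:
    """'leading' spacing: stride from 0, shift by steps_offset, return in denoising order."""
    step_ratio = num_train_timesteps // num_inference_steps
    return [i * step_ratio + steps_offset for i in reversed(range(num_inference_steps))]


if __name__ == "__main__":
    betas = scaled_linear_betas(0.00085, 0.012, 1000)  # values from scheduler_config.json
    alpha_bar = cumulative_alphas(betas)
    print(betas[0], betas[-1], alpha_bar[-1])
    print(leading_timesteps(1000, 50, 1)[:3])  # [981, 961, 941]
```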

working/text_encoder/config.json

ADDED

@@ -0,0 +1,25 @@

{
  "_name_or_path": "C:\\ProyectoDL\\webui_forge_cu121_torch21\\webui\\models\\dreambooth\\model_28_mar\\working",
  "architectures": [
    "CLIPTextModel"
  ],
  "attention_dropout": 0.0,
  "bos_token_id": 0,
  "dropout": 0.0,
  "eos_token_id": 2,
  "hidden_act": "quick_gelu",
  "hidden_size": 768,
  "initializer_factor": 1.0,
  "initializer_range": 0.02,
  "intermediate_size": 3072,
  "layer_norm_eps": 1e-05,
  "max_position_embeddings": 77,
  "model_type": "clip_text_model",
  "num_attention_heads": 12,
  "num_hidden_layers": 12,
  "pad_token_id": 1,
  "projection_dim": 768,
  "torch_dtype": "float32",
  "transformers_version": "4.30.2",
  "vocab_size": 49408
}

working/text_encoder/pytorch_model.bin

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:3151fc5ad18cf4701708c0dbb8a8e429037ba6e6fb7e9748c5a7db4255cc7619
size 492307486
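The text encoder's LFS size can be sanity-checked against its config. The sketch below counts weights for a CLIP text transformer built from the config values. It assumes the standard layout: token and position embeddings; per layer, biased q/k/v/out projections, a two-layer MLP and two LayerNorms; and a final LayerNorm, with no projection head because the class is `CLIPTextModel`. At 4 bytes per float32 weight this gives about 492.24 MB, within about 0.02% of the 492,307,486-byte pointer size. The small remainder is plausibly serialization overhead and non-parameter buffers, which is an assumption we have not verified. `clip_text_param_count` is a hypothetical helper.

```python
def clip_text_param_count(vocab_size: int, max_positions: int, hidden: int,
                          intermediate: int, num_layers: int) -> int:
    """Count weights of a CLIP text transformer without a projection head."""
    embeddings = vocab_size * hidden + max_positions * hidden  # token + position tables
    attention = 4 * (hidden * hidden + hidden)                  # q, k, v, out (with bias)
    mlp = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden)  # fc1, fc2
    layer_norms = 2 * (2 * hidden)                              # two LayerNorms per block
    final_norm = 2 * hidden                                     # final LayerNorm
    return embeddings + num_layers * (attention + mlp + layer_norms) + final_norm


if __name__ == "__main__":
    # Values from working/text_encoder/config.json above.
    params = clip_text_param_count(49408, 77, 768, 3072, 12)
    fp32_bytes = params * 4  # torch_dtype is float32: 4 bytes per weight
    lfs_size = 492307486     # "size" line of the LFS pointer above
    print(params, fp32_bytes, lfs_size - fp32_bytes)
```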

working/tokenizer/merges.txt

ADDED


The diff for this file is too large to render.


working/tokenizer/special_tokens_map.json

ADDED

@@ -0,0 +1,24 @@

{
  "bos_token": {
    "content": "<|startoftext|>",
    "lstrip": false,
    "normalized": true,
    "rstrip": false,
    "single_word": false
  },
  "eos_token": {
    "content": "<|endoftext|>",
    "lstrip": false,
    "normalized": true,
    "rstrip": false,
    "single_word": false
  },
  "pad_token": "<|endoftext|>",
  "unk_token": {
    "content": "<|endoftext|>",
    "lstrip": false,
    "normalized": true,
    "rstrip": false,
    "single_word": false
  }
}
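The special-tokens map wraps every prompt in `<|startoftext|>` … `<|endoftext|>` and reuses `<|endoftext|>` as both the padding and unknown token. Together with the text encoder's `max_position_embeddings: 77`, this implies that prompts are framed and padded or truncated to a fixed 77 positions. The sketch below applies that framing to a list of already-tokenized strings. It assumes behaviour similar to calling `CLIPTokenizer` with `padding="max_length"`, `truncation=True` and `max_length=77`, as Stable Diffusion pipelines typically do. It does not perform real BPE tokenization, and `frame_tokens` is a hypothetical helper.

```python
BOS = "<|startoftext|>"
EOS = "<|endoftext|>"
PAD = "<|endoftext|>"  # pad_token reuses the end-of-text token in this map
MAX_LENGTH = 77        # max_position_embeddings of the text encoder


def frame_tokens(tokens, max_length: int = MAX_LENGTH) -> list:
    """Add BOS/EOS, truncate the body to fit, then right-pad to max_length."""
    body = list(tokens)[: max_length - 2]  # reserve two slots for BOS and EOS
    framed = [BOS, *body, EOS]
    return framed + [PAD] * (max_length - len(framed))


if __name__ == "__main__":
    ids = frame_tokens(["a", "photo", "of", "a", "dog"])
    print(len(ids), ids[:7])
```

Because `pad_token` and `eos_token` share the same string, the position of the first end-of-text token marks where the prompt ends.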

working/tokenizer/tokenizer_config.json

ADDED

@@ -0,0 +1,33 @@

{
  "add_prefix_space": false,
  "bos_token": {
    "__type": "AddedToken",
    "content": "<|startoftext|>",
    "lstrip": false,
    "normalized": true,
    "rstrip": false,
    "single_word": false
  },
  "clean_up_tokenization_spaces": true,
  "do_lower_case": true,
  "eos_token": {
    "__type": "AddedToken",
    "content": "<|endoftext|>",