Corentin committed 0245482 (parent: 3282ccf)

Model Card and tensorboard

README.md: CHANGED, @@ -1,3 +1,138 @@


---
license: agpl-3.0
tags:
- image
- keras
- myology
- biology
- histology
- muscle
- cells
- fibers
- myopathy
- SDH
- myoquant
- classification
- mitochondria
datasets:
- corentinm7/MyoQuant-SDH-Data
metrics:
- accuracy
library_name: keras
model-index:
- name: MyoQuant-SDH-Resnet50V2
  results:
  - task:
      type: image-classification
      name: Image Classification
    dataset:
      type: corentinm7/MyoQuant-SDH-Data
      name: MyoQuant SDH Data
      split: test
    metrics:
      - type: accuracy
        value: 0.9285
        name: Test Accuracy
---

## Model description

This is the model card for the SDH (succinate dehydrogenase) model used by the [MyoQuant](https://github.com/lambda-science/MyoQuant) tool.

## Intended uses & limitations

This model is intended to let people use, improve, and verify the reproducibility of our MyoQuant tool. The SDH model classifies SDH-stained muscle fibers in order to detect those with an abnormal mitochondrial profile.

## Training and evaluation data

The model was trained on the [corentinm7/MyoQuant-SDH-Data](https://huggingface.co/datasets/corentinm7/MyoQuant-SDH-Data) dataset, which is available on the Hugging Face Dataset Hub.

## Training procedure

This model was trained using the ResNet50V2 architecture in Keras.
All images were resized to 256x256 using the `tf.image.resize()` function from TensorFlow.
Data augmentation was included as layers placed before ResNet50V2.
Full model code:

```python
import tensorflow as tf
from tensorflow.keras import layers, models
from tensorflow.keras.applications import ResNet50V2

# Custom layer shipped with this model (random_brightness.py), needed on TensorFlow 2.8.
from random_brightness import RandomBrightness

data_augmentation = tf.keras.Sequential([
    # Resize any input to 256x256, cropping to keep the aspect ratio
    layers.Resizing(256, 256, interpolation="bilinear", crop_to_aspect_ratio=True, input_shape=(None, None, 3)),
    # Map pixel values from [0, 255] to [-1, 1], the input range ResNet50V2 expects
    layers.Rescaling(scale=1./127.5, offset=-1),
    RandomBrightness(factor=0.2, value_range=(-1.0, 1.0)),  # Not available in TensorFlow 2.8
    layers.RandomContrast(factor=0.2),
    layers.RandomFlip("horizontal_and_vertical"),
    layers.RandomRotation(0.3, fill_mode="constant"),
    layers.RandomZoom(.2, .2, fill_mode="constant"),
    layers.RandomTranslation(0.2, .2, fill_mode="constant"),
])

model = models.Sequential()
model.add(data_augmentation)
model.add(
    ResNet50V2(
        weights="imagenet",  # default: start from ImageNet pre-trained weights
        include_top=False,
        input_shape=(256, 256, 3),
        pooling="avg",
    )
)
model.add(layers.Flatten())
# Two-class softmax head
model.add(layers.Dense(2, activation='softmax'))
```

```
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 sequential (Sequential)     (None, 256, 256, 3)       0

 resnet50v2 (Functional)     (None, 2048)              23564800

 flatten (Flatten)           (None, 2048)              0

 dense (Dense)               (None, 2)                 4098

=================================================================
Total params: 23,568,898
Trainable params: 23,523,458
Non-trainable params: 45,440
_________________________________________________________________
```
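As a quick sanity check, the parameter counts in the summary can be reproduced by hand. The sketch takes the backbone and non-trainable counts directly from the summary. It then computes only the classification head (2048 × 2 weights plus 2 biases) and the totals. The helper name `dense_params` is illustrative.

```python
# Hand-check of the parameter counts reported by model.summary().
BACKBONE_PARAMS = 23_564_800   # resnet50v2 (Functional), from the summary
NON_TRAINABLE = 45_440         # from the summary


def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights (n_inputs x n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units


head = dense_params(2048, 2)          # Dense(2) on the 2048-d pooled features
total = BACKBONE_PARAMS + head        # Flatten adds no parameters
trainable = total - NON_TRAINABLE

print(f"dense head: {head:,}")       # 4,098
print(f"total:      {total:,}")      # 23,568,898
print(f"trainable:  {trainable:,}")  # 23,523,458
```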

We used a ResNet50V2 pre-trained on ImageNet as a starting point and trained the model with EarlyStopping using a patience of 20. If the validation loss does not improve for 20 epochs, training stops and the weights are rolled back to the epoch with the lowest validation loss.
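The stopping rule above can be illustrated with a simplified pure-Python sketch. It assumes the behaviour of Keras' `EarlyStopping(monitor="val_loss", patience=20, restore_best_weights=True)` with the default `min_delta=0`. The callback arguments beyond the patience of 20 are an assumption, and `early_stopping` is a hypothetical helper, not part of Keras.

```python
from typing import Sequence, Tuple


def early_stopping(val_losses: Sequence[float], patience: int = 20) -> Tuple[int, int]:
    """Simplified model of EarlyStopping on 'val_loss' with weight restoration.

    Returns (stop_epoch, best_epoch), both 0-indexed. If patience never runs
    out, stop_epoch is simply the last epoch.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # Improvement: remember this epoch and reset the counter.
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                # No improvement for `patience` epochs: stop here; the
                # weights from `best_epoch` would be restored.
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


losses = [1.0, 0.8, 0.6] + [0.7] * 30
print(early_stopping(losses))  # (22, 2)
```

In this example, the validation loss bottoms out at epoch 2 and never improves again. Training therefore stops at epoch 22, and the weights are rolled back to epoch 2.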

Class imbalance was handled with the `class_weight` argument of `model.fit()`. The weight of each class was computed as `(1 / number of elements in the class) * (total number of training elements / 2)`, which in our case gives `{0: 0.6593016912165849, 1: 2.069349315068493}`.
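The class-weight formula above can be written as a short helper. This is a sketch, not the original training code. `balanced_class_weights` is a hypothetical name, and the toy labels below are illustrative rather than the real dataset counts. The formula is the same as scikit-learn's `class_weight="balanced"` heuristic, `n_samples / (n_classes * count_per_class)`.

```python
from collections import Counter
from typing import Dict, Iterable


def balanced_class_weights(labels: Iterable[int], n_classes: int = 2) -> Dict[int, float]:
    """weight_c = (1 / n_c) * (n_total / n_classes) for every class c present."""
    counts = Counter(labels)
    n_total = sum(counts.values())
    return {c: (1 / counts[c]) * (n_total / n_classes) for c in sorted(counts)}


# Toy labels: three samples of class 0, one of class 1.
weights = balanced_class_weights([0, 0, 0, 1])
print(weights)  # {0: 0.6666666666666666, 1: 2.0}
```

The returned dict has the shape expected by the `class_weight` argument of `model.fit()`. The minority class gets the larger weight.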

### Training hyperparameters

The following hyperparameters were used during training:

- Optimizer: Adam
- Learning rate schedule: `ReduceLROnPlateau(monitor='val_loss', factor=0.2, patience=5, min_lr=MIN_LR)` with START_LR = 1e-5 and MIN_LR = 1e-7
- Loss function: SparseCategoricalCrossentropy
- Metric: Accuracy
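With `factor=0.2` and `min_lr=1e-7`, a learning rate that starts at 1e-5 can only be reduced a few times before it reaches its floor. The sketch below models just the multiply-and-clip step of `ReduceLROnPlateau`, not the plateau detection itself. `lr_steps` is a hypothetical helper.

```python
from typing import List


def lr_steps(start_lr: float = 1e-5, factor: float = 0.2, min_lr: float = 1e-7) -> List[float]:
    """Learning rates reached by successive ReduceLROnPlateau reductions."""
    lrs = [start_lr]
    # A reduction only happens while the current rate is above the floor;
    # each one multiplies by `factor` and clips at `min_lr`.
    while lrs[-1] > min_lr:
        lrs.append(max(lrs[-1] * factor, min_lr))
    return lrs


print(lr_steps())
```

The sequence is approximately 1e-5, 2e-6, 4e-7 and then 1e-7, because 4e-7 × 0.2 = 8e-8 falls below `min_lr`. At most three reductions can therefore take effect during training.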

|

| 112 |

+

|

| 113 |

+

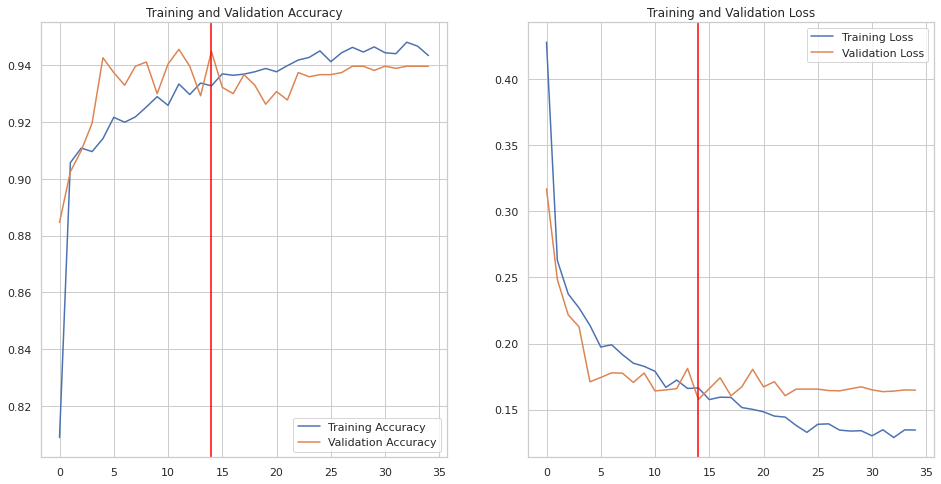

## Training Curve

|

| 114 |

+

|

| 115 |

+

Plot of the accuracy vs epoch and loss vs epoch for training and validation set.

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

## Test Results

Accuracy on the test split of the [corentinm7/MyoQuant-SDH-Data](https://huggingface.co/datasets/corentinm7/MyoQuant-SDH-Data) dataset:

```
105/105 - 15s - loss: 0.1618 - accuracy: 0.9285 - 15s/epoch - 140ms/step
Test data results:
0.928528904914856
```
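The reported value (0.928528904914856, rounded to 0.9285 in the metadata) is the fraction of test images whose highest-probability class matches the label. With integer labels and a sparse categorical loss, this is what Keras computes for the accuracy metric. The `105/105` in the log counts evaluated batches. Below is a minimal pure-Python sketch of that metric, using toy softmax outputs rather than real model predictions. `accuracy` is a hypothetical helper name.

```python
from typing import Sequence


def accuracy(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Fraction of samples whose argmax class equals the integer label."""
    # argmax over the two softmax outputs (first index wins on ties)
    predicted = [max(range(len(p)), key=p.__getitem__) for p in probs]
    correct = sum(int(pred == y) for pred, y in zip(predicted, labels))
    return correct / len(labels)


toy_probs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.3, 0.7]]
toy_labels = [0, 1, 1, 1]
print(accuracy(toy_probs, toy_labels))  # 0.75
```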

## How to Import the Model

This model was trained with TensorFlow 2.8 on Google Colab, so the RandomBrightness layer had to be added by hand (it was only introduced in TensorFlow 2.10). You will therefore need to download the `random_brightness.py` file in addition to the model.
The model can then be imported into TensorFlow/Keras with:

```python
from tensorflow import keras

# random_brightness.py must be importable, e.g. placed next to this script.
# Inside a package, use: from .random_brightness import RandomBrightness
from random_brightness import RandomBrightness

model_sdh = keras.models.load_model(
    "model.h5", custom_objects={"RandomBrightness": RandomBrightness}
)
```
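For reference, the core transform of the custom `RandomBrightness` layer can be sketched in NumPy. It combines `get_random_transformation` and `_brightness_adjust` from `random_brightness.py` with the `Rescaling` step of the augmentation pipeline. This is an illustration only. The helper names `rescale` and `random_brightness` are hypothetical. The real layer draws its random numbers from a Keras random generator and applies a separate delta to each image in a batch, whereas this sketch handles a single image.

```python
import numpy as np


def rescale(image: np.ndarray) -> np.ndarray:
    """Same mapping as layers.Rescaling(scale=1./127.5, offset=-1): [0, 255] -> [-1, 1]."""
    return image / 127.5 - 1.0


def random_brightness(image: np.ndarray, factor: float = 0.2,
                      value_range=(-1.0, 1.0), rng=None) -> np.ndarray:
    """One random delta in [-factor, factor], scaled by the width of value_range,
    added to every pixel and channel, then clipped back into value_range."""
    rng = np.random.default_rng() if rng is None else rng
    low, high = value_range
    delta = rng.uniform(-factor, factor) * (high - low)  # here in [-0.4, 0.4]
    return np.clip(image + delta, low, high)


pixels = np.array([[[0.0, 127.5, 255.0]]])  # one pixel, three channels
x = rescale(pixels)                         # values -1.0, 0.0, 1.0
augmented = random_brightness(x, rng=np.random.default_rng(0))
print(augmented)
```

Because the model rescales inputs to [-1, 1] before this layer, it is configured with `value_range=(-1.0, 1.0)`. With `factor=0.2`, brightness shifts therefore stay within ±0.4 in the rescaled units.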

random_brightness.py: ADDED, @@ -0,0 +1,345 @@


# @title Random Brightness Layer
import tensorflow as tf
from keras import backend
from keras.engine import base_layer
from keras.engine import base_preprocessing_layer
from keras.layers.preprocessing import preprocessing_utils as utils
from keras.utils import tf_utils

from tensorflow.python.ops import stateless_random_ops
from tensorflow.python.util.tf_export import keras_export
from tensorflow.tools.docs import doc_controls

@keras_export("keras.__internal__.layers.BaseImageAugmentationLayer")
class BaseImageAugmentationLayer(base_layer.BaseRandomLayer):
    """Abstract base layer for image augmentation.
    This layer contains base functionalities for preprocessing layers which
    augment image-related data, e.g. images and, in the future, labels and
    bounding boxes. The subclasses could avoid making certain mistakes and
    reduce code duplication.
    This layer requires you to implement one method: `augment_image()`, which
    augments one single image during the training. There are a few additional
    methods that you can implement for added functionality on the layer:
    `augment_label()`, which handles label augmentation if the layer supports
    that.
    `augment_bounding_box()`, which handles the bounding box augmentation, if the
    layer supports that.
    `get_random_transformation()`, which should produce a random transformation
    setting. The transformation object, which could be any type, will be passed to
    `augment_image`, `augment_label` and `augment_bounding_box`, to coordinate
    the randomness behavior, e.g. in the RandomFlip layer, the image and
    bounding_box should be changed in the same way.
    The `call()` method supports two formats of inputs:
    1. Single image tensor with 3D (HWC) or 4D (NHWC) format.
    2. A dict of tensors with stable keys. The supported keys are:
       `"images"`, `"labels"` and `"bounding_boxes"` at the moment. We might add
       more keys in the future when we support more types of augmentation.
    The output of `call()` will be in one of these two formats, matching the
    structure of the inputs.
    `call()` handles the logic of detecting the training/inference mode,
    unpacking the inputs, forwarding them to the correct function, and packing
    the output back into the same structure as the inputs.
    By default the `call()` method leverages the `tf.vectorized_map()` function.
    Auto-vectorization can be disabled by setting `self.auto_vectorize = False`
    in your `__init__()` method. When disabled, `call()` instead relies
    on `tf.map_fn()`. For example:
    ```python
    class SubclassLayer(BaseImageAugmentationLayer):
        def __init__(self):
            super().__init__()
            self.auto_vectorize = False
    ```
    Example:
    ```python
    class RandomContrast(BaseImageAugmentationLayer):
        def __init__(self, factor=(0.5, 1.5), **kwargs):
            super().__init__(**kwargs)
            self._factor = factor
        def augment_image(self, image, transformation=None):
            random_factor = tf.random.uniform([], self._factor[0], self._factor[1])
            mean = tf.math.reduce_mean(image, axis=-1, keepdims=True)
            return (image - mean) * random_factor + mean
    ```
    Note that since randomness is also a common functionality, this layer
    also includes a tf.keras.backend.RandomGenerator, which can be used to produce
    the random numbers. The random number generator is stored in the
    `self._random_generator` attribute.
    """

    def __init__(self, rate=1.0, seed=None, **kwargs):
        super().__init__(seed=seed, **kwargs)
        self.rate = rate

    @property
    def auto_vectorize(self):
        """Control whether automatic vectorization occurs.
        By default the `call()` method leverages the `tf.vectorized_map()` function.
        Auto-vectorization can be disabled by setting `self.auto_vectorize = False`
        in your `__init__()` method. When disabled, `call()` instead relies
        on `tf.map_fn()`. For example:
        ```python
        class SubclassLayer(BaseImageAugmentationLayer):
            def __init__(self):
                super().__init__()
                self.auto_vectorize = False
        ```
        """
        return getattr(self, "_auto_vectorize", True)

    @auto_vectorize.setter
    def auto_vectorize(self, auto_vectorize):
        self._auto_vectorize = auto_vectorize

    @property
    def _map_fn(self):
        if self.auto_vectorize:
            return tf.vectorized_map
        else:
            return tf.map_fn

    @doc_controls.for_subclass_implementers
    def augment_image(self, image, transformation=None):
        """Augment a single image during training.
        Args:
            image: 3D image input tensor to the layer. Forwarded from `layer.call()`.
            transformation: The transformation object produced by
                `get_random_transformation`. Used to coordinate the randomness between
                image, label and bounding box.
        Returns:
            output 3D tensor, which will be forwarded to `layer.call()`.
        """
        raise NotImplementedError()

    @doc_controls.for_subclass_implementers
    def augment_label(self, label, transformation=None):
        """Augment a single label during training.
        Args:
            label: 1D label to the layer. Forwarded from `layer.call()`.
            transformation: The transformation object produced by
                `get_random_transformation`. Used to coordinate the randomness between
                image, label and bounding box.
        Returns:
            output 1D tensor, which will be forwarded to `layer.call()`.
        """
        raise NotImplementedError()

    @doc_controls.for_subclass_implementers
    def augment_bounding_box(self, bounding_box, transformation=None):
        """Augment bounding boxes for one image during training.
        Args:
            bounding_box: 2D bounding boxes to the layer. Forwarded from `call()`.
            transformation: The transformation object produced by
                `get_random_transformation`. Used to coordinate the randomness between
                image, label and bounding box.
        Returns:
            output 2D tensor, which will be forwarded to `layer.call()`.
        """
        raise NotImplementedError()

    @doc_controls.for_subclass_implementers
    def get_random_transformation(self, image=None, label=None, bounding_box=None):
        """Produce random transformation config for one single input.
        This is used to produce the same randomness between image/label/bounding_box.
        Args:
            image: 3D image tensor from inputs.
            label: optional 1D label tensor from inputs.
            bounding_box: optional 2D bounding boxes tensor from inputs.
        Returns:
            Any type of object, which will be forwarded to `augment_image`,
            `augment_label` and `augment_bounding_box` as the `transformation`
            parameter.
        """
        return None

    def call(self, inputs, training=True):
        inputs = self._ensure_inputs_are_compute_dtype(inputs)
        if training:
            inputs, is_dict = self._format_inputs(inputs)
            images = inputs["images"]
            if images.shape.rank == 3:
                return self._format_output(self._augment(inputs), is_dict)
            elif images.shape.rank == 4:
                return self._format_output(self._batch_augment(inputs), is_dict)
            else:
                raise ValueError(
                    "Image augmentation layers are expecting inputs to be "
                    "rank 3 (HWC) or 4D (NHWC) tensors. Got shape: "
                    f"{images.shape}"
                )
        else:
            return inputs

    def _augment(self, inputs):
        image = inputs.get("images", None)
        label = inputs.get("labels", None)
        bounding_box = inputs.get("bounding_boxes", None)
        transformation = self.get_random_transformation(
            image=image, label=label, bounding_box=bounding_box
        )  # pylint: disable=assignment-from-none
        image = self.augment_image(image, transformation=transformation)
        result = {"images": image}
        if label is not None:
            label = self.augment_label(label, transformation=transformation)
            result["labels"] = label
        if bounding_box is not None:
            bounding_box = self.augment_bounding_box(
                bounding_box, transformation=transformation
            )
            result["bounding_boxes"] = bounding_box
        return result

    def _batch_augment(self, inputs):
        return self._map_fn(self._augment, inputs)

    def _format_inputs(self, inputs):
        if tf.is_tensor(inputs):
            # single image input tensor
            return {"images": inputs}, False
        elif isinstance(inputs, dict):
            # TODO(scottzhu): Check if it only contains the valid keys
            return inputs, True
        else:
            raise ValueError(
                f"Expect the inputs to be image tensor or dict. Got {inputs}"
            )

    def _format_output(self, output, is_dict):
        if not is_dict:
            return output["images"]
        else:
            return output

    def _ensure_inputs_are_compute_dtype(self, inputs):
        if isinstance(inputs, dict):
            inputs["images"] = utils.ensure_tensor(inputs["images"], self.compute_dtype)
        else:
            inputs = utils.ensure_tensor(inputs, self.compute_dtype)
        return inputs

@keras_export("keras.layers.RandomBrightness", v1=[])
class RandomBrightness(BaseImageAugmentationLayer):
    """A preprocessing layer which randomly adjusts brightness during training.
    This layer will randomly increase/reduce the brightness for the input RGB
    images. At inference time, the output will be identical to the input.
    Call the layer with `training=True` to adjust the brightness of the input.
    Note that a different brightness adjustment factor
    will be applied to each image in the batch.
    For an overview and full list of preprocessing layers, see the preprocessing
    [guide](https://www.tensorflow.org/guide/keras/preprocessing_layers).
    Args:
        factor: Float or a list/tuple of 2 floats between -1.0 and 1.0. The
            factor is used to determine the lower bound and upper bound of the
            brightness adjustment. A float value will be chosen randomly between
            the limits. When -1.0 is chosen, the output image will be black, and
            when 1.0 is chosen, the image will be fully white. When only one float
            is provided, e.g. 0.2, then -0.2 will be used for the lower bound and 0.2
            will be used for the upper bound.
        value_range: Optional list/tuple of 2 floats for the lower and upper limit
            of the values of the input data. Defaults to [0.0, 255.0]. Can be changed
            to e.g. [0.0, 1.0] if the image input has been scaled before this layer.
            The brightness adjustment will be scaled to this range, and the
            output values will be clipped to this range.
        seed: optional integer, for fixed RNG behavior.
    Inputs: 3D (HWC) or 4D (NHWC) tensor, with float or int dtype. Input pixel
        values can be of any range (e.g. `[0., 1.)` or `[0, 255]`).
    Output: 3D (HWC) or 4D (NHWC) tensor with brightness adjusted based on the
        `factor`. By default, the layer will output floats. The output values will
        be clipped to `value_range`, which defaults to `[0, 255]`, the valid range
        of RGB colors.
    Sample usage:
    ```python
    random_bright = tf.keras.layers.RandomBrightness(factor=0.2)
    # An image with shape [2, 2, 3]
    image = [[[1, 2, 3], [4, 5, 6]], [[7, 8, 9], [10, 11, 12]]]
    # Assume we randomly select the factor to be 0.1, then it will apply
    # 0.1 * 255 to all the channels
    output = random_bright(image, training=True)
    # output will be float32 with 25.5 added to each channel.
    tf.Tensor([[[26.5, 27.5, 28.5]
                [29.5, 30.5, 31.5]]
               [[32.5, 33.5, 34.5]
                [35.5, 36.5, 37.5]]],
              shape=(2, 2, 3), dtype=float32)
    ```
    """

    _FACTOR_VALIDATION_ERROR = (
        "The `factor` argument should be a number (or a list of two numbers) "
        "in the range [-1.0, 1.0]. "
    )
    _VALUE_RANGE_VALIDATION_ERROR = (
        "The `value_range` argument should be a list of two numbers. "
    )

    def __init__(self, factor, value_range=(0, 255), seed=None, **kwargs):
        base_preprocessing_layer.keras_kpl_gauge.get_cell("RandomBrightness").set(True)
        super().__init__(seed=seed, force_generator=True, **kwargs)
        self._set_factor(factor)
        self._set_value_range(value_range)
        self._seed = seed

    def augment_image(self, image, transformation=None):
        return self._brightness_adjust(image, transformation["rgb_delta"])

    def augment_label(self, label, transformation=None):
        return label

    def get_random_transformation(self, image=None, label=None, bounding_box=None):
        rgb_delta_shape = (1, 1, 1)
        random_rgb_delta = self._random_generator.random_uniform(
            shape=rgb_delta_shape,
            minval=self._factor[0],
            maxval=self._factor[1],
        )
        random_rgb_delta = random_rgb_delta * (
            self._value_range[1] - self._value_range[0]
        )
        return {"rgb_delta": random_rgb_delta}

    def _set_value_range(self, value_range):
        if not isinstance(value_range, (tuple, list)):
            raise ValueError(self._VALUE_RANGE_VALIDATION_ERROR + f"Got {value_range}")
        if len(value_range) != 2:
            raise ValueError(self._VALUE_RANGE_VALIDATION_ERROR + f"Got {value_range}")
        self._value_range = sorted(value_range)

    def _set_factor(self, factor):
        if isinstance(factor, (tuple, list)):
            if len(factor) != 2:
                raise ValueError(self._FACTOR_VALIDATION_ERROR + f"Got {factor}")
            self._check_factor_range(factor[0])
            self._check_factor_range(factor[1])
            self._factor = sorted(factor)
        elif isinstance(factor, (int, float)):
            self._check_factor_range(factor)
            factor = abs(factor)
            self._factor = [-factor, factor]
        else:
            raise ValueError(self._FACTOR_VALIDATION_ERROR + f"Got {factor}")

    def _check_factor_range(self, input_number):
        if input_number > 1.0 or input_number < -1.0:
            raise ValueError(self._FACTOR_VALIDATION_ERROR + f"Got {input_number}")

    def _brightness_adjust(self, image, rgb_delta):
        image = utils.ensure_tensor(image, self.compute_dtype)
        rank = image.shape.rank
        if rank != 3:
            raise ValueError(
                "Expected the input image to be rank 3. Got "
                f"inputs.shape = {image.shape}"
            )
        rgb_delta = tf.cast(rgb_delta, image.dtype)
        image += rgb_delta
        return tf.clip_by_value(image, self._value_range[0], self._value_range[1])

    def get_config(self):
        config = {
            "factor": self._factor,
            "value_range": self._value_range,
            "seed": self._seed,
        }
        base_config = super().get_config()
        return dict(list(base_config.items()) + list(config.items()))

runs/sdh16k_normal_resize_20220830-083856/train/events.out.tfevents.1661848752.561a638614d6.77.0.v2: ADDED, @@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:b74dc7d937740821f56203f12e53a9f279e14e65e58aae07b6f0b2cd1c37c2b9
size 8894151

runs/sdh16k_normal_resize_20220830-083856/validation/events.out.tfevents.1661848941.561a638614d6.77.1.v2: ADDED, @@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:f2a41bb11af557b261892fa9b42a6a8ff9ddd0917418cbdccac031a2009f7c6f
size 11236

training_curve.png: ADDED