Upload 5 files

MISC/1. Conda Environment.txt

ADDED

|

@@ -0,0 +1,21 @@

| 1 |

+

My Conda environment was set up as follows:

|

| 2 |

+

|

| 3 |

+

git clone https://github.com/apple/ml-stable-diffusion.git

|

| 4 |

+

|

| 5 |

+

conda create -n python_playground python=3.10 -y

|

| 6 |

+

|

| 7 |

+

conda activate python_playground

|

| 8 |

+

|

| 9 |

+

cd ml-stable-diffusion

|

| 10 |

+

|

| 11 |

+

pip install -e .

|

| 12 |

+

|

| 13 |

+

pip install omegaconf

|

| 14 |

+

|

| 15 |

+

pip install pytorch-lightning

|

| 16 |

+

|

| 17 |

+

pip install safetensors

|

| 18 |

+

|

| 19 |

+

pip install --upgrade --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cpu

|

| 20 |

+

|

| 21 |

+

I may also have installed another package or two at some point, when an error message prompted me to.
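
Since I am not certain the list above is complete, a quick check of what the environment can actually import may help. This is only a minimal sketch: the module names are assumed to be the usual import names for the packages installed above (with "-" replaced by "_"), and missing_modules is a made-up helper name.

```python
import importlib.util

# Import names for the packages installed above. The last entry is the module
# used later with "python -m"; the others are assumed to match their pip names.
REQUIRED_MODULES = [
    "torch",
    "omegaconf",
    "pytorch_lightning",
    "safetensors",
    "python_coreml_stable_diffusion",
]


def missing_modules(names):
    """Return the names from `names` that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All expected modules were found.")
```

Run it with the python_playground environment active. Anything it reports as missing can be installed with pip, as in the steps above.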

|

MISC/2. Converting SD Models For ControlNet.txt

ADDED

|

@@ -0,0 +1,33 @@

| 1 |

+

To convert a .ckpt or .safetensors SD-1.5 type model for use with ControlNet:

|

| 2 |

+

|

| 3 |

+

Download this Python script and place it in the same folder as the model you want to convert:

|

| 4 |

+

|

| 5 |

+

https://github.com/huggingface/diffusers/raw/main/scripts/convert_original_stable_diffusion_to_diffusers.py

|

| 6 |

+

|

| 7 |

+

Activate the Conda environment with: conda activate python_playground

|

| 8 |

+

|

| 9 |

+

Navigate to the folder where the model and script are located.

|

| 10 |

+

|

| 11 |

+

If your model is in CKPT format, run:

|

| 12 |

+

|

| 13 |

+

python convert_original_stable_diffusion_to_diffusers.py --checkpoint_path <MODEL-NAME>.ckpt --device cpu --extract_ema --dump_path diffusers

|

| 14 |

+

|

| 15 |

+

If your model is in SafeTensors format, run:

|

| 16 |

+

|

| 17 |

+

python convert_original_stable_diffusion_to_diffusers.py --checkpoint_path <MODEL-NAME>.safetensors --from_safetensors --device cpu --extract_ema --dump_path diffusers
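
The two commands above differ only in the --from_safetensors flag. A minimal sketch of choosing between them from the file extension follows. The helper name diffusers_convert_cmd and the file name my-model.safetensors are placeholders of mine. The flags are copied from the commands above.

```python
from pathlib import Path

SCRIPT = "convert_original_stable_diffusion_to_diffusers.py"


def diffusers_convert_cmd(model_file, dump_path="diffusers"):
    """Build the argument list for converting a .ckpt or .safetensors model."""
    suffix = Path(model_file).suffix.lower()
    if suffix not in (".ckpt", ".safetensors"):
        raise ValueError(f"unsupported model format: {model_file}")
    cmd = ["python", SCRIPT, "--checkpoint_path", str(model_file)]
    if suffix == ".safetensors":
        # Only SafeTensors models need this extra flag.
        cmd.append("--from_safetensors")
    cmd += ["--device", "cpu", "--extract_ema", "--dump_path", dump_path]
    return cmd


if __name__ == "__main__":
    # "my-model.safetensors" is a placeholder file name.
    print(" ".join(diffusers_convert_cmd("my-model.safetensors")))
```

The printed line can be pasted into the terminal, or the list can be passed to subprocess.run from the folder that holds the model and the script.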

|

| 18 |

+

|

| 19 |

+

Copy or move the resulting diffusers folder to:

|

| 20 |

+

|

| 21 |

+

xxxxx/miniconda3/envs/python_playground/coreml-swift/convert

|

| 22 |

+

|

| 23 |

+

cd xxxxx/miniconda3/envs/python_playground/coreml-swift/convert

|

| 24 |

+

|

| 25 |

+

For 512x512 SPLIT run: python -m python_coreml_stable_diffusion.torch2coreml --convert-unet --convert-text-encoder --convert-vae-decoder --convert-vae-encoder --unet-support-controlnet --model-version "./diffusers" --bundle-resources-for-swift-cli --attention-implementation SPLIT_EINSUM -o "./Split-512x512"

|

| 26 |

+

|

| 27 |

+

For 512x512 ORIGINAL run: python -m python_coreml_stable_diffusion.torch2coreml --convert-unet --convert-text-encoder --convert-vae-encoder --convert-vae-decoder --unet-support-controlnet --model-version "./diffusers" --bundle-resources-for-swift-cli --attention-implementation ORIGINAL --latent-h 64 --latent-w 64 --compute-unit CPU_AND_GPU -o "./Orig-512x512"
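
The 64 in --latent-h and --latent-w comes from the image size: SD-1.5 works on latents that are 1/8 of the pixel size, so 512 / 8 = 64. A rough sketch of building either command follows. The helper names are mine, and it simply mirrors the two commands above, so the latent and compute-unit flags are only added for ORIGINAL, as I did.

```python
def latent_size(pixels):
    """Latent side length for an image side; the SD VAE downsamples by 8."""
    if pixels % 8 != 0:
        raise ValueError("image size must be a multiple of 8")
    return pixels // 8


def torch2coreml_cmd(attention, out_dir, model_version="./diffusers", pixels=512):
    """Build a torch2coreml argument list mirroring the two commands above."""
    if attention not in ("SPLIT_EINSUM", "ORIGINAL"):
        raise ValueError(f"unknown attention implementation: {attention}")
    cmd = [
        "python", "-m", "python_coreml_stable_diffusion.torch2coreml",
        "--convert-unet", "--convert-text-encoder",
        "--convert-vae-decoder", "--convert-vae-encoder",
        "--unet-support-controlnet",
        "--model-version", model_version,
        "--bundle-resources-for-swift-cli",
        "--attention-implementation", attention,
    ]
    if attention == "ORIGINAL":
        side = str(latent_size(pixels))
        cmd += ["--latent-h", side, "--latent-w", side,
                "--compute-unit", "CPU_AND_GPU"]
    cmd += ["-o", out_dir]
    return cmd


if __name__ == "__main__":
    print(" ".join(torch2coreml_cmd("ORIGINAL", "./Orig-512x512")))
```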

|

| 28 |

+

|

| 29 |

+

The finished model files will be in either the Split-512x512/Resources folder or the Orig-512x512/Resources folder, depending on which command you ran.

|

| 30 |

+

|

| 31 |

+

Rename that Resources folder with a descriptive full model name and move it to the model store (xxxxx/miniconda3/envs/python_playground/coreml-swift/Models).

|

| 32 |

+

|

| 33 |

+

Everything else can be discarded.

|

MISC/3. Converting A ControlNet Model.txt

ADDED

|

@@ -0,0 +1,22 @@

| 1 |

+

To convert a ControlNet model:

|

| 2 |

+

|

| 3 |

+

conda activate python_playground

|

| 4 |

+

|

| 5 |

+

python -m python_coreml_stable_diffusion.torch2coreml --convert-controlnet lllyasviel/control_v11p_sd15_softedge --model-version "runwayml/stable-diffusion-v1-5" --bundle-resources-for-swift-cli --attention-implementation SPLIT_EINSUM -o "./SoftEdge"

|

| 6 |

+

|

| 7 |

+

python -m python_coreml_stable_diffusion.torch2coreml --convert-controlnet lllyasviel/control_v11p_sd15_softedge --model-version "runwayml/stable-diffusion-v1-5" --bundle-resources-for-swift-cli --attention-implementation ORIGINAL --latent-w 64 --latent-h 64 --compute-unit CPU_AND_GPU -o "./SoftEdge"

|

| 8 |

+

|

| 9 |

+

Adjust --attention-implementation, --compute-unit, --latent-h, --latent-w, and -o to fit the type of model you want to end up with. These need to match the main model you plan to use them with. You can't mix sizes, but you can sometimes mix attention implementations - see the last note below about this.

|

| 10 |

+

|

| 11 |

+

The --convert-controlnet argument needs to point to the ControlNet model's specific repo. There is an index of these repos at: https://huggingface.co/lllyasviel. The name for the argument needs to be in the form of: lllyasviel/control_Version_Info_name

|

| 12 |

+

|

| 13 |

+

The final converted model will be the .mlmodelc file in the Model_Name/Resources/controlnet folder.

|

| 14 |

+

|

| 15 |

+

Move it to your ControlNet store folder, rename it as desired, and discard everything else.
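
The move-and-rename step can be sketched like this. It assumes the output folder layout described above (Model_Name/Resources/controlnet with one .mlmodelc inside). collect_controlnet and the folder names in the usage line are placeholders. It does not delete anything, so discarding the leftovers is still up to you.

```python
import shutil
from pathlib import Path


def collect_controlnet(output_dir, store_dir, new_name=None):
    """Move the converted .mlmodelc from output_dir into store_dir."""
    controlnet_dir = Path(output_dir) / "Resources" / "controlnet"
    bundles = sorted(controlnet_dir.glob("*.mlmodelc"))
    if len(bundles) != 1:
        raise FileNotFoundError(
            f"expected exactly one .mlmodelc in {controlnet_dir}, "
            f"found {len(bundles)}"
        )
    target_name = f"{new_name}.mlmodelc" if new_name else bundles[0].name
    store = Path(store_dir)
    store.mkdir(parents=True, exist_ok=True)
    target = store / target_name
    if target.exists():
        raise FileExistsError(f"{target} already exists")
    # A .mlmodelc is a folder bundle; shutil.move handles folders and files.
    shutil.move(str(bundles[0]), str(target))
    return target
```

For example, collect_controlnet("./SoftEdge", "../controlnet", new_name="SoftEdge") would leave SoftEdge.mlmodelc in the store folder.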

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

NOTES AND COMPLICATIONS

|

| 19 |

+

|

| 20 |

+

The first time you do a conversion, you may need to log in to Hugging Face using their command line login tool and a token. They have instructions over there if you've never done it before. You need to be logged in there because the command will pull about 4 GB of checkpoint files needed to do model conversions. (That is what the argument --model-version "runwayml/stable-diffusion-v1-5" does.) This will only happen once. The files will be saved in a hidden .cache folder in your home (User) directory. If you've been playing with model conversions before, it is possible that you already have these files cached.
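
To see whether the checkpoint files are already cached, you can look for the repo's folder in the cache. This is a sketch under an assumption: it uses the folder layout that current versions of huggingface_hub use by default (~/.cache/huggingface/hub/models--org--name, with HF_HOME overriding the base folder). Older or customized setups may store things elsewhere. The helper names are mine.

```python
import os
from pathlib import Path


def hub_cache_dir():
    """Default Hugging Face hub cache; HF_HOME overrides the base folder."""
    default_base = os.path.join(Path.home(), ".cache", "huggingface")
    return Path(os.environ.get("HF_HOME", default_base)) / "hub"


def cached_repo_dir(repo_id, cache_dir=None):
    """Folder where a model repo such as 'org/name' is expected to be cached."""
    cache = Path(cache_dir) if cache_dir is not None else hub_cache_dir()
    return cache / ("models--" + repo_id.replace("/", "--"))


if __name__ == "__main__":
    repo_dir = cached_repo_dir("runwayml/stable-diffusion-v1-5")
    state = "found" if repo_dir.is_dir() else "not found"
    print(f"{repo_dir}: {state}")
```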

|

| 21 |

+

|

| 22 |

+

Converted ControlNets that are SPLIT_EINSUM run extremely slowly using CPU and NE. They run at normal speed using CPU and GPU. But you can also just use an ORIGINAL ControlNet with a SPLIT_EINSUM main model, using CPU and GPU, and get normal speed as well. So there is probably no point in making a SPLIT_EINSUM ControlNet model.

|

MISC/4. Swift-CoreML Pipeline.txt

ADDED

|

@@ -0,0 +1,49 @@

| 1 |

+

-------------------------------------------------------------------------------

|

| 2 |

+

|

| 3 |

+

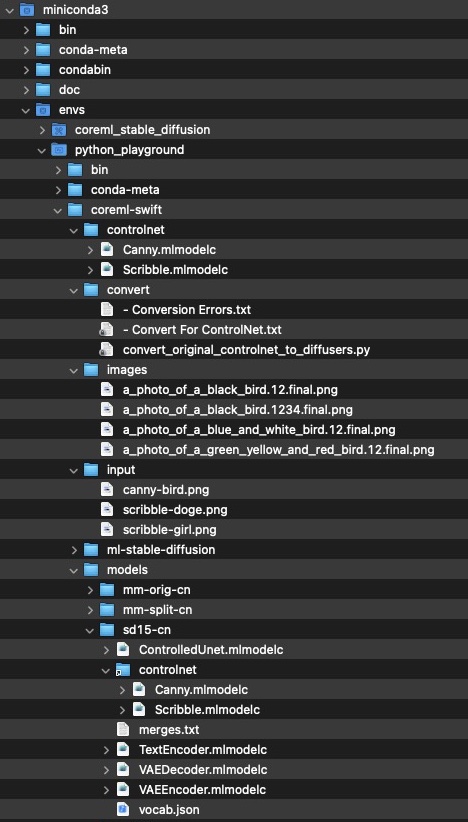

Model folders (noted with -cn when built for use with ControlNet) live in the /models folder.

|

| 4 |

+

|

| 5 |

+

Each -cn model must have a /controlnet folder inside it that is a symlink to the real /controlnet folder. You need to add this folder symlink to any model you download from my HF repo, after you unzip the model and put it in your /models folder.

|

| 6 |

+

|

| 7 |

+

(( The SwiftCLI scripts look for the ControlNet *.mlmodelc you are using inside the full model's /controlnet folder. This is crazy. It means every full model needs a folder inside symlinked to the /controlnet store folder. That is how they set it up and I haven't looked into editing their scripts yet. ))
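
Adding the symlink can be done in Finder or the terminal, or with a few lines of Python. A minimal sketch, where link_controlnet and the example paths are placeholders and the only assumption is the folder layout described above:

```python
import os
from pathlib import Path


def link_controlnet(model_dir, controlnet_store):
    """Create model_dir/controlnet as a symlink to the shared ControlNet folder."""
    store = Path(controlnet_store).resolve()
    if not store.is_dir():
        raise NotADirectoryError(f"{store} is not a folder")
    link = Path(model_dir) / "controlnet"
    if link.is_symlink() or link.exists():
        raise FileExistsError(f"{link} already exists")
    os.symlink(store, link, target_is_directory=True)
    return link
```

For example, link_controlnet("../models/SD15-cn", "../controlnet") run once per -cn model. It refuses to overwrite an existing controlnet folder or link.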

|

| 8 |

+

|

| 9 |

+

The input image(s) you want to use with the ControlNet need to be inside the /input folder.

|

| 10 |

+

|

| 11 |

+

Generated images will be saved to the /images folder.

|

| 12 |

+

|

| 13 |

+

A screencap of my folder structure, which matches all these notes, is in the MISC section of my HF page.

|

| 14 |

+

|

| 15 |

+

-------------------------------------------------------------------------------

|

| 16 |

+

|

| 17 |

+

Inference Without ControlNet (Using any standard SD-1.5 or SD-2.1 type CoreML model):

|

| 18 |

+

|

| 19 |

+

Test your setup with this first, before trying with ControlNet.

|

| 20 |

+

|

| 21 |

+

conda activate python_playground

|

| 22 |

+

cd xxxxx/miniconda3/envs/python_playground/coreml-swift/ml-stable-diffusion

|

| 23 |

+

|

| 24 |

+

swift run StableDiffusionSample "a photo of a cat" --seed 12 --guidance-scale 8.0 --step-count 24 --image-count 1 --scheduler dpmpp --compute-units cpuAndGPU --resource-path ../models/SD21 --output-path ../images

|

| 25 |

+

|

| 26 |

+

-------------------------------------------------------------------------------

|

| 27 |

+

|

| 28 |

+

Inference With ControlNet:

|

| 29 |

+

|

| 30 |

+

conda activate python_playground

|

| 31 |

+

cd xxxxx/miniconda3/envs/python_playground/coreml-swift/ml-stable-diffusion

|

| 32 |

+

|

| 33 |

+

swift run StableDiffusionSample "a photo of a green yellow and red bird" --seed 12 --guidance-scale 8.0 --step-count 24 --image-count 1 --scheduler dpmpp --compute-units cpuAndGPU --resource-path ../models/SD15-cn --controlnet Canny --controlnet-inputs ../input/canny-bird.png --output-path ../images

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

--negative-prompt "in quotes"

|

| 37 |

+

--seed default is random

|

| 38 |

+

--guidance-scale default is 7

|

| 39 |

+

--step-count default is 50

|

| 40 |

+

--image-count batch size, default is 1

|

| 41 |

+

--image path to image for image2image

|

| 42 |

+

--strength strength for image2image, 0.0 - 1.0, default 0.5

|

| 43 |

+

--scheduler pndm or dpmpp (DPM++), default is pndm

|

| 44 |

+

--compute-units all, cpuOnly, cpuAndGPU, cpuAndNeuralEngine

|

| 45 |

+

--resource-path one of the model checkpoint .mlmodelc bundle folders

|

| 46 |

+

--controlnet path/controlnet-model <<path/controlnet-model-2>> (no extension)

|

| 47 |

+

--controlnet-inputs path/image.png <<path/image-2.png>> (same order as --controlnet)

|

| 48 |

+

--output-path folder to save image(s) (auto-named to: prompt.seed.final.png)

|

| 49 |

+

--help
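
If you run many generations, building the command line from a small script can be easier than editing it by hand. The sketch below only assembles the argument list from the options listed above; swift_run_cmd is a made-up helper. The check that each ControlNet has exactly one input image is my assumption, based on the "same order" note.

```python
def swift_run_cmd(prompt, resource_path, output_path,
                  controlnets=(), controlnet_inputs=(), options=None):
    """Build the argument list for a StableDiffusionSample run."""
    if len(controlnets) != len(controlnet_inputs):
        # Assumption: one input image per ControlNet, in the same order.
        raise ValueError("need one input image per ControlNet, same order")
    cmd = ["swift", "run", "StableDiffusionSample", prompt]
    # options maps flag names without the leading "--" to values,
    # for example {"seed": 12, "scheduler": "dpmpp"}.
    for flag, value in (options or {}).items():
        cmd += [f"--{flag}", str(value)]
    cmd += ["--resource-path", resource_path]
    if controlnets:
        cmd += ["--controlnet", *controlnets]
        cmd += ["--controlnet-inputs", *controlnet_inputs]
    cmd += ["--output-path", output_path]
    return cmd


if __name__ == "__main__":
    cmd = swift_run_cmd(
        "a photo of a green yellow and red bird",
        "../models/SD15-cn",
        "../images",
        controlnets=["Canny"],
        controlnet_inputs=["../input/canny-bird.png"],
        options={"seed": 12, "step-count": 24, "scheduler": "dpmpp",
                 "compute-units": "cpuAndGPU"},
    )
    print(cmd)
```

Passing the list to subprocess.run(cmd, check=True) from the ml-stable-diffusion folder avoids shell quoting problems with the prompt.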

|

MISC/Directory Tree.jpg

ADDED

|