Update README.md


README.md

CHANGED

|

@@ -13,7 +13,7 @@ pipeline_tag: visual-question-answering

|

|

| 13 |

# paligemma-3b-ft-docvqa-896-lora

|

| 14 |

|

| 15 |

|

| 16 |

-

paligemma-3b-ft-docvqa-896-lora is a fine-tuned version of the [google/paligemma-3b-ft-docvqa-896](https://huggingface.co/google/paligemma-3b-ft-docvqa-896/edit/main/README.md) model, specifically trained on the [doc-vqa](https://huggingface.co/datasets/cmarkea/doc-vqa) dataset published by cmarkea. Optimized using the LoRA (Low-Rank Adaptation) method, this model was designed to enhance performance while reducing the complexity of fine-tuning.

|

| 17 |

|

| 18 |

During training, particular attention was given to linguistic balance, with a focus on French. The model was exposed to a predominantly French context, with a 70% likelihood of interacting with French questions/answers for a given image. It operates exclusively in bfloat16 precision, optimizing computational resources. The entire training process took 3 weeks on a single A100 40GB.

|

| 19 |

|

|

@@ -76,7 +76,7 @@ with torch.inference_mode():

|

|

| 76 |

|

| 77 |

### Results

|

| 78 |

|

| 79 |

-

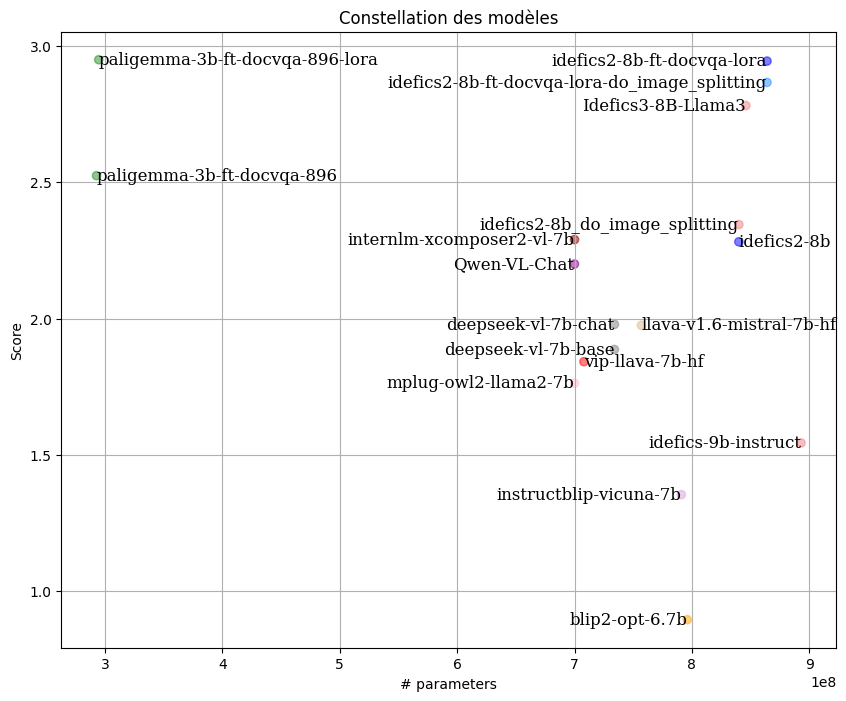

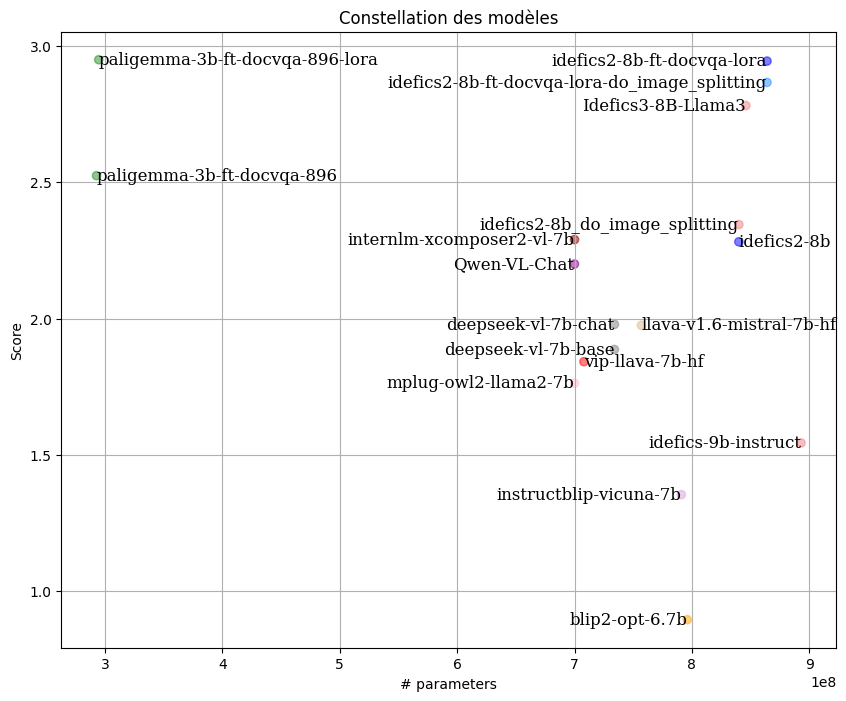

Following the LLM-as-Juries evaluation method, the following results were obtained using three judge models (GPT-4o, Gemini 1.5 Pro, and Claude 3.5 Sonnet). These models were evaluated based on a well-defined scoring rubric specifically designed for the VQA context, with clear criteria for each score to ensure the highest possible precision in meeting expectations.

|

| 80 |

|

| 81 |

|

| 82 |

|

|

|

|

| 13 |

# paligemma-3b-ft-docvqa-896-lora

|

| 14 |

|

| 15 |

|

| 16 |

+

**paligemma-3b-ft-docvqa-896-lora** is a fine-tuned version of the **[google/paligemma-3b-ft-docvqa-896](https://huggingface.co/google/paligemma-3b-ft-docvqa-896/edit/main/README.md)** model, specifically trained on the **[doc-vqa](https://huggingface.co/datasets/cmarkea/doc-vqa)** dataset published by cmarkea. Optimized using the **LoRA** (Low-Rank Adaptation) method, this model was designed to enhance performance while reducing the complexity of fine-tuning.

|

| 17 |

|

| 18 |

During training, particular attention was given to linguistic balance, with a focus on French. The model was exposed to a predominantly French context, with a 70% likelihood of interacting with French questions/answers for a given image. It operates exclusively in bfloat16 precision, optimizing computational resources. The entire training process took 3 weeks on a single A100 40GB.
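The 70% French exposure described above can be pictured as a per-sample language draw. The snippet below is only a minimal sketch of that idea, not the authors' training code: the function name `pick_language`, the `"fr"`/`"en"` labels, and the assumption that English is the other language are all illustrative.

```python
import random

# From the README: 70% chance of a French question/answer pair per image.
FRENCH_PROBABILITY = 0.7


def pick_language(rng: random.Random, french_probability: float = FRENCH_PROBABILITY) -> str:
    """Return "fr" with the given probability, otherwise "en" (assumed second language)."""
    return "fr" if rng.random() < french_probability else "en"


# Draw many samples to check that the empirical share is close to 70%.
rng = random.Random(0)
draws = [pick_language(rng) for _ in range(10_000)]
french_share = draws.count("fr") / len(draws)
print(f"share of French draws: {french_share:.3f}")
```

Drawing the language per sample, rather than fixing a 70/30 split of the dataset up front, means the same image can be seen with either language across epochs.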

|

| 19 |

|

|

|

|

| 76 |

|

| 77 |

### Results

|

| 78 |

|

| 79 |

+

Following the **LLM-as-Juries** evaluation method, the following results were obtained using three judge models (GPT-4o, Gemini 1.5 Pro, and Claude 3.5 Sonnet). These models were evaluated based on a well-defined scoring rubric specifically designed for the VQA context, with clear criteria for each score to ensure the highest possible precision in meeting expectations.
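One simple way to combine the verdicts of several judge models is to average their rubric scores for each answer. The README does not say how the three judges were aggregated, so the sketch below is an assumption made for illustration: the helper `jury_score`, the averaging rule, and the example scores are all hypothetical.

```python
from statistics import mean


def jury_score(scores_by_judge: dict[str, float]) -> float:
    """Average the rubric scores given by each judge model (assumed aggregation rule)."""
    if not scores_by_judge:
        raise ValueError("at least one judge score is required")
    return mean(scores_by_judge.values())


# Hypothetical rubric scores for a single answer; not taken from the README.
example_scores = {
    "GPT-4o": 4.0,
    "Gemini 1.5 Pro": 5.0,
    "Claude 3.5 Sonnet": 3.0,
}
print(jury_score(example_scores))
```

Other aggregation rules, such as a majority vote or a median, would fit the same interface. Averaging is used here only because it is the simplest to show.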

|

| 80 |

|

| 81 |

|

| 82 |

|