Create README.md

README.md

ADDED

@@ -0,0 +1,85 @@

---
license: mit
arxiv: 2205.12424
datasets:
- VulDeePecker
pipeline_tag: fill-mask
tags:
- defect detection
- code
---

# VulBERTa Pretrained

|

| 13 |

+

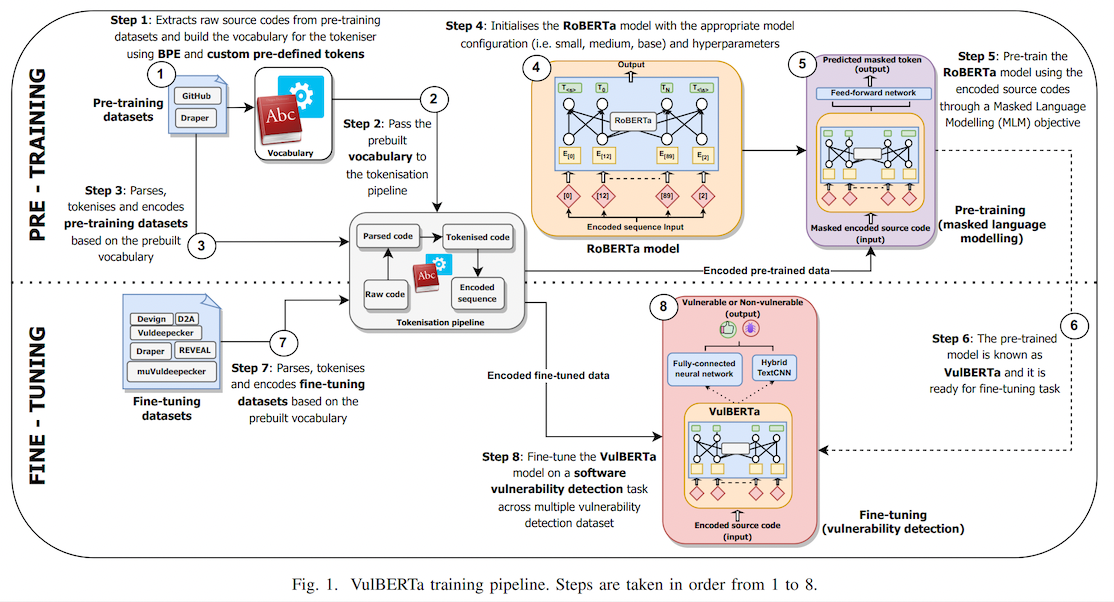

## VulBERTa: Simplified Source Code Pre-Training for Vulnerability Detection

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

## Overview

This model is an unofficial Hugging Face version of "[VulBERTa](https://github.com/ICL-ml4csec/VulBERTa/tree/main)" with only the masked language modeling head used for pretraining. I simplified tokenization by folding the cleaning (comment removal) step into the tokenizer, and added that tokenizer to this model repo as an AutoClass, so anyone can load the model without manually pulling any repos (with the caveat that `trust_remote_code` is required).
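To illustrate what a comment-removal cleaning step can look like, here is a minimal sketch. It is not the code shipped in this repo's tokenizer: the function name `strip_c_comments` is hypothetical, and the regex approach is an assumption that ignores edge cases such as line comments continued with a trailing backslash.

```python
import re

# Alternatives are tried in order: literals first (kept), then comments (dropped).
_C_TOKEN = re.compile(
    r'''
    "(?:\\.|[^"\\])*"      # double-quoted string literal
    | '(?:\\.|[^'\\])*'    # character literal
    | //[^\n]*             # line comment
    | /\*.*?\*/            # block comment (may span lines)
    ''',
    re.DOTALL | re.VERBOSE,
)


def strip_c_comments(source: str) -> str:
    """Remove C/C++ comments while leaving string and char literals intact."""

    def _replace(match: re.Match) -> str:
        text = match.group(0)
        # Comments start with '/', literals start with a quote.
        # A single space is emitted so neighbouring tokens do not merge.
        return " " if text.startswith("/") else text

    return _C_TOKEN.sub(_replace, source)


example = 'int x = 1; // set x\nchar *s = "// not a comment"; /* block\ncomment */ return x;'
print(strip_c_comments(example))
```

Matching literals before comments is what keeps `//` inside a string from being treated as a comment.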

> This paper presents VulBERTa, a deep learning approach to detect security vulnerabilities in source code. Our approach pre-trains a RoBERTa model with a custom tokenisation pipeline on real-world code from open-source C/C++ projects. The model learns a deep knowledge representation of the code syntax and semantics, which we leverage to train vulnerability detection classifiers. We evaluate our approach on binary and multi-class vulnerability detection tasks across several datasets (Vuldeepecker, Draper, REVEAL and muVuldeepecker) and benchmarks (CodeXGLUE and D2A). The evaluation results show that VulBERTa achieves state-of-the-art performance and outperforms existing approaches across different datasets, despite its conceptual simplicity, and limited cost in terms of size of training data and number of model parameters.

## Usage

**You must install libclang for tokenization.**

```bash
pip install libclang
```

Note that due to the custom tokenizer, you must pass `trust_remote_code=True` when instantiating the model.

Example:

```python
from transformers import pipeline
pipe = pipeline("fill-mask", model="claudios/VulBERTa-mlm", trust_remote_code=True, return_all_scores=True)
```
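The following is a hedged sketch of how the pipeline's predictions might be consumed. The helper names `load_pipe` and `rank_fill_ins` are hypothetical. The sketch assumes the standard fill-mask output for an input with a single mask (a list of dicts containing `token_str` and `score`), and it omits `return_all_scores` for simplicity.

```python
from typing import Callable, List, Tuple


def load_pipe():
    """Load the fill-mask pipeline (downloads the model; requires libclang)."""
    from transformers import pipeline

    return pipeline(
        "fill-mask",
        model="claudios/VulBERTa-mlm",
        trust_remote_code=True,  # needed for the custom tokenizer
    )


def rank_fill_ins(pipe: Callable, code: str, top_n: int = 3) -> List[Tuple[str, float]]:
    """Return the top_n (token, score) candidates for the single mask in `code`."""
    predictions = pipe(code)
    # Sort explicitly rather than relying on the order the pipeline returns.
    ranked = sorted(predictions, key=lambda p: p["score"], reverse=True)
    return [(p["token_str"], float(p["score"])) for p in ranked[:top_n]]


# Possible usage (not executed here because it downloads the model):
# pipe = load_pipe()
# code = f"int main() {{ return {pipe.tokenizer.mask_token}; }}"
# print(rank_fill_ins(pipe, code))
```

Reading the mask token from `pipe.tokenizer.mask_token` avoids hard-coding a mask string that the custom tokenizer may not share.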

***

## Data

We provide all data required by VulBERTa.
This includes:
- Tokenizer training data
- Pre-training data
- Fine-tuning data

Please refer to the [data](https://github.com/ICL-ml4csec/VulBERTa/tree/main/data "data") directory for further instructions and details.

## Models

We provide all models pre-trained and fine-tuned by VulBERTa.
This includes:
- Trained tokenisers
- Pre-trained VulBERTa model (core representation knowledge)
- Fine-tuned VulBERTa-MLP and VulBERTa-CNN models

Please refer to the [models](https://github.com/ICL-ml4csec/VulBERTa/tree/main/models "models") directory for further instructions and details.

## How to use

In our project, we use JupyterLab notebooks to run experiments.
Therefore, we separate each task into its own notebook:

- [Pretraining_VulBERTa.ipynb](https://github.com/ICL-ml4csec/VulBERTa/blob/main/Pretraining_VulBERTa.ipynb "Pretraining_VulBERTa.ipynb") - Pre-trains the core VulBERTa knowledge representation model using the DrapGH dataset.
- [Finetuning_VulBERTa-MLP.ipynb](https://github.com/ICL-ml4csec/VulBERTa/blob/main/Finetuning_VulBERTa-MLP.ipynb "Finetuning_VulBERTa-MLP.ipynb") - Fine-tunes the VulBERTa-MLP model on a specific vulnerability detection dataset.
- [Evaluation_VulBERTa-MLP.ipynb](https://github.com/ICL-ml4csec/VulBERTa/blob/main/Evaluation_VulBERTa-MLP.ipynb "Evaluation_VulBERTa-MLP.ipynb") - Evaluates the fine-tuned VulBERTa-MLP models on the testing set of a specific vulnerability detection dataset.
- [Finetuning+evaluation_VulBERTa-CNN.ipynb](https://github.com/ICL-ml4csec/VulBERTa/blob/main/Finetuning%2Bevaluation_VulBERTa-CNN.ipynb "Finetuning+evaluation_VulBERTa-CNN.ipynb") - Fine-tunes VulBERTa-CNN models and evaluates them on the testing set of a specific vulnerability detection dataset.

## Citation

Accepted as a conference paper (oral presentation) at the International Joint Conference on Neural Networks (IJCNN) 2022.

Link to paper: https://ieeexplore.ieee.org/document/9892280

```bibtex
@INPROCEEDINGS{hanif2022vulberta,
  author={Hanif, Hazim and Maffeis, Sergio},
  booktitle={2022 International Joint Conference on Neural Networks (IJCNN)},
  title={VulBERTa: Simplified Source Code Pre-Training for Vulnerability Detection},
  year={2022},
  volume={},
  number={},
  pages={1-8},
  doi={10.1109/IJCNN55064.2022.9892280}
}
```