Upload folder using huggingface_hub

Browse files- README.md +40 -4

- config.json +28 -0

- model.safetensors +3 -0

- special_tokens_map.json +3 -0

- tokenization_vulberta.py +51 -0

- tokenizer.json +0 -0

- tokenizer_config.json +26 -0

README.md

CHANGED

|

@@ -1,19 +1,52 @@

|

|

| 1 |

---

|

| 2 |

license: mit

|

| 3 |

arxiv: 2205.12424

|

| 4 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 5 |

tags:

|

|

|

|

| 6 |

- defect detection

|

| 7 |

- code

|

| 8 |

---

|

| 9 |

|

| 10 |

-

# VulBERTa

|

| 11 |

## VulBERTa: Simplified Source Code Pre-Training for Vulnerability Detection

|

| 12 |

|

| 13 |

|

| 14 |

|

| 15 |

## Overview

|

| 16 |

-

This model is the unofficial HuggingFace version of "[VulBERTa](https://github.com/ICL-ml4csec/VulBERTa/tree/main)" with

|

| 17 |

|

| 18 |

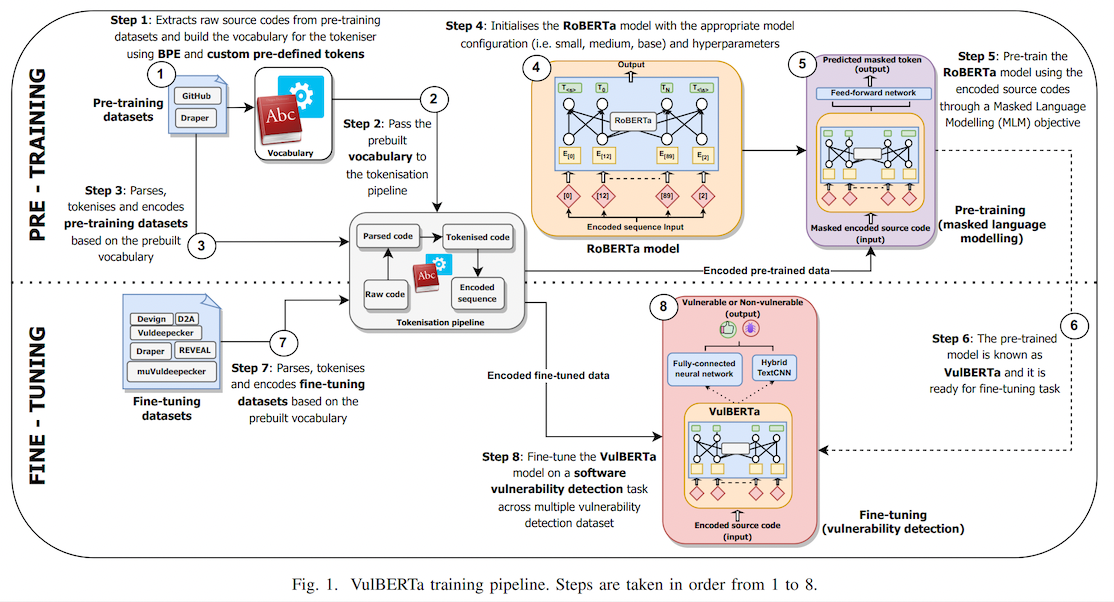

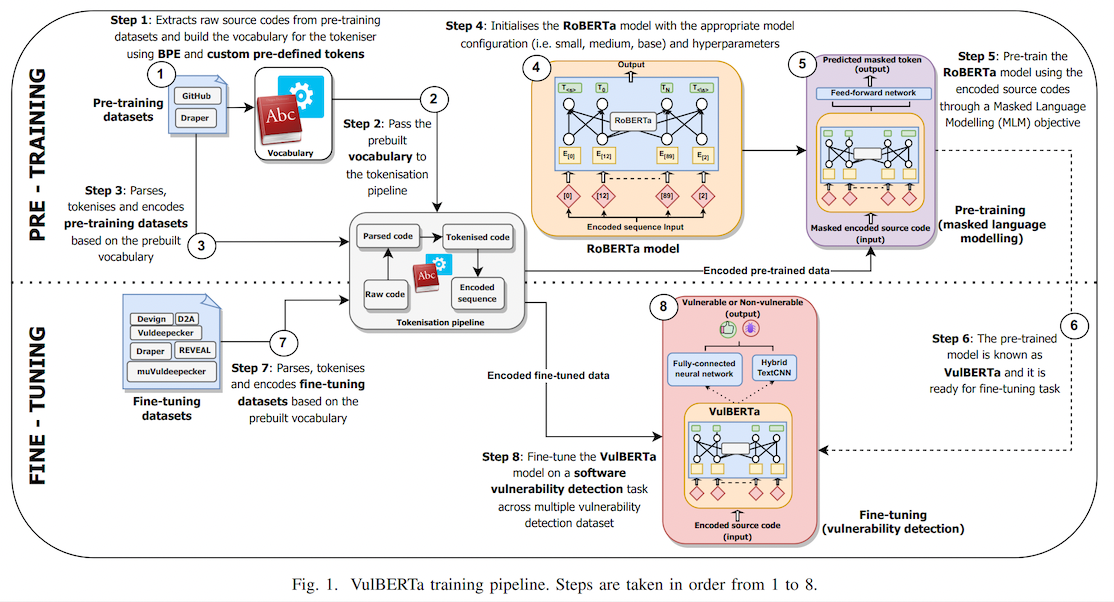

> This paper presents presents VulBERTa, a deep learning approach to detect security vulnerabilities in source code. Our approach pre-trains a RoBERTa model with a custom tokenisation pipeline on real-world code from open-source C/C++ projects. The model learns a deep knowledge representation of the code syntax and semantics, which we leverage to train vulnerability detection classifiers. We evaluate our approach on binary and multi-class vulnerability detection tasks across several datasets (Vuldeepecker, Draper, REVEAL and muVuldeepecker) and benchmarks (CodeXGLUE and D2A). The evaluation results show that VulBERTa achieves state-of-the-art performance and outperforms existing approaches across different datasets, despite its conceptual simplicity, and limited cost in terms of size of training data and number of model parameters.

|

| 19 |

|

|

@@ -28,7 +61,10 @@ Note that due to the custom tokenizer, you must pass `trust_remote_code=True` wh

|

|

| 28 |

Example:

|

| 29 |

```

|

| 30 |

from transformers import pipeline

|

| 31 |

-

pipe = pipeline("

|

|

|

|

|

|

|

|

|

|

| 32 |

```

|

| 33 |

|

| 34 |

***

|

|

|

|

| 1 |

---

|

| 2 |

license: mit

|

| 3 |

arxiv: 2205.12424

|

| 4 |

+

datasets:

|

| 5 |

+

- code_x_glue_cc_defect_detection

|

| 6 |

+

metrics:

|

| 7 |

+

- accuracy

|

| 8 |

+

- precision

|

| 9 |

+

- recall

|

| 10 |

+

- f1

|

| 11 |

+

- roc_auc

|

| 12 |

+

model-index:

|

| 13 |

+

- name: VulBERTa MLP

|

| 14 |

+

results:

|

| 15 |

+

- task:

|

| 16 |

+

type: defect-detection

|

| 17 |

+

dataset:

|

| 18 |

+

name: codexglue-devign

|

| 19 |

+

type: codexglue-devign

|

| 20 |

+

metrics:

|

| 21 |

+

- name: Accuracy

|

| 22 |

+

type: Accuracy

|

| 23 |

+

value: 64.71

|

| 24 |

+

- name: Precision

|

| 25 |

+

type: Precision

|

| 26 |

+

value: 64.80

|

| 27 |

+

- name: Recall

|

| 28 |

+

type: Recall

|

| 29 |

+

value: 50.76

|

| 30 |

+

- name: F1

|

| 31 |

+

type: F1

|

| 32 |

+

value: 56.93

|

| 33 |

+

- name: ROC-AUC

|

| 34 |

+

type: ROC-AUC

|

| 35 |

+

value: 71.02

|

| 36 |

+

pipeline_tag: text-classification

|

| 37 |

tags:

|

| 38 |

+

- devign

|

| 39 |

- defect detection

|

| 40 |

- code

|

| 41 |

---

|

| 42 |

|

| 43 |

+

# VulBERTa MLP Devign

|

| 44 |

## VulBERTa: Simplified Source Code Pre-Training for Vulnerability Detection

|

| 45 |

|

| 46 |

|

| 47 |

|

| 48 |

## Overview

|

| 49 |

+

This model is the unofficial HuggingFace version of "[VulBERTa](https://github.com/ICL-ml4csec/VulBERTa/tree/main)" with an MLP classification head, trained on CodeXGlue Devign (C code), by Hazim Hanif & Sergio Maffeis (Imperial College London). I simplified the tokenization process by adding the cleaning (comment removal) step to the tokenizer and added the simplified tokenizer to this model repo as an AutoClass.

|

| 50 |

|

| 51 |

> This paper presents presents VulBERTa, a deep learning approach to detect security vulnerabilities in source code. Our approach pre-trains a RoBERTa model with a custom tokenisation pipeline on real-world code from open-source C/C++ projects. The model learns a deep knowledge representation of the code syntax and semantics, which we leverage to train vulnerability detection classifiers. We evaluate our approach on binary and multi-class vulnerability detection tasks across several datasets (Vuldeepecker, Draper, REVEAL and muVuldeepecker) and benchmarks (CodeXGLUE and D2A). The evaluation results show that VulBERTa achieves state-of-the-art performance and outperforms existing approaches across different datasets, despite its conceptual simplicity, and limited cost in terms of size of training data and number of model parameters.

|

| 52 |

|

|

|

|

| 61 |

Example:

|

| 62 |

```

|

| 63 |

from transformers import pipeline

|

| 64 |

+

pipe = pipeline("text-classification", model="claudios/VulBERTa-MLP-Devign", trust_remote_code=True, return_all_scores=True)

|

| 65 |

+

pipe("static void filter_mirror_setup(NetFilterState *nf, Error **errp)\n{\n MirrorState *s = FILTER_MIRROR(nf);\n Chardev *chr;\n chr = qemu_chr_find(s->outdev);\n if (chr == NULL) {\n error_set(errp, ERROR_CLASS_DEVICE_NOT_FOUND,\n \"Device '%s' not found\", s->outdev);\n qemu_chr_fe_init(&s->chr_out, chr, errp);")

|

| 66 |

+

>> [[{'label': 'LABEL_0', 'score': 0.014685827307403088},

|

| 67 |

+

{'label': 'LABEL_1', 'score': 0.985314130783081}]]

|

| 68 |

```

|

| 69 |

|

| 70 |

***

|

config.json

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_name_or_path": "VulBERTa",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"RobertaForMaskedLM"

|

| 5 |

+

],

|

| 6 |

+

"attention_probs_dropout_prob": 0.1,

|

| 7 |

+

"bos_token_id": 0,

|

| 8 |

+

"classifier_dropout": null,

|

| 9 |

+

"eos_token_id": 2,

|

| 10 |

+

"gradient_checkpointing": false,

|

| 11 |

+

"hidden_act": "gelu",

|

| 12 |

+

"hidden_dropout_prob": 0.1,

|

| 13 |

+

"hidden_size": 768,

|

| 14 |

+

"initializer_range": 0.02,

|

| 15 |

+

"intermediate_size": 3072,

|

| 16 |

+

"layer_norm_eps": 1e-12,

|

| 17 |

+

"max_position_embeddings": 1026,

|

| 18 |

+

"model_type": "roberta",

|

| 19 |

+

"num_attention_heads": 12,

|

| 20 |

+

"num_hidden_layers": 12,

|

| 21 |

+

"pad_token_id": 1,

|

| 22 |

+

"position_embedding_type": "absolute",

|

| 23 |

+

"torch_dtype": "float32",

|

| 24 |

+

"transformers_version": "4.40.1",

|

| 25 |

+

"type_vocab_size": 1,

|

| 26 |

+

"use_cache": true,

|

| 27 |

+

"vocab_size": 50000

|

| 28 |

+

}

|

model.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:a9d0d35e89d5f4f97e647744a76852a211825e4f5e0db2d305db8fe08e219264

|

| 3 |

+

size 499363688

|

special_tokens_map.json

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"pad_token": "<pad>"

|

| 3 |

+

}

|

tokenization_vulberta.py

ADDED

|

@@ -0,0 +1,51 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import List

|

| 2 |

+

|

| 3 |

+

from tokenizers import NormalizedString, PreTokenizedString

|

| 4 |

+

from tokenizers.pre_tokenizers import PreTokenizer

|

| 5 |

+

from transformers import PreTrainedTokenizerFast

|

| 6 |

+

|

| 7 |

+

try:

|

| 8 |

+

from clang import cindex

|

| 9 |

+

except ModuleNotFoundError as e:

|

| 10 |

+

raise ModuleNotFoundError(

|

| 11 |

+

"VulBERTa Clang tokenizer requires `libclang`. Please install it via `pip install libclang`.",

|

| 12 |

+

) from e

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

class ClangPreTokenizer:

|

| 16 |

+

cidx = cindex.Index.create()

|

| 17 |

+

|

| 18 |

+

def clang_split(

|

| 19 |

+

self,

|

| 20 |

+

i: int,

|

| 21 |

+

normalized_string: NormalizedString,

|

| 22 |

+

) -> List[NormalizedString]:

|

| 23 |

+

tok = []

|

| 24 |

+

tu = self.cidx.parse(

|

| 25 |

+

"tmp.c",

|

| 26 |

+

args=[""],

|

| 27 |

+

unsaved_files=[("tmp.c", str(normalized_string.original))],

|

| 28 |

+

options=0,

|

| 29 |

+

)

|

| 30 |

+

for t in tu.get_tokens(extent=tu.cursor.extent):

|

| 31 |

+

spelling = t.spelling.strip()

|

| 32 |

+

if spelling == "":

|

| 33 |

+

continue

|

| 34 |

+

tok.append(NormalizedString(spelling))

|

| 35 |

+

return tok

|

| 36 |

+

|

| 37 |

+

def pre_tokenize(self, pretok: PreTokenizedString):

|

| 38 |

+

pretok.split(self.clang_split)

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

class VulBERTaTokenizer(PreTrainedTokenizerFast):

|

| 42 |

+

def __init__(

|

| 43 |

+

self,

|

| 44 |

+

*args,

|

| 45 |

+

**kwargs,

|

| 46 |

+

):

|

| 47 |

+

super().__init__(

|

| 48 |

+

*args,

|

| 49 |

+

**kwargs,

|

| 50 |

+

)

|

| 51 |

+

self._tokenizer.pre_tokenizer = PreTokenizer.custom(ClangPreTokenizer())

|

tokenizer.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

tokenizer_config.json

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"added_tokens_decoder": {

|

| 3 |

+

"1": {

|

| 4 |

+

"content": "<pad>",

|

| 5 |

+

"lstrip": false,

|

| 6 |

+

"normalized": false,

|

| 7 |

+

"rstrip": false,

|

| 8 |

+

"single_word": false,

|

| 9 |

+

"special": true

|

| 10 |

+

}

|

| 11 |

+

},

|

| 12 |

+

"clean_up_tokenization_spaces": true,

|

| 13 |

+

"max_length": 1024,

|

| 14 |

+

"model_max_length": 1024,

|

| 15 |

+

"pad_to_multiple_of": null,

|

| 16 |

+

"pad_token": "<pad>",

|

| 17 |

+

"pad_token_type_id": 0,

|

| 18 |

+

"padding_side": "right",

|

| 19 |

+

"stride": 0,

|

| 20 |

+

"tokenizer_class": "VulBERTaTokenizer",

|

| 21 |

+

"auto_map": {

|

| 22 |

+

"AutoTokenizer": ["tokenization_vulberta.VulBERTaTokenizer", null]

|

| 23 |

+

},

|

| 24 |

+

"truncation_side": "right",

|

| 25 |

+

"truncation_strategy": "longest_first"

|

| 26 |

+

}

|