Update README.md

README.md

CHANGED

|

@@ -41,17 +41,17 @@ tags:

|

|

| 41 |

---

|

| 42 |

|

| 43 |

# VulBERTa MLP Devign

|

| 44 |

-

## VulBERTa: Simplified Source Code Pre-Training for Vulnerability Detection

|

| 45 |

|

| 46 |

|

| 47 |

|

| 48 |

## Overview

|

| 49 |

-

This model is the unofficial HuggingFace version of "VulBERTa" with an MLP classification head, trained on CodeXGlue Devign, by Hazim Hanif & Sergio Maffeis (Imperial College London).

|

| 50 |

|

| 51 |

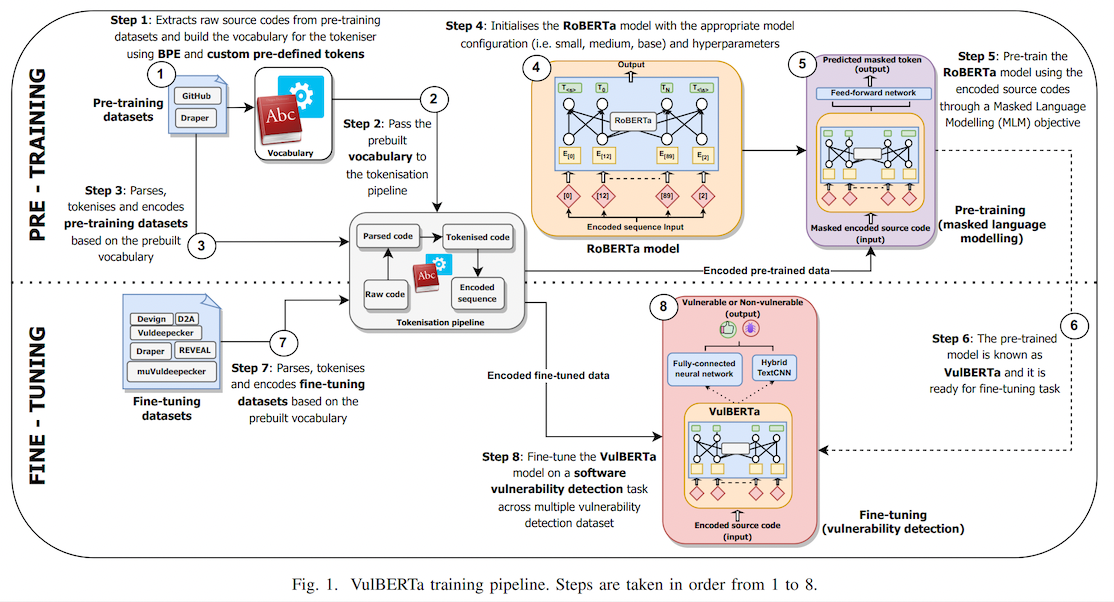

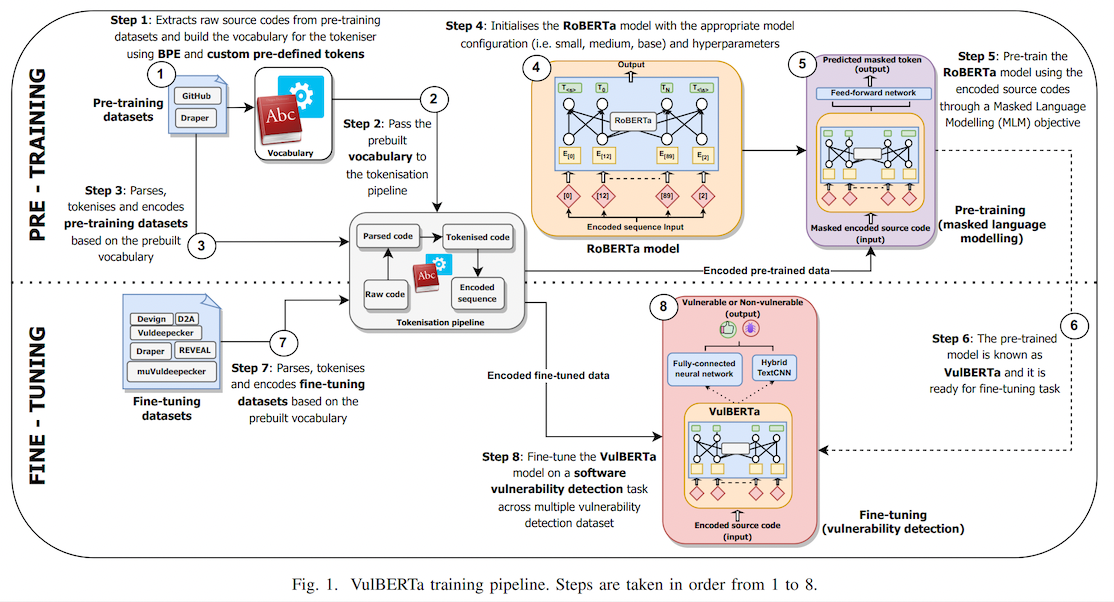

> This paper presents VulBERTa, a deep learning approach to detect security vulnerabilities in source code. Our approach pre-trains a RoBERTa model with a custom tokenisation pipeline on real-world code from open-source C/C++ projects. The model learns a deep knowledge representation of the code syntax and semantics, which we leverage to train vulnerability detection classifiers. We evaluate our approach on binary and multi-class vulnerability detection tasks across several datasets (Vuldeepecker, Draper, REVEAL and muVuldeepecker) and benchmarks (CodeXGLUE and D2A). The evaluation results show that VulBERTa achieves state-of-the-art performance and outperforms existing approaches across different datasets, despite its conceptual simplicity, and limited cost in terms of size of training data and number of model parameters.

|

| 52 |

|

| 53 |

## Usage

|

| 54 |

-

|

| 55 |

|

| 56 |

```bash

|

| 57 |

pip install libclang

|

|

@@ -67,6 +67,8 @@ pipe("static void filter_mirror_setup(NetFilterState *nf, Error **errp)\n{\n

|

|

| 67 |

{'label': 'LABEL_1', 'score': 0.985314130783081}]]

|

| 68 |

```

|

| 69 |

|

| 70 |

## Data

|

| 71 |

We provide all data required by VulBERTa.

|

| 72 |

This includes:

|

|

@@ -85,18 +87,6 @@ This includes:

|

|

| 85 |

|

| 86 |

Please refer to the [models](https://github.com/ICL-ml4csec/VulBERTa/tree/main/models "models") directory for further instructions and details.

|

| 87 |

|

| 88 |

-

## Pre-requisites and requirements

|

| 89 |

-

|

| 90 |

-

In general, we used the following package versions when running the experiments:

|

| 91 |

-

|

| 92 |

-

- Python 3.8.5

|

| 93 |

-

- Pytorch 1.7.0

|

| 94 |

-

- Transformers 4.4.1

|

| 95 |

-

- Tokenizers 0.10.1

|

| 96 |

-

- Libclang (any version > 12.0 should work. https://pypi.org/project/libclang/)

|

| 97 |

-

|

| 98 |

-

For an exhaustive list of all the packages, please refer to the [requirements.txt](https://github.com/ICL-ml4csec/VulBERTa/blob/main/requirements.txt "requirements.txt") file.

|

| 99 |

-

|

| 100 |

## How to use

|

| 101 |

|

| 102 |

In our project, we use JupyterLab notebooks to run experiments.

|

|

@@ -107,9 +97,6 @@ Therefore, we separate each task into different notebook:

|

|

| 107 |

- [Evaluation_VulBERTa-MLP.ipynb](https://github.com/ICL-ml4csec/VulBERTa/blob/main/Evaluation_VulBERTa-MLP.ipynb "Evaluation_VulBERTa-MLP.ipynb") - Evaluates the fine-tuned VulBERTa-MLP models on the testing set of a specific vulnerability detection dataset.

|

| 108 |

- [Finetuning+evaluation_VulBERTa-CNN](https://github.com/ICL-ml4csec/VulBERTa/blob/main/Finetuning%2Bevaluation_VulBERTa-CNN.ipynb "Finetuning+evaluation_VulBERTa-CNN.ipynb") - Fine-tunes VulBERTa-CNN models and evaluates them on a testing set of a specific vulnerability detection dataset.

|

| 109 |

|

| 110 |

-

## Running VulBERTa-CNN or VulBERTa-MLP on arbitrary codes

|

| 111 |

-

|

| 112 |

-

Coming soon!

|

| 113 |

|

| 114 |

## Citation

|

| 115 |

|

|

|

|

| 41 |

---

|

| 42 |

|

| 43 |

# VulBERTa MLP Devign

|

| 44 |

+

## [VulBERTa: Simplified Source Code Pre-Training for Vulnerability Detection](https://github.com/ICL-ml4csec/VulBERTa/tree/main)

|

| 45 |

|

| 46 |

|

| 47 |

|

| 48 |

## Overview

|

| 49 |

+

This model is the unofficial HuggingFace version of "[VulBERTa](https://github.com/ICL-ml4csec/VulBERTa/tree/main)" with an MLP classification head, trained on CodeXGlue Devign (C code), by Hazim Hanif & Sergio Maffeis (Imperial College London). I simplified the tokenization process by adding the cleaning (comment removal) step to the tokenizer and added the simplified tokenizer to this model repo as an AutoClass.
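The cleaning (comment removal) step mentioned in the line above can be illustrated with a small standalone sketch. This is not the tokenizer shipped in the model repo; the function name `strip_c_comments` and the regex are assumptions chosen for illustration, and the sketch ignores edge cases such as backslash-continued `//` comments.

```python
import re

# Hypothetical helper, not the repo's tokenizer code.
# String and char literals are matched first so that comment-like
# text inside them is left untouched.
_C_TOKEN = re.compile(
    r'"(?:\\.|[^"\\])*"'      # double-quoted string literal
    r"|'(?:\\.|[^'\\])*'"     # single-quoted char literal
    r"|/\*.*?\*/"             # block comment, possibly multi-line
    r"|//[^\n]*",             # line comment up to end of line
    re.DOTALL,
)


def strip_c_comments(code: str) -> str:
    """Remove C/C++ comments from `code`, keeping literals intact."""

    def _replace(match: re.Match) -> str:
        token = match.group(0)
        # Only comments start with '/'; literals start with a quote.
        # A comment becomes one space so neighbouring tokens do not merge.
        return " " if token.startswith("/") else token

    return _C_TOKEN.sub(_replace, code)


if __name__ == "__main__":
    sample = 'int x = 1; /* init */ char *s = "// kept"; // dropped\n'
    print(strip_c_comments(sample))
```

Replacing each comment with a single space rather than an empty string is a design choice in this sketch: it keeps `a/**/b` from collapsing into a single identifier.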

|

| 50 |

|

| 51 |

> This paper presents VulBERTa, a deep learning approach to detect security vulnerabilities in source code. Our approach pre-trains a RoBERTa model with a custom tokenisation pipeline on real-world code from open-source C/C++ projects. The model learns a deep knowledge representation of the code syntax and semantics, which we leverage to train vulnerability detection classifiers. We evaluate our approach on binary and multi-class vulnerability detection tasks across several datasets (Vuldeepecker, Draper, REVEAL and muVuldeepecker) and benchmarks (CodeXGLUE and D2A). The evaluation results show that VulBERTa achieves state-of-the-art performance and outperforms existing approaches across different datasets, despite its conceptual simplicity, and limited cost in terms of size of training data and number of model parameters.

|

| 52 |

|

| 53 |

## Usage

|

| 54 |

+

**You must install libclang for tokenization.**

|

| 55 |

|

| 56 |

```bash

|

| 57 |

pip install libclang

|

|

|

|

| 67 |

{'label': 'LABEL_1', 'score': 0.985314130783081}]]

|

| 68 |

```
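The output shown above is a nested list: one inner list per input, holding one `{'label', 'score'}` dict per class. A minimal sketch of reading it follows. The helper name `top_prediction` is hypothetical, and the `LABEL_0` score in the example is a made-up placeholder; only the `LABEL_1` entry comes from the README. Whether `LABEL_1` denotes the vulnerable class is an assumption the README does not confirm.

```python
from typing import Dict, List, Tuple, Union

ClassScore = Dict[str, Union[str, float]]


def top_prediction(pipeline_output: List[List[ClassScore]]) -> Tuple[str, float]:
    """Return (label, score) of the highest-scoring class for the first input."""
    scores = pipeline_output[0]  # class scores for the first (only) input
    best = max(scores, key=lambda item: item["score"])
    return best["label"], best["score"]


# Hypothetical example: the LABEL_0 score is a placeholder, not a real result.
example = [[
    {"label": "LABEL_0", "score": 0.0147},
    {"label": "LABEL_1", "score": 0.985314130783081},
]]

if __name__ == "__main__":
    label, score = top_prediction(example)
    print(f"{label} ({score:.3f})")
```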

|

| 69 |

|

| 70 |

+

***

|

| 71 |

+

|

| 72 |

## Data

|

| 73 |

We provide all data required by VulBERTa.

|

| 74 |

This includes:

|

|

|

|

| 87 |

|

| 88 |

Please refer to the [models](https://github.com/ICL-ml4csec/VulBERTa/tree/main/models "models") directory for further instructions and details.

|

| 89 |

|

| 90 |

## How to use

|

| 91 |

|

| 92 |

In our project, we use JupyterLab notebooks to run experiments.

|

|

|

|

| 97 |

- [Evaluation_VulBERTa-MLP.ipynb](https://github.com/ICL-ml4csec/VulBERTa/blob/main/Evaluation_VulBERTa-MLP.ipynb "Evaluation_VulBERTa-MLP.ipynb") - Evaluates the fine-tuned VulBERTa-MLP models on the testing set of a specific vulnerability detection dataset.

|

| 98 |

- [Finetuning+evaluation_VulBERTa-CNN](https://github.com/ICL-ml4csec/VulBERTa/blob/main/Finetuning%2Bevaluation_VulBERTa-CNN.ipynb "Finetuning+evaluation_VulBERTa-CNN.ipynb") - Fine-tunes VulBERTa-CNN models and evaluates them on a testing set of a specific vulnerability detection dataset.

|

| 99 |

|

| 100 |

|

| 101 |

## Citation

|

| 102 |

|