Training in progress, step 500

- .ipynb_checkpoints/lora_orpo-checkpoint.yaml +3 -3

- .ipynb_checkpoints/tokenizer_config-checkpoint.json +45 -0

- .ipynb_checkpoints/training_loss-checkpoint.png +0 -0

- .ipynb_checkpoints/training_rewards_accuracies-checkpoint.png +0 -0

- .ipynb_checkpoints/training_sft_loss-checkpoint.png +0 -0

- adapter_config.json +3 -3

- adapter_model.safetensors +1 -1

- lora_orpo.yaml +3 -3

- trainer_log.jsonl +51 -17

- training_args.bin +1 -1

.ipynb_checkpoints/lora_orpo-checkpoint.yaml

CHANGED

|

@@ -12,7 +12,7 @@ dataset: dpo_mix_en

|

|

| 12 |

dataset_dir: data

|

| 13 |

template: mistral

|

| 14 |

cutoff_len: 1024

|

| 15 |

-

max_samples: 1000

|

| 16 |

overwrite_cache: true

|

| 17 |

preprocessing_num_workers: 16

|

| 18 |

|

|

@@ -34,10 +34,10 @@ learning_rate: 0.000005

|

|

| 34 |

num_train_epochs: 3.0

|

| 35 |

lr_scheduler_type: cosine

|

| 36 |

warmup_steps: 0.1

|

| 37 |

-

|

| 38 |

|

| 39 |

### eval

|

| 40 |

val_size: 0.1

|

| 41 |

-

per_device_eval_batch_size:

|

| 42 |

evaluation_strategy: steps

|

| 43 |

eval_steps: 500

|

|

|

|

| 12 |

dataset_dir: data

|

| 13 |

template: mistral

|

| 14 |

cutoff_len: 1024

|

| 15 |

+

# max_samples: 1000

|

| 16 |

overwrite_cache: true

|

| 17 |

preprocessing_num_workers: 16

|

| 18 |

|

|

|

|

| 34 |

num_train_epochs: 3.0

|

| 35 |

lr_scheduler_type: cosine

|

| 36 |

warmup_steps: 0.1

|

| 37 |

+

bf16: true

|

| 38 |

|

| 39 |

### eval

|

| 40 |

val_size: 0.1

|

| 41 |

+

per_device_eval_batch_size: 2

|

| 42 |

evaluation_strategy: steps

|

| 43 |

eval_steps: 500

|

.ipynb_checkpoints/tokenizer_config-checkpoint.json

ADDED

|

@@ -0,0 +1,45 @@

|


| 1 |

+

{

|

| 2 |

+

"add_bos_token": true,

|

| 3 |

+

"add_eos_token": false,

|

| 4 |

+

"added_tokens_decoder": {

|

| 5 |

+

"0": {

|

| 6 |

+

"content": "<unk>",

|

| 7 |

+

"lstrip": false,

|

| 8 |

+

"normalized": false,

|

| 9 |

+

"rstrip": false,

|

| 10 |

+

"single_word": false,

|

| 11 |

+

"special": true

|

| 12 |

+

},

|

| 13 |

+

"1": {

|

| 14 |

+

"content": "<s>",

|

| 15 |

+

"lstrip": false,

|

| 16 |

+

"normalized": false,

|

| 17 |

+

"rstrip": false,

|

| 18 |

+

"single_word": false,

|

| 19 |

+

"special": true

|

| 20 |

+

},

|

| 21 |

+

"2": {

|

| 22 |

+

"content": "</s>",

|

| 23 |

+

"lstrip": false,

|

| 24 |

+

"normalized": false,

|

| 25 |

+

"rstrip": false,

|

| 26 |

+

"single_word": false,

|

| 27 |

+

"special": true

|

| 28 |

+

}

|

| 29 |

+

},

|

| 30 |

+

"additional_special_tokens": [],

|

| 31 |

+

"bos_token": "<s>",

|

| 32 |

+

"chat_template": "{% if messages[0]['role'] == 'system' %}{% set system_message = messages[0]['content'] %}{% endif %}{{ '<s>' + system_message }}{% for message in messages %}{% set content = message['content'] %}{% if message['role'] == 'user' %}{{ ' [INST] ' + content + ' [/INST]' }}{% elif message['role'] == 'assistant' %}{{ content + '</s>' }}{% endif %}{% endfor %}",

|

| 33 |

+

"clean_up_tokenization_spaces": false,

|

| 34 |

+

"eos_token": "</s>",

|

| 35 |

+

"legacy": true,

|

| 36 |

+

"model_max_length": 1000000000000000019884624838656,

|

| 37 |

+

"pad_token": "</s>",

|

| 38 |

+

"padding_side": "right",

|

| 39 |

+

"sp_model_kwargs": {},

|

| 40 |

+

"spaces_between_special_tokens": false,

|

| 41 |

+

"split_special_tokens": false,

|

| 42 |

+

"tokenizer_class": "LlamaTokenizer",

|

| 43 |

+

"unk_token": "<unk>",

|

| 44 |

+

"use_default_system_prompt": false

|

| 45 |

+

}

|
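The `chat_template` added above is a Jinja template. As a rough illustration of what it produces, the sketch below mirrors its logic in plain Python. The function name `render_chat` is hypothetical, and treating a missing system message as an empty string is an assumption of this sketch, not something the template itself specifies.

```python
def render_chat(messages):
    """Approximate the chat_template above in plain Python (illustrative only)."""
    # The template reads system_message only from the first message.
    # Assumption: with no system message, fall back to an empty string.
    if messages and messages[0]["role"] == "system":
        system_message = messages[0]["content"]
    else:
        system_message = ""

    out = "<s>" + system_message
    for message in messages:
        content = message["content"]
        if message["role"] == "user":
            # User turns are wrapped in [INST] ... [/INST] with surrounding spaces.
            out += " [INST] " + content + " [/INST]"
        elif message["role"] == "assistant":
            # Assistant turns are closed with the EOS token.
            out += content + "</s>"
    return out


example = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello"},
]
print(render_chat(example))
```

Messages with any other role (including the system message inside the loop) emit nothing, which matches the `if`/`elif` structure of the template.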

.ipynb_checkpoints/training_loss-checkpoint.png

ADDED

|

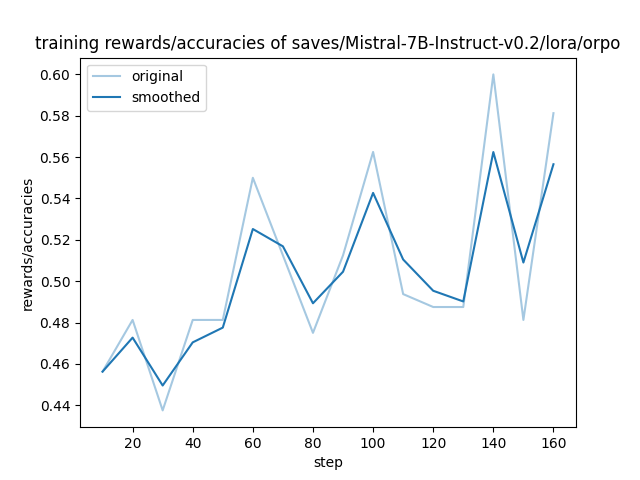

.ipynb_checkpoints/training_rewards_accuracies-checkpoint.png

ADDED

|

.ipynb_checkpoints/training_sft_loss-checkpoint.png

ADDED

|

adapter_config.json

CHANGED

|

@@ -20,12 +20,12 @@

|

|

| 20 |

"rank_pattern": {},

|

| 21 |

"revision": null,

|

| 22 |

"target_modules": [

|

| 23 |

-

"o_proj",

|

| 24 |

-

"q_proj",

|

| 25 |

"k_proj",

|

| 26 |

-

"gate_proj",

|

| 27 |

"down_proj",

|

|

|

|

| 28 |

"v_proj",

|

|

|

|

|

|

|

| 29 |

"up_proj"

|

| 30 |

],

|

| 31 |

"task_type": "CAUSAL_LM",

|

|

|

|

| 20 |

"rank_pattern": {},

|

| 21 |

"revision": null,

|

| 22 |

"target_modules": [

|

|

|

|

|

|

|

| 23 |

"k_proj",

|

|

|

|

| 24 |

"down_proj",

|

| 25 |

+

"gate_proj",

|

| 26 |

"v_proj",

|

| 27 |

+

"o_proj",

|

| 28 |

+

"q_proj",

|

| 29 |

"up_proj"

|

| 30 |

],

|

| 31 |

"task_type": "CAUSAL_LM",

|
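The `target_modules` hunk above lists the same seven projection names before and after, only in a different order. A quick way to confirm that, with both lists copied from the diff (the variable names are illustrative):

```python
# Order of target_modules before the change (left side of the diff).
old_modules = ["o_proj", "q_proj", "k_proj", "gate_proj", "down_proj", "v_proj", "up_proj"]
# Order after the change (right side of the diff).
new_modules = ["k_proj", "down_proj", "gate_proj", "v_proj", "o_proj", "q_proj", "up_proj"]

# Compare as sets so that ordering is ignored.
same_modules = set(old_modules) == set(new_modules)
print("same set of modules:", same_modules)
print("order changed:", old_modules != new_modules)
```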

adapter_model.safetensors

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

size 83945296

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:983b2090b9dc05a7ea87a28d1dd832e6ddff90c7e0318fc0682eb99ec4cef416

|

| 3 |

size 83945296

|

lora_orpo.yaml

CHANGED

|

@@ -12,7 +12,7 @@ dataset: dpo_mix_en

|

|

| 12 |

dataset_dir: data

|

| 13 |

template: mistral

|

| 14 |

cutoff_len: 1024

|

| 15 |

-

max_samples: 1000

|

| 16 |

overwrite_cache: true

|

| 17 |

preprocessing_num_workers: 16

|

| 18 |

|

|

@@ -34,10 +34,10 @@ learning_rate: 0.000005

|

|

| 34 |

num_train_epochs: 3.0

|

| 35 |

lr_scheduler_type: cosine

|

| 36 |

warmup_steps: 0.1

|

| 37 |

-

|

| 38 |

|

| 39 |

### eval

|

| 40 |

val_size: 0.1

|

| 41 |

-

per_device_eval_batch_size:

|

| 42 |

evaluation_strategy: steps

|

| 43 |

eval_steps: 500

|

|

|

|

| 12 |

dataset_dir: data

|

| 13 |

template: mistral

|

| 14 |

cutoff_len: 1024

|

| 15 |

+

# max_samples: 1000

|

| 16 |

overwrite_cache: true

|

| 17 |

preprocessing_num_workers: 16

|

| 18 |

|

|

|

|

| 34 |

num_train_epochs: 3.0

|

| 35 |

lr_scheduler_type: cosine

|

| 36 |

warmup_steps: 0.1

|

| 37 |

+

bf16: true

|

| 38 |

|

| 39 |

### eval

|

| 40 |

val_size: 0.1

|

| 41 |

+

per_device_eval_batch_size: 2

|

| 42 |

evaluation_strategy: steps

|

| 43 |

eval_steps: 500

|
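The config above combines `learning_rate: 0.000005`, `lr_scheduler_type: cosine`, and `warmup_steps: 0.1`, and `trainer_log.jsonl` reports 1686 total steps. The sketch below evaluates a standard cosine decay with those numbers. Using `0.1` literally as the warmup step count is an assumption made here because it reproduces the logged learning rates; the diff does not confirm how the trainer interprets that value. The function name `cosine_lr` is hypothetical.

```python
import math


def cosine_lr(step, base_lr=5e-6, total_steps=1686, warmup=0.1):
    """Cosine decay after `warmup` steps, using values from the config and log."""
    if step < warmup:
        # Linear warmup branch (only reached at step 0 with these values).
        return base_lr * step / max(1.0, warmup)
    # Fraction of the post-warmup schedule that has elapsed.
    progress = (step - warmup) / max(1.0, total_steps - warmup)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


for step in (10, 500):
    print(step, cosine_lr(step))
```

Under these assumptions the results agree with the `learning_rate` values logged at steps 10 and 500 to several significant digits.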

trainer_log.jsonl

CHANGED

|

@@ -1,17 +1,51 @@

|

|

| 1 |

-

{"current_steps": 10, "total_steps":

|

| 2 |

-

{"current_steps": 20, "total_steps":

|

| 3 |

-

{"current_steps": 30, "total_steps":

|

| 4 |

-

{"current_steps": 40, "total_steps":

|

| 5 |

-

{"current_steps": 50, "total_steps":

|

| 6 |

-

{"current_steps": 60, "total_steps":

|

| 7 |

-

{"current_steps": 70, "total_steps":

|

| 8 |

-

{"current_steps": 80, "total_steps":

|

| 9 |

-

{"current_steps": 90, "total_steps":

|

| 10 |

-

{"current_steps": 100, "total_steps":

|

| 11 |

-

{"current_steps": 110, "total_steps":

|

| 12 |

-

{"current_steps": 120, "total_steps":

|

| 13 |

-

{"current_steps": 130, "total_steps":

|

| 14 |

-

{"current_steps": 140, "total_steps":

|

| 15 |

-

{"current_steps": 150, "total_steps":

|

| 16 |

-

{"current_steps": 160, "total_steps":

|

| 17 |

-

{"current_steps":

|

|


| 1 |

+

{"current_steps": 10, "total_steps": 1686, "loss": 1.542, "accuracy": 0.4937500059604645, "learning_rate": 4.9995745934141085e-06, "epoch": 0.017781729273171815, "percentage": 0.59, "elapsed_time": "0:01:37", "remaining_time": "4:31:06"}

|

| 2 |

+

{"current_steps": 20, "total_steps": 1686, "loss": 1.8501, "accuracy": 0.543749988079071, "learning_rate": 4.9982812903243405e-06, "epoch": 0.03556345854634363, "percentage": 1.19, "elapsed_time": "0:03:14", "remaining_time": "4:30:04"}

|

| 3 |

+

{"current_steps": 30, "total_steps": 1686, "loss": 1.5428, "accuracy": 0.5625, "learning_rate": 4.996120496405222e-06, "epoch": 0.05334518781951545, "percentage": 1.78, "elapsed_time": "0:04:51", "remaining_time": "4:27:44"}

|

| 4 |

+

{"current_steps": 40, "total_steps": 1686, "loss": 1.4122, "accuracy": 0.543749988079071, "learning_rate": 4.99309296196014e-06, "epoch": 0.07112691709268726, "percentage": 2.37, "elapsed_time": "0:06:26", "remaining_time": "4:25:03"}

|

| 5 |

+

{"current_steps": 50, "total_steps": 1686, "loss": 1.4155, "accuracy": 0.675000011920929, "learning_rate": 4.989199738255166e-06, "epoch": 0.08890864636585907, "percentage": 2.97, "elapsed_time": "0:08:04", "remaining_time": "4:24:24"}

|

| 6 |

+

{"current_steps": 60, "total_steps": 1686, "loss": 1.4047, "accuracy": 0.574999988079071, "learning_rate": 4.984442177154031e-06, "epoch": 0.1066903756390309, "percentage": 3.56, "elapsed_time": "0:09:44", "remaining_time": "4:24:12"}

|

| 7 |

+

{"current_steps": 70, "total_steps": 1686, "loss": 1.5087, "accuracy": 0.550000011920929, "learning_rate": 4.978821930648704e-06, "epoch": 0.12447210491220272, "percentage": 4.15, "elapsed_time": "0:11:25", "remaining_time": "4:23:52"}

|

| 8 |

+

{"current_steps": 80, "total_steps": 1686, "loss": 1.2502, "accuracy": 0.550000011920929, "learning_rate": 4.97234095028576e-06, "epoch": 0.14225383418537452, "percentage": 4.74, "elapsed_time": "0:13:07", "remaining_time": "4:23:25"}

|

| 9 |

+

{"current_steps": 90, "total_steps": 1686, "loss": 1.1006, "accuracy": 0.625, "learning_rate": 4.965001486488743e-06, "epoch": 0.16003556345854633, "percentage": 5.34, "elapsed_time": "0:14:46", "remaining_time": "4:22:03"}

|

| 10 |

+

{"current_steps": 100, "total_steps": 1686, "loss": 1.1262, "accuracy": 0.5625, "learning_rate": 4.956806087776732e-06, "epoch": 0.17781729273171815, "percentage": 5.93, "elapsed_time": "0:16:21", "remaining_time": "4:19:27"}

|

| 11 |

+

{"current_steps": 110, "total_steps": 1686, "loss": 1.3076, "accuracy": 0.581250011920929, "learning_rate": 4.947757599879411e-06, "epoch": 0.19559902200489, "percentage": 6.52, "elapsed_time": "0:17:54", "remaining_time": "4:16:41"}

|

| 12 |

+

{"current_steps": 120, "total_steps": 1686, "loss": 1.0426, "accuracy": 0.581250011920929, "learning_rate": 4.937859164748931e-06, "epoch": 0.2133807512780618, "percentage": 7.12, "elapsed_time": "0:19:28", "remaining_time": "4:14:11"}

|

| 13 |

+

{"current_steps": 130, "total_steps": 1686, "loss": 1.0981, "accuracy": 0.5874999761581421, "learning_rate": 4.92711421946891e-06, "epoch": 0.23116248055123362, "percentage": 7.71, "elapsed_time": "0:21:11", "remaining_time": "4:13:42"}

|

| 14 |

+

{"current_steps": 140, "total_steps": 1686, "loss": 0.9758, "accuracy": 0.625, "learning_rate": 4.915526495060961e-06, "epoch": 0.24894420982440543, "percentage": 8.3, "elapsed_time": "0:22:51", "remaining_time": "4:12:29"}

|

| 15 |

+

{"current_steps": 150, "total_steps": 1686, "loss": 1.0096, "accuracy": 0.5687500238418579, "learning_rate": 4.903100015189153e-06, "epoch": 0.26672593909757725, "percentage": 8.9, "elapsed_time": "0:24:31", "remaining_time": "4:11:10"}

|

| 16 |

+

{"current_steps": 160, "total_steps": 1686, "loss": 1.0307, "accuracy": 0.606249988079071, "learning_rate": 4.889839094762848e-06, "epoch": 0.28450766837074903, "percentage": 9.49, "elapsed_time": "0:26:07", "remaining_time": "4:09:09"}

|

| 17 |

+

{"current_steps": 170, "total_steps": 1686, "loss": 1.1294, "accuracy": 0.5562499761581421, "learning_rate": 4.875748338438416e-06, "epoch": 0.3022893976439209, "percentage": 10.08, "elapsed_time": "0:27:42", "remaining_time": "4:07:04"}

|

| 18 |

+

{"current_steps": 180, "total_steps": 1686, "loss": 1.0378, "accuracy": 0.59375, "learning_rate": 4.8608326390203386e-06, "epoch": 0.32007112691709266, "percentage": 10.68, "elapsed_time": "0:29:20", "remaining_time": "4:05:31"}

|

| 19 |

+

{"current_steps": 190, "total_steps": 1686, "loss": 1.0657, "accuracy": 0.550000011920929, "learning_rate": 4.845097175762251e-06, "epoch": 0.3378528561902645, "percentage": 11.27, "elapsed_time": "0:30:59", "remaining_time": "4:03:58"}

|

| 20 |

+

{"current_steps": 200, "total_steps": 1686, "loss": 1.0677, "accuracy": 0.59375, "learning_rate": 4.8285474125685286e-06, "epoch": 0.3556345854634363, "percentage": 11.86, "elapsed_time": "0:32:35", "remaining_time": "4:02:07"}

|

| 21 |

+

{"current_steps": 210, "total_steps": 1686, "loss": 1.0165, "accuracy": 0.550000011920929, "learning_rate": 4.811189096097025e-06, "epoch": 0.37341631473660813, "percentage": 12.46, "elapsed_time": "0:34:20", "remaining_time": "4:01:21"}

|

| 22 |

+

{"current_steps": 220, "total_steps": 1686, "loss": 1.0181, "accuracy": 0.581250011920929, "learning_rate": 4.793028253763633e-06, "epoch": 0.39119804400978, "percentage": 13.05, "elapsed_time": "0:35:59", "remaining_time": "3:59:50"}

|

| 23 |

+

{"current_steps": 230, "total_steps": 1686, "loss": 1.019, "accuracy": 0.606249988079071, "learning_rate": 4.774071191649352e-06, "epoch": 0.40897977328295176, "percentage": 13.64, "elapsed_time": "0:37:40", "remaining_time": "3:58:29"}

|

| 24 |

+

{"current_steps": 240, "total_steps": 1686, "loss": 1.0087, "accuracy": 0.581250011920929, "learning_rate": 4.7543244923105975e-06, "epoch": 0.4267615025561236, "percentage": 14.23, "elapsed_time": "0:39:20", "remaining_time": "3:56:59"}

|

| 25 |

+

{"current_steps": 250, "total_steps": 1686, "loss": 1.0815, "accuracy": 0.512499988079071, "learning_rate": 4.733795012493506e-06, "epoch": 0.4445432318292954, "percentage": 14.83, "elapsed_time": "0:41:00", "remaining_time": "3:55:34"}

|

| 26 |

+

{"current_steps": 260, "total_steps": 1686, "loss": 0.9165, "accuracy": 0.612500011920929, "learning_rate": 4.712489880753035e-06, "epoch": 0.46232496110246724, "percentage": 15.42, "elapsed_time": "0:42:43", "remaining_time": "3:54:20"}

|

| 27 |

+

{"current_steps": 270, "total_steps": 1686, "loss": 0.9283, "accuracy": 0.6499999761581421, "learning_rate": 4.690416494977673e-06, "epoch": 0.480106690375639, "percentage": 16.01, "elapsed_time": "0:44:22", "remaining_time": "3:52:45"}

|

| 28 |

+

{"current_steps": 280, "total_steps": 1686, "loss": 1.0455, "accuracy": 0.5375000238418579, "learning_rate": 4.667582519820639e-06, "epoch": 0.49788841964881086, "percentage": 16.61, "elapsed_time": "0:46:07", "remaining_time": "3:51:36"}

|

| 29 |

+

{"current_steps": 290, "total_steps": 1686, "loss": 0.9957, "accuracy": 0.5625, "learning_rate": 4.643995884038443e-06, "epoch": 0.5156701489219827, "percentage": 17.2, "elapsed_time": "0:47:47", "remaining_time": "3:50:03"}

|

| 30 |

+

{"current_steps": 300, "total_steps": 1686, "loss": 1.0047, "accuracy": 0.5625, "learning_rate": 4.6196647777377475e-06, "epoch": 0.5334518781951545, "percentage": 17.79, "elapsed_time": "0:49:29", "remaining_time": "3:48:37"}

|

| 31 |

+

{"current_steps": 310, "total_steps": 1686, "loss": 0.9884, "accuracy": 0.4937500059604645, "learning_rate": 4.59459764953147e-06, "epoch": 0.5512336074683263, "percentage": 18.39, "elapsed_time": "0:51:13", "remaining_time": "3:47:23"}

|

| 32 |

+

{"current_steps": 320, "total_steps": 1686, "loss": 0.9223, "accuracy": 0.581250011920929, "learning_rate": 4.568803203605133e-06, "epoch": 0.5690153367414981, "percentage": 18.98, "elapsed_time": "0:52:53", "remaining_time": "3:45:48"}

|

| 33 |

+

{"current_steps": 330, "total_steps": 1686, "loss": 0.9709, "accuracy": 0.5, "learning_rate": 4.542290396694462e-06, "epoch": 0.58679706601467, "percentage": 19.57, "elapsed_time": "0:54:37", "remaining_time": "3:44:25"}

|

| 34 |

+

{"current_steps": 340, "total_steps": 1686, "loss": 1.0369, "accuracy": 0.550000011920929, "learning_rate": 4.515068434975298e-06, "epoch": 0.6045787952878418, "percentage": 20.17, "elapsed_time": "0:56:15", "remaining_time": "3:42:42"}

|

| 35 |

+

{"current_steps": 350, "total_steps": 1686, "loss": 0.9736, "accuracy": 0.5249999761581421, "learning_rate": 4.487146770866887e-06, "epoch": 0.6223605245610135, "percentage": 20.76, "elapsed_time": "0:57:54", "remaining_time": "3:41:04"}

|

| 36 |

+

{"current_steps": 360, "total_steps": 1686, "loss": 1.0655, "accuracy": 0.53125, "learning_rate": 4.458535099749666e-06, "epoch": 0.6401422538341853, "percentage": 21.35, "elapsed_time": "0:59:32", "remaining_time": "3:39:17"}

|

| 37 |

+

{"current_steps": 370, "total_steps": 1686, "loss": 0.9872, "accuracy": 0.5562499761581421, "learning_rate": 4.429243356598694e-06, "epoch": 0.6579239831073572, "percentage": 21.95, "elapsed_time": "1:01:09", "remaining_time": "3:37:32"}

|

| 38 |

+

{"current_steps": 380, "total_steps": 1686, "loss": 0.9257, "accuracy": 0.5375000238418579, "learning_rate": 4.399281712533875e-06, "epoch": 0.675705712380529, "percentage": 22.54, "elapsed_time": "1:02:44", "remaining_time": "3:35:36"}

|

| 39 |

+

{"current_steps": 390, "total_steps": 1686, "loss": 0.9727, "accuracy": 0.48124998807907104, "learning_rate": 4.368660571288192e-06, "epoch": 0.6934874416537008, "percentage": 23.13, "elapsed_time": "1:04:27", "remaining_time": "3:34:10"}

|

| 40 |

+

{"current_steps": 400, "total_steps": 1686, "loss": 1.0562, "accuracy": 0.5, "learning_rate": 4.337390565595163e-06, "epoch": 0.7112691709268726, "percentage": 23.72, "elapsed_time": "1:06:05", "remaining_time": "3:32:30"}

|

| 41 |

+

{"current_steps": 410, "total_steps": 1686, "loss": 0.9042, "accuracy": 0.5687500238418579, "learning_rate": 4.305482553496786e-06, "epoch": 0.7290509002000445, "percentage": 24.32, "elapsed_time": "1:07:41", "remaining_time": "3:30:40"}

|

| 42 |

+

{"current_steps": 420, "total_steps": 1686, "loss": 1.0031, "accuracy": 0.606249988079071, "learning_rate": 4.272947614573244e-06, "epoch": 0.7468326294732163, "percentage": 24.91, "elapsed_time": "1:09:16", "remaining_time": "3:28:49"}

|

| 43 |

+

{"current_steps": 430, "total_steps": 1686, "loss": 0.9426, "accuracy": 0.581250011920929, "learning_rate": 4.23979704609569e-06, "epoch": 0.7646143587463881, "percentage": 25.5, "elapsed_time": "1:10:56", "remaining_time": "3:27:11"}

|

| 44 |

+

{"current_steps": 440, "total_steps": 1686, "loss": 0.9991, "accuracy": 0.574999988079071, "learning_rate": 4.206042359103435e-06, "epoch": 0.78239608801956, "percentage": 26.1, "elapsed_time": "1:12:35", "remaining_time": "3:25:33"}

|

| 45 |

+

{"current_steps": 450, "total_steps": 1686, "loss": 0.9571, "accuracy": 0.48124998807907104, "learning_rate": 4.17169527440691e-06, "epoch": 0.8001778172927317, "percentage": 26.69, "elapsed_time": "1:14:10", "remaining_time": "3:23:44"}

|

| 46 |

+

{"current_steps": 460, "total_steps": 1686, "loss": 0.8763, "accuracy": 0.574999988079071, "learning_rate": 4.136767718517797e-06, "epoch": 0.8179595465659035, "percentage": 27.28, "elapsed_time": "1:15:49", "remaining_time": "3:22:05"}

|

| 47 |

+

{"current_steps": 470, "total_steps": 1686, "loss": 0.9656, "accuracy": 0.5062500238418579, "learning_rate": 4.1012718195077196e-06, "epoch": 0.8357412758390753, "percentage": 27.88, "elapsed_time": "1:17:29", "remaining_time": "3:20:29"}

|

| 48 |

+

{"current_steps": 480, "total_steps": 1686, "loss": 0.9158, "accuracy": 0.59375, "learning_rate": 4.065219902796953e-06, "epoch": 0.8535230051122472, "percentage": 28.47, "elapsed_time": "1:19:04", "remaining_time": "3:18:41"}

|

| 49 |

+

{"current_steps": 490, "total_steps": 1686, "loss": 0.9181, "accuracy": 0.5375000238418579, "learning_rate": 4.028624486874608e-06, "epoch": 0.871304734385419, "percentage": 29.06, "elapsed_time": "1:20:43", "remaining_time": "3:17:02"}

|

| 50 |

+

{"current_steps": 500, "total_steps": 1686, "loss": 1.0001, "accuracy": 0.512499988079071, "learning_rate": 3.99149827895177e-06, "epoch": 0.8890864636585908, "percentage": 29.66, "elapsed_time": "1:22:25", "remaining_time": "3:15:31"}

|

| 51 |

+

{"current_steps": 500, "total_steps": 1686, "eval_loss": 0.9318326711654663, "epoch": 0.8890864636585908, "percentage": 29.66, "elapsed_time": "1:25:35", "remaining_time": "3:23:00"}

|
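Each line of `trainer_log.jsonl` is a standalone JSON object, and the evaluation record at step 500 carries `eval_loss` instead of `loss`. A minimal sketch of reading such a log and separating the two kinds of record follows. The two sample lines are copied from the step-500 entries above; the variable names are illustrative.

```python
import json

# Two records copied verbatim from the step-500 entries of trainer_log.jsonl.
LOG_TEXT = """
{"current_steps": 500, "total_steps": 1686, "loss": 1.0001, "accuracy": 0.512499988079071, "learning_rate": 3.99149827895177e-06, "epoch": 0.8890864636585908, "percentage": 29.66, "elapsed_time": "1:22:25", "remaining_time": "3:15:31"}
{"current_steps": 500, "total_steps": 1686, "eval_loss": 0.9318326711654663, "epoch": 0.8890864636585908, "percentage": 29.66, "elapsed_time": "1:25:35", "remaining_time": "3:23:00"}
"""

# Parse one JSON object per non-empty line.
records = [json.loads(line) for line in LOG_TEXT.splitlines() if line.strip()]

# Training records have a "loss" key; evaluation records have "eval_loss".
train_records = [r for r in records if "loss" in r]
eval_records = [r for r in records if "eval_loss" in r]

print("train loss at step", train_records[-1]["current_steps"], "=", train_records[-1]["loss"])
print("eval loss at step", eval_records[-1]["current_steps"], "=", eval_records[-1]["eval_loss"])
```

For the full file, the same list comprehension can be applied to the lines read from disk instead of `LOG_TEXT`.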

training_args.bin

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

size 5240

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e6ab2396e5055a1ca26da0140d4bbca4110d8a454644d56af71319708b8b1be6

|

| 3 |

size 5240

|