first

- .gitattributes +1 -0

- README.md +150 -0

- attn.png +0 -0

- config.json +48 -0

- modeling_lsg_xlm_roberta.py +1249 -0

- pytorch_model.bin +3 -0

- sentencepiece.bpe.model +3 -0

- special_tokens_map.json +15 -0

- tokenizer.json +3 -0

- tokenizer_config.json +20 -0

.gitattributes

CHANGED

|

@@ -20,6 +20,7 @@

|

|

| 20 |

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 21 |

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 22 |

*.rar filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 23 |

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 24 |

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 25 |

*.tflite filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 20 |

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 21 |

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 22 |

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|

| 24 |

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 25 |

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 26 |

*.tflite filter=lfs diff=lfs merge=lfs -text

|

README.md

ADDED

|

@@ -0,0 +1,150 @@


| 1 |

+

---

|

| 2 |

+

language: en

|

| 3 |

+

tags:

|

| 4 |

+

- long context

|

| 5 |

+

pipeline_tag: fill-mask

|

| 6 |

+

---

|

| 7 |

+

|

| 8 |

+

# LSG model

|

| 9 |

+

**Transformers >= 4.18.0**\

|

| 10 |

+

**This model relies on a custom modeling file, so you need to add trust_remote_code=True**\

|

| 11 |

+

**See [\#13467](https://github.com/huggingface/transformers/pull/13467)**

|

| 12 |

+

|

| 13 |

+

* [Usage](#usage)

|

| 14 |

+

* [Parameters](#parameters)

|

| 15 |

+

* [Sparse selection type](#sparse-selection-type)

|

| 16 |

+

* [Tasks](#tasks)

|

| 17 |

+

* [Training global tokens](#training-global-tokens)

|

| 18 |

+

|

| 19 |

+

This model is a small version of the [XLM-roberta-base](https://huggingface.co/xlm-roberta-base) model that has not yet received additional pretraining. It uses the same number of parameters/layers and the same tokenizer.

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

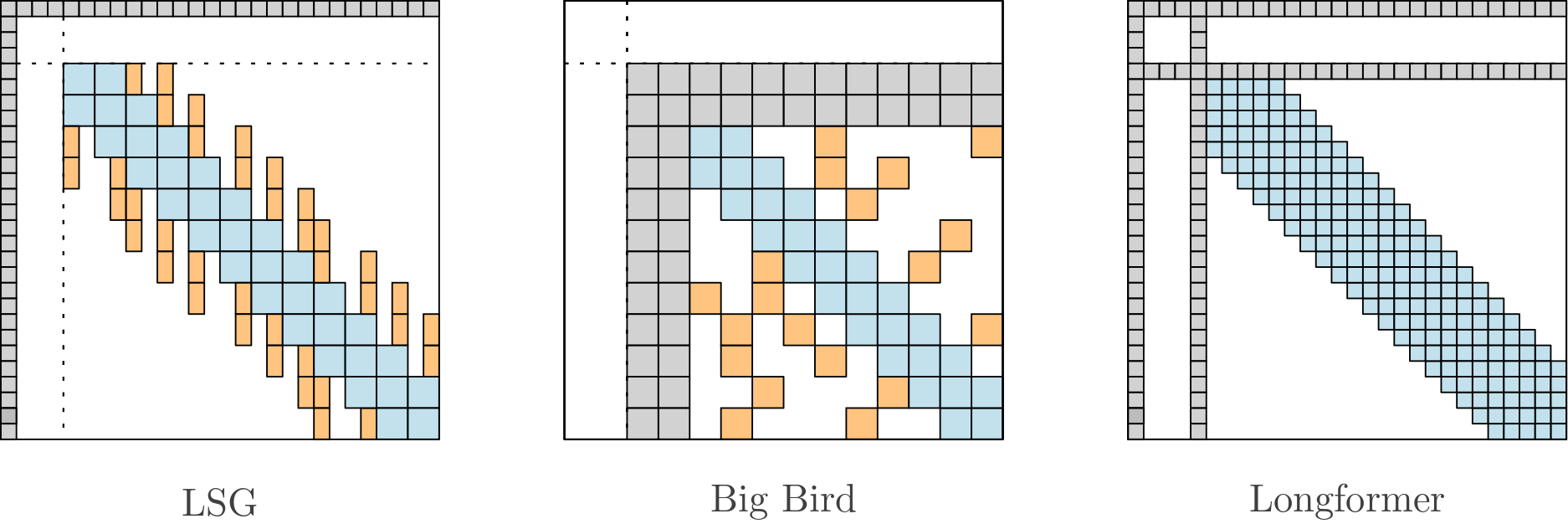

This model handles long sequences faster and more efficiently than Longformer or BigBird (from Transformers), and relies on Local + Sparse + Global attention (LSG).

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

The model requires sequences whose length is a multiple of the block size. The model is "adaptive" and automatically pads the sequences if needed (adaptive=True in config). It is, however, recommended to use the tokenizer to truncate the inputs (truncation=True) and, optionally, to pad them to a multiple of the block size (pad_to_multiple_of=...). \
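
The padding arithmetic described above can be sketched as follows. This is only an illustration: the helper name `padded_length` is hypothetical and is not part of the model or of Transformers, and the block size of 128 is an assumed example value.

```python
def padded_length(seq_len: int, block_size: int) -> int:
    """Return the smallest multiple of block_size that is >= seq_len."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    # Ceiling division, then scale back up to a whole number of blocks.
    return -(-seq_len // block_size) * block_size


# Assumed example values: 300 tokens need three blocks of 128.
print(padded_length(300, 128))  # 384
# A length that is already a multiple of the block size is left unchanged.
print(padded_length(256, 128))  # 256
```

Passing the same block size as `pad_to_multiple_of` to the tokenizer, with padding enabled, should produce inputs of this length so that the model does not have to pad them itself.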

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

Supports encoder-decoder, but I didn't test it extensively.\

|

| 29 |

+

Implemented in PyTorch.

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

## Usage

|

| 34 |

+

The model relies on a custom modeling file, so you need to add trust_remote_code=True to use it.

|

| 35 |

+

|

| 36 |

+

```python

|

| 37 |

+

from transformers import AutoModel, AutoTokenizer

|

| 38 |

+

|

| 39 |

+

model = AutoModel.from_pretrained("ccdv/lsg-xlm-roberta-base-4096", trust_remote_code=True)

|

| 40 |

+

tokenizer = AutoTokenizer.from_pretrained("ccdv/lsg-xlm-roberta-base-4096")

|

| 41 |

+

```

|

| 42 |

+

|

| 43 |

+

## Parameters

|

| 44 |

+

You can change various parameters, such as:

|

| 45 |

+

* the number of global tokens (num_global_tokens=1)

|

| 46 |

+

* local block size (block_size=128)

|

| 47 |

+

* sparse block size (sparse_block_size=128)

|

| 48 |

+

* sparsity factor (sparsity_factor=2)

|

| 49 |

+

* mask_first_token (mask first token since it is redundant with the first global token)

|

| 50 |

+

* see config.json file

|

| 51 |

+

|

| 52 |

+

Default parameters work well in practice. If you are short on memory, reduce block sizes, increase sparsity factor and remove dropout in the attention score matrix.

|

| 53 |

+

|

| 54 |

+

```python

|

| 55 |

+

from transformers import AutoModel

|

| 56 |

+

|

| 57 |

+

model = AutoModel.from_pretrained("ccdv/lsg-xlm-roberta-base-4096",

|

| 58 |

+

trust_remote_code=True,

|

| 59 |

+

num_global_tokens=16,

|

| 60 |

+

block_size=64,

|

| 61 |

+

sparse_block_size=64,

|

| 62 |

+

attention_probs_dropout_prob=0.0,

|

| 63 |

+

sparsity_factor=4,

|

| 64 |

+

sparsity_type="none",

|

| 65 |

+

mask_first_token=True

|

| 66 |

+

)

|

| 67 |

+

```
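
Before overriding these parameters, it may help to check them against the constraints that the custom config class in this commit (`LSGXLMRobertaConfig`) applies. The sketch below mirrors those checks in plain Python. The function name `check_lsg_params` is hypothetical, and it raises `ValueError` where the config class uses assertions.

```python
def check_lsg_params(block_size: int, sparsity_factor: int, num_global_tokens: int) -> int:
    """Validate the block settings and return the clamped number of global tokens."""
    if sparsity_factor > 0 and block_size % sparsity_factor != 0:
        raise ValueError("block_size must be divisible by sparsity_factor")
    # The config class warns and clamps num_global_tokens to the range [1, 512].
    return max(1, min(num_global_tokens, 512))


# Values taken from the example above: accepted unchanged.
print(check_lsg_params(block_size=64, sparsity_factor=4, num_global_tokens=16))  # 16
# Out-of-range values are clamped rather than rejected.
print(check_lsg_params(block_size=64, sparsity_factor=4, num_global_tokens=0))  # 1
```

A combination such as `block_size=64` with `sparsity_factor=3` would be rejected, since 64 is not divisible by 3.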

|

| 68 |

+

|

| 69 |

+

## Sparse selection type

|

| 70 |

+

|

| 71 |

+

There are 5 different sparse selection patterns. The best type is task dependent. \

|

| 72 |

+

Note that for sequences with length < 2*block_size, the type has no effect.

|

| 73 |

+

|

| 74 |

+

* sparsity_type="norm", select highest norm tokens

|

| 75 |

+

* Works best for a small sparsity_factor (2 to 4)

|

| 76 |

+

* Additional parameters:

|

| 77 |

+

* None

|

| 78 |

+

* sparsity_type="pooling", use average pooling to merge tokens

|

| 79 |

+

* Works best for a small sparsity_factor (2 to 4)

|

| 80 |

+

* Additional parameters:

|

| 81 |

+

* None

|

| 82 |

+

* sparsity_type="lsh", use the LSH algorithm to cluster similar tokens

|

| 83 |

+

* Works best for a large sparsity_factor (4+)

|

| 84 |

+

* LSH relies on random projections, thus inference may differ slightly with different seeds

|

| 85 |

+

* Additional parameters:

|

| 86 |

+

* lsh_num_pre_rounds=1, pre-merge tokens n times before computing centroids

|

| 87 |

+

* sparsity_type="stride", use a striding mechanism per head

|

| 88 |

+

* Each head will use different tokens strided by sparsity_factor

|

| 89 |

+

* Not recommended if sparsity_factor > num_heads

|

| 90 |

+

* sparsity_type="block_stride", use a striding mechanism per head

|

| 91 |

+

* Each head will use blocks of tokens strided by sparsity_factor

|

| 92 |

+

* Not recommended if sparsity_factor > num_heads
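
One way to read the recommendation for the stride patterns is in terms of coverage: if each head starts its stride at a different offset, the heads together can only cover as many offsets as there are heads. The sketch below illustrates this with plain Python. The rule `head % sparsity_factor` for choosing an offset and both function names are assumptions made for illustration; they are not taken from the modeling file.

```python
from typing import List, Set


def strided_positions(num_tokens: int, sparsity_factor: int, head: int) -> List[int]:
    """Positions one head keeps when it selects every sparsity_factor-th token."""
    offset = head % sparsity_factor  # assumed: heads cycle through the offsets
    return list(range(offset, num_tokens, sparsity_factor))


def covered_positions(num_tokens: int, sparsity_factor: int, num_heads: int) -> Set[int]:
    """Union of the positions selected by at least one head."""
    seen: Set[int] = set()
    for head in range(num_heads):
        seen.update(strided_positions(num_tokens, sparsity_factor, head))
    return seen


# 4 heads, factor 2: every one of the 16 positions is selected by some head.
print(len(covered_positions(16, 2, 4)))  # 16
# 4 heads, factor 8: only offsets 0 to 3 are used, so half the positions are never selected.
print(len(covered_positions(16, 8, 4)))  # 8
```

Under this assumption, a factor larger than the number of heads leaves some tokens invisible to the sparse attention of every head, which is consistent with the note above.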

|

| 93 |

+

|

| 94 |

+

## Tasks

|

| 95 |

+

Fill mask example:

|

| 96 |

+

```python

|

| 97 |

+

from transformers import FillMaskPipeline, AutoModelForMaskedLM, AutoTokenizer

|

| 98 |

+

|

| 99 |

+

model = AutoModelForMaskedLM.from_pretrained("ccdv/lsg-xlm-roberta-base-4096", trust_remote_code=True)

|

| 100 |

+

tokenizer = AutoTokenizer.from_pretrained("ccdv/lsg-xlm-roberta-base-4096")

|

| 101 |

+

|

| 102 |

+

SENTENCES = ["Paris is the <mask> of France."]

|

| 103 |

+

pipeline = FillMaskPipeline(model, tokenizer)

|

| 104 |

+

output = pipeline(SENTENCES, top_k=1)

|

| 105 |

+

|

| 106 |

+

output = [o[0]["sequence"] for o in output]

|

| 107 |

+

> ['Paris is the capital of France.']

|

| 108 |

+

```

|

| 109 |

+

|

| 110 |

+

|

| 111 |

+

Classification example:

|

| 112 |

+

```python

|

| 113 |

+

from transformers import AutoModelForSequenceClassification, AutoTokenizer

|

| 114 |

+

|

| 115 |

+

model = AutoModelForSequenceClassification.from_pretrained("ccdv/lsg-xlm-roberta-base-4096",

|

| 116 |

+

trust_remote_code=True,

|

| 117 |

+

pool_with_global=True, # pool with a global token instead of first token

|

| 118 |

+

)

|

| 119 |

+

tokenizer = AutoTokenizer.from_pretrained("ccdv/lsg-xlm-roberta-base-4096")

|

| 120 |

+

|

| 121 |

+

SENTENCE = "This is a test for sequence classification. " * 300

|

| 122 |

+

token_ids = tokenizer(

|

| 123 |

+

SENTENCE,

|

| 124 |

+

return_tensors="pt",

|

| 125 |

+

#pad_to_multiple_of=... # Optional

|

| 126 |

+

truncation=True

|

| 127 |

+

)

|

| 128 |

+

output = model(**token_ids)

|

| 129 |

+

|

| 130 |

+

> SequenceClassifierOutput(loss=None, logits=tensor([[-0.3051, -0.1762]], grad_fn=<AddmmBackward>), hidden_states=None, attentions=None)

|

| 131 |

+

```

|

| 132 |

+

|

| 133 |

+

## Training global tokens

|

| 134 |

+

To train global tokens and the classification head only:

|

| 135 |

+

```python

|

| 136 |

+

from transformers import AutoModelForSequenceClassification, AutoTokenizer

|

| 137 |

+

|

| 138 |

+

model = AutoModelForSequenceClassification.from_pretrained("ccdv/lsg-xlm-roberta-base-4096",

|

| 139 |

+

trust_remote_code=True,

|

| 140 |

+

pool_with_global=True, # pool with a global token instead of first token

|

| 141 |

+

num_global_tokens=16

|

| 142 |

+

)

|

| 143 |

+

tokenizer = AutoTokenizer.from_pretrained("ccdv/lsg-xlm-roberta-base-4096")

|

| 144 |

+

|

| 145 |

+

for name, param in model.named_parameters():

|

| 146 |

+

if "global_embeddings" not in name:

|

| 147 |

+

param.requires_grad = False

|

| 148 |

+

else:

|

| 149 |

+

param.requires_grad = True

|

| 150 |

+

```

|

attn.png

ADDED

|

config.json

ADDED

|

@@ -0,0 +1,48 @@


| 1 |

+

{

|

| 2 |

+

"_name_or_path": "ccdv/lsg-xlm",

|

| 3 |

+

"adaptive": true,

|

| 4 |

+

"architectures": [

|

| 5 |

+

"LSGXLMRobertaForMaskedLM"

|

| 6 |

+

],

|

| 7 |

+

"attention_probs_dropout_prob": 0.1,

|

| 8 |

+

"auto_map": {

|

| 9 |

+

"AutoConfig": "modeling_lsg_xlm_roberta.LSGXLMRobertaConfig",

|

| 10 |

+

"AutoModel": "modeling_lsg_xlm_roberta.LSGXLMRobertaModel",

|

| 11 |

+

"AutoModelForCausalLM": "modeling_lsg_xlm_roberta.LSGXLMRobertaForCausalLM",

|

| 12 |

+

"AutoModelForMaskedLM": "modeling_lsg_xlm_roberta.LSGXLMRobertaForMaskedLM",

|

| 13 |

+

"AutoModelForMultipleChoice": "modeling_lsg_xlm_roberta.LSGXLMRobertaForMultipleChoice",

|

| 14 |

+

"AutoModelForQuestionAnswering": "modeling_lsg_xlm_roberta.LSGXLMRobertaForQuestionAnswering",

|

| 15 |

+

"AutoModelForSequenceClassification": "modeling_lsg_xlm_roberta.LSGXLMRobertaForSequenceClassification",

|

| 16 |

+

"AutoModelForTokenClassification": "modeling_lsg_xlm_roberta.LSGXLMRobertaForTokenClassification"

|

| 17 |

+

},

|

| 18 |

+

"base_model_prefix": "lsg",

|

| 19 |

+

"block_size": 256,

|

| 20 |

+

"bos_token_id": 0,

|

| 21 |

+

"classifier_dropout": null,

|

| 22 |

+

"eos_token_id": 2,

|

| 23 |

+

"hidden_act": "gelu",

|

| 24 |

+

"hidden_dropout_prob": 0.1,

|

| 25 |

+

"hidden_size": 768,

|

| 26 |

+

"initializer_range": 0.02,

|

| 27 |

+

"intermediate_size": 3072,

|

| 28 |

+

"layer_norm_eps": 1e-05,

|

| 29 |

+

"lsh_num_pre_rounds": 1,

|

| 30 |

+

"mask_first_token": true,

|

| 31 |

+

"max_position_embeddings": 4098,

|

| 32 |

+

"model_type": "xlm-roberta",

|

| 33 |

+

"num_attention_heads": 12,

|

| 34 |

+

"num_global_tokens": 1,

|

| 35 |

+

"num_hidden_layers": 12,

|

| 36 |

+

"output_past": true,

|

| 37 |

+

"pad_token_id": 1,

|

| 38 |

+

"pool_with_global": true,

|

| 39 |

+

"position_embedding_type": "absolute",

|

| 40 |

+

"sparse_block_size": 0,

|

| 41 |

+

"sparsity_factor": 4,

|

| 42 |

+

"sparsity_type": "none",

|

| 43 |

+

"torch_dtype": "float32",

|

| 44 |

+

"transformers_version": "4.20.1",

|

| 45 |

+

"type_vocab_size": 1,

|

| 46 |

+

"use_cache": true,

|

| 47 |

+

"vocab_size": 250002

|

| 48 |

+

}

|

modeling_lsg_xlm_roberta.py

ADDED

|

@@ -0,0 +1,1249 @@


| 1 |

+

from logging import warn

|

| 2 |

+

from transformers.models.roberta.modeling_roberta import *

|

| 3 |

+

import torch

|

| 4 |

+

import torch.nn as nn

|

| 5 |

+

from transformers.models.xlm_roberta.configuration_xlm_roberta import XLMRobertaConfig

|

| 6 |

+

import sys

|

| 7 |

+

|

| 8 |

+

AUTO_MAP = {

|

| 9 |

+

"AutoModel": "modeling_lsg_xlm_roberta.LSGXLMRobertaModel",

|

| 10 |

+

"AutoModelForCausalLM": "modeling_lsg_xlm_roberta.LSGXLMRobertaForCausalLM",

|

| 11 |

+

"AutoModelForMaskedLM": "modeling_lsg_xlm_roberta.LSGXLMRobertaForMaskedLM",

|

| 12 |

+

"AutoModelForMultipleChoice": "modeling_lsg_xlm_roberta.LSGXLMRobertaForMultipleChoice",

|

| 13 |

+

"AutoModelForQuestionAnswering": "modeling_lsg_xlm_roberta.LSGXLMRobertaForQuestionAnswering",

|

| 14 |

+

"AutoModelForSequenceClassification": "modeling_lsg_xlm_roberta.LSGXLMRobertaForSequenceClassification",

|

| 15 |

+

"AutoModelForTokenClassification": "modeling_lsg_xlm_roberta.LSGXLMRobertaForTokenClassification"

|

| 16 |

+

}

|

| 17 |

+

|

| 18 |

+

class LSGXLMRobertaConfig(XLMRobertaConfig):

|

| 19 |

+

"""

|

| 20 |

+

This class overrides :class:`~transformers.XLMRobertaConfig`. Please check the superclass for the appropriate

|

| 21 |

+

documentation alongside usage examples.

|

| 22 |

+

"""

|

| 23 |

+

|

| 24 |

+

base_model_prefix = "lsg"

|

| 25 |

+

model_type = "xlm-roberta"

|

| 26 |

+

|

| 27 |

+

def __init__(

|

| 28 |

+

self,

|

| 29 |

+

adaptive=True,

|

| 30 |

+

base_model_prefix="lsg",

|

| 31 |

+

block_size=128,

|

| 32 |

+

lsh_num_pre_rounds=1,

|

| 33 |

+

mask_first_token=False,

|

| 34 |

+

num_global_tokens=1,

|

| 35 |

+

pool_with_global=True,

|

| 36 |

+

sparse_block_size=128,

|

| 37 |

+

sparsity_factor=2,

|

| 38 |

+

sparsity_type="norm",

|

| 39 |

+

**kwargs

|

| 40 |

+

):

|

| 41 |

+

"""Constructs LSGXLMRobertaConfig."""

|

| 42 |

+

super().__init__(**kwargs)

|

| 43 |

+

|

| 44 |

+

self.adaptive = adaptive

|

| 45 |

+

self.auto_map = AUTO_MAP

|

| 46 |

+

self.base_model_prefix = base_model_prefix

|

| 47 |

+

self.block_size = block_size

|

| 48 |

+

self.lsh_num_pre_rounds = lsh_num_pre_rounds

|

| 49 |

+

self.mask_first_token = mask_first_token

|

| 50 |

+

self.num_global_tokens = num_global_tokens

|

| 51 |

+

self.pool_with_global = pool_with_global

|

| 52 |

+

self.sparse_block_size = sparse_block_size

|

| 53 |

+

self.sparsity_factor = sparsity_factor

|

| 54 |

+

self.sparsity_type = sparsity_type

|

| 55 |

+

|

| 56 |

+

if sparsity_type not in [None, "none", "norm", "lsh", "pooling", "stride", "block_stride"]:

|

| 57 |

+

logger.warning(

|

| 58 |

+

"[WARNING CONFIG]: sparsity_type not in [None, 'none', 'norm', 'lsh', 'pooling', 'stride', 'block_stride'], setting sparsity_type=None, computation will skip sparse attention")

|

| 59 |

+

self.sparsity_type = None

|

| 60 |

+

|

| 61 |

+

if self.sparsity_type in ["stride", "block_stride"]:

|

| 62 |

+

if self.sparsity_factor > self.num_attention_heads:

|

| 63 |

+

logger.warning(

|

| 64 |

+

"[WARNING CONFIG]: sparsity_factor > num_attention_heads is not recommended for stride/block_stride sparsity"

|

| 65 |

+

)

|

| 66 |

+

|

| 67 |

+

if self.num_global_tokens < 1:

|

| 68 |

+

logger.warning(

|

| 69 |

+

"[WARNING CONFIG]: num_global_tokens < 1 is not compatible, setting num_global_tokens=1"

|

| 70 |

+

)

|

| 71 |

+

self.num_global_tokens = 1

|

| 72 |

+

elif self.num_global_tokens > 512:

|

| 73 |

+

logger.warning(

|

| 74 |

+

"[WARNING CONFIG]: num_global_tokens > 512 is not compatible, setting num_global_tokens=512"

|

| 75 |

+

)

|

| 76 |

+

self.num_global_tokens = 512

|

| 77 |

+

|

| 78 |

+

if self.sparsity_factor > 0:

|

| 79 |

+

assert self.block_size % self.sparsity_factor == 0, "[ERROR CONFIG]: block_size must be divisible by sparsity_factor"

|

| 80 |

+

assert self.block_size//self.sparsity_factor >= 1, "[ERROR CONFIG]: make sure block_size >= sparsity_factor"

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

class BaseSelfAttention(nn.Module):

|

| 84 |

+

|

| 85 |

+

def init_modules(self, config):

|

| 86 |

+

if config.hidden_size % config.num_attention_heads != 0 and not hasattr(

|

| 87 |

+

config, "embedding_size"

|

| 88 |

+

):

|

| 89 |

+

raise ValueError(

|

| 90 |

+

"The hidden size (%d) is not a multiple of the number of attention "

|

| 91 |

+

"heads (%d)" % (config.hidden_size, config.num_attention_heads)

|

| 92 |

+

)

|

| 93 |

+

|

| 94 |

+

self.num_attention_heads = config.num_attention_heads

|

| 95 |

+

self.attention_head_size = int(config.hidden_size / config.num_attention_heads)

|

| 96 |

+

self.all_head_size = self.num_attention_heads * self.attention_head_size

|

| 97 |

+

|

| 98 |

+

self.query = nn.Linear(config.hidden_size, self.all_head_size)

|

| 99 |

+

self.key = nn.Linear(config.hidden_size, self.all_head_size)

|

| 100 |

+

self.value = nn.Linear(config.hidden_size, self.all_head_size)

|

| 101 |

+

|

| 102 |

+

self.dropout = nn.Dropout(config.attention_probs_dropout_prob)

|

| 103 |

+

|

| 104 |

+

def transpose_for_scores(self, x):

|

| 105 |

+

new_x_shape = x.size()[:-1] + (

|

| 106 |

+

self.num_attention_heads,

|

| 107 |

+

self.attention_head_size,

|

| 108 |

+

)

|

| 109 |

+

x = x.view(*new_x_shape)

|

| 110 |

+

return x.permute(0, 2, 1, 3)

|

| 111 |

+

|

| 112 |

+

def reshape_output(self, context_layer):

|

| 113 |

+

context_layer = context_layer.permute(0, 2, 1, 3).contiguous()

|

| 114 |

+

new_context_layer_shape = context_layer.size()[:-2] + (self.all_head_size,)

|

| 115 |

+

return context_layer.view(*new_context_layer_shape)

|

| 116 |

+

|

| 117 |

+

def project_QKV(self, hidden_states):

|

| 118 |

+

|

| 119 |

+

query_layer = self.transpose_for_scores(self.query(hidden_states))

|

| 120 |

+

key_layer = self.transpose_for_scores(self.key(hidden_states))

|

| 121 |

+

value_layer = self.transpose_for_scores(self.value(hidden_states))

|

| 122 |

+

return query_layer, key_layer, value_layer

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

class BaseAttentionProduct(nn.Module):

|

| 126 |

+

|

| 127 |

+

def __init__(self, config):

|

| 128 |

+

"""

|

| 129 |

+

Compute attention: softmax(Q @ K.T / sqrt(d)) @ V

|

| 130 |

+

"""

|

| 131 |

+

super().__init__()

|

| 132 |

+

self.dropout = nn.Dropout(config.attention_probs_dropout_prob)

|

| 133 |

+

|

| 134 |

+

def forward(self, query_layer, key_layer, value_layer, attention_mask=None):

|

| 135 |

+

|

| 136 |

+

d = query_layer.shape[-1]

|

| 137 |

+

|

| 138 |

+

# Take the dot product between "query" and "key" to get the raw attention scores.

|

| 139 |

+

attention_scores = query_layer @ key_layer.transpose(-1, -2) / math.sqrt(d)

|

| 140 |

+

|

| 141 |

+

del query_layer

|

| 142 |

+

del key_layer

|

| 143 |

+

|

| 144 |

+

if attention_mask is not None:

|

| 145 |

+

# Apply the attention mask (precomputed for all layers in the RobertaModel forward() function)

|

| 146 |

+

attention_scores = attention_scores + attention_mask

|

| 147 |

+

del attention_mask

|

| 148 |

+

|

| 149 |

+

# Normalize the attention scores to probabilities.

|

| 150 |

+

attention_probs = nn.Softmax(dim=-1)(attention_scores)

|

| 151 |

+

|

| 152 |

+

# This is actually dropping out entire tokens to attend to, which might

|

| 153 |

+

# seem a bit unusual, but is taken from the original Transformer paper.

|

| 154 |

+

context_layer = self.dropout(attention_probs) @ value_layer

|

| 155 |

+

|

| 156 |

+

return context_layer

|
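To make the arithmetic in `BaseAttentionProduct.forward` concrete, here is a minimal plain-Python sketch of the same computation for a single head. It is an illustration only, not the PyTorch code from this commit: the function name `scaled_dot_product_attention` and the nested-list inputs are assumptions, and dropout is left out.

```python
import math


def scaled_dot_product_attention(queries, keys, values, mask=None):
    """Single-head attention on nested lists: softmax(Q K^T / sqrt(d) + mask) V.

    Hypothetical helper for illustration; dropout is intentionally omitted.
    """
    d = len(queries[0])
    output = []
    for i, q in enumerate(queries):
        # Raw scores: dot product of this query with every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        if mask is not None:
            # Additive mask: 0.0 keeps a position, a large negative value hides it.
            scores = [s + m for s, m in zip(scores, mask[i])]
        # Numerically stable softmax over the key axis.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        probs = [e / total for e in exps]
        # Context vector: probability-weighted sum of the value vectors.
        width = len(values[0])
        output.append([sum(p * v[j] for p, v in zip(probs, values)) for j in range(width)])
    return output
```

With an additive mask of `[[0.0, -1e9]]`, the second key contributes nothing and the output row equals the first value vector.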

| 157 |

+

|

| 158 |

+

|

| 159 |

+

class CausalAttentionProduct(nn.Module):

|

| 160 |

+

|

| 161 |

+

def __init__(self, config):

|

| 162 |

+

"""

|

| 163 |

+

Compute causal attention: softmax(Q @ K.T / sqrt(d)) @ V, with a causal mask on the last block

|

| 164 |

+

"""

|

| 165 |

+

super().__init__()

|

| 166 |

+

self.dropout = nn.Dropout(config.attention_probs_dropout_prob)

|

| 167 |

+

self.block_size = config.block_size

|

| 168 |

+

|

| 169 |

+

def forward(self, query_layer, key_layer, value_layer, attention_mask=None, causal_shape=None):

|

| 170 |

+

|

| 171 |

+

d = query_layer.shape[-1]

|

| 172 |

+

|

| 173 |

+

# Take the dot product between "query" and "key" to get the raw attention scores.

|

| 174 |

+

attention_scores = query_layer @ key_layer.transpose(-1, -2) / math.sqrt(d)

|

| 175 |

+

|

| 176 |

+

del query_layer

|

| 177 |

+

del key_layer

|

| 178 |

+

|

| 179 |

+

if attention_mask is not None:

|

| 180 |

+

# Apply the attention mask (precomputed for all layers in RobertaModel forward() function)

|

| 181 |

+

attention_scores = attention_scores + attention_mask

|

| 182 |

+

|

| 183 |

+

# Add causal mask

|

| 184 |

+

causal_shape = (self.block_size, self.block_size) if causal_shape is None else causal_shape

|

| 185 |

+

causal_mask = torch.tril(

|

| 186 |

+

torch.ones(*causal_shape, device=attention_mask.device, dtype=attention_scores.dtype),

|

| 187 |

+

diagonal=-1

|

| 188 |

+

)

|

| 189 |

+

causal_mask = causal_mask.T * torch.finfo(attention_scores.dtype).min

|

| 190 |

+

attention_scores[..., -causal_shape[0]:, -causal_shape[1]:] += causal_mask

|

| 191 |

+

|

| 192 |

+

del attention_mask

|

| 193 |

+

|

| 194 |

+

# Normalize the attention scores to probabilities.

|

| 195 |

+

attention_probs = nn.Softmax(dim=-1)(attention_scores)

|

| 196 |

+

|

| 197 |

+

# This is actually dropping out entire tokens to attend to, which might

|

| 198 |

+

# seem a bit unusual, but is taken from the original Transformer paper.

|

| 199 |

+

context_layer = self.dropout(attention_probs) @ value_layer

|

| 200 |

+

|

| 201 |

+

return context_layer

|
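The causal mask built in `CausalAttentionProduct.forward` (`torch.tril(..., diagonal=-1)` followed by a transpose and a multiplication by the dtype minimum) is zero on and below the diagonal and strongly negative above it. The sketch below reproduces that pattern for a square block in plain Python. The name `causal_mask` and the use of `-sys.float_info.max` as a stand-in for `torch.finfo(dtype).min` are assumptions made for illustration.

```python
import sys


def causal_mask(size, fill=-sys.float_info.max):
    """Square additive mask: row i may attend to columns j <= i only.

    Hypothetical helper; `fill` stands in for torch.finfo(dtype).min.
    """
    # Strictly-upper-triangular entries (j > i) are future positions and get `fill`.
    return [[fill if j > i else 0.0 for j in range(size)] for i in range(size)]
```

Each row of the result can be added to a row of attention scores before the softmax, so future positions receive a probability of effectively zero.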

| 202 |

+

|

| 203 |

+

|

| 204 |

+

class LSGAttentionProduct(nn.Module):

|

| 205 |

+

|

| 206 |

+

def __init__(self, config, block_size=None, sparse_block_size=None, sparsity_factor=4, is_causal=False):

|

| 207 |

+

"""

|

| 208 |

+

Compute block or overlapping blocks attention products

|

| 209 |

+

"""

|

| 210 |

+

super().__init__()

|

| 211 |

+

|

| 212 |

+

self.block_size = block_size

|

| 213 |

+

self.sparse_block_size = sparse_block_size

|

| 214 |

+

self.sparsity_factor = sparsity_factor

|

| 215 |

+

self.is_causal = is_causal

|

| 216 |

+

|

| 217 |

+

if self.block_size is None:

|

| 218 |

+

self.block_size = config.block_size

|

| 219 |

+

|

| 220 |

+

if self.sparse_block_size is None:

|

| 221 |

+

self.sparse_block_size = config.sparse_block_size

|

| 222 |

+

|

| 223 |

+

# Shape of blocks

|

| 224 |

+

self.local_shapes = (self.block_size*3, self.block_size)

|

| 225 |

+

if self.sparse_block_size and self.sparsity_factor > 0:

|

| 226 |

+

self.sparse_shapes = (self.sparse_block_size*3, self.block_size//self.sparsity_factor)

|

| 227 |

+

|

| 228 |

+

if is_causal:

|

| 229 |

+

self.attention = CausalAttentionProduct(config)

|

| 230 |

+

else:

|

| 231 |

+

self.attention = BaseAttentionProduct(config)

|
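The `(size, step)` pairs stored by `LSGAttentionProduct.__init__` can be checked with a little arithmetic. The sketch below mirrors the two assignments in plain Python. The function name `lsg_block_shapes` is hypothetical, and the values 128, 128 and 4 used in the note after it are assumed for illustration rather than taken from this model's `config.json`.

```python
def lsg_block_shapes(block_size, sparse_block_size, sparsity_factor):
    """Return (local_shapes, sparse_shapes) as (window size, step) pairs.

    Hypothetical helper mirroring the arithmetic in __init__.
    """
    # Each local window spans three blocks and advances one block at a time.
    local_shapes = (block_size * 3, block_size)
    sparse_shapes = None
    if sparse_block_size and sparsity_factor > 0:
        # Sparse tokens are subsampled, so the step shrinks by the sparsity factor.
        sparse_shapes = (sparse_block_size * 3, block_size // sparsity_factor)
    return local_shapes, sparse_shapes
```

For example, `lsg_block_shapes(128, 128, 4)` gives a local window of 384 tokens with step 128 and a sparse window of 384 with step 32. When `sparse_block_size` is 0, no sparse shape is produced.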

| 232 |

+

|

| 233 |

+

def build_lsg_inputs(self, hidden_states, sparse_hidden_states, global_hidden_states, is_attn_mask=False):

|

| 234 |

+

|

| 235 |

+

# Build local tokens

|

| 236 |

+

local_hidden_states = self.reshape_to_local_block(hidden_states, is_attn_mask)

|

| 237 |

+

del hidden_states

|

| 238 |

+

|

| 239 |

+

# Build sparse tokens

|

| 240 |

+

if sparse_hidden_states is not None:

|

| 241 |

+

sparse_hidden_states = self.reshape_to_sparse_block(sparse_hidden_states, is_attn_mask)

|

| 242 |

+

|

| 243 |

+

return self.cat_global_sparse_local_tokens(global_hidden_states, sparse_hidden_states, local_hidden_states)

|

| 244 |

+

|

| 245 |

+

def forward(

|

| 246 |

+

self,

|

| 247 |

+

query_layer,

|

| 248 |

+

key_layer,

|

| 249 |

+

value_layer,

|

| 250 |

+

attention_mask=None,

|

| 251 |

+

sparse_key=None,

|

| 252 |

+

sparse_value=None,

|

| 253 |

+

sparse_mask=None,

|

| 254 |

+

global_key=None,

|

| 255 |

+

global_value=None,

|

| 256 |

+

global_mask=None

|

| 257 |

+

):

|

| 258 |

+

|

| 259 |

+

# Input batch, heads, length, hidden_size

|

| 260 |

+

n, h, t, d = query_layer.size()

|

| 261 |

+

n_blocks = t // self.block_size

|

| 262 |

+

assert t % self.block_size == 0

|

| 263 |

+

|

| 264 |

+

key_layer = self.build_lsg_inputs(

|

| 265 |

+

key_layer,

|

| 266 |

+

sparse_key,

|

| 267 |

+

global_key

|

| 268 |

+

)

|

| 269 |

+

del sparse_key

|

| 270 |

+

del global_key

|

| 271 |

+

|

| 272 |

+

value_layer = self.build_lsg_inputs(

|

| 273 |

+

value_layer,

|

| 274 |

+

sparse_value,

|

| 275 |

+

global_value

|

| 276 |

+

)

|

| 277 |

+

del sparse_value

|

| 278 |

+

del global_value

|

| 279 |

+

|

| 280 |

+

attention_mask = self.build_lsg_inputs(

|

| 281 |

+

attention_mask,

|

| 282 |

+

sparse_mask,

|

| 283 |

+

global_mask.transpose(-1, -2),

|

| 284 |

+

is_attn_mask=True

|

| 285 |

+

).transpose(-1, -2)

|

| 286 |

+

del sparse_mask

|

| 287 |

+

del global_mask

|

| 288 |

+

|

| 289 |

+

# expect (..., t, d) shape

|

| 290 |

+

# Compute attention

|

| 291 |

+

context_layer = self.attention(

|

| 292 |

+

query_layer=self.chunk(query_layer, n_blocks),

|

| 293 |

+

key_layer=key_layer,

|

| 294 |

+

value_layer=value_layer,

|

| 295 |

+

attention_mask=attention_mask

|

| 296 |

+

)

|

| 297 |

+

|

| 298 |

+

return context_layer.reshape(n, h, -1, d)

|

| 299 |

+

|

| 300 |

+

def reshape_to_local_block(self, hidden_states, is_attn_mask=False):

|

| 301 |

+

|

| 302 |

+

size, step = self.local_shapes

|

| 303 |

+

s = (size - step) // 2

|

| 304 |

+

|

| 305 |

+

# Pad before block reshaping

|

| 306 |

+

if is_attn_mask:

|

| 307 |

+

pad_value = torch.finfo(hidden_states.dtype).min

|

| 308 |

+

hidden_states = hidden_states.transpose(-1, -2)

|

| 309 |

+

else:

|

| 310 |

+

pad_value = 0

|

| 311 |

+

|

| 312 |

+

hidden_states = torch.nn.functional.pad(

|

| 313 |

+

hidden_states.transpose(-1, -2),

|

| 314 |

+

pad=(s, s),

|

| 315 |

+

value=pad_value

|

| 316 |

+

).transpose(-1, -2)

|

| 317 |

+

|

| 318 |

+

# Make blocks

|

| 319 |

+

hidden_states = hidden_states.unfold(-2, size=size, step=step).transpose(-1, -2)

|

| 320 |

+

|

| 321 |

+

# Skip third block if causal

|

| 322 |

+

if self.is_causal:

|

| 323 |

+

return hidden_states[..., :size*2//3, :]

|

| 324 |

+

|

| 325 |

+

return hidden_states

|
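The pad-then-unfold step in `reshape_to_local_block` is easier to follow on a flat list of token ids. The plain-Python sketch below applies the same windowing along the sequence axis only. The name `local_blocks` and the list-based input are assumptions, whereas the real method operates on `(n, h, t, d)` tensors with `Tensor.unfold`.

```python
def local_blocks(tokens, block_size, pad_value=0, causal=False):
    """Overlapping windows of 3 * block_size tokens, one per block.

    Hypothetical helper illustrating the padding and unfolding logic.
    """
    size, step = block_size * 3, block_size
    s = (size - step) // 2  # one block of padding on each side
    padded = [pad_value] * s + list(tokens) + [pad_value] * s
    # Slide a window of `size` tokens forward by `step` tokens at a time.
    windows = [padded[start:start + size]
               for start in range(0, len(padded) - size + 1, step)]
    if causal:
        # Drop the right-hand (future) block of every window.
        windows = [window[: size * 2 // 3] for window in windows]
    return windows
```

For `tokens = [1, 2, 3, 4, 5, 6]` and `block_size = 2`, this yields three windows, `[0, 0, 1, 2, 3, 4]`, `[1, 2, 3, 4, 5, 6]` and `[3, 4, 5, 6, 0, 0]`, so each block of queries sees its own block plus one neighbour on each side.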

| 326 |

+

|

| 327 |

+

def reshape_to_sparse_block(self, hidden_states, is_attn_mask=False):

|

| 328 |

+

|

| 329 |

+

size, step = self.sparse_shapes

|

| 330 |

+

|

| 331 |

+

# Offset used when the step is odd

|

| 332 |

+

odd_offset = (step % 2)

|

| 333 |

+

|

| 334 |

+

# n, h, t, d*2 + 1

|

| 335 |

+

size = size*2

|

| 336 |

+

s = (size - step) // 2 + odd_offset

|

| 337 |

+

|

| 338 |

+

# Pad before block reshaping

|

| 339 |

+

if is_attn_mask:

|

| 340 |

+

pad_value = torch.finfo(hidden_states.dtype).min

|

| 341 |

+

hidden_states = hidden_states.transpose(-1, -2)

|

| 342 |

+

else:

|

| 343 |

+

pad_value = 0

|

| 344 |

+

|

| 345 |

+

hidden_states = torch.nn.functional.pad(

|

| 346 |

+

hidden_states.transpose(-1, -2),

|

| 347 |

+

pad=(s, s),

|

| 348 |

+

value=pad_value

|

| 349 |

+

).transpose(-1, -2)

|

| 350 |

+

|

| 351 |

+

# Make blocks

|

| 352 |

+

hidden_states = hidden_states.unfold(-2, size=size, step=step).transpose(-1, -2)

|

| 353 |

+

|

| 354 |

+

# Fix case where block_size == sparsity_factor

|

| 355 |

+

if odd_offset:

|

| 356 |

+

hidden_states = hidden_states[..., :-1, :, :]

|

| 357 |

+

|

| 358 |

+

# Indexes for selection

|

| 359 |

+

u = (size - self.block_size * 3 // self.sparsity_factor) // 2 + odd_offset

|

| 360 |

+

s = self.sparse_block_size

|

| 361 |

+

|

| 362 |

+

# Skip right block if causal

|

| 363 |

+

if self.is_causal:

|

| 364 |

+

return hidden_states[..., u-s:u, :]

|

| 365 |

+

|

| 366 |

+

u_ = u + odd_offset

|

| 367 |

+

return torch.cat([hidden_states[..., u-s:u, :], hidden_states[..., -u_:-u_+s, :]], dim=-2)

|

| 368 |

+

|

| 369 |

+

def cat_global_sparse_local_tokens(self, x_global, x_sparse=None, x_local=None, dim=-2):

|

| 370 |

+

|

| 371 |

+

n, h, b, t, d = x_local.size()

|

| 372 |

+

x_global = x_global.unsqueeze(-3).expand(-1, -1, b, -1, -1)

|

| 373 |

+

if x_sparse is not None:

|

| 374 |

+

return torch.cat([x_global, x_sparse, x_local], dim=dim)

|

| 375 |

+

return torch.cat([x_global, x_local], dim=dim)

|

| 376 |

+

|

| 377 |

+

def chunk(self, x, n_blocks):

|

| 378 |

+

|

| 379 |

+

t, d = x.size()[-2:]

|

| 380 |

+

return x.reshape(*x.size()[:-2], n_blocks, -1, d)

|
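The two helpers above, `cat_global_sparse_local_tokens` and `chunk`, decide what each block of queries attends to. The following plain-Python sketch shows the same bookkeeping on lists. The names `chunk_tokens` and `cat_global_sparse_local` are hypothetical, and the tensor versions do this with `reshape`, `expand` and `torch.cat` rather than loops.

```python
def chunk_tokens(tokens, n_blocks):
    """Split a sequence into n_blocks consecutive, equally sized blocks."""
    block_len = len(tokens) // n_blocks
    return [list(tokens[i * block_len:(i + 1) * block_len]) for i in range(n_blocks)]


def cat_global_sparse_local(global_tokens, local_windows, sparse_windows=None):
    """Build the per-block key list: global tokens, then sparse, then local.

    Hypothetical helper; every block receives the same global tokens.
    """
    merged = []
    for index, local in enumerate(local_windows):
        row = list(global_tokens)
        if sparse_windows is not None:
            row += list(sparse_windows[index])
        merged.append(row + list(local))
    return merged
```

Queries are chunked into blocks, and block `i` of the queries is then matched against row `i` of the merged keys, which is what keeps the cost linear in the number of blocks.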

| 381 |

+

|