Upload 25 files

- train/F1_curve.png +0 -0

- train/PR_curve.png +0 -0

- train/P_curve.png +0 -0

- train/R_curve.png +0 -0

- train/args.yaml +17 -9

- train/confusion_matrix.png +0 -0

- train/confusion_matrix_normalized.png +0 -0

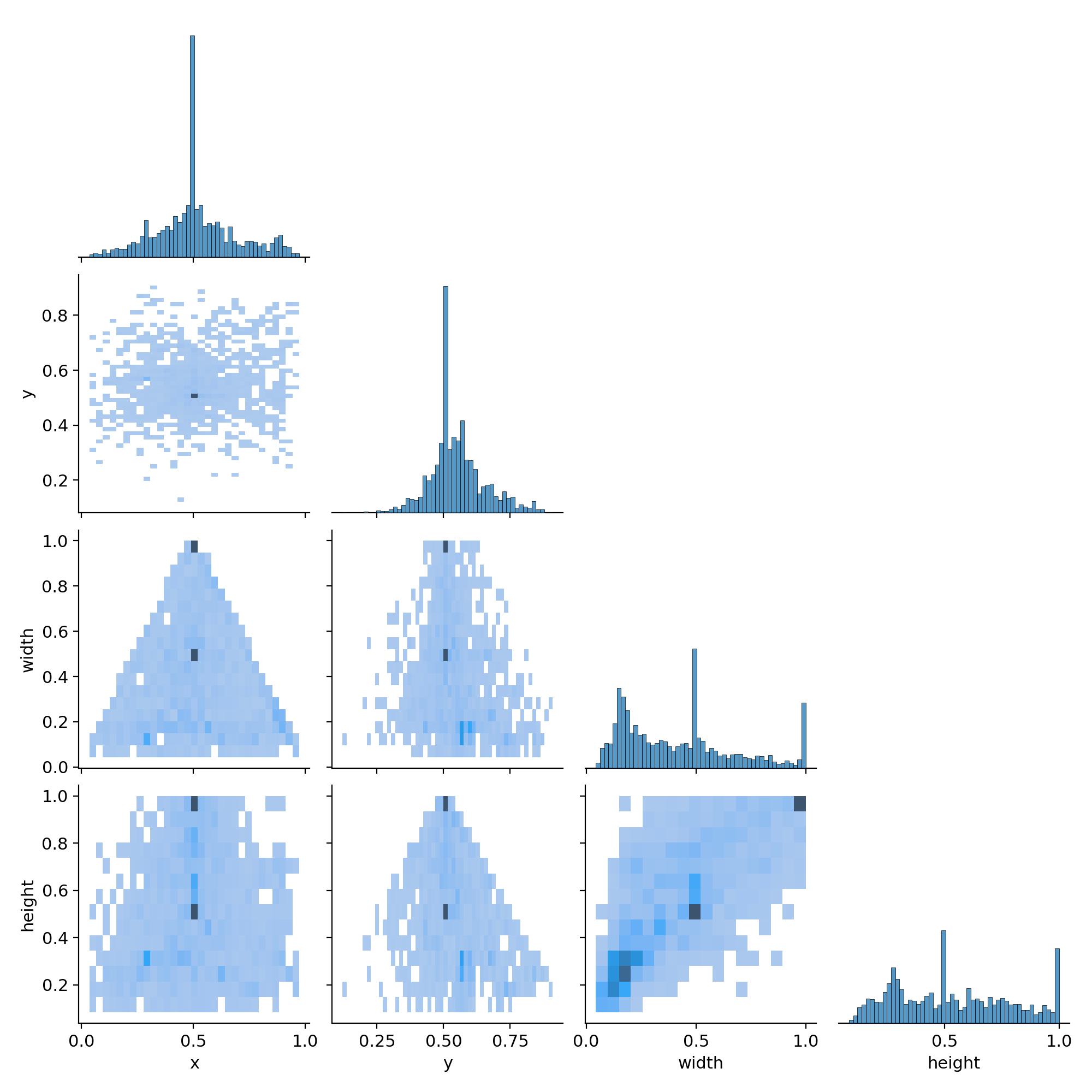

- train/labels.jpg +0 -0

- train/labels_correlogram.jpg +0 -0

- train/results.csv +100 -54

- train/results.png +0 -0

- train/train_batch0.jpg +0 -0

- train/train_batch1.jpg +0 -0

- train/train_batch11250.jpg +0 -0

- train/train_batch11251.jpg +0 -0

- train/train_batch11252.jpg +0 -0

- train/train_batch2.jpg +0 -0

- train/val_batch0_labels.jpg +0 -0

- train/val_batch0_pred.jpg +0 -0

- train/val_batch1_labels.jpg +0 -0

- train/val_batch1_pred.jpg +0 -0

- train/val_batch2_labels.jpg +0 -0

- train/val_batch2_pred.jpg +0 -0

- train/weights/best.pt +2 -2

- train/weights/last.pt +2 -2

train/F1_curve.png (CHANGED)

train/PR_curve.png (CHANGED)

train/P_curve.png (CHANGED)

train/R_curve.png (CHANGED)

train/args.yaml (CHANGED)

```diff
@@ -1,8 +1,9 @@
 task: detect
 mode: train
 model: /workspace/yolov8n.pt
-data: /
+data: /deer_v7_batched/deer_v7_batch_1/data.yml
 epochs: 100
+time: null
 patience: 10
 batch: 16
 imgsz: 640
@@ -28,6 +29,7 @@ amp: true
 fraction: 1.0
 profile: false
 freeze: null
+multi_scale: false
 overlap_mask: true
 mask_ratio: 4
 dropout: 0.0
@@ -42,21 +44,23 @@ half: false
 dnn: false
 plots: true
 source: null
-show: false
-save_txt: false
-save_conf: false
-save_crop: false
-show_labels: true
-show_conf: true
 vid_stride: 1
 stream_buffer: false
-line_width: null
 visualize: false
 augment: false
 agnostic_nms: false
 classes: null
 retina_masks: false
-
+embed: null
+show: false
+save_frames: false
+save_txt: false
+save_conf: false
+save_crop: false
+show_labels: true
+show_conf: true
+show_boxes: true
+line_width: null
 format: torchscript
 keras: false
 optimize: false
@@ -90,9 +94,13 @@ shear: 0.0
 perspective: 0.0
 flipud: 0.0
 fliplr: 0.5
+bgr: 0.0
 mosaic: 1.0
 mixup: 0.0
 copy_paste: 0.0
+auto_augment: randaugment
+erasing: 0.4
+crop_fraction: 1.0
 cfg: null
 tracker: botsort.yaml
 save_dir: /workspace/runs/train_run_1/train
```
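args.yaml pairs `patience: 10` with `epochs: 100`. The sketch below illustrates what that setting does: training stops once 10 epochs in a row pass without a new best fitness value. It reflects our understanding of Ultralytics' early-stopping rule and is not taken from its source. The class and function names are hypothetical.

```python
class EarlyStopper:
    """Signal a stop once `patience` epochs pass without a new best fitness."""

    def __init__(self, patience: int = 10):
        self.patience = patience
        self.best_fitness = float("-inf")
        self.best_epoch = 0

    def step(self, epoch: int, fitness: float) -> bool:
        """Record one epoch's fitness; return True if training should stop."""
        # Assumption: a tie with the current best counts as an improvement.
        if fitness >= self.best_fitness:
            self.best_fitness = fitness
            self.best_epoch = epoch
        return epoch - self.best_epoch >= self.patience


def stop_epoch(fitnesses, patience: int = 10):
    """Return the 1-based epoch at which training would stop, or None if it runs to the end."""
    stopper = EarlyStopper(patience)
    for epoch, fit in enumerate(fitnesses, start=1):
        if stopper.step(epoch, fit):
            return epoch
    return None


# Best value at epoch 2, then 10 flat epochs: the run stops at epoch 12.
print(stop_epoch([0.5, 0.6] + [0.55] * 10, patience=10))
```

The updated results.csv contains a row for each of the 100 configured epochs, so in this run the patience criterion evidently never triggered.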

train/confusion_matrix.png (CHANGED)

train/confusion_matrix_normalized.png (CHANGED)

train/labels.jpg (CHANGED)

train/labels_correlogram.jpg (CHANGED)

train/results.csv (CHANGED)

```diff
@@ -1,55 +1,101 @@
 epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
-1, 1.
-2, 1.
-3, 1.
-4, 1.
-5, 1.
-6, 1.
-7, 1.
-8, 1.
-9,
-10,
-11, 1.
-12, 1.
-13, 1.
-14, 1.
-15, 1.
-16, 1.
-17,
-18, 1.
-19, 1.
-20,
-21, 1.
-22, 1.
-23,
-24,
-25, 1.
-26, 1.
-27, 1.
-28, 1.
-29, 1.
-30, 1.
-31, 1.
-32, 1.
-33, 1.
-34, 1.
-35, 1.
-36, 1.
-37, 1.
-38, 1.
-39,
-40, 1.
-41,
-42,
-43,
-44, 1.
-45,
-46,
-47,
-48,
-49,
-50,
-51,
-52,
-53,
-54,
+1, 1.3319, 1.9389, 1.5572, 0.66437, 0.51574, 0.57525, 0.28466, 1.5432, 2.2896, 1.7922, 0.00066133, 0.00066133, 0.00066133
+2, 1.4503, 1.6458, 1.6456, 0.58779, 0.56777, 0.58637, 0.29895, 1.7695, 2.0758, 2.0873, 0.0013147, 0.0013147, 0.0013147
+3, 1.4837, 1.505, 1.6676, 0.65056, 0.5512, 0.59051, 0.27176, 1.8934, 1.6368, 2.0316, 0.0019548, 0.0019548, 0.0019548
+4, 1.4742, 1.4377, 1.6535, 0.76204, 0.65211, 0.71095, 0.38159, 1.613, 1.7156, 1.8098, 0.00194, 0.00194, 0.00194
+5, 1.4436, 1.3329, 1.6229, 0.70811, 0.6138, 0.63616, 0.32627, 1.7326, 1.6136, 1.8997, 0.00192, 0.00192, 0.00192
+6, 1.4162, 1.2883, 1.6068, 0.8353, 0.71235, 0.79082, 0.40202, 1.7221, 1.3139, 1.8792, 0.0019001, 0.0019001, 0.0019001
+7, 1.3578, 1.2017, 1.5542, 0.8356, 0.71954, 0.7939, 0.42871, 1.5642, 1.1996, 1.7318, 0.0018801, 0.0018801, 0.0018801
+8, 1.3795, 1.1859, 1.5733, 0.85289, 0.75753, 0.82535, 0.48419, 1.4731, 1.1071, 1.6581, 0.0018601, 0.0018601, 0.0018601
+9, 1.328, 1.1385, 1.5299, 0.85182, 0.78765, 0.84764, 0.50074, 1.4434, 1.0358, 1.596, 0.0018401, 0.0018401, 0.0018401
+10, 1.3056, 1.0945, 1.5098, 0.87109, 0.76323, 0.84347, 0.50211, 1.44, 1.0225, 1.6233, 0.0018201, 0.0018201, 0.0018201
+11, 1.2995, 1.1002, 1.5105, 0.83467, 0.80873, 0.86351, 0.51792, 1.3729, 1.0322, 1.5746, 0.0018001, 0.0018001, 0.0018001
+12, 1.2755, 1.0501, 1.4925, 0.82779, 0.71536, 0.79584, 0.4422, 1.5377, 1.1848, 1.7186, 0.0017801, 0.0017801, 0.0017801
+13, 1.2691, 1.0404, 1.4961, 0.87969, 0.74699, 0.84741, 0.52667, 1.37, 0.95022, 1.5667, 0.0017601, 0.0017601, 0.0017601
+14, 1.2237, 0.99533, 1.4577, 0.89408, 0.81325, 0.87567, 0.5437, 1.34, 0.9001, 1.5343, 0.0017401, 0.0017401, 0.0017401
+15, 1.2304, 0.98767, 1.4774, 0.88232, 0.82831, 0.88297, 0.54429, 1.3675, 0.85753, 1.5657, 0.0017201, 0.0017201, 0.0017201
+16, 1.2063, 0.95478, 1.4433, 0.92573, 0.82596, 0.89358, 0.56527, 1.2983, 0.81623, 1.501, 0.0017002, 0.0017002, 0.0017002
+17, 1.193, 0.94033, 1.4333, 0.90835, 0.82229, 0.88183, 0.55209, 1.3506, 0.82356, 1.5663, 0.0016802, 0.0016802, 0.0016802
+18, 1.1762, 0.91876, 1.4211, 0.93227, 0.86145, 0.91883, 0.58322, 1.2944, 0.77196, 1.465, 0.0016602, 0.0016602, 0.0016602
+19, 1.1766, 0.89996, 1.4273, 0.88375, 0.8358, 0.88575, 0.54884, 1.3057, 0.84002, 1.5216, 0.0016402, 0.0016402, 0.0016402
+20, 1.1663, 0.89129, 1.4204, 0.91018, 0.85459, 0.92199, 0.58784, 1.2428, 0.75788, 1.4358, 0.0016202, 0.0016202, 0.0016202
+21, 1.1488, 0.87247, 1.404, 0.88688, 0.83133, 0.87583, 0.54372, 1.3351, 0.76871, 1.5392, 0.0016002, 0.0016002, 0.0016002
+22, 1.1282, 0.85634, 1.3964, 0.89432, 0.85693, 0.90005, 0.57259, 1.2556, 0.78641, 1.4653, 0.0015802, 0.0015802, 0.0015802
+23, 1.122, 0.83773, 1.3815, 0.92305, 0.8509, 0.90502, 0.58526, 1.2636, 0.75162, 1.4748, 0.0015602, 0.0015602, 0.0015602
+24, 1.1529, 0.87412, 1.4047, 0.92811, 0.87499, 0.92486, 0.61492, 1.2026, 0.69445, 1.4086, 0.0015402, 0.0015402, 0.0015402
+25, 1.1204, 0.8215, 1.3786, 0.91869, 0.87651, 0.92202, 0.60921, 1.2123, 0.70228, 1.4267, 0.0015202, 0.0015202, 0.0015202
+26, 1.1108, 0.83268, 1.3781, 0.90403, 0.83283, 0.90531, 0.58423, 1.2607, 0.78015, 1.4531, 0.0015002, 0.0015002, 0.0015002
+27, 1.1091, 0.82368, 1.3757, 0.92232, 0.87616, 0.92691, 0.60369, 1.2177, 0.6925, 1.4254, 0.0014803, 0.0014803, 0.0014803
+28, 1.0772, 0.80484, 1.3531, 0.94044, 0.83886, 0.91844, 0.60243, 1.2137, 0.71425, 1.4197, 0.0014603, 0.0014603, 0.0014603
+29, 1.0886, 0.79289, 1.3518, 0.93182, 0.87048, 0.92517, 0.61245, 1.198, 0.69431, 1.4314, 0.0014403, 0.0014403, 0.0014403
+30, 1.0808, 0.81306, 1.3562, 0.93818, 0.86851, 0.91973, 0.60768, 1.2042, 0.68076, 1.4199, 0.0014203, 0.0014203, 0.0014203
+31, 1.0541, 0.76236, 1.3351, 0.94171, 0.87589, 0.93404, 0.61088, 1.2033, 0.65307, 1.4515, 0.0014003, 0.0014003, 0.0014003
+32, 1.0461, 0.7607, 1.3405, 0.95169, 0.89012, 0.92513, 0.60954, 1.2216, 0.65525, 1.4279, 0.0013803, 0.0013803, 0.0013803
+33, 1.0405, 0.76315, 1.3173, 0.94051, 0.86898, 0.9253, 0.60309, 1.2132, 0.66961, 1.4088, 0.0013603, 0.0013603, 0.0013603
+34, 1.0537, 0.76513, 1.3262, 0.94901, 0.89157, 0.93683, 0.61974, 1.1821, 0.6361, 1.3885, 0.0013403, 0.0013403, 0.0013403
+35, 1.0364, 0.74774, 1.3137, 0.95, 0.88698, 0.93346, 0.62166, 1.1639, 0.62096, 1.386, 0.0013203, 0.0013203, 0.0013203
+36, 1.0375, 0.75455, 1.3219, 0.90028, 0.88705, 0.92154, 0.62715, 1.1578, 0.66632, 1.3846, 0.0013004, 0.0013004, 0.0013004
+37, 1.0348, 0.73579, 1.3174, 0.93157, 0.88705, 0.93624, 0.63515, 1.1485, 0.61699, 1.3809, 0.0012804, 0.0012804, 0.0012804
+38, 1.0362, 0.72428, 1.3183, 0.93287, 0.87651, 0.92179, 0.62797, 1.1491, 0.63005, 1.3902, 0.0012604, 0.0012604, 0.0012604
+39, 0.9951, 0.71699, 1.2976, 0.94785, 0.87599, 0.93424, 0.64226, 1.1366, 0.62394, 1.3764, 0.0012404, 0.0012404, 0.0012404
+40, 1.0286, 0.73329, 1.3192, 0.94696, 0.86295, 0.93192, 0.62027, 1.1531, 0.65479, 1.3848, 0.0012204, 0.0012204, 0.0012204
+41, 0.99408, 0.69377, 1.2959, 0.94441, 0.87952, 0.93655, 0.64236, 1.1281, 0.60461, 1.3764, 0.0012004, 0.0012004, 0.0012004
+42, 0.98241, 0.68742, 1.2776, 0.93415, 0.88855, 0.93312, 0.6435, 1.1133, 0.59133, 1.3584, 0.0011804, 0.0011804, 0.0011804
+43, 0.97985, 0.70068, 1.2927, 0.92997, 0.89307, 0.93209, 0.62546, 1.1875, 0.61591, 1.3906, 0.0011604, 0.0011604, 0.0011604
+44, 1.0005, 0.6981, 1.296, 0.94236, 0.91107, 0.93997, 0.64518, 1.1049, 0.59164, 1.3586, 0.0011404, 0.0011404, 0.0011404
+45, 0.9878, 0.69831, 1.2833, 0.95094, 0.87578, 0.93467, 0.65068, 1.1129, 0.59774, 1.3432, 0.0011204, 0.0011204, 0.0011204
+46, 0.99315, 0.68826, 1.2971, 0.94434, 0.89608, 0.93404, 0.64314, 1.1345, 0.59672, 1.3651, 0.0011004, 0.0011004, 0.0011004
+47, 0.98394, 0.67581, 1.2867, 0.93328, 0.88705, 0.92861, 0.64548, 1.1003, 0.57873, 1.3558, 0.0010805, 0.0010805, 0.0010805
+48, 0.94958, 0.67277, 1.2631, 0.9366, 0.88988, 0.92701, 0.63939, 1.1163, 0.59788, 1.3604, 0.0010605, 0.0010605, 0.0010605
+49, 0.94926, 0.6719, 1.2596, 0.93922, 0.88102, 0.93569, 0.64946, 1.0985, 0.58132, 1.3427, 0.0010405, 0.0010405, 0.0010405
+50, 0.96627, 0.65725, 1.2672, 0.95063, 0.88253, 0.93601, 0.64978, 1.0963, 0.57288, 1.3604, 0.0010205, 0.0010205, 0.0010205
+51, 0.93638, 0.65394, 1.2542, 0.95005, 0.88102, 0.93848, 0.64735, 1.0872, 0.57092, 1.3354, 0.0010005, 0.0010005, 0.0010005
+52, 0.95541, 0.66516, 1.2649, 0.91461, 0.88717, 0.92964, 0.64037, 1.1362, 0.61545, 1.379, 0.00098051, 0.00098051, 0.00098051
+53, 0.94663, 0.63978, 1.2653, 0.95479, 0.875, 0.93873, 0.6471, 1.1087, 0.5885, 1.3729, 0.00096052, 0.00096052, 0.00096052
+54, 0.93826, 0.6342, 1.2647, 0.94367, 0.88297, 0.93765, 0.65868, 1.1025, 0.58733, 1.3526, 0.00094053, 0.00094053, 0.00094053
+55, 0.92148, 0.62356, 1.2452, 0.93676, 0.89006, 0.93833, 0.65184, 1.1361, 0.58628, 1.3708, 0.00092054, 0.00092054, 0.00092054
+56, 0.94256, 0.63751, 1.2642, 0.94328, 0.90163, 0.94404, 0.65295, 1.0917, 0.56268, 1.3527, 0.00090055, 0.00090055, 0.00090055
+57, 0.93246, 0.63583, 1.2526, 0.93614, 0.90515, 0.94025, 0.65183, 1.0904, 0.57427, 1.3857, 0.00088056, 0.00088056, 0.00088056
+58, 0.92829, 0.62547, 1.2537, 0.95068, 0.89985, 0.94038, 0.65068, 1.09, 0.57144, 1.3484, 0.00086057, 0.00086057, 0.00086057
+59, 0.91279, 0.61362, 1.2392, 0.94549, 0.88814, 0.93805, 0.65967, 1.0807, 0.56447, 1.3499, 0.00084058, 0.00084058, 0.00084058
+60, 0.90413, 0.62406, 1.2336, 0.95183, 0.8928, 0.94162, 0.66447, 1.0822, 0.5538, 1.3472, 0.00082059, 0.00082059, 0.00082059
+61, 0.90536, 0.61694, 1.2381, 0.95787, 0.89029, 0.94043, 0.65505, 1.0942, 0.55624, 1.3542, 0.0008006, 0.0008006, 0.0008006
+62, 0.87961, 0.60812, 1.2262, 0.93794, 0.89608, 0.92748, 0.64996, 1.1086, 0.56629, 1.3794, 0.00078061, 0.00078061, 0.00078061
+63, 0.87036, 0.58885, 1.2155, 0.94793, 0.89307, 0.93379, 0.65885, 1.0846, 0.54267, 1.3491, 0.00076062, 0.00076062, 0.00076062
+64, 0.87382, 0.58893, 1.2168, 0.95493, 0.89355, 0.94819, 0.6715, 1.0748, 0.55735, 1.3361, 0.00074063, 0.00074063, 0.00074063
+65, 0.88006, 0.58724, 1.2138, 0.94674, 0.9102, 0.94689, 0.65812, 1.1036, 0.5506, 1.3471, 0.00072064, 0.00072064, 0.00072064
+66, 0.88766, 0.58998, 1.2187, 0.93954, 0.90813, 0.94545, 0.65942, 1.0788, 0.53711, 1.3273, 0.00070065, 0.00070065, 0.00070065
+67, 0.87421, 0.57441, 1.2051, 0.96279, 0.89628, 0.94583, 0.66176, 1.1002, 0.54171, 1.3444, 0.00068066, 0.00068066, 0.00068066
+68, 0.87971, 0.5827, 1.2185, 0.93937, 0.90512, 0.94814, 0.65634, 1.0967, 0.546, 1.3503, 0.00066067, 0.00066067, 0.00066067
+69, 0.85838, 0.57282, 1.1943, 0.94977, 0.91117, 0.94533, 0.6648, 1.1031, 0.53447, 1.3639, 0.00064068, 0.00064068, 0.00064068
+70, 0.85315, 0.56511, 1.2001, 0.96022, 0.90892, 0.95485, 0.66769, 1.0828, 0.53359, 1.348, 0.00062069, 0.00062069, 0.00062069
+71, 0.86474, 0.5826, 1.2171, 0.95041, 0.89478, 0.94002, 0.66189, 1.0692, 0.53754, 1.3521, 0.0006007, 0.0006007, 0.0006007
+72, 0.81885, 0.54574, 1.1857, 0.95275, 0.89608, 0.94652, 0.67172, 1.0635, 0.53495, 1.3406, 0.00058071, 0.00058071, 0.00058071
+73, 0.82888, 0.56118, 1.1885, 0.94647, 0.90543, 0.94526, 0.67382, 1.0595, 0.52944, 1.3422, 0.00056072, 0.00056072, 0.00056072
+74, 0.81734, 0.54483, 1.1845, 0.95082, 0.90265, 0.94598, 0.67337, 1.0672, 0.53474, 1.3354, 0.00054073, 0.00054073, 0.00054073
+75, 0.83908, 0.54928, 1.2002, 0.94465, 0.90211, 0.94151, 0.66637, 1.0686, 0.53234, 1.345, 0.00052074, 0.00052074, 0.00052074
+76, 0.81866, 0.53604, 1.1807, 0.95673, 0.9006, 0.94468, 0.67302, 1.0515, 0.52123, 1.344, 0.00050075, 0.00050075, 0.00050075
+77, 0.82191, 0.53904, 1.1758, 0.94633, 0.91114, 0.94587, 0.66746, 1.071, 0.5304, 1.3436, 0.00048076, 0.00048076, 0.00048076
+78, 0.80042, 0.5252, 1.1679, 0.95124, 0.91089, 0.94954, 0.67373, 1.0467, 0.50964, 1.3335, 0.00046077, 0.00046077, 0.00046077
+79, 0.808, 0.52938, 1.1692, 0.95917, 0.89759, 0.94061, 0.67831, 1.0487, 0.51605, 1.3452, 0.00044078, 0.00044078, 0.00044078
+80, 0.78654, 0.51889, 1.1722, 0.96708, 0.8991, 0.94873, 0.67791, 1.0465, 0.51037, 1.3351, 0.00042079, 0.00042079, 0.00042079
+81, 0.80758, 0.53654, 1.1702, 0.95245, 0.90494, 0.94529, 0.673, 1.0598, 0.51563, 1.3441, 0.0004008, 0.0004008, 0.0004008
+82, 0.78199, 0.50917, 1.1536, 0.95568, 0.90211, 0.94753, 0.67363, 1.0498, 0.51088, 1.3454, 0.00038081, 0.00038081, 0.00038081
+83, 0.7792, 0.50774, 1.1621, 0.96001, 0.90384, 0.95187, 0.68448, 1.0385, 0.50381, 1.3267, 0.00036082, 0.00036082, 0.00036082
+84, 0.78113, 0.50577, 1.1479, 0.96166, 0.90652, 0.95033, 0.68063, 1.053, 0.50589, 1.3395, 0.00034083, 0.00034083, 0.00034083
+85, 0.77674, 0.51404, 1.151, 0.94349, 0.91566, 0.95109, 0.67947, 1.0445, 0.50468, 1.3308, 0.00032084, 0.00032084, 0.00032084
+86, 0.79311, 0.52062, 1.1665, 0.95497, 0.90663, 0.95151, 0.6833, 1.043, 0.50616, 1.3417, 0.00030085, 0.00030085, 0.00030085
+87, 0.76435, 0.50203, 1.1492, 0.9472, 0.91566, 0.95502, 0.68569, 1.0446, 0.49888, 1.3438, 0.00028086, 0.00028086, 0.00028086
+88, 0.77065, 0.50064, 1.1522, 0.95602, 0.91668, 0.95431, 0.68404, 1.0298, 0.498, 1.3321, 0.00026087, 0.00026087, 0.00026087
+89, 0.72368, 0.48203, 1.1275, 0.96458, 0.91566, 0.95318, 0.68537, 1.0418, 0.50315, 1.3473, 0.00024088, 0.00024088, 0.00024088
+90, 0.75847, 0.50445, 1.1423, 0.96042, 0.91368, 0.95354, 0.6875, 1.0431, 0.502, 1.3456, 0.00022089, 0.00022089, 0.00022089
+91, 0.71651, 0.3934, 1.1427, 0.96363, 0.90663, 0.94836, 0.6696, 1.0555, 0.51315, 1.3555, 0.0002009, 0.0002009, 0.0002009
+92, 0.67522, 0.36113, 1.1121, 0.96004, 0.90455, 0.94801, 0.67579, 1.0607, 0.52278, 1.3623, 0.00018091, 0.00018091, 0.00018091
+93, 0.67981, 0.36274, 1.1086, 0.95343, 0.90512, 0.95026, 0.68512, 1.0599, 0.51072, 1.3552, 0.00016092, 0.00016092, 0.00016092
+94, 0.66726, 0.35318, 1.0998, 0.948, 0.91114, 0.95, 0.68621, 1.0549, 0.51119, 1.362, 0.00014093, 0.00014093, 0.00014093
+95, 0.65902, 0.34472, 1.1051, 0.96506, 0.89307, 0.95143, 0.68727, 1.0408, 0.5027, 1.3495, 0.00012094, 0.00012094, 0.00012094
+96, 0.6542, 0.34101, 1.0967, 0.95465, 0.90663, 0.95092, 0.68507, 1.0418, 0.5031, 1.3514, 0.00010095, 0.00010095, 0.00010095
+97, 0.64514, 0.34047, 1.086, 0.95706, 0.90512, 0.95248, 0.68645, 1.0447, 0.5041, 1.3534, 8.096e-05, 8.096e-05, 8.096e-05
+98, 0.63979, 0.33715, 1.0742, 0.95381, 0.90196, 0.95255, 0.68842, 1.043, 0.50853, 1.3554, 6.097e-05, 6.097e-05, 6.097e-05
+99, 0.64051, 0.3345, 1.0865, 0.9526, 0.90512, 0.9527, 0.68805, 1.0422, 0.5018, 1.3549, 4.098e-05, 4.098e-05, 4.098e-05
+100, 0.6264, 0.33138, 1.0779, 0.95132, 0.90512, 0.95375, 0.69118, 1.0417, 0.50098, 1.3542, 2.099e-05, 2.099e-05, 2.099e-05
```
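As we understand Ultralytics' defaults, best.pt is the checkpoint with the highest "fitness", a weighted score of 0.1 × mAP50 + 0.9 × mAP50-95 with precision and recall given zero weight. The sketch below assumes those weights and finds the best epoch in a results.csv. Applied to the 100 rows above, the rule selects epoch 100, which also has the run's highest mAP50-95 (0.69118). `best_epoch` is a hypothetical helper, not a library function.

```python
import csv
import io

# Assumed fitness weights (precision and recall contribute nothing).
W_MAP50 = 0.1
W_MAP50_95 = 0.9


def best_epoch(csv_text: str) -> tuple[int, float]:
    """Return (epoch, fitness) for the row with the highest weighted mAP."""
    # skipinitialspace drops the blanks after each comma, in the header too.
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    best = None
    for raw in reader:
        row = {k.strip(): v for k, v in raw.items()}  # tolerate padded column names
        fit = (W_MAP50 * float(row["metrics/mAP50(B)"])
               + W_MAP50_95 * float(row["metrics/mAP50-95(B)"]))
        if best is None or fit > best[1]:
            best = (int(row["epoch"]), fit)
    if best is None:
        raise ValueError("results.csv has no data rows")
    return best


# The last three rows of the new results.csv, copied from the diff above.
SAMPLE = """epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
98, 0.63979, 0.33715, 1.0742, 0.95381, 0.90196, 0.95255, 0.68842, 1.043, 0.50853, 1.3554, 6.097e-05, 6.097e-05, 6.097e-05
99, 0.64051, 0.3345, 1.0865, 0.9526, 0.90512, 0.9527, 0.68805, 1.0422, 0.5018, 1.3549, 4.098e-05, 4.098e-05, 4.098e-05
100, 0.6264, 0.33138, 1.0779, 0.95132, 0.90512, 0.95375, 0.69118, 1.0417, 0.50098, 1.3542, 2.099e-05, 2.099e-05, 2.099e-05
"""

print(best_epoch(SAMPLE))
```

To use it on the full file, pass in the text of a local copy, for example `open("train/results.csv").read()` (the relative path is an assumption about where the repository is checked out).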

train/results.png (CHANGED)

train/train_batch0.jpg (CHANGED)

train/train_batch1.jpg (CHANGED)

train/train_batch11250.jpg (ADDED)

train/train_batch11251.jpg (ADDED)

train/train_batch11252.jpg (ADDED)

train/train_batch2.jpg (CHANGED)

train/val_batch0_labels.jpg (CHANGED)

train/val_batch0_pred.jpg (CHANGED)

train/val_batch1_labels.jpg (CHANGED)

train/val_batch1_pred.jpg (CHANGED)

train/val_batch2_labels.jpg (CHANGED)

train/val_batch2_pred.jpg (CHANGED)

train/weights/best.pt (CHANGED)

```diff
@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
-size
+oid sha256:ab4f2eb05949c6ae6eb4b81f356c58dce6de6a216e21ab1ab7d4a9b4a43d5310
+size 6241262
```

train/weights/last.pt (CHANGED)

```diff
@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
-size
+oid sha256:8dad8be627a8a4929df348a66d92b1b14a32971977d709ab524493f436dcc3e9
+size 6241262
```
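Both checkpoints are stored as Git LFS pointer files. Each pointer has three lines: the spec version, the SHA-256 of the real file, and its size in bytes. The sketch below rebuilds such a pointer from a local copy of best.pt or last.pt, which lets you check that a download matches the oid and size recorded above. It assumes the v1 pointer layout shown in the diff, and the function names are hypothetical.

```python
import hashlib

LFS_VERSION = "https://git-lfs.github.com/spec/v1"


def format_pointer(oid_hex: str, size: int) -> str:
    """Render a v1 LFS pointer: version, oid and size lines, each newline-terminated."""
    return f"version {LFS_VERSION}\noid sha256:{oid_hex}\nsize {size}\n"


def lfs_pointer(data: bytes) -> str:
    """Pointer for an in-memory blob."""
    return format_pointer(hashlib.sha256(data).hexdigest(), len(data))


def lfs_pointer_for_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Pointer for a file on disk, hashed in chunks so large checkpoints need not fit in memory."""
    digest = hashlib.sha256()
    size = 0
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
            size += len(chunk)
    return format_pointer(digest.hexdigest(), size)


print(lfs_pointer(b"hello"))
```

For a downloaded best.pt, the output of `lfs_pointer_for_file("best.pt")` should match the three `+` lines above if the file is intact. The local filename is an assumption.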