v6 vast.ai

- train/F1_curve.png +0 -0

- train/PR_curve.png +0 -0

- train/P_curve.png +0 -0

- train/R_curve.png +0 -0

- train/args.yaml +98 -0

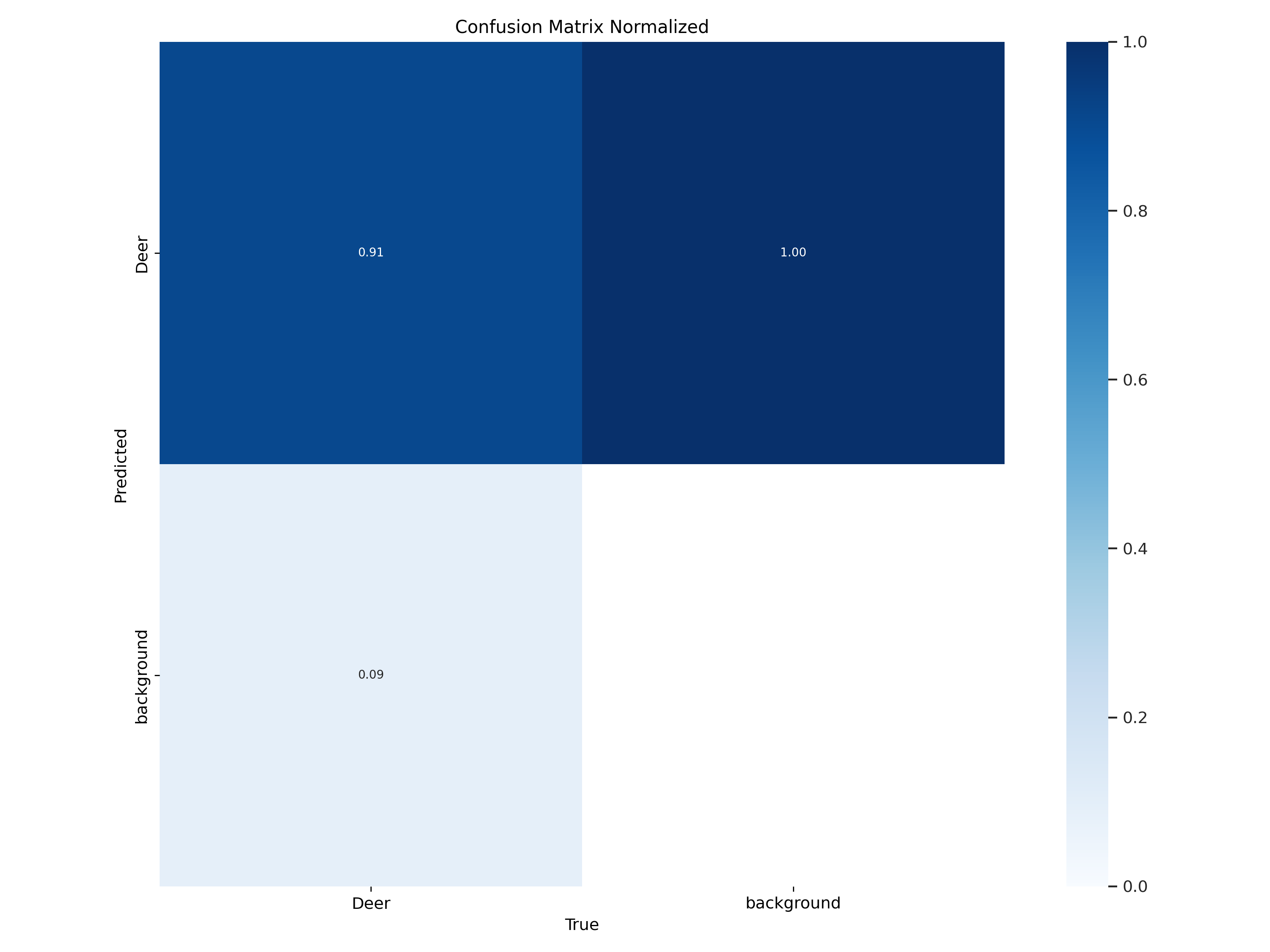

- train/confusion_matrix.png +0 -0

- train/confusion_matrix_normalized.png +0 -0

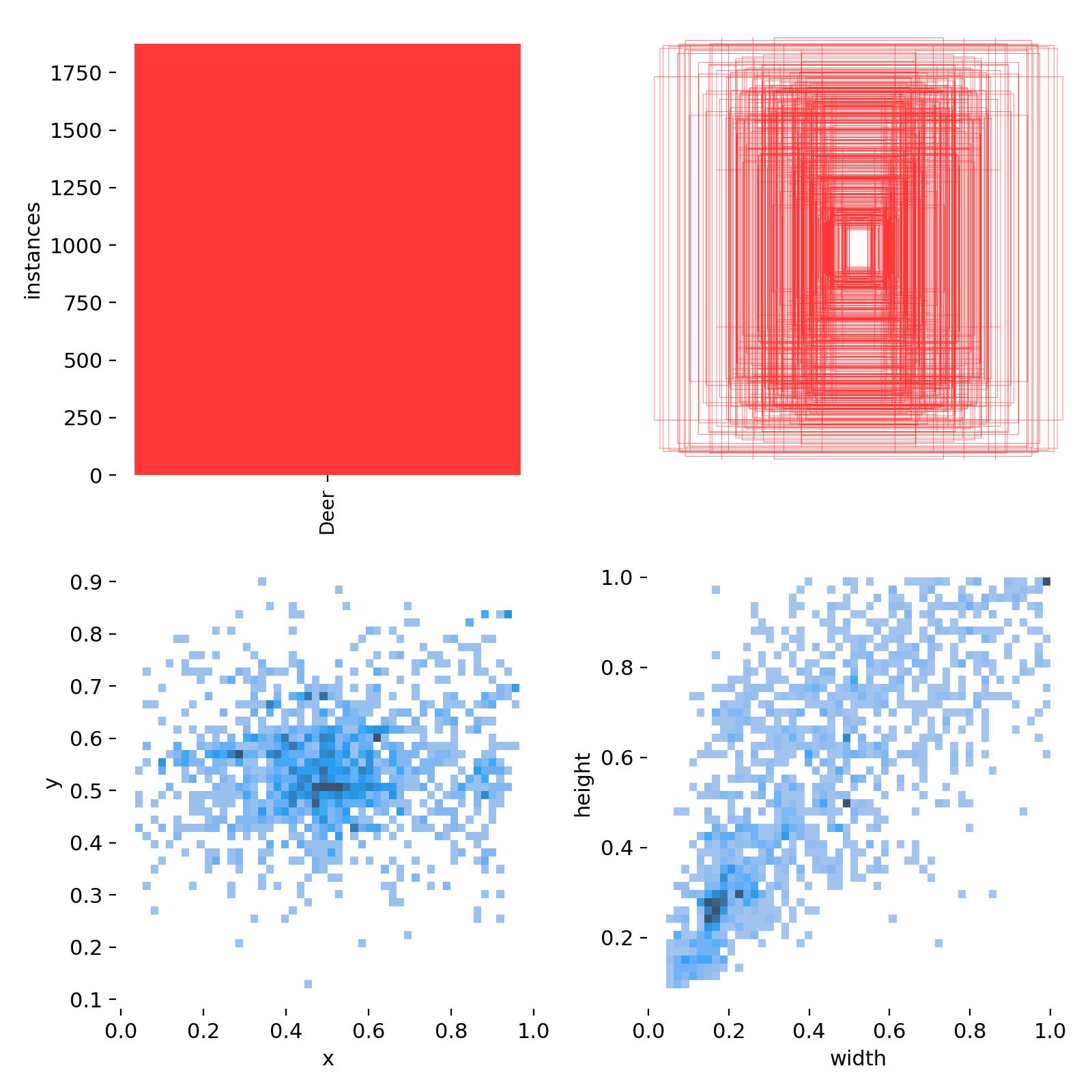

- train/labels.jpg +0 -0

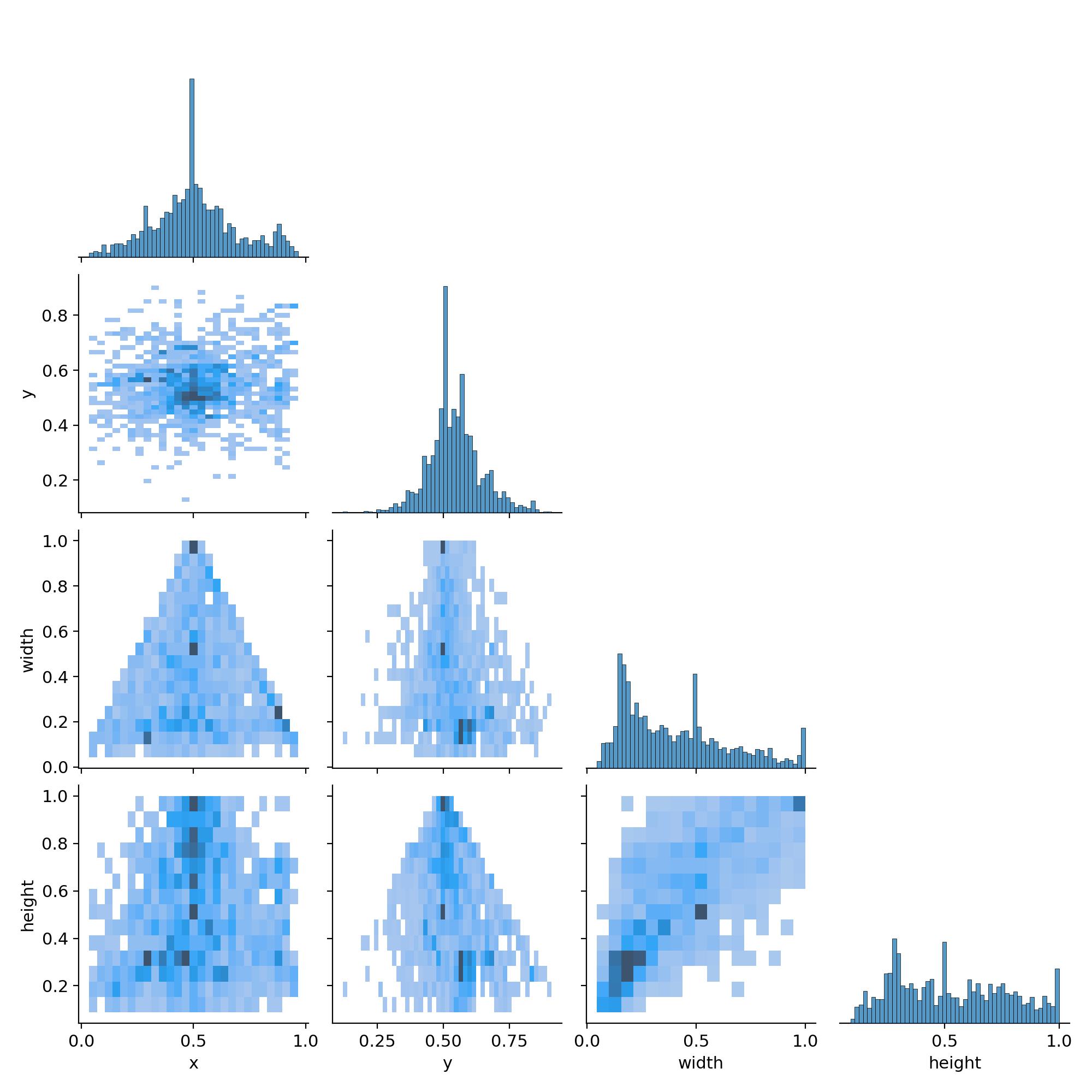

- train/labels_correlogram.jpg +0 -0

- train/results.csv +55 -0

- train/results.png +0 -0

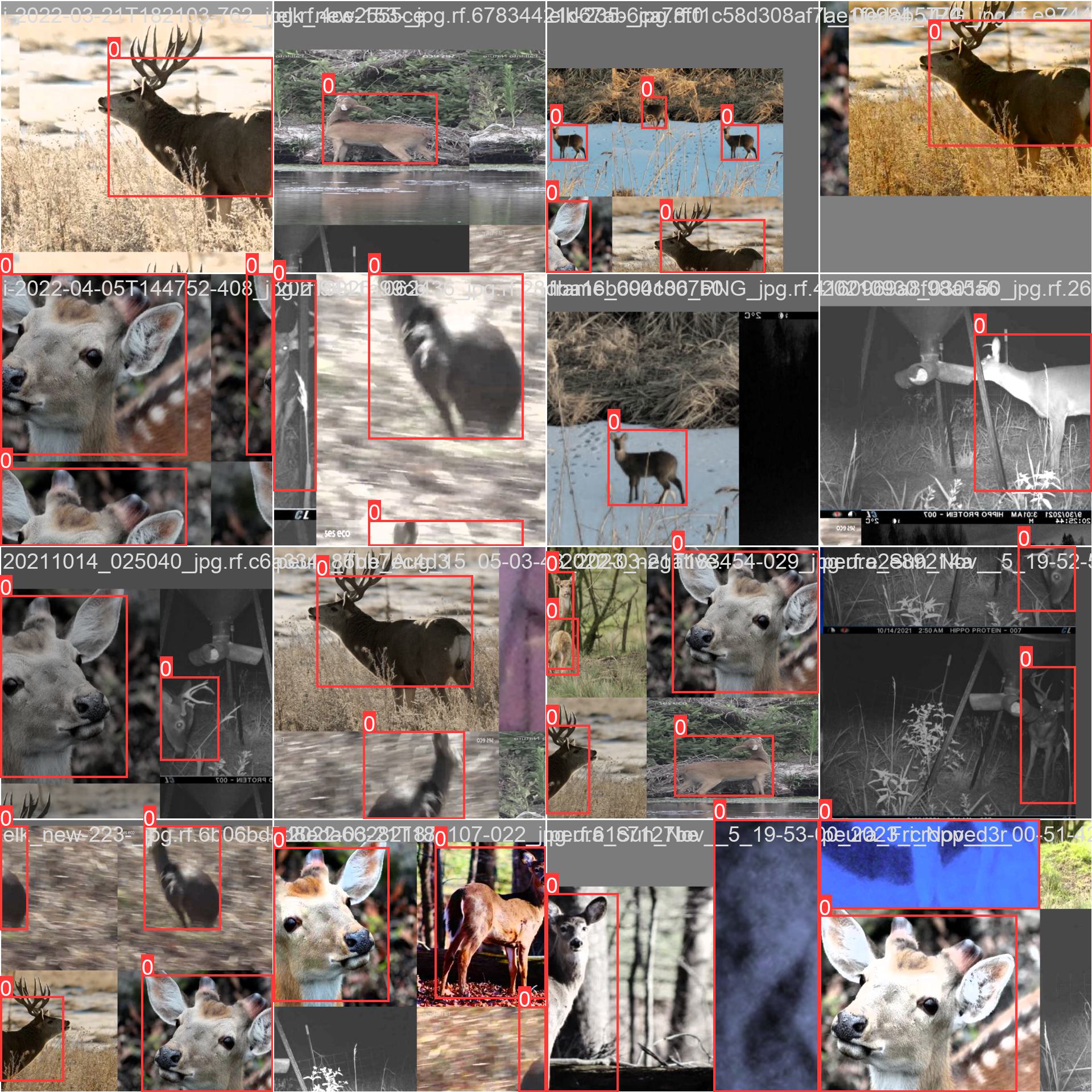

- train/train_batch0.jpg +0 -0

- train/train_batch1.jpg +0 -0

- train/train_batch2.jpg +0 -0

- train/val_batch0_labels.jpg +0 -0

- train/val_batch0_pred.jpg +0 -0

- train/val_batch1_labels.jpg +0 -0

- train/val_batch1_pred.jpg +0 -0

- train/val_batch2_labels.jpg +0 -0

- train/val_batch2_pred.jpg +0 -0

- train/weights/best.pt +3 -0

- train/weights/last.pt +3 -0

train/F1_curve.png (added)

train/PR_curve.png (added)

train/P_curve.png (added)

train/R_curve.png (added)

train/args.yaml (added)

@@ -0,0 +1,98 @@

```yaml
task: detect
mode: train
model: /workspace/yolov8n.pt
data: /deer_v6_batched/deer_v6_batch_1/data.yml
epochs: 100
patience: 10
batch: 16
imgsz: 640
save: true
save_period: -1
cache: false
device: null
workers: 8
project: /workspace/runs/train_run_1
name: train
exist_ok: false
pretrained: true
optimizer: auto
verbose: true
seed: 0
deterministic: true
single_cls: false
rect: false
cos_lr: false
close_mosaic: 10
resume: false
amp: true
fraction: 1.0
profile: false
freeze: null
overlap_mask: true
mask_ratio: 4
dropout: 0.0
val: true
split: val
save_json: false
save_hybrid: false
conf: null
iou: 0.7
max_det: 300
half: false
dnn: false
plots: true
source: null
show: false
save_txt: false
save_conf: false
save_crop: false
show_labels: true
show_conf: true
vid_stride: 1
stream_buffer: false
line_width: null
visualize: false
augment: false
agnostic_nms: false
classes: null
retina_masks: false
boxes: true
format: torchscript
keras: false
optimize: false
int8: false
dynamic: false
simplify: false
opset: null
workspace: 4
nms: false
lr0: 0.01
lrf: 0.0005
momentum: 0.937
weight_decay: 0.0005
warmup_epochs: 3.0
warmup_momentum: 0.8
warmup_bias_lr: 0.1
box: 7.5
cls: 0.5
dfl: 1.5
pose: 12.0
kobj: 1.0
label_smoothing: 0.0
nbs: 64
hsv_h: 0.015
hsv_s: 0.7
hsv_v: 0.4
degrees: 0.0
translate: 0.1
scale: 0.5
shear: 0.0
perspective: 0.0
flipud: 0.0
fliplr: 0.5
mosaic: 1.0
mixup: 0.0
copy_paste: 0.0
cfg: null
tracker: botsort.yaml
save_dir: /workspace/runs/train_run_1/train
```
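With `cos_lr: false`, the learning rate should follow a linear decay controlled by `lrf` and `epochs`. The sketch below assumes the linear lambda used by Ultralytics 8.x trainers, `max(1 - x/epochs, 0) * (1 - lrf) + lrf`, and assumes an effective base rate of 0.002 rather than `lr0: 0.01`. That base rate is inferred from the `lr/pg*` columns in `results.csv`, which step down by about 0.00002 per epoch; it is what we would expect if `optimizer: auto` replaced `lr0`, but we have not confirmed this from the run's logs. `linear_lf` and `BASE_LR` are illustrative names.

```python
# Sketch of a linear LR schedule under the settings in args.yaml.
# Assumption: Ultralytics-style linear lambda; BASE_LR is inferred, not configured.

def linear_lf(x: float, epochs: int, lrf: float) -> float:
    """Multiplier applied to the base LR at (0-indexed) epoch x."""
    return max(1 - x / epochs, 0) * (1.0 - lrf) + lrf

EPOCHS = 100      # epochs: 100
LRF = 0.0005      # lrf: 0.0005
BASE_LR = 0.002   # assumption: effective base LR, not lr0: 0.01

lrs = [BASE_LR * linear_lf(x, EPOCHS, LRF) for x in range(EPOCHS)]

# Constant per-epoch decrement of a linear schedule: BASE_LR * (1 - lrf) / epochs.
step = lrs[10] - lrs[11]
final_lr = BASE_LR * linear_lf(EPOCHS, EPOCHS, LRF)

print(f"per-epoch decrease: {step:.4e}")
print(f"LR if all {EPOCHS} epochs ran: {final_lr:.1e}")
```

The computed decrement, 0.002 × 0.9995 / 100 ≈ 1.999e-5, lines up with the roughly 0.00002 per-epoch drop visible in the `lr/pg0` column.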

train/confusion_matrix.png (added)

train/confusion_matrix_normalized.png (added)

train/labels.jpg (added)

train/labels_correlogram.jpg (added)

train/results.csv (added)

@@ -0,0 +1,55 @@

```csv
epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
1, 1.4022, 1.9869, 1.602, 0.49242, 0.35966, 0.36613, 0.17133, 1.8564, 2.8766, 2.3258, 0.00066032, 0.00066032, 0.00066032
2, 1.5159, 1.6898, 1.6693, 0.67758, 0.58985, 0.63666, 0.29797, 1.8018, 2.0623, 2.0906, 0.0013137, 0.0013137, 0.0013137
3, 1.5811, 1.595, 1.7205, 0.52419, 0.49412, 0.47777, 0.22989, 1.8458, 2.3771, 2.0768, 0.0019538, 0.0019538, 0.0019538
4, 1.5444, 1.466, 1.6873, 0.58286, 0.50252, 0.52354, 0.27907, 1.7378, 2.0047, 1.9542, 0.00194, 0.00194, 0.00194
5, 1.5172, 1.3901, 1.6596, 0.72378, 0.63414, 0.70667, 0.39196, 1.5993, 1.4542, 1.8117, 0.00194, 0.00194, 0.00194
6, 1.5224, 1.3681, 1.6564, 0.65639, 0.52973, 0.60126, 0.33722, 1.6528, 1.6858, 1.8654, 0.00192, 0.00192, 0.00192
7, 1.4431, 1.2733, 1.606, 0.82891, 0.70843, 0.79735, 0.47648, 1.4644, 1.1365, 1.6775, 0.0019001, 0.0019001, 0.0019001
8, 1.4417, 1.2162, 1.6, 0.7921, 0.75126, 0.81159, 0.47208, 1.4922, 1.1433, 1.6828, 0.0018801, 0.0018801, 0.0018801
9, 1.4181, 1.1742, 1.5889, 0.87717, 0.81008, 0.85561, 0.51097, 1.4188, 1.0247, 1.6415, 0.0018601, 0.0018601, 0.0018601
10, 1.37, 1.1242, 1.5515, 0.87959, 0.79328, 0.84023, 0.50059, 1.4293, 1.0708, 1.647, 0.0018401, 0.0018401, 0.0018401
11, 1.3733, 1.1187, 1.5376, 0.82881, 0.7479, 0.81171, 0.4773, 1.4998, 1.1129, 1.6903, 0.0018201, 0.0018201, 0.0018201
12, 1.3582, 1.109, 1.5371, 0.81731, 0.7479, 0.82079, 0.48707, 1.4728, 1.0386, 1.637, 0.0018001, 0.0018001, 0.0018001
13, 1.3254, 1.061, 1.5217, 0.90118, 0.79702, 0.87057, 0.52683, 1.3896, 0.93789, 1.608, 0.0017801, 0.0017801, 0.0017801
14, 1.3351, 1.0765, 1.5309, 0.90551, 0.75698, 0.85719, 0.51818, 1.4016, 0.96081, 1.5739, 0.0017601, 0.0017601, 0.0017601
15, 1.2845, 1.0365, 1.4921, 0.89055, 0.80679, 0.8716, 0.54512, 1.3188, 0.90927, 1.5838, 0.0017401, 0.0017401, 0.0017401
16, 1.2875, 1.0188, 1.4957, 0.87866, 0.84034, 0.87732, 0.54921, 1.3314, 0.87526, 1.5633, 0.0017201, 0.0017201, 0.0017201
17, 1.2847, 0.97791, 1.4889, 0.8889, 0.82024, 0.87516, 0.53727, 1.3909, 0.90252, 1.5712, 0.0017002, 0.0017002, 0.0017002
18, 1.2625, 0.96971, 1.467, 0.80187, 0.68403, 0.75473, 0.46015, 1.4362, 1.161, 1.6749, 0.0016802, 0.0016802, 0.0016802
19, 1.2892, 0.99695, 1.4972, 0.91164, 0.85042, 0.89519, 0.55526, 1.3422, 0.82048, 1.5511, 0.0016602, 0.0016602, 0.0016602
20, 1.233, 0.92399, 1.4526, 0.90322, 0.81008, 0.87384, 0.54602, 1.3088, 0.85425, 1.5509, 0.0016402, 0.0016402, 0.0016402
21, 1.2389, 0.92448, 1.4534, 0.92486, 0.8275, 0.88883, 0.57321, 1.313, 0.79565, 1.5329, 0.0016202, 0.0016202, 0.0016202
22, 1.2374, 0.91973, 1.4606, 0.93455, 0.83994, 0.89432, 0.57838, 1.276, 0.77076, 1.4844, 0.0016002, 0.0016002, 0.0016002
23, 1.2126, 0.89915, 1.4364, 0.90066, 0.82857, 0.89838, 0.58633, 1.2445, 0.77945, 1.4901, 0.0015802, 0.0015802, 0.0015802
24, 1.198, 0.89593, 1.4331, 0.9048, 0.81345, 0.87424, 0.56558, 1.2745, 0.79531, 1.5123, 0.0015602, 0.0015602, 0.0015602
25, 1.1987, 0.87272, 1.4271, 0.91395, 0.85546, 0.90272, 0.58771, 1.2413, 0.73536, 1.4613, 0.0015402, 0.0015402, 0.0015402
26, 1.1933, 0.88773, 1.4303, 0.90524, 0.85089, 0.8947, 0.58394, 1.2104, 0.76973, 1.4655, 0.0015202, 0.0015202, 0.0015202
27, 1.1721, 0.86922, 1.4196, 0.92561, 0.88403, 0.92345, 0.59353, 1.2327, 0.72791, 1.4812, 0.0015002, 0.0015002, 0.0015002
28, 1.1866, 0.85015, 1.4247, 0.9296, 0.82185, 0.9012, 0.58725, 1.2591, 0.76265, 1.4839, 0.0014803, 0.0014803, 0.0014803
29, 1.1965, 0.86947, 1.4234, 0.92244, 0.85951, 0.90545, 0.58995, 1.2385, 0.71622, 1.4831, 0.0014603, 0.0014603, 0.0014603
30, 1.1525, 0.82079, 1.3968, 0.92743, 0.83529, 0.91153, 0.59497, 1.2575, 0.74439, 1.4615, 0.0014403, 0.0014403, 0.0014403
31, 1.1533, 0.83974, 1.3918, 0.9462, 0.84538, 0.91415, 0.60413, 1.2105, 0.69743, 1.4736, 0.0014203, 0.0014203, 0.0014203
32, 1.1462, 0.81224, 1.3814, 0.93874, 0.87562, 0.9222, 0.60705, 1.206, 0.69147, 1.4408, 0.0014003, 0.0014003, 0.0014003
33, 1.1246, 0.81799, 1.381, 0.91616, 0.87059, 0.91192, 0.59867, 1.2455, 0.70027, 1.4936, 0.0013803, 0.0013803, 0.0013803
34, 1.1219, 0.79834, 1.3732, 0.9266, 0.8605, 0.91687, 0.60165, 1.2305, 0.70597, 1.4893, 0.0013603, 0.0013603, 0.0013603
35, 1.1275, 0.80298, 1.396, 0.92623, 0.84706, 0.90242, 0.59448, 1.2182, 0.71913, 1.4678, 0.0013403, 0.0013403, 0.0013403
36, 1.1146, 0.80059, 1.3681, 0.9274, 0.87059, 0.91451, 0.6127, 1.2157, 0.6902, 1.4752, 0.0013203, 0.0013203, 0.0013203
37, 1.1062, 0.79163, 1.3714, 0.92267, 0.85378, 0.91299, 0.61014, 1.1995, 0.69226, 1.4567, 0.0013004, 0.0013004, 0.0013004
38, 1.1268, 0.78697, 1.3759, 0.85058, 0.83025, 0.89471, 0.5831, 1.2363, 0.80012, 1.4541, 0.0012804, 0.0012804, 0.0012804
39, 1.1214, 0.78269, 1.3684, 0.93576, 0.85684, 0.91722, 0.6195, 1.1691, 0.67836, 1.4282, 0.0012604, 0.0012604, 0.0012604
40, 1.0883, 0.77343, 1.3583, 0.93545, 0.88403, 0.92622, 0.62589, 1.2136, 0.65422, 1.467, 0.0012404, 0.0012404, 0.0012404
41, 1.0779, 0.75971, 1.3516, 0.92519, 0.87297, 0.91932, 0.61484, 1.181, 0.66976, 1.4356, 0.0012204, 0.0012204, 0.0012204
42, 1.0828, 0.74471, 1.3442, 0.95202, 0.86697, 0.92161, 0.61385, 1.1833, 0.65513, 1.4317, 0.0012004, 0.0012004, 0.0012004
43, 1.0886, 0.77801, 1.3581, 0.96051, 0.85836, 0.9203, 0.61386, 1.1957, 0.6452, 1.4305, 0.0011804, 0.0011804, 0.0011804
44, 1.0809, 0.74482, 1.3442, 0.93465, 0.88945, 0.9259, 0.63948, 1.1604, 0.62151, 1.3923, 0.0011604, 0.0011604, 0.0011604
45, 1.0788, 0.73858, 1.3415, 0.92683, 0.8605, 0.92208, 0.62566, 1.1642, 0.64843, 1.4213, 0.0011404, 0.0011404, 0.0011404
46, 1.0609, 0.73258, 1.334, 0.91769, 0.86891, 0.92056, 0.61995, 1.155, 0.6471, 1.4171, 0.0011204, 0.0011204, 0.0011204
47, 1.0588, 0.71404, 1.3243, 0.90214, 0.87059, 0.91457, 0.61935, 1.1903, 0.65616, 1.4538, 0.0011004, 0.0011004, 0.0011004
48, 1.0529, 0.73404, 1.3248, 0.94592, 0.85042, 0.91578, 0.61942, 1.1822, 0.65899, 1.4596, 0.0010805, 0.0010805, 0.0010805
49, 1.068, 0.72478, 1.3311, 0.91075, 0.86723, 0.91071, 0.6227, 1.1753, 0.63218, 1.417, 0.0010605, 0.0010605, 0.0010605
50, 1.0143, 0.70671, 1.2985, 0.93885, 0.88235, 0.9277, 0.63441, 1.1702, 0.63744, 1.4099, 0.0010405, 0.0010405, 0.0010405
51, 1.0343, 0.70221, 1.3024, 0.91576, 0.89523, 0.92488, 0.637, 1.1669, 0.61616, 1.4287, 0.0010205, 0.0010205, 0.0010205
52, 1.0452, 0.71684, 1.3193, 0.92586, 0.88155, 0.91904, 0.63805, 1.1497, 0.61456, 1.4077, 0.0010005, 0.0010005, 0.0010005
53, 1.0155, 0.70001, 1.3042, 0.92149, 0.88773, 0.9216, 0.62819, 1.1767, 0.63093, 1.4187, 0.00098051, 0.00098051, 0.00098051
54, 1.0223, 0.69477, 1.3089, 0.91163, 0.88908, 0.91844, 0.62991, 1.1461, 0.6253, 1.4056, 0.00096052, 0.00096052, 0.00096052
```
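The log ends at epoch 54 although `args.yaml` sets `epochs: 100`, which suggests early stopping with `patience: 10`. The sketch below checks that reading on an excerpt of the rows (epochs 43 to 54). It assumes the Ultralytics 8.x detection fitness, a weighted sum of (P, R, mAP50, mAP50-95) with weights (0, 0, 0.1, 0.9), and the rule "stop once `last - best >= patience`". `fitness` and `best_epoch` are illustrative helpers, not library functions. By our reading of the full table, epoch 44 also has the highest fitness across all 54 rows, but the code only scans the excerpt.

```python
import csv
import io

# Excerpt of train/results.csv (epochs 43-54), copied from the diff above.
RESULTS_EXCERPT = """\
epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
43, 1.0886, 0.77801, 1.3581, 0.96051, 0.85836, 0.9203, 0.61386, 1.1957, 0.6452, 1.4305, 0.0011804, 0.0011804, 0.0011804
44, 1.0809, 0.74482, 1.3442, 0.93465, 0.88945, 0.9259, 0.63948, 1.1604, 0.62151, 1.3923, 0.0011604, 0.0011604, 0.0011604
45, 1.0788, 0.73858, 1.3415, 0.92683, 0.8605, 0.92208, 0.62566, 1.1642, 0.64843, 1.4213, 0.0011404, 0.0011404, 0.0011404
46, 1.0609, 0.73258, 1.334, 0.91769, 0.86891, 0.92056, 0.61995, 1.155, 0.6471, 1.4171, 0.0011204, 0.0011204, 0.0011204
47, 1.0588, 0.71404, 1.3243, 0.90214, 0.87059, 0.91457, 0.61935, 1.1903, 0.65616, 1.4538, 0.0011004, 0.0011004, 0.0011004
48, 1.0529, 0.73404, 1.3248, 0.94592, 0.85042, 0.91578, 0.61942, 1.1822, 0.65899, 1.4596, 0.0010805, 0.0010805, 0.0010805
49, 1.068, 0.72478, 1.3311, 0.91075, 0.86723, 0.91071, 0.6227, 1.1753, 0.63218, 1.417, 0.0010605, 0.0010605, 0.0010605
50, 1.0143, 0.70671, 1.2985, 0.93885, 0.88235, 0.9277, 0.63441, 1.1702, 0.63744, 1.4099, 0.0010405, 0.0010405, 0.0010405
51, 1.0343, 0.70221, 1.3024, 0.91576, 0.89523, 0.92488, 0.637, 1.1669, 0.61616, 1.4287, 0.0010205, 0.0010205, 0.0010205
52, 1.0452, 0.71684, 1.3193, 0.92586, 0.88155, 0.91904, 0.63805, 1.1497, 0.61456, 1.4077, 0.0010005, 0.0010005, 0.0010005
53, 1.0155, 0.70001, 1.3042, 0.92149, 0.88773, 0.9216, 0.62819, 1.1767, 0.63093, 1.4187, 0.00098051, 0.00098051, 0.00098051
54, 1.0223, 0.69477, 1.3089, 0.91163, 0.88908, 0.91844, 0.62991, 1.1461, 0.6253, 1.4056, 0.00096052, 0.00096052, 0.00096052
"""

# Assumption: Ultralytics-style fitness weights for (P, R, mAP50, mAP50-95).
WEIGHTS = (0.0, 0.0, 0.1, 0.9)
COLS = (
    "metrics/precision(B)",
    "metrics/recall(B)",
    "metrics/mAP50(B)",
    "metrics/mAP50-95(B)",
)


def fitness(row):
    """Weighted sum of the four validation metrics for one CSV row."""
    return sum(w * float(row[c]) for w, c in zip(WEIGHTS, COLS))


def best_epoch(csv_text):
    """Return (best epoch, its fitness, last logged epoch)."""
    # skipinitialspace strips the space after each comma, in the header too.
    rows = list(csv.DictReader(io.StringIO(csv_text), skipinitialspace=True))
    best = max(rows, key=fitness)
    return int(best["epoch"]), fitness(best), int(rows[-1]["epoch"])


PATIENCE = 10  # patience: 10 in args.yaml

best, score, last = best_epoch(RESULTS_EXCERPT)
print(f"best epoch {best} (fitness {score:.4f}); last epoch {last}")
print("consistent with patience-based stop:", last - best >= PATIENCE)
```

Under these assumptions the gap between the best epoch and the last logged epoch equals the patience value exactly, which fits a run that stopped early rather than one that crashed. It would also mean `best.pt` holds the epoch-44 weights.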

train/results.png (added)

train/train_batch0.jpg (added)

train/train_batch1.jpg (added)

train/train_batch2.jpg (added)

train/val_batch0_labels.jpg (added)

train/val_batch0_pred.jpg (added)

train/val_batch1_labels.jpg (added)

train/val_batch1_pred.jpg (added)

train/val_batch2_labels.jpg (added)

train/val_batch2_pred.jpg (added)

train/weights/best.pt (added, Git LFS pointer)

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:f3d19cd15d9a537af44cb18b1c0878bb3d165fee74412167aeccddf416895c84
size 6234094
```

train/weights/last.pt (added, Git LFS pointer)

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:385536630f5fe8ede2444af5c3d94fad8ead874d1a23659d6958056058021967
size 6235310
```
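Both checkpoints are committed as Git LFS pointers, so the repository stores only a SHA-256 digest and a byte size for each file. The sketch below parses the two pointers and checks a local file against one. It assumes the real weights have been fetched (for example with `git lfs pull`). `parse_lfs_pointer` and `matches_pointer` are hypothetical helpers written for illustration, and the self-check runs on a throwaway file, not on the checkpoints.

```python
import hashlib
import os
import tempfile


def parse_lfs_pointer(text):
    """Parse Git LFS pointer lines ("key value") into a small dict."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "algo": algo,
        "oid": digest,
        "size": int(fields["size"]),
    }


def matches_pointer(path, pointer, chunk_size=1 << 20):
    """True if the file at `path` has the pointer's size and SHA-256 digest."""
    if os.path.getsize(path) != pointer["size"]:
        return False
    h = hashlib.sha256()
    with open(path, "rb") as f:
        # Stream in chunks so large checkpoints need not fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest() == pointer["oid"]


BEST_PT = """version https://git-lfs.github.com/spec/v1
oid sha256:f3d19cd15d9a537af44cb18b1c0878bb3d165fee74412167aeccddf416895c84
size 6234094"""

LAST_PT = """version https://git-lfs.github.com/spec/v1
oid sha256:385536630f5fe8ede2444af5c3d94fad8ead874d1a23659d6958056058021967
size 6235310"""

best = parse_lfs_pointer(BEST_PT)
last = parse_lfs_pointer(LAST_PT)
print("best.pt bytes:", best["size"], "last.pt bytes:", last["size"])

# Self-check on a throwaway file (not one of the real checkpoints).
with tempfile.TemporaryDirectory() as d:
    demo_path = os.path.join(d, "demo.bin")
    with open(demo_path, "wb") as f:
        f.write(b"hello")
    demo_ptr = {"size": 5, "oid": hashlib.sha256(b"hello").hexdigest()}
    self_check_ok = matches_pointer(demo_path, demo_ptr)

print("self-check passed:", self_check_ok)
```

After fetching the weights, `matches_pointer("train/weights/best.pt", best)` should return `True` if the local file matches what was committed. Checking size first avoids hashing a file that is obviously wrong, such as an unfetched pointer stub.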