Training in progress, step 4098

- adapter_config.json +19 -0

- adapter_model.bin +3 -0

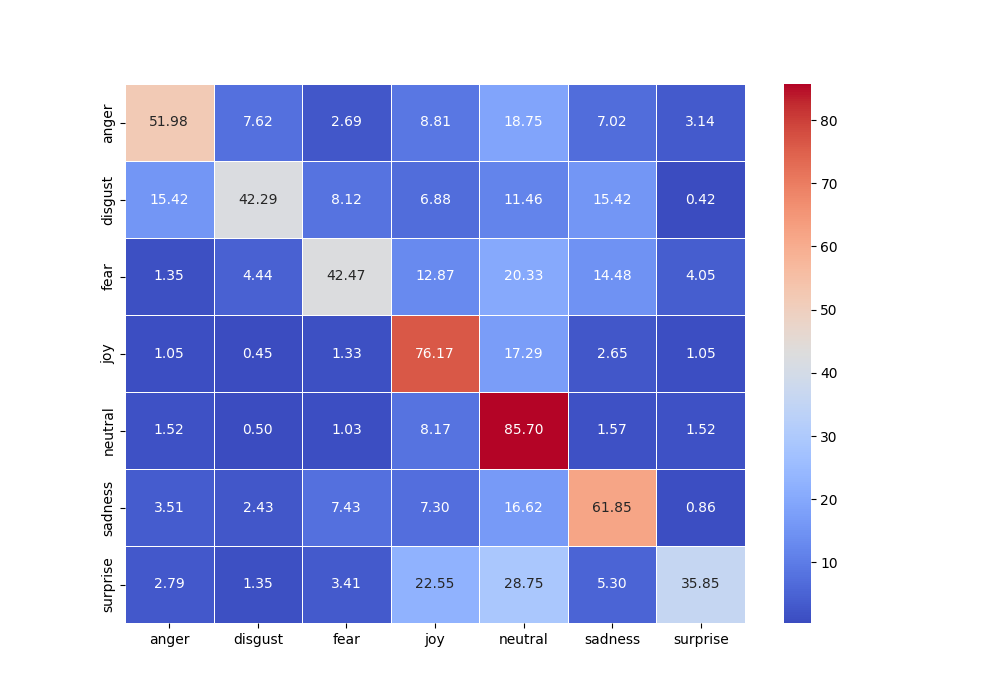

- cf.png +0 -0

- cf.txt +7 -0

- class_report.txt +13 -0

- merges.txt +0 -0

- special_tokens_map.json +15 -0

- tokenizer.json +0 -0

- tokenizer_config.json +15 -0

- training_args.bin +3 -0

- vocab.json +0 -0

adapter_config.json

ADDED

```json
{
  "base_model_name_or_path": "roberta-large",
  "bias": "none",
  "fan_in_fan_out": false,
  "inference_mode": true,
  "init_lora_weights": true,
  "lora_alpha": 16,
  "lora_dropout": 0.05,
  "modules_to_save": [
    "classifier"
  ],
  "peft_type": "LORA",
  "r": 8,
  "target_modules": [
    "query",
    "value"
  ],
  "task_type": null
}
```
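adapter_config.json describes a PEFT LoRA adapter on `roberta-large`:
- Rank-8 updates (`r`) are applied to the `query` and `value` attention projections.
- The updates are scaled by `lora_alpha / r = 16 / 8 = 2`.
- Dropout of 0.05 is applied to the adapter input.
- The `classifier` head is trained and saved in full (`modules_to_save`).

The toy sketch below shows the LoRA forward pass, h = W0 x + (lora_alpha / r) · B A x, in plain Python. It is an illustration, not PEFT's code. The helper names and toy sizes are hypothetical, and A is drawn uniformly as a stand-in for PEFT's Kaiming-uniform initialisation. It does mirror `init_lora_weights: true`, under which B starts at zero, so the adapter is initially a no-op.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lora_forward(w0, a, b, x, lora_alpha, r, dropout=0.0, training=False, rng=None):
    """Toy LoRA linear layer: h = W0 x + (lora_alpha / r) * B (A dropout(x)).

    w0 is the frozen d_out x d_in weight, a is the r x d_in matrix A and
    b is the d_out x r matrix B. Dropout only touches the low-rank path.
    """
    scaling = lora_alpha / r
    x_lora = x
    if training and dropout > 0.0:
        rng = rng or random.Random(0)
        keep = 1.0 - dropout
        # Inverted dropout: zero some inputs and rescale the survivors by 1 / keep.
        x_lora = [xi / keep if rng.random() < keep else 0.0 for xi in x]
    base = matvec(w0, x)
    delta = matvec(b, matvec(a, x_lora))
    return [h + scaling * d for h, d in zip(base, delta)]

# Hypothetical toy sizes: d_in = d_out = 4 and rank 2. ALPHA is chosen so that
# ALPHA / R = 2, the same scaling as the config's lora_alpha / r = 16 / 8.
D, R, ALPHA = 4, 2, 4
rng = random.Random(42)
w0 = [[rng.uniform(-1, 1) for _ in range(D)] for _ in range(D)]
a = [[rng.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(R)]  # A: stand-in random init
b_init = [[0.0] * R for _ in range(D)]                               # B: zeros at init
x = [1.0, -2.0, 0.5, 3.0]

# At initialisation B = 0, so the adapted layer reproduces the frozen layer exactly.
print(lora_forward(w0, a, b_init, x, lora_alpha=ALPHA, r=R) == matvec(w0, x))  # True
```

Only A and B (and here the classifier) receive gradients. W0 stays frozen, which is why the saved adapter is small compared with roberta-large itself.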

adapter_model.bin

ADDED

```
version https://git-lfs.github.com/spec/v1
oid sha256:18ae15ed7c1d7b8d12864ce0ea1974595d5bff90d7810d6852df3cb83e967e08
size 7409629
```
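The pointer's `size` (7,409,629 bytes, about 7.4 MB) can be sanity-checked against adapter_config.json. This back-of-envelope sketch rests on four assumptions:
- roberta-large has 24 encoder layers and hidden size 1024.
- The head is 7-way, matching the classes in class_report.txt.
- The classification head follows transformers' `RobertaClassificationHead` layout.
- Tensors are stored as float32.

Under those assumptions, the LoRA matrices plus the saved classifier account for all but about 37 KB. That remainder would plausibly be serialization metadata. The sketch estimates the size; it does not inspect the file.

```python
# Assumed roberta-large dimensions: 24 encoder layers, hidden size 1024.
NUM_LAYERS = 24
HIDDEN = 1024
NUM_LABELS = 7       # seven classes listed in class_report.txt
R = 8                # "r" in adapter_config.json
BYTES_PER_PARAM = 4  # assumes float32 tensors

def lora_pair_params(d_in, d_out, r):
    """LoRA adds A (r x d_in) and B (d_out x r) next to one frozen linear layer."""
    return r * d_in + d_out * r

# "query" and "value" are adapted in every layer; bias="none" trains no extra biases.
lora_total = NUM_LAYERS * 2 * lora_pair_params(HIDDEN, HIDDEN, R)

# modules_to_save=["classifier"] also stores the trained classification head.
# Assumes RobertaClassificationHead: dense (H x H + H), then out_proj (H x labels + labels).
classifier_total = (HIDDEN * HIDDEN + HIDDEN) + (HIDDEN * NUM_LABELS + NUM_LABELS)

estimated_bytes = (lora_total + classifier_total) * BYTES_PER_PARAM
lfs_size = 7409629  # "size" from the adapter_model.bin pointer

print(lora_total, classifier_total)                 # 786432 1056775
print(estimated_bytes, lfs_size - estimated_bytes)  # 7372828 36801
```

Under these assumptions, more than half of the adapter file is the copy of the classification head, not the LoRA matrices.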

cf.png

ADDED


cf.txt

ADDED

```
5.197908887229275576e-01 7.617625093353248833e-02 2.688573562359970054e-02 8.812546676624347097e-02 1.874533233756534856e-01 7.020164301717700395e-02 3.136669156086632076e-02
1.541666666666666741e-01 4.229166666666666630e-01 8.125000000000000278e-02 6.875000000000000555e-02 1.145833333333333287e-01 1.541666666666666741e-01 4.166666666666666609e-03
1.351351351351351426e-02 4.440154440154440302e-02 4.247104247104246944e-01 1.287001287001287020e-01 2.033462033462033469e-01 1.447876447876447759e-01 4.054054054054054279e-02
1.051226430835975299e-02 4.505256132154179978e-03 1.334890705823460651e-02 7.617220090105122354e-01 1.728683464041381734e-01 2.653095277824128045e-02 1.051226430835975299e-02
1.515576761156329014e-02 4.958368416128730756e-03 1.029095331649359200e-02 8.167274768453550160e-02 8.570493030217981589e-01 1.571709233791748414e-02 1.515576761156329014e-02
3.513513513513513709e-02 2.432432432432432567e-02 7.432432432432432845e-02 7.297297297297297702e-02 1.662162162162162116e-01 6.184684684684684797e-01 8.558558558558557877e-03
2.785265049415992789e-02 1.347708894878706272e-02 3.414195867026055542e-02 2.255166217430368270e-01 2.875112309074573380e-01 5.300988319856244496e-02 3.584905660377358250e-01
```

class_report.txt

ADDED

```
              precision    recall  f1-score   support

      0anger       0.62      0.52      0.56      1339
    1disgust       0.39      0.42      0.40       480
       2fear       0.59      0.42      0.49      1554
        3joy       0.74      0.76      0.75      5993
    4neutral       0.80      0.86      0.83     10689
    5sadness       0.64      0.62      0.63      2220
   6surprise       0.53      0.36      0.43      1113

    accuracy                           0.73     23388
   macro avg       0.61      0.57      0.58     23388
weighted avg       0.72      0.73      0.72     23388
```
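cf.txt and class_report.txt are mutually consistent. Each row of cf.txt sums to 1, and its diagonal equals the per-class recall in the report (e.g. 0.5198 for anger vs. 0.52). The sketch below assumes each row of cf.txt was divided by that class's support. It rescales the rows back to integer counts and recomputes precision, recall, F1 and accuracy. It is a consistency check, not the script that produced these files.

```python
# Row-normalised confusion matrix from cf.txt: rows = true class, columns = predicted class.
CF = [
    [5.197908887229275576e-01, 7.617625093353248833e-02, 2.688573562359970054e-02, 8.812546676624347097e-02, 1.874533233756534856e-01, 7.020164301717700395e-02, 3.136669156086632076e-02],
    [1.541666666666666741e-01, 4.229166666666666630e-01, 8.125000000000000278e-02, 6.875000000000000555e-02, 1.145833333333333287e-01, 1.541666666666666741e-01, 4.166666666666666609e-03],
    [1.351351351351351426e-02, 4.440154440154440302e-02, 4.247104247104246944e-01, 1.287001287001287020e-01, 2.033462033462033469e-01, 1.447876447876447759e-01, 4.054054054054054279e-02],
    [1.051226430835975299e-02, 4.505256132154179978e-03, 1.334890705823460651e-02, 7.617220090105122354e-01, 1.728683464041381734e-01, 2.653095277824128045e-02, 1.051226430835975299e-02],
    [1.515576761156329014e-02, 4.958368416128730756e-03, 1.029095331649359200e-02, 8.167274768453550160e-02, 8.570493030217981589e-01, 1.571709233791748414e-02, 1.515576761156329014e-02],
    [3.513513513513513709e-02, 2.432432432432432567e-02, 7.432432432432432845e-02, 7.297297297297297702e-02, 1.662162162162162116e-01, 6.184684684684684797e-01, 8.558558558558557877e-03],
    [2.785265049415992789e-02, 1.347708894878706272e-02, 3.414195867026055542e-02, 2.255166217430368270e-01, 2.875112309074573380e-01, 5.300988319856244496e-02, 3.584905660377358250e-01],
]
LABELS = ["0anger", "1disgust", "2fear", "3joy", "4neutral", "5sadness", "6surprise"]
SUPPORT = [1339, 480, 1554, 5993, 10689, 2220, 1113]  # "support" column of class_report.txt

# Assumes each row was divided by its class support, so rescaling recovers integer counts.
counts = [[round(p * s) for p in row] for row, s in zip(CF, SUPPORT)]

k = len(LABELS)
tp = [counts[i][i] for i in range(k)]
predicted = [sum(counts[i][j] for i in range(k)) for j in range(k)]  # column sums

precision = [t / p for t, p in zip(tp, predicted)]
recall = [t / s for t, s in zip(tp, SUPPORT)]
f1 = [2 * t / (p + s) for t, p, s in zip(tp, predicted, SUPPORT)]  # equals 2PR / (P + R)
accuracy = sum(tp) / sum(SUPPORT)

for name, p, r, f in zip(LABELS, precision, recall, f1):
    print(f"{name:>10} {p:.2f} {r:.2f} {f:.2f}")
print(f"accuracy {accuracy:.2f}, macro F1 {sum(f1) / k:.2f}")
```

Every recovered row sums exactly to its support, and the recomputed figures match the report to two decimals. This supports reading cf.txt as normalised by true class.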

merges.txt

ADDED

The diff for this file is too large to render.

special_tokens_map.json

ADDED

```json
{
  "bos_token": "<s>",
  "cls_token": "<s>",
  "eos_token": "</s>",
  "mask_token": {
    "content": "<mask>",
    "lstrip": true,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  },
  "pad_token": "<pad>",
  "sep_token": "</s>",
  "unk_token": "<unk>"
}
```

tokenizer.json

ADDED

The diff for this file is too large to render.

tokenizer_config.json

ADDED

```json
{
  "add_prefix_space": false,
  "bos_token": "<s>",
  "clean_up_tokenization_spaces": true,
  "cls_token": "<s>",
  "eos_token": "</s>",
  "errors": "replace",
  "mask_token": "<mask>",
  "model_max_length": 512,
  "pad_token": "<pad>",
  "sep_token": "</s>",
  "tokenizer_class": "RobertaTokenizer",
  "trim_offsets": true,
  "unk_token": "<unk>"
}
```
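The two tokenizer files describe a RoBERTa BPE tokenizer (`RobertaTokenizer`, 512-token limit). RoBERTa wraps a single sequence as `<s> A </s>` and a pair as `<s> A </s></s> B </s>`. The sketch below reproduces only that layout, plus truncation to `model_max_length` and right-padding, on pre-split token strings. Some caveats apply:
- `build_inputs` and `pad_with_mask` are hypothetical helpers.
- The BPE step itself, driven by vocab.json and merges.txt, is not modelled.
- The real tokenizer's truncation strategy may differ.

```python
# Special tokens as listed in special_tokens_map.json and tokenizer_config.json.
CLS, SEP, PAD = "<s>", "</s>", "<pad>"
MODEL_MAX_LENGTH = 512  # "model_max_length" in tokenizer_config.json

def build_inputs(tokens_a, tokens_b=None, max_length=MODEL_MAX_LENGTH):
    """Hypothetical helper: wrap already-tokenized text in RoBERTa's special tokens.

    Single sequence: <s> A </s>
    Pair:            <s> A </s></s> B </s>
    Tokens are trimmed from the end of the longer sequence until everything fits.
    """
    a, b = list(tokens_a), list(tokens_b or [])
    n_special = 2 if tokens_b is None else 4
    while len(a) + len(b) + n_special > max_length:
        if len(a) >= len(b):
            a.pop()
        else:
            b.pop()
    if tokens_b is None:
        return [CLS] + a + [SEP]
    return [CLS] + a + [SEP, SEP] + b + [SEP]

def pad_with_mask(tokens, length):
    """Right-pad with <pad> and return (tokens, attention_mask)."""
    n_pad = length - len(tokens)
    return tokens + [PAD] * n_pad, [1] * len(tokens) + [0] * n_pad

print(build_inputs(["Hello", "world"]))  # ['<s>', 'Hello', 'world', '</s>']
print(build_inputs(["Hi"], ["there"]))   # ['<s>', 'Hi', '</s>', '</s>', 'there', '</s>']
```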

training_args.bin

ADDED

```
version https://git-lfs.github.com/spec/v1
oid sha256:aa02c566d7a4d3724760e1024598d36ecae3945cd1343a8a9f44c7ced8d5db97
size 4155
```
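Both `.bin` files are committed as Git LFS pointers. Each is a short text file of `key value` lines giving the spec version, the SHA-256 digest of the real object, and its size in bytes. Below is a minimal sketch of reading such a pointer and checking a downloaded blob against it. `parse_lfs_pointer` and `matches_pointer` are hypothetical helpers, not part of git-lfs, and they skip the spec's stricter rules on key ordering and formatting.

```python
import hashlib

LFS_V1 = "https://git-lfs.github.com/spec/v1"

def parse_lfs_pointer(text):
    """Parse a Git LFS v1 pointer ("key value" per line) into oid algorithm, digest and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if fields.get("version") != LFS_V1:
        raise ValueError("not a Git LFS v1 pointer")
    algorithm, _, digest = fields["oid"].partition(":")
    return {"algorithm": algorithm, "oid": digest, "size": int(fields["size"])}

def matches_pointer(blob, info):
    """True if downloaded bytes have the size and SHA-256 digest the pointer promises."""
    return (
        info["algorithm"] == "sha256"
        and len(blob) == info["size"]
        and hashlib.sha256(blob).hexdigest() == info["oid"]
    )

TRAINING_ARGS_POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:aa02c566d7a4d3724760e1024598d36ecae3945cd1343a8a9f44c7ced8d5db97
size 4155
"""

info = parse_lfs_pointer(TRAINING_ARGS_POINTER)
print(info["algorithm"], info["size"])  # sha256 4155
```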

vocab.json

ADDED

The diff for this file is too large to render.