Commit

•

a4be04d

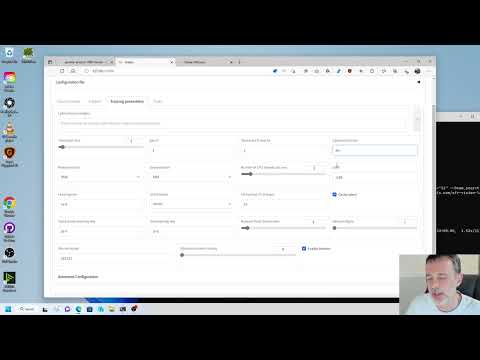

1

Parent(s):

35df028

Upload 42 files

Browse files- .gitattributes +4 -34

- .gitignore +11 -0

- LICENSE.md +201 -0

- README-ja.md +147 -0

- README.md +338 -0

- XTI_hijack.py +209 -0

- _typos.toml +15 -0

- activate.bat +5 -0

- activate.ps1 +1 -0

- config_README-ja.md +279 -0

- dreambooth_gui.py +954 -0

- fine_tune.py +440 -0

- fine_tune_README.md +465 -0

- fine_tune_README_ja.md +140 -0

- finetune_gui.py +900 -0

- gen_img_diffusers.py +0 -0

- gui.bat +16 -0

- gui.ps1 +21 -0

- gui.sh +15 -0

- kohya_gui.py +114 -0

- lora_gui.py +1294 -0

- requirements.txt +34 -0

- setup.bat +48 -0

- setup.py +10 -0

- setup.sh +648 -0

- style.css +21 -0

- textual_inversion_gui.py +1014 -0

- train_README-ja.md +945 -0

- train_db.py +437 -0

- train_db_README-ja.md +167 -0

- train_db_README.md +295 -0

- train_network.py +773 -0

- train_network_README-ja.md +479 -0

- train_network_README.md +189 -0

- train_textual_inversion.py +598 -0

- train_textual_inversion_XTI.py +650 -0

- train_ti_README-ja.md +105 -0

- train_ti_README.md +62 -0

- upgrade.bat +16 -0

- upgrade.ps1 +14 -0

- upgrade.sh +16 -0

- utilities.cmd +1 -0

.gitattributes

CHANGED

@@ -1,34 +1,4 @@

```diff
-*.
-*.
-*.
-*.
-*.ckpt filter=lfs diff=lfs merge=lfs -text
-*.ftz filter=lfs diff=lfs merge=lfs -text
-*.gz filter=lfs diff=lfs merge=lfs -text
-*.h5 filter=lfs diff=lfs merge=lfs -text
-*.joblib filter=lfs diff=lfs merge=lfs -text
-*.lfs.* filter=lfs diff=lfs merge=lfs -text
-*.mlmodel filter=lfs diff=lfs merge=lfs -text
-*.model filter=lfs diff=lfs merge=lfs -text
-*.msgpack filter=lfs diff=lfs merge=lfs -text
-*.npy filter=lfs diff=lfs merge=lfs -text
-*.npz filter=lfs diff=lfs merge=lfs -text
-*.onnx filter=lfs diff=lfs merge=lfs -text
-*.ot filter=lfs diff=lfs merge=lfs -text
-*.parquet filter=lfs diff=lfs merge=lfs -text
-*.pb filter=lfs diff=lfs merge=lfs -text
-*.pickle filter=lfs diff=lfs merge=lfs -text
-*.pkl filter=lfs diff=lfs merge=lfs -text
-*.pt filter=lfs diff=lfs merge=lfs -text
-*.pth filter=lfs diff=lfs merge=lfs -text
-*.rar filter=lfs diff=lfs merge=lfs -text
-*.safetensors filter=lfs diff=lfs merge=lfs -text
-saved_model/**/* filter=lfs diff=lfs merge=lfs -text
-*.tar.* filter=lfs diff=lfs merge=lfs -text
-*.tflite filter=lfs diff=lfs merge=lfs -text
-*.tgz filter=lfs diff=lfs merge=lfs -text
-*.wasm filter=lfs diff=lfs merge=lfs -text
-*.xz filter=lfs diff=lfs merge=lfs -text
-*.zip filter=lfs diff=lfs merge=lfs -text
-*.zst filter=lfs diff=lfs merge=lfs -text
-*tfevents* filter=lfs diff=lfs merge=lfs -text
+*.sh text eol=lf
+*.ps1 text eol=crlf
+*.bat text eol=crlf
+*.cmd text eol=crlf
```
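The new rules drop the Git LFS filters and pin line endings instead. `*.sh` files get LF, and `*.ps1`, `*.bat` and `*.cmd` files get CRLF. The Python sketch below shows what those rules mean for a file's bytes. It is an illustration only: `expected_eol` and `check_eol` are hypothetical helpers, not part of the repository, and they read raw bytes without consulting Git.

```python
from pathlib import PurePath

# Line-ending policy mirroring the four new .gitattributes rules
# (suffix match only; Git's own matching is more general).
EOL_RULES = {
    ".sh": b"\n",      # *.sh  text eol=lf
    ".ps1": b"\r\n",   # *.ps1 text eol=crlf
    ".bat": b"\r\n",   # *.bat text eol=crlf
    ".cmd": b"\r\n",   # *.cmd text eol=crlf
}


def expected_eol(path: str):
    """Return the line ending requested for `path`, or None if no rule applies."""
    return EOL_RULES.get(PurePath(path).suffix)


def check_eol(path: str, data: bytes) -> bool:
    """True if every line break in `data` uses the ending requested for `path`."""
    eol = expected_eol(path)
    if eol is None:
        return True  # no rule for this file type: nothing to enforce
    if eol == b"\n":
        # LF files must not contain any CR LF pairs.
        return b"\r\n" not in data
    # CRLF files: every LF must be part of a CR LF pair.
    return data.count(b"\n") == data.count(b"\r\n")
```

For example, `check_eol("setup.sh", Path("setup.sh").read_bytes())` flags a shell script that was saved with Windows line endings. Such a script would typically fail under bash on Linux.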


.gitignore

ADDED

|

@@ -0,0 +1,11 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

venv

|

| 2 |

+

__pycache__

|

| 3 |

+

cudnn_windows

|

| 4 |

+

.vscode

|

| 5 |

+

*.egg-info

|

| 6 |

+

build

|

| 7 |

+

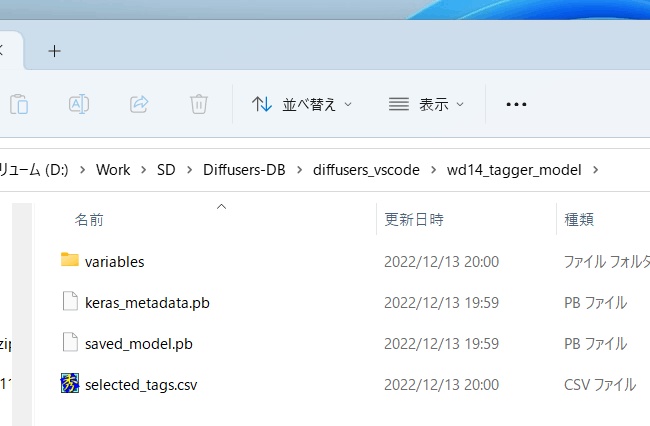

wd14_tagger_model

|

| 8 |

+

.DS_Store

|

| 9 |

+

locon

|

| 10 |

+

gui-user.bat

|

| 11 |

+

gui-user.ps1
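Most entries in this ignore list are plain names such as `venv` and `build`, and one is a glob (`*.egg-info`). In Git, a pattern without a slash matches a file or directory of that name at any depth. The Python sketch below approximates that rule with `fnmatch`. It is not a full implementation of Git's ignore semantics: negation, leading or trailing `/` and `**` are not handled, and `is_ignored` is a hypothetical helper.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns copied from the .gitignore added in this commit.
IGNORE_PATTERNS = [
    "venv", "__pycache__", "cudnn_windows", ".vscode", "*.egg-info",
    "build", "wd14_tagger_model", ".DS_Store", "locon",
    "gui-user.bat", "gui-user.ps1",
]


def is_ignored(path: str) -> bool:
    """Approximate Git: a slash-free pattern matches any component of the path."""
    parts = PurePosixPath(path).parts
    return any(
        fnmatchcase(part, pattern)
        for part in parts
        for pattern in IGNORE_PATTERNS
    )
```

Matching against each path component captures the practical effect here: once a directory such as `venv` is ignored, everything under it is ignored too.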

LICENSE.md

ADDED

@@ -0,0 +1,201 @@


```text
Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "[]"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright [2022] [kohya-ss]

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
```
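The appendix asks that the boilerplate notice be wrapped in the comment syntax of each file format, with the bracketed fields filled in. The Python sketch below does that step. It assumes line-comment languages such as `#` for Python or `//` for C-like files. `license_header` and its parameters are illustrative names, not something the repository provides.

```python
NOTICE_TEMPLATE = """Copyright {year} {owner}

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License."""


def license_header(year: str, owner: str, comment: str = "#") -> str:
    """Fill in the bracketed fields and prefix every line with `comment`."""
    lines = NOTICE_TEMPLATE.format(year=year, owner=owner).splitlines()
    # Blank lines get the bare comment marker so no trailing spaces are emitted.
    return "\n".join(f"{comment} {line}" if line else comment for line in lines) + "\n"
```

Calling `license_header("2022", "kohya-ss")` yields a `#`-commented block that can be pasted at the top of a `.py` file. Passing `comment="//"` produces the same notice for C-like languages.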

README-ja.md

ADDED

@@ -0,0 +1,147 @@


## About this repository

This repository contains scripts for Stable Diffusion training, image generation, and other tasks.

[README in English](./README.md) ← release notes and update information are posted here

Features that make the scripts easier to use, such as a GUI and PowerShell scripts, are provided in [bmaltais's repository](https://github.com/bmaltais/kohya_ss) (in English), so please take a look at it as well. Thanks to bmaltais.

The following scripts are included:

* DreamBooth, supporting training of both the U-Net and the Text Encoder
* Fine-tuning, likewise
* Image generation
* Model conversion (between Stable Diffusion ckpt/safetensors and Diffusers, in both directions)

## Usage

Articles are available in this repository and on note.com; please refer to them (everything may eventually be moved here).

* [Training, common guide](./train_README-ja.md): data preparation, options, etc.
* [Dataset configuration](./config_README-ja.md)
* [DreamBooth training](./train_db_README-ja.md)
* [Fine-tuning guide](./fine_tune_README_ja.md)
* [LoRA training](./train_network_README-ja.md)
* [Textual Inversion training](./train_ti_README-ja.md)
* note.com [Image generation script](https://note.com/kohya_ss/n/n2693183a798e)
* note.com [Model conversion script](https://note.com/kohya_ss/n/n374f316fe4ad)

## Programs required on Windows

Python 3.10.6 and Git are required.

- Python 3.10.6: https://www.python.org/ftp/python/3.10.6/python-3.10.6-amd64.exe
- git: https://git-scm.com/download/win

If you use PowerShell, change its security settings as follows so that venv can be used.
(Note that this allows scripts in general to run, not only venv.)

- Open PowerShell as administrator.
- Enter "Set-ExecutionPolicy Unrestricted" and answer Y.
- Close the administrator PowerShell.

## Installation on Windows

The example below installs PyTorch 1.12.1 built for CUDA 11.6. If you want the CUDA 11.3 build or PyTorch 1.13, adjust the commands accordingly.

(If the `python -m venv ...` line prints only "python", change `python` to `py`, as in `py -m venv ...`.)

Open a regular (non-administrator) PowerShell and run the following commands in order:

```powershell
git clone https://github.com/kohya-ss/sd-scripts.git
cd sd-scripts

python -m venv venv
.\venv\Scripts\activate

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116
pip install --upgrade -r requirements.txt
pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

cp .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\
cp .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py
cp .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

accelerate config
```

<!--
pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 --extra-index-url https://download.pytorch.org/whl/cu117
pip install --use-pep517 --upgrade -r requirements.txt
pip install -U -I --no-deps xformers==0.0.16
-->

In Command Prompt, use the following instead:

```bat
git clone https://github.com/kohya-ss/sd-scripts.git
cd sd-scripts

python -m venv venv
.\venv\Scripts\activate

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116
pip install --upgrade -r requirements.txt
pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

copy /y .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\
copy /y .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py
copy /y .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

accelerate config
```

(Note: the instructions were changed to ``python -m venv venv`` because it appears safer than ``python -m venv --system-site-packages venv``. With the latter, various problems can occur if packages are installed in the global Python.)
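The difference between the two `venv` commands can be seen in the `pyvenv.cfg` file that `venv` writes, specifically its `include-system-site-packages` key. The Python sketch below uses the standard-library `venv` module to create an isolated environment and read that key back. `make_isolated_env` is a hypothetical helper. `with_pip=False` is used only to keep the example fast, whereas the real install does need pip.

```python
import tempfile
import venv
from pathlib import Path


def make_isolated_env(env_dir: Path) -> dict:
    """Create a venv without global site-packages and return its pyvenv.cfg settings."""
    # Roughly `python -m venv <env_dir>` minus pip; passing
    # system_site_packages=True would correspond to `--system-site-packages`.
    venv.create(env_dir, system_site_packages=False, with_pip=False)
    settings = {}
    for line in (env_dir / "pyvenv.cfg").read_text().splitlines():
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cfg = make_isolated_env(Path(tmp) / "venv")
        print(cfg["include-system-site-packages"])  # false
```

With `false` set, packages installed into the global Python are invisible inside the environment. This is what keeps them from interfering with the pinned versions.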

Answer the `accelerate config` questions as follows. (If you train with bf16, answer bf16 to the last question.)

Note: since version 0.15.0, pressing the cursor keys to make a selection crashes the prompt in a Japanese-language environment (…). You can also select with the number keys 0, 1, 2, …, so use those instead.

```txt
- This machine
- No distributed training
- NO
- NO
- NO
- all
- fp16
```

Note: in some cases the error ``ValueError: fp16 mixed precision requires a GPU`` may appear. If so, answer "0" to the sixth question (``What GPU(s) (by id) should be used for training on this machine as a comma-separated list? [all]:``). (The GPU with id `0` will then be used.)
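The sixth question takes either `all` or a comma-separated list of GPU ids, and answering `0` restricts training to the first GPU. The Python sketch below shows one way such an answer could be interpreted. It is purely illustrative: `parse_gpu_ids` is a hypothetical helper and does not reproduce accelerate's actual parsing code.

```python
def parse_gpu_ids(answer: str, available: int) -> list[int]:
    """Interpret an answer like 'all', '0' or '0,1' against `available` GPUs."""
    # An empty answer falls back to the prompt's [all] default.
    answer = answer.strip().lower() or "all"
    if answer == "all":
        return list(range(available))
    ids = [int(part) for part in answer.split(",") if part.strip()]
    for gpu_id in ids:
        if not 0 <= gpu_id < available:
            raise ValueError(f"GPU id {gpu_id} not in 0..{available - 1}")
    return ids
```

On a single-GPU machine, `all` and `0` select the same device. Naming the id explicitly is the workaround the note recommends when the fp16 error appears.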

### About the PyTorch and xformers versions

Training may not work properly with other versions. Unless you have a particular reason, please use the specified versions.
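Other versions may break training, so it helps to confirm what is actually installed in the venv. The Python sketch below uses only the standard library and checks against the pins from the install commands in this README: `torch==1.12.1+cu116`, `torchvision==0.13.1+cu116` and the xformers `0.0.14.dev0` wheel. `check_pins` is a hypothetical helper. It reads package metadata through `importlib.metadata` and never imports torch itself.

```python
from importlib import metadata

# Versions pinned by the Windows install commands in this README.
PINNED = {
    "torch": "1.12.1+cu116",
    "torchvision": "0.13.1+cu116",
    "xformers": "0.0.14.dev0",
}


def check_pins(pinned: dict, lookup=metadata.version) -> dict:
    """Map each package to (installed_version_or_None, matches_pin)."""
    report = {}
    for name, wanted in pinned.items():
        try:
            installed = lookup(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed in this environment
        report[name] = (installed, installed == wanted)
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_pins(PINNED).items():
        print(f"{name}: {installed} {'OK' if ok else 'differs from pin'}")
```

Run the script from inside the activated venv so that it inspects the same environment the training scripts use.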

## Upgrading

When a new release is available, you can update with the following commands:

```powershell
cd sd-scripts
git pull
.\venv\Scripts\activate
pip install --use-pep517 --upgrade -r requirements.txt
```

If the commands succeed, the new version is ready to use.

## Acknowledgments

The LoRA implementation is based on [cloneofsimo's repository](https://github.com/cloneofsimo/lora). Many thanks.

The extension to Conv2d 3x3 was first released by [cloneofsimo](https://github.com/cloneofsimo/lora), and KohakuBlueleaf demonstrated its effectiveness in [LoCon](https://github.com/KohakuBlueleaf/LoCon). Deep thanks to KohakuBlueleaf.

## License

The scripts are licensed under ASL 2.0, as is the code derived from Diffusers and from cloneofsimo's repository. Some parts include code under other licenses:

[Memory Efficient Attention Pytorch](https://github.com/lucidrains/memory-efficient-attention-pytorch): MIT

[bitsandbytes](https://github.com/TimDettmers/bitsandbytes): MIT

[BLIP](https://github.com/salesforce/BLIP): BSD-3-Clause

README.md

ADDED

@@ -0,0 +1,338 @@


| 1 |

+

# Kohya's GUI

|

| 2 |

+

|

| 3 |

+

This repository provides a Windows-focused Gradio GUI for [Kohya's Stable Diffusion trainers](https://github.com/kohya-ss/sd-scripts). The GUI allows you to set the training parameters and generate and run the required CLI commands to train the model.

|

| 4 |

+

|

| 5 |

+

If you run on Linux and would like to use the GUI, there is now a port of it as a docker container. You can find the project [here](https://github.com/P2Enjoy/kohya_ss-docker).

### Table of Contents

- [Tutorials](#tutorials)
- [Required Dependencies](#required-dependencies)
  - [Linux/macOS](#linux-and-macos-dependencies)
- [Installation](#installation)
  - [Linux/macOS](#linux-and-macos)
    - [Default Install Locations](#install-location)
  - [Windows](#windows)
    - [CUDNN 8.6](#optional-cudnn-86)
- [Upgrading](#upgrading)
  - [Windows](#windows-upgrade)
  - [Linux/macOS](#linux-and-macos-upgrade)
- [Launching the GUI](#starting-gui-service)
  - [Windows](#launching-the-gui-on-windows)
  - [Linux/macOS](#launching-the-gui-on-linux-and-macos)
  - [Direct Launch via Python Script](#launching-the-gui-directly-using-kohya_guipy)
- [Dreambooth](#dreambooth)
- [Finetune](#finetune)
- [Train Network](#train-network)
- [LoRA](#lora)
- [Troubleshooting](#troubleshooting)
  - [Page File Limit](#page-file-limit)
  - [No module called tkinter](#no-module-called-tkinter)
  - [FileNotFoundError](#filenotfounderror)
- [Change History](#change-history)

|

| 33 |

+

|

| 34 |

+

## Tutorials

|

| 35 |

+

|

| 36 |

+

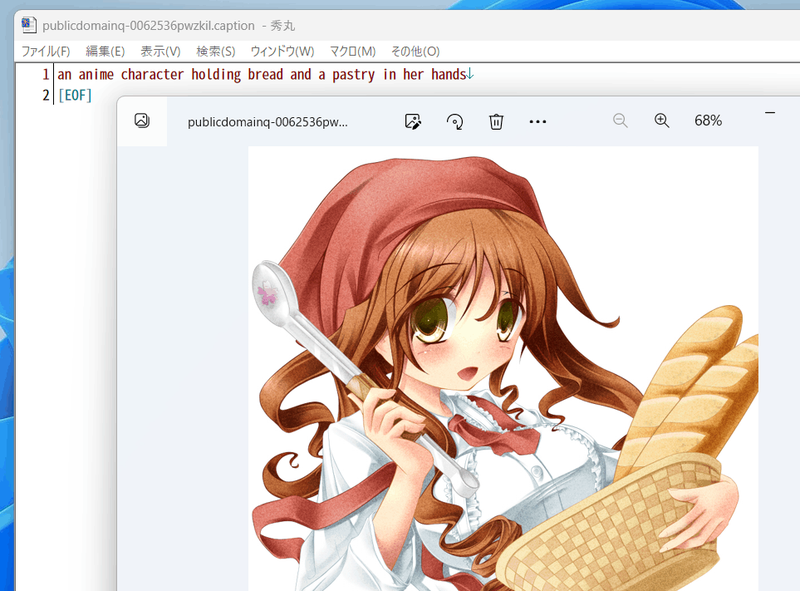

[How to Create a LoRA Part 1: Dataset Preparation](https://www.youtube.com/watch?v=N4_-fB62Hwk):

|

| 37 |

+

|

| 38 |

+

[](https://www.youtube.com/watch?v=N4_-fB62Hwk)

|

| 39 |

+

|

| 40 |

+

[How to Create a LoRA Part 2: Training the Model](https://www.youtube.com/watch?v=k5imq01uvUY):

|

| 41 |

+

|

| 42 |

+

[](https://www.youtube.com/watch?v=k5imq01uvUY)

## Required Dependencies

- Install [Python 3.10](https://www.python.org/ftp/python/3.10.9/python-3.10.9-amd64.exe)
  - Make sure to tick the box to add Python to the `PATH` environment variable.
- Install [Git](https://git-scm.com/download/win)
- Install the [Visual Studio 2015, 2017, 2019, and 2022 redistributable](https://aka.ms/vs/17/release/vc_redist.x64.exe)
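After installing, a quick way to confirm that the interpreter on your `PATH` is the Python 3.10 release listed above is a tiny script like the one below. It is a hypothetical helper (the name `is_supported_python` is illustrative, not part of this repo) and assumes the requirement is specifically the 3.10.x series.

```python
import sys
from typing import Sequence


def is_supported_python(version_info: Sequence[int] = sys.version_info[:2]) -> bool:
    """Return True if the given (major, minor, ...) version is in the 3.10 series."""
    return tuple(version_info[:2]) == (3, 10)


if __name__ == "__main__":
    found = ".".join(str(part) for part in sys.version_info[:3])
    if is_supported_python():
        print(f"OK: Python {found}")
    else:
        print(f"Expected Python 3.10, but found Python {found}")
```

Run it from the same terminal you will use for setup, so it checks the same `python` that the setup script will pick up.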

### Linux and macOS dependencies

These dependencies are handled by `setup.sh` in the installation section. No additional steps should be needed unless the script tells you otherwise.

## Installation

### Runpod

Follow the instructions in this discussion: https://github.com/bmaltais/kohya_ss/discussions/379

### Linux and macOS

In the terminal, run:

```
git clone https://github.com/bmaltais/kohya_ss.git
cd kohya_ss
# You may need to run `chmod +x ./setup.sh` if you're on a machine with stricter security.
# There are additional options for a Runpod environment.
# Run `./setup.sh -h` or `./setup.sh --help` for more information.
./setup.sh
```

The `setup.sh` help output is included here:

```
Kohya_SS Installation Script for POSIX operating systems.

The following options are useful in a runpod environment,
but will not affect a local machine install.

Usage:
  setup.sh -b dev -d /workspace/kohya_ss -g https://mycustom.repo.tld/custom_fork.git
  setup.sh --branch=dev --dir=/workspace/kohya_ss --git-repo=https://mycustom.repo.tld/custom_fork.git

Options:
  -b BRANCH, --branch=BRANCH    Select which branch of kohya to check out on new installs.
  -d DIR, --dir=DIR             The full path you want kohya_ss installed to.
  -g REPO, --git_repo=REPO      You can optionally provide a git repo to check out for runpod installation. Useful for custom forks.
  -h, --help                    Show this screen.
  -i, --interactive             Interactively configure accelerate instead of using default config file.
  -n, --no-update               Do not update kohya_ss repo. No git pull or clone operations.
  -p, --public                  Expose public URL in runpod mode. Won't have an effect in other modes.
  -r, --runpod                  Forces a runpod installation. Useful if detection fails for any reason.
  -s, --skip-space-check        Skip the 10Gb minimum storage space check.
  -u, --no-gui                  Skips launching the GUI.
  -v, --verbose                 Increase verbosity levels up to 3.
```

#### Install location

On Linux, the default install location is the directory containing the script, if a previous installation is detected there. Otherwise, it falls back to `/opt/kohya_ss`. If `/opt` is not writable, the fallback is `$HOME/kohya_ss`. Finally, if all else fails, it installs to the current directory (`$PWD`).

On macOS and other non-Linux systems, the script first checks for an existing install in the directory it is run from and, if one is found, runs setup there. If no previous install is found at that location, it defaults to `$HOME/kohya_ss`, or to the current directory if `$HOME` is not accessible.

You can override this behavior by specifying an install directory with the `-d` option.
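The Linux fallback order can be summarized as a small decision function. This is a Python sketch of the logic as described above, not the code in `setup.sh` (which is a shell script); `choose_install_dir` is a hypothetical name, and the "previous install" and "writable" checks are passed in as functions so the order can be exercised without touching the filesystem. Treating an unwritable `$HOME` as the trigger for the final `$PWD` fallback is an assumption.

```python
from pathlib import Path
from typing import Callable, Optional


def choose_install_dir(
    script_dir: Path,
    home: Path,
    cwd: Path,
    has_previous_install: Callable[[Path], bool],
    is_writable: Callable[[Path], bool],
    override: Optional[Path] = None,
) -> Path:
    """Illustrative version of the Linux install-location fallback order."""
    if override is not None:              # -d / --dir always wins
        return override
    if has_previous_install(script_dir):  # reuse an existing install next to the script
        return script_dir
    if is_writable(Path("/opt")):         # first fallback
        return Path("/opt/kohya_ss")
    if is_writable(home):                 # second fallback (assumed condition)
        return home / "kohya_ss"
    return cwd                            # last resort: the current directory


if __name__ == "__main__":
    print(choose_install_dir(
        Path("/src/kohya_ss"), Path.home(), Path.cwd(),
        has_previous_install=lambda p: False,
        is_writable=lambda p: p != Path("/opt"),
    ))
```

Injecting the checks keeps the precedence rules separate from how they are detected, which is what makes the order easy to read and to test.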

If you use interactive mode (`-i`), the recommended answers for the accelerate config prompts shown after the script runs are "This machine", "None", and then "No" for the remaining questions. These are the same answers as for the Windows install.

### Windows

- Install [Python 3.10](https://www.python.org/ftp/python/3.10.9/python-3.10.9-amd64.exe)
  - Make sure to tick the box to add Python to the `PATH` environment variable.
- Install [Git](https://git-scm.com/download/win)
- Install the [Visual Studio 2015, 2017, 2019, and 2022 redistributable](https://aka.ms/vs/17/release/vc_redist.x64.exe)

In the terminal, run:

```
git clone https://github.com/bmaltais/kohya_ss.git
cd kohya_ss
.\setup.bat
```

If this is your first install, answer No when asked `Do you want to uninstall previous versions of torch and associated files before installing`.

Then, when prompted, configure accelerate with the same answers as in the macOS instructions.

### Optional: CUDNN 8.6

This step is optional but can improve training speed for owners of NVIDIA 30X0/40X0 GPUs. It allows a larger training batch size and faster training.

Due to the file size, I can't host the DLLs needed for CUDNN 8.6 on GitHub. I strongly advise downloading them for a speed boost in sample generation (almost 50% on a 4090 GPU). You can download them [here](https://b1.thefileditch.ch/mwxKTEtelILoIbMbruuM.zip).

To install, unzip the archive and place the `cudnn_windows` folder in the root of this repo.

Run the following commands to install:

```
.\venv\Scripts\activate

python .\tools\cudann_1.8_install.py
```

Once the commands have completed successfully, you should be ready to use the new version. MacOS support is not tested and has been mostly taken from https://gist.github.com/jstayco/9f5733f05b9dc29de95c4056a023d645

## Upgrading

If you prefer not to run the upgrade scripts, the following commands work from the root directory of the project on any OS. Use the venv activation line that matches your platform.

```bash
git pull

# Windows
.\venv\Scripts\activate
# Linux/macOS
source venv/bin/activate

pip install --use-pep517 --upgrade -r requirements.txt
```

### Windows Upgrade

When a new release comes out, you can upgrade your repo by running the following command in the root directory:

```powershell
.\upgrade.bat
```

### Linux and macOS Upgrade

You can `cd` into the root directory and run:

```bash
# Refresh and update everything
./setup.sh

# This will refresh everything, but NOT clone or pull the git repo.
./setup.sh --no-git-update
```

Once the commands have completed successfully, you should be ready to use the new version.

## Starting GUI Service

The following command line arguments can be passed to the launch scripts on any OS to configure the underlying service.

```
--listen: the IP address to listen on for connections to Gradio.
--username: a username for authentication.
--password: a password for authentication.
--server_port: the port to run the server listener on.
--inbrowser: opens the Gradio UI in a web browser.
--share: shares the Gradio UI.
```
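These flags map naturally onto keyword arguments of Gradio's `launch()` method (`server_name`, `server_port`, `inbrowser`, `share`, `auth`). The sketch below shows one way a launcher could parse them with `argparse` and build those keyword arguments. It is illustrative only: `build_parser` and `to_launch_kwargs` are hypothetical names, the defaults are assumptions, and `kohya_gui.py` may handle these options differently. Gradio itself is not imported, so the mapping can be inspected on its own.

```python
import argparse
from typing import Any, Dict


def build_parser() -> argparse.ArgumentParser:
    # Flag names come from the list above; the defaults are assumptions.
    p = argparse.ArgumentParser(description="Sketch of the GUI launch options")
    p.add_argument("--listen", type=str, default="127.0.0.1")
    p.add_argument("--username", type=str, default="")
    p.add_argument("--password", type=str, default="")
    p.add_argument("--server_port", type=int, default=0)
    p.add_argument("--inbrowser", action="store_true")
    p.add_argument("--share", action="store_true")
    return p


def to_launch_kwargs(args: argparse.Namespace) -> Dict[str, Any]:
    """Translate parsed flags into keyword arguments for a Gradio launch() call."""
    kwargs: Dict[str, Any] = {
        "server_name": args.listen,
        "inbrowser": args.inbrowser,
        "share": args.share,
    }
    if args.server_port > 0:   # omit it so Gradio falls back to its own default port
        kwargs["server_port"] = args.server_port
    if args.username:          # only enable authentication when a username is given
        kwargs["auth"] = (args.username, args.password)
    return kwargs


if __name__ == "__main__":
    ns = build_parser().parse_args(
        ["--listen", "0.0.0.0", "--server_port", "7860", "--inbrowser"]
    )
    print(to_launch_kwargs(ns))
```

A real launcher would pass the resulting dictionary to the interface's `launch(**kwargs)` call. Leaving `--username` empty keeps the UI unauthenticated, so set both `--username` and `--password` whenever `--listen` exposes the UI beyond localhost.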

### Launching the GUI on Windows

The two scripts for launching the GUI on Windows are `gui.ps1` and `gui.bat`, both in the root directory. You can use whichever script you prefer.

To launch the Gradio UI, run the script in a terminal with the desired command line arguments, for example (from PowerShell):

`.\gui.ps1 --listen 127.0.0.1 --server_port 7860 --inbrowser --share`

or (from Command Prompt):

`gui.bat --listen 127.0.0.1 --server_port 7860 --inbrowser --share`

### Launching the GUI on Linux and macOS

Run the launcher script with the desired command line arguments, as on Windows:

`./gui.sh --listen 127.0.0.1 --server_port 7860 --inbrowser --share`

### Launching the GUI directly using kohya_gui.py

To run the GUI directly, bypassing the wrapper scripts, use this command from the root project directory:

```
.\venv\Scripts\activate

python .\kohya_gui.py
```

## Dreambooth

You can find the Dreambooth-specific instructions here: [Dreambooth README](train_db_README.md)

## Finetune

You can find the finetune-specific instructions here: [Finetune README](fine_tune_README.md)

## Train Network

You can find the train network-specific instructions here: [Train network README](train_network_README.md)

## LoRA

Training a LoRA currently uses the `train_network.py` code. You can create a LoRA network by using the all-in-one GUI (`gui.bat`) or by running the dedicated LoRA training GUI with:

```
.\venv\Scripts\activate

python lora_gui.py
```

Once you have created the LoRA network, you can generate images with AUTOMATIC1111's Web UI by installing [this extension](https://github.com/kohya-ss/sd-webui-additional-networks).

### Naming of LoRA

The LoRA types supported by `train_network.py` have been given names to avoid confusion, and the documentation has been updated accordingly. The LoRA types in this repository are:

1. __LoRA-LierLa__: (LoRA for **Li**n**e**a**r** **La**yers)

   LoRA for Linear layers and Conv2d layers with a 1x1 kernel

2. __LoRA-C3Lier__: (LoRA for **C**onvolutional layers with a **3**x3 kernel and **Li**n**e**a**r** layers)

   In addition to 1., LoRA for Conv2d layers with a 3x3 kernel

LoRA-LierLa is the default LoRA type for `train_network.py` (used when the `conv_dim` network arg is not specified). LoRA-LierLa can be used with [our extension](https://github.com/kohya-ss/sd-webui-additional-networks) for AUTOMATIC1111's Web UI, or with the Web UI's built-in LoRA feature.

To use LoRA-C3Lier with the Web UI, please use our extension.
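The naming rule reduces to a check on layer type and kernel size. The sketch below restates it as a small Python function; `Layer` and `gets_lora` are hypothetical names, layers are described only by their kind and square kernel size, and it does not model which modules of the U-Net or text encoder `train_network.py` actually targets.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Layer:
    kind: str                          # "Linear" or "Conv2d" (simplified description)
    kernel_size: Optional[int] = None  # square kernel size for Conv2d, None for Linear


def gets_lora(layer: Layer, lora_type: str) -> bool:
    """Return True if the named LoRA type ("LierLa" or "C3Lier") covers this layer."""
    if layer.kind == "Linear":
        return True                       # both types cover Linear layers
    if layer.kind == "Conv2d" and layer.kernel_size == 1:
        return True                       # 1x1 convolutions are covered by both types
    if layer.kind == "Conv2d" and layer.kernel_size == 3:
        return lora_type == "C3Lier"      # 3x3 convolutions only in LoRA-C3Lier
    return False


if __name__ == "__main__":
    for layer in (Layer("Linear"), Layer("Conv2d", 1), Layer("Conv2d", 3)):
        print(layer, "LierLa:", gets_lora(layer, "LierLa"), "C3Lier:", gets_lora(layer, "C3Lier"))
```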

## Sample image generation during training

A prompt file might look like this, for example:

```
# prompt 1
masterpiece, best quality, (1girl), in white shirts, upper body, looking at viewer, simple background --n low quality, worst quality, bad anatomy,bad composition, poor, low effort --w 768 --h 768 --d 1 --l 7.5 --s 28

# prompt 2
masterpiece, best quality, 1boy, in business suit, standing at street, looking back --n (low quality, worst quality), bad anatomy,bad composition, poor, low effort --w 576 --h 832 --d 2 --l 5.5 --s 40
```

Lines beginning with `#` are comments. You can specify options for the generated image by adding options like `--n` after the prompt. The following options can be used:

* `--n` Negative prompt up to the next option.
* `--w` Specifies the width of the generated image.
* `--h` Specifies the height of the generated image.
* `--d` Specifies the seed of the generated image.
* `--l` Specifies the CFG scale of the generated image.
* `--s` Specifies the number of steps in the generation.

Prompt weighting with `( )` and `[ ]` is supported.
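The line format above (prompt text followed by `--letter value` options) can be parsed with a single regular-expression split. This is a minimal sketch, not the parser used by the training scripts: `parse_prompt_line` and the result keys (`negative_prompt`, `cfg_scale`, and so on) are illustrative names, and it assumes each option marker is surrounded by whitespace.

```python
import re
from typing import Callable, Dict, Optional, Tuple, Union

Value = Union[str, int, float]

# Option letter -> (hypothetical result key, converter), following the list above.
OPTIONS: Dict[str, Tuple[str, Callable[[str], Value]]] = {
    "n": ("negative_prompt", str),
    "w": ("width", int),
    "h": ("height", int),
    "d": ("seed", int),
    "l": ("cfg_scale", float),
    "s": ("steps", int),
}


def parse_prompt_line(line: str) -> Optional[Dict[str, Value]]:
    """Parse one prompt-file line; return None for blank lines and # comments."""
    line = line.strip()
    if not line or line.startswith("#"):
        return None
    # With a capture group, re.split keeps the option letters:
    # [prompt, letter1, value1, letter2, value2, ...]
    parts = re.split(r"\s+--([a-z])\s+", line)
    result: Dict[str, Value] = {"prompt": parts[0].strip()}
    for letter, value in zip(parts[1::2], parts[2::2]):
        if letter in OPTIONS:  # unknown option letters are ignored
            key, convert = OPTIONS[letter]
            result[key] = convert(value.strip())
    return result


if __name__ == "__main__":
    sample = "masterpiece, best quality, (1girl) --n low quality --w 768 --h 768 --d 1 --l 7.5 --s 28"
    print(parse_prompt_line(sample))
```

Because `--n` consumes everything up to the next option marker, negative prompts may contain commas and parentheses without any extra quoting.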

## Troubleshooting

### Page File Limit

- If you get an error relating to the `page file`, increase the page file size limit in Windows.

### No module called tkinter

- Re-install [Python 3.10](https://www.python.org/ftp/python/3.10.9/python-3.10.9-amd64.exe) on your system.

### FileNotFoundError

This is usually related to an installation issue. Make sure you do not have any Python modules installed locally that could conflict with the ones installed in the venv:

1. Open a new PowerShell terminal and make sure no venv is active.
2. Run the following commands:

   ```
   pip freeze > uninstall.txt
   pip uninstall -r uninstall.txt
   ```

This saves a backup file listing your currently installed local pip packages and then uninstalls them. Then, redo the installation instructions within the kohya_ss venv.
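For step 1, one way to confirm that no virtual environment is active is to ask the interpreter itself. The sketch below relies on the standard-library behavior that inside a `venv`, `sys.prefix` differs from `sys.base_prefix`, and it also checks the `VIRTUAL_ENV` variable that the venv activation scripts set. The function names are illustrative, and the sketch assumes the `python` and `pip` on your `PATH` belong to the same interpreter.

```python
import os
import sys


def in_virtualenv() -> bool:
    """Return True when this interpreter is running inside a virtual environment."""
    # In a venv, sys.prefix points at the venv while sys.base_prefix points at the base install.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def venv_is_active() -> bool:
    """Also honor VIRTUAL_ENV, which the venv activation scripts export."""
    return in_virtualenv() or bool(os.environ.get("VIRTUAL_ENV"))


if __name__ == "__main__":
    if venv_is_active():
        print(f"A virtual environment looks active ({sys.prefix}); run 'deactivate' first.")
    else:
        print(f"No venv active; pip for this interpreter will act on {sys.prefix}.")
```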

## Change History

* 2023/04/22 (v21.5.5)
    - Updated the LoRA merge GUI to support SD checkpoint merge and merging of up to 4 LoRAs.
    - Fixed `lora_interrogator.py` not working. Please refer to [PR #392](https://github.com/kohya-ss/sd-scripts/pull/392) for details. Thank you A2va and heyalexchoi!
    - Fixed the handling of tags containing `_` in `tag_images_by_wd14_tagger.py`.
    - Added a new Extract DyLoRA GUI to the Utilities tab.
    - Added a new Merge LyCORIS models into checkpoint GUI to the Utilities tab.
    - Added new info on startup to help with debugging.
* 2023/04/17 (v21.5.4)
    - Fixed a bug that caused an error when loading DyLoRA with the `--network_weight` option in `train_network.py`.
    - Added the `--recursive` option to each script in the `finetune` folder to process folders recursively. Please refer to [PR #400](https://github.com/kohya-ss/sd-scripts/pull/400/) for details. Thanks to Linaqruf!
    - Upgraded Gradio to the latest release.
    - Fixed an issue when Adafactor is used as the optimizer and LR warmup is not 0: https://github.com/bmaltais/kohya_ss/issues/617
    - Added support for DyLoRA in `train_network.py`. Please refer to [here](./train_network_README-ja.md#dylora) for details (currently only in Japanese).
    - Added support for caching latents to disk in each training script. Please specify __both__ `--cache_latents` and `--cache_latents_to_disk` options.
        - The files are saved in the same folder as the images, with the extension `.npz`. If you specify the `--flip_aug` option, files with a `_flip.npz` suffix will also be saved.
        - Multi-GPU training has not been tested.
        - This feature has not been tested with all combinations of datasets and training scripts, so there may be bugs.
    - Added a workaround for an error that occurs when training with `fp16` or `bf16` in `fine_tune.py`.
    - Implemented DyLoRA GUI support. There is now a new `DyLoRA Unit` slider when the LoRA type is set to `kohya DyLoRA`, to specify the desired Unit value for DyLoRA training.
    - Updated `gui.bat` and `gui.ps1` based on: https://github.com/bmaltais/kohya_ss/issues/188
    - Updated `setup.bat` to install torch 2.0.0 instead of 1.12.1. If you want to upgrade from 1.12.1 to 2.0.0, run `setup.bat` again, select 1 to uninstall the previous torch modules, then select 2 for torch 2.0.0.
* 2023/04/09 (v21.5.2)
    - Added support for training with weighted captions. Thanks to AI-Casanova for the great contribution!
        - Please refer to the PR for details: [PR #336](https://github.com/kohya-ss/sd-scripts/pull/336)
        - Specify the `--weighted_captions` option. It is available for all training scripts except Textual Inversion and XTI.
        - This option is also applicable to token strings of the DreamBooth method.
        - The syntax for weighted captions is almost the same as the Web UI, and you can use things like `(abc)`, `[abc]`, and `(abc:1.23)`. Nesting is also possible.
        - If you include a comma in the parentheses, the parentheses will not be properly matched in the prompt shuffle/dropout, so do not include a comma in the parentheses.
    - `gui.sh` can now be run from any location.
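To illustrate the weighted-caption syntax described in the v21.5.2 notes, here is a minimal stack-based parser for `(abc)`, `[abc]`, `(abc:1.23)`, and nesting. It assumes the AUTOMATIC1111 Web UI convention that `( )` multiplies a weight by 1.1 and `[ ]` divides it by 1.1, with explicit weights multiplying into any enclosing brackets. `parse_weighted` is a hypothetical name, and the parser merged in PR #336 may differ in details (escaped brackets, for example, are not handled here).

```python
import re
from typing import List, Tuple

# Tokens: an explicit ":weight)" closer, a single bracket, a run of plain text, or a stray colon.
TOKEN = re.compile(r":\s*([+-]?\d+(?:\.\d+)?)\s*\)|[()\[\]]|[^()\[\]:]+|:")


def parse_weighted(text: str) -> List[Tuple[str, float]]:
    """Return (chunk, weight) pairs for Web UI-style emphasis syntax (sketch)."""
    chunks: List[list] = []             # mutable [text, weight] pairs
    stack: List[Tuple[str, int]] = []   # (opening bracket, index of first chunk inside it)

    def scale(start: int, factor: float) -> None:
        for chunk in chunks[start:]:
            chunk[1] *= factor

    for m in TOKEN.finditer(text):
        tok, explicit = m.group(0), m.group(1)
        if explicit is not None and stack and stack[-1][0] == "(":
            scale(stack.pop()[1], float(explicit))  # (abc:1.23) applies the given weight
        elif tok in ("(", "["):
            stack.append((tok, len(chunks)))
        elif tok == ")" and stack and stack[-1][0] == "(":
            scale(stack.pop()[1], 1.1)              # (abc) boosts by 1.1
        elif tok == "]" and stack and stack[-1][0] == "[":
            scale(stack.pop()[1], 1 / 1.1)          # [abc] reduces by 1.1
        else:
            chunks.append([tok, 1.0])               # plain text or an unmatched closer
    for bracket, start in stack:                    # unclosed brackets still apply
        scale(start, 1.1 if bracket == "(" else 1 / 1.1)
    return [(t, w) for t, w in chunks]


if __name__ == "__main__":
    for chunk, weight in parse_weighted("a (b) [c] (d:1.5) ((e))"):
        print(repr(chunk), round(weight, 3))
```

With this sketch, `parse_weighted("a (b) [c] (d:1.5) ((e))")` gives `b` a weight of about 1.1, `c` about 0.91, `d` 1.5, and `e` about 1.21, while `a` stays at 1.0. Because shuffle and dropout operate on comma-separated tags, a group such as `(red hair, blue eyes:1.2)` would be split across two tags, which is why the notes advise keeping commas out of parentheses.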

|

XTI_hijack.py

ADDED


@@ -0,0 +1,209 @@
