Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +3 -0

- CITATION.cff +24 -0

- README.md +577 -0

- assets/4090_bs_1.png +0 -0

- assets/4090_bs_8.png +0 -0

- assets/a100_bs_1.png +0 -0

- assets/a100_bs_8.png +0 -0

- assets/collage_full.png +3 -0

- assets/collage_small.png +3 -0

- assets/glowing_256_1.png +0 -0

- assets/glowing_256_2.png +0 -0

- assets/glowing_256_3.png +0 -0

- assets/glowing_512_1.png +0 -0

- assets/glowing_512_2.png +0 -0

- assets/glowing_512_3.png +0 -0

- assets/image2image_256.png +0 -0

- assets/image2image_256_orig.png +0 -0

- assets/image2image_512.png +0 -0

- assets/image2image_512_orig.png +0 -0

- assets/inpainting_256.png +0 -0

- assets/inpainting_256_mask.png +0 -0

- assets/inpainting_256_orig.png +0 -0

- assets/inpainting_512.png +0 -0

- assets/inpainting_512_mask.png +0 -0

- assets/inpainting_512_orig.jpeg +0 -0

- assets/minecraft1.png +0 -0

- assets/minecraft2.png +0 -0

- assets/minecraft3.png +0 -0

- assets/noun1.png +0 -0

- assets/noun2.png +0 -0

- assets/noun3.png +0 -0

- assets/text2image_256.png +0 -0

- assets/text2image_512.png +0 -0

- model_index.json +24 -0

- scheduler/scheduler_config.json +6 -0

- text_encoder/config.json +24 -0

- text_encoder/model.fp16.safetensors +3 -0

- text_encoder/model.safetensors +3 -0

- tokenizer/merges.txt +0 -0

- tokenizer/special_tokens_map.json +30 -0

- tokenizer/tokenizer_config.json +38 -0

- tokenizer/vocab.json +0 -0

- training/A mushroom in [V] style.png +0 -0

- training/A woman working on a laptop in [V] style.jpg +3 -0

- training/generate_images.py +119 -0

- training/training.py +916 -0

- transformer/config.json +26 -0

- transformer/diffusion_pytorch_model.fp16.safetensors +3 -0

- transformer/diffusion_pytorch_model.safetensors +3 -0

- vqvae/config.json +39 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

training/A[[:space:]]woman[[:space:]]working[[:space:]]on[[:space:]]a[[:space:]]laptop[[:space:]]in[[:space:]]\[V\][[:space:]]style.jpg filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

assets/collage_small.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

assets/collage_full.png filter=lfs diff=lfs merge=lfs -text

|

CITATION.cff

ADDED

|

@@ -0,0 +1,24 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

cff-version: 1.2.0

|

| 2 |

+

title: 'Amused: An open MUSE model'

|

| 3 |

+

message: >-

|

| 4 |

+

If you use this software, please cite it using the

|

| 5 |

+

metadata from this file.

|

| 6 |

+

type: software

|

| 7 |

+

authors:

|

| 8 |

+

- given-names: Suraj

|

| 9 |

+

family-names: Patil

|

| 10 |

+

- given-names: Berman

|

| 11 |

+

family-names: William

|

| 12 |

+

- given-names: Patrick

|

| 13 |

+

family-names: von Platen

|

| 14 |

+

repository-code: 'https://github.com/huggingface/amused'

|

| 15 |

+

keywords:

|

| 16 |

+

- deep-learning

|

| 17 |

+

- pytorch

|

| 18 |

+

- image-generation

|

| 19 |

+

- text2image

|

| 20 |

+

- image2image

|

| 21 |

+

- language-modeling

|

| 22 |

+

- masked-language-modeling

|

| 23 |

+

license: Apache-2.0

|

| 24 |

+

version: 0.12.1

|

README.md

ADDED

|

@@ -0,0 +1,577 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# amused

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

<sup><sub>Images cherry-picked from 512 and 256 models. Images are degraded to load faster. See ./assets/collage_full.png for originals</sub></sup>

|

| 5 |

+

|

| 6 |

+

[[Paper - TODO]]()

|

| 7 |

+

|

| 8 |

+

| Model | Params |

|

| 9 |

+

|-------|--------|

|

| 10 |

+

| [amused-256](https://huggingface.co/huggingface/amused-256) | 603M |

|

| 11 |

+

| [amused-512](https://huggingface.co/huggingface/amused-512) | 608M |

|

| 12 |

+

|

| 13 |

+

Amused is a lightweight text to image model based off of the [muse](https://arxiv.org/pdf/2301.00704.pdf) architecture. Amused is particularly useful in applications that require a lightweight and fast model such as generating many images quickly at once.

|

| 14 |

+

|

| 15 |

+

Amused is a vqvae token based transformer that can generate an image in fewer forward passes than many diffusion models. In contrast with muse, it uses the smaller text encoder clip instead of t5. Due to its small parameter count and few forward pass generation process, amused can generate many images quickly. This benefit is seen particularly at larger batch sizes.

|

| 16 |

+

|

| 17 |

+

## 1. Usage

|

| 18 |

+

|

| 19 |

+

### Text to image

|

| 20 |

+

|

| 21 |

+

#### 256x256 model

|

| 22 |

+

|

| 23 |

+

```python

|

| 24 |

+

import torch

|

| 25 |

+

from diffusers import AmusedPipeline

|

| 26 |

+

|

| 27 |

+

pipe = AmusedPipeline.from_pretrained(

|

| 28 |

+

"huggingface/amused-256", variant="fp16", torch_dtype=torch.float16

|

| 29 |

+

)

|

| 30 |

+

pipe.vqvae.to(torch.float32) # vqvae is producing nans in fp16

|

| 31 |

+

pipe = pipe.to("cuda")

|

| 32 |

+

|

| 33 |

+

prompt = "cowboy"

|

| 34 |

+

image = pipe(prompt, generator=torch.Generator('cuda').manual_seed(8)).images[0]

|

| 35 |

+

image.save('text2image_256.png')

|

| 36 |

+

```

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

#### 512x512 model

|

| 41 |

+

|

| 42 |

+

```python

|

| 43 |

+

import torch

|

| 44 |

+

from diffusers import AmusedPipeline

|

| 45 |

+

|

| 46 |

+

pipe = AmusedPipeline.from_pretrained(

|

| 47 |

+

"huggingface/amused-512", variant="fp16", torch_dtype=torch.float16

|

| 48 |

+

)

|

| 49 |

+

pipe.vqvae.to(torch.float32) # vqvae is producing nans n fp16

|

| 50 |

+

pipe = pipe.to("cuda")

|

| 51 |

+

|

| 52 |

+

prompt = "summer in the mountains"

|

| 53 |

+

image = pipe(prompt, generator=torch.Generator('cuda').manual_seed(2)).images[0]

|

| 54 |

+

image.save('text2image_512.png')

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

### Image to image

|

| 60 |

+

|

| 61 |

+

#### 256x256 model

|

| 62 |

+

|

| 63 |

+

```python

|

| 64 |

+

import torch

|

| 65 |

+

from diffusers import AmusedImg2ImgPipeline

|

| 66 |

+

from diffusers.utils import load_image

|

| 67 |

+

|

| 68 |

+

pipe = AmusedImg2ImgPipeline.from_pretrained(

|

| 69 |

+

"huggingface/amused-256", variant="fp16", torch_dtype=torch.float16

|

| 70 |

+

)

|

| 71 |

+

pipe.vqvae.to(torch.float32) # vqvae is producing nans in fp16

|

| 72 |

+

pipe = pipe.to("cuda")

|

| 73 |

+

|

| 74 |

+

prompt = "apple watercolor"

|

| 75 |

+

input_image = (

|

| 76 |

+

load_image(

|

| 77 |

+

"https://raw.githubusercontent.com/huggingface/amused/main/assets/image2image_256_orig.png"

|

| 78 |

+

)

|

| 79 |

+

.resize((256, 256))

|

| 80 |

+

.convert("RGB")

|

| 81 |

+

)

|

| 82 |

+

|

| 83 |

+

image = pipe(prompt, input_image, strength=0.7, generator=torch.Generator('cuda').manual_seed(3)).images[0]

|

| 84 |

+

image.save('image2image_256.png')

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

#### 512x512 model

|

| 90 |

+

|

| 91 |

+

```python

|

| 92 |

+

import torch

|

| 93 |

+

from diffusers import AmusedImg2ImgPipeline

|

| 94 |

+

from diffusers.utils import load_image

|

| 95 |

+

|

| 96 |

+

pipe = AmusedImg2ImgPipeline.from_pretrained(

|

| 97 |

+

"huggingface/amused-512", variant="fp16", torch_dtype=torch.float16

|

| 98 |

+

)

|

| 99 |

+

pipe.vqvae.to(torch.float32) # vqvae is producing nans in fp16

|

| 100 |

+

pipe = pipe.to("cuda")

|

| 101 |

+

|

| 102 |

+

prompt = "winter mountains"

|

| 103 |

+

input_image = (

|

| 104 |

+

load_image(

|

| 105 |

+

"https://raw.githubusercontent.com/huggingface/amused/main/assets/image2image_512_orig.png"

|

| 106 |

+

)

|

| 107 |

+

.resize((512, 512))

|

| 108 |

+

.convert("RGB")

|

| 109 |

+

)

|

| 110 |

+

|

| 111 |

+

image = pipe(prompt, input_image, generator=torch.Generator('cuda').manual_seed(15)).images[0]

|

| 112 |

+

image.save('image2image_512.png')

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

### Inpainting

|

| 118 |

+

|

| 119 |

+

#### 256x256 model

|

| 120 |

+

|

| 121 |

+

```python

|

| 122 |

+

import torch

|

| 123 |

+

from diffusers import AmusedInpaintPipeline

|

| 124 |

+

from diffusers.utils import load_image

|

| 125 |

+

from PIL import Image

|

| 126 |

+

|

| 127 |

+

pipe = AmusedInpaintPipeline.from_pretrained(

|

| 128 |

+

"huggingface/amused-256", variant="fp16", torch_dtype=torch.float16

|

| 129 |

+

)

|

| 130 |

+

pipe.vqvae.to(torch.float32) # vqvae is producing nans in fp16

|

| 131 |

+

pipe = pipe.to("cuda")

|

| 132 |

+

|

| 133 |

+

prompt = "a man with glasses"

|

| 134 |

+

input_image = (

|

| 135 |

+

load_image(

|

| 136 |

+

"https://raw.githubusercontent.com/huggingface/amused/main/assets/inpainting_256_orig.png"

|

| 137 |

+

)

|

| 138 |

+

.resize((256, 256))

|

| 139 |

+

.convert("RGB")

|

| 140 |

+

)

|

| 141 |

+

mask = (

|

| 142 |

+

load_image(

|

| 143 |

+

"https://raw.githubusercontent.com/huggingface/amused/main/assets/inpainting_256_mask.png"

|

| 144 |

+

)

|

| 145 |

+

.resize((256, 256))

|

| 146 |

+

.convert("L")

|

| 147 |

+

)

|

| 148 |

+

|

| 149 |

+

for seed in range(20):

|

| 150 |

+

image = pipe(prompt, input_image, mask, generator=torch.Generator('cuda').manual_seed(seed)).images[0]

|

| 151 |

+

image.save(f'inpainting_256_{seed}.png')

|

| 152 |

+

|

| 153 |

+

```

|

| 154 |

+

|

| 155 |

+

|

| 156 |

+

|

| 157 |

+

#### 512x512 model

|

| 158 |

+

|

| 159 |

+

```python

|

| 160 |

+

import torch

|

| 161 |

+

from diffusers import AmusedInpaintPipeline

|

| 162 |

+

from diffusers.utils import load_image

|

| 163 |

+

|

| 164 |

+

pipe = AmusedInpaintPipeline.from_pretrained(

|

| 165 |

+

"huggingface/amused-512", variant="fp16", torch_dtype=torch.float16

|

| 166 |

+

)

|

| 167 |

+

pipe.vqvae.to(torch.float32) # vqvae is producing nans in fp16

|

| 168 |

+

pipe = pipe.to("cuda")

|

| 169 |

+

|

| 170 |

+

prompt = "fall mountains"

|

| 171 |

+

input_image = (

|

| 172 |

+

load_image(

|

| 173 |

+

"https://raw.githubusercontent.com/huggingface/amused/main/assets/inpainting_512_orig.jpeg"

|

| 174 |

+

)

|

| 175 |

+

.resize((512, 512))

|

| 176 |

+

.convert("RGB")

|

| 177 |

+

)

|

| 178 |

+

mask = (

|

| 179 |

+

load_image(

|

| 180 |

+

"https://raw.githubusercontent.com/huggingface/amused/main/assets/inpainting_512_mask.png"

|

| 181 |

+

)

|

| 182 |

+

.resize((512, 512))

|

| 183 |

+

.convert("L")

|

| 184 |

+

)

|

| 185 |

+

image = pipe(prompt, input_image, mask, generator=torch.Generator('cuda').manual_seed(0)).images[0]

|

| 186 |

+

image.save('inpainting_512.png')

|

| 187 |

+

```

|

| 188 |

+

|

| 189 |

+

|

| 190 |

+

|

| 191 |

+

|

| 192 |

+

|

| 193 |

+

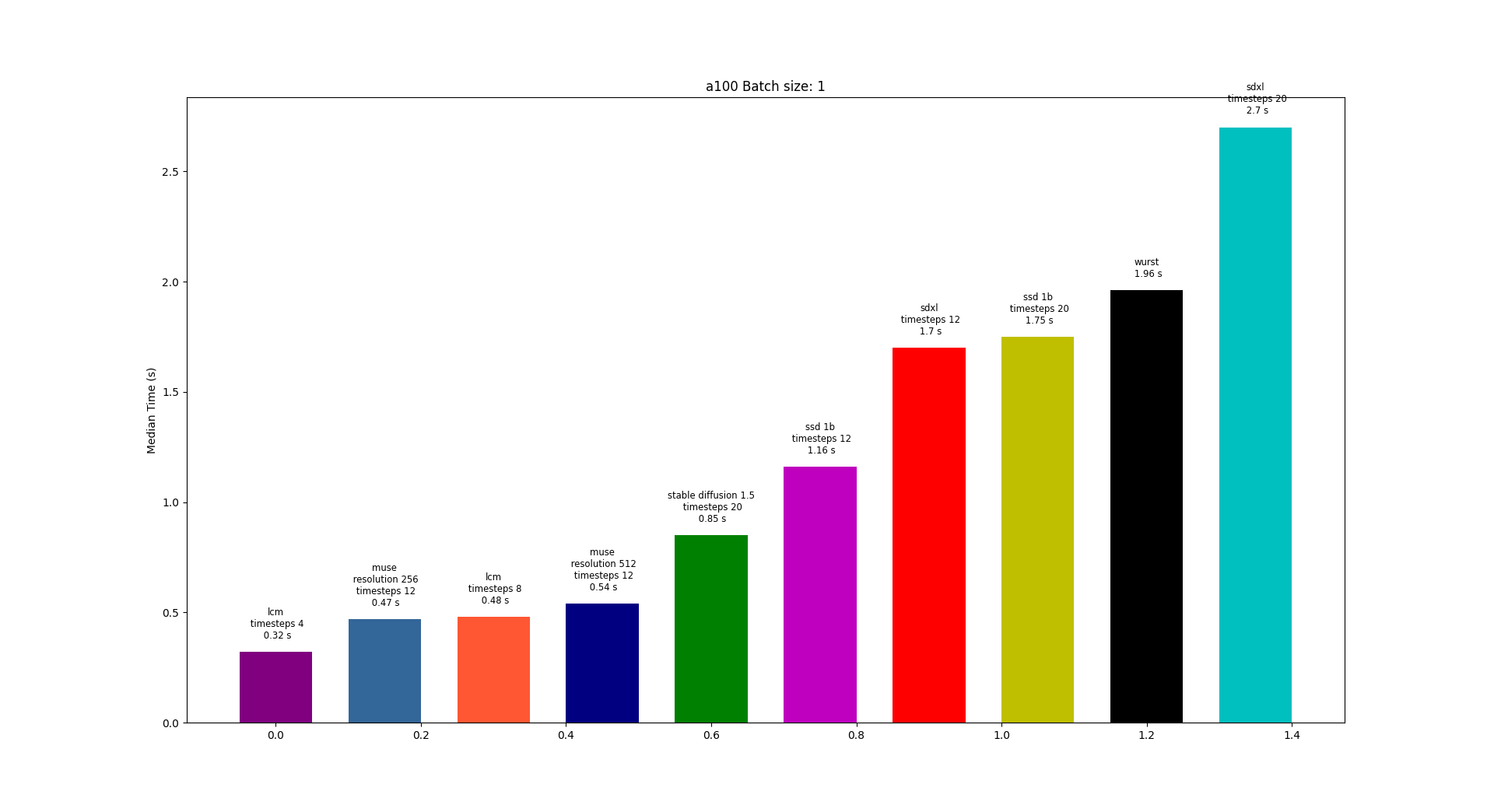

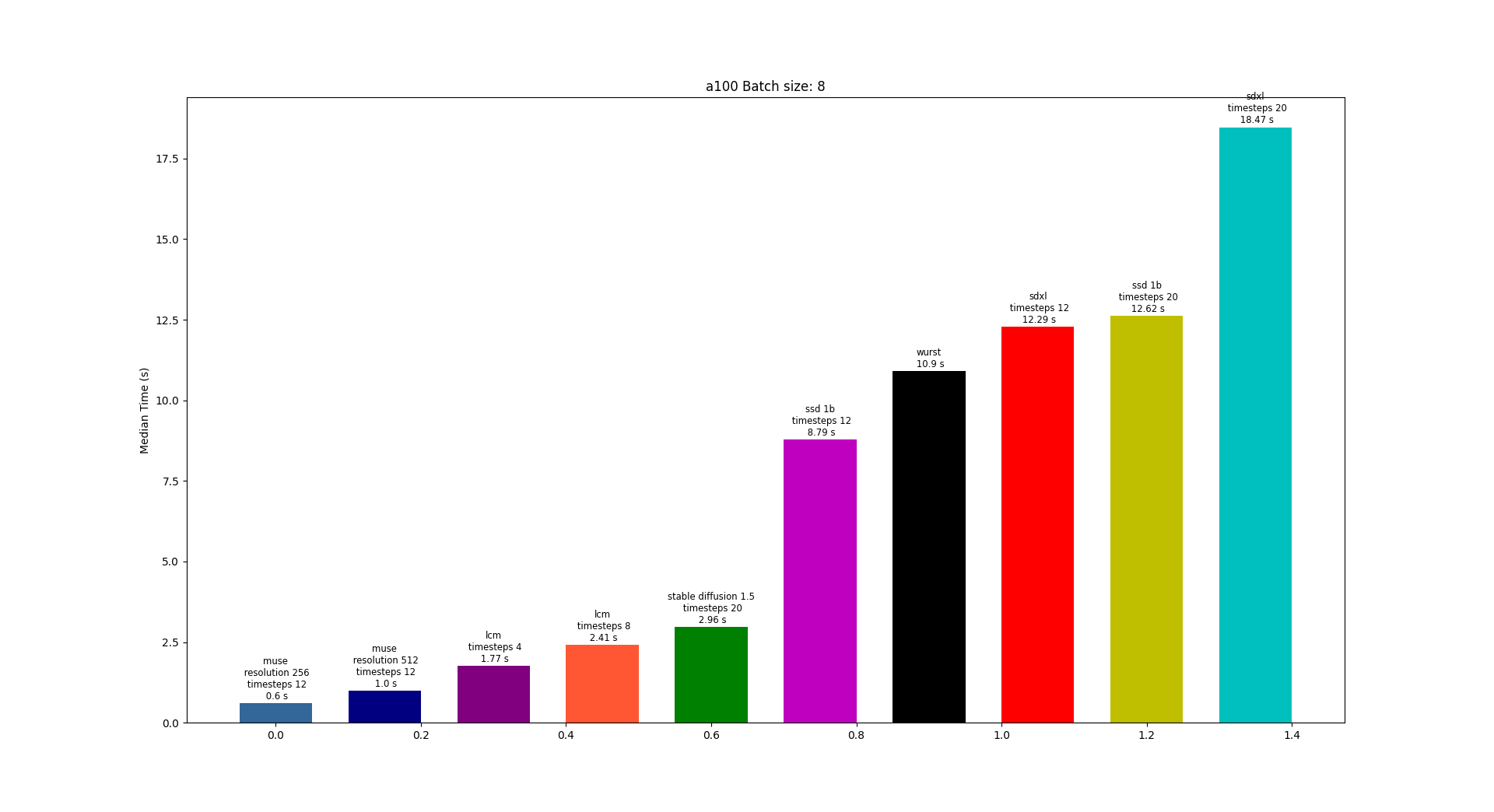

## 2. Performance

|

| 194 |

+

|

| 195 |

+

Amused inherits performance benefits from original [muse](https://arxiv.org/pdf/2301.00704.pdf).

|

| 196 |

+

|

| 197 |

+

1. Parallel decoding: The model follows a denoising schedule that aims to unmask some percent of tokens at each denoising step. At each step, all masked tokens are predicted, and some number of tokens that the network is most confident about are unmasked. Because multiple tokens are predicted at once, we can generate a full 256x256 or 512x512 image in around 12 steps. In comparison, an autoregressive model must predict a single token at a time. Note that a 256x256 image with the 16x downsampled VAE that muse uses will have 256 tokens.

|

| 198 |

+

|

| 199 |

+

2. Fewer sampling steps: Compared to many diffusion models, muse requires fewer samples.

|

| 200 |

+

|

| 201 |

+

Additionally, amused uses the smaller CLIP as its text encoder instead of T5 compared to muse. Amused is also smaller with ~600M params compared the largest 3B param muse model. Note that being smaller, amused produces comparably lower quality results.

|

| 202 |

+

|

| 203 |

+

|

| 204 |

+

|

| 205 |

+

|

| 206 |

+

|

| 207 |

+

|

| 208 |

+

### Muse performance knobs

|

| 209 |

+

|

| 210 |

+

| | Uncompiled Transformer + regular attention | Uncompiled Transformer + flash attention (ms) | Compiled Transformer (ms) | Speed Up |

|

| 211 |

+

|---------------------|--------------------------------------------|-------------------------|----------------------|----------|

|

| 212 |

+

| 256 Batch Size 1 | 594.7 | 507.7 | 212.1 | 58% |

|

| 213 |

+

| 512 Batch Size 1 | 637 | 547 | 249.9 | 54% |

|

| 214 |

+

| 256 Batch Size 8 | 719 | 628.6 | 427.8 | 32% |

|

| 215 |

+

| 512 Batch Size 8 | 1000 | 917.7 | 703.6 | 23% |

|

| 216 |

+

|

| 217 |

+

Flash attention is enabled by default in the diffusers codebase through torch `F.scaled_dot_product_attention`

|

| 218 |

+

|

| 219 |

+

### torch.compile

|

| 220 |

+

To use torch.compile, simply wrap the transformer in torch.compile i.e.

|

| 221 |

+

|

| 222 |

+

```python

|

| 223 |

+

pipe.transformer = torch.compile(pipe.transformer)

|

| 224 |

+

```

|

| 225 |

+

|

| 226 |

+

Full snippet:

|

| 227 |

+

|

| 228 |

+

```python

|

| 229 |

+

import torch

|

| 230 |

+

from diffusers import AmusedPipeline

|

| 231 |

+

|

| 232 |

+

pipe = AmusedPipeline.from_pretrained(

|

| 233 |

+

"huggingface/amused-256", variant="fp16", torch_dtype=torch.float16

|

| 234 |

+

)

|

| 235 |

+

|

| 236 |

+

# HERE use torch.compile

|

| 237 |

+

pipe.transformer = torch.compile(pipe.transformer)

|

| 238 |

+

|

| 239 |

+

pipe.vqvae.to(torch.float32) # vqvae is producing nans in fp16

|

| 240 |

+

pipe = pipe.to("cuda")

|

| 241 |

+

|

| 242 |

+

prompt = "cowboy"

|

| 243 |

+

image = pipe(prompt, generator=torch.Generator('cuda').manual_seed(8)).images[0]

|

| 244 |

+

image.save('text2image_256.png')

|

| 245 |

+

```

|

| 246 |

+

|

| 247 |

+

## 3. Training

|

| 248 |

+

|

| 249 |

+

Amused can be finetuned on simple datasets relatively cheaply and quickly. Using 8bit optimizers, lora, and gradient accumulation, amused can be finetuned with as little as 5.5 GB. Here are a set of examples for finetuning amused on some relatively simple datasets. These training recipies are aggressively oriented towards minimal resources and fast verification -- i.e. the batch sizes are quite low and the learning rates are quite high. For optimal quality, you will probably want to increase the batch sizes and decrease learning rates.

|

| 250 |

+

|

| 251 |

+

All training examples use fp16 mixed precision and gradient checkpointing. We don't show 8 bit adam + lora as its about the same memory use as just using lora (bitsandbytes uses full precision optimizer states for weights below a minimum size).

|

| 252 |

+

|

| 253 |

+

### Finetuning the 256 checkpoint

|

| 254 |

+

|

| 255 |

+

These examples finetune on this [nouns](https://huggingface.co/datasets/m1guelpf/nouns) dataset.

|

| 256 |

+

|

| 257 |

+

Example results:

|

| 258 |

+

|

| 259 |

+

|

| 260 |

+

|

| 261 |

+

#### Full finetuning

|

| 262 |

+

|

| 263 |

+

Batch size: 8, Learning rate: 1e-4, Gives decent results in 750-1000 steps

|

| 264 |

+

|

| 265 |

+

| Batch Size | Gradient Accumulation Steps | Effective Total Batch Size | Memory Used |

|

| 266 |

+

|------------|-----------------------------|------------------|-------------|

|

| 267 |

+

| 8 | 1 | 8 | 19.7 GB |

|

| 268 |

+

| 4 | 2 | 8 | 18.3 GB |

|

| 269 |

+

| 1 | 8 | 8 | 17.9 GB |

|

| 270 |

+

|

| 271 |

+

```sh

|

| 272 |

+

accelerate launch training/training.py \

|

| 273 |

+

--output_dir <output path> \

|

| 274 |

+

--train_batch_size <batch size> \

|

| 275 |

+

--gradient_accumulation_steps <gradient accumulation steps> \

|

| 276 |

+

--learning_rate 1e-4 \

|

| 277 |

+

--pretrained_model_name_or_path huggingface/amused-256 \

|

| 278 |

+

--instance_data_dataset 'm1guelpf/nouns' \

|

| 279 |

+

--image_key image \

|

| 280 |

+

--prompt_key text \

|

| 281 |

+

--resolution 256 \

|

| 282 |

+

--mixed_precision fp16 \

|

| 283 |

+

--lr_scheduler constant \

|

| 284 |

+

--validation_prompts \

|

| 285 |

+

'a pixel art character with square red glasses, a baseball-shaped head and a orange-colored body on a dark background' \

|

| 286 |

+

'a pixel art character with square orange glasses, a lips-shaped head and a red-colored body on a light background' \

|

| 287 |

+

'a pixel art character with square blue glasses, a microwave-shaped head and a purple-colored body on a sunny background' \

|

| 288 |

+

'a pixel art character with square red glasses, a baseball-shaped head and a blue-colored body on an orange background' \

|

| 289 |

+

'a pixel art character with square red glasses' \

|

| 290 |

+

'a pixel art character' \

|

| 291 |

+

'square red glasses on a pixel art character' \

|

| 292 |

+

'square red glasses on a pixel art character with a baseball-shaped head' \

|

| 293 |

+

--max_train_steps 10000 \

|

| 294 |

+

--checkpointing_steps 500 \

|

| 295 |

+

--validation_steps 250 \

|

| 296 |

+

--gradient_checkpointing

|

| 297 |

+

```

|

| 298 |

+

|

| 299 |

+

#### Full finetuning + 8 bit adam

|

| 300 |

+

|

| 301 |

+

Note that this training config keeps the batch size low and the learning rate high to get results fast with low resources. However, due to 8 bit adam, it will diverge eventually. If you want to train for longer, you will have to up the batch size and lower the learning rate.

|

| 302 |

+

|

| 303 |

+

Batch size: 16, Learning rate: 2e-5, Gives decent results in ~750 steps

|

| 304 |

+

|

| 305 |

+

| Batch Size | Gradient Accumulation Steps | Effective Total Batch Size | Memory Used |

|

| 306 |

+

|------------|-----------------------------|------------------|-------------|

|

| 307 |

+

| 16 | 1 | 16 | 20.1 GB |

|

| 308 |

+

| 8 | 2 | 16 | 15.6 GB |

|

| 309 |

+

| 1 | 16 | 16 | 10.7 GB |

|

| 310 |

+

|

| 311 |

+

```sh

|

| 312 |

+

accelerate launch training/training.py \

|

| 313 |

+

--output_dir <output path> \

|

| 314 |

+

--train_batch_size <batch size> \

|

| 315 |

+

--gradient_accumulation_steps <gradient accumulation steps> \

|

| 316 |

+

--learning_rate 2e-5 \

|

| 317 |

+

--use_8bit_adam \

|

| 318 |

+

--pretrained_model_name_or_path huggingface/amused-256 \

|

| 319 |

+

--instance_data_dataset 'm1guelpf/nouns' \

|

| 320 |

+

--image_key image \

|

| 321 |

+

--prompt_key text \

|

| 322 |

+

--resolution 256 \

|

| 323 |

+

--mixed_precision fp16 \

|

| 324 |

+

--lr_scheduler constant \

|

| 325 |

+

--validation_prompts \

|

| 326 |

+

'a pixel art character with square red glasses, a baseball-shaped head and a orange-colored body on a dark background' \

|

| 327 |

+

'a pixel art character with square orange glasses, a lips-shaped head and a red-colored body on a light background' \

|

| 328 |

+

'a pixel art character with square blue glasses, a microwave-shaped head and a purple-colored body on a sunny background' \

|

| 329 |

+

'a pixel art character with square red glasses, a baseball-shaped head and a blue-colored body on an orange background' \

|

| 330 |

+

'a pixel art character with square red glasses' \

|

| 331 |

+

'a pixel art character' \

|

| 332 |

+

'square red glasses on a pixel art character' \

|

| 333 |

+

'square red glasses on a pixel art character with a baseball-shaped head' \

|

| 334 |

+

--max_train_steps 10000 \

|

| 335 |

+

--checkpointing_steps 500 \

|

| 336 |

+

--validation_steps 250 \

|

| 337 |

+

--gradient_checkpointing

|

| 338 |

+

```

|

| 339 |

+

|

| 340 |

+

#### Full finetuning + lora

|

| 341 |

+

|

| 342 |

+

Batch size: 16, Learning rate: 8e-4, Gives decent results in 1000-1250 steps

|

| 343 |

+

|

| 344 |

+

| Batch Size | Gradient Accumulation Steps | Effective Total Batch Size | Memory Used |

|

| 345 |

+

|------------|-----------------------------|------------------|-------------|

|

| 346 |

+

| 16 | 1 | 16 | 14.1 GB |

|

| 347 |

+

| 8 | 2 | 16 | 10.1 GB |

|

| 348 |

+

| 1 | 16 | 16 | 6.5 GB |

|

| 349 |

+

|

| 350 |

+

```sh

|

| 351 |

+

accelerate launch training/training.py \

|

| 352 |

+

--output_dir <output path> \

|

| 353 |

+

--train_batch_size <batch size> \

|

| 354 |

+

--gradient_accumulation_steps <gradient accumulation steps> \

|

| 355 |

+

--learning_rate 8e-4 \

|

| 356 |

+

--use_lora \

|

| 357 |

+

--pretrained_model_name_or_path huggingface/amused-256 \

|

| 358 |

+

--instance_data_dataset 'm1guelpf/nouns' \

|

| 359 |

+

--image_key image \

|

| 360 |

+

--prompt_key text \

|

| 361 |

+

--resolution 256 \

|

| 362 |

+

--mixed_precision fp16 \

|

| 363 |

+

--lr_scheduler constant \

|

| 364 |

+

--validation_prompts \

|

| 365 |

+

'a pixel art character with square red glasses, a baseball-shaped head and a orange-colored body on a dark background' \

|

| 366 |

+

'a pixel art character with square orange glasses, a lips-shaped head and a red-colored body on a light background' \

|

| 367 |

+

'a pixel art character with square blue glasses, a microwave-shaped head and a purple-colored body on a sunny background' \

|

| 368 |

+

'a pixel art character with square red glasses, a baseball-shaped head and a blue-colored body on an orange background' \

|

| 369 |

+

'a pixel art character with square red glasses' \

|

| 370 |

+

'a pixel art character' \

|

| 371 |

+

'square red glasses on a pixel art character' \

|

| 372 |

+

'square red glasses on a pixel art character with a baseball-shaped head' \

|

| 373 |

+

--max_train_steps 10000 \

|

| 374 |

+

--checkpointing_steps 500 \

|

| 375 |

+

--validation_steps 250 \

|

| 376 |

+

--gradient_checkpointing

|

| 377 |

+

```

|

| 378 |

+

|

| 379 |

+

### Finetuning the 512 checkpoint

|

| 380 |

+

|

| 381 |

+

These examples finetune on this [minecraft](https://huggingface.co/monadical-labs/minecraft-preview) dataset.

|

| 382 |

+

|

| 383 |

+

Example results:

|

| 384 |

+

|

| 385 |

+

|

| 386 |

+

|

| 387 |

+

#### Full finetuning

|

| 388 |

+

|

| 389 |

+

Batch size: 8, Learning rate: 8e-5, Gives decent results in 500-1000 steps

|

| 390 |

+

|

| 391 |

+

| Batch Size | Gradient Accumulation Steps | Effective Total Batch Size | Memory Used |

|

| 392 |

+

|------------|-----------------------------|------------------|-------------|

|

| 393 |

+

| 8 | 1 | 8 | 24.2 GB |

|

| 394 |

+

| 4 | 2 | 8 | 19.7 GB |

|

| 395 |

+

| 1 | 8 | 8 | 16.99 GB |

|

| 396 |

+

|

| 397 |

+

```sh

|

| 398 |

+

accelerate launch training/training.py \

|

| 399 |

+

--output_dir <output path> \

|

| 400 |

+

--train_batch_size <batch size> \

|

| 401 |

+

--gradient_accumulation_steps <gradient accumulation steps> \

|

| 402 |

+

--learning_rate 8e-5 \

|

| 403 |

+

--pretrained_model_name_or_path huggingface/amused-512 \

|

| 404 |

+

--instance_data_dataset 'monadical-labs/minecraft-preview' \

|

| 405 |

+

--prompt_prefix 'minecraft ' \

|

| 406 |

+

--image_key image \

|

| 407 |

+

--prompt_key text \

|

| 408 |

+

--resolution 512 \

|

| 409 |

+

--mixed_precision fp16 \

|

| 410 |

+

--lr_scheduler constant \

|

| 411 |

+

--validation_prompts \

|

| 412 |

+

'minecraft Avatar' \

|

| 413 |

+

'minecraft character' \

|

| 414 |

+

'minecraft' \

|

| 415 |

+

'minecraft president' \

|

| 416 |

+

'minecraft pig' \

|

| 417 |

+

--max_train_steps 10000 \

|

| 418 |

+

--checkpointing_steps 500 \

|

| 419 |

+

--validation_steps 250 \

|

| 420 |

+

--gradient_checkpointing

|

| 421 |

+

```

|

| 422 |

+

|

| 423 |

+

#### Full finetuning + 8 bit adam

|

| 424 |

+

|

| 425 |

+

Batch size: 8, Learning rate: 5e-6, Gives decent results in 500-1000 steps

|

| 426 |

+

|

| 427 |

+

| Batch Size | Gradient Accumulation Steps | Effective Total Batch Size | Memory Used |

|

| 428 |

+

|------------|-----------------------------|------------------|-------------|

|

| 429 |

+

| 8 | 1 | 8 | 21.2 GB |

|

| 430 |

+

| 4 | 2 | 8 | 13.3 GB |

|

| 431 |

+

| 1 | 8 | 8 | 9.9 GB |

|

| 432 |

+

|

| 433 |

+

```sh

|

| 434 |

+

accelerate launch training/training.py \

|

| 435 |

+

--output_dir <output path> \

|

| 436 |

+

--train_batch_size <batch size> \

|

| 437 |

+

--gradient_accumulation_steps <gradient accumulation steps> \

|

| 438 |

+

--learning_rate 5e-6 \

|

| 439 |

+

--pretrained_model_name_or_path huggingface/amused-512 \

|

| 440 |

+

--instance_data_dataset 'monadical-labs/minecraft-preview' \

|

| 441 |

+

--prompt_prefix 'minecraft ' \

|

| 442 |

+

--image_key image \

|

| 443 |

+

--prompt_key text \

|

| 444 |

+

--resolution 512 \

|

| 445 |

+

--mixed_precision fp16 \

|

| 446 |

+

--lr_scheduler constant \

|

| 447 |

+

--validation_prompts \

|

| 448 |

+

'minecraft Avatar' \

|

| 449 |

+

'minecraft character' \

|

| 450 |

+

'minecraft' \

|

| 451 |

+

'minecraft president' \

|

| 452 |

+

'minecraft pig' \

|

| 453 |

+

--max_train_steps 10000 \

|

| 454 |

+

--checkpointing_steps 500 \

|

| 455 |

+

--validation_steps 250 \

|

| 456 |

+

--gradient_checkpointing

|

| 457 |

+

```

|

| 458 |

+

|

| 459 |

+

#### Full finetuning + lora

|

| 460 |

+

|

| 461 |

+

Batch size: 8, Learning rate: 1e-4, Gives decent results in 500-1000 steps

|

| 462 |

+

|

| 463 |

+

| Batch Size | Gradient Accumulation Steps | Effective Total Batch Size | Memory Used |

|

| 464 |

+

|------------|-----------------------------|------------------|-------------|

|

| 465 |

+

| 8 | 1 | 8 | 12.7 GB |

|

| 466 |

+

| 4 | 2 | 8 | 9.0 GB |

|

| 467 |

+

| 1 | 8 | 8 | 5.6 GB |

|

| 468 |

+

|

| 469 |

+

```sh

|

| 470 |

+

accelerate launch training/training.py \

|

| 471 |

+

--output_dir <output path> \

|

| 472 |

+

--train_batch_size <batch size> \

|

| 473 |

+

--gradient_accumulation_steps <gradient accumulation steps> \

|

| 474 |

+

--learning_rate 1e-4 \

|

| 475 |

+

--pretrained_model_name_or_path huggingface/amused-512 \

|

| 476 |

+

--instance_data_dataset 'monadical-labs/minecraft-preview' \

|

| 477 |

+

--prompt_prefix 'minecraft ' \

|

| 478 |

+

--image_key image \

|

| 479 |

+

--prompt_key text \

|

| 480 |

+

--resolution 512 \

|

| 481 |

+

--mixed_precision fp16 \

|

| 482 |

+

--lr_scheduler constant \

|

| 483 |

+

--validation_prompts \

|

| 484 |

+

'minecraft Avatar' \

|

| 485 |

+

'minecraft character' \

|

| 486 |

+

'minecraft' \

|

| 487 |

+

'minecraft president' \

|

| 488 |

+

'minecraft pig' \

|

| 489 |

+

--max_train_steps 10000 \

|

| 490 |

+

--checkpointing_steps 500 \

|

| 491 |

+

--validation_steps 250 \

|

| 492 |

+

--gradient_checkpointing

|

| 493 |

+

```

|

| 494 |

+

|

| 495 |

+

### Styledrop

|

| 496 |

+

|

| 497 |

+

[Styledrop](https://arxiv.org/abs/2306.00983) is an efficient finetuning method for learning a new style from a small number of images. It has an optional first stage to generate human picked additional training samples. The additional training samples can be used to augment the initial images. Our examples exclude the optional additional image selection stage and instead we just finetune on a single image.

|

| 498 |

+

|

| 499 |

+

This is our example style image:

|

| 500 |

+

|

| 501 |

+

|

| 502 |

+

#### 256

|

| 503 |

+

|

| 504 |

+

Example results:

|

| 505 |

+

|

| 506 |

+

|

| 507 |

+

|

| 508 |

+

Learning rate: 4e-4, Gives decent results in 1500-2000 steps

|

| 509 |

+

|

| 510 |

+

```sh

|

| 511 |

+

accelerate launch ./training/training.py \

|

| 512 |

+

--output_dir <output path> \

|

| 513 |

+

--mixed_precision fp16 \

|

| 514 |

+

--report_to wandb \

|

| 515 |

+

--use_lora \

|

| 516 |

+

--pretrained_model_name_or_path huggingface/amused-256 \

|

| 517 |

+

--train_batch_size 1 \

|

| 518 |

+

--lr_scheduler constant \

|

| 519 |

+

--learning_rate 4e-4 \

|

| 520 |

+

--validation_prompts \

|

| 521 |

+

'A chihuahua walking on the street in [V] style' \

|

| 522 |

+

'A banana on the table in [V] style' \

|

| 523 |

+

'A church on the street in [V] style' \

|

| 524 |

+

'A tabby cat walking in the forest in [V] style' \

|

| 525 |

+

--instance_data_image './training/A mushroom in [V] style.png' \

|

| 526 |

+

--max_train_steps 10000 \

|

| 527 |

+

--checkpointing_steps 500 \

|

| 528 |

+

--validation_steps 100 \

|

| 529 |

+

--resolution 256

|

| 530 |

+

```

|

| 531 |

+

|

| 532 |

+

#### 512

|

| 533 |

+

|

| 534 |

+

Learning rate: 1e-3, Lora alpha 1, Gives decent results in 1500-2000 steps

|

| 535 |

+

|

| 536 |

+

Example results:

|

| 537 |

+

|

| 538 |

+

|

| 539 |

+

|

| 540 |

+

```

|

| 541 |

+

accelerate launch ./training/training.py \

|

| 542 |

+

--output_dir ../styledrop \

|

| 543 |

+

--mixed_precision fp16 \

|

| 544 |

+

--report_to wandb \

|

| 545 |

+

--use_lora \

|

| 546 |

+

--pretrained_model_name_or_path huggingface/amused-512 \

|

| 547 |

+

--train_batch_size 1 \

|

| 548 |

+

--lr_scheduler constant \

|

| 549 |

+

--learning_rate 1e-3 \

|

| 550 |

+

--validation_prompts \

|

| 551 |

+

'A chihuahua walking on the street in [V] style' \

|

| 552 |

+

'A banana on the table in [V] style' \

|

| 553 |

+

'A church on the street in [V] style' \

|

| 554 |

+

'A tabby cat walking in the forest in [V] style' \

|

| 555 |

+

--instance_data_image './training/A mushroom in [V] style.png' \

|

| 556 |

+

--max_train_steps 100000 \

|

| 557 |

+

--checkpointing_steps 500 \

|

| 558 |

+

--validation_steps 100 \

|

| 559 |

+

--resolution 512 \

|

| 560 |

+

--lora_alpha 1

|

| 561 |

+

```

|

| 562 |

+

|

| 563 |

+

## 4. Acknowledgements

|

| 564 |

+

|

| 565 |

+

TODO

|

| 566 |

+

|

| 567 |

+

## 5. Citation

|

| 568 |

+

```

|

| 569 |

+

@misc{patil-etal-2023-amused,

|

| 570 |

+

author = {Suraj Patil and William Berman and Patrick von Platen},

|

| 571 |

+

title = {Amused: An open MUSE model},

|

| 572 |

+

year = {2023},

|

| 573 |

+

publisher = {GitHub},

|

| 574 |

+

journal = {GitHub repository},

|

| 575 |

+

howpublished = {\url{https://github.com/huggingface/amused}}

|

| 576 |

+

}

|

| 577 |

+

```

|

assets/4090_bs_1.png

ADDED

|

assets/4090_bs_8.png

ADDED

|

assets/a100_bs_1.png

ADDED

|

assets/a100_bs_8.png

ADDED

|

assets/collage_full.png

ADDED

|

Git LFS Details

|

assets/collage_small.png

ADDED

|

Git LFS Details

|

assets/glowing_256_1.png

ADDED

|

assets/glowing_256_2.png

ADDED

|

assets/glowing_256_3.png

ADDED

|

assets/glowing_512_1.png

ADDED

|

assets/glowing_512_2.png

ADDED

|

assets/glowing_512_3.png

ADDED

|

assets/image2image_256.png

ADDED

|

assets/image2image_256_orig.png

ADDED

|

assets/image2image_512.png

ADDED

|

assets/image2image_512_orig.png

ADDED

|

assets/inpainting_256.png

ADDED

|

assets/inpainting_256_mask.png

ADDED

|

assets/inpainting_256_orig.png

ADDED

|

assets/inpainting_512.png

ADDED

|

assets/inpainting_512_mask.png

ADDED

|

assets/inpainting_512_orig.jpeg

ADDED

|

assets/minecraft1.png

ADDED

|

assets/minecraft2.png

ADDED

|

assets/minecraft3.png

ADDED

|

assets/noun1.png

ADDED

|

assets/noun2.png

ADDED

|

assets/noun3.png

ADDED

|

assets/text2image_256.png

ADDED

|

assets/text2image_512.png

ADDED

|

model_index.json

ADDED

|

@@ -0,0 +1,24 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_class_name": "AmusedPipeline",

|

| 3 |

+

"_diffusers_version": "0.25.0.dev0",

|

| 4 |

+

"scheduler": [

|

| 5 |

+

"diffusers",

|

| 6 |

+

"AmusedScheduler"

|

| 7 |

+

],

|

| 8 |

+

"text_encoder": [

|

| 9 |

+

"transformers",

|

| 10 |

+

"CLIPTextModelWithProjection"

|

| 11 |

+

],

|

| 12 |

+

"tokenizer": [

|

| 13 |

+

"transformers",

|

| 14 |

+

"CLIPTokenizer"

|

| 15 |

+

],

|

| 16 |

+

"transformer": [

|

| 17 |

+

"diffusers",

|

| 18 |

+

"UVit2DModel"

|

| 19 |

+

],

|

| 20 |

+

"vqvae": [

|

| 21 |

+

"diffusers",

|

| 22 |

+

"VQModel"

|

| 23 |

+

]

|

| 24 |

+

}

|

scheduler/scheduler_config.json

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_class_name": "AmusedScheduler",

|

| 3 |

+

"_diffusers_version": "0.25.0.dev0",

|

| 4 |

+

"mask_token_id": 8255,

|

| 5 |

+

"masking_schedule": "cosine"

|

| 6 |

+

}

|

text_encoder/config.json

ADDED

|

@@ -0,0 +1,24 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"architectures": [

|

| 3 |

+

"CLIPTextModelWithProjection"

|

| 4 |

+

],

|

| 5 |

+

"attention_dropout": 0.0,

|

| 6 |

+

"bos_token_id": 0,

|

| 7 |

+

"dropout": 0.0,

|

| 8 |

+

"eos_token_id": 2,

|

| 9 |

+

"hidden_act": "quick_gelu",

|

| 10 |

+

"hidden_size": 768,

|

| 11 |

+

"initializer_factor": 1.0,

|

| 12 |

+

"initializer_range": 0.02,

|

| 13 |

+

"intermediate_size": 3072,

|

| 14 |

+

"layer_norm_eps": 1e-05,

|

| 15 |

+

"max_position_embeddings": 77,

|

| 16 |

+

"model_type": "clip_text_model",

|

| 17 |

+

"num_attention_heads": 12,

|

| 18 |

+

"num_hidden_layers": 12,

|

| 19 |

+

"pad_token_id": 1,

|

| 20 |

+

"projection_dim": 768,

|

| 21 |

+

"torch_dtype": "float32",

|

| 22 |

+

"transformers_version": "4.34.1",

|

| 23 |

+

"vocab_size": 49408

|

| 24 |

+

}

|

text_encoder/model.fp16.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:549d39f40a16f8ef48ed56da60cd25a467bd2c70866f4d49196829881b13b7b2

|

| 3 |

+

size 247323896

|

text_encoder/model.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:dae0eabbb1fd83756ed9dd893c17ff2f6825c98555a1e1b96154e2df0739b9e2

|

| 3 |

+

size 494624560

|

tokenizer/merges.txt

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

tokenizer/special_tokens_map.json

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"bos_token": {

|

| 3 |

+

"content": "<|startoftext|>",

|

| 4 |

+

"lstrip": false,

|

| 5 |

+

"normalized": true,

|

| 6 |

+

"rstrip": false,

|

| 7 |

+

"single_word": false

|

| 8 |

+

},

|

| 9 |

+

"eos_token": {

|

| 10 |

+

"content": "<|endoftext|>",

|

| 11 |

+

"lstrip": false,

|

| 12 |

+

"normalized": true,

|

| 13 |

+

"rstrip": false,

|

| 14 |

+

"single_word": false

|

| 15 |

+

},

|

| 16 |

+

"pad_token": {

|

| 17 |

+

"content": "!",

|

| 18 |

+

"lstrip": false,

|

| 19 |

+

"normalized": false,

|

| 20 |

+

"rstrip": false,

|

| 21 |

+

"single_word": false

|

| 22 |

+

},

|

| 23 |

+

"unk_token": {

|

| 24 |

+

"content": "<|endoftext|>",

|

| 25 |

+

"lstrip": false,

|

| 26 |

+

"normalized": true,

|

| 27 |

+

"rstrip": false,

|

| 28 |

+

"single_word": false

|

| 29 |

+

}

|

| 30 |

+

}

|

tokenizer/tokenizer_config.json

ADDED

|

@@ -0,0 +1,38 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"add_prefix_space": false,

|

| 3 |

+

"added_tokens_decoder": {

|

| 4 |

+

"0": {

|

| 5 |

+

"content": "!",

|

| 6 |

+

"lstrip": false,

|

| 7 |

+

"normalized": false,

|

| 8 |

+

"rstrip": false,

|

| 9 |

+

"single_word": false,

|

| 10 |

+

"special": true

|

| 11 |

+

},

|

| 12 |

+

"49406": {

|

| 13 |

+

"content": "<|startoftext|>",

|

| 14 |

+

"lstrip": false,

|

| 15 |

+

"normalized": true,

|

| 16 |

+

"rstrip": false,

|

| 17 |

+

"single_word": false,

|

| 18 |

+

"special": true

|

| 19 |

+

},

|

| 20 |

+

"49407": {

|

| 21 |

+

"content": "<|endoftext|>",

|

| 22 |

+

"lstrip": false,

|

| 23 |

+

"normalized": true,

|

| 24 |

+

"rstrip": false,

|

| 25 |

+

"single_word": false,

|

| 26 |

+

"special": true

|

| 27 |

+

}

|

| 28 |

+

},

|

| 29 |

+

"bos_token": "<|startoftext|>",

|

| 30 |

+

"clean_up_tokenization_spaces": true,

|

| 31 |

+

"do_lower_case": true,

|

| 32 |

+

"eos_token": "<|endoftext|>",

|

| 33 |

+

"errors": "replace",

|

| 34 |

+

"model_max_length": 77,

|

| 35 |

+

"pad_token": "!",

|

| 36 |

+

"tokenizer_class": "CLIPTokenizer",

|

| 37 |

+

"unk_token": "<|endoftext|>"

|

| 38 |

+

}

|

tokenizer/vocab.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

training/A mushroom in [V] style.png

ADDED

|

training/A woman working on a laptop in [V] style.jpg

ADDED

|

Git LFS Details

|

training/generate_images.py

ADDED

|

@@ -0,0 +1,119 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import logging

|

| 3 |

+

from diffusers import AmusedPipeline

|

| 4 |

+

import os

|

| 5 |

+

from peft import PeftModel

|

| 6 |

+

from diffusers import UVit2DModel

|

| 7 |

+

|

| 8 |

+

logger = logging.getLogger(__name__)

|

| 9 |

+

|

| 10 |

+

def parse_args():

|

| 11 |

+

parser = argparse.ArgumentParser()

|

| 12 |

+

parser.add_argument(

|

| 13 |

+

"--pretrained_model_name_or_path",

|

| 14 |

+

type=str,

|

| 15 |

+

default=None,

|

| 16 |

+

required=True,

|

| 17 |

+

help="Path to pretrained model or model identifier from huggingface.co/models.",

|

| 18 |

+

)

|

| 19 |

+

parser.add_argument(

|

| 20 |

+

"--revision",

|

| 21 |

+

type=str,

|

| 22 |

+

default=None,

|

| 23 |

+

required=False,

|

| 24 |

+

help="Revision of pretrained model identifier from huggingface.co/models.",

|

| 25 |

+

)

|

| 26 |

+

parser.add_argument(

|

| 27 |

+

"--variant",

|

| 28 |

+

type=str,

|

| 29 |

+

default=None,

|

| 30 |

+

help="Variant of the model files of the pretrained model identifier from huggingface.co/models, 'e.g.' fp16",

|

| 31 |

+

)

|

| 32 |

+

parser.add_argument("--style_descriptor", type=str, default="[V]")

|

| 33 |

+

parser.add_argument(

|

| 34 |

+

"--load_transformer_from",

|

| 35 |

+