Commit

•

0ca1180

1

Parent(s):

6ac0d17

Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +8 -0

- Dockerfile +89 -0

- LICENSE +21 -0

- README.md +13 -0

- app.py +426 -0

- assets/BBOX_SHIFT.md +26 -0

- assets/demo/man/man.png +3 -0

- assets/demo/monalisa/monalisa.png +0 -0

- assets/demo/musk/musk.png +0 -0

- assets/demo/sit/sit.jpeg +0 -0

- assets/demo/sun1/sun.png +0 -0

- assets/demo/sun2/sun.png +0 -0

- assets/demo/video1/video1.png +0 -0

- assets/demo/yongen/yongen.jpeg +0 -0

- assets/figs/landmark_ref.png +0 -0

- assets/figs/musetalk_arc.jpg +0 -0

- configs/inference/test.yaml +14 -0

- data/audio/sun.wav +3 -0

- data/audio/yongen.wav +3 -0

- data/video/fake.mp4 +0 -0

- data/video/sun.mp4 +3 -0

- data/video/yongen.mp4 +3 -0

- entrypoint.sh +11 -0

- insta.sh +5 -0

- install_ffmpeg.sh +70 -0

- models/.gitattributes +35 -0

- models/.huggingface/.gitignore +1 -0

- models/.huggingface/download/.gitattributes.lock +0 -0

- models/.huggingface/download/.gitattributes.metadata +3 -0

- models/.huggingface/download/README.md.lock +0 -0

- models/.huggingface/download/README.md.metadata +3 -0

- models/.huggingface/download/musetalk/musetalk.json.lock +0 -0

- models/.huggingface/download/musetalk/musetalk.json.metadata +3 -0

- models/.huggingface/download/musetalk/pytorch_model.bin.lock +0 -0

- models/.huggingface/download/musetalk/pytorch_model.bin.metadata +3 -0

- models/README.md +259 -0

- models/dwpose/.gitattributes +35 -0

- models/dwpose/.huggingface/.gitignore +1 -0

- models/dwpose/.huggingface/download/.gitattributes.lock +0 -0

- models/dwpose/.huggingface/download/.gitattributes.metadata +3 -0

- models/dwpose/.huggingface/download/README.md.lock +0 -0

- models/dwpose/.huggingface/download/README.md.metadata +3 -0

- models/dwpose/.huggingface/download/dw-ll_ucoco.pth.lock +0 -0

- models/dwpose/.huggingface/download/dw-ll_ucoco.pth.metadata +3 -0

- models/dwpose/.huggingface/download/dw-ll_ucoco_384.onnx.lock +0 -0

- models/dwpose/.huggingface/download/dw-ll_ucoco_384.onnx.metadata +3 -0

- models/dwpose/.huggingface/download/dw-ll_ucoco_384.pth.lock +0 -0

- models/dwpose/.huggingface/download/dw-ll_ucoco_384.pth.metadata +3 -0

- models/dwpose/.huggingface/download/dw-mm_ucoco.pth.lock +0 -0

- models/dwpose/.huggingface/download/dw-mm_ucoco.pth.metadata +3 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,11 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

assets/demo/man/man.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

data/audio/sun.wav filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

data/audio/yongen.wav filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

data/video/sun.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

data/video/yongen.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

results/input/outputxxx_sun_yongen.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

results/output/outputxxx_sun_yongen_audio.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

results/sun_sun.mp4 filter=lfs diff=lfs merge=lfs -text

|

Dockerfile

ADDED

|

@@ -0,0 +1,89 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM anchorxia/musev:latest

|

| 2 |

+

|

| 3 |

+

#MAINTAINER 维护者信息

|

| 4 |

+

LABEL MAINTAINER="zkangchen"

|

| 5 |

+

LABEL Email="zkangchen@tencent.com"

|

| 6 |

+

LABEL Description="musev gradio image, from docker pull anchorxia/musev:latest"

|

| 7 |

+

|

| 8 |

+

SHELL ["/bin/bash", "--login", "-c"]

|

| 9 |

+

|

| 10 |

+

# Set up a new user named "user" with user ID 1000

|

| 11 |

+

RUN useradd -m -u 1000 user

|

| 12 |

+

|

| 13 |

+

# Switch to the "user" user

|

| 14 |

+

USER user

|

| 15 |

+

|

| 16 |

+

# Set home to the user's home directory

|

| 17 |

+

ENV HOME=/home/user \

|

| 18 |

+

PATH=/home/user/.local/bin:$PATH

|

| 19 |

+

|

| 20 |

+

# Set the working directory to the user's home directory

|

| 21 |

+

WORKDIR $HOME/app

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

################################################# INSTALLING FFMPEG ##################################################

|

| 25 |

+

# RUN apt-get update ; apt-get install -y git build-essential gcc make yasm autoconf automake cmake libtool checkinstall libmp3lame-dev pkg-config libunwind-dev zlib1g-dev libssl-dev

|

| 26 |

+

|

| 27 |

+

# RUN apt-get update \

|

| 28 |

+

# && apt-get clean \

|

| 29 |

+

# && apt-get install -y --no-install-recommends libc6-dev libgdiplus wget software-properties-common

|

| 30 |

+

|

| 31 |

+

#RUN RUN apt-add-repository ppa:git-core/ppa && apt-get update && apt-get install -y git

|

| 32 |

+

|

| 33 |

+

# RUN wget https://www.ffmpeg.org/releases/ffmpeg-4.0.2.tar.gz

|

| 34 |

+

# RUN tar -xzf ffmpeg-4.0.2.tar.gz; rm -r ffmpeg-4.0.2.tar.gz

|

| 35 |

+

# RUN cd ./ffmpeg-4.0.2; ./configure --enable-gpl --enable-libmp3lame --enable-decoder=mjpeg,png --enable-encoder=png --enable-openssl --enable-nonfree

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

# RUN cd ./ffmpeg-4.0.2; make

|

| 39 |

+

# RUN cd ./ffmpeg-4.0.2; make install

|

| 40 |

+

######################################################################################################################

|

| 41 |

+

|

| 42 |

+

RUN echo "docker start"\

|

| 43 |

+

&& whoami \

|

| 44 |

+

&& which python \

|

| 45 |

+

&& pwd

|

| 46 |

+

|

| 47 |

+

RUN git clone -b main --recursive https://github.com/TMElyralab/MuseTalk.git

|

| 48 |

+

|

| 49 |

+

RUN chmod -R 777 /home/user/app/MuseTalk

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

RUN . /opt/conda/etc/profile.d/conda.sh \

|

| 54 |

+

&& echo "source activate musev" >> ~/.bashrc \

|

| 55 |

+

&& conda activate musev \

|

| 56 |

+

&& conda env list

|

| 57 |

+

# && conda install ffmpeg

|

| 58 |

+

|

| 59 |

+

RUN ffmpeg -codecs

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

WORKDIR /home/user/app/MuseTalk/

|

| 66 |

+

|

| 67 |

+

RUN pip install -r requirements.txt \

|

| 68 |

+

&& pip install --no-cache-dir -U openmim \

|

| 69 |

+

&& mim install mmengine \

|

| 70 |

+

&& mim install "mmcv>=2.0.1" \

|

| 71 |

+

&& mim install "mmdet>=3.1.0" \

|

| 72 |

+

&& mim install "mmpose>=1.1.0"

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

# Add entrypoint script

|

| 76 |

+

#RUN chmod 777 ./entrypoint.sh

|

| 77 |

+

RUN ls -l ./

|

| 78 |

+

|

| 79 |

+

EXPOSE 7860

|

| 80 |

+

|

| 81 |

+

# CMD ["/bin/bash", "-c", "python app.py"]

|

| 82 |

+

CMD ["./install_ffmpeg.sh"]

|

| 83 |

+

CMD ["./entrypoint.sh"]

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2024 TMElyralab

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: MuseTalkDemo

|

| 3 |

+

emoji: 🌍

|

| 4 |

+

colorFrom: gray

|

| 5 |

+

colorTo: purple

|

| 6 |

+

sdk: docker

|

| 7 |

+

pinned: false

|

| 8 |

+

license: creativeml-openrail-m

|

| 9 |

+

app_file: app.py

|

| 10 |

+

app_port: 7860

|

| 11 |

+

---

|

| 12 |

+

|

| 13 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

app.py

ADDED

|

@@ -0,0 +1,426 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import time

|

| 3 |

+

import pdb

|

| 4 |

+

import re

|

| 5 |

+

|

| 6 |

+

import gradio as gr

|

| 7 |

+

import spaces

|

| 8 |

+

import numpy as np

|

| 9 |

+

import sys

|

| 10 |

+

import subprocess

|

| 11 |

+

|

| 12 |

+

from huggingface_hub import snapshot_download

|

| 13 |

+

import requests

|

| 14 |

+

|

| 15 |

+

import argparse

|

| 16 |

+

import os

|

| 17 |

+

from omegaconf import OmegaConf

|

| 18 |

+

import numpy as np

|

| 19 |

+

import cv2

|

| 20 |

+

import torch

|

| 21 |

+

import glob

|

| 22 |

+

import pickle

|

| 23 |

+

from tqdm import tqdm

|

| 24 |

+

import copy

|

| 25 |

+

from argparse import Namespace

|

| 26 |

+

import shutil

|

| 27 |

+

import gdown

|

| 28 |

+

import imageio

|

| 29 |

+

import ffmpeg

|

| 30 |

+

from moviepy.editor import *

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

ProjectDir = os.path.abspath(os.path.dirname(__file__))

|

| 34 |

+

CheckpointsDir = os.path.join(ProjectDir, "models")

|

| 35 |

+

|

| 36 |

+

def print_directory_contents(path):

|

| 37 |

+

for child in os.listdir(path):

|

| 38 |

+

child_path = os.path.join(path, child)

|

| 39 |

+

if os.path.isdir(child_path):

|

| 40 |

+

print(child_path)

|

| 41 |

+

|

| 42 |

+

def download_model():

|

| 43 |

+

if not os.path.exists(CheckpointsDir):

|

| 44 |

+

os.makedirs(CheckpointsDir)

|

| 45 |

+

print("Checkpoint Not Downloaded, start downloading...")

|

| 46 |

+

tic = time.time()

|

| 47 |

+

snapshot_download(

|

| 48 |

+

repo_id="TMElyralab/MuseTalk",

|

| 49 |

+

local_dir=CheckpointsDir,

|

| 50 |

+

max_workers=8,

|

| 51 |

+

local_dir_use_symlinks=True,

|

| 52 |

+

force_download=True, resume_download=False

|

| 53 |

+

)

|

| 54 |

+

# weight

|

| 55 |

+

os.makedirs(f"{CheckpointsDir}/sd-vae-ft-mse/")

|

| 56 |

+

snapshot_download(

|

| 57 |

+

repo_id="stabilityai/sd-vae-ft-mse",

|

| 58 |

+

local_dir=CheckpointsDir+'/sd-vae-ft-mse',

|

| 59 |

+

max_workers=8,

|

| 60 |

+

local_dir_use_symlinks=True,

|

| 61 |

+

force_download=True, resume_download=False

|

| 62 |

+

)

|

| 63 |

+

#dwpose

|

| 64 |

+

os.makedirs(f"{CheckpointsDir}/dwpose/")

|

| 65 |

+

snapshot_download(

|

| 66 |

+

repo_id="yzd-v/DWPose",

|

| 67 |

+

local_dir=CheckpointsDir+'/dwpose',

|

| 68 |

+

max_workers=8,

|

| 69 |

+

local_dir_use_symlinks=True,

|

| 70 |

+

force_download=True, resume_download=False

|

| 71 |

+

)

|

| 72 |

+

#vae

|

| 73 |

+

url = "https://openaipublic.azureedge.net/main/whisper/models/65147644a518d12f04e32d6f3b26facc3f8dd46e5390956a9424a650c0ce22b9/tiny.pt"

|

| 74 |

+

response = requests.get(url)

|

| 75 |

+

# 确保请求成功

|

| 76 |

+

if response.status_code == 200:

|

| 77 |

+

# 指定文件保存的位置

|

| 78 |

+

file_path = f"{CheckpointsDir}/whisper/tiny.pt"

|

| 79 |

+

os.makedirs(f"{CheckpointsDir}/whisper/")

|

| 80 |

+

# 将文件内容写入指定位置

|

| 81 |

+

with open(file_path, "wb") as f:

|

| 82 |

+

f.write(response.content)

|

| 83 |

+

else:

|

| 84 |

+

print(f"请求失败,状态码:{response.status_code}")

|

| 85 |

+

#gdown face parse

|

| 86 |

+

url = "https://drive.google.com/uc?id=154JgKpzCPW82qINcVieuPH3fZ2e0P812"

|

| 87 |

+

os.makedirs(f"{CheckpointsDir}/face-parse-bisent/")

|

| 88 |

+

file_path = f"{CheckpointsDir}/face-parse-bisent/79999_iter.pth"

|

| 89 |

+

gdown.download(url, file_path, quiet=False)

|

| 90 |

+

#resnet

|

| 91 |

+

url = "https://download.pytorch.org/models/resnet18-5c106cde.pth"

|

| 92 |

+

response = requests.get(url)

|

| 93 |

+

# 确保请求成功

|

| 94 |

+

if response.status_code == 200:

|

| 95 |

+

# 指定文件保存的位置

|

| 96 |

+

file_path = f"{CheckpointsDir}/face-parse-bisent/resnet18-5c106cde.pth"

|

| 97 |

+

# 将文件内容写入指定位置

|

| 98 |

+

with open(file_path, "wb") as f:

|

| 99 |

+

f.write(response.content)

|

| 100 |

+

else:

|

| 101 |

+

print(f"请求失败,状态码:{response.status_code}")

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

toc = time.time()

|

| 105 |

+

|

| 106 |

+

print(f"download cost {toc-tic} seconds")

|

| 107 |

+

print_directory_contents(CheckpointsDir)

|

| 108 |

+

|

| 109 |

+

else:

|

| 110 |

+

print("Already download the model.")

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

download_model() # for huggingface deployment.

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

from musetalk.utils.utils import get_file_type,get_video_fps,datagen

|

| 120 |

+

from musetalk.utils.preprocessing import get_landmark_and_bbox,read_imgs,coord_placeholder,get_bbox_range

|

| 121 |

+

from musetalk.utils.blending import get_image

|

| 122 |

+

from musetalk.utils.utils import load_all_model

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

|

| 129 |

+

@spaces.GPU(duration=600)

|

| 130 |

+

@torch.no_grad()

|

| 131 |

+

def inference(audio_path,video_path,bbox_shift,progress=gr.Progress(track_tqdm=True)):

|

| 132 |

+

args_dict={"result_dir":'./results/output', "fps":25, "batch_size":8, "output_vid_name":'', "use_saved_coord":False}#same with inferenece script

|

| 133 |

+

args = Namespace(**args_dict)

|

| 134 |

+

|

| 135 |

+

input_basename = os.path.basename(video_path).split('.')[0]

|

| 136 |

+

audio_basename = os.path.basename(audio_path).split('.')[0]

|

| 137 |

+

output_basename = f"{input_basename}_{audio_basename}"

|

| 138 |

+

result_img_save_path = os.path.join(args.result_dir, output_basename) # related to video & audio inputs

|

| 139 |

+

crop_coord_save_path = os.path.join(result_img_save_path, input_basename+".pkl") # only related to video input

|

| 140 |

+

os.makedirs(result_img_save_path,exist_ok =True)

|

| 141 |

+

|

| 142 |

+

if args.output_vid_name=="":

|

| 143 |

+

output_vid_name = os.path.join(args.result_dir, output_basename+".mp4")

|

| 144 |

+

else:

|

| 145 |

+

output_vid_name = os.path.join(args.result_dir, args.output_vid_name)

|

| 146 |

+

############################################## extract frames from source video ##############################################

|

| 147 |

+

if get_file_type(video_path)=="video":

|

| 148 |

+

save_dir_full = os.path.join(args.result_dir, input_basename)

|

| 149 |

+

os.makedirs(save_dir_full,exist_ok = True)

|

| 150 |

+

# cmd = f"ffmpeg -v fatal -i {video_path} -start_number 0 {save_dir_full}/%08d.png"

|

| 151 |

+

# os.system(cmd)

|

| 152 |

+

# 读取视频

|

| 153 |

+

reader = imageio.get_reader(video_path)

|

| 154 |

+

|

| 155 |

+

# 保存图片

|

| 156 |

+

for i, im in enumerate(reader):

|

| 157 |

+

imageio.imwrite(f"{save_dir_full}/{i:08d}.png", im)

|

| 158 |

+

input_img_list = sorted(glob.glob(os.path.join(save_dir_full, '*.[jpJP][pnPN]*[gG]')))

|

| 159 |

+

fps = get_video_fps(video_path)

|

| 160 |

+

else: # input img folder

|

| 161 |

+

input_img_list = glob.glob(os.path.join(video_path, '*.[jpJP][pnPN]*[gG]'))

|

| 162 |

+

input_img_list = sorted(input_img_list, key=lambda x: int(os.path.splitext(os.path.basename(x))[0]))

|

| 163 |

+

fps = args.fps

|

| 164 |

+

#print(input_img_list)

|

| 165 |

+

############################################## extract audio feature ##############################################

|

| 166 |

+

whisper_feature = audio_processor.audio2feat(audio_path)

|

| 167 |

+

whisper_chunks = audio_processor.feature2chunks(feature_array=whisper_feature,fps=fps)

|

| 168 |

+

############################################## preprocess input image ##############################################

|

| 169 |

+

if os.path.exists(crop_coord_save_path) and args.use_saved_coord:

|

| 170 |

+

print("using extracted coordinates")

|

| 171 |

+

with open(crop_coord_save_path,'rb') as f:

|

| 172 |

+

coord_list = pickle.load(f)

|

| 173 |

+

frame_list = read_imgs(input_img_list)

|

| 174 |

+

else:

|

| 175 |

+

print("extracting landmarks...time consuming")

|

| 176 |

+

coord_list, frame_list = get_landmark_and_bbox(input_img_list, bbox_shift)

|

| 177 |

+

with open(crop_coord_save_path, 'wb') as f:

|

| 178 |

+

pickle.dump(coord_list, f)

|

| 179 |

+

bbox_shift_text=get_bbox_range(input_img_list, bbox_shift)

|

| 180 |

+

i = 0

|

| 181 |

+

input_latent_list = []

|

| 182 |

+

for bbox, frame in zip(coord_list, frame_list):

|

| 183 |

+

if bbox == coord_placeholder:

|

| 184 |

+

continue

|

| 185 |

+

x1, y1, x2, y2 = bbox

|

| 186 |

+

crop_frame = frame[y1:y2, x1:x2]

|

| 187 |

+

crop_frame = cv2.resize(crop_frame,(256,256),interpolation = cv2.INTER_LANCZOS4)

|

| 188 |

+

latents = vae.get_latents_for_unet(crop_frame)

|

| 189 |

+

input_latent_list.append(latents)

|

| 190 |

+

|

| 191 |

+

# to smooth the first and the last frame

|

| 192 |

+

frame_list_cycle = frame_list + frame_list[::-1]

|

| 193 |

+

coord_list_cycle = coord_list + coord_list[::-1]

|

| 194 |

+

input_latent_list_cycle = input_latent_list + input_latent_list[::-1]

|

| 195 |

+

############################################## inference batch by batch ##############################################

|

| 196 |

+

print("start inference")

|

| 197 |

+

video_num = len(whisper_chunks)

|

| 198 |

+

batch_size = args.batch_size

|

| 199 |

+

gen = datagen(whisper_chunks,input_latent_list_cycle,batch_size)

|

| 200 |

+

res_frame_list = []

|

| 201 |

+

for i, (whisper_batch,latent_batch) in enumerate(tqdm(gen,total=int(np.ceil(float(video_num)/batch_size)))):

|

| 202 |

+

|

| 203 |

+

tensor_list = [torch.FloatTensor(arr) for arr in whisper_batch]

|

| 204 |

+

audio_feature_batch = torch.stack(tensor_list).to(unet.device) # torch, B, 5*N,384

|

| 205 |

+

audio_feature_batch = pe(audio_feature_batch)

|

| 206 |

+

|

| 207 |

+

pred_latents = unet.model(latent_batch, timesteps, encoder_hidden_states=audio_feature_batch).sample

|

| 208 |

+

recon = vae.decode_latents(pred_latents)

|

| 209 |

+

for res_frame in recon:

|

| 210 |

+

res_frame_list.append(res_frame)

|

| 211 |

+

|

| 212 |

+

############################################## pad to full image ##############################################

|

| 213 |

+

print("pad talking image to original video")

|

| 214 |

+

for i, res_frame in enumerate(tqdm(res_frame_list)):

|

| 215 |

+

bbox = coord_list_cycle[i%(len(coord_list_cycle))]

|

| 216 |

+

ori_frame = copy.deepcopy(frame_list_cycle[i%(len(frame_list_cycle))])

|

| 217 |

+

x1, y1, x2, y2 = bbox

|

| 218 |

+

try:

|

| 219 |

+

res_frame = cv2.resize(res_frame.astype(np.uint8),(x2-x1,y2-y1))

|

| 220 |

+

except:

|

| 221 |

+

# print(bbox)

|

| 222 |

+

continue

|

| 223 |

+

|

| 224 |

+

combine_frame = get_image(ori_frame,res_frame,bbox)

|

| 225 |

+

cv2.imwrite(f"{result_img_save_path}/{str(i).zfill(8)}.png",combine_frame)

|

| 226 |

+

|

| 227 |

+

# cmd_img2video = f"ffmpeg -y -v fatal -r {fps} -f image2 -i {result_img_save_path}/%08d.png -vcodec libx264 -vf format=rgb24,scale=out_color_matrix=bt709,format=yuv420p temp.mp4"

|

| 228 |

+

# print(cmd_img2video)

|

| 229 |

+

# os.system(cmd_img2video)

|

| 230 |

+

# 帧率

|

| 231 |

+

fps = 25

|

| 232 |

+

# 图片路径

|

| 233 |

+

# 输出视频路径

|

| 234 |

+

output_video = 'temp.mp4'

|

| 235 |

+

|

| 236 |

+

# 读取图片

|

| 237 |

+

def is_valid_image(file):

|

| 238 |

+

pattern = re.compile(r'\d{8}\.png')

|

| 239 |

+

return pattern.match(file)

|

| 240 |

+

|

| 241 |

+

images = []

|

| 242 |

+

files = [file for file in os.listdir(result_img_save_path) if is_valid_image(file)]

|

| 243 |

+

files.sort(key=lambda x: int(x.split('.')[0]))

|

| 244 |

+

|

| 245 |

+

for file in files:

|

| 246 |

+

filename = os.path.join(result_img_save_path, file)

|

| 247 |

+

images.append(imageio.imread(filename))

|

| 248 |

+

|

| 249 |

+

|

| 250 |

+

# 保存视频

|

| 251 |

+

imageio.mimwrite(output_video, images, 'FFMPEG', fps=fps, codec='libx264', pixelformat='yuv420p')

|

| 252 |

+

|

| 253 |

+

# cmd_combine_audio = f"ffmpeg -y -v fatal -i {audio_path} -i temp.mp4 {output_vid_name}"

|

| 254 |

+

# print(cmd_combine_audio)

|

| 255 |

+

# os.system(cmd_combine_audio)

|

| 256 |

+

|

| 257 |

+

input_video = './temp.mp4'

|

| 258 |

+

# Check if the input_video and audio_path exist

|

| 259 |

+

if not os.path.exists(input_video):

|

| 260 |

+

raise FileNotFoundError(f"Input video file not found: {input_video}")

|

| 261 |

+

if not os.path.exists(audio_path):

|

| 262 |

+

raise FileNotFoundError(f"Audio file not found: {audio_path}")

|

| 263 |

+

|

| 264 |

+

# 读取视频

|

| 265 |

+

reader = imageio.get_reader(input_video)

|

| 266 |

+

fps = reader.get_meta_data()['fps'] # 获取原视频的帧率

|

| 267 |

+

|

| 268 |

+

# 将帧存储在列表中

|

| 269 |

+

frames = images

|

| 270 |

+

|

| 271 |

+

# 保存视频并添加音频

|

| 272 |

+

# imageio.mimwrite(output_vid_name, frames, 'FFMPEG', fps=fps, codec='libx264', audio_codec='aac', input_params=['-i', audio_path])

|

| 273 |

+

|

| 274 |

+

# input_video = ffmpeg.input(input_video)

|

| 275 |

+

|

| 276 |

+

# input_audio = ffmpeg.input(audio_path)

|

| 277 |

+

|

| 278 |

+

print(len(frames))

|

| 279 |

+

|

| 280 |

+

# imageio.mimwrite(

|

| 281 |

+

# output_video,

|

| 282 |

+

# frames,

|

| 283 |

+

# 'FFMPEG',

|

| 284 |

+

# fps=25,

|

| 285 |

+

# codec='libx264',

|

| 286 |

+

# audio_codec='aac',

|

| 287 |

+

# input_params=['-i', audio_path],

|

| 288 |

+

# output_params=['-y'], # Add the '-y' flag to overwrite the output file if it exists

|

| 289 |

+

# )

|

| 290 |

+

# writer = imageio.get_writer(output_vid_name, fps = 25, codec='libx264', quality=10, pixelformat='yuvj444p')

|

| 291 |

+

# for im in frames:

|

| 292 |

+

# writer.append_data(im)

|

| 293 |

+

# writer.close()

|

| 294 |

+

|

| 295 |

+

|

| 296 |

+

|

| 297 |

+

|

| 298 |

+

# Load the video

|

| 299 |

+

video_clip = VideoFileClip(input_video)

|

| 300 |

+

|

| 301 |

+

# Load the audio

|

| 302 |

+

audio_clip = AudioFileClip(audio_path)

|

| 303 |

+

|

| 304 |

+

# Set the audio to the video

|

| 305 |

+

video_clip = video_clip.set_audio(audio_clip)

|

| 306 |

+

|

| 307 |

+

# Write the output video

|

| 308 |

+

video_clip.write_videofile(output_vid_name, codec='libx264', audio_codec='aac',fps=25)

|

| 309 |

+

|

| 310 |

+

os.remove("temp.mp4")

|

| 311 |

+

#shutil.rmtree(result_img_save_path)

|

| 312 |

+

print(f"result is save to {output_vid_name}")

|

| 313 |

+

return output_vid_name,bbox_shift_text

|

| 314 |

+

|

| 315 |

+

|

| 316 |

+

|

| 317 |

+

# load model weights

|

| 318 |

+

audio_processor,vae,unet,pe = load_all_model()

|

| 319 |

+

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

| 320 |

+

timesteps = torch.tensor([0], device=device)

|

| 321 |

+

|

| 322 |

+

|

| 323 |

+

|

| 324 |

+

|

| 325 |

+

def check_video(video):

|

| 326 |

+

if not isinstance(video, str):

|

| 327 |

+

return video # in case of none type

|

| 328 |

+

# Define the output video file name

|

| 329 |

+

dir_path, file_name = os.path.split(video)

|

| 330 |

+

if file_name.startswith("outputxxx_"):

|

| 331 |

+

return video

|

| 332 |

+

# Add the output prefix to the file name

|

| 333 |

+

output_file_name = "outputxxx_" + file_name

|

| 334 |

+

|

| 335 |

+

os.makedirs('./results',exist_ok=True)

|

| 336 |

+

os.makedirs('./results/output',exist_ok=True)

|

| 337 |

+

os.makedirs('./results/input',exist_ok=True)

|

| 338 |

+

|

| 339 |

+

# Combine the directory path and the new file name

|

| 340 |

+

output_video = os.path.join('./results/input', output_file_name)

|

| 341 |

+

|

| 342 |

+

|

| 343 |

+

# # Run the ffmpeg command to change the frame rate to 25fps

|

| 344 |

+

# command = f"ffmpeg -i {video} -r 25 -vcodec libx264 -vtag hvc1 -pix_fmt yuv420p crf 18 {output_video} -y"

|

| 345 |

+

|

| 346 |

+

# read video

|

| 347 |

+

reader = imageio.get_reader(video)

|

| 348 |

+

fps = reader.get_meta_data()['fps'] # get fps from original video

|

| 349 |

+

|

| 350 |

+

# conver fps to 25

|

| 351 |

+

frames = [im for im in reader]

|

| 352 |

+

target_fps = 25

|

| 353 |

+

|

| 354 |

+

L = len(frames)

|

| 355 |

+

L_target = int(L / fps * target_fps)

|

| 356 |

+

original_t = [x / fps for x in range(1, L+1)]

|

| 357 |

+

t_idx = 0

|

| 358 |

+

target_frames = []

|

| 359 |

+

for target_t in range(1, L_target+1):

|

| 360 |

+

while target_t / target_fps > original_t[t_idx]:

|

| 361 |

+

t_idx += 1 # find the first t_idx so that target_t / target_fps <= original_t[t_idx]

|

| 362 |

+

if t_idx >= L:

|

| 363 |

+

break

|

| 364 |

+

target_frames.append(frames[t_idx])

|

| 365 |

+

|

| 366 |

+

# save video

|

| 367 |

+

imageio.mimwrite(output_video, target_frames, 'FFMPEG', fps=25, codec='libx264', quality=9, pixelformat='yuv420p')

|

| 368 |

+

return output_video

|

| 369 |

+

|

| 370 |

+

|

| 371 |

+

|

| 372 |

+

|

| 373 |

+

css = """#input_img {max-width: 1024px !important} #output_vid {max-width: 1024px; max-height: 576px}"""

|

| 374 |

+

|

| 375 |

+

with gr.Blocks(css=css) as demo:

|

| 376 |

+

gr.Markdown(

|

| 377 |

+

"<div align='center'> <h1>MuseTalk: Real-Time High Quality Lip Synchronization with Latent Space Inpainting </span> </h1> \

|

| 378 |

+

<h2 style='font-weight: 450; font-size: 1rem; margin: 0rem'>\

|

| 379 |

+

</br>\

|

| 380 |

+

Yue Zhang <sup>\*</sup>,\

|

| 381 |

+

Minhao Liu<sup>\*</sup>,\

|

| 382 |

+

Zhaokang Chen,\

|

| 383 |

+

Bin Wu<sup>†</sup>,\

|

| 384 |

+

Yingjie He,\

|

| 385 |

+

Chao Zhan,\

|

| 386 |

+

Wenjiang Zhou\

|

| 387 |

+

(<sup>*</sup>Equal Contribution, <sup>†</sup>Corresponding Author, benbinwu@tencent.com)\

|

| 388 |

+

Lyra Lab, Tencent Music Entertainment\

|

| 389 |

+

</h2> \

|

| 390 |

+

<a style='font-size:18px;color: #000000' href='https://github.com/TMElyralab/MuseTalk'>[Github Repo]</a>\

|

| 391 |

+

<a style='font-size:18px;color: #000000' href='https://github.com/TMElyralab/MuseTalk'>[Huggingface]</a>\

|

| 392 |

+

<a style='font-size:18px;color: #000000' href=''> [Technical report(Coming Soon)] </a>\

|

| 393 |

+

<a style='font-size:18px;color: #000000' href=''> [Project Page(Coming Soon)] </a> </div>"

|

| 394 |

+

)

|

| 395 |

+

|

| 396 |

+

with gr.Row():

|

| 397 |

+

with gr.Column():

|

| 398 |

+

audio = gr.Audio(label="Driven Audio",type="filepath")

|

| 399 |

+

video = gr.Video(label="Reference Video",sources=['upload'])

|

| 400 |

+

bbox_shift = gr.Number(label="BBox_shift value, px", value=0)

|

| 401 |

+

bbox_shift_scale = gr.Textbox(label="BBox_shift recommend value lower bound,The corresponding bbox range is generated after the initial result is generated. \n If the result is not good, it can be adjusted according to this reference value", value="",interactive=False)

|

| 402 |

+

|

| 403 |

+

btn = gr.Button("Generate")

|

| 404 |

+

out1 = gr.Video()

|

| 405 |

+

|

| 406 |

+

video.change(

|

| 407 |

+

fn=check_video, inputs=[video], outputs=[video]

|

| 408 |

+

)

|

| 409 |

+

btn.click(

|

| 410 |

+

fn=inference,

|

| 411 |

+

inputs=[

|

| 412 |

+

audio,

|

| 413 |

+

video,

|

| 414 |

+

bbox_shift,

|

| 415 |

+

],

|

| 416 |

+

outputs=[out1,bbox_shift_scale]

|

| 417 |

+

)

|

| 418 |

+

|

| 419 |

+

# Set the IP and port

|

| 420 |

+

ip_address = "0.0.0.0" # Replace with your desired IP address

|

| 421 |

+

port_number = 7860 # Replace with your desired port number

|

| 422 |

+

|

| 423 |

+

|

| 424 |

+

demo.queue().launch(

|

| 425 |

+

# share=False , debug=True, server_name=ip_address, server_port=port_number

|

| 426 |

+

)

|

assets/BBOX_SHIFT.md

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

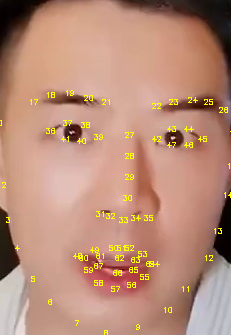

## Why is there a "bbox_shift" parameter?

|

| 2 |

+

When processing training data, we utilize the combination of face detection results (bbox) and facial landmarks to determine the region of the head segmentation box. Specifically, we use the upper bound of the bbox as the upper boundary of the segmentation box, the maximum y value of the facial landmarks coordinates as the lower boundary of the segmentation box, and the minimum and maximum x values of the landmarks coordinates as the left and right boundaries of the segmentation box. By processing the dataset in this way, we can ensure the integrity of the face.

|

| 3 |

+

|

| 4 |

+

However, we have observed that the masked ratio on the face varies across different images due to the varying face shapes of subjects. Furthermore, we found that the upper-bound of the mask mainly lies close to the landmark28, landmark29 and landmark30 landmark points (as shown in Fig.1), which correspond to proportions of 15%, 63%, and 22% in the dataset, respectively.

|

| 5 |

+

|

| 6 |

+

During the inference process, we discover that as the upper-bound of the mask gets closer to the mouth (near landmark30), the audio features contribute more to lip movements. Conversely, as the upper-bound of the mask moves away from the mouth (near landmark28), the audio features contribute more to generating details of facial appearance. Hence, we define this characteristic as a parameter that can adjust the contribution of audio features to generating lip movements, which users can modify according to their specific needs in practical scenarios.

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

Fig.1. Facial landmarks

|

| 11 |

+

### Step 0.

|

| 12 |

+

Running with the default configuration to obtain the adjustable value range.

|

| 13 |

+

```

|

| 14 |

+

python -m scripts.inference --inference_config configs/inference/test.yaml

|

| 15 |

+

```

|

| 16 |

+

```

|

| 17 |

+

********************************************bbox_shift parameter adjustment**********************************************************

|

| 18 |

+

Total frame:「838」 Manually adjust range : [ -9~9 ] , the current value: 0

|

| 19 |

+

*************************************************************************************************************************************

|

| 20 |

+

```

|

| 21 |

+

### Step 1.

|

| 22 |

+

Re-run the script within the above range.

|

| 23 |

+

```

|

| 24 |

+

python -m scripts.inference --inference_config configs/inference/test.yaml --bbox_shift xx # where xx is in [-9, 9].

|

| 25 |

+

```

|

| 26 |

+

In our experimental observations, we found that positive values (moving towards the lower half) generally increase mouth openness, while negative values (moving towards the upper half) generally decrease mouth openness. However, it's important to note that this is not an absolute rule, and users may need to adjust the parameter according to their specific needs and the desired effect.

|

assets/demo/man/man.png

ADDED

|

Git LFS Details

|

assets/demo/monalisa/monalisa.png

ADDED

|

assets/demo/musk/musk.png

ADDED

|

assets/demo/sit/sit.jpeg

ADDED

|

assets/demo/sun1/sun.png

ADDED

|

assets/demo/sun2/sun.png

ADDED

|

assets/demo/video1/video1.png

ADDED

|

assets/demo/yongen/yongen.jpeg

ADDED

|

assets/figs/landmark_ref.png

ADDED

|

assets/figs/musetalk_arc.jpg

ADDED

|

configs/inference/test.yaml

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

task_0:

|

| 2 |

+

video_path: "data/video/fake.mp4"

|

| 3 |

+

audio_path: "data/audio/yongen.wav"

|

| 4 |

+

bbox_shift: -7

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

# task_1:

|

| 8 |

+

# video_path: "data/video/yongen.mp4"

|

| 9 |

+

# audio_path: "data/audio/yongen.wav"

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

|

data/audio/sun.wav

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:3f163b0fe2f278504c15cab74cd37b879652749e2a8a69f7848ad32c847d8007

|

| 3 |

+

size 1983572

|

data/audio/yongen.wav

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:2b775c363c968428d1d6df4456495e4c11f00e3204d3082e51caff415ec0e2ba

|

| 3 |

+

size 1536078

|

data/video/fake.mp4

ADDED

|

Binary file (351 kB). View file

|

|

|

data/video/sun.mp4

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:9f240982090f4255a7589e3cd67b4219be7820f9eb9a7461fc915eb5f0c8e075

|

| 3 |

+

size 2217973

|

data/video/yongen.mp4

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:1effa976d410571cd185554779d6d43a6ba636e0e3401385db1d607daa46441f

|

| 3 |

+

size 1870923

|

entrypoint.sh

ADDED

|

@@ -0,0 +1,11 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

echo "entrypoint.sh"

|

| 4 |

+

whoami

|

| 5 |

+

which python

|

| 6 |

+

echo "pythonpath" $PYTHONPATH

|

| 7 |

+

|

| 8 |

+

source /opt/conda/etc/profile.d/conda.sh

|

| 9 |

+

conda activate musev

|

| 10 |

+

which python

|

| 11 |

+

python app.py

|

insta.sh

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

pip install --no-cache-dir -U openmim

|

| 2 |

+

mim install mmengine

|

| 3 |

+

mim install "mmcv>=2.0.1"

|

| 4 |

+

mim install "mmdet>=3.1.0"

|

| 5 |

+

mim install "mmpose>=1.1.0"

|

install_ffmpeg.sh

ADDED

|

@@ -0,0 +1,70 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

FFMPEG_PREFIX="$(echo $HOME/local)"

|

| 3 |

+

FFMPEG_SOURCES="$(echo $HOME/ffmpeg_sources)"

|

| 4 |

+

FFMPEG_BINDIR="$(echo $FFMPEG_PREFIX/bin)"

|

| 5 |

+

PATH=$FFMPEG_BINDIR:$PATH

|

| 6 |

+

|

| 7 |

+

mkdir -p $FFMPEG_PREFIX

|

| 8 |

+

mkdir -p $FFMPEG_SOURCES

|

| 9 |

+

|

| 10 |

+

cd $FFMPEG_SOURCES

|

| 11 |

+

wget http://www.tortall.net/projects/yasm/releases/yasm-1.2.0.tar.gz

|

| 12 |

+

tar xzvf yasm-1.2.0.tar.gz

|

| 13 |

+

cd yasm-1.2.0

|

| 14 |

+

./configure --prefix="$FFMPEG_PREFIX" --bindir="$FFMPEG_BINDIR"

|

| 15 |

+

make

|

| 16 |

+

make install

|

| 17 |

+

make distclean

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

cd $FFMPEG_SOURCES

|

| 22 |

+

wget http://download.videolan.org/pub/x264/snapshots/last_x264.tar.bz2

|

| 23 |

+

tar xjvf last_x264.tar.bz2

|

| 24 |

+

cd x264-snapshot*

|

| 25 |

+

./configure --prefix="$FFMPEG_PREFIX" --bindir="$FFMPEG_BINDIR" --enable-static

|

| 26 |

+

make

|

| 27 |

+

make install

|

| 28 |

+

make distclean

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

cd $FFMPEG_SOURCES

|

| 33 |

+

wget -O fdk-aac.tar.gz https://github.com/mstorsjo/fdk-aac/tarball/master

|

| 34 |

+

tar xzvf fdk-aac.tar.gz

|

| 35 |

+

cd mstorsjo-fdk-aac*

|

| 36 |

+

autoreconf -fiv

|

| 37 |

+

./configure --prefix="$FFMPEG_PREFIX" --disable-shared

|

| 38 |

+

make

|

| 39 |

+

make install

|

| 40 |

+

make distclean

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

cd $FFMPEG_SOURCES

|

| 45 |

+

wget http://webm.googlecode.com/files/libvpx-v1.3.0.tar.bz2

|

| 46 |

+

tar xjvf libvpx-v1.3.0.tar.bz2

|

| 47 |

+

cd libvpx-v1.3.0

|

| 48 |

+

./configure --prefix="$FFMPEG_PREFIX" --disable-examples

|

| 49 |

+

make

|

| 50 |

+

make install

|

| 51 |

+

make clean

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

cd $FFMPEG_SOURCES

|

| 56 |

+

wget https://github.com/FFmpeg/FFmpeg/tarball/master -O ffmpeg.tar.gz

|

| 57 |

+

rm -rf FFmpeg-FFmpeg*

|

| 58 |

+

tar -zxvf ffmpeg.tar.gz

|

| 59 |

+

cd FFmpeg-FFmpeg*

|

| 60 |

+

PKG_CONFIG_PATH="$FFMPEG_PREFIX/lib/pkgconfig"

|

| 61 |

+

export PKG_CONFIG_PATH

|

| 62 |

+

./configure --prefix="$FFMPEG_PREFIX" --extra-cflags="-I$FFMPEG_PREFIX/include" \

|

| 63 |

+

--extra-ldflags="-L$FFMPEG_PREFIX/lib" --bindir="$FFMPEG_BINDIR" --extra-libs="-ldl" --enable-gpl \

|

| 64 |

+

--enable-libass --enable-libfdk-aac --enable-libmp3lame --enable-libtheora \

|

| 65 |

+

--enable-libvorbis --enable-libvpx --enable-libx264 --enable-nonfree \

|

| 66 |

+

--enable-libopencore-amrnb --enable-libopencore-amrwb --enable-version3 --enable-libvo-amrwbenc

|

| 67 |

+

make

|

| 68 |

+

make install

|

| 69 |

+

make distclean

|

| 70 |

+

hash -r

|

models/.gitattributes

ADDED

|

@@ -0,0 +1,35 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

+

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

*.tar filter=lfs diff=lfs merge=lfs -text

|

| 29 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 30 |

+

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

models/.huggingface/.gitignore

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

*

|

models/.huggingface/download/.gitattributes.lock

ADDED

|

File without changes

|

models/.huggingface/download/.gitattributes.metadata

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

5e6f29eeef8d88c1ae8389316f122bcc36a430fe

|

| 2 |

+

a6344aac8c09253b3b630fb776ae94478aa0275b

|

| 3 |

+

1715152071.9456775

|

models/.huggingface/download/README.md.lock

ADDED

|

File without changes

|

models/.huggingface/download/README.md.metadata

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

5e6f29eeef8d88c1ae8389316f122bcc36a430fe

|

| 2 |

+

74e11fb4b681253f7fe73d9c4b80ec0021949213

|

| 3 |

+

1715152072.478483

|

models/.huggingface/download/musetalk/musetalk.json.lock

ADDED

|

File without changes

|

models/.huggingface/download/musetalk/musetalk.json.metadata

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

5e6f29eeef8d88c1ae8389316f122bcc36a430fe

|

| 2 |

+

b822db87e503a283fbbee73617f89dcd294cb91c

|

| 3 |

+

1715152071.9655983

|

models/.huggingface/download/musetalk/pytorch_model.bin.lock

ADDED

|

File without changes

|

models/.huggingface/download/musetalk/pytorch_model.bin.metadata

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

5e6f29eeef8d88c1ae8389316f122bcc36a430fe

|

| 2 |

+

0ee7d5ea03ea75d8dca50ea7a76df791e90633687a135c4a69393abfc0475ffe

|

| 3 |

+

1715152253.217898

|

models/README.md

ADDED

|

@@ -0,0 +1,259 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|