Commit 15483fc

Parent(s): 549fe9a

Results updated

README.md

CHANGED

|

@@ -31,6 +31,28 @@ The responses were graded irrespective of the student's ethnicity, race, or gend

|

|

| 31 |

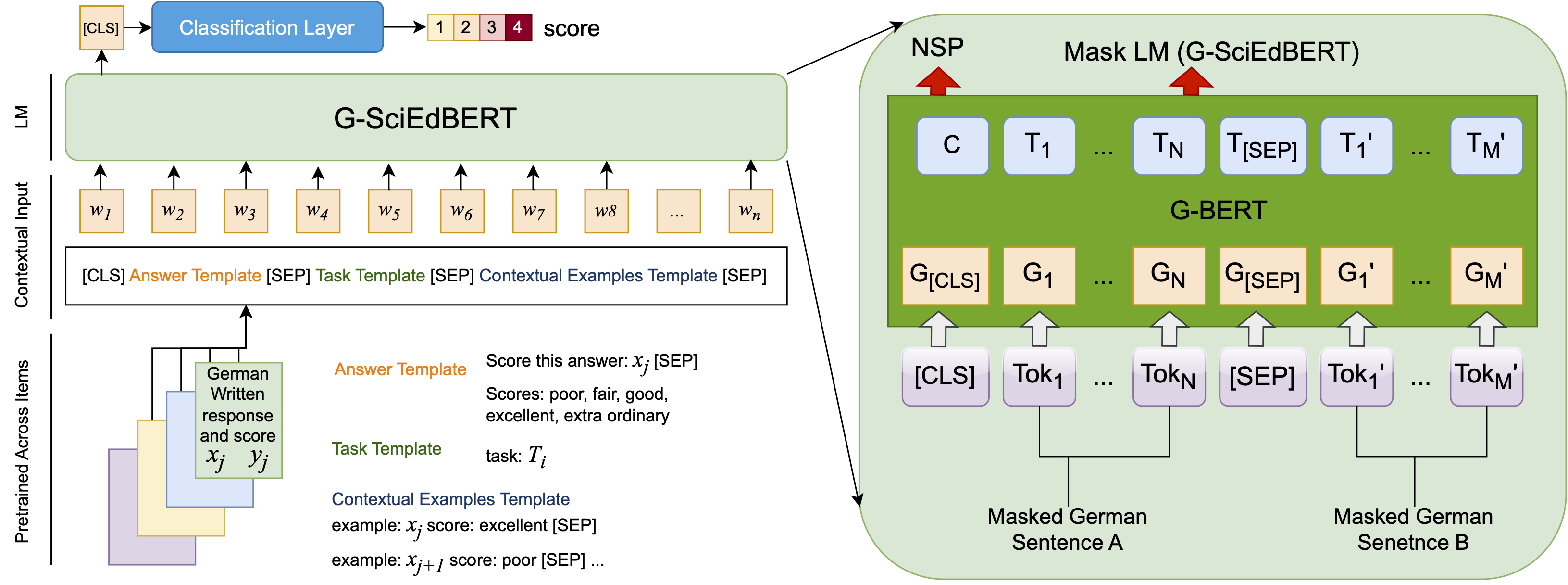

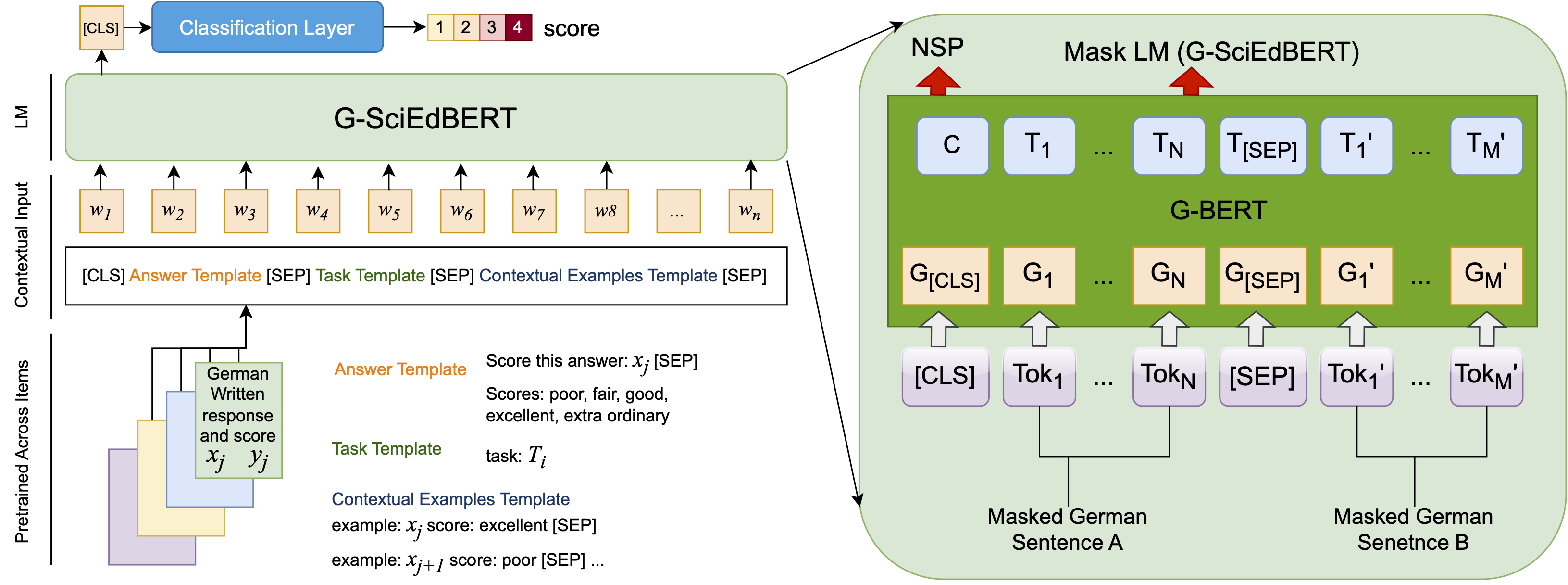

The model is pre-trained on [G-BERT](https://huggingface.co/dbmdz/bert-base-german-uncased?text=Ich+mag+dich.+Ich+liebe+%5BMASK%5D) and the pre-training method can be seen as:

|

| 32 |

|

| 33 |

|

| 34 |

## Usage

|

| 35 |

|

| 36 |

With Transformers >= 2.3 our German BERT models can be loaded like this:

|

|

|

|

| 31 |

The model is pre-trained on [G-BERT](https://huggingface.co/dbmdz/bert-base-german-uncased?text=Ich+mag+dich.+Ich+liebe+%5BMASK%5D) and the pre-training method can be seen as:

|

| 32 |

|

| 33 |

|

| 34 |

+

|

| 35 |

+

## Evaluation Results

|

| 36 |

+

The table below compares G-BERT and G-SciEdBERT on five randomly selected PISA assessment items, along with the average accuracy (quadratic weighted kappa, QWK)

|

| 37 |

+

reported for all datasets combined. G-SciEdBERT outperformed G-BERT on automatic scoring of student-written responses for every item shown.

|

| 38 |

+

Based on the QWK values, the gains range from 4.2 to 13.6 percentage points, with an average increase of 10.0 percentage points (from .7136 to .8137).

|

| 39 |

+

Item S268Q02 saw the largest improvement, 13.6 percentage points (from .757 to .893).

|

| 40 |

+

These findings suggest that G-SciEdBERT is more effective than G-BERT at comprehending and scoring complex science-related writing.
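QWK is the quadratic weighted kappa, an agreement measure between human and model scores that penalizes larger disagreements more heavily than small ones. The block below is a minimal sketch of the standard formula in plain Python. It is not the evaluation code behind these results, and the function name and the example labels are hypothetical.

```python
def quadratic_weighted_kappa(y_true, y_pred, n_labels):
    """Quadratic weighted kappa for integer labels in range(n_labels)."""
    # Confusion matrix: observed[i][j] counts items scored i by the human
    # rater and j by the model.
    observed = [[0] * n_labels for _ in range(n_labels)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1

    n = len(y_true)
    row_totals = [sum(observed[i]) for i in range(n_labels)]
    col_totals = [sum(observed[i][j] for i in range(n_labels))
                  for j in range(n_labels)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n_labels):
        for j in range(n_labels):
            # Quadratic penalty grows with the squared distance between labels.
            weight = (i - j) ** 2 / (n_labels - 1) ** 2
            expected = row_totals[i] * col_totals[j] / n
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    # Assumption: if chance disagreement is zero (all scores fall in one
    # category), treat the raters as agreeing perfectly.
    if weighted_expected == 0:
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


# Hypothetical example: three responses, one scored one level too low.
human_scores = [0, 1, 2]
model_scores = [0, 1, 1]
print(quadratic_weighted_kappa(human_scores, model_scores, n_labels=3))
```

A value of 1 means perfect agreement and 0 means agreement no better than chance, so higher QWK in the table indicates scoring closer to the human raters.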

|

| 41 |

+

|

| 42 |

+

The results of our analysis strongly support the adoption of G-SciEdBERT for the automatic scoring of German-written science responses in large-scale

|

| 43 |

+

assessments such as PISA, given its superior accuracy over the general-purpose G-BERT model.

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

| Item | Training Samples | Testing Samples | Labels | G-BERT | G-SciEdBERT |

|

| 47 |

+

|---------|------------------|-----------------|--------------|--------|-------------|

|

| 48 |

+

| S131Q02 | 487 | 122 | 5 | 0.761 | **0.852** |

|

| 49 |

+

| S131Q04 | 478 | 120 | 5 | 0.683 | **0.725** |

|

| 50 |

+

| S268Q02 | 446 | 112 | 2 | 0.757 | **0.893** |

|

| 51 |

+

| S269Q01 | 508 | 127 | 2 | 0.837 | **0.953** |

|

| 52 |

+

| S269Q03 | 500 | 126 | 4 | 0.702 | **0.802** |

|

| 53 |

+

| Average | 665.95 | 166.49 | 2-5 (min-max) | 0.7136 | **0.8137** |
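The per-item gains discussed above can be rechecked directly from the table. The sketch below copies the QWK values from the table rows; the variable names are hypothetical, and the average gain uses the reported all-dataset averages rather than the mean of the five items shown.

```python
# QWK per item, copied from the table: (G-BERT, G-SciEdBERT).
scores = {
    "S131Q02": (0.761, 0.852),
    "S131Q04": (0.683, 0.725),
    "S268Q02": (0.757, 0.893),
    "S269Q01": (0.837, 0.953),
    "S269Q03": (0.702, 0.802),
}

# Absolute QWK gain per item, rounded to the table's three decimals.
gains = {item: round(new - old, 3) for item, (old, new) in scores.items()}

largest = max(gains, key=gains.get)
smallest = min(gains, key=gains.get)

# Reported averages over all datasets combined (last table row).
average_gain = round(0.8137 - 0.7136, 4)

for item, gain in gains.items():
    print(f"{item}: +{gain:.3f}")
print(f"largest gain: {largest}, smallest gain: {smallest}")
print(f"average gain (all datasets): +{average_gain:.4f}")
```

Multiplying each gain by 100 gives the percentage-point figures quoted in the text.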

|

| 54 |

+

|

| 55 |

+

|

| 56 |

## Usage

|

| 57 |

|

| 58 |

With Transformers >= 2.3 our German BERT models can be loaded like this:

|