Antonio Cheong

committed on

Commit

·

4d7378e

1

Parent(s):

4f15858

structure

- CODE_OF_CONDUCT.md +4 -0

- CONTRIBUTING.md +59 -0

- LICENSE +175 -0

- NOTICE +1 -0

- evaluations.py +100 -0

- main.py +383 -0

- model.py +194 -0

- requirements.txt +11 -0

- run_inference.sh +17 -0

- run_training.sh +15 -0

- utils_data.py +228 -0

- utils_evaluate.py +108 -0

- utils_prompt.py +240 -0

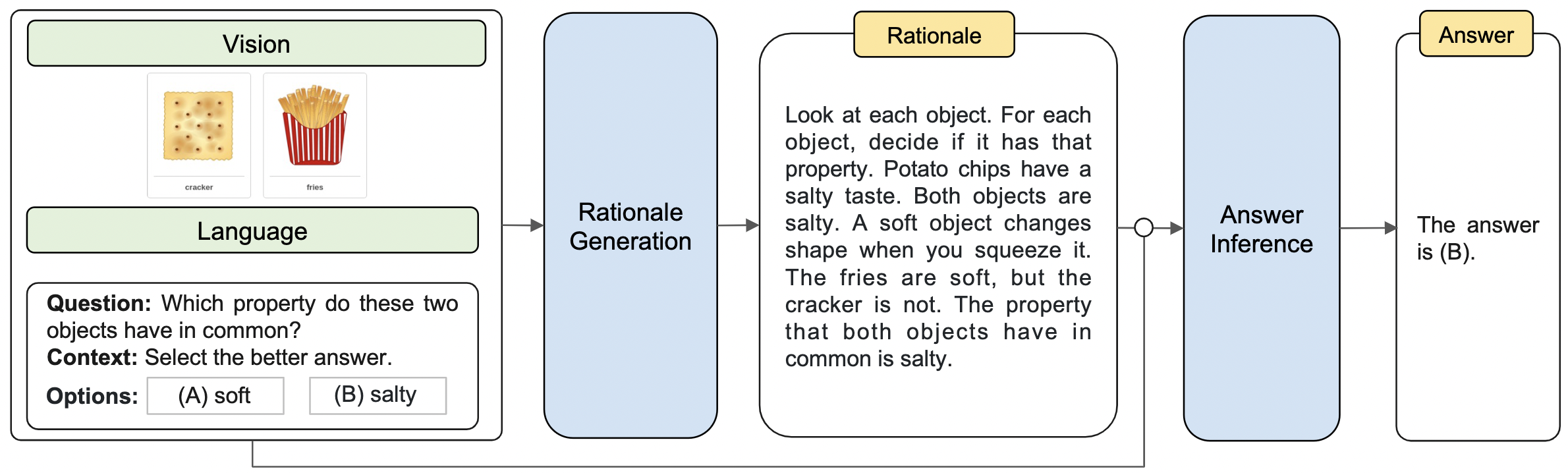

- vision_features/mm-cot.png +0 -0

CODE_OF_CONDUCT.md

ADDED

|

@@ -0,0 +1,4 @@

| 1 |

+

## Code of Conduct

|

| 2 |

+

This project has adopted the [Amazon Open Source Code of Conduct](https://aws.github.io/code-of-conduct).

|

| 3 |

+

For more information see the [Code of Conduct FAQ](https://aws.github.io/code-of-conduct-faq) or contact

|

| 4 |

+

opensource-codeofconduct@amazon.com with any additional questions or comments.

|

CONTRIBUTING.md

ADDED

|

@@ -0,0 +1,59 @@

| 1 |

+

# Contributing Guidelines

|

| 2 |

+

|

| 3 |

+

Thank you for your interest in contributing to our project. Whether it's a bug report, new feature, correction, or additional

|

| 4 |

+

documentation, we greatly value feedback and contributions from our community.

|

| 5 |

+

|

| 6 |

+

Please read through this document before submitting any issues or pull requests to ensure we have all the necessary

|

| 7 |

+

information to effectively respond to your bug report or contribution.

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

## Reporting Bugs/Feature Requests

|

| 11 |

+

|

| 12 |

+

We welcome you to use the GitHub issue tracker to report bugs or suggest features.

|

| 13 |

+

|

| 14 |

+

When filing an issue, please check existing open, or recently closed, issues to make sure somebody else hasn't already

|

| 15 |

+

reported the issue. Please try to include as much information as you can. Details like these are incredibly useful:

|

| 16 |

+

|

| 17 |

+

* A reproducible test case or series of steps

|

| 18 |

+

* The version of our code being used

|

| 19 |

+

* Any modifications you've made relevant to the bug

|

| 20 |

+

* Anything unusual about your environment or deployment

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

## Contributing via Pull Requests

|

| 24 |

+

Contributions via pull requests are much appreciated. Before sending us a pull request, please ensure that:

|

| 25 |

+

|

| 26 |

+

1. You are working against the latest source on the *main* branch.

|

| 27 |

+

2. You check existing open, and recently merged, pull requests to make sure someone else hasn't addressed the problem already.

|

| 28 |

+

3. You open an issue to discuss any significant work - we would hate for your time to be wasted.

|

| 29 |

+

|

| 30 |

+

To send us a pull request, please:

|

| 31 |

+

|

| 32 |

+

1. Fork the repository.

|

| 33 |

+

2. Modify the source; please focus on the specific change you are contributing. If you also reformat all the code, it will be hard for us to focus on your change.

|

| 34 |

+

3. Ensure local tests pass.

|

| 35 |

+

4. Commit to your fork using clear commit messages.

|

| 36 |

+

5. Send us a pull request, answering any default questions in the pull request interface.

|

| 37 |

+

6. Pay attention to any automated CI failures reported in the pull request, and stay involved in the conversation.

|

| 38 |

+

|

| 39 |

+

GitHub provides additional documentation on [forking a repository](https://help.github.com/articles/fork-a-repo/) and

|

| 40 |

+

[creating a pull request](https://help.github.com/articles/creating-a-pull-request/).

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

## Finding contributions to work on

|

| 44 |

+

Looking at the existing issues is a great way to find something to contribute to. As our projects, by default, use the default GitHub issue labels (enhancement/bug/duplicate/help wanted/invalid/question/wontfix), looking at any 'help wanted' issues is a great place to start.

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

## Code of Conduct

|

| 48 |

+

This project has adopted the [Amazon Open Source Code of Conduct](https://aws.github.io/code-of-conduct).

|

| 49 |

+

For more information see the [Code of Conduct FAQ](https://aws.github.io/code-of-conduct-faq) or contact

|

| 50 |

+

opensource-codeofconduct@amazon.com with any additional questions or comments.

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

## Security issue notifications

|

| 54 |

+

If you discover a potential security issue in this project, we ask that you notify AWS/Amazon Security via our [vulnerability reporting page](http://aws.amazon.com/security/vulnerability-reporting/). Please do **not** create a public GitHub issue.

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

## Licensing

|

| 58 |

+

|

| 59 |

+

See the [LICENSE](LICENSE) file for our project's licensing. We will ask you to confirm the licensing of your contribution.

|

LICENSE

ADDED

|

@@ -0,0 +1,175 @@

| 1 |

+

|

| 2 |

+

Apache License

|

| 3 |

+

Version 2.0, January 2004

|

| 4 |

+

http://www.apache.org/licenses/

|

| 5 |

+

|

| 6 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 7 |

+

|

| 8 |

+

1. Definitions.

|

| 9 |

+

|

| 10 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 11 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 12 |

+

|

| 13 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 14 |

+

the copyright owner that is granting the License.

|

| 15 |

+

|

| 16 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 17 |

+

other entities that control, are controlled by, or are under common

|

| 18 |

+

control with that entity. For the purposes of this definition,

|

| 19 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 20 |

+

direction or management of such entity, whether by contract or

|

| 21 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 22 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 23 |

+

|

| 24 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 25 |

+

exercising permissions granted by this License.

|

| 26 |

+

|

| 27 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 28 |

+

including but not limited to software source code, documentation

|

| 29 |

+

source, and configuration files.

|

| 30 |

+

|

| 31 |

+

"Object" form shall mean any form resulting from mechanical

|

| 32 |

+

transformation or translation of a Source form, including but

|

| 33 |

+

not limited to compiled object code, generated documentation,

|

| 34 |

+

and conversions to other media types.

|

| 35 |

+

|

| 36 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 37 |

+

Object form, made available under the License, as indicated by a

|

| 38 |

+

copyright notice that is included in or attached to the work

|

| 39 |

+

(an example is provided in the Appendix below).

|

| 40 |

+

|

| 41 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 42 |

+

form, that is based on (or derived from) the Work and for which the

|

| 43 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 44 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 45 |

+

of this License, Derivative Works shall not include works that remain

|

| 46 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 47 |

+

the Work and Derivative Works thereof.

|

| 48 |

+

|

| 49 |

+

"Contribution" shall mean any work of authorship, including

|

| 50 |

+

the original version of the Work and any modifications or additions

|

| 51 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 52 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 53 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 54 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 55 |

+

means any form of electronic, verbal, or written communication sent

|

| 56 |

+

to the Licensor or its representatives, including but not limited to

|

| 57 |

+

communication on electronic mailing lists, source code control systems,

|

| 58 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 59 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 60 |

+

excluding communication that is conspicuously marked or otherwise

|

| 61 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 62 |

+

|

| 63 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 64 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 65 |

+

subsequently incorporated within the Work.

|

| 66 |

+

|

| 67 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 68 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 69 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 70 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 71 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 72 |

+

Work and such Derivative Works in Source or Object form.

|

| 73 |

+

|

| 74 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 75 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 76 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 77 |

+

(except as stated in this section) patent license to make, have made,

|

| 78 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 79 |

+

where such license applies only to those patent claims licensable

|

| 80 |

+

by such Contributor that are necessarily infringed by their

|

| 81 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 82 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 83 |

+

institute patent litigation against any entity (including a

|

| 84 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 85 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 86 |

+

or contributory patent infringement, then any patent licenses

|

| 87 |

+

granted to You under this License for that Work shall terminate

|

| 88 |

+

as of the date such litigation is filed.

|

| 89 |

+

|

| 90 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 91 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 92 |

+

modifications, and in Source or Object form, provided that You

|

| 93 |

+

meet the following conditions:

|

| 94 |

+

|

| 95 |

+

(a) You must give any other recipients of the Work or

|

| 96 |

+

Derivative Works a copy of this License; and

|

| 97 |

+

|

| 98 |

+

(b) You must cause any modified files to carry prominent notices

|

| 99 |

+

stating that You changed the files; and

|

| 100 |

+

|

| 101 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 102 |

+

that You distribute, all copyright, patent, trademark, and

|

| 103 |

+

attribution notices from the Source form of the Work,

|

| 104 |

+

excluding those notices that do not pertain to any part of

|

| 105 |

+

the Derivative Works; and

|

| 106 |

+

|

| 107 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 108 |

+

distribution, then any Derivative Works that You distribute must

|

| 109 |

+

include a readable copy of the attribution notices contained

|

| 110 |

+

within such NOTICE file, excluding those notices that do not

|

| 111 |

+

pertain to any part of the Derivative Works, in at least one

|

| 112 |

+

of the following places: within a NOTICE text file distributed

|

| 113 |

+

as part of the Derivative Works; within the Source form or

|

| 114 |

+

documentation, if provided along with the Derivative Works; or,

|

| 115 |

+

within a display generated by the Derivative Works, if and

|

| 116 |

+

wherever such third-party notices normally appear. The contents

|

| 117 |

+

of the NOTICE file are for informational purposes only and

|

| 118 |

+

do not modify the License. You may add Your own attribution

|

| 119 |

+

notices within Derivative Works that You distribute, alongside

|

| 120 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 121 |

+

that such additional attribution notices cannot be construed

|

| 122 |

+

as modifying the License.

|

| 123 |

+

|

| 124 |

+

You may add Your own copyright statement to Your modifications and

|

| 125 |

+

may provide additional or different license terms and conditions

|

| 126 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 127 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 128 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 129 |

+

the conditions stated in this License.

|

| 130 |

+

|

| 131 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 132 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 133 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 134 |

+

this License, without any additional terms or conditions.

|

| 135 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 136 |

+

the terms of any separate license agreement you may have executed

|

| 137 |

+

with Licensor regarding such Contributions.

|

| 138 |

+

|

| 139 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 140 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 141 |

+

except as required for reasonable and customary use in describing the

|

| 142 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 143 |

+

|

| 144 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 145 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 146 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 147 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 148 |

+

implied, including, without limitation, any warranties or conditions

|

| 149 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 150 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 151 |

+

appropriateness of using or redistributing the Work and assume any

|

| 152 |

+

risks associated with Your exercise of permissions under this License.

|

| 153 |

+

|

| 154 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 155 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 156 |

+

unless required by applicable law (such as deliberate and grossly

|

| 157 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 158 |

+

liable to You for damages, including any direct, indirect, special,

|

| 159 |

+

incidental, or consequential damages of any character arising as a

|

| 160 |

+

result of this License or out of the use or inability to use the

|

| 161 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 162 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 163 |

+

other commercial damages or losses), even if such Contributor

|

| 164 |

+

has been advised of the possibility of such damages.

|

| 165 |

+

|

| 166 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 167 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 168 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 169 |

+

or other liability obligations and/or rights consistent with this

|

| 170 |

+

License. However, in accepting such obligations, You may act only

|

| 171 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 172 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 173 |

+

defend, and hold each Contributor harmless for any liability

|

| 174 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 175 |

+

of your accepting any such warranty or additional liability.

|

NOTICE

ADDED

|

@@ -0,0 +1 @@

| 1 |

+

Copyright Amazon.com, Inc. or its affiliates. All Rights Reserved.

|

evaluations.py

ADDED

|

@@ -0,0 +1,100 @@

| 1 |

+

'''

|

| 2 |

+

Adapted from https://github.com/lupantech/ScienceQA

|

| 3 |

+

'''

|

| 4 |

+

|

| 5 |

+

import re

|

| 6 |

+

from rouge import Rouge

|

| 7 |

+

from nltk.translate.bleu_score import sentence_bleu

|

| 8 |

+

from sentence_transformers import util

|

| 9 |

+

|

| 10 |

+

########################

|

| 11 |

+

## BLEU

|

| 12 |

+

########################

|

| 13 |

+

def tokenize(text):

|

| 14 |

+

tokens = re.split(r'\s|\.', text)

|

| 15 |

+

tokens = [t for t in tokens if len(t) > 0]

|

| 16 |

+

return tokens

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

def bleu_score(reference, hypothesis, gram):

|

| 20 |

+

reference_tokens = tokenize(reference)

|

| 21 |

+

hypothesis_tokens = tokenize(hypothesis)

|

| 22 |

+

|

| 23 |

+

if gram == 1:

|

| 24 |

+

bleu = sentence_bleu([reference_tokens], hypothesis_tokens, (1., )) # BLEU-1

|

| 25 |

+

elif gram == 2:

|

| 26 |

+

bleu = sentence_bleu([reference_tokens], hypothesis_tokens, (1. / 2., 1. / 2.)) # BLEU-2

|

| 27 |

+

elif gram == 3:

|

| 28 |

+

bleu = sentence_bleu([reference_tokens], hypothesis_tokens, (1. / 3., 1. / 3., 1. / 3.)) # BLEU-3

|

| 29 |

+

elif gram == 4:

|

| 30 |

+

bleu = sentence_bleu([reference_tokens], hypothesis_tokens, (1. / 4., 1. / 4., 1. / 4., 1. / 4.)) # BLEU-4

|

| 31 |

+

|

| 32 |

+

return bleu

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

def caculate_bleu(results, data, gram):

|

| 36 |

+

bleus = []

|

| 37 |

+

for qid, output in results.items():

|

| 38 |

+

prediction = output

|

| 39 |

+

target = data[qid]

|

| 40 |

+

target = target.strip()

|

| 41 |

+

if target == "":

|

| 42 |

+

continue

|

| 43 |

+

bleu = bleu_score(target, prediction, gram)

|

| 44 |

+

bleus.append(bleu)

|

| 45 |

+

|

| 46 |

+

avg_bleu = sum(bleus) / len(bleus)

|

| 47 |

+

|

| 48 |

+

return avg_bleu

|
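The BLEU helpers above reduce to three small steps: split text into tokens, pick uniform n-gram weights, and average the per-question scores. The following is a minimal standard-library sketch of those steps, not the commit's implementation. `uniform_weights` and `mean_or_none` are hypothetical helper names introduced here for illustration, and the scoring call itself (NLTK's `sentence_bleu`) is left out so the block has no third-party dependencies.

```python
import re


def tokenize(text):
    # Same rule as the diff: split on whitespace or a literal period, drop empties.
    return [t for t in re.split(r'\s|\.', text) if len(t) > 0]


def uniform_weights(gram):
    # Hypothetical helper: equal weight on each n-gram order up to `gram`,
    # e.g. gram=2 gives (0.5, 0.5). Rejects orders outside 1..4.
    if gram not in (1, 2, 3, 4):
        raise ValueError(f"gram must be 1, 2, 3 or 4, got {gram!r}")
    return tuple(1.0 / gram for _ in range(gram))


def mean_or_none(values):
    # Hypothetical helper: return None instead of dividing by zero
    # when nothing was scored (for example, every target was empty).
    return sum(values) / len(values) if values else None


if __name__ == "__main__":
    print(tokenize("The cat sat. It purred."))
    print(uniform_weights(2))
    print(mean_or_none([]))
```

The sketch makes two edge cases explicit. In the original `bleu_score`, a `gram` value outside 1 to 4 leaves `bleu` unassigned before `return bleu`. In `caculate_bleu`, the average divides by `len(bleus)` even if every target was skipped as empty.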

| 49 |

+

|

| 50 |

+

|

| 51 |

+

########################

|

| 52 |

+

## Rouge-L

|

| 53 |

+

########################

|

| 54 |

+

def score_rouge(str1, str2):

|

| 55 |

+

rouge = Rouge(metrics=["rouge-l"])

|

| 56 |

+

scores = rouge.get_scores(str1, str2, avg=True)

|

| 57 |

+

rouge_l = scores['rouge-l']['f']

|

| 58 |

+

return rouge_l

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

def caculate_rouge(results, data):

|

| 62 |

+

rouges = []

|

| 63 |

+

for qid, output in results.items():

|

| 64 |

+

prediction = output

|

| 65 |

+

target = data[qid]

|

| 66 |

+

target = target.strip()

|

| 67 |

+

if prediction == "":

|

| 68 |

+

continue

|

| 69 |

+

if target == "":

|

| 70 |

+

continue

|

| 71 |

+

rouge = score_rouge(target, prediction)

|

| 72 |

+

rouges.append(rouge)

|

| 73 |

+

|

| 74 |

+

avg_rouge = sum(rouges) / len(rouges)

|

| 75 |

+

return avg_rouge

|
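To make the ROUGE-L score used above more concrete, here is a simplified, sentence-level sketch of the idea behind it: an F-measure built from the longest common subsequence (LCS) of the two token sequences. This is an illustration under stated assumptions, not the `rouge` package's code. The names `lcs_length` and `rouge_l_f` are hypothetical, tokenization is plain whitespace splitting, and the package may tokenize and aggregate differently, so the values need not match what `score_rouge` returns.

```python
def lcs_length(a, b):
    # Dynamic-programming longest common subsequence over two token lists,
    # keeping only the previous row to save memory.
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f(reference, hypothesis):
    # Simplified ROUGE-L F-measure: harmonic mean of LCS precision and recall.
    ref, hyp = reference.split(), hypothesis.split()
    if not ref or not hyp:
        return 0.0
    lcs = lcs_length(ref, hyp)
    if lcs == 0:
        return 0.0
    precision = lcs / len(hyp)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(rouge_l_f("the cat sat on the mat", "the cat on the mat"))
```

In this sketch the F-measure is symmetric in precision and recall, so swapping the reference and the hypothesis leaves the result unchanged.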

| 76 |

+

|

| 77 |

+

|

| 78 |

+

########################

|

| 79 |

+

## Sentence Similarity

|

| 80 |

+

########################

|

| 81 |

+

def similariry_score(str1, str2, model):

|

| 82 |

+

# compute an embedding for each input string

|

| 83 |

+

embedding_1 = model.encode(str1, convert_to_tensor=True)

|

| 84 |

+

embedding_2 = model.encode(str2, convert_to_tensor=True)

|

| 85 |

+

score = util.pytorch_cos_sim(embedding_1, embedding_2).item()

|

| 86 |

+

return score

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

def caculate_similariry(results, data, model):

|

| 90 |

+

scores = []

|

| 91 |

+

for qid, output in results.items():

|

| 92 |

+

prediction = output

|

| 93 |

+

target = data[qid]

|

| 94 |

+

target = target.strip()

|

| 95 |

+

|

| 96 |

+

score = similariry_score(target, prediction, model)

|

| 97 |

+

scores.append(score)

|

| 98 |

+

|

| 99 |

+

avg_score = sum(scores) / len(scores)

|

| 100 |

+

return avg_score

|
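The similarity metric above encodes both strings with a sentence-embedding model and takes the cosine similarity of the two vectors. The following is a minimal sketch of that last step on plain Python lists, using only the standard library. `cosine_similarity` is a hypothetical helper written for illustration, and returning 0.0 for a zero-length vector is an assumption of this sketch rather than documented library behavior.

```python
import math


def cosine_similarity(u, v):
    # Cosine of the angle between two equal-length vectors:
    # dot(u, v) / (||u|| * ||v||).
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        # Assumption of this sketch: treat a zero vector as having no similarity.
        return 0.0
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    print(cosine_similarity([1.0, 2.0], [2.0, 4.0]))
    print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))
```

Scores range from -1 (opposite direction) to 1 (same direction), so the average returned by `caculate_similariry` falls in the same range.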

main.py

ADDED

|

@@ -0,0 +1,383 @@

| 1 |

+

import os

|

| 2 |

+

import numpy as np

|

| 3 |

+

import torch

|

| 4 |

+

import os

|

| 5 |

+

import re

|

| 6 |

+

import json

|

| 7 |

+

import argparse

|

| 8 |

+

import random

|

| 9 |

+

from transformers import T5Tokenizer, DataCollatorForSeq2Seq, Seq2SeqTrainingArguments, Seq2SeqTrainer, T5ForConditionalGeneration

|

| 10 |

+

from model import T5ForConditionalGeneration, T5ForMultimodalGeneration

|

| 11 |

+

from utils_data import img_shape, load_data_std, load_data_img, ScienceQADatasetStd, ScienceQADatasetImg

|

| 12 |

+

from utils_prompt import *

|

| 13 |

+

from utils_evaluate import get_scores

|

| 14 |

+

from rich.table import Column, Table

|

| 15 |

+

from rich import box

|

| 16 |

+

from rich.console import Console

|

| 17 |

+

console = Console(record=True)

|

| 18 |

+

from torch import cuda

|

| 19 |

+

import nltk

|

| 20 |

+

import evaluate

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

def parse_args():

|

| 24 |

+

parser = argparse.ArgumentParser()

|

| 25 |

+

parser.add_argument('--data_root', type=str, default='data')

|

| 26 |

+

parser.add_argument('--output_dir', type=str, default='experiments')

|

| 27 |

+

parser.add_argument('--model', type=str, default='allenai/unifiedqa-t5-base')

|

| 28 |

+

parser.add_argument('--options', type=list, default=["A", "B", "C", "D", "E"])

|

| 29 |

+

parser.add_argument('--epoch', type=int, default=20)

|

| 30 |

+

parser.add_argument('--lr', type=float, default=5e-5)

|

| 31 |

+

parser.add_argument('--bs', type=int, default=16)

|

| 32 |

+

parser.add_argument('--input_len', type=int, default=512)

|

| 33 |

+

parser.add_argument('--output_len', type=int, default=64)

|

| 34 |

+

parser.add_argument('--eval_bs', type=int, default=16)

|

| 35 |

+

parser.add_argument('--eval_acc', type=int, default=None, help='evaluate accumulation step')

|

| 36 |

+

parser.add_argument('--train_split', type=str, default='train', choices=['train', 'trainval', 'minitrain'])

|

| 37 |

+

parser.add_argument('--val_split', type=str, default='val', choices=['test', 'val', 'minival'])

|

| 38 |

+

parser.add_argument('--test_split', type=str, default='test', choices=['test', 'minitest'])

|

| 39 |

+

|

| 40 |

+

parser.add_argument('--use_generate', action='store_true', help='only for baseline to improve inference speed')

|

| 41 |

+

parser.add_argument('--final_eval', action='store_true', help='only evaluate the model at the final epoch')

|

| 42 |

+

parser.add_argument('--user_msg', type=str, default="baseline", help='experiment type in the save_dir')

|

| 43 |

+

parser.add_argument('--img_type', type=str, default=None, choices=['detr', 'clip', 'resnet'], help='type of image features')

|

| 44 |

+

parser.add_argument('--eval_le', type=str, default=None, help='generated rationale for the dev set')

|

| 45 |

+

parser.add_argument('--test_le', type=str, default=None, help='generated rationale for the test set')

|

| 46 |

+

parser.add_argument('--evaluate_dir', type=str, default=None, help='the directory of model for evaluation')

|

| 47 |

+

parser.add_argument('--caption_file', type=str, default='data/captions.json')

|

| 48 |

+

parser.add_argument('--use_caption', action='store_true', help='use image captions or not')

|

| 49 |

+

parser.add_argument('--prompt_format', type=str, default='QCM-A', help='prompt format template',

|

| 50 |

+

choices=['QCM-A', 'QCM-LE', 'QCMG-A', 'QCM-LEA', 'QCM-ALE'])

|

| 51 |

+

parser.add_argument('--seed', type=int, default=42, help='random seed')

|

| 52 |

+

|

| 53 |

+

args = parser.parse_args()

|

| 54 |

+

return args

|
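One detail of `parse_args` is worth illustrating: `--options` is declared with `type=list`. argparse applies `type` to the raw command-line string, so `list("AB")` yields `['A', 'B']`, one element per character. The list default is not a string, so it is used as-is. The sketch below contrasts that behavior with `nargs='+'`, one possible alternative. The two builder functions are hypothetical and are not part of the commit.

```python
import argparse

DEFAULT_OPTIONS = ["A", "B", "C", "D", "E"]


def build_parser_type_list():
    # Mirrors the declaration in the diff: the raw string is passed to list().
    parser = argparse.ArgumentParser()
    parser.add_argument('--options', type=list, default=DEFAULT_OPTIONS)
    return parser


def build_parser_nargs():
    # Hypothetical alternative: collect one or more space-separated values.
    parser = argparse.ArgumentParser()
    parser.add_argument('--options', nargs='+', default=DEFAULT_OPTIONS)
    return parser


if __name__ == "__main__":
    # Each character of the string becomes an element, including the comma.
    print(build_parser_type_list().parse_args(['--options', 'AB,C']).options)
    # Each space-separated value becomes an element.
    print(build_parser_nargs().parse_args(['--options', 'A', 'B']).options)
```

With the default left untouched, both parsers return the same five-letter list, so the difference only appears when `--options` is passed on the command line.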

| 55 |

+

|

| 56 |

+

def T5Trainer(

|

| 57 |

+

dataframe, args,

|

| 58 |

+

):

|

| 59 |

+

torch.manual_seed(args.seed) # pytorch random seed

|

| 60 |

+

np.random.seed(args.seed) # numpy random seed

|

| 61 |

+

torch.backends.cudnn.deterministic = True

|

| 62 |

+

|

| 63 |

+

if args.evaluate_dir is not None:

|

| 64 |

+

args.model = args.evaluate_dir

|

| 65 |

+

|

| 66 |

+

tokenizer = T5Tokenizer.from_pretrained(args.model)

|

| 67 |

+

|

| 68 |

+

console.log(f"""[Model]: Loading {args.model}...\n""")

|

| 69 |

+

console.log(f"[Data]: Reading data...\n")

|

| 70 |

+

problems = dataframe['problems']

|

| 71 |

+

qids = dataframe['qids']

|

| 72 |

+

train_qids = qids['train']

|

| 73 |

+

test_qids = qids['test']

|

| 74 |

+

val_qids = qids['val']

|

| 75 |

+

|

| 76 |

+

if args.evaluate_dir is not None:

|

| 77 |

+

save_dir = args.evaluate_dir

|

| 78 |

+

else:

|

| 79 |

+

model_name = args.model.replace("/","-")

|

| 80 |

+

gpu_count = torch.cuda.device_count()

|

| 81 |

+

save_dir = f"{args.output_dir}/{args.user_msg}_{model_name}_{args.img_type}_{args.prompt_format}_lr{args.lr}_bs{args.bs * gpu_count}_op{args.output_len}_ep{args.epoch}"

|

| 82 |

+

if not os.path.exists(save_dir):

|

| 83 |

+

os.mkdir(save_dir)

|

| 84 |

+

|

| 85 |

+

padding_idx = tokenizer.pad_token_id

|

| 86 |

+

if args.img_type is not None:

|

| 87 |

+

patch_size = img_shape[args.img_type]

|

| 88 |

+

model = T5ForMultimodalGeneration.from_pretrained(args.model, patch_size=patch_size, padding_idx=padding_idx, save_dir=save_dir)

|

| 89 |

+

name_maps = dataframe['name_maps']

|

| 90 |

+

image_features = dataframe['image_features']

|

| 91 |

+

train_set = ScienceQADatasetImg(

|

| 92 |

+

problems,

|

| 93 |

+

train_qids,

|

| 94 |

+

name_maps,

|

| 95 |

+

tokenizer,

|

| 96 |

+

args.input_len,

|

| 97 |

+

args.output_len,

|

| 98 |

+

args,

|

| 99 |

+

image_features,

|

| 100 |

+

)

|

| 101 |

+

eval_set = ScienceQADatasetImg(

|

| 102 |

+

problems,

|

| 103 |

+

val_qids,

|

| 104 |

+

name_maps,

|

| 105 |

+

tokenizer,

|

| 106 |

+

args.input_len,

|

| 107 |

+

args.output_len,

|

| 108 |

+

args,

|

| 109 |

+

image_features,

|

| 110 |

+

args.eval_le,

|

| 111 |

+

)

|

| 112 |

+

test_set = ScienceQADatasetImg(

|

| 113 |

+

problems,

|

| 114 |

+

test_qids,

|

| 115 |

+

name_maps,

|

| 116 |

+

tokenizer,

|

| 117 |

+

args.input_len,

|

| 118 |

+

args.output_len,

|

| 119 |

+

args,

|

| 120 |

+

image_features,

|

| 121 |

+

args.test_le,

|

| 122 |

+

)

|

| 123 |

+

else:

|

| 124 |

+

model = T5ForConditionalGeneration.from_pretrained(args.model)

|

| 125 |

+

train_set = ScienceQADatasetStd(

|

| 126 |

+

problems,

|

| 127 |

+

train_qids,

|

| 128 |

+

tokenizer,

|

| 129 |

+

args.input_len,

|

| 130 |

+

args.output_len,

|

| 131 |

+

args,

|

| 132 |

+

)

|

| 133 |

+

eval_set = ScienceQADatasetStd(

|

| 134 |

+

problems,

|

| 135 |

+

val_qids,

|

| 136 |

+

tokenizer,

|

| 137 |

+

args.input_len,

|

| 138 |

+

args.output_len,

|

| 139 |

+

args,

|

| 140 |

+

args.eval_le,

|

| 141 |

+

)

|

| 142 |

+

|

| 143 |

+

test_set = ScienceQADatasetStd(

|

| 144 |

+

problems,

|

| 145 |

+

test_qids,

|

| 146 |

+

tokenizer,

|

| 147 |

+

args.input_len,

|

| 148 |

+

args.output_len,

|

| 149 |

+

args,

|

| 150 |

+

args.test_le,

|

| 151 |

+

)

|

| 152 |

+

|

| 153 |

+

datacollator = DataCollatorForSeq2Seq(tokenizer)

|

| 154 |

+

print("model parameters: ", model.num_parameters())

|

| 155 |

+

def extract_ans(ans):

|

| 156 |

+

pattern = re.compile(r'The answer is \(([A-Z])\)')

|

| 157 |

+

res = pattern.findall(ans)

|

| 158 |

+

|

| 159 |

+

if len(res) == 1:

|

| 160 |

+

answer = res[0] # 'A', 'B', ...

|

| 161 |

+

else:

|

| 162 |

+

answer = "FAILED"

|

| 163 |

+

return answer

|

| 164 |

+

|

| 165 |

+

# accuracy for answer inference

|

| 166 |

+

def compute_metrics_acc(eval_preds):

|

| 167 |

+

if args.use_generate:

|

| 168 |

+

preds, targets = eval_preds

|

| 169 |

+

if isinstance(preds, tuple):

|

| 170 |

+

preds = preds[0]

|

| 171 |

+

else:

|

| 172 |

+

preds = eval_preds.predictions[0]

|

| 173 |

+

targets = eval_preds.label_ids

|

| 174 |

+

preds = preds.argmax(axis=2)

|

| 175 |

+

preds = tokenizer.batch_decode(preds, skip_special_tokens=True, clean_up_tokenization_spaces=True)

|

| 176 |

+

targets = tokenizer.batch_decode(targets, skip_special_tokens=True, clean_up_tokenization_spaces=True)

|

| 177 |

+

correct = 0

|

| 178 |

+

assert len(preds) == len(targets)

|

| 179 |

+

for idx, pred in enumerate(preds):

|

| 180 |

+

reference = targets[idx]

|

| 181 |

+

reference = extract_ans(reference)

|

| 182 |

+

extract_pred = extract_ans(pred)

|

| 183 |

+

best_option = extract_pred

|

| 184 |

+

if reference == best_option:

|

| 185 |

+

correct +=1

|

| 186 |

+

return {'accuracy': 1.0*correct/len(targets)}

|

| 187 |

+

|

| 188 |

+

# ROUGE-L for rationale generation

|

| 189 |

+

metric = evaluate.load("rouge")

|

| 190 |

+

def postprocess_text(preds, labels):

|

| 191 |

+

preds = [pred.strip() for pred in preds]

|

| 192 |

+

labels = [label.strip() for label in labels]

|

| 193 |

+

preds = ["\n".join(nltk.sent_tokenize(pred)) for pred in preds]

|

| 194 |

+

labels = ["\n".join(nltk.sent_tokenize(label)) for label in labels]

|

| 195 |

+

return preds, labels

|

| 196 |

+

|

| 197 |

+

def compute_metrics_rougel(eval_preds):

|

| 198 |

+

if args.use_generate:

|

| 199 |

+

preds, targets = eval_preds

|

| 200 |

+

if isinstance(preds, tuple):

|

| 201 |

+

preds = preds[0]

|

| 202 |

+

else:

|

| 203 |

+

preds = eval_preds.predictions[0]

|

| 204 |

+

targets = eval_preds.label_ids

|

| 205 |

+

preds = preds.argmax(axis=2)

|

| 206 |

+

preds = tokenizer.batch_decode(preds, skip_special_tokens=True, clean_up_tokenization_spaces=True)

|

| 207 |

+

targets = tokenizer.batch_decode(targets, skip_special_tokens=True, clean_up_tokenization_spaces=True)

|

| 208 |

+

|

| 209 |

+

decoded_preds, decoded_labels = postprocess_text(preds, targets)

|

| 210 |

+

|

| 211 |

+

result = metric.compute(predictions=decoded_preds, references=decoded_labels, use_stemmer=True)

|

| 212 |

+

result = {k: round(v * 100, 4) for k, v in result.items()}

|

| 213 |

+

prediction_lens = [np.count_nonzero(pred != tokenizer.pad_token_id) for pred in preds]

|

| 214 |

+

result["gen_len"] = np.mean(prediction_lens)

|

| 215 |

+

return result

|

| 216 |

+

|

| 217 |

+

# only use the last model for evaluation to save time

|

| 218 |

+

if args.final_eval:

|

| 219 |

+

training_args = Seq2SeqTrainingArguments(

|

| 220 |

+

save_dir,

|

| 221 |

+

do_train=args.evaluate_dir is None,

|

| 222 |

+

do_eval=False,

|

| 223 |

+

evaluation_strategy="no",

|

| 224 |

+

logging_strategy="steps",

|

| 225 |

+

save_strategy="epoch",

|

| 226 |

+

save_total_limit = 2,

|

| 227 |

+

learning_rate= args.lr,

|

| 228 |

+

eval_accumulation_steps=args.eval_acc,

|

| 229 |

+

per_device_train_batch_size=args.bs,

|

| 230 |

+

per_device_eval_batch_size=args.eval_bs,

|

| 231 |

+

weight_decay=0.01,

|

| 232 |

+

num_train_epochs=args.epoch,

|

| 233 |

+

predict_with_generate=args.use_generate,

|

| 234 |

+

report_to="none",

|

| 235 |

+

)

|

| 236 |

+

# evaluate at each epoch

|

| 237 |

+

else:

|

| 238 |

+

training_args = Seq2SeqTrainingArguments(

|

| 239 |

+

save_dir,

|

| 240 |

+

do_train=args.evaluate_dir is None,

|

| 241 |

+

do_eval=True,

|

| 242 |

+

evaluation_strategy="epoch",

|

| 243 |

+

logging_strategy="steps",

|

| 244 |

+

save_strategy="epoch",

|

| 245 |

+

save_total_limit = 2,

|

| 246 |

+

learning_rate= args.lr,

|

| 247 |

+

eval_accumulation_steps=args.eval_acc,

|

| 248 |

+

per_device_train_batch_size=args.bs,

|

| 249 |

+

per_device_eval_batch_size=args.eval_bs,

|

| 250 |

+

weight_decay=0.01,

|

| 251 |

+

num_train_epochs=args.epoch,

|

| 252 |

+

metric_for_best_model="accuracy" if args.prompt_format != "QCM-LE" else "rougeL",

|

| 253 |

+

predict_with_generate=args.use_generate,

|

| 254 |

+

load_best_model_at_end=True,

|

| 255 |

+

report_to="none",

|

| 256 |

+

)

|

| 257 |

+

|

| 258 |

+

trainer = Seq2SeqTrainer(

|

| 259 |

+

model=model,

|

| 260 |

+

args=training_args,

|

| 261 |

+

train_dataset=train_set,

|

| 262 |

+

eval_dataset=eval_set,

|

| 263 |

+

data_collator=datacollator,

|

| 264 |

+

tokenizer=tokenizer,

|

| 265 |

+

compute_metrics = compute_metrics_acc if args.prompt_format != "QCM-LE" else compute_metrics_rougel

|

| 266 |

+

)

|

| 267 |

+

|

| 268 |

+

if args.evaluate_dir is None:

|

| 269 |

+

trainer.train()

|

| 270 |

+

trainer.save_model(save_dir)

|

| 271 |

+

|

| 272 |

+

metrics = trainer.evaluate(eval_dataset = test_set)

|

| 273 |

+

trainer.log_metrics("test", metrics)

|

| 274 |

+

trainer.save_metrics("test", metrics)

|

| 275 |

+

|

| 276 |

+

predict_results = trainer.predict(test_dataset=test_set, max_length=args.output_len)

|

| 277 |

+

if trainer.is_world_process_zero():

|

| 278 |

+

if args.use_generate:

|

| 279 |

+

preds, targets = predict_results.predictions, predict_results.label_ids

|

| 280 |

+

else:

|

| 281 |

+

preds = predict_results.predictions[0]

|

| 282 |

+

targets = predict_results.label_ids

|

| 283 |

+

preds = preds.argmax(axis=2)

|

| 284 |

+

|

| 285 |

+

preds = tokenizer.batch_decode(

|

| 286 |

+

preds, skip_special_tokens=True, clean_up_tokenization_spaces=True

|

| 287 |

+

)

|

| 288 |

+

targets = tokenizer.batch_decode(

|

| 289 |

+

targets, skip_special_tokens=True, clean_up_tokenization_spaces=True

|

| 290 |

+

)

|

| 291 |

+

|

| 292 |

+

results_ans = {}

|

| 293 |

+

results_rationale = {}

|

| 294 |

+

results_reference = {}

|

| 295 |

+

|

| 296 |

+

num_fail = 0

|

| 297 |

+

for idx, qid in enumerate(test_qids):

|

| 298 |

+

pred = preds[int(idx)]

|

| 299 |

+

ref = targets[int(idx)]

|

| 300 |

+

extract_pred = extract_ans(pred)

|

| 301 |

+

if extract_pred != "FAILED":

|

| 302 |

+

if extract_pred in args.options:

|

| 303 |

+

extract_pred = args.options.index(extract_pred)

|

| 304 |

+

else:

|

| 305 |

+

extract_pred = random.choice(range(len(args.options))) # letter not among the options: randomly choose one

|

| 306 |

+

else:

|

| 307 |

+

num_fail += 1

|

| 308 |

+

extract_pred = random.choice(range(len(args.options))) # extraction failed: randomly choose one option

|

| 309 |

+

results_ans[str(qid)] = extract_pred

|

| 310 |

+

results_rationale[str(qid)] = pred

|

| 311 |

+

results_reference[str(qid)] = ref

|

| 312 |

+

|

| 313 |

+

scores = get_scores(results_ans, results_rationale, results_reference, os.path.join(args.data_root, "scienceqa/problems.json"))

|

| 314 |

+

preds = [pred.strip() for pred in preds]

|

| 315 |

+

output_data = {

|

| 316 |

+

"num_fail": num_fail,

|

| 317 |

+

"scores": scores,

|

| 318 |

+

"preds": preds,

|

| 319 |

+

"labels": targets}

|

| 320 |

+

output_prediction_file = os.path.join(save_dir,"predictions_ans_test.json")

|

| 321 |

+

with open(output_prediction_file, "w") as writer:

|

| 322 |

+

writer.write(json.dumps(output_data, indent=4))

|

| 323 |

+

|

| 324 |

+

# generate the rationale for the eval set

|

| 325 |

+

if args.prompt_format == "QCM-LE":

|

| 326 |

+

torch.cuda.empty_cache()

|

| 327 |

+

del predict_results, preds, targets

|

| 328 |

+

predict_results = trainer.predict(test_dataset=eval_set, max_length=args.output_len)

|

| 329 |

+

if trainer.is_world_process_zero():

|

| 330 |

+

if args.use_generate:

|

| 331 |

+

preds, targets = predict_results.predictions, predict_results.label_ids

|

| 332 |

+

else:

|

| 333 |

+

preds = predict_results.predictions[0]

|

| 334 |

+

targets = predict_results.label_ids

|

| 335 |

+

preds = preds.argmax(axis=2)

|

| 336 |

+

|

| 337 |

+

preds = tokenizer.batch_decode(

|

| 338 |

+

preds, skip_special_tokens=True, clean_up_tokenization_spaces=True

|

| 339 |

+

)

|

| 340 |

+

targets = tokenizer.batch_decode(

|

| 341 |

+

targets, skip_special_tokens=True, clean_up_tokenization_spaces=True

|

| 342 |

+

)

|

| 343 |

+

preds = [pred.strip() for pred in preds]

|

| 344 |

+

output_data = {"preds": preds,

|

| 345 |

+

"labels": targets}

|

| 346 |

+

output_prediction_file = os.path.join(save_dir,"predictions_ans_eval.json")

|

| 347 |

+

with open(output_prediction_file, "w") as writer:

|

| 348 |

+

writer.write(json.dumps(output_data, indent=4))

|

| 349 |

+

|

| 350 |

+

|

| 351 |

+

if __name__ == '__main__':

|

| 352 |

+

|

| 353 |

+

# training logger to log training progress

|

| 354 |

+

training_logger = Table(

|

| 355 |

+

Column("Epoch", justify="center"),

|

| 356 |

+

Column("Steps", justify="center"),

|

| 357 |

+

Column("Loss", justify="center"),

|

| 358 |

+

title="Training Status",

|

| 359 |

+

pad_edge=False,

|

| 360 |

+

box=box.ASCII,

|

| 361 |

+

)

|

| 362 |

+

|

| 363 |

+

args = parse_args()

|

| 364 |

+

print("args",args)

|

| 365 |

+

print('====Input Arguments====')

|

| 366 |

+

print(json.dumps(vars(args), indent=2, sort_keys=False))

|

| 367 |

+

|

| 368 |

+

random.seed(args.seed)

|

| 369 |

+

|

| 370 |

+

if not os.path.exists(args.output_dir):

|

| 371 |

+

os.mkdir(args.output_dir)

|

| 372 |

+

|

| 373 |

+

if args.img_type is not None:

|

| 374 |

+

problems, qids, name_maps, image_features = load_data_img(args) # problems, question ids per split, name maps, image features

|

| 375 |

+

dataframe = {'problems':problems, 'qids':qids, 'name_maps': name_maps, 'image_features': image_features}

|

| 376 |

+

else:

|

| 377 |

+

problems, qids = load_data_std(args) # problems, question ids per split

|

| 378 |

+

dataframe = {'problems':problems, 'qids':qids}

|

| 379 |

+

|

| 380 |

+

T5Trainer(

|

| 381 |

+

dataframe=dataframe,

|

| 382 |

+

args = args

|

| 383 |

+

)

|
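The answer-extraction step in main.py above can be tried in isolation. The following is a minimal sketch, not the repository's code: `extract_ans` mirrors the regex used in main.py, while `to_option_index`, the `options` list (standing in for `args.options`, whose default is not shown in this diff), and the seeded `rng` are hypothetical names introduced only for illustration.

```python
import random
import re

# Same pattern as in main.py: a single capital letter inside "The answer is (X)".
ANSWER_PATTERN = re.compile(r'The answer is \(([A-Z])\)')


def extract_ans(text):
    """Return the answer letter, or "FAILED" unless exactly one match is found."""
    matches = ANSWER_PATTERN.findall(text)
    return matches[0] if len(matches) == 1 else "FAILED"


def to_option_index(letter, options, rng):
    """Hypothetical helper: map a letter to its index, else pick a random index."""
    if letter in options:
        return options.index(letter)
    # Covers both "FAILED" and letters outside the option list.
    return rng.randrange(len(options))


# Assumed stand-in for args.options; the real default is defined elsewhere.
options = ["A", "B", "C", "D", "E"]
rng = random.Random(42)  # local generator so the fallback is reproducible

letter = extract_ans("Solution: plants need light. The answer is (B).")
index = to_option_index(letter, options, rng)
```

Note that an output containing the phrase twice is treated as a failure, since the check requires exactly one match. A failed extraction is then scored as a random guess, which is why main.py also reports `num_fail` alongside the scores.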

model.py

ADDED

|

@@ -0,0 +1,194 @@

|


| 1 |

+

'''

|

| 2 |

+

Adapted from https://github.com/huggingface/transformers

|

| 3 |

+

'''

|

| 4 |