Update README.md


README.md


---
license: apache-2.0
datasets:
- klue/klue
language:
- ko
metrics:
- f1
- accuracy
- pearsonr
---

# RoBERTa-base Korean

## Model Description

- **Architecture**: RobertaForMaskedLM
- **Model size**: hidden size 256, 8 hidden layers, 8 attention heads
- **max_position_embeddings**: 514
- **intermediate_size**: 2,048
- **vocab_size**: 1,428
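To give a rough sense of how small this configuration is, the sketch below counts the encoder's parameters from the values listed above. It assumes the standard Hugging Face RoBERTa layout (biased Q/K/V/output projections, a two-layer feed-forward block, and a LayerNorm after attention and after the feed-forward block) and a token-type vocabulary of 1. Those assumptions and the helper name `roberta_encoder_params` are illustrative and do not come from this README. The count excludes the pooler and the masked-LM head.

```python
def roberta_encoder_params(vocab_size: int, hidden: int, layers: int,
                           intermediate: int, max_positions: int,
                           type_vocab: int = 1) -> int:
    """Approximate RoBERTa encoder parameter count (assumed layout; no pooler, no MLM head)."""
    # Embedding tables (word, position, token type) plus the embedding LayerNorm
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + 2 * hidden
    # Self-attention: Q, K, V and output projections (weight + bias each) plus LayerNorm
    attention = 4 * (hidden * hidden + hidden) + 2 * hidden
    # Feed-forward: hidden -> intermediate -> hidden (with biases) plus LayerNorm
    feed_forward = 2 * hidden * intermediate + intermediate + hidden + 2 * hidden
    return embeddings + layers * (attention + feed_forward)


# Values taken from the Model Description list above
total = roberta_encoder_params(vocab_size=1428, hidden=256, layers=8,
                               intermediate=2048, max_positions=514)
print(f"{total:,} parameters")  # 11,018,496 parameters (about 11M)
```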

## Training Data

The following datasets were used:

- **AIHUB**: SNS posts, YouTube comments, and sentences from books
- **Other**: Namuwiki and Korean Wikipedia

The combined data totals **about 11GB** (**4B tokens**).

## Training Details

- **BATCH_SIZE**: 112 (per GPU)
- **ACCUMULATE**: 36
- **Total_BATCH_SIZE**: 8,064
- **MAX_STEPS**: 12,500
- **TRAIN_STEPS * BATCH_SIZE**: **~100M** (12,500 × 8,064 = 100.8M)
- **WARMUP_STEPS**: 2,400
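The batch figures above fit together as shown in the sketch below. The README does not state the GPU count. The sketch infers it by dividing the total batch size by the per-GPU batch times gradient accumulation, so treat `num_gpus` as an inference rather than a reported value.

```python
# Values taken from the Training Details list above
per_gpu_batch = 112
accumulate = 36
total_batch = 8_064
max_steps = 12_500
warmup_steps = 2_400

# Samples contributing to one optimizer step on a single GPU
per_gpu_effective = per_gpu_batch * accumulate

# Inferred, not stated in the README: GPUs needed to reach the total batch size
num_gpus = total_batch // per_gpu_effective
assert per_gpu_effective * num_gpus == total_batch

# Total samples seen over training, and the share of steps spent in warmup
samples_seen = max_steps * total_batch
warmup_fraction = warmup_steps / max_steps

print(per_gpu_effective, num_gpus, f"{samples_seen:,}", warmup_fraction)
# 4032 2 100,800,000 0.192
```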


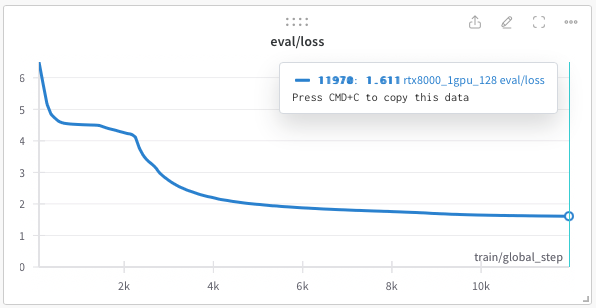

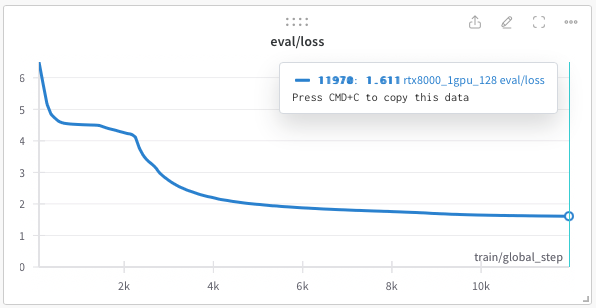

## Performance Evaluation

- **Performance was evaluated with the KLUE benchmark tests.**
- Because the model is much smaller than klue-roberta-base, its scores are lower. However, the model with hidden size 512 performed well relative to its size.

## Usage

### Because the tokenizer works at the syllable level rather than with WordPiece, you must use SyllableTokenizer instead of AutoTokenizer.

### (Import and use the syllabletokenizer.py file provided in this repo.)

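```python
from transformers import AutoModel
from syllabletokenizer import SyllableTokenizer

# Placeholders: the original model ID/path and tokenizer kwargs are not shown here; substitute your own.
model = AutoModel.from_pretrained("<model-repo-or-path>")
tokenizer_kwargs = {}
tokenizer = SyllableTokenizer(vocab_file='vocab.json', **tokenizer_kwargs)

# Convert text to tokens and run the model ("여기에 한국어 텍스트 입력" = "enter Korean text here")
inputs = tokenizer("여기에 한국어 텍스트 입력", return_tensors="pt")
outputs = model(**inputs)
```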
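The following example makes the syllable-versus-WordPiece distinction concrete. A syllable-level tokenizer treats each Hangul syllable block as one token instead of learning multi-character subword pieces. The sketch below is a hypothetical stand-in written for illustration and is not the repo's `syllabletokenizer.py`. The function names, the toy vocabulary, the `[UNK]` fallback, and the choice to drop whitespace are all assumptions.

```python
from __future__ import annotations


def syllable_tokenize(text: str) -> list[str]:
    """Hypothetical: one token per non-whitespace character (each Hangul syllable block)."""
    return [ch for ch in text if not ch.isspace()]


def encode(tokens: list[str], vocab: dict[str, int], unk: str = "[UNK]") -> list[int]:
    """Map tokens to ids, falling back to the unknown-token id for out-of-vocabulary syllables."""
    return [vocab.get(tok, vocab[unk]) for tok in tokens]


# Toy vocabulary for illustration only; the real model's vocab_size is 1,428
toy_vocab = {"[UNK]": 0, "한": 1, "국": 2, "어": 3, "텍": 4, "스": 5, "트": 6}

tokens = syllable_tokenize("한국어 텍스트 입력")
print(tokens)                     # ['한', '국', '어', '텍', '스', '트', '입', '력']
print(encode(tokens, toy_vocab))  # [1, 2, 3, 4, 5, 6, 0, 0]
```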

## Citation

**klue**

```
@misc{park2021klue,
  title={KLUE: Korean Language Understanding Evaluation},
  author={Sungjoon Park and Jihyung Moon and Sungdong Kim and Won Ik Cho and Jiyoon Han and Jangwon Park and Chisung Song and Junseong Kim and Yongsook Song and Taehwan Oh and Joohong Lee and Juhyun Oh and Sungwon Lyu and Younghoon Jeong and Inkwon Lee and Sangwoo Seo and Dongjun Lee and Hyunwoo Kim and Myeonghwa Lee and Seongbo Jang and Seungwon Do and Sunkyoung Kim and Kyungtae Lim and Jongwon Lee and Kyumin Park and Jamin Shin and Seonghyun Kim and Lucy Park and Alice Oh and Jungwoo Ha and Kyunghyun Cho},
  year={2021},
  eprint={2105.09680},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```