Commit fa8e2c2

Parent(s): 18905a7

update model card (#1)

- update model card (0533d9fa2852caae9ed823f04e5a1b06ab216f06)

- fix title (3e2bf94cf2b37a81820208b561dc2f415d816d1f)

Co-authored-by: Will Berman <williamberman@users.noreply.huggingface.co>

- README.md +264 -1

- images/seg_image_out.png +0 -0

- images/seg_input.jpeg +0 -0

- images/segment_image.png +0 -0

README.md

CHANGED

|

@@ -1,3 +1,266 @@

|

|

| 1 |

---

|

| 2 |

-

| 3 |

---

| 1 |

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

base_model: runwayml/stable-diffusion-v1-5

|

| 4 |

+

tags:

|

| 5 |

+

- art

|

| 6 |

+

- t2i-adapter

|

| 7 |

+

- controlnet

|

| 8 |

+

- stable-diffusion

|

| 9 |

+

- image-to-image

|

| 10 |

---

|

| 11 |

+

|

| 12 |

+

# T2I Adapter - Segment

|

| 13 |

+

|

| 14 |

+

T2I Adapter is a network that provides additional conditioning to Stable Diffusion. Each T2I Adapter checkpoint takes a different type of conditioning as input and is used with a specific base Stable Diffusion checkpoint.

|

| 15 |

+

|

| 16 |

+

This checkpoint provides conditioning on semantic segmentation for the Stable Diffusion 1.4 checkpoint.

|

| 17 |

+

|

| 18 |

+

## Model Details

|

| 19 |

+

- **Developed by:** T2I-Adapter: Learning Adapters to Dig out More Controllable Ability for Text-to-Image Diffusion Models

|

| 20 |

+

- **Model type:** Diffusion-based text-to-image generation model

|

| 21 |

+

- **Language(s):** English

|

| 22 |

+

- **License:** Apache 2.0

|

| 23 |

+

- **Resources for more information:** [GitHub Repository](https://github.com/TencentARC/T2I-Adapter), [Paper](https://arxiv.org/abs/2302.08453).

|

| 24 |

+

- **Cite as:**

|

| 25 |

+

|

| 26 |

+

@misc{

|

| 27 |

+

title={T2I-Adapter: Learning Adapters to Dig out More Controllable Ability for Text-to-Image Diffusion Models},

|

| 28 |

+

author={Chong Mou, Xintao Wang, Liangbin Xie, Yanze Wu, Jian Zhang, Zhongang Qi, Ying Shan, Xiaohu Qie},

|

| 29 |

+

year={2023},

|

| 30 |

+

eprint={2302.08453},

|

| 31 |

+

archivePrefix={arXiv},

|

| 32 |

+

primaryClass={cs.CV}

|

| 33 |

+

}

|

| 34 |

+

|

| 35 |

+

### Checkpoints

|

| 36 |

+

|

| 37 |

+

| Model Name | Control Image Overview| Control Image Example | Generated Image Example |

|

| 38 |

+

|---|---|---|---|

|

| 39 |

+

|[TencentARC/t2iadapter_color_sd14v1](https://huggingface.co/TencentARC/t2iadapter_color_sd14v1)<br/> *Trained with spatial color palette* | An image with an 8x8 color palette.|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/color_sample_input.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/color_sample_input.png"/></a>|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/color_sample_output.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/color_sample_output.png"/></a>|

|

| 40 |

+

|[TencentARC/t2iadapter_canny_sd14v1](https://huggingface.co/TencentARC/t2iadapter_canny_sd14v1)<br/> *Trained with canny edge detection* | A monochrome image with white edges on a black background.|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/canny_sample_input.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/canny_sample_input.png"/></a>|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/canny_sample_output.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/canny_sample_output.png"/></a>|

|

| 41 |

+

|[TencentARC/t2iadapter_sketch_sd14v1](https://huggingface.co/TencentARC/t2iadapter_sketch_sd14v1)<br/> *Trained with [PidiNet](https://github.com/zhuoinoulu/pidinet) edge detection* | A hand-drawn monochrome image with white outlines on a black background.|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/sketch_sample_input.png"><img width="64" style="margin:0;padding:0;" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/sketch_sample_input.png"/></a>|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/sketch_sample_output.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/sketch_sample_output.png"/></a>|

|

| 42 |

+

|[TencentARC/t2iadapter_depth_sd14v1](https://huggingface.co/TencentARC/t2iadapter_depth_sd14v1)<br/> *Trained with MiDaS depth estimation* | A grayscale image with black representing deep areas and white representing shallow areas.|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/depth_sample_input.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/depth_sample_input.png"/></a>|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/depth_sample_output.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/depth_sample_output.png"/></a>|

|

| 43 |

+

|[TencentARC/t2iadapter_openpose_sd14v1](https://huggingface.co/TencentARC/t2iadapter_openpose_sd14v1)<br/> *Trained with OpenPose bone image* | An [OpenPose bone](https://github.com/CMU-Perceptual-Computing-Lab/openpose) image.|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/openpose_sample_input.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/openpose_sample_input.png"/></a>|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/openpose_sample_output.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/openpose_sample_output.png"/></a>|

|

| 44 |

+

|[TencentARC/t2iadapter_keypose_sd14v1](https://huggingface.co/TencentARC/t2iadapter_keypose_sd14v1)<br/> *Trained with mmpose skeleton image* | An [mmpose skeleton](https://github.com/open-mmlab/mmpose) image.|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/keypose_sample_input.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/keypose_sample_input.png"/></a>|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/keypose_sample_output.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/keypose_sample_output.png"/></a>|

|

| 45 |

+

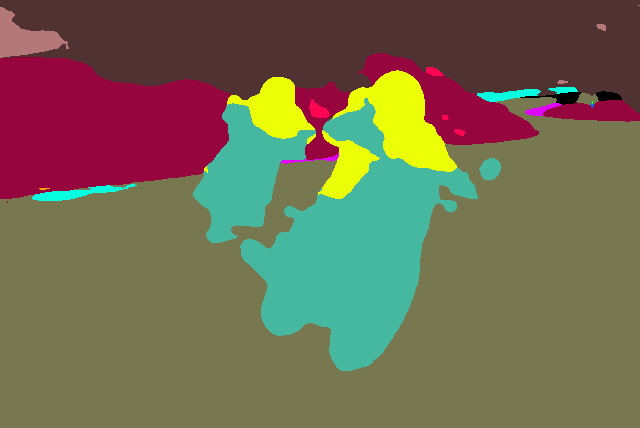

|[TencentARC/t2iadapter_seg_sd14v1](https://huggingface.co/TencentARC/t2iadapter_seg_sd14v1)<br/>*Trained with semantic segmentation* | An image following a [custom](https://github.com/TencentARC/T2I-Adapter/discussions/25) segmentation protocol.|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/seg_sample_input.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/seg_sample_input.png"/></a>|<a href="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/seg_sample_output.png"><img width="64" src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/seg_sample_output.png"/></a> |

|

| 46 |

+

|[TencentARC/t2iadapter_canny_sd15v2](https://huggingface.co/TencentARC/t2iadapter_canny_sd15v2)||

|

| 47 |

+

|[TencentARC/t2iadapter_depth_sd15v2](https://huggingface.co/TencentARC/t2iadapter_depth_sd15v2)||

|

| 48 |

+

|[TencentARC/t2iadapter_sketch_sd15v2](https://huggingface.co/TencentARC/t2iadapter_sketch_sd15v2)||

|

| 49 |

+

|[TencentARC/t2iadapter_zoedepth_sd15v1](https://huggingface.co/TencentARC/t2iadapter_zoedepth_sd15v1)||

|

| 50 |

+

|

| 51 |

+

## Example

|

| 52 |

+

|

| 53 |

+

1. Install dependencies:

|

| 54 |

+

|

| 55 |

+

```sh

|

| 56 |

+

pip install diffusers transformers torch

|

| 57 |

+

```

|

| 58 |

+

|

| 59 |

+

2. Run code:

|

| 60 |

+

|

| 61 |

+

```python

|

| 62 |

+

import torch

|

| 63 |

+

from PIL import Image

|

| 64 |

+

import numpy as np

|

| 65 |

+

from transformers import AutoImageProcessor, UperNetForSemanticSegmentation

|

| 66 |

+

|

| 67 |

+

from diffusers import (

|

| 68 |

+

    T2IAdapter,

|

| 69 |

+

    StableDiffusionAdapterPipeline,

|

| 70 |

+

)

|

| 71 |

+

|

| 72 |

+

ada_palette = np.asarray([

|

| 73 |

+

[0, 0, 0],

|

| 74 |

+

[120, 120, 120],

|

| 75 |

+

[180, 120, 120],

|

| 76 |

+

[6, 230, 230],

|

| 77 |

+

[80, 50, 50],

|

| 78 |

+

[4, 200, 3],

|

| 79 |

+

[120, 120, 80],

|

| 80 |

+

[140, 140, 140],

|

| 81 |

+

[204, 5, 255],

|

| 82 |

+

[230, 230, 230],

|

| 83 |

+

[4, 250, 7],

|

| 84 |

+

[224, 5, 255],

|

| 85 |

+

[235, 255, 7],

|

| 86 |

+

[150, 5, 61],

|

| 87 |

+

[120, 120, 70],

|

| 88 |

+

[8, 255, 51],

|

| 89 |

+

[255, 6, 82],

|

| 90 |

+

[143, 255, 140],

|

| 91 |

+

[204, 255, 4],

|

| 92 |

+

[255, 51, 7],

|

| 93 |

+

[204, 70, 3],

|

| 94 |

+

[0, 102, 200],

|

| 95 |

+

[61, 230, 250],

|

| 96 |

+

[255, 6, 51],

|

| 97 |

+

[11, 102, 255],

|

| 98 |

+

[255, 7, 71],

|

| 99 |

+

[255, 9, 224],

|

| 100 |

+

[9, 7, 230],

|

| 101 |

+

[220, 220, 220],

|

| 102 |

+

[255, 9, 92],

|

| 103 |

+

[112, 9, 255],

|

| 104 |

+

[8, 255, 214],

|

| 105 |

+

[7, 255, 224],

|

| 106 |

+

[255, 184, 6],

|

| 107 |

+

[10, 255, 71],

|

| 108 |

+

[255, 41, 10],

|

| 109 |

+

[7, 255, 255],

|

| 110 |

+

[224, 255, 8],

|

| 111 |

+

[102, 8, 255],

|

| 112 |

+

[255, 61, 6],

|

| 113 |

+

[255, 194, 7],

|

| 114 |

+

[255, 122, 8],

|

| 115 |

+

[0, 255, 20],

|

| 116 |

+

[255, 8, 41],

|

| 117 |

+

[255, 5, 153],

|

| 118 |

+

[6, 51, 255],

|

| 119 |

+

[235, 12, 255],

|

| 120 |

+

[160, 150, 20],

|

| 121 |

+

[0, 163, 255],

|

| 122 |

+

[140, 140, 140],

|

| 123 |

+

[250, 10, 15],

|

| 124 |

+

[20, 255, 0],

|

| 125 |

+

[31, 255, 0],

|

| 126 |

+

[255, 31, 0],

|

| 127 |

+

[255, 224, 0],

|

| 128 |

+

[153, 255, 0],

|

| 129 |

+

[0, 0, 255],

|

| 130 |

+

[255, 71, 0],

|

| 131 |

+

[0, 235, 255],

|

| 132 |

+

[0, 173, 255],

|

| 133 |

+

[31, 0, 255],

|

| 134 |

+

[11, 200, 200],

|

| 135 |

+

[255, 82, 0],

|

| 136 |

+

[0, 255, 245],

|

| 137 |

+

[0, 61, 255],

|

| 138 |

+

[0, 255, 112],

|

| 139 |

+

[0, 255, 133],

|

| 140 |

+

[255, 0, 0],

|

| 141 |

+

[255, 163, 0],

|

| 142 |

+

[255, 102, 0],

|

| 143 |

+

[194, 255, 0],

|

| 144 |

+

[0, 143, 255],

|

| 145 |

+

[51, 255, 0],

|

| 146 |

+

[0, 82, 255],

|

| 147 |

+

[0, 255, 41],

|

| 148 |

+

[0, 255, 173],

|

| 149 |

+

[10, 0, 255],

|

| 150 |

+

[173, 255, 0],

|

| 151 |

+

[0, 255, 153],

|

| 152 |

+

[255, 92, 0],

|

| 153 |

+

[255, 0, 255],

|

| 154 |

+

[255, 0, 245],

|

| 155 |

+

[255, 0, 102],

|

| 156 |

+

[255, 173, 0],

|

| 157 |

+

[255, 0, 20],

|

| 158 |

+

[255, 184, 184],

|

| 159 |

+

[0, 31, 255],

|

| 160 |

+

[0, 255, 61],

|

| 161 |

+

[0, 71, 255],

|

| 162 |

+

[255, 0, 204],

|

| 163 |

+

[0, 255, 194],

|

| 164 |

+

[0, 255, 82],

|

| 165 |

+

[0, 10, 255],

|

| 166 |

+

[0, 112, 255],

|

| 167 |

+

[51, 0, 255],

|

| 168 |

+

[0, 194, 255],

|

| 169 |

+

[0, 122, 255],

|

| 170 |

+

[0, 255, 163],

|

| 171 |

+

[255, 153, 0],

|

| 172 |

+

[0, 255, 10],

|

| 173 |

+

[255, 112, 0],

|

| 174 |

+

[143, 255, 0],

|

| 175 |

+

[82, 0, 255],

|

| 176 |

+

[163, 255, 0],

|

| 177 |

+

[255, 235, 0],

|

| 178 |

+

[8, 184, 170],

|

| 179 |

+

[133, 0, 255],

|

| 180 |

+

[0, 255, 92],

|

| 181 |

+

[184, 0, 255],

|

| 182 |

+

[255, 0, 31],

|

| 183 |

+

[0, 184, 255],

|

| 184 |

+

[0, 214, 255],

|

| 185 |

+

[255, 0, 112],

|

| 186 |

+

[92, 255, 0],

|

| 187 |

+

[0, 224, 255],

|

| 188 |

+

[112, 224, 255],

|

| 189 |

+

[70, 184, 160],

|

| 190 |

+

[163, 0, 255],

|

| 191 |

+

[153, 0, 255],

|

| 192 |

+

[71, 255, 0],

|

| 193 |

+

[255, 0, 163],

|

| 194 |

+

[255, 204, 0],

|

| 195 |

+

[255, 0, 143],

|

| 196 |

+

[0, 255, 235],

|

| 197 |

+

[133, 255, 0],

|

| 198 |

+

[255, 0, 235],

|

| 199 |

+

[245, 0, 255],

|

| 200 |

+

[255, 0, 122],

|

| 201 |

+

[255, 245, 0],

|

| 202 |

+

[10, 190, 212],

|

| 203 |

+

[214, 255, 0],

|

| 204 |

+

[0, 204, 255],

|

| 205 |

+

[20, 0, 255],

|

| 206 |

+

[255, 255, 0],

|

| 207 |

+

[0, 153, 255],

|

| 208 |

+

[0, 41, 255],

|

| 209 |

+

[0, 255, 204],

|

| 210 |

+

[41, 0, 255],

|

| 211 |

+

[41, 255, 0],

|

| 212 |

+

[173, 0, 255],

|

| 213 |

+

[0, 245, 255],

|

| 214 |

+

[71, 0, 255],

|

| 215 |

+

[122, 0, 255],

|

| 216 |

+

[0, 255, 184],

|

| 217 |

+

[0, 92, 255],

|

| 218 |

+

[184, 255, 0],

|

| 219 |

+

[0, 133, 255],

|

| 220 |

+

[255, 214, 0],

|

| 221 |

+

[25, 194, 194],

|

| 222 |

+

[102, 255, 0],

|

| 223 |

+

[92, 0, 255],

|

| 224 |

+

])

|

| 225 |

+

|

| 226 |

+

|

| 227 |

+

image_processor = AutoImageProcessor.from_pretrained("openmmlab/upernet-convnext-small")

|

| 228 |

+

image_segmentor = UperNetForSemanticSegmentation.from_pretrained("openmmlab/upernet-convnext-small")

|

| 229 |

+

|

| 230 |

+


|

| 231 |

+

|

| 232 |

+

image = Image.open('./images/seg_input.jpeg')

|

| 233 |

+

|

| 234 |

+

pixel_values = image_processor(image, return_tensors="pt").pixel_values

|

| 235 |

+

with torch.no_grad():

|

| 236 |

+

    outputs = image_segmentor(pixel_values)

|

| 237 |

+

|

| 238 |

+

seg = image_processor.post_process_semantic_segmentation(outputs, target_sizes=[image.size[::-1]])[0]

|

| 239 |

+

|

| 240 |

+

color_seg = np.zeros((seg.shape[0], seg.shape[1], 3), dtype=np.uint8) # height, width, 3

|

| 241 |

+

|

| 242 |

+

for label, color in enumerate(ada_palette):

|

| 243 |

+

    color_seg[seg == label, :] = color

|

| 244 |

+

|

| 245 |

+

color_seg = color_seg.astype(np.uint8)

|

| 246 |

+

control_image = Image.fromarray(color_seg)

|

| 247 |

+

|

| 248 |

+

control_image.save("./images/segment_image.png")

|

| 249 |

+

|

| 250 |

+

adapter = T2IAdapter.from_pretrained("TencentARC/t2iadapter_seg_sd14v1", torch_dtype=torch.float16)

|

| 251 |

+

pipe = StableDiffusionAdapterPipeline.from_pretrained(

|

| 252 |

+

    "CompVis/stable-diffusion-v1-4", adapter=adapter, safety_checker=None, torch_dtype=torch.float16, variant="fp16"

|

| 253 |

+

)

|

| 254 |

+

|

| 255 |

+

pipe.to('cuda')

|

| 256 |

+

|

| 257 |

+

generator = torch.Generator().manual_seed(0)

|

| 258 |

+

|

| 259 |

+

seg_image_out = pipe(prompt="motorcycles driving", image=control_image, generator=generator).images[0]

|

| 260 |

+

|

| 261 |

+

seg_image_out.save('./images/seg_image_out.png')

|

| 262 |

+

```
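
The example above colors the segmentation map with a Python loop over the palette. As a minimal sketch of an alternative, and not something taken from the original model card, the same mapping can be expressed with NumPy integer-array indexing. The helper name `colorize` and the shortened four-row palette (the first four rows of `ada_palette`) are assumptions made for illustration.

```python
import numpy as np

# Illustrative palette: the first four rows of ada_palette from the example above.
palette = np.asarray([
    [0, 0, 0],
    [120, 120, 120],
    [180, 120, 120],
    [6, 230, 230],
], dtype=np.uint8)


def colorize(label_map, palette):
    """Map an (H, W) array of integer class ids to an (H, W, 3) uint8 RGB array.

    Hypothetical helper, not part of diffusers or transformers.
    """
    label_map = np.asarray(label_map)
    if label_map.min() < 0 or label_map.max() >= len(palette):
        raise ValueError("label id outside the palette range")
    # Integer-array indexing selects one palette row per pixel in a single step.
    return palette[label_map]


# Tiny 2x2 label map standing in for a real segmentation output.
labels = np.array([[0, 1], [3, 2]])
color_seg = colorize(labels, palette)
```

With the real pipeline, the segmentation tensor would likely need converting first, for example `colorize(seg.cpu().numpy(), ada_palette)`. Unlike the loop, which leaves pixels with unknown labels black, this sketch raises an error when a label falls outside the palette.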

|

| 263 |

+

|

| 264 |

+

|

| 265 |

+

|

| 266 |

+

|

images/seg_image_out.png

ADDED

|

images/seg_input.jpeg

ADDED

|

images/segment_image.png

ADDED

|