Create README.md

Browse files

README.md

ADDED

|

@@ -0,0 +1,131 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: other

|

| 3 |

+

license_name: qwen

|

| 4 |

+

license_link: https://huggingface.co/Qwen/Qwen2.5-Math-PRM-72B/blob/main/LICENSE

|

| 5 |

+

language:

|

| 6 |

+

- en

|

| 7 |

+

- zh

|

| 8 |

+

pipeline_tag: text-classification

|

| 9 |

+

library_name: transformers

|

| 10 |

+

tags:

|

| 11 |

+

- reward model

|

| 12 |

+

base_model:

|

| 13 |

+

- Qwen/Qwen2.5-Math-72B-Instruct

|

| 14 |

+

---

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

# Qwen2.5-Math-PRM-72B

|

| 18 |

+

|

| 19 |

+

## Introduction

|

| 20 |

+

|

| 21 |

+

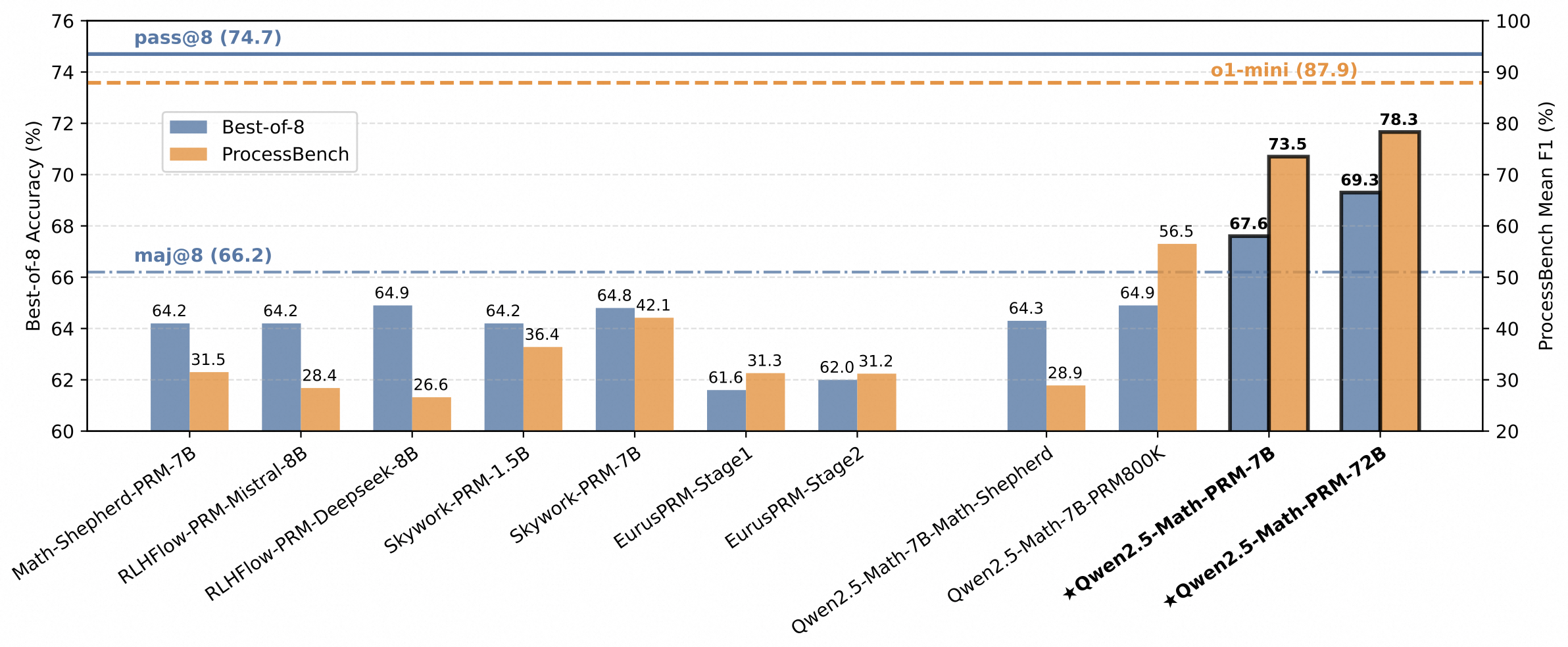

In addition to the mathematical Outcome Reward Model (ORM) Qwen2.5-Math-RM-72B, we release the Process Reward Model (PRM), namely Qwen2.5-Math-PRM-7B and Qwen2.5-Math-PRM-72B. PRMs emerge as a promising approach for process supervision in mathematical reasoning of Large Language Models (LLMs), aiming to identify and mitigate intermediate errors in the reasoning processes. Our trained PRMs exhibit both impressive performance in the Best-of-N (BoN) evaluation and stronger error identification performance in [ProcessBench](https://huggingface.co/papers/2412.06559).

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

## Requirements

|

| 29 |

+

* `transformers>=4.40.0` for Qwen2.5-Math models. The latest version is recommended.

|

| 30 |

+

|

| 31 |

+

> [!Warning]

|

| 32 |

+

> <div align="center">

|

| 33 |

+

> <b>

|

| 34 |

+

> 🚨 This is a must because `transformers` integrated Qwen2.5 codes since `4.37.0`.

|

| 35 |

+

> </b>

|

| 36 |

+

> </div>

|

| 37 |

+

|

| 38 |

+

For requirements on GPU memory and the respective throughput, see similar results of Qwen2 [here](https://qwen.readthedocs.io/en/latest/benchmark/speed_benchmark.html).

|

| 39 |

+

|

| 40 |

+

## Quick Start

|

| 41 |

+

|

| 42 |

+

> [!Important]

|

| 43 |

+

>

|

| 44 |

+

> **Qwen2.5-Math-PRM-72B** is a process reward model typically used for offering feedback on the quality of reasoning and intermediate steps rather than generation.

|

| 45 |

+

|

| 46 |

+

### Prerequisites

|

| 47 |

+

- Step Separation: We recommend using double line breaks ("\n\n") to separate individual steps within the solution.

|

| 48 |

+

- Reward Computation: After each step, we insert a special token "`<extra_0>`". For reward calculation, we extract the probability score of this token being classified as positive, resulting in a reward value between 0 and 1.

|

| 49 |

+

|

| 50 |

+

### 🤗 Hugging Face Transformers

|

| 51 |

+

|

| 52 |

+

Here we show a code snippet to show you how to use the Qwen2.5-Math-PRM-72B with `transformers`:

|

| 53 |

+

|

| 54 |

+

```python

|

| 55 |

+

import torch

|

| 56 |

+

from transformers import AutoModel, AutoTokenizer

|

| 57 |

+

import torch.nn.functional as F

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

def make_step_rewards(logits, token_masks):

|

| 61 |

+

probabilities = F.softmax(logits, dim=-1)

|

| 62 |

+

probabilities = probabilities * token_masks.unsqueeze(-1) # bs, seq_len, num_labels

|

| 63 |

+

|

| 64 |

+

all_scores_res = []

|

| 65 |

+

for i in range(probabilities.size(0)):

|

| 66 |

+

sample = probabilities[i] # seq_len, num_labels

|

| 67 |

+

positive_probs = sample[sample != 0].view(-1, 2)[:, 1] # valid_tokens, num_labels

|

| 68 |

+

non_zero_elements_list = positive_probs.cpu().tolist()

|

| 69 |

+

all_scores_res.append(non_zero_elements_list)

|

| 70 |

+

return all_scores_res

|

| 71 |

+

|

| 72 |

+

|

| 73 |

+

model_name = "Qwen/Qwen2.5-Math-PRM-72B"

|

| 74 |

+

device = "auto"

|

| 75 |

+

|

| 76 |

+

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

|

| 77 |

+

model = AutoModel.from_pretrained(

|

| 78 |

+

model_name,

|

| 79 |

+

device_map=device,

|

| 80 |

+

torch_dtype=torch.bfloat16,

|

| 81 |

+

trust_remote_code=True,

|

| 82 |

+

).eval()

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

data = {

|

| 86 |

+

"system": "Please reason step by step, and put your final answer within \boxed{}.",

|

| 87 |

+

"query": "Sue lives in a fun neighborhood. One weekend, the neighbors decided to play a prank on Sue. On Friday morning, the neighbors placed 18 pink plastic flamingos out on Sue's front yard. On Saturday morning, the neighbors took back one third of the flamingos, painted them white, and put these newly painted white flamingos back out on Sue's front yard. Then, on Sunday morning, they added another 18 pink plastic flamingos to the collection. At noon on Sunday, how many more pink plastic flamingos were out than white plastic flamingos?",

|

| 88 |

+

"response": [

|

| 89 |

+

"To find out how many more pink plastic flamingos were out than white plastic flamingos at noon on Sunday, we can break down the problem into steps. First, on Friday, the neighbors start with 18 pink plastic flamingos.",

|

| 90 |

+

"On Saturday, they take back one third of the flamingos. Since there were 18 flamingos, (1/3 \times 18 = 6) flamingos are taken back. So, they have (18 - 6 = 12) flamingos left in their possession. Then, they paint these 6 flamingos white and put them back out on Sue's front yard. Now, Sue has the original 12 pink flamingos plus the 6 new white ones. Thus, by the end of Saturday, Sue has (12 + 6 = 18) pink flamingos and 6 white flamingos.",

|

| 91 |

+

"On Sunday, the neighbors add another 18 pink plastic flamingos to Sue's front yard. By the end of Sunday morning, Sue has (18 + 18 = 36) pink flamingos and still 6 white flamingos.",

|

| 92 |

+

"To find the difference, subtract the number of white flamingos from the number of pink flamingos: (36 - 6 = 30). Therefore, at noon on Sunday, there were 30 more pink plastic flamingos out than white plastic flamingos. The answer is (\boxed{30})."

|

| 93 |

+

]

|

| 94 |

+

}

|

| 95 |

+

|

| 96 |

+

messages = [

|

| 97 |

+

{"role": "system", "content": data['system']},

|

| 98 |

+

{"role": "user", "content": data['query']},

|

| 99 |

+

{"role": "assistant", "content": "<extra_0>".join(data['response']) + "<extra_0>"},

|

| 100 |

+

]

|

| 101 |

+

conversation_str = tokenizer.apply_chat_template(

|

| 102 |

+

messages,

|

| 103 |

+

tokenize=False,

|

| 104 |

+

add_generation_prompt=False

|

| 105 |

+

)

|

| 106 |

+

|

| 107 |

+

input_ids = tokenizer.encode(

|

| 108 |

+

conversation_str,

|

| 109 |

+

return_tensors="pt",

|

| 110 |

+

).to(model.device)

|

| 111 |

+

|

| 112 |

+

outputs = model(input_ids=input_ids)

|

| 113 |

+

|

| 114 |

+

step_sep_id = tokenizer.encode("<extra_0>")[0]

|

| 115 |

+

token_masks = (input_ids == step_sep_id)

|

| 116 |

+

step_reward = make_step_rewards(outputs[0], token_masks)

|

| 117 |

+

print(step_reward) # [[0.9921875, 0.0047607421875, 0.32421875, 0.8203125]]

|

| 118 |

+

```

|

| 119 |

+

|

| 120 |

+

## Citation

|

| 121 |

+

|

| 122 |

+

If you find our work helpful, feel free to give us a citation.

|

| 123 |

+

|

| 124 |

+

```

|

| 125 |

+

@article{yang2024qwen25mathtechnicalreportmathematical,

|

| 126 |

+

title={Qwen2.5-Math Technical Report: Toward Mathematical Expert Model via Self-Improvement},

|

| 127 |

+

author={An Yang and Beichen Zhang and Binyuan Hui and Bofei Gao and Bowen Yu and Chengpeng Li and Dayiheng Liu and Jianhong Tu and Jingren Zhou and Junyang Lin and Keming Lu and Mingfeng Xue and Runji Lin and Tianyu Liu and Xingzhang Ren and Zhenru Zhang},

|

| 128 |

+

journal={arXiv preprint arXiv:2409.12122},

|

| 129 |

+

year={2024}

|

| 130 |

+

}

|

| 131 |

+

```

|