Upload folder using huggingface_hub (#1)

- be49ea5b85a1c7d132fd96bd1d45cce355f821dc3cdd2aacd576e1fd0630ccd0 (2599112feb499db7d88cb18362924eb69304d8b5)

- 4af8818f4957fc414c60d666d7dd4811f302810ceec36d866e5943557a9b63ce (bee9f0e634412e210d81bd1a556e084712bd4338)

- .gitattributes +1 -0

- README.md +53 -0

- config.json +1 -0

- model +3 -0

- plots.png +0 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+model filter=lfs diff=lfs merge=lfs -text
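The added rule routes the extensionless `model` file through Git LFS. A gitattributes pattern with no `/` in it, such as `model` or `*.zip`, is compared against a file's basename at any directory depth. The Python sketch below approximates that check for the patterns visible in this hunk only. It is illustrative: `fnmatch` stands in for Git's own matcher, patterns containing `/` (like the `saved_model/**/*` context line) are skipped, and the earlier lines of the file that the hunk does not show are not included. The helper name `is_lfs_tracked` is hypothetical.

```python
import fnmatch
import posixpath

# Patterns from the lines visible in the .gitattributes hunk above. Lines 1-32
# of the file are not shown in this diff and are deliberately left out.
LFS_PATTERNS = ("*.zip", "*.zst", "*tfevents*", "model")


def is_lfs_tracked(path: str, patterns: tuple[str, ...] = LFS_PATTERNS) -> bool:
    """Approximate whether `path` gets `filter=lfs` from slash-free patterns.

    A gitattributes pattern without "/" is matched against the basename, so it
    applies at every directory depth. fnmatch is only a stand-in for Git's
    matcher; patterns that contain "/" are skipped rather than approximated.
    """
    name = posixpath.basename(path)
    return any(
        fnmatch.fnmatchcase(name, pattern)
        for pattern in patterns
        if "/" not in pattern
    )


if __name__ == "__main__":
    for path in ["model", "subdir/model", "config.json",
                 "runs/events.out.tfevents.1700000000", "weights.zip"]:
        print(f"{path}: {'LFS' if is_lfs_tracked(path) else 'not matched'}")
```

For an authoritative answer inside a clone, `git check-attr filter -- model` reports the `filter` attribute Git actually resolves for that path.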

README.md

ADDED

@@ -0,0 +1,53 @@
+---
+license: apache-2.0
+library_name: pruna-engine
+thumbnail: "https://assets-global.website-files.com/646b351987a8d8ce158d1940/64ec9e96b4334c0e1ac41504_Logo%20with%20white%20text.svg"
+metrics:
+- memory_disk
+- memory_inference
+- inference_latency
+- inference_throughput
+- inference_CO2_emissions
+- inference_energy_consumption
+---
+<!-- header start -->
+<!-- 200823 -->
+<div style="width: auto; margin-left: auto; margin-right: auto">
+<a href="https://www.pruna.ai/" target="_blank" rel="noopener noreferrer">
+<img src="https://i.imgur.com/eDAlcgk.png" alt="PrunaAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">
+</a>
+</div>
+<!-- header end -->
| 21 |

+

|

| 22 |

+

# Simply make AI models cheaper, smaller, faster, and greener!

|

| 23 |

+

|

| 24 |

+

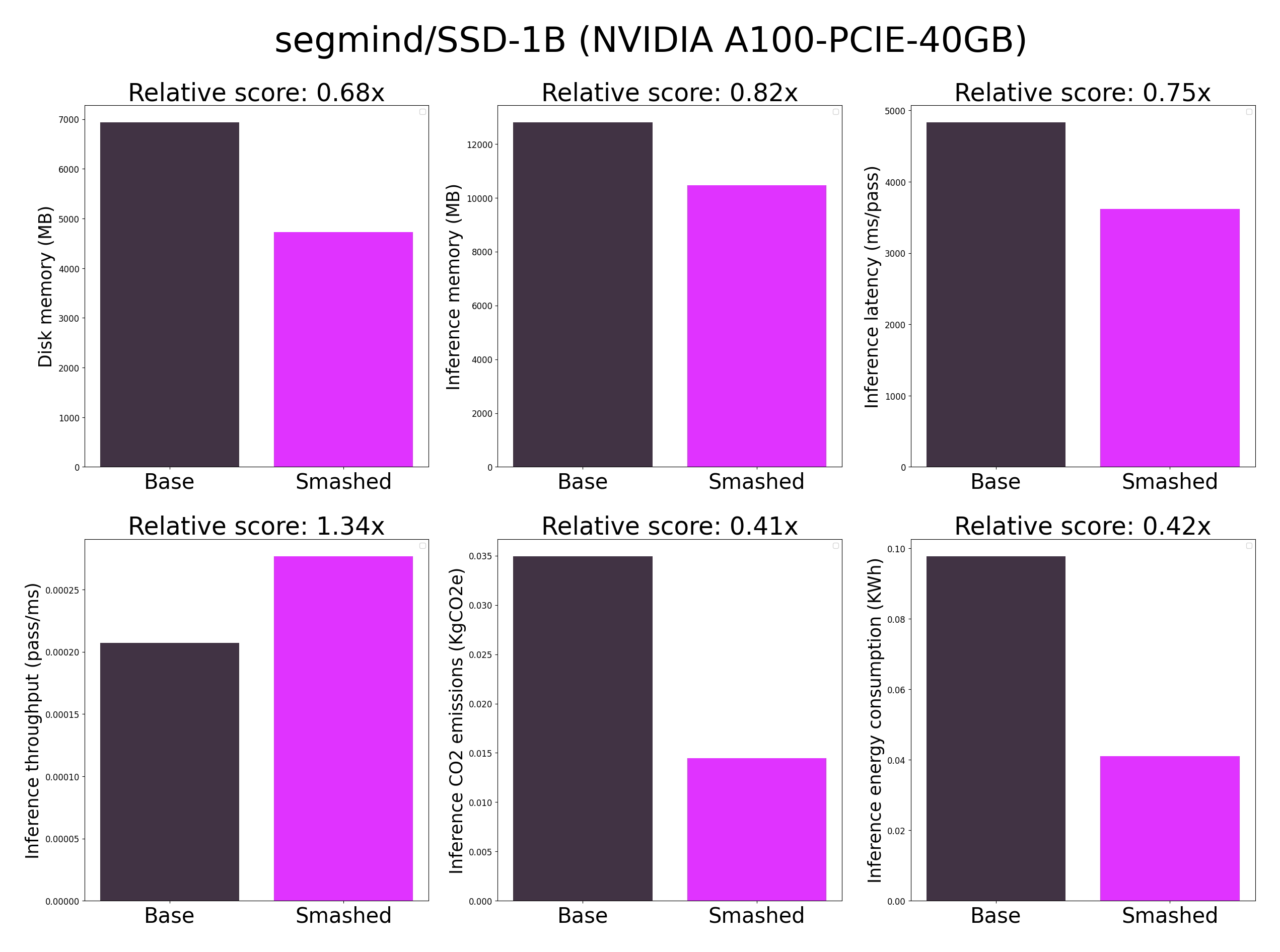

## Results

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

## Setup

|

| 29 |

+

|

| 30 |

+

You can run the smashed model by **(1)** installing and importing the `pruna-engine` (version 0.2.5) package with the [Pypi instructions](https://pypi.org/project/pruna-engine/),**(2)** downloading the model files at `model_path`. This can be done using huggingface with this repository name or with manual downloading, and **(3)** loading the model, **(4)** running the model. You can achieve this by running the following code:

|

+
+```python
+from transformers.utils.hub import cached_file
+from pruna_engine.PrunaModel import PrunaModel  # Step (1): install and import the `pruna-engine` package.
+
+...
+model_path = cached_file("PrunaAI/REPO", "model")  # Step (2): download the model files to `model_path`.
+smashed_model = PrunaModel.load_model(model_path)  # Step (3): load the model.
+y = smashed_model(x)  # Step (4): run the model on an input `x` (not defined in this snippet).
+```
+
+## Configurations
+
+The configuration details are in `config.json`.
+
+## License
+
+We follow the same license as the original model. Please check the license of the original model before using this model.
+
+## Want to compress other models?
+
+- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).
+- Request access to easily compress your own AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

config.json

ADDED

@@ -0,0 +1 @@
+{"pruner": "None", "pruning_ratio": 0.0, "factorizer": "None", "quantizer": "None", "n_quantization_bits": 32, "output_deviation": 0.005, "compiler": "diffusers", "static_batch": true, "static_shape": true, "controlnet": "None", "unet_dim": 4, "cache_dir": "/ceph/hdd/staff/charpent/.cache/models", "max_batch_size": 1, "image_height": 1024, "image_width": 1024, "version": "xl-1.0"}
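
In this config every optional stage except the compiler is set to the *string* `"None"` rather than JSON `null`, which is consistent with `"pruning_ratio": 0.0` and `"n_quantization_bits": 32`. Code that only checks for `null` would wrongly treat those stages as enabled. The sketch below reads a subset of the keys above and treats both spellings as "off". The helper `active_stages` and the grouping in `STAGE_KEYS` are assumptions for illustration, not part of `pruna-engine`.

```python
import json

# A subset of the keys from config.json above, values copied verbatim.
CONFIG_JSON = """{"pruner": "None", "pruning_ratio": 0.0, "factorizer": "None",
"quantizer": "None", "n_quantization_bits": 32, "compiler": "diffusers",
"controlnet": "None", "max_batch_size": 1, "image_height": 1024,
"image_width": 1024, "version": "xl-1.0"}"""

# Hypothetical grouping: keys whose value names an optional processing stage.
STAGE_KEYS = ("pruner", "factorizer", "quantizer", "compiler", "controlnet")


def active_stages(config: dict) -> dict:
    """Return the enabled stages, treating both "None" and null as disabled."""
    return {
        key: config[key]
        for key in STAGE_KEYS
        if config.get(key) not in (None, "None")
    }


config = json.loads(CONFIG_JSON)
print(active_stages(config))  # {'compiler': 'diffusers'}
print(config["max_batch_size"], config["image_height"], config["image_width"])  # 1 1024 1024
```

Read together, `"static_batch": true`, `"static_shape": true`, and `"max_batch_size": 1` suggest the compiled model is specialised to a single 1024x1024 input at a time, though the README does not state this explicitly.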

model

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d3d5fb056d0762f56ed4a437be6100d13ecf25a7cd47996f4c8c73eddb811de7
+size 5588367240
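
The `model` entry is a Git LFS pointer, not the weights themselves: under the Git LFS pointer format, `oid` is the SHA-256 of the real file and `size` is its length in bytes (5,588,367,240 bytes, about 5.2 GiB). The sketch below parses this pointer and checks a downloaded file against it, for example the path returned by `cached_file` in the README's step (2). The function names `parse_lfs_pointer` and `matches_pointer` are illustrative and not part of `huggingface_hub` or `pruna-engine`.

```python
import hashlib
import os

# The pointer text from the `model` diff above.
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:d3d5fb056d0762f56ed4a437be6100d13ecf25a7cd47996f4c8c73eddb811de7
size 5588367240
"""


def parse_lfs_pointer(text: str) -> dict:
    """Parse "key value" lines of an LFS pointer into version, oid, and size."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, digest = fields["oid"].split(":", 1)
    if algo != "sha256":
        raise ValueError(f"unexpected oid algorithm: {algo}")
    return {"version": fields["version"], "oid": digest, "size": int(fields["size"])}


def matches_pointer(path: str, pointer: dict, chunk_size: int = 1 << 20) -> bool:
    """Check a local file's size and SHA-256 digest against a parsed pointer."""
    if os.path.getsize(path) != pointer["size"]:
        return False  # cheap check first: skips hashing an obviously truncated file
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Hash in chunks so a multi-gigabyte file never has to fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == pointer["oid"]


ptr = parse_lfs_pointer(POINTER)
print(f"{ptr['size'] / 1024**3:.2f} GiB")  # 5.20 GiB
```

Comparing the size before hashing keeps the common failure case (an interrupted download) fast; the full hash only runs when the length already matches.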

plots.png

ADDED
