SalazarPevelll

commited on

Commit

•

51c57f8

1

Parent(s):

4d3e4e0

model

Browse files- CoCoSoDa +0 -1

- Figure/CoCoSoDa.png +0 -0

- README.md +86 -0

- dataset/__pycache__/utils.cpython-36.pyc +0 -0

- dataset/get_data.sh +26 -0

- dataset/preprocess.py +47 -0

- dataset/utils.py +46 -0

- model.py +396 -0

- parser/DFG.py +1184 -0

- parser/__init__.py +5 -0

- parser/__pycache__/DFG.cpython-36.pyc +0 -0

- parser/__pycache__/__init__.cpython-36.pyc +0 -0

- parser/__pycache__/utils.cpython-36.pyc +0 -0

- parser/build.py +21 -0

- parser/build.sh +8 -0

- parser/utils.py +98 -0

- run.py +1420 -0

- run_cocosoda.sh +59 -0

- run_fine_tune.sh +55 -0

- run_zero-shot.sh +40 -0

CoCoSoDa

DELETED

|

@@ -1 +0,0 @@

|

|

| 1 |

-

Subproject commit 2f2bf8e7994acef846ede7c1078a0b18bc4154d9

|

|

|

|

|

|

Figure/CoCoSoDa.png

ADDED

|

README.md

ADDED

|

@@ -0,0 +1,86 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# CoCoSoDa: Effective Contrastive Learning for Code Search

|

| 2 |

+

|

| 3 |

+

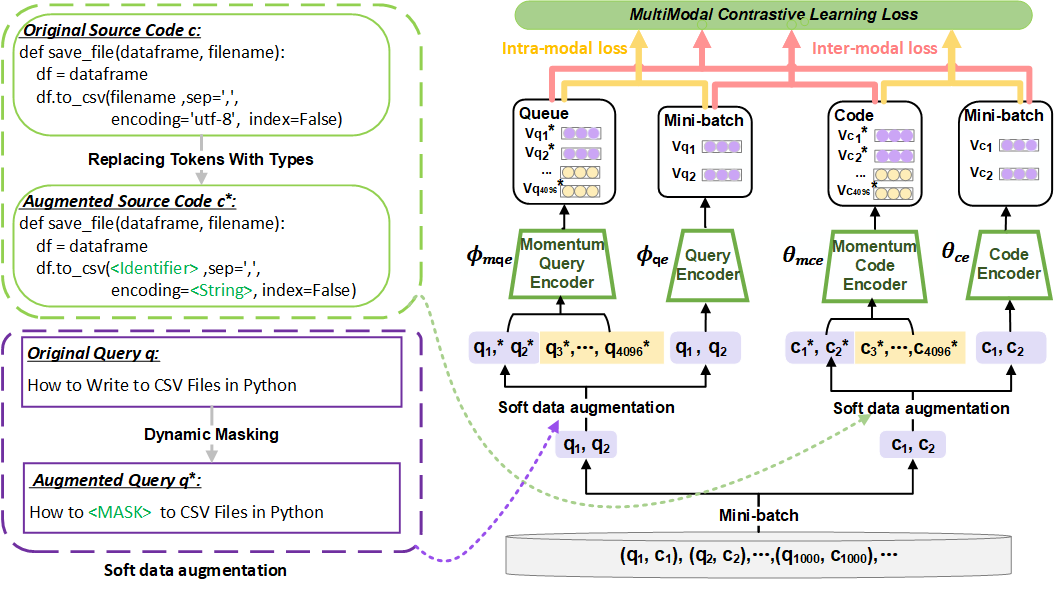

Our approach adopts the pre-trained model as the base code/query encoder and optimizes it using multimodal contrastive learning and soft data augmentation.

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

CoCoSoDa is comprised of the following four components:

|

| 8 |

+

* **Pre-trained code/query encoder** captures the semantic information of a code snippet or a natural language query and maps it into a high-dimensional embedding space.

|

| 9 |

+

as the code/query encoder.

|

| 10 |

+

* **Momentum code/query encoder** encodes the samples (code snippets or queries) of current and previous mini-batches to enrich the negative samples.

|

| 11 |

+

|

| 12 |

+

* **Soft data augmentation** is to dynamically mask or replace some tokens in a sample (code/query) to generate a similar sample as a form of data augmentation.

|

| 13 |

+

|

| 14 |

+

* **Multimodal contrastive learning loss function** is used as the optimization objective and consists of inter-modal and intra-modal contrastive learning loss. They are used to minimize the distance of the representations of similar samples and maximize the distance of different samples in the embedding space.

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

## Source code

|

| 19 |

+

### Environment

|

| 20 |

+

```

|

| 21 |

+

conda create -n CoCoSoDa python=3.6 -y

|

| 22 |

+

conda activate CoCoSoDa

|

| 23 |

+

pip install torch==1.10 transformers==4.12.5 seaborn==0.11.2 fast-histogram nltk==3.6.5 networkx==2.5.1 tree_sitter tqdm prettytable gdown more-itertools tensorboardX sklearn

|

| 24 |

+

```

|

| 25 |

+

### Data

|

| 26 |

+

|

| 27 |

+

```

|

| 28 |

+

cd dataset

|

| 29 |

+

bash get_data.sh

|

| 30 |

+

```

|

| 31 |

+

|

| 32 |

+

Data statistic is shown in this Table.

|

| 33 |

+

|

| 34 |

+

| PL | Training | Validation | Test | Candidate Codes|

|

| 35 |

+

| :--------- | :------: | :----: | :----: |:----: |

|

| 36 |

+

| Ruby | 24,927 | 1,400 | 1,261 |4,360|

|

| 37 |

+

| JavaScript | 58,025 | 3,885 | 3,291 |13,981|

|

| 38 |

+

| Java | 164,923 | 5,183 | 10,955 |40,347|

|

| 39 |

+

| Go | 167,288 | 7,325 | 8,122 |28,120|

|

| 40 |

+

| PHP | 241,241 | 12,982 | 14,014 |52,660|

|

| 41 |

+

| Python | 251,820 | 13,914 | 14,918 |43,827|

|

| 42 |

+

|

| 43 |

+

It will take about 10min.

|

| 44 |

+

|

| 45 |

+

### Training and Evaualtion

|

| 46 |

+

|

| 47 |

+

We have uploaded the pre-trained model to [huggingface](https://huggingface.co/). You can directly download [DeepSoftwareAnalytics/CoCoSoDa](https://huggingface.co/DeepSoftwareAnalytics/CoCoSoDa) and fine-tune it.

|

| 48 |

+

#### Pre-training (Optional)

|

| 49 |

+

|

| 50 |

+

```

|

| 51 |

+

bash run_cocosoda.sh $lang

|

| 52 |

+

```

|

| 53 |

+

The optimized model is saved in `./saved_models/cocosoda/`. You can upload them to [huggingface](https://huggingface.co/).

|

| 54 |

+

|

| 55 |

+

It will take about 3 days.

|

| 56 |

+

|

| 57 |

+

#### Fine-tuning

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

```

|

| 61 |

+

lang=java

|

| 62 |

+

bash run_fine_tune.sh $lang

|

| 63 |

+

```

|

| 64 |

+

#### Zero-shot running

|

| 65 |

+

|

| 66 |

+

```

|

| 67 |

+

lang=python

|

| 68 |

+

bash run_zero-shot.sh $lang

|

| 69 |

+

```

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

### Results

|

| 73 |

+

|

| 74 |

+

#### The Model Evaluated with MRR

|

| 75 |

+

|

| 76 |

+

| Model | Ruby | Javascript | Go | Python | Java | PHP | Avg. |

|

| 77 |

+

| -------------- | :-------: | :--------: | :-------: | :-------: | :-------: | :-------: | :-------: |

|

| 78 |

+

| CoCoSoDa | **0.818**| **0.764**| **0.921** |**0.757**| **0.763**| **0.703** |**0.788**|

|

| 79 |

+

|

| 80 |

+

## Appendix

|

| 81 |

+

|

| 82 |

+

The description of baselines, addtional experimetal results and discussion are shown in `Appendix/Appendix.pdf`.

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

## Contact

|

| 86 |

+

Feel free to contact Ensheng Shi (enshengshi@qq.com) if you have any further questions or no response to github issue for more than 1 day.

|

dataset/__pycache__/utils.cpython-36.pyc

ADDED

|

Binary file (1.53 kB). View file

|

|

|

dataset/get_data.sh

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/python.zip

|

| 2 |

+

# wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/java.zip

|

| 3 |

+

# wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/ruby.zip

|

| 4 |

+

# wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/javascript.zip

|

| 5 |

+

# wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/go.zip

|

| 6 |

+

# wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/php.zip

|

| 7 |

+

|

| 8 |

+

wget https://huggingface.co/datasets/code_search_net/resolve/main/data/python.zip

|

| 9 |

+

wget https://huggingface.co/datasets/code_search_net/resolve/main/data/java.zip

|

| 10 |

+

wget https://huggingface.co/datasets/code_search_net/resolve/main/data/ruby.zip

|

| 11 |

+

wget https://huggingface.co/datasets/code_search_net/resolve/main/data/javascript.zip

|

| 12 |

+

wget https://huggingface.co/datasets/code_search_net/resolve/main/data/go.zip

|

| 13 |

+

wget https://huggingface.co/datasets/code_search_net/resolve/main/data/php.zip

|

| 14 |

+

|

| 15 |

+

unzip python.zip

|

| 16 |

+

unzip java.zip

|

| 17 |

+

unzip ruby.zip

|

| 18 |

+

unzip javascript.zip

|

| 19 |

+

unzip go.zip

|

| 20 |

+

unzip php.zip

|

| 21 |

+

rm *.zip

|

| 22 |

+

rm *.pkl

|

| 23 |

+

|

| 24 |

+

python preprocess.py

|

| 25 |

+

rm -r */final

|

| 26 |

+

cd ..

|

dataset/preprocess.py

ADDED

|

@@ -0,0 +1,47 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

import os

|

| 3 |

+

|

| 4 |

+

for language in ['ruby','go','java','javascript','php','python']:

|

| 5 |

+

print(language)

|

| 6 |

+

train,valid,test,codebase=[],[],[], []

|

| 7 |

+

for root, dirs, files in os.walk(language+'/final'):

|

| 8 |

+

for file in files:

|

| 9 |

+

temp=os.path.join(root,file)

|

| 10 |

+

if '.jsonl' in temp:

|

| 11 |

+

if 'train' in temp:

|

| 12 |

+

train.append(temp)

|

| 13 |

+

elif 'valid' in temp:

|

| 14 |

+

valid.append(temp)

|

| 15 |

+

codebase.append(temp)

|

| 16 |

+

elif 'test' in temp:

|

| 17 |

+

test.append(temp)

|

| 18 |

+

codebase.append(temp)

|

| 19 |

+

|

| 20 |

+

train_data,valid_data,test_data,codebase_data={},{},{},{}

|

| 21 |

+

for files,data in [[train,train_data],[valid,valid_data],[test,test_data],[codebase,codebase_data]]:

|

| 22 |

+

for file in files:

|

| 23 |

+

if '.gz' in file:

|

| 24 |

+

os.system("gzip -d {}".format(file))

|

| 25 |

+

file=file.replace('.gz','')

|

| 26 |

+

with open(file) as f:

|

| 27 |

+

for line in f:

|

| 28 |

+

line=line.strip()

|

| 29 |

+

js=json.loads(line)

|

| 30 |

+

data[js['url']]=js

|

| 31 |

+

|

| 32 |

+

with open('{}/codebase.jsonl'.format(language),'w') as f3:

|

| 33 |

+

for tag,data in [['train',train_data],['valid',valid_data],['test',test_data],['test',test_data],['codebase',codebase_data]]:

|

| 34 |

+

with open('{}/{}.jsonl'.format(language,tag),'w') as f1, open("{}/{}.txt".format(language,tag)) as f2:

|

| 35 |

+

for line in f2:

|

| 36 |

+

line=line.strip()

|

| 37 |

+

if line in data:

|

| 38 |

+

js=data[line]

|

| 39 |

+

if tag in ['valid','test']:

|

| 40 |

+

js['original_string']=''

|

| 41 |

+

js['code']=''

|

| 42 |

+

js['code_tokens']=[]

|

| 43 |

+

if tag=='codebase':

|

| 44 |

+

js['docstring']=''

|

| 45 |

+

js['docstring_tokens']=[]

|

| 46 |

+

f1.write(json.dumps(js)+'\n')

|

| 47 |

+

|

dataset/utils.py

ADDED

|

@@ -0,0 +1,46 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import pickle

|

| 2 |

+

import os

|

| 3 |

+

import json

|

| 4 |

+

import prettytable as pt

|

| 5 |

+

import numpy as np

|

| 6 |

+

import math

|

| 7 |

+

import logging

|

| 8 |

+

logger = logging.getLogger(__name__)

|

| 9 |

+

|

| 10 |

+

def read_json_file(filename):

|

| 11 |

+

with open(filename, 'r') as fp:

|

| 12 |

+

data = fp.readlines()

|

| 13 |

+

if len(data) == 1:

|

| 14 |

+

data = json.loads(data[0])

|

| 15 |

+

else:

|

| 16 |

+

data = [json.loads(line) for line in data]

|

| 17 |

+

return data

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

def save_json_data(data_dir, filename, data):

|

| 21 |

+

os.makedirs(data_dir, exist_ok=True)

|

| 22 |

+

file_name = os.path.join(data_dir, filename)

|

| 23 |

+

with open(file_name, 'w') as output:

|

| 24 |

+

if type(data) == list:

|

| 25 |

+

if type(data[0]) in [str, list,dict]:

|

| 26 |

+

for item in data:

|

| 27 |

+

output.write(json.dumps(item))

|

| 28 |

+

output.write('\n')

|

| 29 |

+

|

| 30 |

+

else:

|

| 31 |

+

json.dump(data, output)

|

| 32 |

+

elif type(data) == dict:

|

| 33 |

+

json.dump(data, output)

|

| 34 |

+

else:

|

| 35 |

+

raise RuntimeError('Unsupported type: %s' % type(data))

|

| 36 |

+

logger.info("saved dataset in " + file_name)

|

| 37 |

+

|

| 38 |

+

def save_pickle_data(path_dir, filename, data):

|

| 39 |

+

full_path = path_dir + '/' + filename

|

| 40 |

+

print("Save dataset to: %s" % full_path)

|

| 41 |

+

if not os.path.exists(path_dir):

|

| 42 |

+

os.makedirs(path_dir)

|

| 43 |

+

|

| 44 |

+

with open(full_path, 'wb') as output:

|

| 45 |

+

pickle.dump(data, output,protocol=4)

|

| 46 |

+

|

model.py

ADDED

|

@@ -0,0 +1,396 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch

|

| 2 |

+

import torch.nn as nn

|

| 3 |

+

from prettytable import PrettyTable

|

| 4 |

+

from torch.nn.modules.activation import Tanh

|

| 5 |

+

import copy

|

| 6 |

+

import logging

|

| 7 |

+

logger = logging.getLogger(__name__)

|

| 8 |

+

from transformers import (WEIGHTS_NAME, AdamW, get_linear_schedule_with_warmup,

|

| 9 |

+

RobertaConfig, RobertaModel, RobertaTokenizer)

|

| 10 |

+

def whitening_torch_final(embeddings):

|

| 11 |

+

mu = torch.mean(embeddings, dim=0, keepdim=True)

|

| 12 |

+

cov = torch.mm((embeddings - mu).t(), embeddings - mu)

|

| 13 |

+

u, s, vt = torch.svd(cov)

|

| 14 |

+

W = torch.mm(u, torch.diag(1/torch.sqrt(s)))

|

| 15 |

+

embeddings = torch.mm(embeddings - mu, W)

|

| 16 |

+

return embeddings

|

| 17 |

+

|

| 18 |

+

class BaseModel(nn.Module):

|

| 19 |

+

def __init__(self, ):

|

| 20 |

+

super().__init__()

|

| 21 |

+

|

| 22 |

+

def model_parameters(self):

|

| 23 |

+

table = PrettyTable()

|

| 24 |

+

table.field_names = ["Layer Name", "Output Shape", "Param #"]

|

| 25 |

+

table.align["Layer Name"] = "l"

|

| 26 |

+

table.align["Output Shape"] = "r"

|

| 27 |

+

table.align["Param #"] = "r"

|

| 28 |

+

for name, parameters in self.named_parameters():

|

| 29 |

+

if parameters.requires_grad:

|

| 30 |

+

table.add_row([name, str(list(parameters.shape)), parameters.numel()])

|

| 31 |

+

return table

|

| 32 |

+

class Model(BaseModel):

|

| 33 |

+

def __init__(self, encoder):

|

| 34 |

+

super(Model, self).__init__()

|

| 35 |

+

self.encoder = encoder

|

| 36 |

+

|

| 37 |

+

def forward(self, code_inputs=None, nl_inputs=None):

|

| 38 |

+

# code_inputs [bs, seq]

|

| 39 |

+

if code_inputs is not None:

|

| 40 |

+

outputs = self.encoder(code_inputs,attention_mask=code_inputs.ne(1))[0] #[bs, seq_len, dim]

|

| 41 |

+

outputs = (outputs*code_inputs.ne(1)[:,:,None]).sum(1)/code_inputs.ne(1).sum(-1)[:,None] # None作为ndarray或tensor的索引作用是增加维度,

|

| 42 |

+

return torch.nn.functional.normalize(outputs, p=2, dim=1)

|

| 43 |

+

else:

|

| 44 |

+

outputs = self.encoder(nl_inputs,attention_mask=nl_inputs.ne(1))[0]

|

| 45 |

+

outputs = (outputs*nl_inputs.ne(1)[:,:,None]).sum(1)/nl_inputs.ne(1).sum(-1)[:,None]

|

| 46 |

+

return torch.nn.functional.normalize(outputs, p=2, dim=1)

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

class Multi_Loss_CoCoSoDa( BaseModel):

|

| 50 |

+

|

| 51 |

+

def __init__(self, base_encoder, args, mlp=False):

|

| 52 |

+

super(Multi_Loss_CoCoSoDa, self).__init__()

|

| 53 |

+

|

| 54 |

+

self.K = args.moco_k

|

| 55 |

+

self.m = args.moco_m

|

| 56 |

+

self.T = args.moco_t

|

| 57 |

+

dim= args.moco_dim

|

| 58 |

+

|

| 59 |

+

# create the encoders

|

| 60 |

+

# num_classes is the output fc dimension

|

| 61 |

+

self.code_encoder_q = base_encoder

|

| 62 |

+

self.code_encoder_k = copy.deepcopy(base_encoder)

|

| 63 |

+

self.nl_encoder_q = base_encoder

|

| 64 |

+

# self.nl_encoder_q = RobertaModel.from_pretrained("roberta-base")

|

| 65 |

+

self.nl_encoder_k = copy.deepcopy(self.nl_encoder_q)

|

| 66 |

+

self.mlp = mlp

|

| 67 |

+

self.time_score= args.time_score

|

| 68 |

+

self.do_whitening = args.do_whitening

|

| 69 |

+

self.do_ineer_loss = args.do_ineer_loss

|

| 70 |

+

self.agg_way = args.agg_way

|

| 71 |

+

self.args = args

|

| 72 |

+

|

| 73 |

+

for param_q, param_k in zip(self.code_encoder_q.parameters(), self.code_encoder_k.parameters()):

|

| 74 |

+

param_k.data.copy_(param_q.data) # initialize

|

| 75 |

+

param_k.requires_grad = False # not update by gradient

|

| 76 |

+

|

| 77 |

+

for param_q, param_k in zip(self.nl_encoder_q.parameters(), self.nl_encoder_k.parameters()):

|

| 78 |

+

param_k.data.copy_(param_q.data) # initialize

|

| 79 |

+

param_k.requires_grad = False # not update by gradient

|

| 80 |

+

|

| 81 |

+

# create the code queue

|

| 82 |

+

torch.manual_seed(3047)

|

| 83 |

+

torch.cuda.manual_seed(3047)

|

| 84 |

+

self.register_buffer("code_queue", torch.randn(dim,self.K ))

|

| 85 |

+

self.code_queue = nn.functional.normalize(self.code_queue, dim=0)

|

| 86 |

+

self.register_buffer("code_queue_ptr", torch.zeros(1, dtype=torch.long))

|

| 87 |

+

# create the masked code queue

|

| 88 |

+

self.register_buffer("masked_code_queue", torch.randn(dim, self.K ))

|

| 89 |

+

self.masked_code_queue = nn.functional.normalize(self.masked_code_queue, dim=0)

|

| 90 |

+

self.register_buffer("masked_code_queue_ptr", torch.zeros(1, dtype=torch.long))

|

| 91 |

+

|

| 92 |

+

|

| 93 |

+

# create the nl queue

|

| 94 |

+

self.register_buffer("nl_queue", torch.randn(dim, self.K ))

|

| 95 |

+

self.nl_queue = nn.functional.normalize(self.nl_queue, dim=0)

|

| 96 |

+

self.register_buffer("nl_queue_ptr", torch.zeros(1, dtype=torch.long))

|

| 97 |

+

# create the masked nl queue

|

| 98 |

+

self.register_buffer("masked_nl_queue", torch.randn(dim, self.K ))

|

| 99 |

+

self.masked_nl_queue= nn.functional.normalize(self.masked_nl_queue, dim=0)

|

| 100 |

+

self.register_buffer("masked_nl_queue_ptr", torch.zeros(1, dtype=torch.long))

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

@torch.no_grad()

|

| 106 |

+

def _momentum_update_key_encoder(self):

|

| 107 |

+

"""

|

| 108 |

+

Momentum update of the key encoder

|

| 109 |

+

% key encoder的Momentum update

|

| 110 |

+

"""

|

| 111 |

+

for param_q, param_k in zip(self.code_encoder_q.parameters(), self.code_encoder_k.parameters()):

|

| 112 |

+

param_k.data = param_k.data * self.m + param_q.data * (1. - self.m)

|

| 113 |

+

for param_q, param_k in zip(self.nl_encoder_q.parameters(), self.nl_encoder_k.parameters()):

|

| 114 |

+

param_k.data = param_k.data * self.m + param_q.data * (1. - self.m)

|

| 115 |

+

if self.mlp:

|

| 116 |

+

for param_q, param_k in zip(self.code_encoder_q_fc.parameters(), self.code_encoder_k_fc.parameters()):

|

| 117 |

+

param_k.data = param_k.data * self.m + param_q.data * (1. - self.m)

|

| 118 |

+

for param_q, param_k in zip(self.nl_encoder_q_fc.parameters(), self.nl_encoder_k_fc.parameters()):

|

| 119 |

+

param_k.data = param_k.data * self.m + param_q.data * (1. - self.m)

|

| 120 |

+

|

| 121 |

+

@torch.no_grad()

|

| 122 |

+

def _dequeue_and_enqueue(self, keys, option='code'):

|

| 123 |

+

# gather keys before updating queue

|

| 124 |

+

# keys = concat_all_gather(keys)

|

| 125 |

+

|

| 126 |

+

batch_size = keys.shape[0]

|

| 127 |

+

if option == 'code':

|

| 128 |

+

code_ptr = int(self.code_queue_ptr)

|

| 129 |

+

assert self.K % batch_size == 0 # for simplicity

|

| 130 |

+

|

| 131 |

+

# replace the keys at ptr (dequeue and enqueue)

|

| 132 |

+

try:

|

| 133 |

+

self.code_queue[:, code_ptr:code_ptr + batch_size] = keys.T

|

| 134 |

+

except:

|

| 135 |

+

print(code_ptr)

|

| 136 |

+

print(batch_size)

|

| 137 |

+

print(keys.shape)

|

| 138 |

+

exit(111)

|

| 139 |

+

code_ptr = (code_ptr + batch_size) % self.K # move pointer ptr->pointer

|

| 140 |

+

|

| 141 |

+

self.code_queue_ptr[0] = code_ptr

|

| 142 |

+

|

| 143 |

+

elif option == 'masked_code':

|

| 144 |

+

masked_code_ptr = int(self.masked_code_queue_ptr)

|

| 145 |

+

assert self.K % batch_size == 0 # for simplicity

|

| 146 |

+

|

| 147 |

+

# replace the keys at ptr (dequeue and enqueue)

|

| 148 |

+

try:

|

| 149 |

+

self.masked_code_queue[:, masked_code_ptr:masked_code_ptr + batch_size] = keys.T

|

| 150 |

+

except:

|

| 151 |

+

print(masked_code_ptr)

|

| 152 |

+

print(batch_size)

|

| 153 |

+

print(keys.shape)

|

| 154 |

+

exit(111)

|

| 155 |

+

masked_code_ptr = (masked_code_ptr + batch_size) % self.K # move pointer ptr->pointer

|

| 156 |

+

|

| 157 |

+

self.masked_code_queue_ptr[0] = masked_code_ptr

|

| 158 |

+

|

| 159 |

+

elif option == 'nl':

|

| 160 |

+

|

| 161 |

+

nl_ptr = int(self.nl_queue_ptr)

|

| 162 |

+

assert self.K % batch_size == 0 # for simplicity

|

| 163 |

+

|

| 164 |

+

# replace the keys at ptr (dequeue and enqueue)

|

| 165 |

+

self.nl_queue[:, nl_ptr:nl_ptr + batch_size] = keys.T

|

| 166 |

+

nl_ptr = (nl_ptr + batch_size) % self.K # move pointer ptr->pointer

|

| 167 |

+

|

| 168 |

+

self.nl_queue_ptr[0] = nl_ptr

|

| 169 |

+

elif option == 'masked_nl':

|

| 170 |

+

|

| 171 |

+

masked_nl_ptr = int(self.masked_nl_queue_ptr)

|

| 172 |

+

assert self.K % batch_size == 0 # for simplicity

|

| 173 |

+

|

| 174 |

+

# replace the keys at ptr (dequeue and enqueue)

|

| 175 |

+

self.masked_nl_queue[:, masked_nl_ptr:masked_nl_ptr + batch_size] = keys.T

|

| 176 |

+

masked_nl_ptr = (masked_nl_ptr + batch_size) % self.K # move pointer ptr->pointer

|

| 177 |

+

|

| 178 |

+

self.masked_nl_queue_ptr[0] = masked_nl_ptr

|

| 179 |

+

|

| 180 |

+

|

| 181 |

+

|

| 182 |

+

def forward(self, source_code_q, source_code_k, nl_q,nl_k):

|

| 183 |

+

"""

|

| 184 |

+

Input:

|

| 185 |

+

im_q: a batch of query images

|

| 186 |

+

im_k: a batch of key images

|

| 187 |

+

Output:

|

| 188 |

+

logits, targets

|

| 189 |

+

"""

|

| 190 |

+

if not self.args.do_multi_lang_continue_pre_train:

|

| 191 |

+

# logger.info(".do_multi_lang_continue_pre_train")

|

| 192 |

+

outputs = self.code_encoder_q(source_code_q, attention_mask=source_code_q.ne(1))[0]

|

| 193 |

+

code_q = (outputs*source_code_q.ne(1)[:,:,None]).sum(1)/source_code_q.ne(1).sum(-1)[:,None] # None作为ndarray或tensor的索引作用是增加维度,

|

| 194 |

+

code_q = torch.nn.functional.normalize(code_q, p=2, dim=1)

|

| 195 |

+

# compute query features for nl

|

| 196 |

+

outputs= self.nl_encoder_q(nl_q, attention_mask=nl_q.ne(1))[0] # queries: NxC bs*feature_dim

|

| 197 |

+

nl_q = (outputs*nl_q.ne(1)[:,:,None]).sum(1)/nl_q.ne(1).sum(-1)[:,None]

|

| 198 |

+

nl_q = torch.nn.functional.normalize(nl_q, p=2, dim=1)

|

| 199 |

+

code2nl_logits = torch.einsum("ab,cb->ac", code_q,nl_q )

|

| 200 |

+

# loss = self.loss_fct(scores*20, torch.arange(code_inputs.size(0), device=scores.device))

|

| 201 |

+

code2nl_logits /= self.T

|

| 202 |

+

# label

|

| 203 |

+

code2nl_label = torch.arange(code2nl_logits.size(0), device=code2nl_logits.device)

|

| 204 |

+

return code2nl_logits,code2nl_label, None, None

|

| 205 |

+

if self.agg_way == "avg":

|

| 206 |

+

# compute query features for source code

|

| 207 |

+

outputs = self.code_encoder_q(source_code_q, attention_mask=source_code_q.ne(1))[0]

|

| 208 |

+

code_q = (outputs*source_code_q.ne(1)[:,:,None]).sum(1)/source_code_q.ne(1).sum(-1)[:,None] # None作为ndarray或tensor的索引作用是增加维度,

|

| 209 |

+

code_q = torch.nn.functional.normalize(code_q, p=2, dim=1)

|

| 210 |

+

# compute query features for nl

|

| 211 |

+

outputs= self.nl_encoder_q(nl_q, attention_mask=nl_q.ne(1))[0] # queries: NxC bs*feature_dim

|

| 212 |

+

nl_q = (outputs*nl_q.ne(1)[:,:,None]).sum(1)/nl_q.ne(1).sum(-1)[:,None]

|

| 213 |

+

nl_q = torch.nn.functional.normalize(nl_q, p=2, dim=1)

|

| 214 |

+

|

| 215 |

+

# compute key features

|

| 216 |

+

with torch.no_grad(): # no gradient to keys

|

| 217 |

+

self._momentum_update_key_encoder() # update the key encoder

|

| 218 |

+

|

| 219 |

+

# shuffle for making use of BN

|

| 220 |

+

# im_k, idx_unshuffle = self._batch_shuffle_ddp(im_k)

|

| 221 |

+

|

| 222 |

+

# masked code

|

| 223 |

+

outputs = self.code_encoder_k(source_code_k, attention_mask=source_code_k.ne(1))[0] # keys: NxC

|

| 224 |

+

code_k = (outputs*source_code_k.ne(1)[:,:,None]).sum(1)/source_code_k.ne(1).sum(-1)[:,None] # None作为ndarray或tensor的索引作用是增加维度,

|

| 225 |

+

code_k = torch.nn.functional.normalize( code_k, p=2, dim=1)

|

| 226 |

+

# masked nl

|

| 227 |

+

outputs = self.nl_encoder_k(nl_k, attention_mask=nl_k.ne(1))[0] # keys: bs*dim

|

| 228 |

+

nl_k = (outputs*nl_k.ne(1)[:,:,None]).sum(1)/nl_k.ne(1).sum(-1)[:,None]

|

| 229 |

+

nl_k = torch.nn.functional.normalize(nl_k, p=2, dim=1)

|

| 230 |

+

|

| 231 |

+

elif self.agg_way == "cls_pooler":

|

| 232 |

+

# logger.info(self.agg_way )

|

| 233 |

+

# compute query features for source code

|

| 234 |

+

outputs = self.code_encoder_q(source_code_q, attention_mask=source_code_q.ne(1))[1]

|

| 235 |

+

code_q = torch.nn.functional.normalize(code_q, p=2, dim=1)

|

| 236 |

+

# compute query features for nl

|

| 237 |

+

outputs= self.nl_encoder_q(nl_q, attention_mask=nl_q.ne(1))[1] # queries: NxC bs*feature_dim

|

| 238 |

+

nl_q = torch.nn.functional.normalize(nl_q, p=2, dim=1)

|

| 239 |

+

|

| 240 |

+

# compute key features

|

| 241 |

+

with torch.no_grad(): # no gradient to keys

|

| 242 |

+

self._momentum_update_key_encoder() # update the key encoder

|

| 243 |

+

|

| 244 |

+

# shuffle for making use of BN

|

| 245 |

+

# im_k, idx_unshuffle = self._batch_shuffle_ddp(im_k)

|

| 246 |

+

|

| 247 |

+

# masked code

|

| 248 |

+

outputs = self.code_encoder_k(source_code_k, attention_mask=source_code_k.ne(1))[1] # keys: NxC

|

| 249 |

+

code_k = torch.nn.functional.normalize( code_k, p=2, dim=1)

|

| 250 |

+

# masked nl

|

| 251 |

+

outputs = self.nl_encoder_k(nl_k, attention_mask=nl_k.ne(1))[1] # keys: bs*dim

|

| 252 |

+

nl_k = torch.nn.functional.normalize(nl_k, p=2, dim=1)

|

| 253 |

+

|

| 254 |

+

elif self.agg_way == "avg_cls_pooler":

|

| 255 |

+

# logger.info(self.agg_way )

|

| 256 |

+

outputs = self.code_encoder_q(source_code_q, attention_mask=source_code_q.ne(1))

|

| 257 |

+

code_q_cls = outputs[1]

|

| 258 |

+

outputs = outputs[0]

|

| 259 |

+

code_q_avg = (outputs*source_code_q.ne(1)[:,:,None]).sum(1)/source_code_q.ne(1).sum(-1)[:,None] # None作为ndarray或tensor的索引作用是增加维度,

|

| 260 |

+

code_q = code_q_cls + code_q_avg

|

| 261 |

+

code_q = torch.nn.functional.normalize(code_q, p=2, dim=1)

|

| 262 |

+

# compute query features for nl

|

| 263 |

+

outputs= self.nl_encoder_q(nl_q, attention_mask=nl_q.ne(1))

|

| 264 |

+

nl_q_cls = outputs[1]

|

| 265 |

+

outputs= outputs[0] # queries: NxC bs*feature_dim

|

| 266 |

+

nl_q_avg = (outputs*nl_q.ne(1)[:,:,None]).sum(1)/nl_q.ne(1).sum(-1)[:,None]

|

| 267 |

+

nl_q = nl_q_avg + nl_q_cls

|

| 268 |

+

nl_q = torch.nn.functional.normalize(nl_q, p=2, dim=1)

|

| 269 |

+

|

| 270 |

+

# compute key features

|

| 271 |

+

with torch.no_grad(): # no gradient to keys

|

| 272 |

+

self._momentum_update_key_encoder() # update the key encoder

|

| 273 |

+

|

| 274 |

+

# shuffle for making use of BN

|

| 275 |

+

# im_k, idx_unshuffle = self._batch_shuffle_ddp(im_k)

|

| 276 |

+

|

| 277 |

+

# masked code

|

| 278 |

+

|

| 279 |

+

outputs = self.code_encoder_k(source_code_k, attention_mask=source_code_k.ne(1))

|

| 280 |

+

code_k_cls = outputs[1] # keys: NxC

|

| 281 |

+

outputs = outputs[0]

|

| 282 |

+

code_k_avg = (outputs*source_code_k.ne(1)[:,:,None]).sum(1)/source_code_k.ne(1).sum(-1)[:,None] # None作为ndarray或tensor的索引作用是增加维度,

|

| 283 |

+

code_k = code_k_cls + code_k_avg

|

| 284 |

+

code_k = torch.nn.functional.normalize( code_k, p=2, dim=1)

|

| 285 |

+

# masked nl

|

| 286 |

+

outputs = self.nl_encoder_k(nl_k, attention_mask=nl_k.ne(1))

|

| 287 |

+

nl_k_cls = outputs[1] # keys: bs*dim

|

| 288 |

+

outputs = outputs[0]

|

| 289 |

+

nl_k_avg = (outputs*nl_k.ne(1)[:,:,None]).sum(1)/nl_k.ne(1).sum(-1)[:,None]

|

| 290 |

+

nl_k = nl_k_cls + nl_k_avg

|

| 291 |

+

nl_k = torch.nn.functional.normalize(nl_k, p=2, dim=1)

|

| 292 |

+

|

| 293 |

+

# ## do_whitening

|

| 294 |

+

# if self.do_whitening:

|

| 295 |

+

# code_q = whitening_torch_final(code_q)

|

| 296 |

+

# code_k = whitening_torch_final(code_k)

|

| 297 |

+

# nl_q = whitening_torch_final(nl_q)

|

| 298 |

+

# nl_k = whitening_torch_final(nl_k)

|

| 299 |

+

|

| 300 |

+

|

| 301 |

+

## code vs nl

|

| 302 |

+

code2nl_pos = torch.einsum('nc,bc->nb', [code_q, nl_q])

|

| 303 |

+

# negative logits: NxK

|

| 304 |

+

code2nl_neg = torch.einsum('nc,ck->nk', [code_q, self.nl_queue.clone().detach()])

|

| 305 |

+

# logits: Nx(n+K)

|

| 306 |

+

code2nl_logits = torch.cat([self.time_score*code2nl_pos, code2nl_neg], dim=1)

|

| 307 |

+

# apply temperature

|

| 308 |

+

code2nl_logits /= self.T

|

| 309 |

+

# label

|

| 310 |

+

code2nl_label = torch.arange(code2nl_logits.size(0), device=code2nl_logits.device)

|

| 311 |

+

|

| 312 |

+

## code vs masked nl

|

| 313 |

+

code2maskednl_pos = torch.einsum('nc,bc->nb', [code_q, nl_k])

|

| 314 |

+

# negative logits: NxK

|

| 315 |

+

code2maskednl_neg = torch.einsum('nc,ck->nk', [code_q, self.masked_nl_queue.clone().detach()])

|

| 316 |

+

# logits: Nx(n+K)

|

| 317 |

+

code2maskednl_logits = torch.cat([self.time_score*code2maskednl_pos, code2maskednl_neg], dim=1)

|

| 318 |

+

# apply temperature

|

| 319 |

+

code2maskednl_logits /= self.T

|

| 320 |

+

# label

|

| 321 |

+

code2maskednl_label = torch.arange(code2maskednl_logits.size(0), device=code2maskednl_logits.device)

|

| 322 |

+

|

| 323 |

+

## nl vs code

|

| 324 |

+

# nl2code_pos = torch.einsum('nc,nc->n', [nl_q, code_k]).unsqueeze(-1)

|

| 325 |

+

nl2code_pos = torch.einsum('nc,bc->nb', [nl_q, code_q])

|

| 326 |

+

# negative logits: bsxK

|

| 327 |

+

nl2code_neg = torch.einsum('nc,ck->nk', [nl_q, self.code_queue.clone().detach()])

|

| 328 |

+

# nl2code_logits: bsx(n+K)

|

| 329 |

+

nl2code_logits = torch.cat([self.time_score*nl2code_pos, nl2code_neg], dim=1)

|

| 330 |

+

# apply temperature

|

| 331 |

+

nl2code_logits /= self.T

|

| 332 |

+

# label

|

| 333 |

+

nl2code_label = torch.arange(nl2code_logits.size(0), device=nl2code_logits.device)

|

| 334 |

+

|

| 335 |

+

## nl vs masked code

|

| 336 |

+

# nl2code_pos = torch.einsum('nc,nc->n', [nl_q, code_k]).unsqueeze(-1)

|

| 337 |

+

nl2maskedcode_pos = torch.einsum('nc,bc->nb', [nl_q, code_k])

|

| 338 |

+

# negative logits: bsxK

|

| 339 |

+

nl2maskedcode_neg = torch.einsum('nc,ck->nk', [nl_q, self.masked_code_queue.clone().detach()])

|

| 340 |

+

# nl2code_logits: bsx(n+K)

|

| 341 |

+

nl2maskedcode_logits = torch.cat([self.time_score*nl2maskedcode_pos, nl2maskedcode_neg], dim=1)

|

| 342 |

+

# apply temperature

|

| 343 |

+

nl2maskedcode_logits /= self.T

|

| 344 |

+

# label

|

| 345 |

+

nl2maskedcode_label = torch.arange(nl2maskedcode_logits.size(0), device=nl2maskedcode_logits.device)

|

| 346 |

+

|

| 347 |

+

#logit 4*bsx(1+K)

|

| 348 |

+

inter_logits = torch.cat((code2nl_logits, code2maskednl_logits, nl2code_logits ,nl2maskedcode_logits ), dim=0)

|

| 349 |

+

|

| 350 |

+

# labels: positive key indicators

|

| 351 |

+

# inter_labels = torch.zeros(inter_logits.shape[0], dtype=torch.long).cuda()

|

| 352 |

+

inter_labels = torch.cat((code2nl_label, code2maskednl_label, nl2code_label, nl2maskedcode_label), dim=0)

|

| 353 |

+

|

| 354 |

+

if self.do_ineer_loss:

|

| 355 |

+

# logger.info("do_ineer_loss")

|

| 356 |

+

## code vs masked code

|

| 357 |

+

code2maskedcode_pos = torch.einsum('nc,bc->nb', [code_q, code_k])

|

| 358 |

+

# negative logits: NxK

|

| 359 |

+

code2maskedcode_neg = torch.einsum('nc,ck->nk', [code_q, self.masked_code_queue.clone().detach()])

|

| 360 |

+

# logits: Nx(n+K)

|

| 361 |

+

code2maskedcode_logits = torch.cat([self.time_score*code2maskedcode_pos, code2maskedcode_neg], dim=1)

|

| 362 |

+

# apply temperature

|

| 363 |

+

code2maskedcode_logits /= self.T

|

| 364 |

+

# label

|

| 365 |

+

code2maskedcode_label = torch.arange(code2maskedcode_logits.size(0), device=code2maskedcode_logits.device)

|

| 366 |

+

|

| 367 |

+

|

| 368 |

+

## nl vs masked nl

|

| 369 |

+

# nl2code_pos = torch.einsum('nc,nc->n', [nl_q, code_k]).unsqueeze(-1)

|

| 370 |

+

nl2maskednl_pos = torch.einsum('nc,bc->nb', [nl_q, nl_k])

|

| 371 |

+

# negative logits: bsxK

|

| 372 |

+

nl2maskednl_neg = torch.einsum('nc,ck->nk', [nl_q, self.masked_nl_queue.clone().detach()])

|

| 373 |

+

# nl2code_logits: bsx(n+K)

|

| 374 |

+

nl2maskednl_logits = torch.cat([self.time_score*nl2maskednl_pos, nl2maskednl_neg], dim=1)

|

| 375 |

+

# apply temperature

|

| 376 |

+

nl2maskednl_logits /= self.T

|

| 377 |

+

# label

|

| 378 |

+

nl2maskednl_label = torch.arange(nl2maskednl_logits.size(0), device=nl2maskednl_logits.device)

|

| 379 |

+

|

| 380 |

+

|

| 381 |

+

#logit 6*bsx(1+K)

|

| 382 |

+

inter_logits = torch.cat((inter_logits, code2maskedcode_logits, nl2maskednl_logits), dim=0)

|

| 383 |

+

|

| 384 |

+

# labels: positive key indicators

|

| 385 |

+

# inter_labels = torch.zeros(inter_logits.shape[0], dtype=torch.long).cuda()

|

| 386 |

+

inter_labels = torch.cat(( inter_labels, code2maskedcode_label, nl2maskednl_label ), dim=0)

|

| 387 |

+

|

| 388 |

+

|

| 389 |

+

# dequeue and enqueue

|

| 390 |

+

self._dequeue_and_enqueue(code_q, option='code')

|

| 391 |

+

self._dequeue_and_enqueue(nl_q, option='nl')

|

| 392 |

+

self._dequeue_and_enqueue(code_k, option='masked_code')

|

| 393 |

+

self._dequeue_and_enqueue(nl_k, option='masked_nl')

|

| 394 |

+

|

| 395 |

+

return inter_logits, inter_labels, code_q, nl_q

|

| 396 |

+

|

parser/DFG.py

ADDED

|

@@ -0,0 +1,1184 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|