diffusion-deploy

README.md (changed)

@@ -14,8 +14,10 @@ pipeline_tag: text-to-image


---


# Small Stable Diffusion Model Card


-

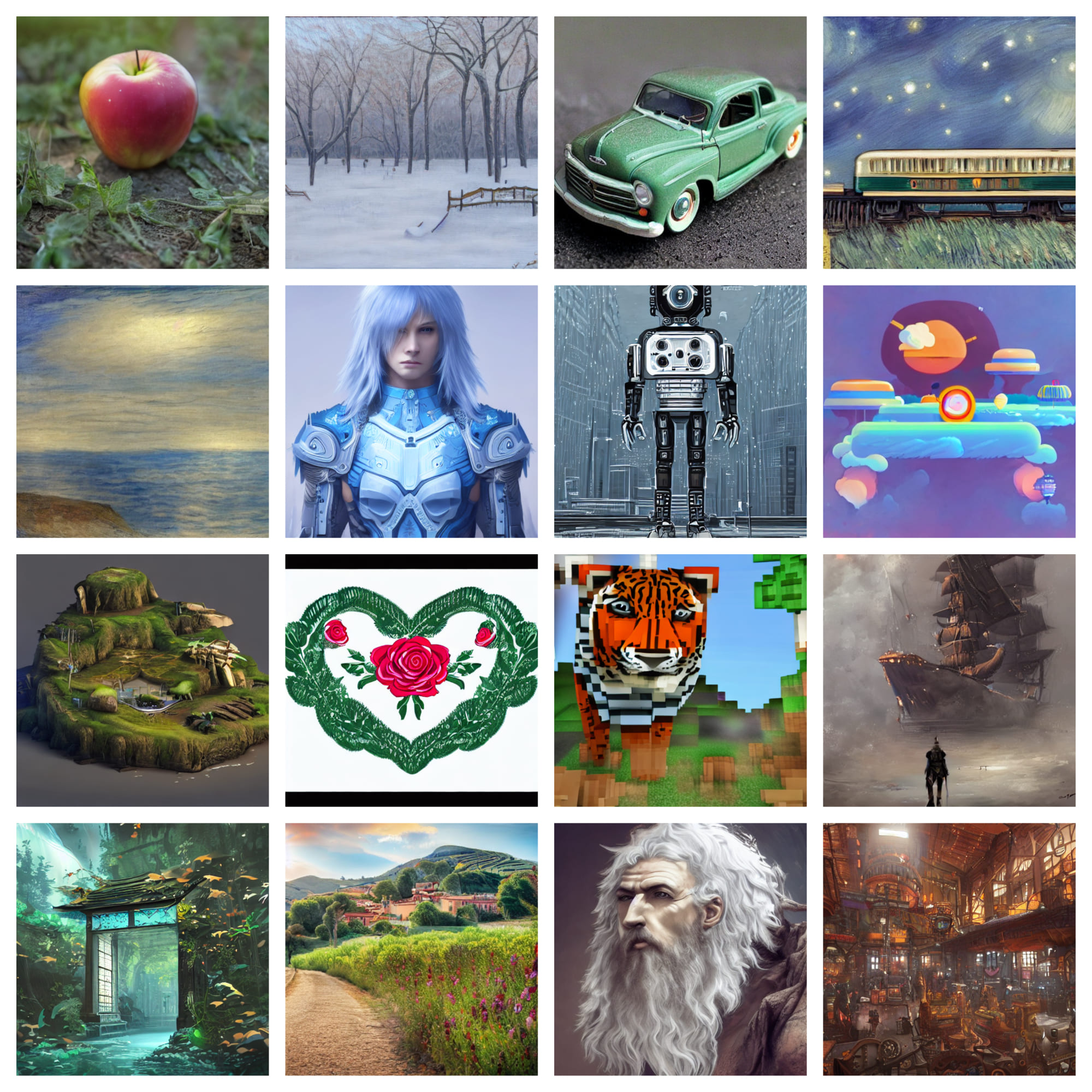

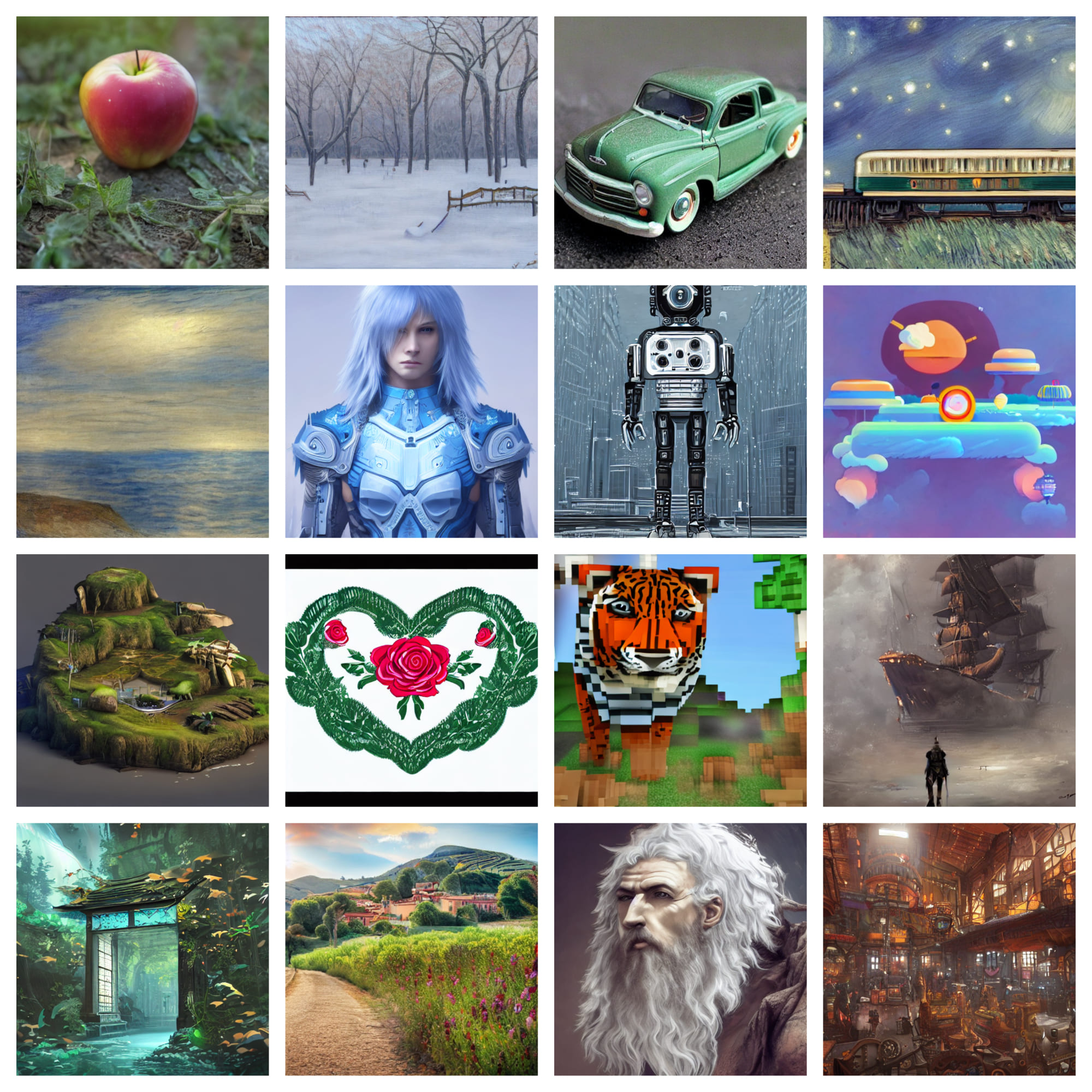

Similar image generation quality, but nearly 1/2 smaller!


Here are some samples:


@@ -52,13 +54,13 @@ This model is initialized from stable-diffusion v1-4. As the model structure is


### Training Procedure


-

After the initialization, the model


- **Hardware:** 8 x A100-80GB GPUs


- **Optimizer:** AdamW


-

- **Stage 1** - Pretrain the unet part of model.

  - **Steps**: 500,000
  - **Batch:** batch size=8, GPUs=8, Gradient Accumulations=2. Total batch size=128
  - **Learning rate:** warmup to 1e-5 for 10,000 steps and then kept constant


---


# Small Stable Diffusion Model Card


+

【Update 2023/02/07】 We recently released [a diffusion deployment repo](https://github.com/OFA-Sys/diffusion-deploy) that speeds up inference on both GPU (\~4x speedup, based on TensorRT) and CPU (\~12x speedup, based on Intel OpenVINO).


+

Integrated with this repo, small-stable-diffusion can generate images in just **5 seconds on the CPU**.


+

Similar image generation quality, but nearly half the size!


Here are some samples:


### Training Procedure


+

After initialization, the model was trained for 1100k steps on 8 x A100 GPUs. Training consists of three stages. The first stage is a simple pre-training procedure. In the last two stages, the original Stable Diffusion model serves as a teacher that distills knowledge into the small model. In all stages, only the UNet parameters were trained; all other parameters were frozen.
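The teacher/student setup can be illustrated with a small loss function. This is a minimal sketch under stated assumptions, not the authors' training code: the card only says that the original Stable Diffusion acts as a teacher and that only the UNet is trained, so the loss form (a standard noise-prediction MSE blended with an MSE toward the teacher's prediction), the weight `alpha`, and the helper names `mse` and `distillation_loss` are hypothetical. Plain Python lists stand in for UNet noise predictions.

```python
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length, non-empty vectors."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(
    student_eps: Sequence[float],
    teacher_eps: Sequence[float],
    true_noise: Sequence[float],
    alpha: float = 0.5,
) -> float:
    """HYPOTHETICAL blended loss for training the small (student) UNet.

    student_eps: noise predicted by the small model's UNet (the only trainable part).
    teacher_eps: noise predicted by the original Stable Diffusion UNet
                 (frozen teacher, used as a fixed target).
    true_noise:  the noise actually added to the latent.
    alpha:       ASSUMED weight on the distillation term, in [0, 1].
    """
    task_term = mse(student_eps, true_noise)      # usual denoising objective
    distill_term = mse(student_eps, teacher_eps)  # pull student toward teacher
    return (1.0 - alpha) * task_term + alpha * distill_term


if __name__ == "__main__":
    # The student already matches the teacher, so the pure distillation loss is zero.
    print(distillation_loss([0.1, 0.2], [0.1, 0.2], [0.0, 0.0], alpha=1.0))
```

In a real setup, gradients from such a loss would update only the student's UNet; the teacher and the remaining components (text encoder, VAE) stay frozen, consistent with the card's statement that only the UNet was trained.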


- **Hardware:** 8 x A100-80GB GPUs


- **Optimizer:** AdamW


+

- **Stage 1** - Pretrain the unet part of the model.

  - **Steps**: 500,000
  - **Batch:** batch size=8, GPUs=8, Gradient Accumulations=2. Total batch size=128
  - **Learning rate:** warmup to 1e-5 for 10,000 steps and then kept constant
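The Stage 1 numbers can be checked with a little arithmetic. The sketch below assumes the warmup is linear from zero (the card only says "warmup to 1e-5 for 10,000 steps"), that one "step" is one optimizer update at the total batch size, and that the 1100k total covers all three stages; the constant and function names are illustrative, not taken from the training code.

```python
# Stage 1 hyperparameters as listed in the model card.
PER_GPU_BATCH = 8
NUM_GPUS = 8
GRAD_ACCUM_STEPS = 2
PEAK_LR = 1e-5
WARMUP_STEPS = 10_000
STAGE1_STEPS = 500_000
TOTAL_STEPS = 1_100_000  # ASSUMED to cover all three stages


def effective_batch_size(per_gpu: int, gpus: int, accum: int) -> int:
    """Samples contributing to one optimizer update."""
    return per_gpu * gpus * accum


def learning_rate(step: int) -> float:
    """ASSUMED linear warmup from 0 to PEAK_LR, then constant."""
    if step < 0:
        raise ValueError("step must be non-negative")
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    return PEAK_LR


if __name__ == "__main__":
    batch = effective_batch_size(PER_GPU_BATCH, NUM_GPUS, GRAD_ACCUM_STEPS)
    print(batch)                        # 128
    print(STAGE1_STEPS * batch)         # 64000000 samples seen in Stage 1
    print(TOTAL_STEPS - STAGE1_STEPS)   # 600000 steps left for stages 2 and 3
    print(learning_rate(WARMUP_STEPS))  # 1e-05
```

The product 8 x 8 x 2 = 128 matches the card's stated total batch size; the per-stage split of the remaining 600k steps is not given in this section.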
