Commit 05d3718 (parent: e27890b)

Update README.md

README.md (changed)

@@ -47,6 +47,13 @@ scores = model.predict([(Query, Paragraph1), (Query, Paragraph2)])

|

|

| 47 |

|

| 48 |

## Evaluation

|

| 49 |

|

| 50 |

-

We

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 51 |

|

| 52 |

|

|

|

|

| 47 |

|

| 48 |

## Evaluation

|

| 49 |

|

| 50 |

+

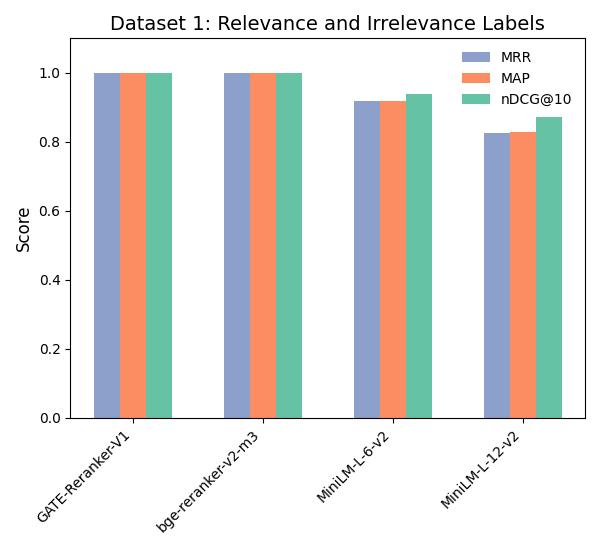

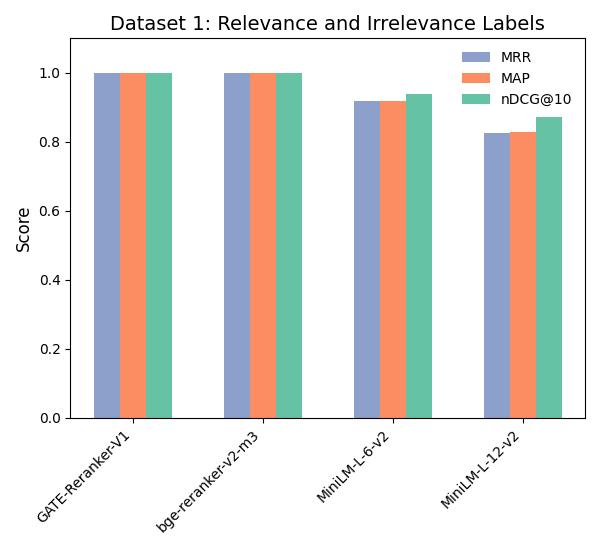

We evaluate our model on two different datasets using the metrics **MAP**, **MRR** and **NDCG@10**:

|

| 51 |

+

|

| 52 |

+

The purpose of this evaluation is to highlight the performance of our model with regards to: Relevant/Irrelevant labels and

|

| 53 |

+

|

| 54 |

+

1 - Dataset 1: [NAMAA-Space/Arabic-Reranking-Triplet-5-Eval](https://huggingface.co/datasets/NAMAA-Space/Arabic-Reranking-Triplet-5-Eval)

|

| 55 |

+

2 - Dataset 2: [NAMAA-Space/Ar-Reranking-Eval](https://huggingface.co/datasets/NAMAA-Space/Ar-Reranking-Eval)

|

| 56 |

+

|

| 57 |

+

and compare it to other famous models on the hub

|

| 58 |

|

| 59 |

|
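The README names MAP, MRR and NDCG@10 but does not say how they are computed. The following is a minimal sketch of the standard definitions, assuming binary Relevant/Irrelevant labels (1 or 0) and one list of reranker scores per query, such as the scores returned by the `model.predict` call in the hunk context above. The helper names (`rank_labels`, `average_precision`, `reciprocal_rank`, `ndcg_at_k`, `evaluate`) and the `(scores, labels)` input layout are hypothetical and do not reflect the evaluation datasets' actual schema or the authors' evaluation code.

```python
import math
from typing import Sequence

# Hypothetical helpers: a sketch of MAP, MRR and NDCG@k for a reranker,
# assuming binary relevance labels (1 = relevant, 0 = irrelevant).


def rank_labels(scores: Sequence[float], labels: Sequence[int]) -> list[int]:
    """Return relevance labels reordered by descending model score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [labels[i] for i in order]


def average_precision(ranked: Sequence[int]) -> float:
    """AP for one query: mean of precision@k taken at each relevant position."""
    hits, total = 0, 0.0
    for k, rel in enumerate(ranked, start=1):
        if rel > 0:
            hits += 1
            total += hits / k
    return total / hits if hits else 0.0


def reciprocal_rank(ranked: Sequence[int]) -> float:
    """1 / rank of the first relevant document, or 0.0 if none is relevant."""
    for k, rel in enumerate(ranked, start=1):
        if rel > 0:
            return 1.0 / k
    return 0.0


def ndcg_at_k(ranked: Sequence[int], k: int = 10) -> float:
    """NDCG@k with gain = label and a log2(rank + 1) position discount."""
    def dcg(rels: Sequence[int]) -> float:
        return sum(rel / math.log2(i + 2) for i, rel in enumerate(rels[:k]))

    ideal = dcg(sorted(ranked, reverse=True))
    return dcg(list(ranked)) / ideal if ideal > 0 else 0.0


def evaluate(queries: list[tuple[list[float], list[int]]], k: int = 10) -> dict[str, float]:
    """Average MAP, MRR and NDCG@k over one (scores, labels) pair per query."""
    ranked_lists = [rank_labels(scores, labels) for scores, labels in queries]
    n = len(ranked_lists)
    return {
        "MAP": sum(average_precision(r) for r in ranked_lists) / n,
        "MRR": sum(reciprocal_rank(r) for r in ranked_lists) / n,
        f"NDCG@{k}": sum(ndcg_at_k(r, k) for r in ranked_lists) / n,
    }


if __name__ == "__main__":
    # Toy data: the relevant paragraph is ranked 1st for the first query
    # and 3rd for the second query.
    toy = [
        ([0.9, 0.2, 0.4], [1, 0, 0]),
        ([0.1, 0.8, 0.3], [1, 0, 0]),
    ]
    print(evaluate(toy))  # MAP and MRR are about 0.667, NDCG@10 is 0.75
```

With a single relevant paragraph per query, MAP and MRR coincide; they diverge only when a query has several relevant candidates, which is where reporting both becomes informative.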