Upload 14 files

Browse files- README.md +61 -0

- examples/castle.jpeg +0 -0

- examples/cat.jpeg +0 -0

- examples/cyberpunk.jpeg +0 -0

- examples/ds.jpeg +0 -0

- examples/gf.jpeg +0 -0

- examples/robot.jpeg +0 -0

- examples/shiba.jpeg +0 -0

- examples/ssh.jpeg +0 -0

- examples/waitan.jpeg +0 -0

- feature_extractor/preprocessor_config.json +20 -0

- model_index.json +33 -0

- vae/config.json +30 -0

- vae/diffusion_pytorch_model.bin +3 -0

README.md

CHANGED

|

@@ -1,3 +1,64 @@

|

|

| 1 |

---

|

|

|

|

| 2 |

license: creativeml-openrail-m

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

language: zh

|

| 3 |

license: creativeml-openrail-m

|

| 4 |

+

|

| 5 |

+

tags:

|

| 6 |

+

|

| 7 |

+

- diffusion

|

| 8 |

+

- zh

|

| 9 |

+

- Chinese

|

| 10 |

---

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

# Midu-Stable-Diffusion-2-Chinese-Style-v0.1

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

## Brief Introduction

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|  |  |  |

|

| 23 |

+

| ------------------------------------- | ----------------------------- | ------------------------------- |

|

| 24 |

+

|  |  |  |

|

| 25 |

+

|  |  |  |

|

| 26 |

+

|

| 27 |

+

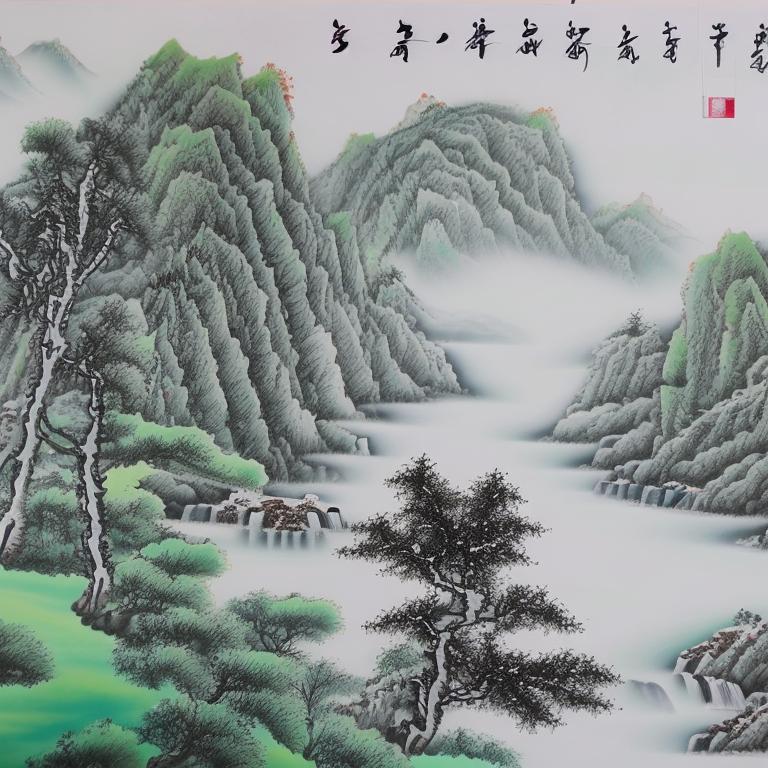

大概是huggingface 社区首个开源的Stable diffusion 2 中文模型。该模型基于stable diffusion V2.1模型,在约500万条的中国风格特挑中文数据上进行微调,数据来源于多个开源数据集如[LAION-5B](https://laion.ai/blog/laion-5b/), [Noah-Wukong](https://wukong-dataset.github.io/wukong-dataset/), [Zero](https://zero.so.com/)和一些网络数据。

|

| 28 |

+

|

| 29 |

+

Probably the first open sourced Chinese Stable Diffusion 2 model. This model is finetuned based on stable diffusion V2.1 with 5M chinese style filtered data. Dataset is composed of several different chinese open source dataset such as [LAION-5B](https://laion.ai/blog/laion-5b/), [Noah-Wukong](https://wukong-dataset.github.io/wukong-dataset/), [Zero](https://zero.so.com/) and some web data.

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

## Model Details

|

| 34 |

+

|

| 35 |

+

### 文本编码器

|

| 36 |

+

|

| 37 |

+

文本编码器使用冻结参数的[lyua1225/clip-huge-zh-75k-steps-bs4096](https://huggingface.co/lyua1225/clip-huge-zh-75k-steps-bs4096)。

|

| 38 |

+

|

| 39 |

+

Text encoder is frozen [lyua1225/clip-huge-zh-75k-steps-bs4096](https://huggingface.co/lyua1225/clip-huge-zh-75k-steps-bs4096) .

|

| 40 |

+

|

| 41 |

+

### Unet

|

| 42 |

+

|

| 43 |

+

在特挑的500万中文数据集上训练了150K steps,使用指数移动平均值(EMA)做原绘画能力保留,使模型能够在中文风格和原绘画能力之间获得权衡。

|

| 44 |

+

|

| 45 |

+

Training on 5M chinese style filtered data for 150k steps. Exponential moving average(EMA) is applied to keep the original Stable Diffusion 2 drawing capability and reach a balance between chinese style and original drawing capability.

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

## Usage

|

| 49 |

+

|

| 50 |

+

因为使用了customed tokenizer, 所以需要优先加载一下tokenizer

|

| 51 |

+

|

| 52 |

+

```py

|

| 53 |

+

# !pip install git+https://github.com/huggingface/accelerate

|

| 54 |

+

import torch

|

| 55 |

+

from diffusers import StableDiffusionPipeline

|

| 56 |

+

torch.backends.cudnn.benchmark = True

|

| 57 |

+

pipe = StableDiffusionPipeline.from_pretrained("IDEA-CCNL/Taiyi-Stable-Diffusion-1B-Chinese-v0.1", torch_dtype=torch.float16)

|

| 58 |

+

pipe.to('cuda')

|

| 59 |

+

|

| 60 |

+

prompt = '飞流直下三千尺,油画'

|

| 61 |

+

image = pipe(prompt, guidance_scale=7.5).images[0]

|

| 62 |

+

image.save("飞流.png")

|

| 63 |

+

```

|

| 64 |

+

|

examples/castle.jpeg

ADDED

|

examples/cat.jpeg

ADDED

|

examples/cyberpunk.jpeg

ADDED

|

examples/ds.jpeg

ADDED

|

examples/gf.jpeg

ADDED

|

examples/robot.jpeg

ADDED

|

examples/shiba.jpeg

ADDED

|

examples/ssh.jpeg

ADDED

|

examples/waitan.jpeg

ADDED

|

feature_extractor/preprocessor_config.json

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"crop_size": 224,

|

| 3 |

+

"do_center_crop": true,

|

| 4 |

+

"do_convert_rgb": true,

|

| 5 |

+

"do_normalize": true,

|

| 6 |

+

"do_resize": true,

|

| 7 |

+

"feature_extractor_type": "CLIPFeatureExtractor",

|

| 8 |

+

"image_mean": [

|

| 9 |

+

0.48145466,

|

| 10 |

+

0.4578275,

|

| 11 |

+

0.40821073

|

| 12 |

+

],

|

| 13 |

+

"image_std": [

|

| 14 |

+

0.26862954,

|

| 15 |

+

0.26130258,

|

| 16 |

+

0.27577711

|

| 17 |

+

],

|

| 18 |

+

"resample": 3,

|

| 19 |

+

"size": 224

|

| 20 |

+

}

|

model_index.json

ADDED

|

@@ -0,0 +1,33 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_class_name": "StableDiffusionPipeline",

|

| 3 |

+

"_diffusers_version": "0.9.0",

|

| 4 |

+

"feature_extractor": [

|

| 5 |

+

null,

|

| 6 |

+

null

|

| 7 |

+

],

|

| 8 |

+

"requires_safety_checker": false,

|

| 9 |

+

"safety_checker": [

|

| 10 |

+

null,

|

| 11 |

+

null

|

| 12 |

+

],

|

| 13 |

+

"scheduler": [

|

| 14 |

+

"diffusers",

|

| 15 |

+

"DDIMScheduler"

|

| 16 |

+

],

|

| 17 |

+

"text_encoder": [

|

| 18 |

+

"transformers",

|

| 19 |

+

"CLIPTextModel"

|

| 20 |

+

],

|

| 21 |

+

"tokenizer": [

|

| 22 |

+

"transformers",

|

| 23 |

+

"CLIPTokenizer"

|

| 24 |

+

],

|

| 25 |

+

"unet": [

|

| 26 |

+

"diffusers",

|

| 27 |

+

"UNet2DConditionModel"

|

| 28 |

+

],

|

| 29 |

+

"vae": [

|

| 30 |

+

"diffusers",

|

| 31 |

+

"AutoencoderKL"

|

| 32 |

+

]

|

| 33 |

+

}

|

vae/config.json

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_class_name": "AutoencoderKL",

|

| 3 |

+

"_diffusers_version": "0.9.0",

|

| 4 |

+

"_name_or_path": "/data/pretrained_weights/stable-diffusion-2-1-zh-v0",

|

| 5 |

+

"act_fn": "silu",

|

| 6 |

+

"block_out_channels": [

|

| 7 |

+

128,

|

| 8 |

+

256,

|

| 9 |

+

512,

|

| 10 |

+

512

|

| 11 |

+

],

|

| 12 |

+

"down_block_types": [

|

| 13 |

+

"DownEncoderBlock2D",

|

| 14 |

+

"DownEncoderBlock2D",

|

| 15 |

+

"DownEncoderBlock2D",

|

| 16 |

+

"DownEncoderBlock2D"

|

| 17 |

+

],

|

| 18 |

+

"in_channels": 3,

|

| 19 |

+

"latent_channels": 4,

|

| 20 |

+

"layers_per_block": 2,

|

| 21 |

+

"norm_num_groups": 32,

|

| 22 |

+

"out_channels": 3,

|

| 23 |

+

"sample_size": 768,

|

| 24 |

+

"up_block_types": [

|

| 25 |

+

"UpDecoderBlock2D",

|

| 26 |

+

"UpDecoderBlock2D",

|

| 27 |

+

"UpDecoderBlock2D",

|

| 28 |

+

"UpDecoderBlock2D"

|

| 29 |

+

]

|

| 30 |

+

}

|

vae/diffusion_pytorch_model.bin

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:11bc15ceb385823b4adb68bd5bdd7568d0c706c3de5ea9ebcb0b807092fc9030

|

| 3 |

+

size 167407601

|