Upload folder using huggingface_hub

- .gitignore +5 -0

- LICENSE +7 -0

- README.md +147 -3

- demo.ipynb +331 -0

- generate.ipynb +166 -0

- mingpt.jpg +0 -0

- mingpt/__init__.py +0 -0

- mingpt/bpe.py +319 -0

- mingpt/model.py +310 -0

- mingpt/trainer.py +109 -0

- mingpt/utils.py +103 -0

- projects/adder/adder.py +207 -0

- projects/adder/readme.md +4 -0

- projects/chargpt/chargpt.py +133 -0

- projects/chargpt/readme.md +9 -0

- projects/readme.md +4 -0

- setup.py +12 -0

- tests/test_huggingface_import.py +57 -0

.gitignore

ADDED

|

@@ -0,0 +1,5 @@


| 1 |

+

.ipynb_checkpoints/

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.swp

|

| 4 |

+

.env

|

| 5 |

+

.pylintrc

|

LICENSE

ADDED

|

@@ -0,0 +1,7 @@


| 1 |

+

The MIT License (MIT) Copyright (c) 2020 Andrej Karpathy

|

| 2 |

+

|

| 3 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

|

| 4 |

+

|

| 5 |

+

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

|

| 6 |

+

|

| 7 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

README.md

CHANGED

|

@@ -1,3 +1,147 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-


| 1 |

+

|

| 2 |

+

# minGPT

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

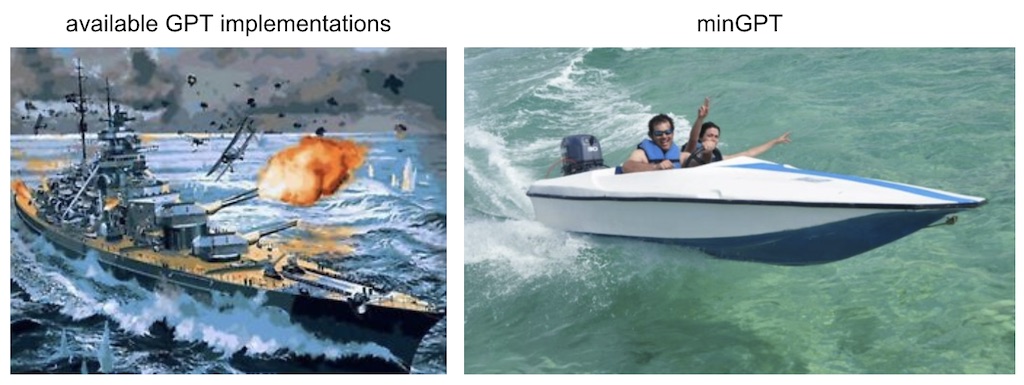

A PyTorch re-implementation of [GPT](https://github.com/openai/gpt-2), both training and inference. minGPT tries to be small, clean, interpretable and educational, as most of the currently available GPT model implementations can be a bit sprawling. GPT is not a complicated model and this implementation is appropriately about 300 lines of code (see [mingpt/model.py](mingpt/model.py)). All that's going on is that a sequence of indices feeds into a [Transformer](https://arxiv.org/abs/1706.03762), and a probability distribution over the next index in the sequence comes out. The majority of the complexity is just being clever with batching (both across examples and over sequence length) for efficiency.
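
To make the "indices in, distribution out" picture concrete, here is a minimal sketch in plain Python. `toy_logits` is a hypothetical stand-in for the Transformer and is not part of minGPT; only the softmax step mirrors what a GPT does with its final scores.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_logits(indices, vocab_size):
    """Hypothetical stand-in for the Transformer: one score per vocabulary entry.

    It simply favors the index after the last one seen, so the result is easy to check.
    """
    favored = (indices[-1] + 1) % vocab_size
    return [2.0 if i == favored else 0.0 for i in range(vocab_size)]

sequence = [0, 1, 2]  # a sequence of token indices goes in
probs = softmax(toy_logits(sequence, vocab_size=5))  # a distribution comes out
next_index = max(range(len(probs)), key=probs.__getitem__)  # greedy pick
```

Sampling from `probs` instead of taking the maximum would give the non-greedy variant of generation.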

|

| 7 |

+

|

| 8 |

+

**note (Jan 2023)**: though I may continue to accept and change some details, minGPT is in a semi-archived state. For more recent developments see my rewrite [nanoGPT](https://github.com/karpathy/nanoGPT). Basically, minGPT became referenced across a wide variety of places (notebooks, blogs, courses, books, etc.) which made me less willing to make the bigger changes I wanted to make to move the code forward. I also wanted to change the direction a bit, from a sole focus on education to something that is still simple and hackable but has teeth (reproduces medium-sized industry benchmarks, accepts some tradeoffs to gain runtime efficiency, etc).

|

| 9 |

+

|

| 10 |

+

The minGPT library is three files: [mingpt/model.py](mingpt/model.py) contains the actual Transformer model definition, [mingpt/bpe.py](mingpt/bpe.py) contains a mildly refactored Byte Pair Encoder that translates between text and sequences of integers exactly like OpenAI did in GPT, [mingpt/trainer.py](mingpt/trainer.py) is (GPT-independent) PyTorch boilerplate code that trains the model. Then there are a number of demos and projects that use the library in the `projects` folder:

|

| 11 |

+

|

| 12 |

+

- `projects/adder` trains a GPT from scratch to add numbers (inspired by the addition section in the GPT-3 paper)

|

| 13 |

+

- `projects/chargpt` trains a GPT to be a character-level language model on some input text file

|

| 14 |

+

- `demo.ipynb` shows a minimal usage of the `GPT` and `Trainer` in a notebook format on a simple sorting example

|

| 15 |

+

- `generate.ipynb` shows how one can load a pretrained GPT2 and generate text given some prompt

|

| 16 |

+

|

| 17 |

+

### Library Installation

|

| 18 |

+

|

| 19 |

+

If you want to `import mingpt` into your project:

|

| 20 |

+

|

| 21 |

+

```

|

| 22 |

+

git clone https://github.com/karpathy/minGPT.git

|

| 23 |

+

cd minGPT

|

| 24 |

+

pip install -e .

|

| 25 |

+

```

|

| 26 |

+

|

| 27 |

+

### Usage

|

| 28 |

+

|

| 29 |

+

Here's how you'd instantiate a GPT-2 (124M param version):

|

| 30 |

+

|

| 31 |

+

```python

|

| 32 |

+

from mingpt.model import GPT

|

| 33 |

+

model_config = GPT.get_default_config()

|

| 34 |

+

model_config.model_type = 'gpt2'

|

| 35 |

+

model_config.vocab_size = 50257 # openai's model vocabulary

|

| 36 |

+

model_config.block_size = 1024 # openai's model block_size (i.e. input context length)

|

| 37 |

+

model = GPT(model_config)

|

| 38 |

+

```
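
As a rough sanity check on the "124M" figure, the parameter count can be estimated by hand. The sketch below assumes the standard GPT-2 small layout (12 layers, 768-dimensional embeddings, biases on every linear layer, a 4x MLP expansion, two LayerNorms per block plus a final one) and leaves out the output head. The helper `gpt2_param_count` is hypothetical and not part of minGPT.

```python
def gpt2_param_count(n_layer, n_embd, vocab_size, block_size):
    """Count parameters of a GPT-2 style decoder, excluding the output head."""
    d = n_embd
    attn = (d * 3 * d + 3 * d) + (d * d + d)      # qkv projection + output projection
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)   # expand to 4*d, then project back
    norms = 2 * (2 * d)                           # two LayerNorms (weight + bias) per block
    per_block = attn + mlp + norms
    embeddings = vocab_size * d + block_size * d  # token + learned position embeddings
    final_norm = 2 * d                            # LayerNorm after the last block
    return n_layer * per_block + embeddings + final_norm

n_params = gpt2_param_count(n_layer=12, n_embd=768, vocab_size=50257, block_size=1024)
print(f"{n_params / 1e6:.2f}M parameters")
```

Under these assumptions the count comes out at roughly 124.44M, which is in line with the figure quoted above.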

|

| 39 |

+

|

| 40 |

+

And here's how you'd train it:

|

| 41 |

+

|

| 42 |

+

```python

|

| 43 |

+

# your subclass of torch.utils.data.Dataset that emits example

|

| 44 |

+

# torch LongTensors of length up to 1024, with integers from [0,50257)

|

| 45 |

+

train_dataset = YourDataset()

|

| 46 |

+

|

| 47 |

+

from mingpt.trainer import Trainer

|

| 48 |

+

train_config = Trainer.get_default_config()

|

| 49 |

+

train_config.learning_rate = 5e-4 # many possible options, see the file

|

| 50 |

+

train_config.max_iters = 1000

|

| 51 |

+

train_config.batch_size = 32

|

| 52 |

+

trainer = Trainer(train_config, model, train_dataset)

|

| 53 |

+

trainer.run()

|

| 54 |

+

```

|

| 55 |

+

|

| 56 |

+

See `demo.ipynb` for a more concrete example.
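
For a language-modeling dataset, each example is typically a pair of sequences offset by one position, the same pattern `demo.ipynb` uses. The sketch below uses plain Python lists to stay self-contained; `make_example` is a hypothetical helper, and a real `__getitem__` would presumably return the two lists as `torch.long` tensors.

```python
def make_example(tokens, block_size):
    """Split one token sequence into model inputs x and next-token targets y."""
    chunk = tokens[: block_size + 1]  # one extra token is needed for the final target
    x = chunk[:-1]                    # what the model sees
    y = chunk[1:]                     # what it should predict at each position
    return x, y

x, y = make_example([5, 9, 2, 7, 7, 1], block_size=4)
```

Here `y[i]` is the token that follows `x[i]`, so every position in the block contributes a training signal.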

|

| 57 |

+

|

| 58 |

+

### Unit tests

|

| 59 |

+

|

| 60 |

+

Coverage is not super amazing just yet but:

|

| 61 |

+

|

| 62 |

+

```

|

| 63 |

+

python -m unittest discover tests

|

| 64 |

+

```

|

| 65 |

+

|

| 66 |

+

### todos

|

| 67 |

+

|

| 68 |

+

- add gpt-2 finetuning demo on arbitrary given text file

|

| 69 |

+

- add dialog agent demo

|

| 70 |

+

- better docs of outcomes for existing projects (adder, chargpt)

|

| 71 |

+

- add mixed precision and related training scaling goodies

|

| 72 |

+

- distributed training support

|

| 73 |

+

- reproduce some benchmarks in projects/, e.g. text8 or other language modeling

|

| 74 |

+

- proper logging instead of print statement amateur hour haha

|

| 75 |

+

- i probably should have a requirements.txt file...

|

| 76 |

+

- it should be possible to load in many other model weights other than just gpt2-\*

|

| 77 |

+

|

| 78 |

+

### References

|

| 79 |

+

|

| 80 |

+

Code:

|

| 81 |

+

|

| 82 |

+

- [openai/gpt-2](https://github.com/openai/gpt-2) has the model definition in TensorFlow, but not the training code

|

| 83 |

+

- [openai/image-gpt](https://github.com/openai/image-gpt) has some more modern gpt-3 like modifications in its code, good reference as well

|

| 84 |

+

- [huggingface/transformers](https://github.com/huggingface/transformers) has a [language-modeling example](https://github.com/huggingface/transformers/tree/master/examples/pytorch/language-modeling). It is full-featured but as a result also somewhat challenging to trace. E.g. in some large functions as much as 90% of the code sits behind various branching statements and is unused in the default setting of simple language modeling

|

| 85 |

+

|

| 86 |

+

Papers + some implementation notes:

|

| 87 |

+

|

| 88 |

+

#### Improving Language Understanding by Generative Pre-Training (GPT-1)

|

| 89 |

+

|

| 90 |

+

- Our model largely follows the original transformer work

|

| 91 |

+

- We trained a 12-layer decoder-only transformer with masked self-attention heads (768 dimensional states and 12 attention heads). For the position-wise feed-forward networks, we used 3072 dimensional inner states.

|

| 92 |

+

- Adam max learning rate of 2.5e-4. (later GPT-3 for this model size uses 6e-4)

|

| 93 |

+

- LR decay: increased linearly from zero over the first 2000 updates and annealed to 0 using a cosine schedule

|

| 94 |

+

- We train for 100 epochs on minibatches of 64 randomly sampled, contiguous sequences of 512 tokens.

|

| 95 |

+

- Since layernorm is used extensively throughout the model, a simple weight initialization of N(0, 0.02) was sufficient

|

| 96 |

+

- bytepair encoding (BPE) vocabulary with 40,000 merges

|

| 97 |

+

- residual, embedding, and attention dropouts with a rate of 0.1 for regularization.

|

| 98 |

+

- modified version of L2 regularization proposed in (37), with w = 0.01 on all non bias or gain weights

|

| 99 |

+

- For the activation function, we used the Gaussian Error Linear Unit (GELU).

|

| 100 |

+

- We used learned position embeddings instead of the sinusoidal version proposed in the original work

|

| 101 |

+

- For finetuning: We add dropout to the classifier with a rate of 0.1. learning rate of 6.25e-5 and a batchsize of 32. 3 epochs. We use a linear learning rate decay schedule with warmup over 0.2% of training. λ was set to 0.5.

|

| 102 |

+

- GPT-1 model is 12 layers and d_model 768, ~117M params
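
The learning rate schedule described in the notes above (linear warmup from zero over 2000 updates, then a cosine anneal to 0) can be sketched as follows. The total number of updates is not stated in these notes, so `total_steps` is a hypothetical parameter, and `lr_at` is an illustrative helper rather than code from any of the referenced repositories.

```python
import math

def lr_at(step, total_steps, max_lr=2.5e-4, warmup_steps=2000):
    """Linear warmup from 0 to max_lr, then cosine anneal to 0 at total_steps."""
    if step < warmup_steps:
        return max_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)  # hold at 0 if called past the end
    return 0.5 * max_lr * (1.0 + math.cos(math.pi * progress))

# Example: sample the schedule for a hypothetical 100k-update run
schedule = [lr_at(s, total_steps=100_000) for s in (0, 1000, 2000, 51_000, 100_000)]
```

The rate peaks at `max_lr` exactly when warmup ends and reaches half of it at the midpoint of the decay phase.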

|

| 103 |

+

|

| 104 |

+

#### Language Models are Unsupervised Multitask Learners (GPT-2)

|

| 105 |

+

|

| 106 |

+

- LayerNorm was moved to the input of each sub-block, similar to a pre-activation residual network

|

| 107 |

+

- an additional layer normalization was added after the final self-attention block.

|

| 108 |

+

- modified initialization which accounts for the accumulation on the residual path with model depth is used. We scale the weights of residual layers at initialization by a factor of 1/√N where N is the number of residual layers. (weird because in their released code i can only find a simple use of the old 0.02... in their release of image-gpt I found it used for c_proj, and even then only for attn, not for mlp. huh. https://github.com/openai/image-gpt/blob/master/src/model.py)

|

| 109 |

+

- the vocabulary is expanded to 50,257

|

| 110 |

+

- increase the context size from 512 to 1024 tokens

|

| 111 |

+

- larger batchsize of 512 is used

|

| 112 |

+

- GPT-2 used 48 layers and d_model 1600 (vs. original 12 layers and d_model 768). ~1.542B params
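
The 1/√N scaling mentioned above is easy to work out numerically. This sketch assumes N counts two residual layers per Transformer block (one attention, one MLP), which is one reading of the paper rather than something these notes confirm. `residual_init_std` is a hypothetical helper.

```python
import math

def residual_init_std(n_layer, base_std=0.02, residual_per_block=2):
    """Standard deviation for residual projections, scaled by 1/sqrt(N)."""
    n_residual = residual_per_block * n_layer  # assumption: attn + mlp per block
    return base_std / math.sqrt(n_residual)

std_small = residual_init_std(n_layer=12)  # 12-layer model
std_large = residual_init_std(n_layer=48)  # 48-layer model
```

Deeper models get a smaller initial scale on the residual path, so the accumulated sum over layers starts out at a comparable magnitude.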

|

| 113 |

+

|

| 114 |

+

#### Language Models are Few-Shot Learners (GPT-3)

|

| 115 |

+

|

| 116 |

+

- GPT-3: 96 layers, 96 heads, with d_model of 12,288 (175B parameters).

|

| 117 |

+

- GPT-1-like: 12 layers, 12 heads, d_model 768 (125M)

|

| 118 |

+

- We use the same model and architecture as GPT-2, including the modified initialization, pre-normalization, and reversible tokenization described therein

|

| 119 |

+

- we use alternating dense and locally banded sparse attention patterns in the layers of the transformer, similar to the Sparse Transformer

|

| 120 |

+

- we always have the feedforward layer four times the size of the bottleneck layer, d_ff = 4 * d_model

|

| 121 |

+

- all models use a context window of n_ctx = 2048 tokens.

|

| 122 |

+

- Adam with β1 = 0.9, β2 = 0.95, and eps = 10^-8

|

| 123 |

+

- All models use weight decay of 0.1 to provide a small amount of regularization. (NOTE: GPT-1 used 0.01 I believe, see above)

|

| 124 |

+

- clip the global norm of the gradient at 1.0

|

| 125 |

+

- Linear LR warmup over the first 375 million tokens. Then use cosine decay for learning rate down to 10% of its value, over 260 billion tokens.

|

| 126 |

+

- gradually increase the batch size linearly from a small value (32k tokens) to the full value over the first 4-12 billion tokens of training, depending on the model size.

|

| 127 |

+

- full 2048-sized time context window is always used, with a special END OF DOCUMENT token delimiter
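
Clipping the global norm of the gradient at 1.0, as listed above, means rescaling all gradients by a single factor whenever their combined L2 norm exceeds the threshold. Below is a minimal sketch on plain Python lists; `clip_global_norm` is a hypothetical helper, similar in spirit to PyTorch's `torch.nn.utils.clip_grad_norm_` but not taken from it.

```python
import math

def clip_global_norm(grads, max_norm=1.0):
    """Scale all gradients by one factor so their combined L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for tensor in grads for g in tensor))
    scale = min(1.0, max_norm / (total + 1e-6))  # never scale gradients up
    return [[g * scale for g in tensor] for tensor in grads], total

grads = [[3.0, 0.0], [0.0, 4.0]]  # two toy "parameter tensors", global norm 5
clipped, norm_before = clip_global_norm(grads)
norm_after = math.sqrt(sum(g * g for tensor in clipped for g in tensor))
```

Because every entry is multiplied by the same factor, the direction of the overall update is preserved and only its length changes.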

|

| 128 |

+

|

| 129 |

+

#### Generative Pretraining from Pixels (Image GPT)

|

| 130 |

+

|

| 131 |

+

- When working with images, we pick the identity permutation π_i = i for 1 ≤ i ≤ n, also known as raster order.

|

| 132 |

+

- we create our own 9-bit color palette by clustering (R, G, B) pixel values using k-means with k = 512.

|

| 133 |

+

- Our largest model, iGPT-XL, contains L = 60 layers and uses an embedding size of d = 3072 for a total of 6.8B parameters.

|

| 134 |

+

- Our next largest model, iGPT-L, is essentially identical to GPT-2 with L = 48 layers, but contains a slightly smaller embedding size of d = 1536 (vs 1600) for a total of 1.4B parameters.

|

| 135 |

+

- We use the same model code as GPT-2, except that we initialize weights in the layer-dependent fashion as in Sparse Transformer (Child et al., 2019) and zero-initialize all projections producing logits.

|

| 136 |

+

- We also train iGPT-M, a 455M parameter model with L = 36 and d = 1024

|

| 137 |

+

- iGPT-S, a 76M parameter model with L = 24 and d = 512 (okay, and how many heads? looks like the Github code claims 8)

|

| 138 |

+

- When pre-training iGPT-XL, we use a batch size of 64 and train for 2M iterations, and for all other models we use a batch size of 128 and train for 1M iterations.

|

| 139 |

+

- Adam with β1 = 0.9 and β2 = 0.95

|

| 140 |

+

- The learning rate is warmed up for one epoch, and then decays to 0

|

| 141 |

+

- We did not use weight decay because applying a small weight decay of 0.01 did not change representation quality.

|

| 142 |

+

- iGPT-S lr 0.003

|

| 143 |

+

- No dropout is used.
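
The 9-bit palette in the notes above follows from k = 512 = 2^9: each pixel becomes a single token index into a table of 512 colors. The sketch below shows only the lookup step with a tiny made-up palette; `nearest_palette_index` is a hypothetical helper, and the real palette would come from k-means over (R, G, B) values.

```python
import math

def nearest_palette_index(pixel, palette):
    """Return the index of the palette color closest to an (R, G, B) pixel."""
    def sq_dist(color):
        return sum((p - c) ** 2 for p, c in zip(pixel, color))
    return min(range(len(palette)), key=lambda i: sq_dist(palette[i]))

# Tiny hypothetical palette; the paper's has k = 512 entries found by k-means.
palette = [(0, 0, 0), (255, 0, 0), (0, 255, 0), (0, 0, 255)]
token = nearest_palette_index((200, 30, 40), palette)  # a reddish pixel
bits_per_token = math.log2(512)                        # size of the full palette in bits
```

Quantizing this way shrinks the sequence by a factor of three compared with modeling the R, G and B channels as separate tokens.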

|

| 144 |

+

|

| 145 |

+

### License

|

| 146 |

+

|

| 147 |

+

MIT

|

demo.ipynb

ADDED

|

@@ -0,0 +1,331 @@


| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": [

|

| 7 |

+

"A cute little demo showing the simplest usage of minGPT. Configured to run fine on Macbook Air in like a minute."

|

| 8 |

+

]

|

| 9 |

+

},

|

| 10 |

+

{

|

| 11 |

+

"cell_type": "code",

|

| 12 |

+

"execution_count": 1,

|

| 13 |

+

"metadata": {},

|

| 14 |

+

"outputs": [],

|

| 15 |

+

"source": [

|

| 16 |

+

"import torch\n",

|

| 17 |

+

"from torch.utils.data import Dataset\n",

|

| 18 |

+

"from torch.utils.data.dataloader import DataLoader\n",

|

| 19 |

+

"from mingpt.utils import set_seed\n",

|

| 20 |

+

"set_seed(3407)"

|

| 21 |

+

]

|

| 22 |

+

},

|

| 23 |

+

{

|

| 24 |

+

"cell_type": "code",

|

| 25 |

+

"execution_count": 2,

|

| 26 |

+

"metadata": {},

|

| 27 |

+

"outputs": [],

|

| 28 |

+

"source": [

|

| 29 |

+

"import pickle\n",

|

| 30 |

+

"\n",

|

| 31 |

+

"class SortDataset(Dataset):\n",

|

| 32 |

+

" \"\"\" \n",

|

| 33 |

+

" Dataset for the Sort problem. E.g. for problem length 6:\n",

|

| 34 |

+

" Input: 0 0 2 1 0 1 -> Output: 0 0 0 1 1 2\n",

|

| 35 |

+

" Which will feed into the transformer concatenated as:\n",

|

| 36 |

+

" input: 0 0 2 1 0 1 0 0 0 1 1\n",

|

| 37 |

+

" output: I I I I I 0 0 0 1 1 2\n",

|

| 38 |

+

" where I is \"ignore\", as the transformer is reading the input sequence\n",

|

| 39 |

+

" \"\"\"\n",

|

| 40 |

+

"\n",

|

| 41 |

+

" def __init__(self, split, length=6, num_digits=3):\n",

|

| 42 |

+

" assert split in {'train', 'test'}\n",

|

| 43 |

+

" self.split = split\n",

|

| 44 |

+

" self.length = length\n",

|

| 45 |

+

" self.num_digits = num_digits\n",

|

| 46 |

+

" \n",

|

| 47 |

+

" def __len__(self):\n",

|

| 48 |

+

" return 10000 # ...\n",

|

| 49 |

+

" \n",

|

| 50 |

+

" def get_vocab_size(self):\n",

|

| 51 |

+

" return self.num_digits\n",

|

| 52 |

+

" \n",

|

| 53 |

+

" def get_block_size(self):\n",

|

| 54 |

+

" # the length of the sequence that will feed into transformer, \n",

|

| 55 |

+

" # containing concatenated input and the output, but -1 because\n",

|

| 56 |

+

" # the transformer starts making predictions at the last input element\n",

|

| 57 |

+

" return self.length * 2 - 1\n",

|

| 58 |

+

"\n",

|

| 59 |

+

" def __getitem__(self, idx):\n",

|

| 60 |

+

" \n",

|

| 61 |

+

" # use rejection sampling to generate an input example from the desired split\n",

|

| 62 |

+

" while True:\n",

|

| 63 |

+

" # generate some random integers\n",

|

| 64 |

+

" inp = torch.randint(self.num_digits, size=(self.length,), dtype=torch.long)\n",

|

| 65 |

+

" # half of the time let's try to boost the number of examples that \n",

|

| 66 |

+

" # have a large number of repeats, as this is what the model seems to struggle\n",

|

| 67 |

+

" # with later in training, and they are kind of rare\n",

|

| 68 |

+

" if torch.rand(1).item() < 0.5:\n",

|

| 69 |

+

" if inp.unique().nelement() > self.length // 2:\n",

|

| 70 |

+

" # too many unique digits, re-sample\n",

|

| 71 |

+

" continue\n",

|

| 72 |

+

" # figure out if this generated example is train or test based on its hash\n",

|

| 73 |

+

" h = hash(pickle.dumps(inp.tolist()))\n",

|

| 74 |

+

" inp_split = 'test' if h % 4 == 0 else 'train' # designate 25% of examples as test\n",

|

| 75 |

+

" if inp_split == self.split:\n",

|

| 76 |

+

" break # ok\n",

|

| 77 |

+

" \n",

|

| 78 |

+

" # solve the task: i.e. sort\n",

|

| 79 |

+

" sol = torch.sort(inp)[0]\n",

|

| 80 |

+

"\n",

|

| 81 |

+

" # concatenate the problem specification and the solution\n",

|

| 82 |

+

" cat = torch.cat((inp, sol), dim=0)\n",

|

| 83 |

+

"\n",

|

| 84 |

+

" # the inputs to the transformer will be the offset sequence\n",

|

| 85 |

+

" x = cat[:-1].clone()\n",

|

| 86 |

+

" y = cat[1:].clone()\n",

|

| 87 |

+

" # we only want to predict at output locations, mask out the loss at the input locations\n",

|

| 88 |

+

" y[:self.length-1] = -1\n",

|

| 89 |

+

" return x, y\n"

|

| 90 |

+

]

|

| 91 |

+

},

|

| 92 |

+

{

|

| 93 |

+

"cell_type": "code",

|

| 94 |

+

"execution_count": 3,

|

| 95 |

+

"metadata": {},

|

| 96 |

+

"outputs": [

|

| 97 |

+

{

|

| 98 |

+

"name": "stdout",

|

| 99 |

+

"output_type": "stream",

|

| 100 |

+

"text": [

|

| 101 |

+

"1 -1\n",

|

| 102 |

+

"0 -1\n",

|

| 103 |

+

"1 -1\n",

|

| 104 |

+

"0 -1\n",

|

| 105 |

+

"0 -1\n",

|

| 106 |

+

"0 0\n",

|

| 107 |

+

"0 0\n",

|

| 108 |

+

"0 0\n",

|

| 109 |

+

"0 0\n",

|

| 110 |

+

"0 1\n",

|

| 111 |

+

"1 1\n"

|

| 112 |

+

]

|

| 113 |

+

}

|

| 114 |

+

],

|

| 115 |

+

"source": [

|

| 116 |

+

"# print an example instance of the dataset\n",

|

| 117 |

+

"train_dataset = SortDataset('train')\n",

|

| 118 |

+

"test_dataset = SortDataset('test')\n",

|

| 119 |

+

"x, y = train_dataset[0]\n",

|

| 120 |

+

"for a, b in zip(x,y):\n",

|

| 121 |

+

" print(int(a),int(b))"

|

| 122 |

+

]

|

| 123 |

+

},

|

| 124 |

+

{

|

| 125 |

+

"cell_type": "code",

|

| 126 |

+

"execution_count": 4,

|

| 127 |

+

"metadata": {},

|

| 128 |

+

"outputs": [

|

| 129 |

+

{

|

| 130 |

+

"name": "stdout",

|

| 131 |

+

"output_type": "stream",

|

| 132 |

+

"text": [

|

| 133 |

+

"number of parameters: 0.09M\n"

|

| 134 |

+

]

|

| 135 |

+

}

|

| 136 |

+

],

|

| 137 |

+

"source": [

|

| 138 |

+

"# create a GPT instance\n",

|

| 139 |

+

"from mingpt.model import GPT\n",

|

| 140 |

+

"\n",

|

| 141 |

+

"model_config = GPT.get_default_config()\n",

|

| 142 |

+

"model_config.model_type = 'gpt-nano'\n",

|

| 143 |

+

"model_config.vocab_size = train_dataset.get_vocab_size()\n",

|

| 144 |

+

"model_config.block_size = train_dataset.get_block_size()\n",

|

| 145 |

+

"model = GPT(model_config)"

|

| 146 |

+

]

|

| 147 |

+

},

|

| 148 |

+

{

|

| 149 |

+

"cell_type": "code",

|

| 150 |

+

"execution_count": 5,

|

| 151 |

+

"metadata": {},

|

| 152 |

+

"outputs": [

|

| 153 |

+

{

|

| 154 |

+

"name": "stdout",

|

| 155 |

+

"output_type": "stream",

|

| 156 |

+

"text": [

|

| 157 |

+

"running on device cuda\n"

|

| 158 |

+

]

|

| 159 |

+

}

|

| 160 |

+

],

|

| 161 |

+

"source": [

|

| 162 |

+

"# create a Trainer object\n",

|

| 163 |

+

"from mingpt.trainer import Trainer\n",

|

| 164 |

+

"\n",

|

| 165 |

+

"train_config = Trainer.get_default_config()\n",

|

| 166 |

+

"train_config.learning_rate = 5e-4 # the model we're using is so small that we can go a bit faster\n",

|

| 167 |

+

"train_config.max_iters = 2000\n",

|

| 168 |

+

"train_config.num_workers = 0\n",

|

| 169 |

+

"trainer = Trainer(train_config, model, train_dataset)"

|

| 170 |

+

]

|

| 171 |

+

},

|

| 172 |

+

{

|

| 173 |

+

"cell_type": "code",

|

| 174 |

+

"execution_count": 6,

|

| 175 |

+

"metadata": {},

|

| 176 |

+

"outputs": [

|

| 177 |

+

{

|

| 178 |

+

"name": "stdout",

|

| 179 |

+

"output_type": "stream",

|

| 180 |

+

"text": [

|

| 181 |

+

"iter_dt 0.00ms; iter 0: train loss 1.06407\n",

|

| 182 |

+

"iter_dt 18.17ms; iter 100: train loss 0.14712\n",

|

| 183 |

+

"iter_dt 18.70ms; iter 200: train loss 0.05315\n",

|

| 184 |

+

"iter_dt 19.65ms; iter 300: train loss 0.04404\n",

|

| 185 |

+

"iter_dt 31.64ms; iter 400: train loss 0.04724\n",

|

| 186 |

+

"iter_dt 18.43ms; iter 500: train loss 0.02521\n",

|

| 187 |

+

"iter_dt 19.83ms; iter 600: train loss 0.03352\n",

|

| 188 |

+

"iter_dt 19.58ms; iter 700: train loss 0.00539\n",

|

| 189 |

+

"iter_dt 18.72ms; iter 800: train loss 0.02057\n",

|

| 190 |

+

"iter_dt 18.26ms; iter 900: train loss 0.00360\n",

|

| 191 |

+

"iter_dt 18.50ms; iter 1000: train loss 0.00788\n",

|

| 192 |

+

"iter_dt 20.64ms; iter 1100: train loss 0.01162\n",

|

| 193 |

+

"iter_dt 18.63ms; iter 1200: train loss 0.00963\n",

|

| 194 |

+

"iter_dt 18.32ms; iter 1300: train loss 0.02066\n",

|

| 195 |

+

"iter_dt 18.40ms; iter 1400: train loss 0.01739\n",

|

| 196 |

+

"iter_dt 18.37ms; iter 1500: train loss 0.00376\n",

|

| 197 |

+

"iter_dt 18.67ms; iter 1600: train loss 0.00133\n",

|

| 198 |

+

"iter_dt 18.38ms; iter 1700: train loss 0.00179\n",

|

| 199 |

+

"iter_dt 18.66ms; iter 1800: train loss 0.00079\n",

|

| 200 |

+

"iter_dt 18.48ms; iter 1900: train loss 0.00042\n"

|

| 201 |

+

]

|

| 202 |

+

}

|

| 203 |

+

],

|

| 204 |

+

"source": [

|

| 205 |

+

"def batch_end_callback(trainer):\n",

|

| 206 |

+

" if trainer.iter_num % 100 == 0:\n",

|

| 207 |

+

" print(f\"iter_dt {trainer.iter_dt * 1000:.2f}ms; iter {trainer.iter_num}: train loss {trainer.loss.item():.5f}\")\n",

|

| 208 |

+

"trainer.set_callback('on_batch_end', batch_end_callback)\n",

|

| 209 |

+

"\n",

|

| 210 |

+

"trainer.run()"

|

| 211 |

+

]

|

| 212 |

+

},

|

| 213 |

+

{

|

| 214 |

+

"cell_type": "code",

|

| 215 |

+

"execution_count": 7,

|

| 216 |

+

"metadata": {},

|

| 217 |

+

"outputs": [],

|

| 218 |

+

"source": [

|

| 219 |

+

"# now let's perform some evaluation\n",

|

| 220 |

+

"model.eval();"

|

| 221 |

+

]

|

| 222 |

+

},

|

| 223 |

+

{

|

| 224 |

+

"cell_type": "code",

|

| 225 |

+

"execution_count": 8,

|

| 226 |

+

"metadata": {},

|

| 227 |

+

"outputs": [

|

| 228 |

+

{

|

| 229 |

+

"name": "stdout",

|

| 230 |

+

"output_type": "stream",

|

| 231 |

+

"text": [

|

| 232 |

+

"train final score: 5000/5000 = 100.00% correct\n",

|

| 233 |

+

"test final score: 5000/5000 = 100.00% correct\n"

|

| 234 |

+

]

|

| 235 |

+

}

|

| 236 |

+

],

|

| 237 |

+

"source": [

|

| 238 |

+

"def eval_split(trainer, split, max_batches):\n",

|

| 239 |

+

" dataset = {'train':train_dataset, 'test':test_dataset}[split]\n",

|

| 240 |

+

" n = train_dataset.length # naughty direct access shrug\n",

|

| 241 |

+

" results = []\n",

|

| 242 |

+

" mistakes_printed_already = 0\n",

|

| 243 |

+

" loader = DataLoader(dataset, batch_size=100, num_workers=0, drop_last=False)\n",

|

| 244 |

+

" for b, (x, y) in enumerate(loader):\n",

|

| 245 |

+

" x = x.to(trainer.device)\n",

|

| 246 |

+

" y = y.to(trainer.device)\n",

|

| 247 |

+

" # isolate the input pattern alone\n",

|

| 248 |

+

" inp = x[:, :n]\n",

|

| 249 |

+

" sol = y[:, -n:]\n",

|

| 250 |

+

" # let the model sample the rest of the sequence\n",

|

| 251 |

+

" cat = model.generate(inp, n, do_sample=False) # using greedy argmax, not sampling\n",

|

| 252 |

+

" sol_candidate = cat[:, n:] # isolate the filled in sequence\n",

|

| 253 |

+

" # compare the predicted sequence to the true sequence\n",

|

| 254 |

+

" correct = (sol == sol_candidate).all(1).cpu() # Software 1.0 vs. Software 2.0 fight RIGHT on this line haha\n",

|

| 255 |

+

" for i in range(x.size(0)):\n",

|

| 256 |

+

" results.append(int(correct[i]))\n",

|

| 257 |

+

" if not correct[i] and mistakes_printed_already < 3: # only print up to 3 mistakes to get a sense\n",

|

| 258 |

+

" mistakes_printed_already += 1\n",

|

| 259 |

+

" print(\"GPT claims that %s sorted is %s but gt is %s\" % (inp[i].tolist(), sol_candidate[i].tolist(), sol[i].tolist()))\n",

|

| 260 |

+

" if max_batches is not None and b+1 >= max_batches:\n",

|

| 261 |

+

" break\n",

|

| 262 |

+

" rt = torch.tensor(results, dtype=torch.float)\n",

|

| 263 |

+

" print(\"%s final score: %d/%d = %.2f%% correct\" % (split, rt.sum(), len(results), 100*rt.mean()))\n",

|

| 264 |

+

" return rt.sum()\n",

|

| 265 |

+

"\n",

|

| 266 |

+

"# run a lot of examples from both train and test through the model and verify the output correctness\n",

|

| 267 |

+

"with torch.no_grad():\n",

|

| 268 |

+

" train_score = eval_split(trainer, 'train', max_batches=50)\n",

|

| 269 |

+

" test_score = eval_split(trainer, 'test', max_batches=50)"

|

| 270 |

+

]

|

| 271 |

+

},

|

| 272 |

+

{

|

| 273 |

+

"cell_type": "code",

|

| 274 |

+

"execution_count": 9,

|

| 275 |

+

"metadata": {},

|

| 276 |

+

"outputs": [

|

| 277 |

+

{

|

| 278 |

+

"name": "stdout",

|

| 279 |

+

"output_type": "stream",

|

| 280 |

+

"text": [

|

| 281 |

+

"input sequence : [[0, 0, 2, 1, 0, 1]]\n",

|

| 282 |

+

"predicted sorted: [[0, 0, 0, 1, 1, 2]]\n",

|

| 283 |

+

"gt sort : [0, 0, 0, 1, 1, 2]\n",

|

| 284 |

+

"matches : True\n"

|

| 285 |

+

]

|

| 286 |

+

}

|

| 287 |

+

],

|

| 288 |

+

"source": [

|

| 289 |

+

"# let's run a random given sequence through the model as well\n",

|

| 290 |

+

"n = train_dataset.length # naughty direct access shrug\n",

|

| 291 |

+

"inp = torch.tensor([[0, 0, 2, 1, 0, 1]], dtype=torch.long).to(trainer.device)\n",

|

| 292 |

+

"assert inp[0].nelement() == n\n",

|

| 293 |

+

"with torch.no_grad():\n",

|

| 294 |

+

" cat = model.generate(inp, n, do_sample=False)\n",

|

| 295 |

+

"sol = torch.sort(inp[0])[0]\n",

|

| 296 |

+

"sol_candidate = cat[:, n:]\n",

|

| 297 |

+

"print('input sequence :', inp.tolist())\n",

|

| 298 |

+

"print('predicted sorted:', sol_candidate.tolist())\n",

|

| 299 |

+

"print('gt sort :', sol.tolist())\n",

|

| 300 |

+

"print('matches :', bool((sol == sol_candidate).all()))"

|

| 301 |

+

]

|

| 302 |

+

}

|

| 303 |

+

],

|

| 304 |

+

"metadata": {

|

| 305 |

+

"kernelspec": {

|

| 306 |

+

"display_name": "Python 3.10.4 64-bit",

|

| 307 |

+

"language": "python",

|

| 308 |

+

"name": "python3"

|

| 309 |

+

},

|

| 310 |

+

"language_info": {

|

| 311 |

+

"codemirror_mode": {

|

| 312 |

+

"name": "ipython",

|

| 313 |

+

"version": 3

|

| 314 |

+

},

|

| 315 |

+

"file_extension": ".py",

|

| 316 |

+

"mimetype": "text/x-python",

|

| 317 |

+

"name": "python",

|

| 318 |

+

"nbconvert_exporter": "python",

|

| 319 |

+

"pygments_lexer": "ipython3",

|

| 320 |

+

"version": "3.10.4"

|

| 321 |

+

},

|

| 322 |

+

"orig_nbformat": 4,

|

| 323 |

+

"vscode": {

|

| 324 |

+

"interpreter": {

|

| 325 |

+

"hash": "3ad933181bd8a04b432d3370b9dc3b0662ad032c4dfaa4e4f1596c548f763858"

|

| 326 |

+

}

|

| 327 |

+

}

|

| 328 |

+

},

|

| 329 |

+

"nbformat": 4,

|

| 330 |

+

"nbformat_minor": 2

|

| 331 |

+

}

|

generate.ipynb

ADDED

|

@@ -0,0 +1,166 @@

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": [

|

| 7 |

+

"Shows how one can generate text given a prompt and some hyperparameters, using either minGPT or huggingface/transformers"

|

| 8 |

+

]

|

| 9 |

+

},

|

| 10 |

+

{

|

| 11 |

+

"cell_type": "code",

|

| 12 |

+

"execution_count": 1,

|

| 13 |

+

"metadata": {},

|

| 14 |

+

"outputs": [],

|

| 15 |

+

"source": [

|

| 16 |

+

"import torch\n",

|

| 17 |

+

"from transformers import GPT2Tokenizer, GPT2LMHeadModel\n",

|

| 18 |

+

"from mingpt.model import GPT\n",

|

| 19 |

+

"from mingpt.utils import set_seed\n",

|

| 20 |

+

"from mingpt.bpe import BPETokenizer\n",

|

| 21 |

+

"set_seed(3407)"

|

| 22 |

+

]

|

| 23 |

+

},

|

| 24 |

+

{

|

| 25 |

+

"cell_type": "code",

|

| 26 |

+

"execution_count": 2,

|

| 27 |

+

"metadata": {},

|

| 28 |

+

"outputs": [],

|

| 29 |

+

"source": [

|

| 30 |

+

"use_mingpt = True # use minGPT or huggingface/transformers model?\n",

|

| 31 |

+

"model_type = 'gpt2-xl'\n",

|

| 32 |

+

"device = 'cuda'"

|

| 33 |

+

]

|

| 34 |

+

},

|

| 35 |

+

{

|

| 36 |

+

"cell_type": "code",

|

| 37 |

+

"execution_count": 3,

|

| 38 |

+

"metadata": {},

|

| 39 |

+

"outputs": [

|

| 40 |

+

{

|

| 41 |

+

"name": "stdout",

|

| 42 |

+

"output_type": "stream",

|

| 43 |

+

"text": [

|

| 44 |

+

"number of parameters: 1557.61M\n"

|

| 45 |

+

]

|

| 46 |

+

}

|

| 47 |

+

],

|

| 48 |

+

"source": [

|

| 49 |

+

"if use_mingpt:\n",

|

| 50 |

+

" model = GPT.from_pretrained(model_type)\n",

|

| 51 |

+

"else:\n",

|

| 52 |

+

" model = GPT2LMHeadModel.from_pretrained(model_type)\n",

|

| 53 |

+

" model.config.pad_token_id = model.config.eos_token_id # suppress a warning\n",

|

| 54 |

+

"\n",

|

| 55 |

+

"# ship model to device and set to eval mode\n",

|

| 56 |

+

"model.to(device)\n",

|

| 57 |

+

"model.eval();"

|

| 58 |

+

]

|

| 59 |

+

},

|

| 60 |

+

{

|

| 61 |

+

"cell_type": "code",

|

| 62 |

+

"execution_count": 4,

|

| 63 |

+

"metadata": {},

|

| 64 |

+

"outputs": [],

|

| 65 |

+

"source": [

|

| 66 |

+

"\n",

|

| 67 |

+

"def generate(prompt='', num_samples=10, steps=20, do_sample=True):\n",

|

| 68 |

+

" \n",

|

| 69 |

+

" # tokenize the input prompt into integer input sequence\n",

|

| 70 |

+

" if use_mingpt:\n",

|

| 71 |

+

" tokenizer = BPETokenizer()\n",

|

| 72 |

+

" if prompt == '':\n",

|

| 73 |

+

" # to create unconditional samples...\n",

|

| 74 |

+

" # manually create a tensor with only the special <|endoftext|> token\n",

|

| 75 |

+

" # similar to what openai's code does here https://github.com/openai/gpt-2/blob/master/src/generate_unconditional_samples.py\n",

|

| 76 |

+

" x = torch.tensor([[tokenizer.encoder.encoder['<|endoftext|>']]], dtype=torch.long).to(device)\n",

|

| 77 |

+

" else:\n",

|

| 78 |

+

" x = tokenizer(prompt).to(device)\n",

|

| 79 |

+

" else:\n",

|

| 80 |

+

" tokenizer = GPT2Tokenizer.from_pretrained(model_type)\n",

|

| 81 |

+

" if prompt == '': \n",

|

| 82 |

+

" # to create unconditional samples...\n",

|

| 83 |

+

" # huggingface/transformers tokenizer special cases these strings\n",

|

| 84 |

+

" prompt = '<|endoftext|>'\n",

|

| 85 |

+

" encoded_input = tokenizer(prompt, return_tensors='pt').to(device)\n",

|

| 86 |

+

" x = encoded_input['input_ids']\n",

|

| 87 |

+

" \n",

|

| 88 |

+

" # we'll process all desired num_samples in a batch, so expand out the batch dim\n",

|

| 89 |

+

" x = x.expand(num_samples, -1)\n",

|

| 90 |

+

"\n",

|

| 91 |

+

" # forward the model `steps` times to get samples, in a batch\n",

|

| 92 |

+

" y = model.generate(x, max_new_tokens=steps, do_sample=do_sample, top_k=40)\n",

|

| 93 |

+

" \n",

|

| 94 |

+

" for i in range(num_samples):\n",

|

| 95 |

+

" out = tokenizer.decode(y[i].cpu().squeeze())\n",

|

| 96 |

+

" print('-'*80)\n",

|

| 97 |

+

" print(out)\n",

|

| 98 |

+

" "

|

| 99 |

+

]

|

| 100 |

+

},

|

| 101 |

+

{

|

| 102 |

+

"cell_type": "code",

|

| 103 |

+

"execution_count": 5,

|

| 104 |

+

"metadata": {},

|

| 105 |

+

"outputs": [

|

| 106 |

+

{

|

| 107 |

+

"name": "stdout",

|

| 108 |

+

"output_type": "stream",

|

| 109 |

+

"text": [

|

| 110 |

+

"--------------------------------------------------------------------------------\n",

|

| 111 |

+

"Andrej Karpathy, the chief of the criminal investigation department, said during a news conference, \"We still have a lot of\n",

|

| 112 |

+

"--------------------------------------------------------------------------------\n",

|

| 113 |

+

"Andrej Karpathy, the man whom most of America believes is the architect of the current financial crisis. He runs the National Council\n",

|

| 114 |

+

"--------------------------------------------------------------------------------\n",

|

| 115 |

+

"Andrej Karpathy, the head of the Department for Regional Reform of Bulgaria and an MP in the centre-right GERB party\n",

|

| 116 |

+

"--------------------------------------------------------------------------------\n",

|

| 117 |

+

"Andrej Karpathy, the former head of the World Bank's IMF department, who worked closely with the IMF. The IMF had\n",

|

| 118 |

+

"--------------------------------------------------------------------------------\n",

|

| 119 |

+

"Andrej Karpathy, the vice president for innovation and research at Citi who oversaw the team's work to make sense of the\n",

|

| 120 |

+

"--------------------------------------------------------------------------------\n",

|

| 121 |

+

"Andrej Karpathy, the CEO of OOAK Research, said that the latest poll indicates that it won't take much to\n",

|

| 122 |

+

"--------------------------------------------------------------------------------\n",

|

| 123 |

+

"Andrej Karpathy, the former prime minister of Estonia was at the helm of a three-party coalition when parliament met earlier this\n",

|

| 124 |

+

"--------------------------------------------------------------------------------\n",

|

| 125 |

+

"Andrej Karpathy, the director of the Institute of Economic and Social Research, said if the rate of return is only 5 per\n",

|

| 126 |

+

"--------------------------------------------------------------------------------\n",

|

| 127 |

+

"Andrej Karpathy, the minister of commerce for Latvia's western neighbour: \"The deal means that our two countries have reached more\n",

|

| 128 |

+

"--------------------------------------------------------------------------------\n",

|

| 129 |

+

"Andrej Karpathy, the state's environmental protection commissioner. \"That's why we have to keep these systems in place.\"\n",

|

| 130 |

+

"\n"

|

| 131 |

+

]

|

| 132 |

+

}

|

| 133 |

+

],

|

| 134 |

+

"source": [

|

| 135 |

+

"generate(prompt='Andrej Karpathy, the', num_samples=10, steps=20)"

|

| 136 |

+

]

|

| 137 |

+

}

|

| 138 |

+

],

|

| 139 |

+

"metadata": {

|

| 140 |

+

"kernelspec": {

|

| 141 |

+

"display_name": "Python 3.10.4 64-bit",

|

| 142 |

+

"language": "python",

|

| 143 |

+

"name": "python3"

|

| 144 |

+

},

|

| 145 |

+

"language_info": {

|

| 146 |

+

"codemirror_mode": {

|

| 147 |

+

"name": "ipython",

|

| 148 |

+

"version": 3

|

| 149 |

+

},

|

| 150 |

+

"file_extension": ".py",

|

| 151 |

+

"mimetype": "text/x-python",

|

| 152 |

+

"name": "python",

|

| 153 |

+

"nbconvert_exporter": "python",

|

| 154 |

+

"pygments_lexer": "ipython3",

|

| 155 |

+

"version": "3.10.4"

|

| 156 |

+

},

|

| 157 |

+

"orig_nbformat": 4,

|

| 158 |

+

"vscode": {

|

| 159 |

+

"interpreter": {

|

| 160 |

+

"hash": "3ad933181bd8a04b432d3370b9dc3b0662ad032c4dfaa4e4f1596c548f763858"

|

| 161 |

+

}

|

| 162 |

+

}

|

| 163 |

+

},

|

| 164 |

+

"nbformat": 4,

|

| 165 |

+

"nbformat_minor": 2

|

| 166 |

+

}

|

mingpt.jpg

ADDED

|

mingpt/__init__.py

ADDED

|

File without changes

|

mingpt/bpe.py

ADDED

|

@@ -0,0 +1,319 @@

|

| 1 |

+

"""

|

| 2 |

+

bpe is short for Byte Pair Encoder. It translates arbitrary utf-8 strings into

|

| 3 |

+

sequences of integers, where each integer represents small chunks of commonly

|

| 4 |

+

occurring characters. This implementation is based on openai's gpt2 encoder.py:

|

| 5 |

+

https://github.com/openai/gpt-2/blob/master/src/encoder.py

|

| 6 |

+

but was mildly modified because the original implementation is a bit confusing.

|

| 7 |

+

I also tried to add as many comments as possible, reflecting my own understanding of what's

|

| 8 |

+

going on.

|

| 9 |

+

"""

|

| 10 |

+

|

| 11 |

+

import os

|

| 12 |

+

import json

|

| 13 |

+

import regex as re

|

| 14 |

+

import requests

|

| 15 |

+

|

| 16 |

+

import torch

|

| 17 |

+

|

| 18 |

+

# -----------------------------------------------------------------------------

|

| 19 |

+

|

| 20 |

+

def bytes_to_unicode():

|

| 21 |

+

"""

|

| 22 |

+

Every possible byte (really an integer 0..255) gets mapped by OpenAI to a unicode

|

| 23 |

+

character that represents it visually. Some bytes have their appearance preserved

|

| 24 |

+

because they don't cause any trouble. These are defined in list bs. For example:

|

| 25 |

+

chr(33) returns "!", so in the returned dictionary we simply have d[33] -> "!".

|