Commit aa69275

Parent(s): e724d71

Upload 55 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +3 -0

- .gitignore +133 -0

- LICENSE +201 -0

- README.md +91 -3

- assets/demo.gif +3 -0

- assets/demo_short.gif +3 -0

- assets/figure.jpg +3 -0

- attentions.py +300 -0

- commons.py +96 -0

- hf_models/download.md +33 -0

- hubert_model.py +221 -0

- image/01cc9083.png +0 -0

- image/1d988a81.png +0 -0

- image/307ade76.png +0 -0

- image/5ebacb6a.png +0 -0

- image/cdb4d5e5.png +0 -0

- mel_processing.py +101 -0

- models.py +404 -0

- modules/__init__.py +0 -0

- modules/controlnet_canny.py +60 -0

- modules/controlnet_depth.py +59 -0

- modules/controlnet_hed.py +58 -0

- modules/controlnet_line.py +58 -0

- modules/controlnet_normal.py +71 -0

- modules/controlnet_pose.py +58 -0

- modules/controlnet_scibble.py +56 -0

- modules/controlnet_seg.py +104 -0

- modules/image_captioning.py +21 -0

- modules/image_editing.py +40 -0

- modules/instruct_px2pix.py +28 -0

- modules/mask_former.py +30 -0

- modules/text2img.py +26 -0

- modules/utils.py +75 -0

- modules/visual_question_answering.py +24 -0

- requirement.txt +48 -0

- text/__init__.py +32 -0

- text/cantonese.py +59 -0

- text/cleaners.py +145 -0

- text/english.py +188 -0

- text/japanese.py +153 -0

- text/korean.py +210 -0

- text/mandarin.py +330 -0

- text/ngu_dialect.py +30 -0

- text/sanskrit.py +62 -0

- text/shanghainese.py +64 -0

- text/thai.py +44 -0

- transforms.py +193 -0

- utils_vits.py +75 -0

- visual_chatgpt.py +908 -0

- visual_chatgpt_zh.py +171 -0

.gitattributes

CHANGED

@@ -32,3 +32,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/demo_short.gif filter=lfs diff=lfs merge=lfs -text
+assets/demo.gif filter=lfs diff=lfs merge=lfs -text
+assets/figure.jpg filter=lfs diff=lfs merge=lfs -text

.gitignore

ADDED

@@ -0,0 +1,133 @@

+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+image/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+pip-wheel-metadata/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+.python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+annotator
+cldm
+ldm

LICENSE

ADDED

@@ -0,0 +1,201 @@

+                                 Apache License
+                           Version 2.0, January 2004
+                        http://www.apache.org/licenses/
+
+   TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+
+   1. Definitions.
+
+      "License" shall mean the terms and conditions for use, reproduction,
+      and distribution as defined by Sections 1 through 9 of this document.
+
+      "Licensor" shall mean the copyright owner or entity authorized by
+      the copyright owner that is granting the License.
+
+      "Legal Entity" shall mean the union of the acting entity and all
+      other entities that control, are controlled by, or are under common
+      control with that entity. For the purposes of this definition,
+      "control" means (i) the power, direct or indirect, to cause the
+      direction or management of such entity, whether by contract or
+      otherwise, or (ii) ownership of fifty percent (50%) or more of the
+      outstanding shares, or (iii) beneficial ownership of such entity.
+
+      "You" (or "Your") shall mean an individual or Legal Entity
+      exercising permissions granted by this License.
+
+      "Source" form shall mean the preferred form for making modifications,
+      including but not limited to software source code, documentation
+      source, and configuration files.
+
+      "Object" form shall mean any form resulting from mechanical
+      transformation or translation of a Source form, including but
+      not limited to compiled object code, generated documentation,
+      and conversions to other media types.
+
+      "Work" shall mean the work of authorship, whether in Source or
+      Object form, made available under the License, as indicated by a
+      copyright notice that is included in or attached to the work
+      (an example is provided in the Appendix below).
+
+      "Derivative Works" shall mean any work, whether in Source or Object
+      form, that is based on (or derived from) the Work and for which the
+      editorial revisions, annotations, elaborations, or other modifications
+      represent, as a whole, an original work of authorship. For the purposes
+      of this License, Derivative Works shall not include works that remain
+      separable from, or merely link (or bind by name) to the interfaces of,
+      the Work and Derivative Works thereof.
+
+      "Contribution" shall mean any work of authorship, including
+      the original version of the Work and any modifications or additions
+      to that Work or Derivative Works thereof, that is intentionally
+      submitted to Licensor for inclusion in the Work by the copyright owner
+      or by an individual or Legal Entity authorized to submit on behalf of
+      the copyright owner. For the purposes of this definition, "submitted"
+      means any form of electronic, verbal, or written communication sent
+      to the Licensor or its representatives, including but not limited to
+      communication on electronic mailing lists, source code control systems,
+      and issue tracking systems that are managed by, or on behalf of, the
+      Licensor for the purpose of discussing and improving the Work, but
+      excluding communication that is conspicuously marked or otherwise
+      designated in writing by the copyright owner as "Not a Contribution."
+
+      "Contributor" shall mean Licensor and any individual or Legal Entity
+      on behalf of whom a Contribution has been received by Licensor and
+      subsequently incorporated within the Work.
+
+   2. Grant of Copyright License. Subject to the terms and conditions of
+      this License, each Contributor hereby grants to You a perpetual,
+      worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+      copyright license to reproduce, prepare Derivative Works of,
+      publicly display, publicly perform, sublicense, and distribute the
+      Work and such Derivative Works in Source or Object form.
+
+   3. Grant of Patent License. Subject to the terms and conditions of
+      this License, each Contributor hereby grants to You a perpetual,
+      worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+      (except as stated in this section) patent license to make, have made,
+      use, offer to sell, sell, import, and otherwise transfer the Work,
+      where such license applies only to those patent claims licensable
+      by such Contributor that are necessarily infringed by their
+      Contribution(s) alone or by combination of their Contribution(s)
+      with the Work to which such Contribution(s) was submitted. If You
+      institute patent litigation against any entity (including a
+      cross-claim or counterclaim in a lawsuit) alleging that the Work
+      or a Contribution incorporated within the Work constitutes direct
+      or contributory patent infringement, then any patent licenses
+      granted to You under this License for that Work shall terminate
+      as of the date such litigation is filed.
+
+   4. Redistribution. You may reproduce and distribute copies of the
+      Work or Derivative Works thereof in any medium, with or without
+      modifications, and in Source or Object form, provided that You
+      meet the following conditions:
+
+      (a) You must give any other recipients of the Work or
+          Derivative Works a copy of this License; and
+
+      (b) You must cause any modified files to carry prominent notices
+          stating that You changed the files; and
+
+      (c) You must retain, in the Source form of any Derivative Works
+          that You distribute, all copyright, patent, trademark, and
+          attribution notices from the Source form of the Work,
+          excluding those notices that do not pertain to any part of
+          the Derivative Works; and
+
+      (d) If the Work includes a "NOTICE" text file as part of its
+          distribution, then any Derivative Works that You distribute must
+          include a readable copy of the attribution notices contained
+          within such NOTICE file, excluding those notices that do not
+          pertain to any part of the Derivative Works, in at least one
+          of the following places: within a NOTICE text file distributed
+          as part of the Derivative Works; within the Source form or
+          documentation, if provided along with the Derivative Works; or,
+          within a display generated by the Derivative Works, if and
+          wherever such third-party notices normally appear. The contents
+          of the NOTICE file are for informational purposes only and
+          do not modify the License. You may add Your own attribution
+          notices within Derivative Works that You distribute, alongside
+          or as an addendum to the NOTICE text from the Work, provided
+          that such additional attribution notices cannot be construed
+          as modifying the License.
+
+      You may add Your own copyright statement to Your modifications and
+      may provide additional or different license terms and conditions
+      for use, reproduction, or distribution of Your modifications, or
+      for any such Derivative Works as a whole, provided Your use,
+      reproduction, and distribution of the Work otherwise complies with
+      the conditions stated in this License.
+
+   5. Submission of Contributions. Unless You explicitly state otherwise,
+      any Contribution intentionally submitted for inclusion in the Work
+      by You to the Licensor shall be under the terms and conditions of
+      this License, without any additional terms or conditions.
+      Notwithstanding the above, nothing herein shall supersede or modify
+      the terms of any separate license agreement you may have executed
+      with Licensor regarding such Contributions.
+
+   6. Trademarks. This License does not grant permission to use the trade
+      names, trademarks, service marks, or product names of the Licensor,
+      except as required for reasonable and customary use in describing the
+      origin of the Work and reproducing the content of the NOTICE file.
+
+   7. Disclaimer of Warranty. Unless required by applicable law or
+      agreed to in writing, Licensor provides the Work (and each
+      Contributor provides its Contributions) on an "AS IS" BASIS,
+      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
+      implied, including, without limitation, any warranties or conditions
+      of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
+      PARTICULAR PURPOSE. You are solely responsible for determining the
+      appropriateness of using or redistributing the Work and assume any
+      risks associated with Your exercise of permissions under this License.
+
+   8. Limitation of Liability. In no event and under no legal theory,
+      whether in tort (including negligence), contract, or otherwise,
+      unless required by applicable law (such as deliberate and grossly
+      negligent acts) or agreed to in writing, shall any Contributor be
+      liable to You for damages, including any direct, indirect, special,
+      incidental, or consequential damages of any character arising as a
+      result of this License or out of the use or inability to use the
+      Work (including but not limited to damages for loss of goodwill,
+      work stoppage, computer failure or malfunction, or any and all
+      other commercial damages or losses), even if such Contributor
+      has been advised of the possibility of such damages.
+
+   9. Accepting Warranty or Additional Liability. While redistributing
+      the Work or Derivative Works thereof, You may choose to offer,
+      and charge a fee for, acceptance of support, warranty, indemnity,
+      or other liability obligations and/or rights consistent with this
+      License. However, in accepting such obligations, You may act only
+      on Your own behalf and on Your sole responsibility, not on behalf
+      of any other Contributor, and only if You agree to indemnify,
+      defend, and hold each Contributor harmless for any liability
+      incurred by, or claims asserted against, such Contributor by reason
+      of your accepting any such warranty or additional liability.
+
+   END OF TERMS AND CONDITIONS
+
+   APPENDIX: How to apply the Apache License to your work.
+
+      To apply the Apache License to your work, attach the following
+      boilerplate notice, with the fields enclosed by brackets "[]"
+      replaced with your own identifying information. (Don't include
+      the brackets!)  The text should be enclosed in the appropriate
+      comment syntax for the file format. We also recommend that a
+      file or class name and description of purpose be included on the
+      same "printed page" as the copyright notice for easier
+      identification within third-party archives.
+
+   Copyright [yyyy] [name of copyright owner]
+
+   Licensed under the Apache License, Version 2.0 (the "License");
+   you may not use this file except in compliance with the License.
+   You may obtain a copy of the License at
+
+       http://www.apache.org/licenses/LICENSE-2.0
+
+   Unless required by applicable law or agreed to in writing, software
+   distributed under the License is distributed on an "AS IS" BASIS,
+   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+   See the License for the specific language governing permissions and
+   limitations under the License.

README.md

CHANGED

@@ -1,3 +1,91 @@
-
-
-

| 1 |

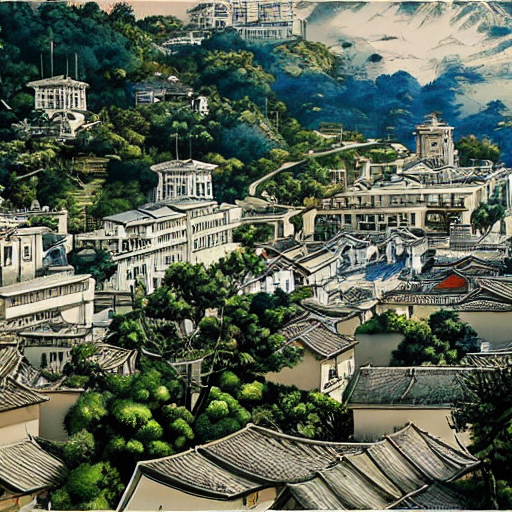

+

# visual-chatgpt-zh-vits

|

| 2 |

+

visual-chatgpt支持中文的windows版本

|

| 3 |

+

|

| 4 |

+

融合vits推断模块

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

官方论文: [<font size=5>Visual ChatGPT: Talking, Drawing and Editing with Visual Foundation Models</font>](https://arxiv.org/abs/2303.04671)

|

| 8 |

+

|

| 9 |

+

官方仓库:[visual-chatgpt](https://github.com/microsoft/visual-chatgpt)

|

| 10 |

+

|

| 11 |

+

fork from:[visual-chatgpt-zh](https://github.com/wxj630/visual-chatgpt-zh)

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

## Demo

|

| 15 |

+

<img src="./assets/demo_short.gif" width="750">

|

| 16 |

+

|

| 17 |

+

## System Architecture

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

<p align="center"><img src="./assets/figure.jpg" alt="Logo"></p>

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

## Quick Start

|

| 24 |

+

|

| 25 |

+

```

|

| 26 |

+

# 1、下载代码

|

| 27 |

+

git clone https://github.com/FrankZxShen/visual-chatgpt-zh-vits.git

|

| 28 |

+

|

| 29 |

+

# 2、进入项目目录

|

| 30 |

+

cd visual-chatgpt-zh-vits

|

| 31 |

+

|

| 32 |

+

# 3、创建python环境并激活环境

|

| 33 |

+

conda create -n visgpt python=3.8

|

| 34 |

+

activate visgpt

|

| 35 |

+

|

| 36 |

+

# 4、安装环境依赖

|

| 37 |

+

pip install -r requirement.txt

|

| 38 |

+

|

| 39 |

+

# 5、确认api key

|

| 40 |

+

export OPENAI_API_KEY={Your_Private_Openai_Key}

|

| 41 |

+

# windows系统用set命令而不是export

|

| 42 |

+

set OPENAI_API_KEY={Your_Private_Openai_Key}

|

| 43 |

+

|

| 44 |

+

# 6、下载hf模型到指定目录

|

| 45 |

+

# 具体模型文件地址于hf_models

|

| 46 |

+

# 若需要vits推断功能将G.pth config.json放于vits_models下(目前仅支持日语?)

|

| 47 |

+

# Windows:下载pyopenjtalk Windows于text下

|

| 48 |

+

|

| 49 |

+

# 7、启动系统,这个例子我们加载了ImageCaptioning和Text2Image两个模型,

|

| 50 |

+

python visual_chatgpt_zh_vits.py

|

| 51 |

+

# 想要用哪个功能就可增加一些模型加载

|

| 52 |

+

python visual_chatgpt_zh_vits.py

|

| 53 |

+

--load ImageCaptioning_cuda:0,Text2Image_cuda:0 \

|

| 54 |

+

--pretrained_model_dir {your_hf_models_path} \

|

| 55 |

+

|

| 56 |

+

# 8、可以直接在visual_chatgpt_zh_vits.py 38行修改key 若需要vits 39行设定True

|

| 57 |

+

```

|

| 58 |

+

|

| 59 |

+

原作者:根据官方建议,不同显卡可以指定不同“--load”参数,显存不够的就可以时间换空间,把不重要的模型加载到cpu上,虽然推理慢但是好歹能跑不是?(手动狗头):

|

| 60 |

+

```

|

| 61 |

+

# Advice for CPU Users

|

| 62 |

+

python visual_chatgpt.py --load ImageCaptioning_cpu,Text2Image_cpu

|

| 63 |

+

|

| 64 |

+

# Advice for 1 Tesla T4 15GB (Google Colab)

|

| 65 |

+

python visual_chatgpt.py --load "ImageCaptioning_cuda:0,Text2Image_cuda:0"

|

| 66 |

+

|

| 67 |

+

# Advice for 4 Tesla V100 32GB

|

| 68 |

+

python visual_chatgpt.py --load "ImageCaptioning_cuda:0,ImageEditing_cuda:0,

|

| 69 |

+

Text2Image_cuda:1,Image2Canny_cpu,CannyText2Image_cuda:1,

|

| 70 |

+

Image2Depth_cpu,DepthText2Image_cuda:1,VisualQuestionAnswering_cuda:2,

|

| 71 |

+

InstructPix2Pix_cuda:2,Image2Scribble_cpu,ScribbleText2Image_cuda:2,

|

| 72 |

+

Image2Seg_cpu,SegText2Image_cuda:2,Image2Pose_cpu,PoseText2Image_cuda:2,

|

| 73 |

+

Image2Hed_cpu,HedText2Image_cuda:3,Image2Normal_cpu,

|

| 74 |

+

NormalText2Image_cuda:3,Image2Line_cpu,LineText2Image_cuda:3"

|

| 75 |

+

```

|

| 76 |

+

|

| 77 |

+

实测环境 Windows RTX3070 8G:若只需要ImageCaptioning和Text2Image两个模型的功能,对显存要求极低,理论上能跑AI绘图均可以(>4G,但速度很慢)?

|

| 78 |

+

|

| 79 |

+

## limitations

|

| 80 |

+

|

| 81 |

+

img无法显示在gradio上?

|

| 82 |

+

|

| 83 |

+

## Acknowledgement

|

| 84 |

+

|

| 85 |

+

We appreciate the open source of the following projects:

|

| 86 |

+

|

| 87 |

+

- HuggingFace [[Project]](https://github.com/huggingface/transformers)

|

| 88 |

+

|

| 89 |

+

- ControlNet [[Paper]](https://arxiv.org/abs/2302.05543) [[Project]](https://github.com/lllyasviel/ControlNet)

|

| 90 |

+

|

| 91 |

+

- Stable Diffusion [[Paper]](https://arxiv.org/abs/2112.10752) [[Project]](https://github.com/CompVis/stable-diffusion)

|
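The README's `--load` argument pairs each model name with a device, for example `ImageCaptioning_cuda:0` or `Image2Canny_cpu`. The following is a minimal sketch of how such a string could be split into a model-to-device mapping. The function name `parse_load_spec` is hypothetical and is not taken from this commit; the actual parsing in `visual_chatgpt.py` may differ.

```python
from typing import Dict


def parse_load_spec(spec: str) -> Dict[str, str]:
    """Map each model name to its device from a spec like 'A_cuda:0,B_cpu'.

    Hypothetical helper for illustration; not part of the repository.
    """
    mapping: Dict[str, str] = {}
    for entry in spec.split(","):
        # strip() also removes the newlines used in the multi-line V100 example.
        entry = entry.strip()
        if not entry:
            continue
        # Split on the last underscore so the device keeps its ':N' suffix.
        name, sep, device = entry.rpartition("_")
        if not sep or not name or not device:
            raise ValueError(f"expected NAME_DEVICE, got {entry!r}")
        mapping[name] = device
    return mapping


if __name__ == "__main__":
    spec = "ImageCaptioning_cuda:0,Text2Image_cuda:0,Image2Canny_cpu"
    for model, device in parse_load_spec(spec).items():
        print(f"{model} -> {device}")
```

Splitting on the last underscore rather than the first is a deliberate choice in this sketch: a model name that itself contains an underscore still parses, while the device part never does.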

assets/demo.gif

ADDED

Stored with Git LFS

assets/demo_short.gif

ADDED

Stored with Git LFS

assets/figure.jpg

ADDED

Stored with Git LFS

attentions.py

ADDED

@@ -0,0 +1,300 @@

+import math
+import torch
+from torch import nn
+from torch.nn import functional as F
+
+import commons
+from vits_modules import LayerNorm
+
+
+class Encoder(nn.Module):
+    def __init__(self, hidden_channels, filter_channels, n_heads, n_layers, kernel_size=1, p_dropout=0., window_size=4, **kwargs):
+        super().__init__()
+        self.hidden_channels = hidden_channels
+        self.filter_channels = filter_channels
+        self.n_heads = n_heads
+        self.n_layers = n_layers
+        self.kernel_size = kernel_size
+        self.p_dropout = p_dropout
+        self.window_size = window_size
+
+        self.drop = nn.Dropout(p_dropout)
+        self.attn_layers = nn.ModuleList()
+        self.norm_layers_1 = nn.ModuleList()
+        self.ffn_layers = nn.ModuleList()
+        self.norm_layers_2 = nn.ModuleList()
+        for i in range(self.n_layers):
+            self.attn_layers.append(MultiHeadAttention(hidden_channels, hidden_channels, n_heads, p_dropout=p_dropout, window_size=window_size))
+            self.norm_layers_1.append(LayerNorm(hidden_channels))
+            self.ffn_layers.append(FFN(hidden_channels, hidden_channels, filter_channels, kernel_size, p_dropout=p_dropout))
+            self.norm_layers_2.append(LayerNorm(hidden_channels))
+
+    def forward(self, x, x_mask):
+        attn_mask = x_mask.unsqueeze(2) * x_mask.unsqueeze(-1)
+        x = x * x_mask
+        for i in range(self.n_layers):
+            y = self.attn_layers[i](x, x, attn_mask)
+            y = self.drop(y)
+            x = self.norm_layers_1[i](x + y)
+
+            y = self.ffn_layers[i](x, x_mask)
+            y = self.drop(y)
+            x = self.norm_layers_2[i](x + y)
+        x = x * x_mask
+        return x
+
+
+class Decoder(nn.Module):
+    def __init__(self, hidden_channels, filter_channels, n_heads, n_layers, kernel_size=1, p_dropout=0., proximal_bias=False, proximal_init=True, **kwargs):
+        super().__init__()
+        self.hidden_channels = hidden_channels
+        self.filter_channels = filter_channels
+        self.n_heads = n_heads
+        self.n_layers = n_layers
+        self.kernel_size = kernel_size
+        self.p_dropout = p_dropout
+        self.proximal_bias = proximal_bias
+        self.proximal_init = proximal_init
+
+        self.drop = nn.Dropout(p_dropout)
+        self.self_attn_layers = nn.ModuleList()
+        self.norm_layers_0 = nn.ModuleList()
+        self.encdec_attn_layers = nn.ModuleList()
+        self.norm_layers_1 = nn.ModuleList()
+        self.ffn_layers = nn.ModuleList()
+        self.norm_layers_2 = nn.ModuleList()
+        for i in range(self.n_layers):
+            self.self_attn_layers.append(MultiHeadAttention(hidden_channels, hidden_channels, n_heads, p_dropout=p_dropout, proximal_bias=proximal_bias, proximal_init=proximal_init))
+            self.norm_layers_0.append(LayerNorm(hidden_channels))
+            self.encdec_attn_layers.append(MultiHeadAttention(hidden_channels, hidden_channels, n_heads, p_dropout=p_dropout))
+            self.norm_layers_1.append(LayerNorm(hidden_channels))
+            self.ffn_layers.append(FFN(hidden_channels, hidden_channels, filter_channels, kernel_size, p_dropout=p_dropout, causal=True))
+            self.norm_layers_2.append(LayerNorm(hidden_channels))

|

| 73 |

+

|

| 74 |

+

def forward(self, x, x_mask, h, h_mask):

|

| 75 |

+

"""

|

| 76 |

+

x: decoder input

|

| 77 |

+

h: encoder output

|

| 78 |

+

"""

|

| 79 |

+

self_attn_mask = commons.subsequent_mask(x_mask.size(2)).to(device=x.device, dtype=x.dtype)

|

| 80 |

+

encdec_attn_mask = h_mask.unsqueeze(2) * x_mask.unsqueeze(-1)

|

| 81 |

+

x = x * x_mask

|

| 82 |

+

for i in range(self.n_layers):

|

| 83 |

+

y = self.self_attn_layers[i](x, x, self_attn_mask)

|

| 84 |

+

y = self.drop(y)

|

| 85 |

+

x = self.norm_layers_0[i](x + y)

|

| 86 |

+

|

| 87 |

+

y = self.encdec_attn_layers[i](x, h, encdec_attn_mask)

|

| 88 |

+

y = self.drop(y)

|

| 89 |

+

x = self.norm_layers_1[i](x + y)

|

| 90 |

+

|

| 91 |

+

y = self.ffn_layers[i](x, x_mask)

|

| 92 |

+

y = self.drop(y)

|

| 93 |

+

x = self.norm_layers_2[i](x + y)

|

| 94 |

+

x = x * x_mask

|

| 95 |

+

return x

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

class MultiHeadAttention(nn.Module):

|

| 99 |

+

def __init__(self, channels, out_channels, n_heads, p_dropout=0., window_size=None, heads_share=True, block_length=None, proximal_bias=False, proximal_init=False):

|

| 100 |

+

super().__init__()

|

| 101 |

+

assert channels % n_heads == 0

|

| 102 |

+

|

| 103 |

+

self.channels = channels

|

| 104 |

+

self.out_channels = out_channels

|

| 105 |

+

self.n_heads = n_heads

|

| 106 |

+

self.p_dropout = p_dropout

|

| 107 |

+

self.window_size = window_size

|

| 108 |

+

self.heads_share = heads_share

|

| 109 |

+

self.block_length = block_length

|

| 110 |

+

self.proximal_bias = proximal_bias

|

| 111 |

+

self.proximal_init = proximal_init

|

| 112 |

+

self.attn = None

|

| 113 |

+

|

| 114 |

+

self.k_channels = channels // n_heads

|

| 115 |

+

self.conv_q = nn.Conv1d(channels, channels, 1)

|

| 116 |

+

self.conv_k = nn.Conv1d(channels, channels, 1)

|

| 117 |

+

self.conv_v = nn.Conv1d(channels, channels, 1)

|

| 118 |

+

self.conv_o = nn.Conv1d(channels, out_channels, 1)

|

| 119 |

+

self.drop = nn.Dropout(p_dropout)

|

| 120 |

+

|

| 121 |

+

if window_size is not None:

|

| 122 |

+

n_heads_rel = 1 if heads_share else n_heads

|

| 123 |

+

rel_stddev = self.k_channels**-0.5

|

| 124 |

+

self.emb_rel_k = nn.Parameter(torch.randn(n_heads_rel, window_size * 2 + 1, self.k_channels) * rel_stddev)

|

| 125 |

+

self.emb_rel_v = nn.Parameter(torch.randn(n_heads_rel, window_size * 2 + 1, self.k_channels) * rel_stddev)

|

| 126 |

+

|

| 127 |

+

nn.init.xavier_uniform_(self.conv_q.weight)

|

| 128 |

+

nn.init.xavier_uniform_(self.conv_k.weight)

|

| 129 |

+

nn.init.xavier_uniform_(self.conv_v.weight)

|

| 130 |

+

if proximal_init:

|

| 131 |

+

with torch.no_grad():

|

| 132 |

+

self.conv_k.weight.copy_(self.conv_q.weight)

|

| 133 |

+

self.conv_k.bias.copy_(self.conv_q.bias)

|

| 134 |

+

|

| 135 |

+

def forward(self, x, c, attn_mask=None):

|

| 136 |

+

q = self.conv_q(x)

|

| 137 |

+

k = self.conv_k(c)

|

| 138 |

+

v = self.conv_v(c)

|

| 139 |

+

|

| 140 |

+

x, self.attn = self.attention(q, k, v, mask=attn_mask)

|

| 141 |

+

|

| 142 |

+

x = self.conv_o(x)

|

| 143 |

+

return x

|

| 144 |

+

|

| 145 |

+

def attention(self, query, key, value, mask=None):

|

| 146 |

+

# reshape [b, d, t] -> [b, n_h, t, d_k]

|

| 147 |

+

b, d, t_s, t_t = (*key.size(), query.size(2))

|

| 148 |

+

query = query.view(b, self.n_heads, self.k_channels, t_t).transpose(2, 3)

|

| 149 |

+

key = key.view(b, self.n_heads, self.k_channels, t_s).transpose(2, 3)

|

| 150 |

+

value = value.view(b, self.n_heads, self.k_channels, t_s).transpose(2, 3)

|

| 151 |

+

|

| 152 |

+

scores = torch.matmul(query / math.sqrt(self.k_channels), key.transpose(-2, -1))

|

| 153 |

+

if self.window_size is not None:

|

| 154 |

+

assert t_s == t_t, "Relative attention is only available for self-attention."

|

| 155 |

+

key_relative_embeddings = self._get_relative_embeddings(self.emb_rel_k, t_s)

|

| 156 |

+

rel_logits = self._matmul_with_relative_keys(query / math.sqrt(self.k_channels), key_relative_embeddings)

|

| 157 |

+

scores_local = self._relative_position_to_absolute_position(rel_logits)

|

| 158 |

+

scores = scores + scores_local

|

| 159 |

+

if self.proximal_bias:

|

| 160 |

+

assert t_s == t_t, "Proximal bias is only available for self-attention."

|

| 161 |

+

scores = scores + self._attention_bias_proximal(t_s).to(device=scores.device, dtype=scores.dtype)

|

| 162 |

+

if mask is not None:

|

| 163 |

+

scores = scores.masked_fill(mask == 0, -1e4)

|

| 164 |

+

if self.block_length is not None:

|

| 165 |

+

assert t_s == t_t, "Local attention is only available for self-attention."

|

| 166 |

+

block_mask = torch.ones_like(scores).triu(-self.block_length).tril(self.block_length)

|

| 167 |

+

scores = scores.masked_fill(block_mask == 0, -1e4)

|

| 168 |

+

p_attn = F.softmax(scores, dim=-1) # [b, n_h, t_t, t_s]

|

| 169 |

+

p_attn = self.drop(p_attn)

|

| 170 |

+

output = torch.matmul(p_attn, value)

|

| 171 |

+

if self.window_size is not None:

|

| 172 |

+

relative_weights = self._absolute_position_to_relative_position(p_attn)

|

| 173 |

+

value_relative_embeddings = self._get_relative_embeddings(self.emb_rel_v, t_s)

|

| 174 |

+

output = output + self._matmul_with_relative_values(relative_weights, value_relative_embeddings)

|

| 175 |

+

output = output.transpose(2, 3).contiguous().view(b, d, t_t) # [b, n_h, t_t, d_k] -> [b, d, t_t]

|

| 176 |

+

return output, p_attn

|

| 177 |

+

|

| 178 |

+

def _matmul_with_relative_values(self, x, y):

|

| 179 |

+

"""

|

| 180 |

+

x: [b, h, l, m]

|

| 181 |

+

y: [h or 1, m, d]

|

| 182 |

+

ret: [b, h, l, d]

|

| 183 |

+

"""

|

| 184 |

+

ret = torch.matmul(x, y.unsqueeze(0))

|

| 185 |

+

return ret

|

| 186 |

+

|

| 187 |

+

def _matmul_with_relative_keys(self, x, y):

|

| 188 |

+

"""

|

| 189 |

+

x: [b, h, l, d]

|

| 190 |

+

y: [h or 1, m, d]

|

| 191 |

+

ret: [b, h, l, m]

|

| 192 |

+

"""

|

| 193 |

+

ret = torch.matmul(x, y.unsqueeze(0).transpose(-2, -1))

|

| 194 |

+

return ret

|

| 195 |

+

|

| 196 |

+

def _get_relative_embeddings(self, relative_embeddings, length):

|

| 197 |

+

max_relative_position = 2 * self.window_size + 1

|

| 198 |

+

# Pad first before slice to avoid using cond ops.

|

| 199 |

+

pad_length = max(length - (self.window_size + 1), 0)

|

| 200 |

+

slice_start_position = max((self.window_size + 1) - length, 0)

|

| 201 |

+

slice_end_position = slice_start_position + 2 * length - 1

|

| 202 |

+

if pad_length > 0:

|

| 203 |

+

padded_relative_embeddings = F.pad(

|

| 204 |

+

relative_embeddings,

|

| 205 |

+

commons.convert_pad_shape([[0, 0], [pad_length, pad_length], [0, 0]]))

|

| 206 |

+

else:

|

| 207 |

+

padded_relative_embeddings = relative_embeddings

|

| 208 |

+

used_relative_embeddings = padded_relative_embeddings[:,slice_start_position:slice_end_position]

|

| 209 |

+

return used_relative_embeddings

|

| 210 |

+

|

| 211 |

+

def _relative_position_to_absolute_position(self, x):

|

| 212 |

+

"""

|

| 213 |

+

x: [b, h, l, 2*l-1]

|

| 214 |

+

ret: [b, h, l, l]

|

| 215 |

+

"""

|

| 216 |

+

batch, heads, length, _ = x.size()

|

| 217 |

+

# Concat columns of pad to shift from relative to absolute indexing.

|

| 218 |

+

x = F.pad(x, commons.convert_pad_shape([[0,0],[0,0],[0,0],[0,1]]))

|

| 219 |

+

|

| 220 |

+

# Concat extra elements so that they add up to shape (len+1, 2*len-1).

|

| 221 |

+

x_flat = x.view([batch, heads, length * 2 * length])

|

| 222 |

+

x_flat = F.pad(x_flat, commons.convert_pad_shape([[0,0],[0,0],[0,length-1]]))

|

| 223 |

+

|

| 224 |

+

# Reshape and slice out the padded elements.

|

| 225 |

+

x_final = x_flat.view([batch, heads, length+1, 2*length-1])[:, :, :length, length-1:]

|

| 226 |

+

return x_final

|

| 227 |

+

|

| 228 |

+

def _absolute_position_to_relative_position(self, x):

|

| 229 |

+

"""

|

| 230 |

+

x: [b, h, l, l]

|

| 231 |

+

ret: [b, h, l, 2*l-1]

|

| 232 |

+

"""

|

| 233 |

+

batch, heads, length, _ = x.size()

|

| 234 |

+

# pad along column

|

| 235 |

+

x = F.pad(x, commons.convert_pad_shape([[0, 0], [0, 0], [0, 0], [0, length-1]]))

|

| 236 |

+

x_flat = x.view([batch, heads, length**2 + length * (length - 1)])

|

| 237 |

+

# add 0's in the beginning that will skew the elements after reshape

|

| 238 |

+

x_flat = F.pad(x_flat, commons.convert_pad_shape([[0, 0], [0, 0], [length, 0]]))

|

| 239 |

+

x_final = x_flat.view([batch, heads, length, 2*length])[:,:,:,1:]

|

| 240 |

+

return x_final

|

| 241 |

+

|

| 242 |

+

def _attention_bias_proximal(self, length):

|

| 243 |

+

"""Bias for self-attention to encourage attention to close positions.

|

| 244 |

+

Args:

|

| 245 |

+

length: an integer scalar.

|

| 246 |

+

Returns:

|

| 247 |

+

a Tensor with shape [1, 1, length, length]

|

| 248 |

+

"""

|

| 249 |

+

r = torch.arange(length, dtype=torch.float32)

|

| 250 |

+

diff = torch.unsqueeze(r, 0) - torch.unsqueeze(r, 1)

|

| 251 |

+

return torch.unsqueeze(torch.unsqueeze(-torch.log1p(torch.abs(diff)), 0), 0)
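The pad-and-reshape steps in `_relative_position_to_absolute_position` are easier to follow on a toy matrix. The sketch below is not part of the commit: it replays the same index arithmetic on plain Python lists for a single `[l, 2*l-1]` matrix, with no batch or head axes and no torch, and compares the result with a direct lookup. The helper names and the toy values are assumptions made for illustration.

```python
def relative_to_absolute(rel):
    """rel: l rows of 2*l-1 values -> l rows of l values."""
    length = len(rel)
    # Step 1: append one pad value to every row (row width becomes 2*l).
    flat = []
    for row in rel:
        flat.extend(list(row) + [0])
    # Step 2: append l-1 more pads so the total is (l+1) * (2*l-1).
    flat.extend([0] * (length - 1))
    # Step 3: re-read the flat buffer with rows of width 2*l-1.
    width = 2 * length - 1
    rows = [flat[r * width:(r + 1) * width] for r in range(length + 1)]
    # Step 4: keep the first l rows and the last l columns.
    return [row[length - 1:] for row in rows[:length]]


def relative_to_absolute_naive(rel):
    """Reference lookup: out[i][j] = rel[i][(j - i) + (l - 1)]."""
    length = len(rel)
    return [[rel[i][j - i + length - 1] for j in range(length)]
            for i in range(length)]


# Toy input: entry (i, r) is encoded as 10*i + r so positions stay readable.
rel = [[10 * i + r for r in range(5)] for i in range(3)]
abs_scores = relative_to_absolute(rel)
print(abs_scores)  # [[2, 3, 4], [11, 12, 13], [20, 21, 22]]
```

Each output row `i` is the input row shifted by `i` positions, which is why the reshape can stand in for an explicit gather.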

|

| 252 |

+

|

| 253 |

+

|

| 254 |

+

class FFN(nn.Module):

|

| 255 |

+

def __init__(self, in_channels, out_channels, filter_channels, kernel_size, p_dropout=0., activation=None, causal=False):

|

| 256 |

+

super().__init__()

|

| 257 |

+

self.in_channels = in_channels

|

| 258 |

+

self.out_channels = out_channels

|

| 259 |

+

self.filter_channels = filter_channels

|

| 260 |

+

self.kernel_size = kernel_size

|

| 261 |

+

self.p_dropout = p_dropout

|

| 262 |

+

self.activation = activation

|

| 263 |

+

self.causal = causal

|

| 264 |

+

|

| 265 |

+

if causal:

|

| 266 |

+

self.padding = self._causal_padding

|

| 267 |

+

else:

|

| 268 |

+

self.padding = self._same_padding

|

| 269 |

+

|

| 270 |

+

self.conv_1 = nn.Conv1d(in_channels, filter_channels, kernel_size)

|

| 271 |

+

self.conv_2 = nn.Conv1d(filter_channels, out_channels, kernel_size)

|

| 272 |

+

self.drop = nn.Dropout(p_dropout)

|

| 273 |

+

|

| 274 |

+

def forward(self, x, x_mask):

|

| 275 |

+

x = self.conv_1(self.padding(x * x_mask))

|

| 276 |

+

if self.activation == "gelu":

|

| 277 |

+

x = x * torch.sigmoid(1.702 * x)

|

| 278 |

+

else:

|

| 279 |

+

x = torch.relu(x)

|

| 280 |

+

x = self.drop(x)

|

| 281 |

+

x = self.conv_2(self.padding(x * x_mask))

|

| 282 |

+

return x * x_mask

|

| 283 |

+

|

| 284 |

+

def _causal_padding(self, x):

|

| 285 |

+

if self.kernel_size == 1:

|

| 286 |

+

return x

|

| 287 |

+

pad_l = self.kernel_size - 1

|

| 288 |

+

pad_r = 0

|

| 289 |

+

padding = [[0, 0], [0, 0], [pad_l, pad_r]]

|

| 290 |

+

x = F.pad(x, commons.convert_pad_shape(padding))

|

| 291 |

+

return x

|

| 292 |

+

|

| 293 |

+

def _same_padding(self, x):

|

| 294 |

+

if self.kernel_size == 1:

|

| 295 |

+

return x

|

| 296 |

+

pad_l = (self.kernel_size - 1) // 2

|

| 297 |

+

pad_r = self.kernel_size // 2

|

| 298 |

+

padding = [[0, 0], [0, 0], [pad_l, pad_r]]

|

| 299 |

+

x = F.pad(x, commons.convert_pad_shape(padding))

|

| 300 |

+

return x
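The two padding helpers in `FFN` differ only in where the `kernel_size - 1` extra frames go. As a minimal sketch, assuming the stride-1, dilation-1 convolutions that `nn.Conv1d` uses by default, the following functions (hypothetical names, not from the commit) compute the `(left, right)` amounts and check that both modes preserve the sequence length.

```python
def causal_pad(kernel_size):
    """(left, right) padding for a causal convolution: all of it on the left."""
    return (kernel_size - 1, 0)


def same_pad(kernel_size):
    """(left, right) padding that keeps the current frame centred."""
    return ((kernel_size - 1) // 2, kernel_size // 2)


def conv_output_length(length, kernel_size, pad):
    """Output length of a stride-1, dilation-1 convolution after padding."""
    left, right = pad
    return length + left + right - kernel_size + 1


for k in (1, 2, 3, 5):
    # Both schemes add k-1 frames in total, so the length is unchanged.
    assert conv_output_length(100, k, causal_pad(k)) == 100
    assert conv_output_length(100, k, same_pad(k)) == 100
```

With causal padding, output frame `t` only sees input frames up to `t`, which matches the `causal=True` setting passed by `Decoder`.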

|

commons.py

ADDED

|

@@ -0,0 +1,96 @@

| 1 |

+

import torch

|

| 2 |

+

from torch.nn import functional as F

|

| 3 |

+

import torch.jit

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

def script_method(fn, _rcb=None):

|

| 7 |

+

return fn

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

def script(obj, optimize=True, _frames_up=0, _rcb=None):

|

| 11 |

+

return obj

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

torch.jit.script_method = script_method

|

| 15 |

+

torch.jit.script = script

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

def init_weights(m, mean=0.0, std=0.01):

|

| 19 |

+

classname = m.__class__.__name__

|

| 20 |

+

if classname.find("Conv") != -1:

|

| 21 |

+

m.weight.data.normal_(mean, std)

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

def get_padding(kernel_size, dilation=1):

|

| 25 |

+

return int((kernel_size*dilation - dilation)/2)

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

def intersperse(lst, item):

|

| 29 |

+

result = [item] * (len(lst) * 2 + 1)

|

| 30 |

+

result[1::2] = lst

|

| 31 |

+

return result
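A short usage sketch for `intersperse`, repeated here so the snippet runs on its own. The token ids and the blank id `0` are made-up values; inserting a blank id between symbol ids is one plausible use and is assumed here rather than taken from the commit.

```python
def intersperse(lst, item):
    # Start with 2*n + 1 copies of item, then overwrite the odd slots.
    result = [item] * (len(lst) * 2 + 1)
    result[1::2] = lst
    return result


token_ids = [5, 9, 2]  # hypothetical symbol ids
blank_id = 0           # hypothetical blank id
with_blanks = intersperse(token_ids, blank_id)
print(with_blanks)  # [0, 5, 0, 9, 0, 2, 0]
```

The output always starts and ends with `item`, so a sequence of `n` elements grows to `2*n + 1`.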

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

def slice_segments(x, ids_str, segment_size=4):

|

| 35 |

+

ret = torch.zeros_like(x[:, :, :segment_size])

|

| 36 |

+

for i in range(x.size(0)):

|

| 37 |

+

idx_str = ids_str[i]

|

| 38 |

+

idx_end = idx_str + segment_size

|

| 39 |

+

ret[i] = x[i, :, idx_str:idx_end]

|

| 40 |

+

return ret

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+