Upload 190 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- MiDaS-master/.gitignore +110 -0

- MiDaS-master/Dockerfile +29 -0

- MiDaS-master/LICENSE +21 -0

- MiDaS-master/README.md +300 -0

- MiDaS-master/environment.yaml +16 -0

- MiDaS-master/figures/Comparison.png +3 -0

- MiDaS-master/figures/Improvement_vs_FPS.png +0 -0

- MiDaS-master/hubconf.py +435 -0

- MiDaS-master/input/.placeholder +0 -0

- MiDaS-master/midas/backbones/beit.py +196 -0

- MiDaS-master/midas/backbones/levit.py +106 -0

- MiDaS-master/midas/backbones/next_vit.py +39 -0

- MiDaS-master/midas/backbones/swin.py +13 -0

- MiDaS-master/midas/backbones/swin2.py +34 -0

- MiDaS-master/midas/backbones/swin_common.py +52 -0

- MiDaS-master/midas/backbones/utils.py +249 -0

- MiDaS-master/midas/backbones/vit.py +221 -0

- MiDaS-master/midas/base_model.py +16 -0

- MiDaS-master/midas/blocks.py +439 -0

- MiDaS-master/midas/dpt_depth.py +166 -0

- MiDaS-master/midas/midas_net.py +76 -0

- MiDaS-master/midas/midas_net_custom.py +128 -0

- MiDaS-master/midas/model_loader.py +242 -0

- MiDaS-master/midas/transforms.py +234 -0

- MiDaS-master/mobile/README.md +70 -0

- MiDaS-master/mobile/android/.gitignore +13 -0

- MiDaS-master/mobile/android/EXPLORE_THE_CODE.md +414 -0

- MiDaS-master/mobile/android/LICENSE +21 -0

- MiDaS-master/mobile/android/README.md +21 -0

- MiDaS-master/mobile/android/app/.gitignore +3 -0

- MiDaS-master/mobile/android/app/build.gradle +56 -0

- MiDaS-master/mobile/android/app/proguard-rules.pro +21 -0

- MiDaS-master/mobile/android/app/src/androidTest/assets/fox-mobilenet_v1_1.0_224_support.txt +3 -0

- MiDaS-master/mobile/android/app/src/androidTest/assets/fox-mobilenet_v1_1.0_224_task_api.txt +3 -0

- MiDaS-master/mobile/android/app/src/androidTest/java/AndroidManifest.xml +5 -0

- MiDaS-master/mobile/android/app/src/androidTest/java/org/tensorflow/lite/examples/classification/ClassifierTest.java +121 -0

- MiDaS-master/mobile/android/app/src/main/AndroidManifest.xml +28 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/CameraActivity.java +717 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/CameraConnectionFragment.java +575 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/ClassifierActivity.java +238 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/LegacyCameraConnectionFragment.java +203 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/customview/AutoFitTextureView.java +72 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/customview/OverlayView.java +48 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/customview/RecognitionScoreView.java +67 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/customview/ResultsView.java +23 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/env/BorderedText.java +115 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/env/ImageUtils.java +152 -0

- MiDaS-master/mobile/android/app/src/main/java/org/tensorflow/lite/examples/classification/env/Logger.java +186 -0

- MiDaS-master/mobile/android/app/src/main/res/drawable-hdpi/ic_launcher.png +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

MiDaS-master/figures/Comparison.png filter=lfs diff=lfs merge=lfs -text

|

MiDaS-master/.gitignore

ADDED

|

@@ -0,0 +1,110 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

*.egg-info/

|

| 24 |

+

.installed.cfg

|

| 25 |

+

*.egg

|

| 26 |

+

MANIFEST

|

| 27 |

+

|

| 28 |

+

# PyInstaller

|

| 29 |

+

# Usually these files are written by a python script from a template

|

| 30 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 31 |

+

*.manifest

|

| 32 |

+

*.spec

|

| 33 |

+

|

| 34 |

+

# Installer logs

|

| 35 |

+

pip-log.txt

|

| 36 |

+

pip-delete-this-directory.txt

|

| 37 |

+

|

| 38 |

+

# Unit test / coverage reports

|

| 39 |

+

htmlcov/

|

| 40 |

+

.tox/

|

| 41 |

+

.coverage

|

| 42 |

+

.coverage.*

|

| 43 |

+

.cache

|

| 44 |

+

nosetests.xml

|

| 45 |

+

coverage.xml

|

| 46 |

+

*.cover

|

| 47 |

+

.hypothesis/

|

| 48 |

+

.pytest_cache/

|

| 49 |

+

|

| 50 |

+

# Translations

|

| 51 |

+

*.mo

|

| 52 |

+

*.pot

|

| 53 |

+

|

| 54 |

+

# Django stuff:

|

| 55 |

+

*.log

|

| 56 |

+

local_settings.py

|

| 57 |

+

db.sqlite3

|

| 58 |

+

|

| 59 |

+

# Flask stuff:

|

| 60 |

+

instance/

|

| 61 |

+

.webassets-cache

|

| 62 |

+

|

| 63 |

+

# Scrapy stuff:

|

| 64 |

+

.scrapy

|

| 65 |

+

|

| 66 |

+

# Sphinx documentation

|

| 67 |

+

docs/_build/

|

| 68 |

+

|

| 69 |

+

# PyBuilder

|

| 70 |

+

target/

|

| 71 |

+

|

| 72 |

+

# Jupyter Notebook

|

| 73 |

+

.ipynb_checkpoints

|

| 74 |

+

|

| 75 |

+

# pyenv

|

| 76 |

+

.python-version

|

| 77 |

+

|

| 78 |

+

# celery beat schedule file

|

| 79 |

+

celerybeat-schedule

|

| 80 |

+

|

| 81 |

+

# SageMath parsed files

|

| 82 |

+

*.sage.py

|

| 83 |

+

|

| 84 |

+

# Environments

|

| 85 |

+

.env

|

| 86 |

+

.venv

|

| 87 |

+

env/

|

| 88 |

+

venv/

|

| 89 |

+

ENV/

|

| 90 |

+

env.bak/

|

| 91 |

+

venv.bak/

|

| 92 |

+

|

| 93 |

+

# Spyder project settings

|

| 94 |

+

.spyderproject

|

| 95 |

+

.spyproject

|

| 96 |

+

|

| 97 |

+

# Rope project settings

|

| 98 |

+

.ropeproject

|

| 99 |

+

|

| 100 |

+

# mkdocs documentation

|

| 101 |

+

/site

|

| 102 |

+

|

| 103 |

+

# mypy

|

| 104 |

+

.mypy_cache/

|

| 105 |

+

|

| 106 |

+

*.png

|

| 107 |

+

*.pfm

|

| 108 |

+

*.jpg

|

| 109 |

+

*.jpeg

|

| 110 |

+

*.pt

|

MiDaS-master/Dockerfile

ADDED

|

@@ -0,0 +1,29 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# enables cuda support in docker

|

| 2 |

+

FROM nvidia/cuda:10.2-cudnn7-runtime-ubuntu18.04

|

| 3 |

+

|

| 4 |

+

# install python 3.6, pip and requirements for opencv-python

|

| 5 |

+

# (see https://github.com/NVIDIA/nvidia-docker/issues/864)

|

| 6 |

+

RUN apt-get update && apt-get -y install \

|

| 7 |

+

python3 \

|

| 8 |

+

python3-pip \

|

| 9 |

+

libsm6 \

|

| 10 |

+

libxext6 \

|

| 11 |

+

libxrender-dev \

|

| 12 |

+

curl \

|

| 13 |

+

&& rm -rf /var/lib/apt/lists/*

|

| 14 |

+

|

| 15 |

+

# install python dependencies

|

| 16 |

+

RUN pip3 install --upgrade pip

|

| 17 |

+

RUN pip3 install torch~=1.8 torchvision opencv-python-headless~=3.4 timm

|

| 18 |

+

|

| 19 |

+

# copy inference code

|

| 20 |

+

WORKDIR /opt/MiDaS

|

| 21 |

+

COPY ./midas ./midas

|

| 22 |

+

COPY ./*.py ./

|

| 23 |

+

|

| 24 |

+

# download model weights so the docker image can be used offline

|

| 25 |

+

RUN cd weights && {curl -OL https://github.com/isl-org/MiDaS/releases/download/v3/dpt_hybrid_384.pt; cd -; }

|

| 26 |

+

RUN python3 run.py --model_type dpt_hybrid; exit 0

|

| 27 |

+

|

| 28 |

+

# entrypoint (dont forget to mount input and output directories)

|

| 29 |

+

CMD python3 run.py --model_type dpt_hybrid

|

MiDaS-master/LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2019 Intel ISL (Intel Intelligent Systems Lab)

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

MiDaS-master/README.md

ADDED

|

@@ -0,0 +1,300 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-shot Cross-dataset Transfer

|

| 2 |

+

|

| 3 |

+

This repository contains code to compute depth from a single image. It accompanies our [paper](https://arxiv.org/abs/1907.01341v3):

|

| 4 |

+

|

| 5 |

+

>Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-shot Cross-dataset Transfer

|

| 6 |

+

René Ranftl, Katrin Lasinger, David Hafner, Konrad Schindler, Vladlen Koltun

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

and our [preprint](https://arxiv.org/abs/2103.13413):

|

| 10 |

+

|

| 11 |

+

> Vision Transformers for Dense Prediction

|

| 12 |

+

> René Ranftl, Alexey Bochkovskiy, Vladlen Koltun

|

| 13 |

+

|

| 14 |

+

For the latest release MiDaS 3.1, a [technical report](https://arxiv.org/pdf/2307.14460.pdf) and [video](https://www.youtube.com/watch?v=UjaeNNFf9sE&t=3s) are available.

|

| 15 |

+

|

| 16 |

+

MiDaS was trained on up to 12 datasets (ReDWeb, DIML, Movies, MegaDepth, WSVD, TartanAir, HRWSI, ApolloScape, BlendedMVS, IRS, KITTI, NYU Depth V2) with

|

| 17 |

+

multi-objective optimization.

|

| 18 |

+

The original model that was trained on 5 datasets (`MIX 5` in the paper) can be found [here](https://github.com/isl-org/MiDaS/releases/tag/v2).

|

| 19 |

+

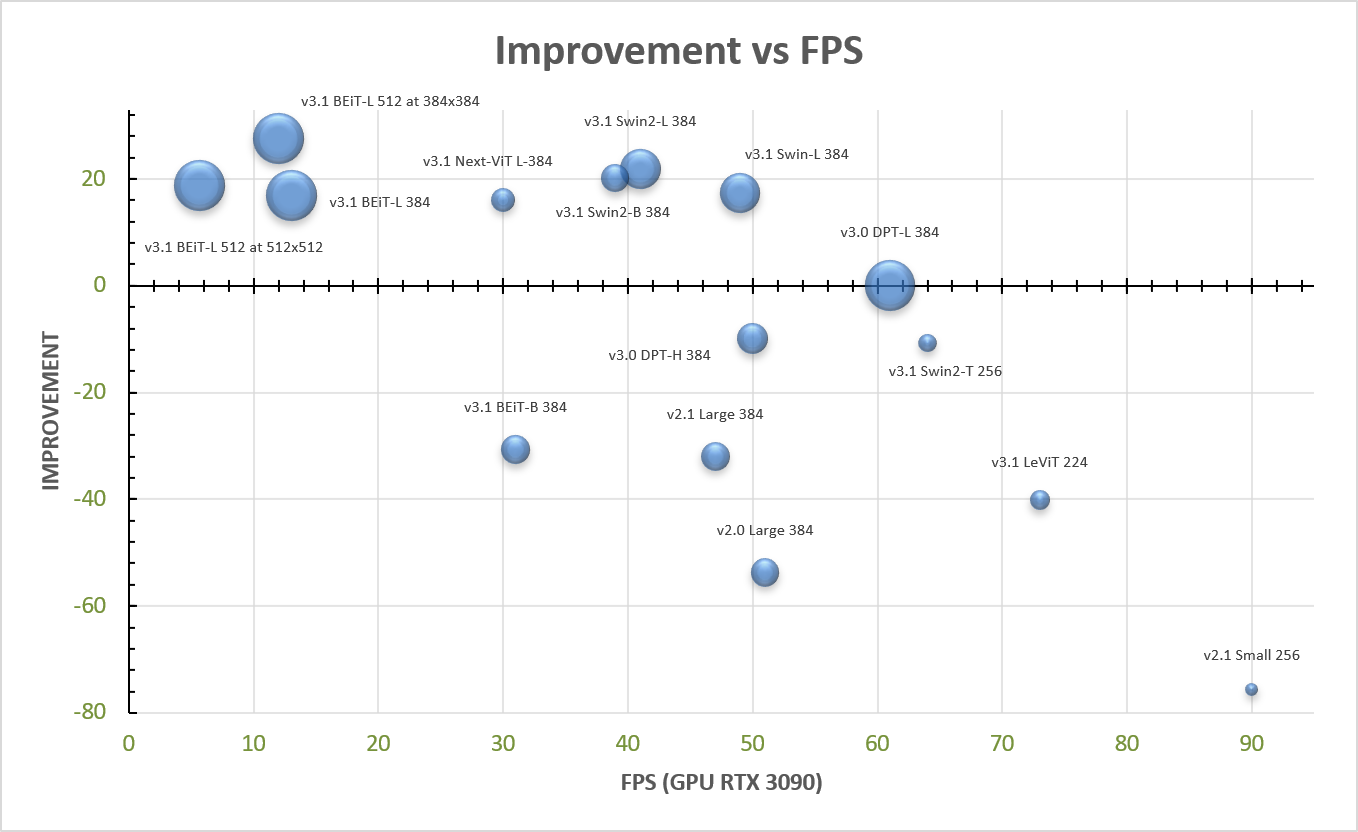

The figure below shows an overview of the different MiDaS models; the bubble size scales with number of parameters.

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

### Setup

|

| 24 |

+

|

| 25 |

+

1) Pick one or more models and download the corresponding weights to the `weights` folder:

|

| 26 |

+

|

| 27 |

+

MiDaS 3.1

|

| 28 |

+

- For highest quality: [dpt_beit_large_512](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_beit_large_512.pt)

|

| 29 |

+

- For moderately less quality, but better speed-performance trade-off: [dpt_swin2_large_384](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin2_large_384.pt)

|

| 30 |

+

- For embedded devices: [dpt_swin2_tiny_256](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin2_tiny_256.pt), [dpt_levit_224](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_levit_224.pt)

|

| 31 |

+

- For inference on Intel CPUs, OpenVINO may be used for the small legacy model: openvino_midas_v21_small [.xml](https://github.com/isl-org/MiDaS/releases/download/v3_1/openvino_midas_v21_small_256.xml), [.bin](https://github.com/isl-org/MiDaS/releases/download/v3_1/openvino_midas_v21_small_256.bin)

|

| 32 |

+

|

| 33 |

+

MiDaS 3.0: Legacy transformer models [dpt_large_384](https://github.com/isl-org/MiDaS/releases/download/v3/dpt_large_384.pt) and [dpt_hybrid_384](https://github.com/isl-org/MiDaS/releases/download/v3/dpt_hybrid_384.pt)

|

| 34 |

+

|

| 35 |

+

MiDaS 2.1: Legacy convolutional models [midas_v21_384](https://github.com/isl-org/MiDaS/releases/download/v2_1/midas_v21_384.pt) and [midas_v21_small_256](https://github.com/isl-org/MiDaS/releases/download/v2_1/midas_v21_small_256.pt)

|

| 36 |

+

|

| 37 |

+

1) Set up dependencies:

|

| 38 |

+

|

| 39 |

+

```shell

|

| 40 |

+

conda env create -f environment.yaml

|

| 41 |

+

conda activate midas-py310

|

| 42 |

+

```

|

| 43 |

+

|

| 44 |

+

#### optional

|

| 45 |

+

|

| 46 |

+

For the Next-ViT model, execute

|

| 47 |

+

|

| 48 |

+

```shell

|

| 49 |

+

git submodule add https://github.com/isl-org/Next-ViT midas/external/next_vit

|

| 50 |

+

```

|

| 51 |

+

|

| 52 |

+

For the OpenVINO model, install

|

| 53 |

+

|

| 54 |

+

```shell

|

| 55 |

+

pip install openvino

|

| 56 |

+

```

|

| 57 |

+

|

| 58 |

+

### Usage

|

| 59 |

+

|

| 60 |

+

1) Place one or more input images in the folder `input`.

|

| 61 |

+

|

| 62 |

+

2) Run the model with

|

| 63 |

+

|

| 64 |

+

```shell

|

| 65 |

+

python run.py --model_type <model_type> --input_path input --output_path output

|

| 66 |

+

```

|

| 67 |

+

where ```<model_type>``` is chosen from [dpt_beit_large_512](#model_type), [dpt_beit_large_384](#model_type),

|

| 68 |

+

[dpt_beit_base_384](#model_type), [dpt_swin2_large_384](#model_type), [dpt_swin2_base_384](#model_type),

|

| 69 |

+

[dpt_swin2_tiny_256](#model_type), [dpt_swin_large_384](#model_type), [dpt_next_vit_large_384](#model_type),

|

| 70 |

+

[dpt_levit_224](#model_type), [dpt_large_384](#model_type), [dpt_hybrid_384](#model_type),

|

| 71 |

+

[midas_v21_384](#model_type), [midas_v21_small_256](#model_type), [openvino_midas_v21_small_256](#model_type).

|

| 72 |

+

|

| 73 |

+

3) The resulting depth maps are written to the `output` folder.

|

| 74 |

+

|

| 75 |

+

#### optional

|

| 76 |

+

|

| 77 |

+

1) By default, the inference resizes the height of input images to the size of a model to fit into the encoder. This

|

| 78 |

+

size is given by the numbers in the model names of the [accuracy table](#accuracy). Some models do not only support a single

|

| 79 |

+

inference height but a range of different heights. Feel free to explore different heights by appending the extra

|

| 80 |

+

command line argument `--height`. Unsupported height values will throw an error. Note that using this argument may

|

| 81 |

+

decrease the model accuracy.

|

| 82 |

+

2) By default, the inference keeps the aspect ratio of input images when feeding them into the encoder if this is

|

| 83 |

+

supported by a model (all models except for Swin, Swin2, LeViT). In order to resize to a square resolution,

|

| 84 |

+

disregarding the aspect ratio while preserving the height, use the command line argument `--square`.

|

| 85 |

+

|

| 86 |

+

#### via Camera

|

| 87 |

+

|

| 88 |

+

If you want the input images to be grabbed from the camera and shown in a window, leave the input and output paths

|

| 89 |

+

away and choose a model type as shown above:

|

| 90 |

+

|

| 91 |

+

```shell

|

| 92 |

+

python run.py --model_type <model_type> --side

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

The argument `--side` is optional and causes both the input RGB image and the output depth map to be shown

|

| 96 |

+

side-by-side for comparison.

|

| 97 |

+

|

| 98 |

+

#### via Docker

|

| 99 |

+

|

| 100 |

+

1) Make sure you have installed Docker and the

|

| 101 |

+

[NVIDIA Docker runtime](https://github.com/NVIDIA/nvidia-docker/wiki/Installation-\(Native-GPU-Support\)).

|

| 102 |

+

|

| 103 |

+

2) Build the Docker image:

|

| 104 |

+

|

| 105 |

+

```shell

|

| 106 |

+

docker build -t midas .

|

| 107 |

+

```

|

| 108 |

+

|

| 109 |

+

3) Run inference:

|

| 110 |

+

|

| 111 |

+

```shell

|

| 112 |

+

docker run --rm --gpus all -v $PWD/input:/opt/MiDaS/input -v $PWD/output:/opt/MiDaS/output -v $PWD/weights:/opt/MiDaS/weights midas

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

This command passes through all of your NVIDIA GPUs to the container, mounts the

|

| 116 |

+

`input` and `output` directories and then runs the inference.

|

| 117 |

+

|

| 118 |

+

#### via PyTorch Hub

|

| 119 |

+

|

| 120 |

+

The pretrained model is also available on [PyTorch Hub](https://pytorch.org/hub/intelisl_midas_v2/)

|

| 121 |

+

|

| 122 |

+

#### via TensorFlow or ONNX

|

| 123 |

+

|

| 124 |

+

See [README](https://github.com/isl-org/MiDaS/tree/master/tf) in the `tf` subdirectory.

|

| 125 |

+

|

| 126 |

+

Currently only supports MiDaS v2.1.

|

| 127 |

+

|

| 128 |

+

|

| 129 |

+

#### via Mobile (iOS / Android)

|

| 130 |

+

|

| 131 |

+

See [README](https://github.com/isl-org/MiDaS/tree/master/mobile) in the `mobile` subdirectory.

|

| 132 |

+

|

| 133 |

+

#### via ROS1 (Robot Operating System)

|

| 134 |

+

|

| 135 |

+

See [README](https://github.com/isl-org/MiDaS/tree/master/ros) in the `ros` subdirectory.

|

| 136 |

+

|

| 137 |

+

Currently only supports MiDaS v2.1. DPT-based models to be added.

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

### Accuracy

|

| 141 |

+

|

| 142 |

+

We provide a **zero-shot error** $\epsilon_d$ which is evaluated for 6 different datasets

|

| 143 |

+

(see [paper](https://arxiv.org/abs/1907.01341v3)). **Lower error values are better**.

|

| 144 |

+

$\color{green}{\textsf{Overall model quality is represented by the improvement}}$ ([Imp.](#improvement)) with respect to

|

| 145 |

+

MiDaS 3.0 DPT<sub>L-384</sub>. The models are grouped by the height used for inference, whereas the square training resolution is given by

|

| 146 |

+

the numbers in the model names. The table also shows the **number of parameters** (in millions) and the

|

| 147 |

+

**frames per second** for inference at the training resolution (for GPU RTX 3090):

|

| 148 |

+

|

| 149 |

+

| MiDaS Model | DIW </br><sup>WHDR</sup> | Eth3d </br><sup>AbsRel</sup> | Sintel </br><sup>AbsRel</sup> | TUM </br><sup>δ1</sup> | KITTI </br><sup>δ1</sup> | NYUv2 </br><sup>δ1</sup> | $\color{green}{\textsf{Imp.}}$ </br><sup>%</sup> | Par.</br><sup>M</sup> | FPS</br><sup> </sup> |

|

| 150 |

+

|-----------------------------------------------------------------------------------------------------------------------|-------------------------:|-----------------------------:|------------------------------:|-------------------------:|-------------------------:|-------------------------:|-------------------------------------------------:|----------------------:|--------------------------:|

|

| 151 |

+

| **Inference height 512** | | | | | | | | | |

|

| 152 |

+

| [v3.1 BEiT<sub>L-512</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_beit_large_512.pt) | 0.1137 | 0.0659 | 0.2366 | **6.13** | 11.56* | **1.86*** | $\color{green}{\textsf{19}}$ | **345** | **5.7** |

|

| 153 |

+

| [v3.1 BEiT<sub>L-512</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_beit_large_512.pt)$\tiny{\square}$ | **0.1121** | **0.0614** | **0.2090** | 6.46 | **5.00*** | 1.90* | $\color{green}{\textsf{34}}$ | **345** | **5.7** |

|

| 154 |

+

| | | | | | | | | | |

|

| 155 |

+

| **Inference height 384** | | | | | | | | | |

|

| 156 |

+

| [v3.1 BEiT<sub>L-512</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_beit_large_512.pt) | 0.1245 | 0.0681 | **0.2176** | **6.13** | 6.28* | **2.16*** | $\color{green}{\textsf{28}}$ | 345 | 12 |

|

| 157 |

+

| [v3.1 Swin2<sub>L-384</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin2_large_384.pt)$\tiny{\square}$ | 0.1106 | 0.0732 | 0.2442 | 8.87 | **5.84*** | 2.92* | $\color{green}{\textsf{22}}$ | 213 | 41 |

|

| 158 |

+

| [v3.1 Swin2<sub>B-384</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin2_base_384.pt)$\tiny{\square}$ | 0.1095 | 0.0790 | 0.2404 | 8.93 | 5.97* | 3.28* | $\color{green}{\textsf{22}}$ | 102 | 39 |

|

| 159 |

+

| [v3.1 Swin<sub>L-384</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin_large_384.pt)$\tiny{\square}$ | 0.1126 | 0.0853 | 0.2428 | 8.74 | 6.60* | 3.34* | $\color{green}{\textsf{17}}$ | 213 | 49 |

|

| 160 |

+

| [v3.1 BEiT<sub>L-384</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_beit_large_384.pt) | 0.1239 | **0.0667** | 0.2545 | 7.17 | 9.84* | 2.21* | $\color{green}{\textsf{17}}$ | 344 | 13 |

|

| 161 |

+

| [v3.1 Next-ViT<sub>L-384</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_next_vit_large_384.pt) | **0.1031** | 0.0954 | 0.2295 | 9.21 | 6.89* | 3.47* | $\color{green}{\textsf{16}}$ | **72** | 30 |

|

| 162 |

+

| [v3.1 BEiT<sub>B-384</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_beit_base_384.pt) | 0.1159 | 0.0967 | 0.2901 | 9.88 | 26.60* | 3.91* | $\color{green}{\textsf{-31}}$ | 112 | 31 |

|

| 163 |

+

| [v3.0 DPT<sub>L-384</sub>](https://github.com/isl-org/MiDaS/releases/download/v3/dpt_large_384.pt) | 0.1082 | 0.0888 | 0.2697 | 9.97 | 8.46 | 8.32 | $\color{green}{\textsf{0}}$ | 344 | **61** |

|

| 164 |

+

| [v3.0 DPT<sub>H-384</sub>](https://github.com/isl-org/MiDaS/releases/download/v3/dpt_hybrid_384.pt) | 0.1106 | 0.0934 | 0.2741 | 10.89 | 11.56 | 8.69 | $\color{green}{\textsf{-10}}$ | 123 | 50 |

|

| 165 |

+

| [v2.1 Large<sub>384</sub>](https://github.com/isl-org/MiDaS/releases/download/v2_1/midas_v21_384.pt) | 0.1295 | 0.1155 | 0.3285 | 12.51 | 16.08 | 8.71 | $\color{green}{\textsf{-32}}$ | 105 | 47 |

|

| 166 |

+

| | | | | | | | | | |

|

| 167 |

+

| **Inference height 256** | | | | | | | | | |

|

| 168 |

+

| [v3.1 Swin2<sub>T-256</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin2_tiny_256.pt)$\tiny{\square}$ | **0.1211** | **0.1106** | **0.2868** | **13.43** | **10.13*** | **5.55*** | $\color{green}{\textsf{-11}}$ | 42 | 64 |

|

| 169 |

+

| [v2.1 Small<sub>256</sub>](https://github.com/isl-org/MiDaS/releases/download/v2_1/midas_v21_small_256.pt) | 0.1344 | 0.1344 | 0.3370 | 14.53 | 29.27 | 13.43 | $\color{green}{\textsf{-76}}$ | **21** | **90** |

|

| 170 |

+

| | | | | | | | | | |

|

| 171 |

+

| **Inference height 224** | | | | | | | | | |

|

| 172 |

+

| [v3.1 LeViT<sub>224</sub>](https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_levit_224.pt)$\tiny{\square}$ | **0.1314** | **0.1206** | **0.3148** | **18.21** | **15.27*** | **8.64*** | $\color{green}{\textsf{-40}}$ | **51** | **73** |

|

| 173 |

+

|

| 174 |

+

* No zero-shot error, because models are also trained on KITTI and NYU Depth V2\

|

| 175 |

+

$\square$ Validation performed at **square resolution**, either because the transformer encoder backbone of a model

|

| 176 |

+

does not support non-square resolutions (Swin, Swin2, LeViT) or for comparison with these models. All other

|

| 177 |

+

validations keep the aspect ratio. A difference in resolution limits the comparability of the zero-shot error and the

|

| 178 |

+

improvement, because these quantities are averages over the pixels of an image and do not take into account the

|

| 179 |

+

advantage of more details due to a higher resolution.\

|

| 180 |

+

Best values per column and same validation height in bold

|

| 181 |

+

|

| 182 |

+

#### Improvement

|

| 183 |

+

|

| 184 |

+

The improvement in the above table is defined as the relative zero-shot error with respect to MiDaS v3.0

|

| 185 |

+

DPT<sub>L-384</sub> and averaging over the datasets. So, if $\epsilon_d$ is the zero-shot error for dataset $d$, then

|

| 186 |

+

the $\color{green}{\textsf{improvement}}$ is given by $100(1-(1/6)\sum_d\epsilon_d/\epsilon_{d,\rm{DPT_{L-384}}})$%.

|

| 187 |

+

|

| 188 |

+

Note that the improvements of 10% for MiDaS v2.0 → v2.1 and 21% for MiDaS v2.1 → v3.0 are not visible from the

|

| 189 |

+

improvement column (Imp.) in the table but would require an evaluation with respect to MiDaS v2.1 Large<sub>384</sub>

|

| 190 |

+

and v2.0 Large<sub>384</sub> respectively instead of v3.0 DPT<sub>L-384</sub>.

|

| 191 |

+

|

| 192 |

+

### Depth map comparison

|

| 193 |

+

|

| 194 |

+

Zoom in for better visibility

|

| 195 |

+

|

| 196 |

+

|

| 197 |

+

### Speed on Camera Feed

|

| 198 |

+

|

| 199 |

+

Test configuration

|

| 200 |

+

- Windows 10

|

| 201 |

+

- 11th Gen Intel Core i7-1185G7 3.00GHz

|

| 202 |

+

- 16GB RAM

|

| 203 |

+

- Camera resolution 640x480

|

| 204 |

+

- openvino_midas_v21_small_256

|

| 205 |

+

|

| 206 |

+

Speed: 22 FPS

|

| 207 |

+

|

| 208 |

+

### Applications

|

| 209 |

+

|

| 210 |

+

MiDaS is used in the following other projects from Intel Labs:

|

| 211 |

+

|

| 212 |

+

- [ZoeDepth](https://arxiv.org/pdf/2302.12288.pdf) (code available [here](https://github.com/isl-org/ZoeDepth)): MiDaS computes the relative depth map given an image. For metric depth estimation, ZoeDepth can be used, which combines MiDaS with a metric depth binning module appended to the decoder.

|

| 213 |

+

- [LDM3D](https://arxiv.org/pdf/2305.10853.pdf) (Hugging Face model available [here](https://huggingface.co/Intel/ldm3d-4c)): LDM3D is an extension of vanilla stable diffusion designed to generate joint image and depth data from a text prompt. The depth maps used for supervision when training LDM3D have been computed using MiDaS.

|

| 214 |

+

|

| 215 |

+

### Changelog

|

| 216 |

+

|

| 217 |

+

* [Dec 2022] Released [MiDaS v3.1](https://arxiv.org/pdf/2307.14460.pdf):

|

| 218 |

+

- New models based on 5 different types of transformers ([BEiT](https://arxiv.org/pdf/2106.08254.pdf), [Swin2](https://arxiv.org/pdf/2111.09883.pdf), [Swin](https://arxiv.org/pdf/2103.14030.pdf), [Next-ViT](https://arxiv.org/pdf/2207.05501.pdf), [LeViT](https://arxiv.org/pdf/2104.01136.pdf))

|

| 219 |

+

- Training datasets extended from 10 to 12, including also KITTI and NYU Depth V2 using [BTS](https://github.com/cleinc/bts) split

|

| 220 |

+

- Best model, BEiT<sub>Large 512</sub>, with resolution 512x512, is on average about [28% more accurate](#Accuracy) than MiDaS v3.0

|

| 221 |

+

- Integrated live depth estimation from camera feed

|

| 222 |

+

* [Sep 2021] Integrated to [Huggingface Spaces](https://huggingface.co/spaces) with [Gradio](https://github.com/gradio-app/gradio). See [Gradio Web Demo](https://huggingface.co/spaces/akhaliq/DPT-Large).

|

| 223 |

+

* [Apr 2021] Released MiDaS v3.0:

|

| 224 |

+

- New models based on [Dense Prediction Transformers](https://arxiv.org/abs/2103.13413) are on average [21% more accurate](#Accuracy) than MiDaS v2.1

|

| 225 |

+

- Additional models can be found [here](https://github.com/isl-org/DPT)

|

| 226 |

+

* [Nov 2020] Released MiDaS v2.1:

|

| 227 |

+

- New model that was trained on 10 datasets and is on average about [10% more accurate](#Accuracy) than [MiDaS v2.0](https://github.com/isl-org/MiDaS/releases/tag/v2)

|

| 228 |

+

- New light-weight model that achieves [real-time performance](https://github.com/isl-org/MiDaS/tree/master/mobile) on mobile platforms.

|

| 229 |

+

- Sample applications for [iOS](https://github.com/isl-org/MiDaS/tree/master/mobile/ios) and [Android](https://github.com/isl-org/MiDaS/tree/master/mobile/android)

|

| 230 |

+

- [ROS package](https://github.com/isl-org/MiDaS/tree/master/ros) for easy deployment on robots

|

| 231 |

+

* [Jul 2020] Added TensorFlow and ONNX code. Added [online demo](http://35.202.76.57/).

|

| 232 |

+

* [Dec 2019] Released new version of MiDaS - the new model is significantly more accurate and robust

|

| 233 |

+

* [Jul 2019] Initial release of MiDaS ([Link](https://github.com/isl-org/MiDaS/releases/tag/v1))

|

| 234 |

+

|

| 235 |

+

### Citation

|

| 236 |

+

|

| 237 |

+

Please cite our paper if you use this code or any of the models:

|

| 238 |

+

```

|

| 239 |

+

@ARTICLE {Ranftl2022,

|

| 240 |

+

author = "Ren\'{e} Ranftl and Katrin Lasinger and David Hafner and Konrad Schindler and Vladlen Koltun",

|

| 241 |

+

title = "Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-Shot Cross-Dataset Transfer",

|

| 242 |

+

journal = "IEEE Transactions on Pattern Analysis and Machine Intelligence",

|

| 243 |

+

year = "2022",

|

| 244 |

+

volume = "44",

|

| 245 |

+

number = "3"

|

| 246 |

+

}

|

| 247 |

+

```

|

| 248 |

+

|

| 249 |

+

If you use a DPT-based model, please also cite:

|

| 250 |

+

|

| 251 |

+

```

|

| 252 |

+

@article{Ranftl2021,

|

| 253 |

+

author = {Ren\'{e} Ranftl and Alexey Bochkovskiy and Vladlen Koltun},

|

| 254 |

+

title = {Vision Transformers for Dense Prediction},

|

| 255 |

+

journal = {ICCV},

|

| 256 |

+

year = {2021},

|

| 257 |

+

}

|

| 258 |

+

```

|

| 259 |

+

|

| 260 |

+

Please cite the technical report for MiDaS 3.1 models:

|

| 261 |

+

|

| 262 |

+

```

|

| 263 |

+

@article{birkl2023midas,

|

| 264 |

+

title={MiDaS v3.1 -- A Model Zoo for Robust Monocular Relative Depth Estimation},

|

| 265 |

+

author={Reiner Birkl and Diana Wofk and Matthias M{\"u}ller},

|

| 266 |

+

journal={arXiv preprint arXiv:2307.14460},

|

| 267 |

+

year={2023}

|

| 268 |

+

}

|

| 269 |

+

```

|

| 270 |

+

|

| 271 |

+

For ZoeDepth, please use

|

| 272 |

+

|

| 273 |

+

```

|

| 274 |

+

@article{bhat2023zoedepth,

|

| 275 |

+

title={Zoedepth: Zero-shot transfer by combining relative and metric depth},

|

| 276 |

+

author={Bhat, Shariq Farooq and Birkl, Reiner and Wofk, Diana and Wonka, Peter and M{\"u}ller, Matthias},

|

| 277 |

+

journal={arXiv preprint arXiv:2302.12288},

|

| 278 |

+

year={2023}

|

| 279 |

+

}

|

| 280 |

+

```

|

| 281 |

+

|

| 282 |

+

and for LDM3D

|

| 283 |

+

|

| 284 |

+

```

|

| 285 |

+

@article{stan2023ldm3d,

|

| 286 |

+

title={LDM3D: Latent Diffusion Model for 3D},

|

| 287 |

+

author={Stan, Gabriela Ben Melech and Wofk, Diana and Fox, Scottie and Redden, Alex and Saxton, Will and Yu, Jean and Aflalo, Estelle and Tseng, Shao-Yen and Nonato, Fabio and Muller, Matthias and others},

|

| 288 |

+

journal={arXiv preprint arXiv:2305.10853},

|

| 289 |

+

year={2023}

|

| 290 |

+

}

|

| 291 |

+

```

|

| 292 |

+

|

| 293 |

+

### Acknowledgements

|

| 294 |

+

|

| 295 |

+

Our work builds on and uses code from [timm](https://github.com/rwightman/pytorch-image-models) and [Next-ViT](https://github.com/bytedance/Next-ViT).

|

| 296 |

+

We'd like to thank the authors for making these libraries available.

|

| 297 |

+

|

| 298 |

+

### License

|

| 299 |

+

|

| 300 |

+

MIT License

|

MiDaS-master/environment.yaml

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: midas-py310

|

| 2 |

+

channels:

|

| 3 |

+

- pytorch

|

| 4 |

+

- defaults

|

| 5 |

+

dependencies:

|

| 6 |

+

- nvidia::cudatoolkit=11.7

|

| 7 |

+

- python=3.10.8

|

| 8 |

+

- pytorch::pytorch=1.13.0

|

| 9 |

+

- torchvision=0.14.0

|

| 10 |

+

- pip=22.3.1

|

| 11 |

+

- numpy=1.23.4

|

| 12 |

+

- pip:

|

| 13 |

+

- opencv-python==4.6.0.66

|

| 14 |

+

- imutils==0.5.4

|

| 15 |

+

- timm==0.6.12

|

| 16 |

+

- einops==0.6.0

|

MiDaS-master/figures/Comparison.png

ADDED

|

Git LFS Details

|

MiDaS-master/figures/Improvement_vs_FPS.png

ADDED

|

MiDaS-master/hubconf.py

ADDED

|

@@ -0,0 +1,435 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

dependencies = ["torch"]

|

| 2 |

+

|

| 3 |

+

import torch

|

| 4 |

+

|

| 5 |

+

from midas.dpt_depth import DPTDepthModel

|

| 6 |

+

from midas.midas_net import MidasNet

|

| 7 |

+

from midas.midas_net_custom import MidasNet_small

|

| 8 |

+

|

| 9 |

+

def DPT_BEiT_L_512(pretrained=True, **kwargs):

|

| 10 |

+

""" # This docstring shows up in hub.help()

|

| 11 |

+

MiDaS DPT_BEiT_L_512 model for monocular depth estimation

|

| 12 |

+

pretrained (bool): load pretrained weights into model

|

| 13 |

+

"""

|

| 14 |

+

|

| 15 |

+

model = DPTDepthModel(

|

| 16 |

+

path=None,

|

| 17 |

+

backbone="beitl16_512",

|

| 18 |

+

non_negative=True,

|

| 19 |

+

)

|

| 20 |

+

|

| 21 |

+

if pretrained:

|

| 22 |

+

checkpoint = (

|

| 23 |

+

"https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_beit_large_512.pt"

|

| 24 |

+

)

|

| 25 |

+

state_dict = torch.hub.load_state_dict_from_url(

|

| 26 |

+

checkpoint, map_location=torch.device('cpu'), progress=True, check_hash=True

|

| 27 |

+

)

|

| 28 |

+

model.load_state_dict(state_dict)

|

| 29 |

+

|

| 30 |

+

return model

|

| 31 |

+

|

| 32 |

+

def DPT_BEiT_L_384(pretrained=True, **kwargs):

|

| 33 |

+

""" # This docstring shows up in hub.help()

|

| 34 |

+

MiDaS DPT_BEiT_L_384 model for monocular depth estimation

|

| 35 |

+

pretrained (bool): load pretrained weights into model

|

| 36 |

+

"""

|

| 37 |

+

|

| 38 |

+

model = DPTDepthModel(

|

| 39 |

+

path=None,

|

| 40 |

+

backbone="beitl16_384",

|

| 41 |

+

non_negative=True,

|

| 42 |

+

)

|

| 43 |

+

|

| 44 |

+

if pretrained:

|

| 45 |

+

checkpoint = (

|

| 46 |

+

"https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_beit_large_384.pt"

|

| 47 |

+

)

|

| 48 |

+

state_dict = torch.hub.load_state_dict_from_url(

|

| 49 |

+

checkpoint, map_location=torch.device('cpu'), progress=True, check_hash=True

|

| 50 |

+

)

|

| 51 |

+

model.load_state_dict(state_dict)

|

| 52 |

+

|

| 53 |

+

return model

|

| 54 |

+

|

| 55 |

+

def DPT_BEiT_B_384(pretrained=True, **kwargs):

|

| 56 |

+

""" # This docstring shows up in hub.help()

|

| 57 |

+

MiDaS DPT_BEiT_B_384 model for monocular depth estimation

|

| 58 |

+

pretrained (bool): load pretrained weights into model

|

| 59 |

+

"""

|

| 60 |

+

|

| 61 |

+

model = DPTDepthModel(

|

| 62 |

+

path=None,

|

| 63 |

+

backbone="beitb16_384",

|

| 64 |

+

non_negative=True,

|

| 65 |

+

)

|

| 66 |

+

|

| 67 |

+

if pretrained:

|

| 68 |

+

checkpoint = (

|

| 69 |

+

"https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_beit_base_384.pt"

|

| 70 |

+

)

|

| 71 |

+

state_dict = torch.hub.load_state_dict_from_url(

|

| 72 |

+

checkpoint, map_location=torch.device('cpu'), progress=True, check_hash=True

|

| 73 |

+

)

|

| 74 |

+

model.load_state_dict(state_dict)

|

| 75 |

+

|

| 76 |

+

return model

|

| 77 |

+

|

| 78 |

+

def DPT_SwinV2_L_384(pretrained=True, **kwargs):

|

| 79 |

+

""" # This docstring shows up in hub.help()

|

| 80 |

+

MiDaS DPT_SwinV2_L_384 model for monocular depth estimation

|

| 81 |

+

pretrained (bool): load pretrained weights into model

|

| 82 |

+

"""

|

| 83 |

+

|

| 84 |

+

model = DPTDepthModel(

|

| 85 |

+

path=None,

|

| 86 |

+

backbone="swin2l24_384",

|

| 87 |

+

non_negative=True,

|

| 88 |

+

)

|

| 89 |

+

|

| 90 |

+

if pretrained:

|

| 91 |

+

checkpoint = (

|

| 92 |

+

"https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin2_large_384.pt"

|

| 93 |

+

)

|

| 94 |

+

state_dict = torch.hub.load_state_dict_from_url(

|

| 95 |

+

checkpoint, map_location=torch.device('cpu'), progress=True, check_hash=True

|

| 96 |

+

)

|

| 97 |

+

model.load_state_dict(state_dict)

|

| 98 |

+

|

| 99 |

+

return model

|

| 100 |

+

|

| 101 |

+

def DPT_SwinV2_B_384(pretrained=True, **kwargs):

|

| 102 |

+

""" # This docstring shows up in hub.help()

|

| 103 |

+

MiDaS DPT_SwinV2_B_384 model for monocular depth estimation

|

| 104 |

+

pretrained (bool): load pretrained weights into model

|

| 105 |

+

"""

|

| 106 |

+

|

| 107 |

+

model = DPTDepthModel(

|

| 108 |

+

path=None,

|

| 109 |

+

backbone="swin2b24_384",

|

| 110 |

+

non_negative=True,

|

| 111 |

+

)

|

| 112 |

+

|

| 113 |

+

if pretrained:

|

| 114 |

+

checkpoint = (

|

| 115 |

+

"https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin2_base_384.pt"

|

| 116 |

+

)

|

| 117 |

+

state_dict = torch.hub.load_state_dict_from_url(

|

| 118 |

+

checkpoint, map_location=torch.device('cpu'), progress=True, check_hash=True

|

| 119 |

+

)

|

| 120 |

+

model.load_state_dict(state_dict)

|

| 121 |

+

|

| 122 |

+

return model

|

| 123 |

+

|

| 124 |

+

def DPT_SwinV2_T_256(pretrained=True, **kwargs):

|

| 125 |

+

""" # This docstring shows up in hub.help()

|

| 126 |

+

MiDaS DPT_SwinV2_T_256 model for monocular depth estimation

|

| 127 |

+

pretrained (bool): load pretrained weights into model

|

| 128 |

+

"""

|

| 129 |

+

|

| 130 |

+

model = DPTDepthModel(

|

| 131 |

+

path=None,

|

| 132 |

+

backbone="swin2t16_256",

|

| 133 |

+

non_negative=True,

|

| 134 |

+

)

|

| 135 |

+

|

| 136 |

+

if pretrained:

|

| 137 |

+

checkpoint = (

|

| 138 |

+

"https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin2_tiny_256.pt"

|

| 139 |

+

)

|

| 140 |

+

state_dict = torch.hub.load_state_dict_from_url(

|

| 141 |

+

checkpoint, map_location=torch.device('cpu'), progress=True, check_hash=True

|

| 142 |

+

)

|

| 143 |

+

model.load_state_dict(state_dict)

|

| 144 |

+

|

| 145 |

+

return model

|

| 146 |

+

|

| 147 |

+

def DPT_Swin_L_384(pretrained=True, **kwargs):

|

| 148 |

+

""" # This docstring shows up in hub.help()

|

| 149 |

+

MiDaS DPT_Swin_L_384 model for monocular depth estimation

|

| 150 |

+

pretrained (bool): load pretrained weights into model

|

| 151 |

+

"""

|

| 152 |

+

|

| 153 |

+

model = DPTDepthModel(

|

| 154 |

+

path=None,

|

| 155 |

+

backbone="swinl12_384",

|

| 156 |

+

non_negative=True,

|

| 157 |

+

)

|

| 158 |

+

|

| 159 |

+

if pretrained:

|

| 160 |

+

checkpoint = (

|

| 161 |

+

"https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_swin_large_384.pt"

|

| 162 |

+

)

|

| 163 |

+

state_dict = torch.hub.load_state_dict_from_url(

|

| 164 |

+

checkpoint, map_location=torch.device('cpu'), progress=True, check_hash=True

|

| 165 |

+

)

|

| 166 |

+

model.load_state_dict(state_dict)

|

| 167 |

+

|

| 168 |

+

return model

|

| 169 |

+

|

| 170 |

+

def DPT_Next_ViT_L_384(pretrained=True, **kwargs):

|

| 171 |

+

""" # This docstring shows up in hub.help()

|

| 172 |

+

MiDaS DPT_Next_ViT_L_384 model for monocular depth estimation

|

| 173 |

+

pretrained (bool): load pretrained weights into model

|

| 174 |

+

"""

|

| 175 |

+

|

| 176 |

+

model = DPTDepthModel(

|

| 177 |

+

path=None,

|

| 178 |

+

backbone="next_vit_large_6m",

|

| 179 |

+

non_negative=True,

|

| 180 |

+

)

|

| 181 |

+

|

| 182 |

+

if pretrained:

|

| 183 |

+

checkpoint = (

|

| 184 |

+

"https://github.com/isl-org/MiDaS/releases/download/v3_1/dpt_next_vit_large_384.pt"

|

| 185 |

+

)

|

| 186 |

+

state_dict = torch.hub.load_state_dict_from_url(

|

| 187 |

+

checkpoint, map_location=torch.device('cpu'), progress=True, check_hash=True

|

| 188 |

+

)

|

| 189 |

+

model.load_state_dict(state_dict)

|

| 190 |

+

|

| 191 |

+

return model

|

| 192 |

+

|

| 193 |

+

def DPT_LeViT_224(pretrained=True, **kwargs):

|

| 194 |

+

""" # This docstring shows up in hub.help()

|

| 195 |

+

MiDaS DPT_LeViT_224 model for monocular depth estimation

|

| 196 |

+

pretrained (bool): load pretrained weights into model

|

| 197 |

+

"""

|

| 198 |

+

|

| 199 |

+

model = DPTDepthModel(

|

| 200 |

+

path=None,