update readme

- .gitignore +1 -0
- README.md +28 -14
- assets/method.png +0 -0
- assets/results.png +0 -0
- inference_global.sh +2 -2
- inference_local.sh +2 -2
- train_global.sh +1 -1

.gitignore CHANGED

@@ -5,6 +5,7 @@ _sc.py
  *.ckpt
  *.bin

+ checkpoints
  .idea
  .idea/workspace.xml
  .DS_Store
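The added `checkpoints` rule keeps the pretrained weights out of version control. The README says to download these weights into `checkpoints`. The sketch below is a simplified model of how git applies slash-free patterns such as `checkpoints` and `*.ckpt`. Each pattern is tested against every path component, so a matching directory also hides everything under it. The helper `is_ignored` is hypothetical and deliberately skips negation, anchored (slash-containing), and `**` patterns.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath


def is_ignored(path: str, patterns: list[str]) -> bool:
    """Approximate git's handling of slash-free .gitignore patterns (sketch only)."""
    parts = PurePosixPath(path).parts
    for pattern in patterns:
        if "/" in pattern or pattern.startswith("!"):
            continue  # anchored and negated patterns are outside this sketch
        # A slash-free pattern may match any component; matching a directory
        # component means every file beneath it is ignored too.
        if any(fnmatchcase(part, pattern) for part in parts):
            return True
    return False


# Rules from the .gitignore hunk above, including the newly added "checkpoints".
RULES = ["*.ckpt", "*.bin", "checkpoints", ".idea", ".idea/workspace.xml", ".DS_Store"]

print(is_ignored("checkpoints/global_mapper.pt", RULES))  # True
print(is_ignored("train_global.sh", RULES))               # False
```

You can also ask git directly. `git check-ignore -v checkpoints/global_mapper.pt` prints the source file, line, and pattern that matched.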

README.md

CHANGED

|

@@ -4,9 +4,17 @@

|

|

| 4 |

<a href="https://arxiv.org/pdf/2302.13848.pdf"><img src="https://img.shields.io/badge/arXiv-2302.13848-b31b1b.svg" height=22.5></a>

|

| 5 |

<a href="https://huggingface.co/spaces/ELITE-library/ELITE"><img src="https://img.shields.io/static/v1?label=HuggingFace&message=gradio demo&color=darkgreen" height=22.5></a>

|

| 6 |

|

| 7 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 8 |

|

| 9 |

-

|

| 10 |

|

| 11 |

### Environment Setup

|

| 12 |

|

|

@@ -22,13 +30,21 @@ pip install -r requirements.txt

|

|

| 22 |

|

| 23 |

We provide the pretrained checkpoints in [Google Drive](https://drive.google.com/drive/folders/1VkiVZzA_i9gbfuzvHaLH2VYh7kOTzE0x?usp=sharing). One can download them and save to the directory `checkpoints`.

|

| 24 |

|

| 25 |

-

### Setting up

|

|

|

|

|

|

|

|

|

|

|

|

|

| 26 |

|

| 27 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

| 28 |

|

| 29 |

### Customized Generation

|

| 30 |

|

| 31 |

-

We provide

|

| 32 |

```

|

| 33 |

export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

| 34 |

export DATA_DIR='./test_datasets/'

|

|

@@ -42,16 +58,14 @@ CUDA_VISIBLE_DEVICES=0 python inference_local.py \

|

|

| 42 |

--global_mapper_path="./checkpoints/global_mapper.pt" \

|

| 43 |

--local_mapper_path="./checkpoints/local_mapper.pt"

|

| 44 |

```

|

| 45 |

-

or you can use the shell script

|

| 46 |

```

|

| 47 |

bash inference_local.sh

|

| 48 |

```

|

| 49 |

-

If you want to test your customized dataset, you should align the image to ensure the object is at the center of image, and also provide the corresponding object mask. The object mask can be obtained by [image-matting-app](https://huggingface.co/spaces/SankarSrin/image-matting-app), or other image matting methods.

|

| 50 |

|

| 51 |

## Training

|

| 52 |

|

| 53 |

-

----

|

| 54 |

-

|

| 55 |

### Preparing Dataset

|

| 56 |

|

| 57 |

We use the **test** dataset of Open-Images V6 to train our ELITE. You can prepare the dataset as follows:

|

|

@@ -87,7 +101,7 @@ datasets

|

|

| 87 |

|

| 88 |

### Training Global Mapping Network

|

| 89 |

|

| 90 |

-

To train the global mapping network,

|

| 91 |

|

| 92 |

```Shell

|

| 93 |

export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

|

@@ -106,14 +120,14 @@ CUDA_VISIBLE_DEVICES=0,1,2,3 accelerate launch --config_file 4_gpu.json --main_p

|

|

| 106 |

--output_dir="./elite_experiments/global_mapping" \

|

| 107 |

--save_steps 200

|

| 108 |

```

|

| 109 |

-

or you can use the shell script

|

| 110 |

```shell

|

| 111 |

bash train_global.sh

|

| 112 |

```

|

| 113 |

|

| 114 |

### Training Local Mapping Network

|

| 115 |

|

| 116 |

-

After the global mapping is trained, you can train the local mapping by running

|

| 117 |

|

| 118 |

```Shell

|

| 119 |

export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

|

@@ -133,7 +147,7 @@ CUDA_VISIBLE_DEVICES=0,1,2,3 accelerate launch --config_file 4_gpu.json --main_p

|

|

| 133 |

--output_dir="./elite_experiments/local_mapping" \

|

| 134 |

--save_steps 200

|

| 135 |

```

|

| 136 |

-

or you can use the shell script

|

| 137 |

```shell

|

| 138 |

bash train_local.sh

|

| 139 |

```

|

|

@@ -152,4 +166,4 @@ bash train_local.sh

|

|

| 152 |

|

| 153 |

## Acknowledgements

|

| 154 |

|

| 155 |

-

This code is built on [diffusers](https://github.com/huggingface/diffusers/). We thank the authors for sharing the codes.

|

| 4 |

<a href="https://arxiv.org/pdf/2302.13848.pdf"><img src="https://img.shields.io/badge/arXiv-2302.13848-b31b1b.svg" height=22.5></a>

|

| 5 |

<a href="https://huggingface.co/spaces/ELITE-library/ELITE"><img src="https://img.shields.io/static/v1?label=HuggingFace&message=gradio demo&color=darkgreen" height=22.5></a>

|

| 6 |

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

## Method Details

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

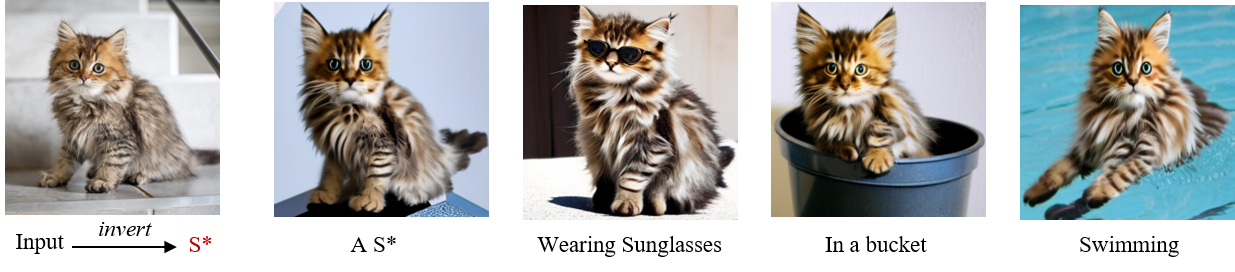

Given an image indicates the target concept (usually an object), we propose a learning-based encoder ELITE to encode the visual concept into the textual embeddings, which can be further flexibly composed into new scenes. It consists of two modules: (a) a global mapping network is first trained to encode a concept image into multiple textual word embeddings, where one primary word (w0) for well-editable concept and other auxiliary words (w1···N) to exclude irrelevant disturbances. (b) A local mapping network is further trained, which projects the foreground object into textual feature space to provide local details.

|

| 15 |

+

|

| 16 |

|

| 17 |

+

## Getting Started

|

| 18 |

|

| 19 |

### Environment Setup

|

| 20 |

|

| 30 |

|

| 31 |

We provide the pretrained checkpoints in [Google Drive](https://drive.google.com/drive/folders/1VkiVZzA_i9gbfuzvHaLH2VYh7kOTzE0x?usp=sharing). One can download them and save to the directory `checkpoints`.

|

| 32 |

|

| 33 |

+

### Setting up HuggingFace

|

| 34 |

+

|

| 35 |

+

Our code is built on the [diffusers](https://github.com/huggingface/diffusers/) version of Stable Diffusion, you need to accept the [model license](https://huggingface.co/CompVis/stable-diffusion-v1-4) before downloading or using the weights. In our experiments, we use model version v1-4.

|

| 36 |

+

|

| 37 |

+

You have to be a registered user in Hugging Face Hub, and you'll also need to use an access token for the code to work. For more information on access tokens, please refer to [this section of the documentation](https://huggingface.co/docs/hub/security-tokens).

|

| 38 |

|

| 39 |

+

Run the following command to authenticate your token

|

| 40 |

+

```shell

|

| 41 |

+

huggingface-cli login

|

| 42 |

+

```

|

| 43 |

+

If you have already cloned the repo, then you won't need to go through these steps.

|

| 44 |

|

| 45 |

### Customized Generation

|

| 46 |

|

| 47 |

+

We provide some testing images in [test_datasets](./test_datasets), which contains both images and object masks. For testing, you can run,

|

| 48 |

```

|

| 49 |

export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

| 50 |

export DATA_DIR='./test_datasets/'

|

| 58 |

--global_mapper_path="./checkpoints/global_mapper.pt" \

|

| 59 |

--local_mapper_path="./checkpoints/local_mapper.pt"

|

| 60 |

```

|

| 61 |

+

or you can use the shell script,

|

| 62 |

```

|

| 63 |

bash inference_local.sh

|

| 64 |

```

|

| 65 |

+

If you want to test your customized dataset, you should align the image to ensure the object is at the center of image, and also provide the corresponding object mask. The object mask can be obtained by [image-matting-app](https://huggingface.co/spaces/SankarSrin/image-matting-app), or other image matting methods.

|

| 66 |

|

| 67 |

## Training

|

| 68 |

|

|

|

|

|

|

|

| 69 |

### Preparing Dataset

|

| 70 |

|

| 71 |

We use the **test** dataset of Open-Images V6 to train our ELITE. You can prepare the dataset as follows:

|

| 101 |

|

| 102 |

### Training Global Mapping Network

|

| 103 |

|

| 104 |

+

To train the global mapping network, you can run,

|

| 105 |

|

| 106 |

```Shell

|

| 107 |

export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

| 120 |

--output_dir="./elite_experiments/global_mapping" \

|

| 121 |

--save_steps 200

|

| 122 |

```

|

| 123 |

+

or you can use the shell script,

|

| 124 |

```shell

|

| 125 |

bash train_global.sh

|

| 126 |

```

|

| 127 |

|

| 128 |

### Training Local Mapping Network

|

| 129 |

|

| 130 |

+

After the global mapping network is trained, you can train the local mapping network by running,

|

| 131 |

|

| 132 |

```Shell

|

| 133 |

export MODEL_NAME="CompVis/stable-diffusion-v1-4"

|

| 147 |

--output_dir="./elite_experiments/local_mapping" \

|

| 148 |

--save_steps 200

|

| 149 |

```

|

| 150 |

+

or you can use the shell script,

|

| 151 |

```shell

|

| 152 |

bash train_local.sh

|

| 153 |

```

|

| 166 |

|

| 167 |

## Acknowledgements

|

| 168 |

|

| 169 |

+

This code is built on [diffusers](https://github.com/huggingface/diffusers/) version of [Stable Diffusion](https://github.com/CompVis/stable-diffusion). We thank the authors for sharing the codes.

|
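For custom inputs, the README asks that the object be centred in the image and come with a matching mask. One simple way to centre it is to translate the image and mask together until the mask's bounding box sits in the middle of the frame. This assumes a binary mask in which nonzero pixels mark the object. The helpers `mask_bbox`, `centering_offset` and `shift` below are hypothetical pure-Python illustrations on nested lists. They are not part of the ELITE code, and ELITE's own preprocessing (cropping, resizing) may differ.

```python
def mask_bbox(mask):
    """Bounding box (top, left, bottom, right) of nonzero pixels; bottom/right exclusive."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        raise ValueError("mask has no foreground pixels")
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def centering_offset(mask):
    """Integer (dy, dx) that moves the bounding-box centre onto the image centre."""
    top, left, bottom, right = mask_bbox(mask)
    height, width = len(mask), len(mask[0])
    # image centre minus box centre, h/2 - (top + bottom)/2, rounded down
    return (height - top - bottom) // 2, (width - left - right) // 2


def shift(grid, dy, dx, fill=0):
    """Translate a 2-D grid by (dy, dx); uncovered pixels are set to `fill`."""
    height, width = len(grid), len(grid[0])
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width:  # pixels pushed off-frame are dropped
                out[ny][nx] = grid[y][x]
    return out


# 6x6 mask with a 2x2 object in the top-left corner.
mask = [[0] * 6 for _ in range(6)]
for y in (0, 1):
    for x in (0, 1):
        mask[y][x] = 1

dy, dx = centering_offset(mask)   # (2, 2)
centred = shift(mask, dy, dx)     # the same (dy, dx) would be applied to the RGB image
print(mask_bbox(centred))         # (2, 2, 4, 4): box centre (3, 3) equals image centre
```

In practice, you would apply the same offset to the RGB image with an image library. An object that already fills most of the frame may also need padding or cropping.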

assets/method.png ADDED

assets/results.png ADDED

inference_global.sh CHANGED

@@ -1,7 +1,7 @@
  export MODEL_NAME="CompVis/stable-diffusion-v1-4"
  export DATA_DIR='./test_datasets/'

- CUDA_VISIBLE_DEVICES=
+ CUDA_VISIBLE_DEVICES=0 python inference_global.py \
  --pretrained_model_name_or_path=$MODEL_NAME \
  --test_data_dir=$DATA_DIR \
  --output_dir="./outputs/global_mapping" \
@@ -9,5 +9,5 @@ CUDA_VISIBLE_DEVICES=7 python inference_global.py \
  --token_index="0" \
  --template="a photo of a S" \
  --global_mapper_path="./checkpoints/global_mapper.pt" \
- --seed
+ --seed=42
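Pinning `--seed=42` in both inference scripts makes the sampled outputs repeatable from run to run. The sketch below shows the general pattern. It assumes the scripts parse `--seed` as an integer with `argparse`. `build_parser` and `draw_noise` are hypothetical stand-ins, and a diffusion pipeline would seed its own random generator (for example PyTorch's) rather than Python's `random` module.

```python
import argparse
import random


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser mirroring the --seed flag passed by inference_*.sh.
    parser = argparse.ArgumentParser()
    parser.add_argument("--seed", type=int, default=None,
                        help="random seed; None gives a fresh, unrepeatable run")
    return parser


def draw_noise(seed, n=4):
    """Stand-in for initial latent noise: n Gaussian samples drawn from `seed`."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


args = build_parser().parse_args(["--seed=42"])
print(args.seed)  # 42
# The same seed reproduces the same "noise", so reruns produce comparable outputs.
assert draw_noise(args.seed) == draw_noise(args.seed)
```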

inference_local.sh CHANGED

@@ -1,6 +1,6 @@
  export MODEL_NAME="CompVis/stable-diffusion-v1-4"
  export DATA_DIR='./test_datasets/'
- CUDA_VISIBLE_DEVICES=
+ CUDA_VISIBLE_DEVICES=0 python inference_local.py \
  --pretrained_model_name_or_path=$MODEL_NAME \
  --test_data_dir=$DATA_DIR \
  --output_dir="./outputs/local_mapping" \
@@ -9,5 +9,5 @@ CUDA_VISIBLE_DEVICES=7 python inference_local.py \
  --llambda="0.8" \
  --global_mapper_path="./checkpoints/global_mapper.pt" \
  --local_mapper_path="./checkpoints/local_mapper.pt" \
- --seed
+ --seed=42
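Both inference scripts read the mapper weights from `./checkpoints/`, the directory where the README says to save the Google Drive checkpoints. A quick pre-flight check can catch a missing download before the model is loaded. The hypothetical `missing_checkpoints` helper below does this. It only checks that the files exist, not that they are valid.

```python
import tempfile
from pathlib import Path

# Checkpoint paths passed by inference_local.sh; inference_global.sh needs only the first.
REQUIRED = ("checkpoints/global_mapper.pt", "checkpoints/local_mapper.pt")


def missing_checkpoints(repo_root=".", required=REQUIRED):
    """Return the required checkpoint paths that are not present under repo_root."""
    root = Path(repo_root)
    return [p for p in required if not (root / p).is_file()]


# Demo on a throwaway directory standing in for a fresh clone.
with tempfile.TemporaryDirectory() as repo:
    before = missing_checkpoints(repo)      # nothing downloaded yet
    ckpt_dir = Path(repo, "checkpoints")
    ckpt_dir.mkdir()
    for name in ("global_mapper.pt", "local_mapper.pt"):
        (ckpt_dir / name).touch()           # empty placeholder for the downloaded file
    after = missing_checkpoints(repo)

print(before)  # ['checkpoints/global_mapper.pt', 'checkpoints/local_mapper.pt']
print(after)   # []
```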

train_global.sh CHANGED

@@ -11,5 +11,5 @@ CUDA_VISIBLE_DEVICES=0,1,2,3 accelerate launch --config_file 4_gpu.json --main_p
  --learning_rate=1e-06 --scale_lr \
  --lr_scheduler="constant" \
  --lr_warmup_steps=0 \
- --output_dir="./elite_experiments/
+ --output_dir="./elite_experiments/global_mapping" \
  --save_steps 200
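`train_global.sh` pairs `--learning_rate=1e-06` with `--scale_lr`. The README says the code is built on diffusers. In diffusers' textual-inversion training example, `--scale_lr` multiplies the base rate by the gradient-accumulation steps, the per-device batch size, and the number of processes. If ELITE keeps that convention, which is an assumption here, the effective rate is well above 1e-06. The helper below is hypothetical. Its batch size and accumulation values are illustrative because the hunk does not show them. The process count of 4 reflects the four GPUs in `CUDA_VISIBLE_DEVICES=0,1,2,3`.

```python
def scaled_learning_rate(base_lr, train_batch_size, grad_accum_steps,
                         num_processes, scale_lr=True):
    """Effective LR under the diffusers textual-inversion convention (assumed for ELITE)."""
    if not scale_lr:
        return base_lr
    return base_lr * grad_accum_steps * train_batch_size * num_processes


# Base LR from train_global.sh; batch size and accumulation steps are illustrative.
lr = scaled_learning_rate(1e-06, train_batch_size=4, grad_accum_steps=4, num_processes=4)
print(f"{lr:.1e}")  # 6.4e-05
```

Because of this scaling, changing the GPU count or batch size also changes the effective learning rate, even though `--learning_rate` stays the same.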