Upload folder using huggingface_hub

- args.yaml +91 -0

- events.out.tfevents.1701741678.ced569c9f804.1.0 +3 -0

- results.csv +46 -0

- train_batch0.jpg +0 -0

- train_batch1.jpg +0 -0

- train_batch2.jpg +0 -0

- weights/best.pt +3 -0

- weights/last.pt +3 -0

args.yaml

ADDED

@@ -0,0 +1,91 @@

+task: detect
+mode: train
+model: yolov8s.pt
+data: data/drug_class_2023_12_5_s_640/drug_class_2023_12_5_s_640.yaml
+epochs: 1000
+patience: 50
+batch: 64
+imgsz: 640
+save: true
+cache: false
+device: null
+workers: 8
+project: runs/detect
+name: drug_class_2023_12_5_s_640
+exist_ok: false
+pretrained: false
+optimizer: SGD
+verbose: true
+seed: 0
+deterministic: true
+single_cls: false
+image_weights: false
+rect: false
+cos_lr: false
+close_mosaic: 10
+resume: false
+overlap_mask: true
+mask_ratio: 4
+dropout: 0.0
+val: true
+save_json: false
+save_hybrid: false
+conf: null
+iou: 0.7
+max_det: 300
+half: false
+dnn: false
+plots: true
+source: null
+show: false
+save_txt: false
+save_conf: false
+save_crop: false
+hide_labels: false
+hide_conf: false
+vid_stride: 1
+line_thickness: 3
+visualize: false
+augment: false
+agnostic_nms: false
+classes: null
+retina_masks: false
+boxes: true
+format: torchscript
+keras: false
+optimize: false
+int8: false
+dynamic: false
+simplify: false
+opset: null
+workspace: 4
+nms: false
+lr0: 0.01
+lrf: 0.01
+momentum: 0.937
+weight_decay: 0.0005
+warmup_epochs: 3.0
+warmup_momentum: 0.8
+warmup_bias_lr: 0.1
+box: 7.5
+cls: 0.5
+dfl: 1.5
+fl_gamma: 0.0
+label_smoothing: 0.0
+nbs: 64
+hsv_h: 0.015
+hsv_s: 0.7
+hsv_v: 0.4
+degrees: 0.0
+translate: 0.1
+scale: 0.5
+shear: 0.0
+perspective: 0.0
+flipud: 0.0
+fliplr: 0.5
+mosaic: 1.0
+mixup: 0.0
+copy_paste: 0.0
+cfg: null
+v5loader: false
+save_dir: runs/detect/drug_class_2023_12_5_s_640
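The `lr0`, `lrf`, `epochs`, and `cos_lr: false` settings in args.yaml suggest a linear learning-rate decay. The sketch below assumes the commonly used formula `lr0 * ((1 - step / epochs) * (1 - lrf) + lrf)`; that formula is an assumption about the training library, not something the config states, and `linear_lr` is a hypothetical helper name. Under that assumption, steps 3 and 43 give 0.0099703 and 0.0095743, both of which appear in the `lr/pg0` column of results.csv in this commit.

```python
# Values copied from args.yaml in this commit.
LR0 = 0.01      # lr0: initial learning rate
LRF = 0.01      # lrf: final learning rate as a fraction of lr0
EPOCHS = 1000   # epochs: scheduled training length


def linear_lr(step: int, lr0: float = LR0, lrf: float = LRF, epochs: int = EPOCHS) -> float:
    """Learning rate after `step` scheduler steps, ASSUMING linear decay (cos_lr: false)."""
    # The factor goes from 1.0 at step 0 down to lrf at step == epochs.
    factor = max(1.0 - step / epochs, 0.0) * (1.0 - lrf) + lrf
    return lr0 * factor


# Print a few points on the assumed schedule (warmup is not modelled here).
for step in (0, 3, 43, EPOCHS):
    print(step, round(linear_lr(step), 7))
```

Warmup (`warmup_epochs: 3.0`, `warmup_bias_lr: 0.1`) is deliberately left out, so the first few rows of the log will not match this simple curve.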

events.out.tfevents.1701741678.ced569c9f804.1.0

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:7e9016ef5284553c6548c6fdeac5d2fde371637c7bb8326a1ecfc432d9897f86
+size 9625534
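The three added lines are a Git LFS pointer: the repository stores only the spec version, a content hash, and the byte size, while the real file lives in LFS storage. As a minimal sketch, the pointer can be parsed like this (`parse_lfs_pointer` is a hypothetical helper, not part of any library):

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer file into version, hash algorithm, digest, and size."""
    fields = {}
    for line in text.strip().splitlines():
        # Each pointer line is "<key> <value>", separated by a single space.
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid has the form "<algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


# Pointer text copied from the events.out.tfevents diff in this commit.
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:7e9016ef5284553c6548c6fdeac5d2fde371637c7bb8326a1ecfc432d9897f86
size 9625534
"""

info = parse_lfs_pointer(POINTER)
print(info["algo"], info["size"])
```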

results.csv

ADDED

@@ -0,0 +1,46 @@

+epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
+0, 1.0648, 0.95599, 1.1481, 0.84447, 0.65332, 0.71167, 0.47169, 1.0361, 0.85667, 1.0629, 0.070022, 0.0033308, 0.0033308
+1, 1.1018, 0.86778, 1.1585, 0.85809, 0.67569, 0.72911, 0.48727, 1.1097, 0.94623, 1.1066, 0.040016, 0.0066576, 0.0066576
+2, 1.2159, 1.0068, 1.2307, 0.79804, 0.66629, 0.70557, 0.46379, 1.2251, 1.0844, 1.1897, 0.010003, 0.0099777, 0.0099777
+3, 1.2384, 1.0304, 1.251, 0.86486, 0.69854, 0.72594, 0.48123, 1.1207, 0.95431, 1.1177, 0.0099703, 0.0099703, 0.0099703
+4, 1.1852, 0.96311, 1.2181, 0.89192, 0.7057, 0.73796, 0.50947, 1.0681, 0.88188, 1.0974, 0.0099703, 0.0099703, 0.0099703
+5, 1.1546, 0.92271, 1.1987, 0.905, 0.69582, 0.73812, 0.5128, 1.0366, 0.83952, 1.077, 0.0099604, 0.0099604, 0.0099604
+6, 1.1374, 0.89981, 1.1863, 0.89674, 0.70683, 0.74243, 0.51734, 1.0237, 0.8102, 1.067, 0.0099505, 0.0099505, 0.0099505
+7, 1.1212, 0.88004, 1.1778, 0.91234, 0.7089, 0.74775, 0.52638, 1.0052, 0.78836, 1.0592, 0.0099406, 0.0099406, 0.0099406
+8, 1.1131, 0.87043, 1.1714, 0.91572, 0.71724, 0.75255, 0.52934, 0.99458, 0.77485, 1.055, 0.0099307, 0.0099307, 0.0099307
+9, 1.1039, 0.86022, 1.1676, 0.92091, 0.71783, 0.75049, 0.52717, 0.98567, 0.76516, 1.0489, 0.0099208, 0.0099208, 0.0099208
+10, 1.0978, 0.85174, 1.1623, 0.91134, 0.71968, 0.75394, 0.53421, 0.98246, 0.75802, 1.0475, 0.0099109, 0.0099109, 0.0099109
+11, 1.0921, 0.8481, 1.1582, 0.94051, 0.71896, 0.75489, 0.53865, 0.97662, 0.75198, 1.0451, 0.009901, 0.009901, 0.009901
+12, 1.0915, 0.84095, 1.156, 0.90711, 0.72445, 0.75626, 0.54249, 0.97299, 0.74766, 1.0432, 0.0098911, 0.0098911, 0.0098911
+13, 1.0829, 0.83623, 1.1529, 0.90561, 0.71878, 0.75688, 0.54374, 0.96976, 0.74514, 1.0416, 0.0098812, 0.0098812, 0.0098812
+14, 1.0819, 0.83302, 1.1521, 0.90486, 0.72128, 0.7563, 0.54388, 0.96764, 0.74254, 1.0405, 0.0098713, 0.0098713, 0.0098713
+15, 1.0781, 0.82918, 1.1497, 0.9295, 0.71771, 0.75691, 0.54315, 0.96667, 0.74057, 1.0398, 0.0098614, 0.0098614, 0.0098614
+16, 1.0759, 0.82793, 1.1496, 0.92544, 0.72114, 0.75697, 0.54201, 0.96537, 0.73818, 1.0389, 0.0098515, 0.0098515, 0.0098515
+17, 1.0767, 0.82274, 1.1475, 0.9155, 0.72519, 0.75709, 0.54154, 0.9647, 0.73707, 1.0385, 0.0098416, 0.0098416, 0.0098416
+18, 1.0742, 0.8232, 1.1474, 0.9171, 0.72574, 0.75717, 0.5408, 0.96388, 0.73565, 1.0384, 0.0098317, 0.0098317, 0.0098317
+19, 1.071, 0.82129, 1.1465, 0.9012, 0.7261, 0.75735, 0.54106, 0.96281, 0.73424, 1.0381, 0.0098218, 0.0098218, 0.0098218
+20, 1.07, 0.81875, 1.1462, 0.9027, 0.72612, 0.75799, 0.54112, 0.96196, 0.73294, 1.0377, 0.0098119, 0.0098119, 0.0098119
+21, 1.0692, 0.81699, 1.1446, 0.90038, 0.7254, 0.75835, 0.54018, 0.96118, 0.73217, 1.0375, 0.009802, 0.009802, 0.009802
+22, 1.0677, 0.81591, 1.1454, 0.9013, 0.726, 0.75819, 0.54018, 0.9606, 0.73186, 1.0373, 0.0097921, 0.0097921, 0.0097921
+23, 1.0678, 0.81458, 1.1433, 0.90351, 0.72402, 0.75828, 0.54126, 0.95997, 0.73108, 1.037, 0.0097822, 0.0097822, 0.0097822
+24, 1.0671, 0.8147, 1.1433, 0.90935, 0.72463, 0.75856, 0.54265, 0.95985, 0.73079, 1.037, 0.0097723, 0.0097723, 0.0097723
+25, 1.0663, 0.81264, 1.1405, 0.91141, 0.7262, 0.75897, 0.54266, 0.95905, 0.73033, 1.0362, 0.0097624, 0.0097624, 0.0097624
+26, 1.0651, 0.81122, 1.1409, 0.91052, 0.72685, 0.7598, 0.54305, 0.95842, 0.73004, 1.036, 0.0097525, 0.0097525, 0.0097525
+27, 1.063, 0.8108, 1.1412, 0.91227, 0.72705, 0.76014, 0.54366, 0.95789, 0.72885, 1.036, 0.0097426, 0.0097426, 0.0097426
+28, 1.0636, 0.81191, 1.1417, 0.92087, 0.72191, 0.7603, 0.54391, 0.95695, 0.72845, 1.0354, 0.0097327, 0.0097327, 0.0097327
+29, 1.0629, 0.8115, 1.142, 0.92209, 0.72162, 0.76106, 0.54422, 0.95646, 0.72843, 1.0352, 0.0097228, 0.0097228, 0.0097228
+30, 1.0623, 0.8109, 1.1408, 0.93031, 0.71713, 0.76105, 0.54522, 0.95558, 0.72827, 1.0346, 0.0097129, 0.0097129, 0.0097129
+31, 1.0627, 0.8076, 1.1414, 0.93165, 0.71765, 0.7616, 0.54703, 0.95509, 0.72748, 1.0343, 0.009703, 0.009703, 0.009703
+32, 1.0606, 0.80575, 1.1398, 0.93323, 0.71792, 0.76163, 0.5464, 0.95444, 0.72703, 1.034, 0.0096931, 0.0096931, 0.0096931
+33, 1.0612, 0.80738, 1.1388, 0.92669, 0.72094, 0.762, 0.54661, 0.9542, 0.72645, 1.0339, 0.0096832, 0.0096832, 0.0096832
+34, 1.0614, 0.80976, 1.1399, 0.92535, 0.7261, 0.76296, 0.54611, 0.95366, 0.72612, 1.0336, 0.0096733, 0.0096733, 0.0096733
+35, 1.0604, 0.80585, 1.1386, 0.92623, 0.72619, 0.76335, 0.54783, 0.95317, 0.72618, 1.0333, 0.0096634, 0.0096634, 0.0096634
+36, 1.06, 0.80504, 1.1371, 0.92379, 0.72615, 0.76363, 0.54722, 0.95246, 0.72625, 1.0327, 0.0096535, 0.0096535, 0.0096535
+37, 1.0586, 0.80369, 1.1372, 0.92384, 0.72603, 0.76435, 0.54712, 0.95194, 0.7263, 1.0323, 0.0096436, 0.0096436, 0.0096436
+38, 1.0584, 0.80316, 1.137, 0.92846, 0.72626, 0.76416, 0.54692, 0.95195, 0.72551, 1.0324, 0.0096337, 0.0096337, 0.0096337
+39, 1.058, 0.80411, 1.1379, 0.92875, 0.72817, 0.76395, 0.54604, 0.95189, 0.72603, 1.0323, 0.0096238, 0.0096238, 0.0096238
+40, 1.0587, 0.80307, 1.137, 0.9278, 0.72805, 0.76356, 0.54596, 0.9516, 0.72566, 1.0319, 0.0096139, 0.0096139, 0.0096139
+41, 1.0584, 0.80226, 1.1368, 0.92754, 0.72825, 0.76386, 0.54721, 0.95175, 0.72518, 1.0317, 0.009604, 0.009604, 0.009604
+42, 1.0597, 0.8032, 1.137, 0.92811, 0.72794, 0.76357, 0.54904, 0.95181, 0.72478, 1.0314, 0.0095941, 0.0095941, 0.0095941
+43, 1.0559, 0.80144, 1.136, 0.92927, 0.72756, 0.76391, 0.54766, 0.95169, 0.7248, 1.0313, 0.0095842, 0.0095842, 0.0095842
+44, 1.0566, 0.80112, 1.1351, 0.93607, 0.72721, 0.76381, 0.5479, 0.95159, 0.72483, 1.0314, 0.0095743, 0.0095743, 0.0095743
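To find the strongest epoch in a log like results.csv, one option is to parse it with the standard `csv` module and rank rows by `metrics/mAP50-95(B)`. The sketch below embeds the header and three rows copied from the file above; ranking on that single column is a simplification chosen for illustration, and the trainer's own criterion for `best.pt` may differ. `best_epoch` is a hypothetical helper name.

```python
import csv
import io

# Header and three rows copied verbatim from results.csv in this commit.
SAMPLE = """\
epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
0, 1.0648, 0.95599, 1.1481, 0.84447, 0.65332, 0.71167, 0.47169, 1.0361, 0.85667, 1.0629, 0.070022, 0.0033308, 0.0033308
42, 1.0597, 0.8032, 1.137, 0.92811, 0.72794, 0.76357, 0.54904, 0.95181, 0.72478, 1.0314, 0.0095941, 0.0095941, 0.0095941
44, 1.0566, 0.80112, 1.1351, 0.93607, 0.72721, 0.76381, 0.5479, 0.95159, 0.72483, 1.0314, 0.0095743, 0.0095743, 0.0095743
"""


def best_epoch(csv_text: str, metric: str = "metrics/mAP50-95(B)") -> dict:
    """Return the row (as floats) with the highest value of `metric`."""
    # skipinitialspace drops the padding that follows each comma.
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    rows = [{key.strip(): float(value) for key, value in row.items()} for row in reader]
    return max(rows, key=lambda row: row[metric])


best = best_epoch(SAMPLE)
print(int(best["epoch"]), best["metrics/mAP50-95(B)"])
```

To run it on the full log, pass the file contents instead of `SAMPLE`, for example `best_epoch(open("results.csv").read())`.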

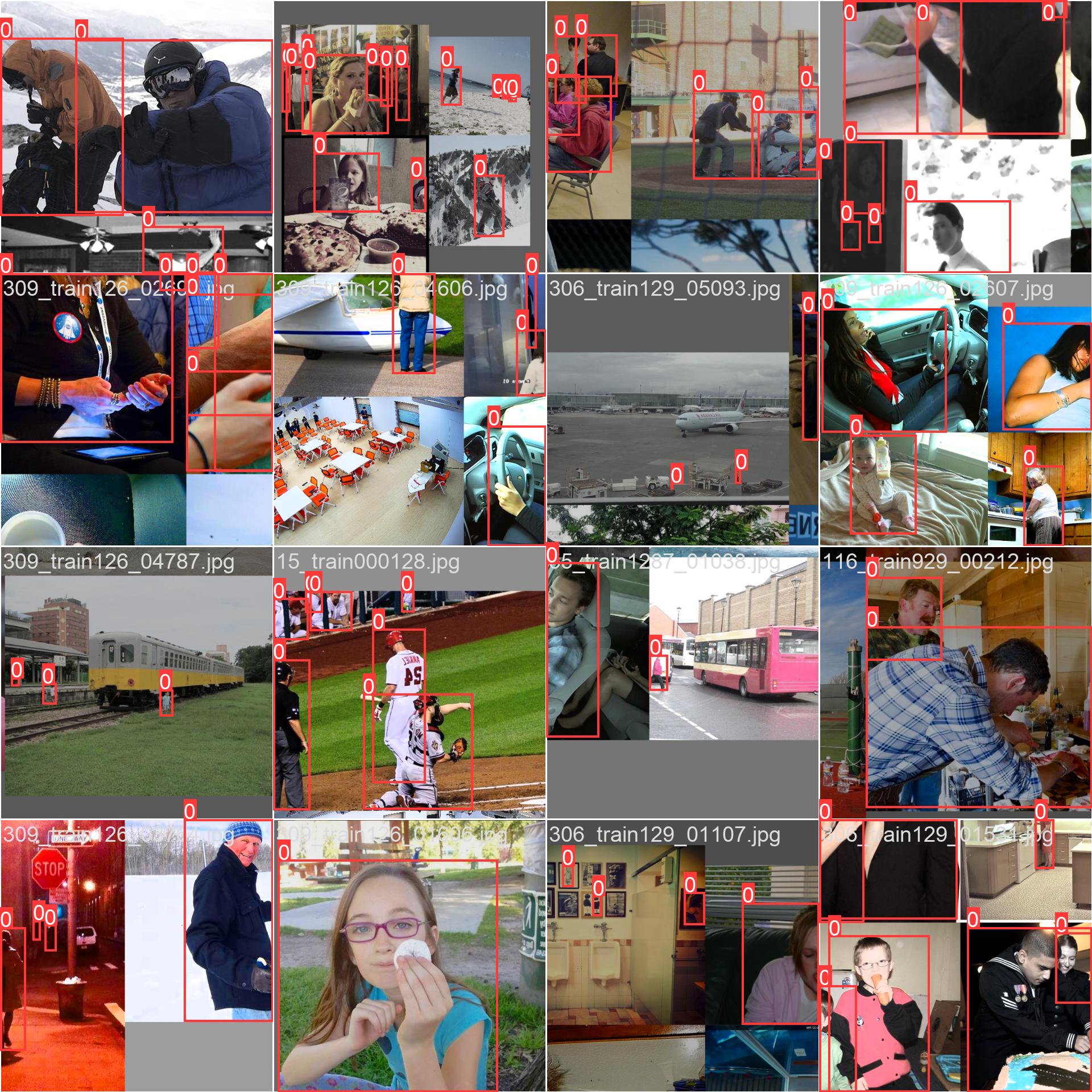

train_batch0.jpg

ADDED

train_batch1.jpg

ADDED

train_batch2.jpg

ADDED

weights/best.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:26b767be5771bf579c3f11793e07b5c56c4b898c8c1ee4ce7d6f1d3b9e5d9a5d
+size 89533858

weights/last.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:6606d721cf18579f5b69ffa65f9abbef93468162300748f2e9c28c8a43df8126
+size 89533858
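`weights/best.pt` and `weights/last.pt` report the same size (89533858 bytes) but different `oid` values, so size alone cannot tell the two checkpoints apart. A minimal sketch of checking a downloaded file against its pointer follows; `matches_pointer` is a hypothetical helper, and the demo uses a throwaway temporary file rather than the real weights.

```python
import hashlib
import os
import tempfile


def matches_pointer(path: str, expected_sha256: str, expected_size: int,
                    chunk_size: int = 1 << 20) -> bool:
    """Check a local file against the size and sha256 oid from a Git LFS pointer."""
    # Cheap check first: a size mismatch rules the file out without hashing.
    if os.path.getsize(path) != expected_size:
        return False
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Hash in chunks so large checkpoints are never loaded into memory at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_sha256


# Demo on a small stand-in file (NOT the real checkpoint).
payload = b"stand-in for weights/best.pt"
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(payload)
demo_path = tmp.name

demo_ok = matches_pointer(demo_path, hashlib.sha256(payload).hexdigest(), len(payload))
demo_bad = matches_pointer(demo_path, "0" * 64, len(payload))  # same size, wrong hash
os.remove(demo_path)
print(demo_ok, demo_bad)
```

For the real files, the expected values would be the `oid` (without the `sha256:` prefix) and `size` from the pointers above.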