Commit adc5a6a

Parent(s): 4f36c95

Update README.md

README.md

CHANGED

|

@@ -1,3 +1,128 @@

|

|

| 1 |

---

|

| 2 |

license: apache-2.0

|

| 3 |

---

|

| 1 |

---

|

| 2 |

license: apache-2.0

|

| 3 |

+

datasets:

|

| 4 |

+

- imagenet-1k

|

| 5 |

+

- ade20k

|

| 6 |

+

metrics:

|

| 7 |

+

- mean_iou

|

| 8 |

+

pipeline_tag: image-segmentation

|

| 9 |

+

tags:

|

| 10 |

+

- vision

|

| 11 |

+

language:

|

| 12 |

+

- en

|

| 13 |

+

library_name: pytorch

|

| 14 |

---

|

| 15 |

+

|

| 16 |

+

# TransNeXt

|

| 17 |

+

|

| 18 |

+

Official model release

|

| 19 |

+

for ["TransNeXt: Robust Foveal Visual Perception for Vision Transformers"](https://arxiv.org/pdf/2311.17132.pdf) [CVPR 2024].

|

| 20 |

+

|

| 21 |

+

## Model Details

|

| 22 |

+

- **Code:** https://github.com/DaiShiResearch/TransNeXt

|

| 23 |

+

- **Paper:** [TransNeXt: Robust Foveal Visual Perception for Vision Transformers](https://arxiv.org/abs/2311.17132)

|

| 24 |

+

- **Author:** [Dai Shi](https://github.com/DaiShiResearch)

|

| 25 |

+

- **Email:** daishiresearch@gmail.com

|

| 26 |

+

|

| 27 |

+

## Methods

|

| 28 |

+

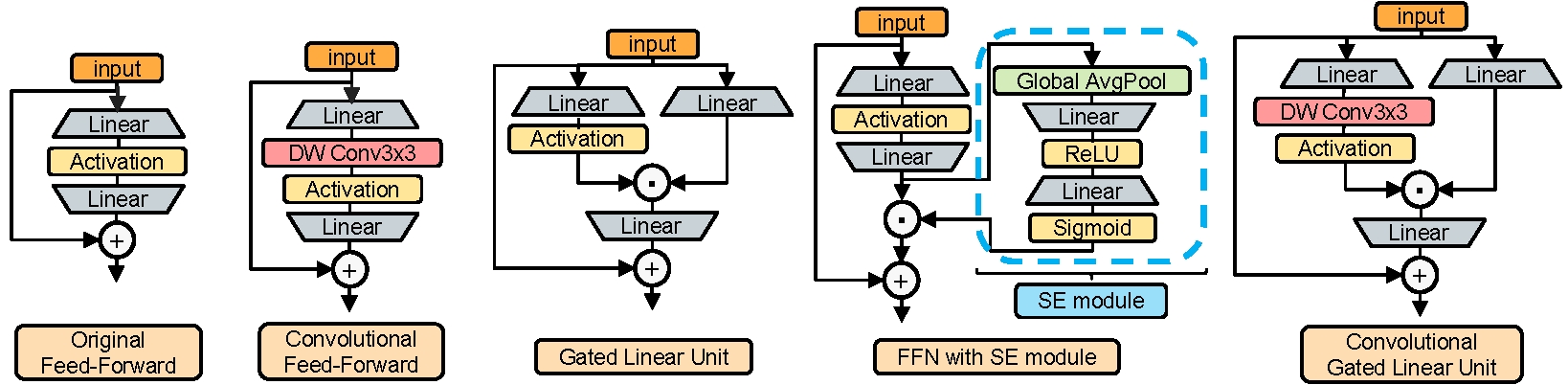

#### Pixel-focused attention (Left) & aggregated attention (Right):

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

#### Convolutional GLU (First on the right):

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

## Results

|

| 37 |

+

|

| 38 |

+

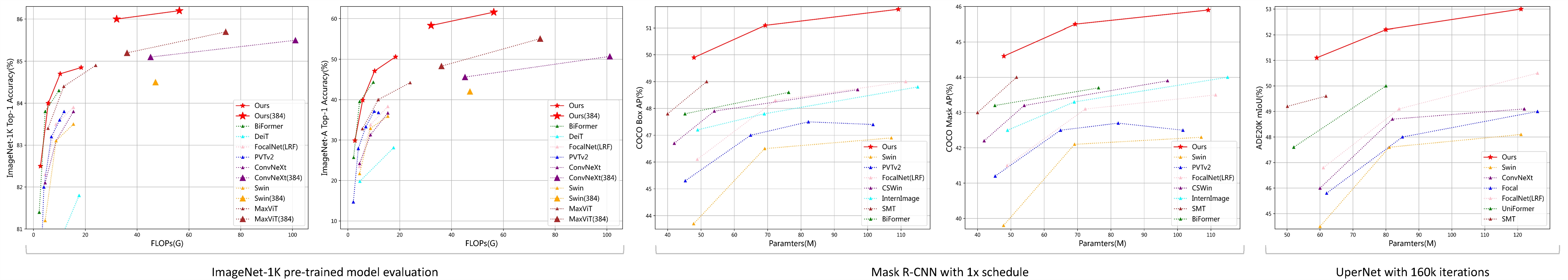

#### Image Classification, Detection and Segmentation:

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

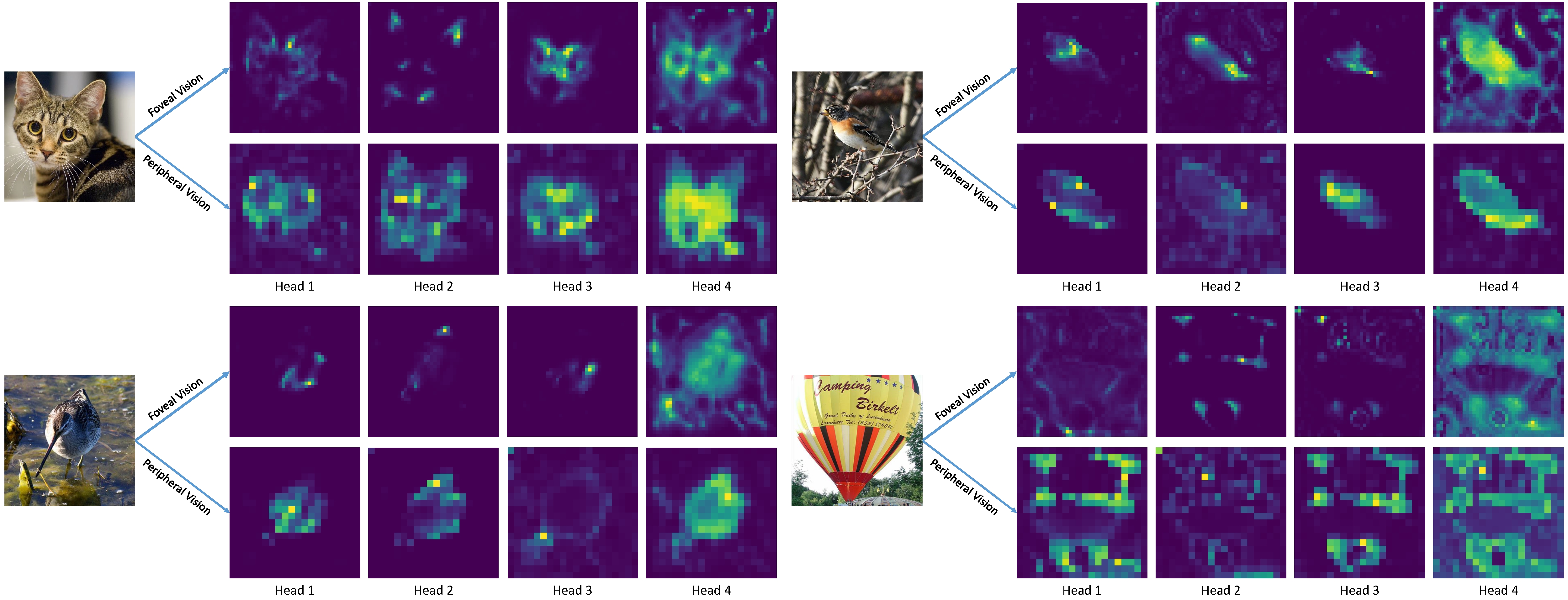

#### Attention Visualization:

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

## Model Zoo

|

| 47 |

+

|

| 48 |

+

### Image Classification

|

| 49 |

+

|

| 50 |

+

***Classification code & weights & configs & training logs are >>>[here](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/)<<<.***

|

| 51 |

+

|

| 52 |

+

**ImageNet-1K 224x224 pre-trained models:**

|

| 53 |

+

|

| 54 |

+

| Model | #Params | #FLOPs |IN-1K | IN-A | IN-C↓ |IN-R|Sketch|IN-V2|Download |Config| Log |

|

| 55 |

+

|:---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:|

|

| 56 |

+

| TransNeXt-Micro|12.8M|2.7G| 82.5 | 29.9 | 50.8|45.8|33.0|72.6|[model](https://huggingface.co/DaiShiResearch/transnext-micro-224-1k/resolve/main/transnext_micro_224_1k.pth?download=true) |[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_micro.py)|[log](https://huggingface.co/DaiShiResearch/transnext-micro-224-1k/raw/main/transnext_micro_224_1k.txt) |

|

| 57 |

+

| TransNeXt-Tiny |28.2M|5.7G| 84.0| 39.9| 46.5|49.6|37.6|73.8|[model](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_tiny.py)|[log](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/raw/main/transnext_tiny_224_1k.txt)|

|

| 58 |

+

| TransNeXt-Small |49.7M|10.3G| 84.7| 47.1| 43.9|52.5| 39.7|74.8 |[model](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_small.py)|[log](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/raw/main/transnext_small_224_1k.txt)|

|

| 59 |

+

| TransNeXt-Base |89.7M|18.4G| 84.8| 50.6|43.5|53.9|41.4|75.1| [model](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_base.py)|[log](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/raw/main/transnext_base_224_1k.txt)|

|

| 60 |

+

|

| 61 |

+

**ImageNet-1K 384x384 fine-tuned models:**

|

| 62 |

+

|

| 63 |

+

| Model | #Params | #FLOPs |IN-1K | IN-A |IN-R|Sketch|IN-V2| Download |Config|

|

| 64 |

+

|:---:|:---:|:---:|:---:| :---:|:---:|:---:| :---:|:---:|:---:|

|

| 65 |

+

| TransNeXt-Small |49.7M|32.1G| 86.0| 58.3|56.4|43.2|76.8| [model](https://huggingface.co/DaiShiResearch/transnext-small-384-1k-ft-1k/resolve/main/transnext_small_384_1k_ft_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/finetune/transnext_small_384.py)|

|

| 66 |

+

| TransNeXt-Base |89.7M|56.3G| 86.2| 61.6|57.7|44.7|77.0| [model](https://huggingface.co/DaiShiResearch/transnext-base-384-1k-ft-1k/resolve/main/transnext_base_384_1k_ft_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/finetune/transnext_base_384.py)|

|

| 67 |

+

|

| 68 |

+

**ImageNet-1K 256x256 pre-trained model fully utilizing aggregated attention at all stages:**

|

| 69 |

+

|

| 70 |

+

*(See Table 9 in Appendix D.6 of the paper for details)*

|

| 71 |

+

|

| 72 |

+

| Model |Token mixer| #Params | #FLOPs |IN-1K |Download |Config| Log |

|

| 73 |

+

|:---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:|

|

| 74 |

+

|TransNeXt-Micro|**A-A-A-A**|13.1M|3.3G| 82.6 |[model](https://huggingface.co/DaiShiResearch/transnext-micro-AAAA-256-1k/resolve/main/transnext_micro_AAAA_256_1k.pth?download=true) |[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_micro_AAAA_256.py)|[log](https://huggingface.co/DaiShiResearch/transnext-micro-AAAA-256-1k/blob/main/transnext_micro_AAAA_256_1k.txt) |

|

| 75 |

+

|

| 76 |

+

### Object Detection

|

| 77 |

+

***Object detection code & weights & configs & training logs are >>>[here](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/)<<<.***

|

| 78 |

+

|

| 79 |

+

**COCO object detection and instance segmentation results using the Mask R-CNN method:**

|

| 80 |

+

|

| 81 |

+

| Backbone | Pretrained Model| Lr Schd| box mAP | mask mAP | #Params | Download |Config| Log |

|

| 82 |

+

|:---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:|:---:|

|

| 83 |

+

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true) |1x|49.9|44.6|47.9M|[model](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-tiny-coco/resolve/main/mask_rcnn_transnext_tiny_fpn_1x_coco_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/maskrcnn/configs/mask_rcnn_transnext_tiny_fpn_1x_coco.py)|[log](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-tiny-coco/raw/main/mask_rcnn_transnext_tiny_fpn_1x_coco_in1k.log.json)|

|

| 84 |

+

| TransNeXt-Small | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true) |1x|51.1|45.5|69.3M|[model](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-small-coco/resolve/main/mask_rcnn_transnext_small_fpn_1x_coco_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/maskrcnn/configs/mask_rcnn_transnext_small_fpn_1x_coco.py)|[log](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-small-coco/raw/main/mask_rcnn_transnext_small_fpn_1x_coco_in1k.log.json)|

|

| 85 |

+

| TransNeXt-Base | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true) |1x|51.7|45.9|109.2M|[model](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-base-coco/resolve/main/mask_rcnn_transnext_base_fpn_1x_coco_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/maskrcnn/configs/mask_rcnn_transnext_base_fpn_1x_coco.py)|[log](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-base-coco/raw/main/mask_rcnn_transnext_base_fpn_1x_coco_in1k.log.json)|

|

| 86 |

+

|

| 87 |

+

**COCO object detection results using the DINO method:**

|

| 88 |

+

|

| 89 |

+

| Backbone | Pretrained Model| scales | epochs | box mAP | #Params | Download |Config| Log |

|

| 90 |

+

|:---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:|:---:|

|

| 91 |

+

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|4scale | 12|55.1|47.8M|[model](https://huggingface.co/DaiShiResearch/dino-4scale-transnext-tiny-coco/resolve/main/dino_4scale_transnext_tiny_12e_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/dino/configs/dino-4scale_transnext_tiny-12e_coco.py)|[log](https://huggingface.co/DaiShiResearch/dino-4scale-transnext-tiny-coco/raw/main/dino_4scale_transnext_tiny_12e_in1k.json)|

|

| 92 |

+

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|5scale | 12|55.7|48.1M|[model](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-tiny-coco/resolve/main/dino_5scale_transnext_tiny_12e_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/dino/configs/dino-5scale_transnext_tiny-12e_coco.py)|[log](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-tiny-coco/raw/main/dino_5scale_transnext_tiny_12e_in1k.json)|

|

| 93 |

+

| TransNeXt-Small | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true)|5scale | 12|56.6|69.6M|[model](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-small-coco/resolve/main/dino_5scale_transnext_small_12e_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/dino/configs/dino-5scale_transnext_small-12e_coco.py)|[log](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-small-coco/raw/main/dino_5scale_transnext_small_12e_in1k.json)|

|

| 94 |

+

| TransNeXt-Base | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true)|5scale | 12|57.1|110M|[model](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-base-coco/resolve/main/dino_5scale_transnext_base_12e_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/dino/configs/dino-5scale_transnext_base-12e_coco.py)|[log](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-base-coco/raw/main/dino_5scale_transnext_base_12e_in1k.json)|

|

| 95 |

+

|

| 96 |

+

### Semantic Segmentation

|

| 97 |

+

***Semantic segmentation code & weights & configs & training logs are >>>[here](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/)<<<.***

|

| 98 |

+

|

| 99 |

+

**ADE20K semantic segmentation results using the UPerNet method:**

|

| 100 |

+

|

| 101 |

+

| Backbone | Pretrained Model| Crop Size |Lr Schd| mIoU|mIoU (ms+flip)| #Params | Download |Config| Log |

|

| 102 |

+

|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|

|

| 103 |

+

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|512x512|160K|51.1|51.5/51.7|59M|[model](https://huggingface.co/DaiShiResearch/upernet-transnext-tiny-ade/resolve/main/upernet_transnext_tiny_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/upernet/configs/upernet_transnext_tiny_512x512_160k_ade20k_ss.py)|[log](https://huggingface.co/DaiShiResearch/upernet-transnext-tiny-ade/blob/main/upernet_transnext_tiny_512x512_160k_ade20k_ss.log.json)|

|

| 104 |

+

| TransNeXt-Small | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true)|512x512|160K|52.2|52.5/51.8|80M|[model](https://huggingface.co/DaiShiResearch/upernet-transnext-small-ade/resolve/main/upernet_transnext_small_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/upernet/configs/upernet_transnext_small_512x512_160k_ade20k_ss.py)|[log](https://huggingface.co/DaiShiResearch/upernet-transnext-small-ade/blob/main/upernet_transnext_small_512x512_160k_ade20k_ss.log.json)|

|

| 105 |

+

| TransNeXt-Base | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true)|512x512|160K|53.0|53.5/53.7|121M|[model](https://huggingface.co/DaiShiResearch/upernet-transnext-base-ade/resolve/main/upernet_transnext_base_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/upernet/configs/upernet_transnext_base_512x512_160k_ade20k_ss.py)|[log](https://huggingface.co/DaiShiResearch/upernet-transnext-base-ade/blob/main/upernet_transnext_base_512x512_160k_ade20k_ss.log.json)|

|

| 106 |

+

* For multi-scale evaluation (the `mIoU (ms+flip)` column), TransNeXt reports test results under two distinct scenarios: **interpolation** and **extrapolation** of relative position bias.

|

| 107 |

+

|

| 108 |

+

**ADE20K semantic segmentation results using the Mask2Former method:**

|

| 109 |

+

|

| 110 |

+

| Backbone | Pretrained Model| Crop Size |Lr Schd| mIoU| #Params | Download |Config| Log |

|

| 111 |

+

|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|

|

| 112 |

+

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|512x512|160K|53.4|47.5M|[model](https://huggingface.co/DaiShiResearch/mask2former-transnext-tiny-ade/resolve/main/mask2former_transnext_tiny_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/mask2former/configs/mask2former_transnext_tiny_160k_ade20k-512x512.py)|[log](https://huggingface.co/DaiShiResearch/mask2former-transnext-tiny-ade/raw/main/mask2former_transnext_tiny_512x512_160k_ade20k_in1k.json)|

|

| 113 |

+

| TransNeXt-Small | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true)|512x512|160K|54.1|69.0M|[model](https://huggingface.co/DaiShiResearch/mask2former-transnext-small-ade/resolve/main/mask2former_transnext_small_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/mask2former/configs/mask2former_transnext_small_160k_ade20k-512x512.py)|[log](https://huggingface.co/DaiShiResearch/mask2former-transnext-small-ade/raw/main/mask2former_transnext_small_512x512_160k_ade20k_in1k.json)|

|

| 114 |

+

| TransNeXt-Base | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true)|512x512|160K|54.7|109M|[model](https://huggingface.co/DaiShiResearch/mask2former-transnext-base-ade/resolve/main/mask2former_transnext_base_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/mask2former/configs/mask2former_transnext_base_160k_ade20k-512x512.py)|[log](https://huggingface.co/DaiShiResearch/mask2former-transnext-base-ade/raw/main/mask2former_transnext_base_512x512_160k_ade20k_in1k.json)|

|

| 115 |

+

|

| 116 |

+

## Citation

|

| 117 |

+

|

| 118 |

+

If you find our work helpful, please consider citing the following BibTeX entry. We would greatly appreciate a star for this

|

| 119 |

+

project.

|

| 120 |

+

|

| 121 |

+

@misc{shi2023transnext,

|

| 122 |

+

author = {Dai Shi},

|

| 123 |

+

title = {TransNeXt: Robust Foveal Visual Perception for Vision Transformers},

|

| 124 |

+

year = {2023},

|

| 125 |

+

eprint = {2311.17132},

|

| 126 |

+

archivePrefix={arXiv},

|

| 127 |

+

primaryClass={cs.CV}

|

| 128 |

+

}

|
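The download links in the Model Zoo tables all follow the Hugging Face `resolve/main` URL pattern. As a minimal sketch (not part of the official TransNeXt repository), the helper below splits such a link into a repo id and a filename, which is the form `huggingface_hub.hf_hub_download` takes. The name `split_hf_url` is hypothetical. The commented-out download step assumes `huggingface_hub` and `torch` are installed and that the checkpoint is a dictionary; its key layout is not described in this README, so inspect it before loading weights into a model.

```python
from urllib.parse import urlparse


def split_hf_url(url: str) -> tuple[str, str]:
    """Split a Hugging Face 'resolve' link into (repo_id, filename).

    Hypothetical helper for illustration only. It handles links of the form
    https://huggingface.co/<owner>/<repo>/resolve/<revision>/<filename>
    and ignores any query string such as '?download=true'.
    """
    parts = urlparse(url).path.strip("/").split("/")
    if len(parts) < 5 or parts[2] != "resolve":
        raise ValueError(f"not a Hugging Face resolve link: {url}")
    repo_id = "/".join(parts[:2])    # <owner>/<repo>
    filename = "/".join(parts[4:])   # everything after the revision
    return repo_id, filename


if __name__ == "__main__":
    # Link copied from the TransNeXt-Tiny row of the classification table.
    url = (
        "https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/"
        "resolve/main/transnext_tiny_224_1k.pth?download=true"
    )
    repo_id, filename = split_hf_url(url)
    print(repo_id, filename)

    # Optional download step (requires network access, huggingface_hub, torch).
    # The checkpoint layout is an assumption; check the keys before use.
    # from huggingface_hub import hf_hub_download
    # import torch
    # path = hf_hub_download(repo_id=repo_id, filename=filename)
    # checkpoint = torch.load(path, map_location="cpu")
    # print(list(checkpoint.keys())[:5])
```

Working from the repo id and filename rather than the raw URL lets the Hub client cache the file locally, so repeated runs do not download the checkpoint again.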