Update README.md

README.md

CHANGED

|

@@ -1,9 +1,31 @@

|

|

| 1 |

-

# TiBERT


| 2 |

|

| 3 |

## Citation

|

| 4 |

|

| 5 |

-

|

|

|

|

| 6 |

|

|

|

|

| 7 |

```

|

| 8 |

@INPROCEEDINGS{9945074,

|

| 9 |

author={Liu, Sisi and Deng, Junjie and Sun, Yuan and Zhao, Xiaobing},

|

|

@@ -15,5 +37,4 @@ Please cite our [paper](https://ieeexplore.ieee.org/document/9945074) if you use

|

|

| 15 |

pages={2956-2961},

|

| 16 |

keywords={Vocabulary;Text categorization;Training data;Natural language processing;Data models;Task analysis;Cybernetics;Pre-trained language model;Tibetan;Sentencepiece;TiBERT;Text classification;Question generation},

|

| 17 |

doi={10.1109/SMC53654.2022.9945074}}

|

| 18 |

-

|

| 19 |

```

|

|

|

|

| 1 |

+

# TiBERT

|

| 2 |

+

## Introduction

|

| 3 |

+

To promote the development of Tibetan natural language processing tasks, we collect large-scale training data from Tibetan websites and use SentencePiece to construct a vocabulary that covers 99.95% of the words in the corpus. We then train a Tibetan monolingual pre-trained language model, TiBERT, on this data and vocabulary. Finally, we apply TiBERT to the downstream tasks of text classification and question generation and compare it with classic models and multilingual pre-trained models. The experimental results show that TiBERT achieves the best performance.

|

| 4 |

+

|

| 5 |

+

## Contributions

|

| 6 |

+

(1) To better express the semantic information of Tibetan and reduce the out-of-vocabulary (OOV) problem, we use the unigram language model of SentencePiece to segment Tibetan words and construct a vocabulary that covers 99.95% of the words in the corpus.

|

| 7 |

+

(2) To further promote the development of downstream tasks in Tibetan natural language processing, we collect a large-scale Tibetan dataset and train a monolingual Tibetan pre-trained language model named TiBERT.

|

| 8 |

+

(3) To evaluate the performance of TiBERT, we conduct comparative experiments on two downstream tasks: text classification and question generation. The experimental results show that TiBERT is effective.
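
The vocabulary coverage figure in contribution (1) can be illustrated with a minimal sketch. It assumes coverage is measured as the share of token occurrences in the corpus that appear in the vocabulary. This README does not define the measure, so that definition, the function name `vocabulary_coverage`, and the toy data are hypothetical.

```python
from collections import Counter
from typing import Iterable, Set


def vocabulary_coverage(corpus_tokens: Iterable[str], vocabulary: Set[str]) -> float:
    """Return the fraction of token occurrences that are found in the vocabulary.

    Assumption: coverage is counted per occurrence, not per unique token.
    """
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("corpus is empty")
    covered = sum(n for token, n in counts.items() if token in vocabulary)
    return covered / total


# Toy data for illustration only; it is not drawn from the TiBERT corpus.
toy_corpus = ["a", "b", "a", "c", "a", "d", "b", "a"]
toy_vocab = {"a", "b", "c"}
coverage = vocabulary_coverage(toy_corpus, toy_vocab)
print(f"coverage = {coverage:.2%}")
```

Counting per occurrence weights frequent words more heavily than rare ones. Counting per unique word type would generally give a different number.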

|

| 9 |

+

|

| 10 |

+

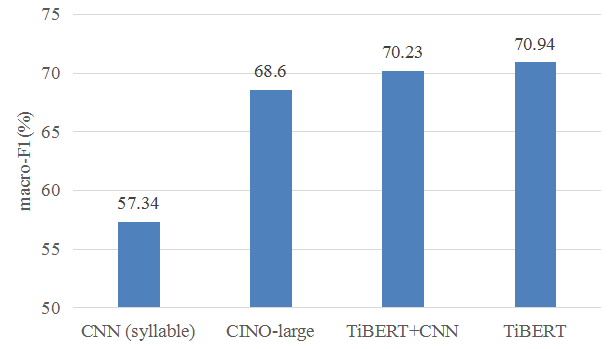

## Experimental Results

|

| 11 |

+

We conduct text classification experiments on the Tibetan News Classification Corpus (TNCC: https://github.com/FudanNLP/Tibetan-Classification), which was released by the Natural Language Processing Laboratory of Fudan University.

|

| 12 |

+

#### Performance on title classification

|

| 13 |

+

|

| 14 |

+

#### Performance on document classification

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

## Download

|

| 18 |

+

|

| 19 |

+

TiBERT Model: https://huggingface.co/CMLI-NLP/TiBERT

|

| 20 |

+

|

| 21 |

+

TiBERT Paper: https://ieeexplore.ieee.org/document/9945074
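
A possible way to load the model from the link above with the Hugging Face `transformers` library is sketched below. It assumes the `CMLI-NLP/TiBERT` repository ships configuration and tokenizer files that `AutoTokenizer` and `AutoModel` can read. This README does not confirm that, and the helper name `load_tibert` is hypothetical.

```python
MODEL_ID = "CMLI-NLP/TiBERT"  # repository name taken from the download link


def load_tibert(model_id: str = MODEL_ID):
    """Load the tokenizer and encoder for TiBERT.

    Assumption: the repository is compatible with the transformers Auto classes.
    """
    # Imported lazily so that defining this helper does not require transformers.
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModel.from_pretrained(model_id)
    return tokenizer, model


# Example usage (downloads the weights, so it needs network access and PyTorch):
#   tokenizer, model = load_tibert()
#   inputs = tokenizer("བོད་ཡིག", return_tensors="pt")
#   outputs = model(**inputs)
```

If the Auto classes cannot resolve the checkpoint, the files may need to be loaded with the specific model and tokenizer classes used by the authors.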

|

| 22 |

|

| 23 |

## Citation

|

| 24 |

|

| 25 |

+

Plain Text:

|

| 26 |

+

S. Liu, J. Deng, Y. Sun and X. Zhao, "TiBERT: Tibetan Pre-trained Language Model," 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 2022, pp. 2956-2961, doi: 10.1109/SMC53654.2022.9945074.

|

| 27 |

|

| 28 |

+

BibTeX:

|

| 29 |

```

|

| 30 |

@INPROCEEDINGS{9945074,

|

| 31 |

author={Liu, Sisi and Deng, Junjie and Sun, Yuan and Zhao, Xiaobing},

|

|

|

|

| 37 |

pages={2956-2961},

|

| 38 |

keywords={Vocabulary;Text categorization;Training data;Natural language processing;Data models;Task analysis;Cybernetics;Pre-trained language model;Tibetan;Sentencepiece;TiBERT;Text classification;Question generation},

|

| 39 |

doi={10.1109/SMC53654.2022.9945074}}

|

|

|

|

| 40 |

```

|