code

stringlengths 38

801k

| repo_path

stringlengths 6

263

|

|---|---|

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# # 09 Strain Gage

#

# This is one of the most commonly used sensor. It is used in many transducers. Its fundamental operating principle is fairly easy to understand and it will be the purpose of this lecture.

#

# A strain gage is essentially a thin wire that is wrapped on film of plastic.

# <img src="img/StrainGage.png" width="200">

# The strain gage is then mounted (glued) on the part for which the strain must be measured.

# <img src="img/Strain_gauge_2.jpg" width="200">

#

# ## Stress, Strain

# When a beam is under axial load, the axial stress, $\sigma_a$, is defined as:

# \begin{align*}

# \sigma_a = \frac{F}{A}

# \end{align*}

# with $F$ the axial load, and $A$ the cross sectional area of the beam under axial load.

#

# <img src="img/BeamUnderStrain.png" width="200">

#

# Under the load, the beam of length $L$ will extend by $dL$, giving rise to the definition of strain, $\epsilon_a$:

# \begin{align*}

# \epsilon_a = \frac{dL}{L}

# \end{align*}

# The beam will also contract laterally: the cross sectional area is reduced by $dA$. This results in a transverval strain $\epsilon_t$. The transversal and axial strains are related by the Poisson's ratio:

# \begin{align*}

# \nu = - \frac{\epsilon_t }{\epsilon_a}

# \end{align*}

# For a metal the Poission's ratio is typically $\nu = 0.3$, for an incompressible material, such as rubber (or water), $\nu = 0.5$.

#

# Within the elastic limit, the axial stress and axial strain are related through Hooke's law by the Young's modulus, $E$:

# \begin{align*}

# \sigma_a = E \epsilon_a

# \end{align*}

#

# <img src="img/ElasticRegime.png" width="200">

# ## Resistance of a wire

#

# The electrical resistance of a wire $R$ is related to its physical properties (the electrical resistiviy, $\rho$ in $\Omega$/m) and its geometry: length $L$ and cross sectional area $A$.

#

# \begin{align*}

# R = \frac{\rho L}{A}

# \end{align*}

#

# Mathematically, the change in wire dimension will result inchange in its electrical resistance. This can be derived from first principle:

# \begin{align}

# \frac{dR}{R} = \frac{d\rho}{\rho} + \frac{dL}{L} - \frac{dA}{A}

# \end{align}

# If the wire has a square cross section, then:

# \begin{align*}

# A & = L'^2 \\

# \frac{dA}{A} & = \frac{d(L'^2)}{L'^2} = \frac{2L'dL'}{L'^2} = 2 \frac{dL'}{L'}

# \end{align*}

# We have related the change in cross sectional area to the transversal strain.

# \begin{align*}

# \epsilon_t = \frac{dL'}{L'}

# \end{align*}

# Using the Poisson's ratio, we can relate then relate the change in cross-sectional area ($dA/A$) to axial strain $\epsilon_a = dL/L$.

# \begin{align*}

# \epsilon_t &= - \nu \epsilon_a \\

# \frac{dL'}{L'} &= - \nu \frac{dL}{L} \; \text{or}\\

# \frac{dA}{A} & = 2\frac{dL'}{L'} = -2 \nu \frac{dL}{L}

# \end{align*}

# Finally we can substitute express $dA/A$ in eq. for $dR/R$ and relate change in resistance to change of wire geometry, remembering that for a metal $\nu =0.3$:

# \begin{align}

# \frac{dR}{R} & = \frac{d\rho}{\rho} + \frac{dL}{L} - \frac{dA}{A} \\

# & = \frac{d\rho}{\rho} + \frac{dL}{L} - (-2\nu \frac{dL}{L}) \\

# & = \frac{d\rho}{\rho} + 1.6 \frac{dL}{L} = \frac{d\rho}{\rho} + 1.6 \epsilon_a

# \end{align}

# It also happens that for most metals, the resistivity increases with axial strain. In general, one can then related the change in resistance to axial strain by defining the strain gage factor:

# \begin{align}

# S = 1.6 + \frac{d\rho}{\rho}\cdot \frac{1}{\epsilon_a}

# \end{align}

# and finally, we have:

# \begin{align*}

# \frac{dR}{R} = S \epsilon_a

# \end{align*}

# $S$ is materials dependent and is typically equal to 2.0 for most commercially availabe strain gages. It is dimensionless.

#

# Strain gages are made of thin wire that is wraped in several loops, effectively increasing the length of the wire and therefore the sensitivity of the sensor.

#

# _Question:

#

# Explain why a longer wire is necessary to increase the sensitivity of the sensor_.

#

# Most commercially available strain gages have a nominal resistance (resistance under no load, $R_{ini}$) of 120 or 350 $\Omega$.

#

# Within the elastic regime, strain is typically within the range $10^{-6} - 10^{-3}$, in fact strain is expressed in unit of microstrain, with a 1 microstrain = $10^{-6}$. Therefore, changes in resistances will be of the same order. If one were to measure resistances, we will need a dynamic range of 120 dB, whih is typically very expensive. Instead, one uses the Wheatstone bridge to transform the change in resistance to a voltage, which is easier to measure and does not require such a large dynamic range.

# ## Wheatstone bridge:

# <img src="img/WheatstoneBridge.png" width="200">

#

# The output voltage is related to the difference in resistances in the bridge:

# \begin{align*}

# \frac{V_o}{V_s} = \frac{R_1R_3-R_2R_4}{(R_1+R_4)(R_2+R_3)}

# \end{align*}

#

# If the bridge is balanced, then $V_o = 0$, it implies: $R_1/R_2 = R_4/R_3$.

#

# In practice, finding a set of resistors that balances the bridge is challenging, and a potentiometer is used as one of the resistances to do minor adjustement to balance the bridge. If one did not do the adjustement (ie if we did not zero the bridge) then all the measurement will have an offset or bias that could be removed in a post-processing phase, as long as the bias stayed constant.

#

# If each resistance $R_i$ is made to vary slightly around its initial value, ie $R_i = R_{i,ini} + dR_i$. For simplicity, we will assume that the initial value of the four resistances are equal, ie $R_{1,ini} = R_{2,ini} = R_{3,ini} = R_{4,ini} = R_{ini}$. This implies that the bridge was initially balanced, then the output voltage would be:

#

# \begin{align*}

# \frac{V_o}{V_s} = \frac{1}{4} \left( \frac{dR_1}{R_{ini}} - \frac{dR_2}{R_{ini}} + \frac{dR_3}{R_{ini}} - \frac{dR_4}{R_{ini}} \right)

# \end{align*}

#

# Note here that the changes in $R_1$ and $R_3$ have a positive effect on $V_o$, while the changes in $R_2$ and $R_4$ have a negative effect on $V_o$. In practice, this means that is a beam is a in tension, then a strain gage mounted on the branch 1 or 3 of the Wheatstone bridge will produce a positive voltage, while a strain gage mounted on branch 2 or 4 will produce a negative voltage. One takes advantage of this to increase sensitivity to measure strain.

#

# ### Quarter bridge

# One uses only one quarter of the bridge, ie strain gages are only mounted on one branch of the bridge.

#

# \begin{align*}

# \frac{V_o}{V_s} = \pm \frac{1}{4} \epsilon_a S

# \end{align*}

# Sensitivity, $G$:

# \begin{align*}

# G = \frac{V_o}{\epsilon_a} = \pm \frac{1}{4}S V_s

# \end{align*}

#

#

# ### Half bridge

# One uses half of the bridge, ie strain gages are mounted on two branches of the bridge.

#

# \begin{align*}

# \frac{V_o}{V_s} = \pm \frac{1}{2} \epsilon_a S

# \end{align*}

#

# ### Full bridge

#

# One uses of the branches of the bridge, ie strain gages are mounted on each branch.

#

# \begin{align*}

# \frac{V_o}{V_s} = \pm \epsilon_a S

# \end{align*}

#

# Therefore, as we increase the order of bridge, the sensitivity of the instrument increases. However, one should be carefull how we mount the strain gages as to not cancel out their measurement.

# _Exercise_

#

# 1- Wheatstone bridge

#

# <img src="img/WheatstoneBridge.png" width="200">

#

# > How important is it to know \& match the resistances of the resistors you employ to create your bridge?

# > How would you do that practically?

# > Assume $R_1=120\,\Omega$, $R_2=120\,\Omega$, $R_3=120\,\Omega$, $R_4=110\,\Omega$, $V_s=5.00\,\text{V}$. What is $V_\circ$?

Vs = 5.00

Vo = (120**2-120*110)/(230*240) * Vs

print('Vo = ',Vo, ' V')

# typical range in strain a strain gauge can measure

# 1 -1000 micro-Strain

AxialStrain = 1000*10**(-6) # axial strain

StrainGageFactor = 2

R_ini = 120 # Ohm

R_1 = R_ini+R_ini*StrainGageFactor*AxialStrain

print(R_1)

Vo = (120**2-120*(R_1))/((120+R_1)*240) * Vs

print('Vo = ', Vo, ' V')

# > How important is it to know \& match the resistances of the resistors you employ to create your bridge?

# > How would you do that practically?

# > Assume $R_1= R_2 =R_3=120\,\Omega$, $R_4=120.01\,\Omega$, $V_s=5.00\,\text{V}$. What is $V_\circ$?

Vs = 5.00

Vo = (120**2-120*120.01)/(240.01*240) * Vs

print(Vo)

# 2- Strain gage 1:

#

# One measures the strain on a bridge steel beam. The modulus of elasticity is $E=190$ GPa. Only one strain gage is mounted on the bottom of the beam; the strain gage factor is $S=2.02$.

#

# > a) What kind of electronic circuit will you use? Draw a sketch of it.

#

# > b) Assume all your resistors including the unloaded strain gage are balanced and measure $120\,\Omega$, and that the strain gage is at location $R_2$. The supply voltage is $5.00\,\text{VDC}$. Will $V_\circ$ be positive or negative when a downward load is added?

# In practice, we cannot have all resistances = 120 $\Omega$. at zero load, the bridge will be unbalanced (show $V_o \neq 0$). How could we balance our bridge?

#

# Use a potentiometer to balance bridge, for the load cell, we ''zero'' the instrument.

#

# Other option to zero-out our instrument? Take data at zero-load, record the voltage, $V_{o,noload}$. Substract $V_{o,noload}$ to my data.

# > c) For a loading in which $V_\circ = -1.25\,\text{mV}$, calculate the strain $\epsilon_a$ in units of microstrain.

# \begin{align*}

# \frac{V_o}{V_s} & = - \frac{1}{4} \epsilon_a S\\

# \epsilon_a & = -\frac{4}{S} \frac{V_o}{V_s}

# \end{align*}

S = 2.02

Vo = -0.00125

Vs = 5

eps_a = -1*(4/S)*(Vo/Vs)

print(eps_a)

# > d) Calculate the axial stress (in MPa) in the beam under this load.

# > e) You now want more sensitivity in your measurement, you install a second strain gage on to

# p of the beam. Which resistor should you use for this second active strain gage?

#

# > f) With this new setup and the same applied load than previously, what should be the output voltage?

# 3- Strain Gage with Long Lead Wires

#

# <img src="img/StrainGageLongWires.png" width="360">

#

# A quarter bridge strain gage Wheatstone bridge circuit is constructed with $120\,\Omega$ resistors and a $120\,\Omega$ strain gage. For this practical application, the strain gage is located very far away form the DAQ station and the lead wires to the strain gage are $10\,\text{m}$ long and the lead wire have a resistance of $0.080\,\Omega/\text{m}$. The lead wire resistance can lead to problems since $R_{lead}$ changes with temperature.

#

# > Design a modified circuit that will cancel out the effect of the lead wires.

# ## Homework

#

|

Lectures/09_StrainGage.ipynb

|

# ---

# jupyter:

# jupytext:

# split_at_heading: true

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

#export

from fastai.basics import *

from fastai.tabular.core import *

from fastai.tabular.model import *

from fastai.tabular.data import *

#hide

from nbdev.showdoc import *

# +

#default_exp tabular.learner

# -

# # Tabular learner

#

# > The function to immediately get a `Learner` ready to train for tabular data

# The main function you probably want to use in this module is `tabular_learner`. It will automatically create a `TabulaModel` suitable for your data and infer the irght loss function. See the [tabular tutorial](http://docs.fast.ai/tutorial.tabular) for an example of use in context.

# ## Main functions

#export

@log_args(but_as=Learner.__init__)

class TabularLearner(Learner):

"`Learner` for tabular data"

def predict(self, row):

tst_to = self.dls.valid_ds.new(pd.DataFrame(row).T)

tst_to.process()

tst_to.conts = tst_to.conts.astype(np.float32)

dl = self.dls.valid.new(tst_to)

inp,preds,_,dec_preds = self.get_preds(dl=dl, with_input=True, with_decoded=True)

i = getattr(self.dls, 'n_inp', -1)

b = (*tuplify(inp),*tuplify(dec_preds))

full_dec = self.dls.decode((*tuplify(inp),*tuplify(dec_preds)))

return full_dec,dec_preds[0],preds[0]

show_doc(TabularLearner, title_level=3)

# It works exactly as a normal `Learner`, the only difference is that it implements a `predict` method specific to work on a row of data.

#export

@log_args(to_return=True, but_as=Learner.__init__)

@delegates(Learner.__init__)

def tabular_learner(dls, layers=None, emb_szs=None, config=None, n_out=None, y_range=None, **kwargs):

"Get a `Learner` using `dls`, with `metrics`, including a `TabularModel` created using the remaining params."

if config is None: config = tabular_config()

if layers is None: layers = [200,100]

to = dls.train_ds

emb_szs = get_emb_sz(dls.train_ds, {} if emb_szs is None else emb_szs)

if n_out is None: n_out = get_c(dls)

assert n_out, "`n_out` is not defined, and could not be infered from data, set `dls.c` or pass `n_out`"

if y_range is None and 'y_range' in config: y_range = config.pop('y_range')

model = TabularModel(emb_szs, len(dls.cont_names), n_out, layers, y_range=y_range, **config)

return TabularLearner(dls, model, **kwargs)

# If your data was built with fastai, you probably won't need to pass anything to `emb_szs` unless you want to change the default of the library (produced by `get_emb_sz`), same for `n_out` which should be automatically inferred. `layers` will default to `[200,100]` and is passed to `TabularModel` along with the `config`.

#

# Use `tabular_config` to create a `config` and cusotmize the model used. There is just easy access to `y_range` because this argument is often used.

#

# All the other arguments are passed to `Learner`.

path = untar_data(URLs.ADULT_SAMPLE)

df = pd.read_csv(path/'adult.csv')

cat_names = ['workclass', 'education', 'marital-status', 'occupation', 'relationship', 'race']

cont_names = ['age', 'fnlwgt', 'education-num']

procs = [Categorify, FillMissing, Normalize]

dls = TabularDataLoaders.from_df(df, path, procs=procs, cat_names=cat_names, cont_names=cont_names,

y_names="salary", valid_idx=list(range(800,1000)), bs=64)

learn = tabular_learner(dls)

#hide

tst = learn.predict(df.iloc[0])

# +

#hide

#test y_range is passed

learn = tabular_learner(dls, y_range=(0,32))

assert isinstance(learn.model.layers[-1], SigmoidRange)

test_eq(learn.model.layers[-1].low, 0)

test_eq(learn.model.layers[-1].high, 32)

learn = tabular_learner(dls, config = tabular_config(y_range=(0,32)))

assert isinstance(learn.model.layers[-1], SigmoidRange)

test_eq(learn.model.layers[-1].low, 0)

test_eq(learn.model.layers[-1].high, 32)

# -

#export

@typedispatch

def show_results(x:Tabular, y:Tabular, samples, outs, ctxs=None, max_n=10, **kwargs):

df = x.all_cols[:max_n]

for n in x.y_names: df[n+'_pred'] = y[n][:max_n].values

display_df(df)

# ## Export -

#hide

from nbdev.export import notebook2script

notebook2script()

|

nbs/43_tabular.learner.ipynb

|

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# <table class="ee-notebook-buttons" align="left">

# <td><a target="_blank" href="https://github.com/giswqs/earthengine-py-notebooks/tree/master/Algorithms/landsat_radiance.ipynb"><img width=32px src="https://www.tensorflow.org/images/GitHub-Mark-32px.png" /> View source on GitHub</a></td>

# <td><a target="_blank" href="https://nbviewer.jupyter.org/github/giswqs/earthengine-py-notebooks/blob/master/Algorithms/landsat_radiance.ipynb"><img width=26px src="https://upload.wikimedia.org/wikipedia/commons/thumb/3/38/Jupyter_logo.svg/883px-Jupyter_logo.svg.png" />Notebook Viewer</a></td>

# <td><a target="_blank" href="https://colab.research.google.com/github/giswqs/earthengine-py-notebooks/blob/master/Algorithms/landsat_radiance.ipynb"><img src="https://www.tensorflow.org/images/colab_logo_32px.png" /> Run in Google Colab</a></td>

# </table>

# ## Install Earth Engine API and geemap

# Install the [Earth Engine Python API](https://developers.google.com/earth-engine/python_install) and [geemap](https://geemap.org). The **geemap** Python package is built upon the [ipyleaflet](https://github.com/jupyter-widgets/ipyleaflet) and [folium](https://github.com/python-visualization/folium) packages and implements several methods for interacting with Earth Engine data layers, such as `Map.addLayer()`, `Map.setCenter()`, and `Map.centerObject()`.

# The following script checks if the geemap package has been installed. If not, it will install geemap, which automatically installs its [dependencies](https://github.com/giswqs/geemap#dependencies), including earthengine-api, folium, and ipyleaflet.

# +

# Installs geemap package

import subprocess

try:

import geemap

except ImportError:

print('Installing geemap ...')

subprocess.check_call(["python", '-m', 'pip', 'install', 'geemap'])

# -

import ee

import geemap

# ## Create an interactive map

# The default basemap is `Google Maps`. [Additional basemaps](https://github.com/giswqs/geemap/blob/master/geemap/basemaps.py) can be added using the `Map.add_basemap()` function.

Map = geemap.Map(center=[40,-100], zoom=4)

Map

# ## Add Earth Engine Python script

# +

# Add Earth Engine dataset

# Load a raw Landsat scene and display it.

raw = ee.Image('LANDSAT/LC08/C01/T1/LC08_044034_20140318')

Map.centerObject(raw, 10)

Map.addLayer(raw, {'bands': ['B4', 'B3', 'B2'], 'min': 6000, 'max': 12000}, 'raw')

# Convert the raw data to radiance.

radiance = ee.Algorithms.Landsat.calibratedRadiance(raw)

Map.addLayer(radiance, {'bands': ['B4', 'B3', 'B2'], 'max': 90}, 'radiance')

# Convert the raw data to top-of-atmosphere reflectance.

toa = ee.Algorithms.Landsat.TOA(raw)

Map.addLayer(toa, {'bands': ['B4', 'B3', 'B2'], 'max': 0.2}, 'toa reflectance')

# -

# ## Display Earth Engine data layers

Map.addLayerControl() # This line is not needed for ipyleaflet-based Map.

Map

|

Algorithms/landsat_radiance.ipynb

|

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# +

# Copyright 2020 NVIDIA Corporation. All Rights Reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ==============================================================================

# -

# <img src="http://developer.download.nvidia.com/compute/machine-learning/frameworks/nvidia_logo.png" style="width: 90px; float: right;">

#

# # Object Detection with TRTorch (SSD)

# ---

# ## Overview

#

#

# In PyTorch 1.0, TorchScript was introduced as a method to separate your PyTorch model from Python, make it portable and optimizable.

#

# TRTorch is a compiler that uses TensorRT (NVIDIA's Deep Learning Optimization SDK and Runtime) to optimize TorchScript code. It compiles standard TorchScript modules into ones that internally run with TensorRT optimizations.

#

# TensorRT can take models from any major framework and specifically tune them to perform better on specific target hardware in the NVIDIA family, and TRTorch enables us to continue to remain in the PyTorch ecosystem whilst doing so. This allows us to leverage the great features in PyTorch, including module composability, its flexible tensor implementation, data loaders and more. TRTorch is available to use with both PyTorch and LibTorch.

#

# To get more background information on this, we suggest the **lenet-getting-started** notebook as a primer for getting started with TRTorch.

# ### Learning objectives

#

# This notebook demonstrates the steps for compiling a TorchScript module with TRTorch on a pretrained SSD network, and running it to test the speedup obtained.

#

# ## Contents

# 1. [Requirements](#1)

# 2. [SSD Overview](#2)

# 3. [Creating TorchScript modules](#3)

# 4. [Compiling with TRTorch](#4)

# 5. [Running Inference](#5)

# 6. [Measuring Speedup](#6)

# 7. [Conclusion](#7)

# ---

# <a id="1"></a>

# ## 1. Requirements

#

# Follow the steps in `notebooks/README` to prepare a Docker container, within which you can run this demo notebook.

#

# In addition to that, run the following cell to obtain additional libraries specific to this demo.

# Known working versions

# !pip install numpy==1.21.2 scipy==1.5.2 Pillow==6.2.0 scikit-image==0.17.2 matplotlib==3.3.0

# ---

# <a id="2"></a>

# ## 2. SSD

#

# ### Single Shot MultiBox Detector model for object detection

#

# _ | _

# - | -

#  |

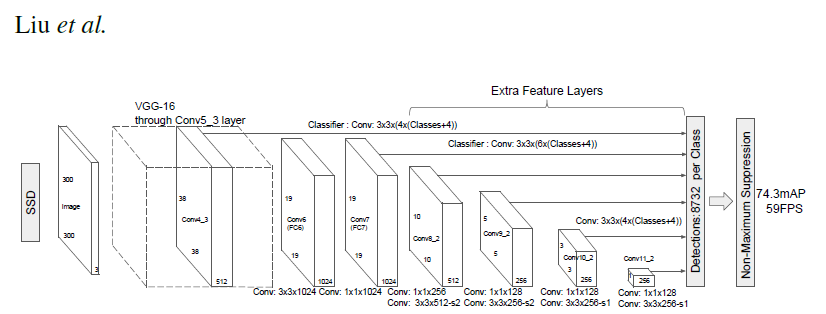

# PyTorch has a model repository called the PyTorch Hub, which is a source for high quality implementations of common models. We can get our SSD model pretrained on [COCO](https://cocodataset.org/#home) from there.

#

# ### Model Description

#

# This SSD300 model is based on the

# [SSD: Single Shot MultiBox Detector](https://arxiv.org/abs/1512.02325) paper, which

# describes SSD as “a method for detecting objects in images using a single deep neural network".

# The input size is fixed to 300x300.

#

# The main difference between this model and the one described in the paper is in the backbone.

# Specifically, the VGG model is obsolete and is replaced by the ResNet-50 model.

#

# From the

# [Speed/accuracy trade-offs for modern convolutional object detectors](https://arxiv.org/abs/1611.10012)

# paper, the following enhancements were made to the backbone:

# * The conv5_x, avgpool, fc and softmax layers were removed from the original classification model.

# * All strides in conv4_x are set to 1x1.

#

# The backbone is followed by 5 additional convolutional layers.

# In addition to the convolutional layers, we attached 6 detection heads:

# * The first detection head is attached to the last conv4_x layer.

# * The other five detection heads are attached to the corresponding 5 additional layers.

#

# Detector heads are similar to the ones referenced in the paper, however,

# they are enhanced by additional BatchNorm layers after each convolution.

#

# More information about this SSD model is available at Nvidia's "DeepLearningExamples" Github [here](https://github.com/NVIDIA/DeepLearningExamples/tree/master/PyTorch/Detection/SSD).

import torch

torch.hub._validate_not_a_forked_repo=lambda a,b,c: True

# List of available models in PyTorch Hub from Nvidia/DeepLearningExamples

torch.hub.list('NVIDIA/DeepLearningExamples:torchhub')

# load SSD model pretrained on COCO from Torch Hub

precision = 'fp32'

ssd300 = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_ssd', model_math=precision);

# Setting `precision="fp16"` will load a checkpoint trained with mixed precision

# into architecture enabling execution on Tensor Cores. Handling mixed precision data requires the Apex library.

# ### Sample Inference

# We can now run inference on the model. This is demonstrated below using sample images from the COCO 2017 Validation set.

# +

# Sample images from the COCO validation set

uris = [

'http://images.cocodataset.org/val2017/000000397133.jpg',

'http://images.cocodataset.org/val2017/000000037777.jpg',

'http://images.cocodataset.org/val2017/000000252219.jpg'

]

# For convenient and comprehensive formatting of input and output of the model, load a set of utility methods.

utils = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_ssd_processing_utils')

# Format images to comply with the network input

inputs = [utils.prepare_input(uri) for uri in uris]

tensor = utils.prepare_tensor(inputs, False)

# The model was trained on COCO dataset, which we need to access in order to

# translate class IDs into object names.

classes_to_labels = utils.get_coco_object_dictionary()

# +

# Next, we run object detection

model = ssd300.eval().to("cuda")

detections_batch = model(tensor)

# By default, raw output from SSD network per input image contains 8732 boxes with

# localization and class probability distribution.

# Let’s filter this output to only get reasonable detections (confidence>40%) in a more comprehensive format.

results_per_input = utils.decode_results(detections_batch)

best_results_per_input = [utils.pick_best(results, 0.40) for results in results_per_input]

# -

# ### Visualize results

# +

from matplotlib import pyplot as plt

import matplotlib.patches as patches

# The utility plots the images and predicted bounding boxes (with confidence scores).

def plot_results(best_results):

for image_idx in range(len(best_results)):

fig, ax = plt.subplots(1)

# Show original, denormalized image...

image = inputs[image_idx] / 2 + 0.5

ax.imshow(image)

# ...with detections

bboxes, classes, confidences = best_results[image_idx]

for idx in range(len(bboxes)):

left, bot, right, top = bboxes[idx]

x, y, w, h = [val * 300 for val in [left, bot, right - left, top - bot]]

rect = patches.Rectangle((x, y), w, h, linewidth=1, edgecolor='r', facecolor='none')

ax.add_patch(rect)

ax.text(x, y, "{} {:.0f}%".format(classes_to_labels[classes[idx] - 1], confidences[idx]*100), bbox=dict(facecolor='white', alpha=0.5))

plt.show()

# -

# Visualize results without TRTorch/TensorRT

plot_results(best_results_per_input)

# ### Benchmark utility

# +

import time

import numpy as np

import torch.backends.cudnn as cudnn

cudnn.benchmark = True

# Helper function to benchmark the model

def benchmark(model, input_shape=(1024, 1, 32, 32), dtype='fp32', nwarmup=50, nruns=1000):

input_data = torch.randn(input_shape)

input_data = input_data.to("cuda")

if dtype=='fp16':

input_data = input_data.half()

print("Warm up ...")

with torch.no_grad():

for _ in range(nwarmup):

features = model(input_data)

torch.cuda.synchronize()

print("Start timing ...")

timings = []

with torch.no_grad():

for i in range(1, nruns+1):

start_time = time.time()

pred_loc, pred_label = model(input_data)

torch.cuda.synchronize()

end_time = time.time()

timings.append(end_time - start_time)

if i%10==0:

print('Iteration %d/%d, avg batch time %.2f ms'%(i, nruns, np.mean(timings)*1000))

print("Input shape:", input_data.size())

print("Output location prediction size:", pred_loc.size())

print("Output label prediction size:", pred_label.size())

print('Average batch time: %.2f ms'%(np.mean(timings)*1000))

# -

# We check how well the model performs **before** we use TRTorch/TensorRT

# Model benchmark without TRTorch/TensorRT

model = ssd300.eval().to("cuda")

benchmark(model, input_shape=(128, 3, 300, 300), nruns=100)

# ---

# <a id="3"></a>

# ## 3. Creating TorchScript modules

# To compile with TRTorch, the model must first be in **TorchScript**. TorchScript is a programming language included in PyTorch which removes the Python dependency normal PyTorch models have. This conversion is done via a JIT compiler which given a PyTorch Module will generate an equivalent TorchScript Module. There are two paths that can be used to generate TorchScript: **Tracing** and **Scripting**. <br>

# - Tracing follows execution of PyTorch generating ops in TorchScript corresponding to what it sees. <br>

# - Scripting does an analysis of the Python code and generates TorchScript, this allows the resulting graph to include control flow which tracing cannot do.

#

# Tracing however due to its simplicity is more likely to compile successfully with TRTorch (though both systems are supported).

model = ssd300.eval().to("cuda")

traced_model = torch.jit.trace(model, [torch.randn((1,3,300,300)).to("cuda")])

# If required, we can also save this model and use it independently of Python.

# This is just an example, and not required for the purposes of this demo

torch.jit.save(traced_model, "ssd_300_traced.jit.pt")

# Obtain the average time taken by a batch of input with Torchscript compiled modules

benchmark(traced_model, input_shape=(128, 3, 300, 300), nruns=100)

# ---

# <a id="4"></a>

# ## 4. Compiling with TRTorch

# TorchScript modules behave just like normal PyTorch modules and are intercompatible. From TorchScript we can now compile a TensorRT based module. This module will still be implemented in TorchScript but all the computation will be done in TensorRT.

# +

import trtorch

# The compiled module will have precision as specified by "op_precision".

# Here, it will have FP16 precision.

trt_model = trtorch.compile(traced_model, {

"inputs": [trtorch.Input((3, 3, 300, 300))],

"enabled_precisions": {torch.float, torch.half}, # Run with FP16

"workspace_size": 1 << 20

})

# -

# ---

# <a id="5"></a>

# ## 5. Running Inference

# Next, we run object detection

# +

# using a TRTorch module is exactly the same as how we usually do inference in PyTorch i.e. model(inputs)

detections_batch = trt_model(tensor.to(torch.half)) # convert the input to half precision

# By default, raw output from SSD network per input image contains 8732 boxes with

# localization and class probability distribution.

# Let’s filter this output to only get reasonable detections (confidence>40%) in a more comprehensive format.

results_per_input = utils.decode_results(detections_batch)

best_results_per_input_trt = [utils.pick_best(results, 0.40) for results in results_per_input]

# -

# Now, let's visualize our predictions!

#

# Visualize results with TRTorch/TensorRT

plot_results(best_results_per_input_trt)

# We get similar results as before!

# ---

# ## 6. Measuring Speedup

# We can run the benchmark function again to see the speedup gained! Compare this result with the same batch-size of input in the case without TRTorch/TensorRT above.

# +

batch_size = 128

# Recompiling with batch_size we use for evaluating performance

trt_model = trtorch.compile(traced_model, {

"inputs": [trtorch.Input((batch_size, 3, 300, 300))],

"enabled_precisions": {torch.float, torch.half}, # Run with FP16

"workspace_size": 1 << 20

})

benchmark(trt_model, input_shape=(batch_size, 3, 300, 300), nruns=100, dtype="fp16")

# -

# ---

# ## 7. Conclusion

#

# In this notebook, we have walked through the complete process of compiling a TorchScript SSD300 model with TRTorch, and tested the performance impact of the optimization. We find that using the TRTorch compiled model, we gain significant speedup in inference without any noticeable drop in performance!

# ### Details

# For detailed information on model input and output,

# training recipies, inference and performance visit:

# [github](https://github.com/NVIDIA/DeepLearningExamples/tree/master/PyTorch/Detection/SSD)

# and/or [NGC](https://ngc.nvidia.com/catalog/model-scripts/nvidia:ssd_for_pytorch)

#

# ### References

#

# - [SSD: Single Shot MultiBox Detector](https://arxiv.org/abs/1512.02325) paper

# - [Speed/accuracy trade-offs for modern convolutional object detectors](https://arxiv.org/abs/1611.10012) paper

# - [SSD on NGC](https://ngc.nvidia.com/catalog/model-scripts/nvidia:ssd_for_pytorch)

# - [SSD on github](https://github.com/NVIDIA/DeepLearningExamples/tree/master/PyTorch/Detection/SSD)

|

notebooks/ssd-object-detection-demo.ipynb

|

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# # Setting up

# +

# Dependencies

# %matplotlib inline

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

import seaborn as sns

from scipy.stats import sem

plt.style.use('seaborn')

# Hide warning messages in notebook

# import warnings

# warnings.filterwarnings('ignore')

# -

# # Importing 4 csv files and merging them into one

# Import datasets

demo_2016 = pd.read_csv("assets/data/2016_demo_data.csv")

demo_2017 = pd.read_csv("assets/data/2017_demo_data.csv")

demo_2018 = pd.read_csv("assets/data/2018_demo_data.csv")

demo_2019 = pd.read_csv("assets/data/2019_demo_data.csv")

# Append datasets

final_df = demo_2016.append(demo_2017, ignore_index=True)

final_df = final_df.append(demo_2018, ignore_index=True)

final_df = final_df.append(demo_2019, ignore_index=True)

final_df

# +

# Export the dataframe (do this Only Once!)

# final_df.to_csv("assets/data/final_demo_data.csv", index=False)

# -

# # Importing the final csv file

final_demo = pd.read_csv("assets/data/final_demo_data.csv")

final_demo.head()

# # Checking the dataset

# Type of variables

final_demo.dtypes

# Any NaN in the dataset

final_demo.isnull().sum()

# Any uplicates (or similarities, mis-spellings) in ethnicity and city

ethnicity = final_demo["ethnicity"].unique()

city = final_demo["city"].unique()

# # Cleaning the dataset

# Change the type of "student_id" to string

final_demo["student_id"] = final_demo["student_id"].astype(str)

# Drop NaN in the dataset

final_demo.dropna(inplace=True)

# Replace ethnicity categories

final_demo.replace({"Asian Indian": "General Asian",

"Cambodian": "General Asian",

"Chinese": "General Asian",

"Filipino": "General Asian",

"Hmong": "General Asian",

"Japanese": "General Asian",

"Korean": "General Asian",

"Laotian": "General Asian",

"Other Asian": "General Asian",

"Vietnamese": "General Asian",

"Samoan": "Pacific Islander",

"Other Pacific Islander": "Pacific Islander",

"Guamanian": "Pacific Islander",

"Tahitian": "Pacific Islander",

"Laotian": "Pacific Islander",

"Hawaiian": "Pacific Islander"}, inplace=True)

# Replace city categories

final_demo.replace({"So San Francisco": "South SF",

"South San Francisco": "South SF",

"So. San Francisco": "South SF",

"So San Francisco ": "South SF",

"So San Francisco": "South SF",

"So Sn Francisco": "South SF",

"So SanFrancisco": "South SF",

"So San Francisco": "South SF",

"So San Francico": "South SF",

"S San Francisco": "South SF",

"So San Fran": "South SF",

"south San Francisco": "South SF",

"South San Francisco ": "South SF",

"South San Francico": "South SF",

"So San Francsico": "South SF",

"So San Franicsco": "South SF",

"Concord ": "Concord",

"Burlingame ": "Burlingame",

"Pacifica ": "Pacifica",

"Daly cITY": "Daly City",

"Daly City ": "Daly City",

"Daly City ": "Daly City",

"Daly Citiy": "Daly City",

"Daly Ciy": "Daly City",

"Daly CIty": "Daly City",

"San Mateo ": "San Mateo"

}, inplace=True)

# # Creating yearly enrollment group

# Year subgroups

enroll2016 = final_demo.loc[final_demo["year"]==2016]

enroll2017 = final_demo.loc[final_demo["year"]==2017]

enroll2018 = final_demo.loc[final_demo["year"]==2018]

enroll2019 = final_demo.loc[final_demo["year"]==2019]

# ## + Creating subgroups - Ethnicity

# +

### YEAR 2016 ###

# Calcaulte number of enrollment based on ethnicity

enrollRace2016 = pd.DataFrame(enroll2016.groupby(["ethnicity"])["student_id"].count())

# Add year column

enrollRace2016["year"] = 2016

# Rename column name

enrollRace2016.rename({"student_id": "enrollment"}, axis=1, inplace=True)

# +

### YEAR 2017 ###

# Calcaulte number of enrollment based on ethnicity

enrollRace2017 = pd.DataFrame(enroll2017.groupby(["ethnicity"])["student_id"].count())

# Add year column

enrollRace2017["year"] = 2017

# Rename column name

enrollRace2017.rename({"student_id": "enrollment"}, axis=1, inplace=True)

# +

### YEAR 2018 ###

# Calcaulte number of enrollment based on ethnicity

enrollRace2018 = pd.DataFrame(enroll2018.groupby(["ethnicity"])["student_id"].count())

# Add year column

enrollRace2018["year"] = 2018

# Rename column name

enrollRace2018.rename({"student_id": "enrollment"}, axis=1, inplace=True)

# +

### YEAR 2019 ###

# Calcaulte number of enrollment based on ethnicity

enrollRace2019 = pd.DataFrame(enroll2019.groupby(["ethnicity"])["student_id"].count())

# Add year column

enrollRace2019["year"] = 2019

# Rename column name

enrollRace2019.rename({"student_id": "enrollment"}, axis=1, inplace=True)

# -

# Append 4 dataframes into one

enrollRace = enrollRace2016.append(enrollRace2017)

enrollRace = enrollRace.append(enrollRace2018)

enrollRace = enrollRace.append(enrollRace2019)

# Export to csv file

enrollRace.to_csv("assets/data/race_data.csv", index=True)

# ## + Creating subgroups - City

# +

### YEAR 2016 ###

# Calcaulte number of enrollment based on city

enrollCity2016 = pd.DataFrame(enroll2016.groupby(["city"])["student_id"].count())

# Add year column

enrollCity2016["year"] = 2016

# Rename column name

enrollCity2016.rename({"student_id": "enrollment"}, axis=1, inplace=True)

# -

enrollCity2016

|

jupyter/ethnicity.ipynb

|

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# # Disambiguation

# +

import pprint

import subprocess

import sys

sys.path.append('../')

import numpy as np

import scipy as sp

import matplotlib.pyplot as plt

import matplotlib

import matplotlib.gridspec as gridspec

from mpl_toolkits.axes_grid1 import make_axes_locatable

import seaborn as sns

# %matplotlib inline

plt.rcParams['figure.figsize'] = (12.9, 12)

np.set_printoptions(suppress=True, precision=5)

sns.set(font_scale=3.5)

from network import Protocol, NetworkManager, BCPNNPerfect, TimedInput

from connectivity_functions import create_orthogonal_canonical_representation, build_network_representation

from connectivity_functions import get_weights_from_probabilities, get_probabilities_from_network_representation

from analysis_functions import calculate_recall_time_quantities, get_weights

from analysis_functions import get_weights_collections

from plotting_functions import plot_network_activity_angle, plot_weight_matrix

from analysis_functions import calculate_angle_from_history, calculate_winning_pattern_from_distances

from analysis_functions import calculate_patterns_timings

# -

epsilon = 10e-20

# +

def produce_overlaped_sequences(minicolumns, hypercolumns, n_patterns, s, r, mixed_start=False, contiguous=True):

n_r = int(r * n_patterns/2)

n_s = int(s * hypercolumns)

n_size = int(n_patterns / 2)

matrix = create_orthogonal_canonical_representation(minicolumns, hypercolumns)[:n_patterns]

sequence1 = matrix[:n_size]

sequence2 = matrix[n_size:]

if mixed_start:

start_index = 0

end_index = n_r

else:

start_index = max(int(0.5 * (n_size - n_r)), 0)

end_index = min(start_index + n_r, n_size)

for index in range(start_index, end_index):

if contiguous:

sequence2[index, :n_s] = sequence1[index, :n_s]

else:

sequence2[index, ...] = sequence1[index, ...]

sequence2[index, n_s:] = n_patterns + index

if False:

print(n_r)

print(n_size)

print(start_index)

print(end_index)

return sequence1, sequence2

def create_weights_from_two_sequences(nn, dt, n_patterns, s, r, mixed_start, contiguous,

training_time, inter_pulse_interval, inter_sequence_interval,

epochs, resting_time):

filtered = True

minicolumns = nn.minicolumns

hypercolumns = nn.hypercolumns

tau_z_pre_ampa = nn.tau_z_pre_ampa

tau_z_post_ampa = nn.tau_z_post_ampa

seq1, seq2 = produce_overlaped_sequences(minicolumns, hypercolumns, n_patterns, s, r,

mixed_start=mixed_start, contiguous=contiguous)

nr1 = build_network_representation(seq1, minicolumns, hypercolumns)

nr2 = build_network_representation(seq2, minicolumns, hypercolumns)

# Get the first

timed_input = TimedInput(nr1, dt, training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_pulse_interval, epochs=epochs,

resting_time=resting_time)

S = timed_input.build_timed_input()

z_pre = timed_input.build_filtered_input_pre(tau_z_pre_ampa)

z_post = timed_input.build_filtered_input_post(tau_z_post_ampa)

pi1, pj1, P1 = timed_input.calculate_probabilities_from_time_signal(filtered=filtered)

w_timed1 = get_weights_from_probabilities(pi1, pj1, P1, minicolumns, hypercolumns)

t1 = timed_input.T_total

# Get the second

timed_input = TimedInput(nr2, dt, training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_pulse_interval, epochs=epochs,

resting_time=resting_time)

S = timed_input.build_timed_input()

z_pre = timed_input.build_filtered_input_pre(tau_z_pre_ampa)

z_post = timed_input.build_filtered_input_post(tau_z_post_ampa)

t2 = timed_input.T_total

pi2, pj2, P2 = timed_input.calculate_probabilities_from_time_signal(filtered=filtered)

w_timed2 = get_weights_from_probabilities(pi2, pj2, P2, minicolumns, hypercolumns)

t_total = t1 + t2

# Mix

pi_total = (t1 / t_total) * pi1 + ((t_total - t1)/ t_total) * pi2

pj_total = (t1 / t_total) * pj1 + ((t_total - t1)/ t_total) * pj2

P_total = (t1 / t_total) * P1 + ((t_total - t1)/ t_total) * P2

w_total, beta = get_weights_from_probabilities(pi_total, pj_total, P_total, minicolumns, hypercolumns)

return seq1, seq2, nr1, nr2, w_total, beta

def calculate_recall_success_nr(manager, nr, T_recall, T_cue, debug=False, remove=0.020):

n_seq = nr.shape[0]

I_cue = nr[0]

# Do the recall

manager.run_network_recall(T_recall=T_recall, I_cue=I_cue, T_cue=T_cue,

reset=True, empty_history=True)

distances = calculate_angle_from_history(manager)

winning = calculate_winning_pattern_from_distances(distances)

timings = calculate_patterns_timings(winning, manager.dt, remove=remove)

pattern_sequence = [x[0] for x in timings]

# Calculate whether it was succesfull

success = 1.0

for index, pattern_index in enumerate(pattern_sequence[:n_seq]):

pattern = manager.patterns_dic[pattern_index]

goal_pattern = nr[index]

if not np.array_equal(pattern, goal_pattern):

success = 0.0

break

if debug:

return success, timings, pattern_sequence

else:

return success

# -

# ## An example

# +

always_learning = False

strict_maximum = True

perfect = False

z_transfer = False

k_perfect = True

diagonal_zero = False

normalized_currents = True

g_w_ampa = 2.0

g_w = 0.0

g_a = 10.0

tau_a = 0.250

G = 1.0

sigma = 0.0

tau_m = 0.020

tau_z_pre_ampa = 0.025

tau_z_post_ampa = 0.025

tau_p = 10.0

hypercolumns = 1

minicolumns = 20

n_patterns = 20

# Manager properties

dt = 0.001

values_to_save = ['o', 'i_ampa', 'a']

# Protocol

training_time = 0.100

inter_sequence_interval = 0.0

inter_pulse_interval = 0.0

resting_time = 2.0

epochs = 1

# Recall

T_recall = 1.0

T_cue = 0.020

# Patterns parameters

nn = BCPNNPerfect(hypercolumns, minicolumns, g_w_ampa=g_w_ampa, g_w=g_w, g_a=g_a, tau_a=tau_a, tau_m=tau_m,

sigma=sigma, G=G, tau_z_pre_ampa=tau_z_pre_ampa, tau_z_post_ampa=tau_z_post_ampa, tau_p=tau_p,

z_transfer=z_transfer, diagonal_zero=diagonal_zero, strict_maximum=strict_maximum,

perfect=perfect, k_perfect=k_perfect, always_learning=always_learning,

normalized_currents=normalized_currents)

# Build the manager

manager = NetworkManager(nn=nn, dt=dt, values_to_save=values_to_save)

# Build the protocol for training

mixed_start = False

contiguous = True

s = 1.0

r = 0.3

matrix = create_orthogonal_canonical_representation(minicolumns, hypercolumns)

aux = create_weights_from_two_sequences(nn, dt, n_patterns, s, r, mixed_start, contiguous,

training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_sequence_interval, epochs=epochs,

resting_time=resting_time)

seq1, seq2, nr1, nr2, w_total, beta = aux

nr = np.concatenate((nr1, nr2))

aux, indexes = np.unique(nr, axis=0, return_index=True)

patterns_dic = {index:pattern for (index, pattern) in zip(indexes, aux)}

nn.w_ampa = w_total

manager.patterns_dic = patterns_dic

s = calculate_recall_success_nr(manager, nr1, T_recall, T_cue)

print('s1=', s)

plot_network_activity_angle(manager)

s = calculate_recall_success_nr(manager, nr2, T_recall, T_cue)

print('s2=', s)

plot_network_activity_angle(manager)

# -

plot_weight_matrix(nn, ampa=True)

# ## More systematic

# +

# %%time

always_learning = False

strict_maximum = True

perfect = False

z_transfer = False

k_perfect = True

diagonal_zero = False

normalized_currents = True

g_w_ampa = 2.0

g_w = 0.0

g_a = 10.0

tau_a = 0.250

g_beta = 1.0

G = 1.0

sigma = 0.0

tau_m = 0.010

tau_z_pre_ampa = 0.050

tau_z_post_ampa = 0.005

tau_p = 10.0

hypercolumns = 1

minicolumns = 20

n_patterns = 20

# Manager properties

dt = 0.001

values_to_save = ['o', 'i_ampa', 'a']

# Protocol

training_time = 0.100

inter_sequence_interval = 0.0

inter_pulse_interval = 0.0

epochs = 1

mixed_start = False

contiguous = True

s = 1.0

r = 0.25

# Recall

T_recall = 1.0

T_cue = 0.020

num = 10

r_space = np.linspace(0, 0.9, num=num)

success_vector = np.zeros(num)

factor = 0.2

g_w_ampa * (w_total[0, 0] - w_total[2, 0])

for r_index, r in enumerate(r_space):

print('r_index', r_index)

# The network

nn = BCPNNPerfect(hypercolumns, minicolumns, g_w_ampa=g_w_ampa, g_w=g_w, g_a=g_a, tau_a=tau_a, tau_m=tau_m,

sigma=sigma, G=G, tau_z_pre_ampa=tau_z_pre_ampa, tau_z_post_ampa=tau_z_post_ampa, tau_p=tau_p,

z_transfer=z_transfer, diagonal_zero=diagonal_zero, strict_maximum=strict_maximum,

perfect=perfect, k_perfect=k_perfect, always_learning=always_learning,

normalized_currents=normalized_currents, g_beta=g_beta)

# Build the manager

manager = NetworkManager(nn=nn, dt=dt, values_to_save=values_to_save)

# The sequences

matrix = create_orthogonal_canonical_representation(minicolumns, hypercolumns)

aux = create_weights_from_two_sequences(nn, dt, n_patterns, s, r, mixed_start, contiguous,

training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_sequence_interval, epochs=epochs,

resting_time=resting_time)

seq1, seq2, nr1, nr2, w_total, beta = aux

nr = np.concatenate((nr1, nr2))

aux, indexes = np.unique(nr, axis=0, return_index=True)

patterns_dic = {index:pattern for (index, pattern) in zip(indexes, aux)}

nn.w_ampa = w_total

nn.beta = beta

manager.patterns_dic = patterns_dic

current = g_w_ampa * (w_total[0, 0] - w_total[2, 0])

noise = factor * current

nn.sigma = noise

# Recall

aux = calculate_recall_success_nr(manager, nr1, T_recall, T_cue, debug=True, remove=0.020)

s1, timings, pattern_sequence = aux

print('1', s1, pattern_sequence, seq1)

aux = calculate_recall_success_nr(manager, nr2, T_recall, T_cue, debug=True, remove=0.020)

s2, timings, pattern_sequence = aux

print('2', s2, pattern_sequence, seq2)

success_vector[r_index] = 0.5 * (s1 + s2)

# +

markersize = 15

linewdith = 8

fig = plt.figure(figsize=(16, 12))

ax = fig.add_subplot(111)

ax.plot(r_space, success_vector, 'o-', lw=linewdith, ms=markersize)

ax.axhline(0, ls='--', color='gray')

ax.axvline(0, ls='--', color='gray')

ax.set_xlabel('Overlap')

ax.set_ylabel('Recall')

# -

# #### tau_z

# +

# %%time

always_learning = False

strict_maximum = True

perfect = False

z_transfer = False

k_perfect = True

diagonal_zero = False

normalized_currents = True

g_w_ampa = 2.0

g_w = 0.0

g_a = 10.0

tau_a = 0.250

G = 1.0

sigma = 0.0

tau_m = 0.010

tau_z_pre_ampa = 0.025

tau_z_post_ampa = 0.025

tau_p = 10.0

hypercolumns = 1

minicolumns = 20

n_patterns = 20

# Manager properties

dt = 0.001

values_to_save = ['o']

# Protocol

training_time = 0.100

inter_sequence_interval = 0.0

inter_pulse_interval = 0.0

epochs = 1

mixed_start = False

contiguous = True

s = 1.0

r = 0.25

# Recall

T_recall = 1.0

T_cue = 0.020

num = 10

r_space = np.linspace(0, 0.9, num=num)

success_vector = np.zeros(num)

tau_z_list = [0.025, 0.035, 0.050, 0.075]

#tau_z_list = [0.025, 0.100, 0.250]

#tau_z_list = [0.025, 0.050]

success_list = []

for tau_z_pre_ampa in tau_z_list:

success_vector = np.zeros(num)

print(tau_z_pre_ampa)

for r_index, r in enumerate(r_space):

# The network

nn = BCPNNPerfect(hypercolumns, minicolumns, g_w_ampa=g_w_ampa, g_w=g_w, g_a=g_a, tau_a=tau_a, tau_m=tau_m,

sigma=sigma, G=G, tau_z_pre_ampa=tau_z_pre_ampa, tau_z_post_ampa=tau_z_post_ampa, tau_p=tau_p,

z_transfer=z_transfer, diagonal_zero=diagonal_zero, strict_maximum=strict_maximum,

perfect=perfect, k_perfect=k_perfect, always_learning=always_learning,

normalized_currents=normalized_currents)

# Build the manager

manager = NetworkManager(nn=nn, dt=dt, values_to_save=values_to_save)

# The sequences

matrix = create_orthogonal_canonical_representation(minicolumns, hypercolumns)

aux = create_weights_from_two_sequences(nn, dt, n_patterns, s, r, mixed_start, contiguous,

training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_sequence_interval, epochs=epochs,

resting_time=resting_time)

seq1, seq2, nr1, nr2, w_total, beta = aux

nr = np.concatenate((nr1, nr2))

aux, indexes = np.unique(nr, axis=0, return_index=True)

patterns_dic = {index:pattern for (index, pattern) in zip(indexes, aux)}

nn.w_ampa = w_total

manager.patterns_dic = patterns_dic

# Recall

s1 = calculate_recall_success_nr(manager, nr1, T_recall, T_cue)

s2 = calculate_recall_success_nr(manager, nr2, T_recall, T_cue)

success_vector[r_index] = 0.5 * (s1 + s2)

success_list.append(np.copy(success_vector))

# +

markersize = 15

linewdith = 8

fig = plt.figure(figsize=(16, 12))

ax = fig.add_subplot(111)

for tau_z, success_vector in zip(tau_z_list, success_list):

ax.plot(r_space, success_vector, 'o-', lw=linewdith, ms=markersize, label=str(tau_z))

ax.axhline(0, ls='--', color='gray')

ax.axvline(0, ls='--', color='gray')

ax.set_xlabel('Overlap')

ax.set_ylabel('Recall')

ax.legend();

# -

# #### Scale

# +

# %%time

always_learning = False

strict_maximum = True

perfect = False

z_transfer = False

k_perfect = True

diagonal_zero = False

normalized_currents = True

g_w_ampa = 2.0

g_w = 0.0

g_a = 10.0

tau_a = 0.250

G = 1.0

sigma = 0.0

tau_m = 0.010

tau_z_pre_ampa = 0.025

tau_z_post_ampa = 0.025

tau_p = 10.0

hypercolumns = 1

minicolumns = 20

n_patterns = 20

# Manager properties

dt = 0.001

values_to_save = ['o']

# Protocol

training_time = 0.100

inter_sequence_interval = 0.0

inter_pulse_interval = 0.0

epochs = 1

mixed_start = False

contiguous = True

s = 1.0

r = 0.25

# Recall

T_recall = 1.0

T_cue = 0.020

num = 10

r_space = np.linspace(0, 0.9, num=num)

success_vector = np.zeros(num)

hypercolumns_list = [1, 3, 7, 10]

#tau_z_list = [0.025, 0.100, 0.250]

#tau_z_list = [0.025, 0.050]

success_list = []

for hypercolumns in hypercolumns_list:

success_vector = np.zeros(num)

print(hypercolumns)

for r_index, r in enumerate(r_space):

# The network

nn = BCPNNPerfect(hypercolumns, minicolumns, g_w_ampa=g_w_ampa, g_w=g_w, g_a=g_a, tau_a=tau_a, tau_m=tau_m,

sigma=sigma, G=G, tau_z_pre_ampa=tau_z_pre_ampa, tau_z_post_ampa=tau_z_post_ampa, tau_p=tau_p,

z_transfer=z_transfer, diagonal_zero=diagonal_zero, strict_maximum=strict_maximum,

perfect=perfect, k_perfect=k_perfect, always_learning=always_learning,

normalized_currents=normalized_currents)

# Build the manager

manager = NetworkManager(nn=nn, dt=dt, values_to_save=values_to_save)

# The sequences

matrix = create_orthogonal_canonical_representation(minicolumns, hypercolumns)

aux = create_weights_from_two_sequences(nn, dt, n_patterns, s, r, mixed_start, contiguous,

training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_sequence_interval, epochs=epochs,

resting_time=resting_time)

seq1, seq2, nr1, nr2, w_total, beta = aux

nr = np.concatenate((nr1, nr2))

aux, indexes = np.unique(nr, axis=0, return_index=True)

patterns_dic = {index:pattern for (index, pattern) in zip(indexes, aux)}

nn.w_ampa = w_total

manager.patterns_dic = patterns_dic

# Recall

s1 = calculate_recall_success_nr(manager, nr1, T_recall, T_cue)

s2 = calculate_recall_success_nr(manager, nr2, T_recall, T_cue)

success_vector[r_index] = 0.5 * (s1 + s2)

success_list.append(np.copy(success_vector))

# +

markersize = 15

linewdith = 8

fig = plt.figure(figsize=(16, 12))

ax = fig.add_subplot(111)

for hypercolumns, success_vector in zip(hypercolumns_list, success_list):

ax.plot(r_space, success_vector, 'o-', lw=linewdith, ms=markersize, label=str(hypercolumns))

ax.axhline(0, ls='--', color='gray')

ax.axvline(0, ls='--', color='gray')

ax.set_xlabel('Overlap')

ax.set_ylabel('Recall')

ax.legend();

# -

# #### tau_m

# +

# %%time

always_learning = False

strict_maximum = True

perfect = False

z_transfer = False

k_perfect = True

diagonal_zero = False

normalized_currents = True

g_w_ampa = 2.0

g_w = 0.0

g_a = 10.0

tau_a = 0.250

G = 1.0

sigma = 0.0

tau_m = 0.010

tau_z_pre_ampa = 0.025

tau_z_post_ampa = 0.025

tau_p = 10.0

hypercolumns = 1

minicolumns = 20

n_patterns = 20

# Manager properties

dt = 0.001

values_to_save = ['o']

# Protocol

training_time = 0.100

inter_sequence_interval = 0.0

inter_pulse_interval = 0.0

epochs = 1

mixed_start = False

contiguous = True

s = 1.0

r = 0.25

# Recall

T_recall = 1.0

T_cue = 0.020

num = 10

r_space = np.linspace(0, 0.9, num=num)

success_vector = np.zeros(num)

tau_m_list = [0.001, 0.008, 0.020]

success_list = []

for tau_m in tau_m_list:

success_vector = np.zeros(num)

print(tau_m)

for r_index, r in enumerate(r_space):

# The network

nn = BCPNNPerfect(hypercolumns, minicolumns, g_w_ampa=g_w_ampa, g_w=g_w, g_a=g_a, tau_a=tau_a, tau_m=tau_m,

sigma=sigma, G=G, tau_z_pre_ampa=tau_z_pre_ampa, tau_z_post_ampa=tau_z_post_ampa, tau_p=tau_p,

z_transfer=z_transfer, diagonal_zero=diagonal_zero, strict_maximum=strict_maximum,

perfect=perfect, k_perfect=k_perfect, always_learning=always_learning,

normalized_currents=normalized_currents)

# Build the manager

manager = NetworkManager(nn=nn, dt=dt, values_to_save=values_to_save)

# The sequences

matrix = create_orthogonal_canonical_representation(minicolumns, hypercolumns)

aux = create_weights_from_two_sequences(nn, dt, n_patterns, s, r, mixed_start, contiguous,

training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_sequence_interval, epochs=epochs,

resting_time=resting_time)

seq1, seq2, nr1, nr2, w_total, beta = aux

nr = np.concatenate((nr1, nr2))

aux, indexes = np.unique(nr, axis=0, return_index=True)

patterns_dic = {index:pattern for (index, pattern) in zip(indexes, aux)}

nn.w_ampa = w_total

manager.patterns_dic = patterns_dic

# Recall

s1 = calculate_recall_success_nr(manager, nr1, T_recall, T_cue)

s2 = calculate_recall_success_nr(manager, nr2, T_recall, T_cue)

success_vector[r_index] = 0.5 * (s1 + s2)

success_list.append(np.copy(success_vector))

# +

markersize = 15

linewdith = 8

fig = plt.figure(figsize=(16, 12))

ax = fig.add_subplot(111)

for tau_m, success_vector in zip(tau_m_list, success_list):

ax.plot(r_space, success_vector, 'o-', lw=linewdith, ms=markersize, label=str(tau_m))

ax.axhline(0, ls='--', color='gray')

ax.axvline(0, ls='--', color='gray')

ax.set_xlabel('Overlap')

ax.set_ylabel('Recall')

ax.legend();

# -

# #### training time

# +

# %%time

always_learning = False

strict_maximum = True

perfect = False

z_transfer = False

k_perfect = True

diagonal_zero = False

normalized_currents = True

g_w_ampa = 2.0

g_w = 0.0

g_a = 10.0

tau_a = 0.250

G = 1.0

sigma = 0.0

tau_m = 0.010

tau_z_pre_ampa = 0.025

tau_z_post_ampa = 0.025

tau_p = 10.0

hypercolumns = 1

minicolumns = 20

n_patterns = 20

# Manager properties

dt = 0.001

values_to_save = ['o']

# Protocol

training_time = 0.100

inter_sequence_interval = 0.0

inter_pulse_interval = 0.0

epochs = 1

mixed_start = False

contiguous = True

s = 1.0

r = 0.25

# Recall

T_recall = 1.0

T_cue = 0.020

num = 10

r_space = np.linspace(0, 0.9, num=num)

success_vector = np.zeros(num)

training_time_list = [0.050, 0.100, 0.250, 0.500]

success_list = []

for training_time in training_time_list:

success_vector = np.zeros(num)

print(training_time)

for r_index, r in enumerate(r_space):

# The network

nn = BCPNNPerfect(hypercolumns, minicolumns, g_w_ampa=g_w_ampa, g_w=g_w, g_a=g_a, tau_a=tau_a, tau_m=tau_m,

sigma=sigma, G=G, tau_z_pre_ampa=tau_z_pre_ampa, tau_z_post_ampa=tau_z_post_ampa, tau_p=tau_p,

z_transfer=z_transfer, diagonal_zero=diagonal_zero, strict_maximum=strict_maximum,

perfect=perfect, k_perfect=k_perfect, always_learning=always_learning,

normalized_currents=normalized_currents)

# Build the manager

manager = NetworkManager(nn=nn, dt=dt, values_to_save=values_to_save)

# The sequences

matrix = create_orthogonal_canonical_representation(minicolumns, hypercolumns)

aux = create_weights_from_two_sequences(nn, dt, n_patterns, s, r, mixed_start, contiguous,

training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_sequence_interval, epochs=epochs,

resting_time=resting_time)

seq1, seq2, nr1, nr2, w_total, beta = aux

nr = np.concatenate((nr1, nr2))

aux, indexes = np.unique(nr, axis=0, return_index=True)

patterns_dic = {index:pattern for (index, pattern) in zip(indexes, aux)}

nn.w_ampa = w_total

manager.patterns_dic = patterns_dic

# Recall

s1 = calculate_recall_success_nr(manager, nr1, T_recall, T_cue)

s2 = calculate_recall_success_nr(manager, nr2, T_recall, T_cue)

success_vector[r_index] = 0.5 * (s1 + s2)

success_list.append(np.copy(success_vector))

# +

markersize = 15

linewdith = 8

fig = plt.figure(figsize=(16, 12))

ax = fig.add_subplot(111)

for training_time, success_vector in zip(training_time_list, success_list):

ax.plot(r_space, success_vector, 'o-', lw=linewdith, ms=markersize, label=str(training_time))

ax.axhline(0, ls='--', color='gray')

ax.axvline(0, ls='--', color='gray')

ax.set_xlabel('Overlap')

ax.set_ylabel('Recall')

ax.legend();

# -

# ## Systematic with noise

# +

# %%time

always_learning = False

strict_maximum = True

perfect = False

z_transfer = False

k_perfect = True

diagonal_zero = False

normalized_currents = True

g_w_ampa = 2.0

g_w = 0.0

g_a = 10.0

tau_a = 0.250

g_beta = 0.0

G = 1.0

sigma = 0.0

tau_m = 0.010

tau_z_pre_ampa = 0.050

tau_z_post_ampa = 0.005

tau_p = 10.0

hypercolumns = 1

minicolumns = 20

n_patterns = 20

# Manager properties

dt = 0.001

values_to_save = ['o', 'i_ampa', 'a']

# Protocol

training_time = 0.100

inter_sequence_interval = 0.0

inter_pulse_interval = 0.0

epochs = 1

mixed_start = False

contiguous = True

s = 1.0

r = 0.25

# Recall

T_recall = 1.0

T_cue = 0.020

num = 15

trials = 25

r_space = np.linspace(0, 0.6, num=num)

success_vector = np.zeros((num, trials))

factor = 0.1

for r_index, r in enumerate(r_space):

print(r_index)

# The network

nn = BCPNNPerfect(hypercolumns, minicolumns, g_w_ampa=g_w_ampa, g_w=g_w, g_a=g_a, tau_a=tau_a, tau_m=tau_m,

sigma=sigma, G=G, tau_z_pre_ampa=tau_z_pre_ampa, tau_z_post_ampa=tau_z_post_ampa, tau_p=tau_p,

z_transfer=z_transfer, diagonal_zero=diagonal_zero, strict_maximum=strict_maximum,

perfect=perfect, k_perfect=k_perfect, always_learning=always_learning,

normalized_currents=normalized_currents, g_beta=g_beta)

# Build the manager

manager = NetworkManager(nn=nn, dt=dt, values_to_save=values_to_save)

# The sequences

matrix = create_orthogonal_canonical_representation(minicolumns, hypercolumns)

aux = create_weights_from_two_sequences(nn, dt, n_patterns, s, r, mixed_start, contiguous,

training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_sequence_interval, epochs=epochs,

resting_time=resting_time)

seq1, seq2, nr1, nr2, w_total, beta = aux

nr = np.concatenate((nr1, nr2))

aux, indexes = np.unique(nr, axis=0, return_index=True)

patterns_dic = {index:pattern for (index, pattern) in zip(indexes, aux)}

manager.patterns_dic = patterns_dic

nn.w_ampa = w_total

nn.beta = beta

current = g_w_ampa * (w_total[0, 0] - w_total[2, 0])

noise = factor * current

nn.sigma = noise

print(nn.sigma)

# Recall

for trial in range(trials):

s1 = calculate_recall_success_nr(manager, nr1, T_recall, T_cue)

s2 = calculate_recall_success_nr(manager, nr2, T_recall, T_cue)

success_vector[r_index, trial] = 0.5 * (s1 + s2)

# +

markersize = 15

linewdith = 8

current_palette = sns.color_palette()

index = 0

alpha = 0.5

fig = plt.figure(figsize=(16, 12))

ax = fig.add_subplot(111)

mean_success = success_vector.mean(axis=1)

std = success_vector.std(axis=1)

ax.plot(r_space, mean_success, 'o-', lw=linewdith, ms=markersize)

ax.fill_between(r_space, mean_success - std, mean_success + std,

color=current_palette[index], alpha=alpha)

ax.axhline(0, ls='--', color='gray')

ax.axvline(0, ls='--', color='gray')

ax.set_xlabel('Overlap')

ax.set_ylabel('Recall')

# +

# %%time

always_learning = False

strict_maximum = True

perfect = False

z_transfer = False

k_perfect = True

diagonal_zero = False

normalized_currents = True

g_w_ampa = 2.0

g_w = 0.0

g_a = 10.0

tau_a = 0.250

g_beta = 0.0

G = 1.0

sigma = 0.0

tau_m = 0.010

tau_z_pre_ampa = 0.050

tau_z_post_ampa = 0.005

tau_p = 10.0

hypercolumns = 1

minicolumns = 20

n_patterns = 20

# Manager properties

dt = 0.001

values_to_save = ['o', 'i_ampa', 'a']

# Protocol

training_time = 0.100

inter_sequence_interval = 0.0

inter_pulse_interval = 0.0

epochs = 1

mixed_start = False

contiguous = True

s = 1.0

r = 0.25

# Recall

T_recall = 1.0

T_cue = 0.020

num = 15

trials = 25

r_space = np.linspace(0, 0.6, num=num)

success_vector = np.zeros((num, trials))

successes = []

factors = [0.0, 0.1, 0.2, 0.3]

for factor in factors:

print(factor)

for r_index, r in enumerate(r_space):

print(r_index)

# The network

nn = BCPNNPerfect(hypercolumns, minicolumns, g_w_ampa=g_w_ampa, g_w=g_w, g_a=g_a, tau_a=tau_a, tau_m=tau_m,

sigma=sigma, G=G, tau_z_pre_ampa=tau_z_pre_ampa, tau_z_post_ampa=tau_z_post_ampa, tau_p=tau_p,

z_transfer=z_transfer, diagonal_zero=diagonal_zero, strict_maximum=strict_maximum,

perfect=perfect, k_perfect=k_perfect, always_learning=always_learning,

normalized_currents=normalized_currents, g_beta=g_beta)

# Build the manager

manager = NetworkManager(nn=nn, dt=dt, values_to_save=values_to_save)

# The sequences

matrix = create_orthogonal_canonical_representation(minicolumns, hypercolumns)

aux = create_weights_from_two_sequences(nn, dt, n_patterns, s, r, mixed_start, contiguous,

training_time, inter_pulse_interval=inter_pulse_interval,

inter_sequence_interval=inter_sequence_interval, epochs=epochs,

resting_time=resting_time)

seq1, seq2, nr1, nr2, w_total, beta = aux

nr = np.concatenate((nr1, nr2))

aux, indexes = np.unique(nr, axis=0, return_index=True)

patterns_dic = {index:pattern for (index, pattern) in zip(indexes, aux)}

manager.patterns_dic = patterns_dic

nn.w_ampa = w_total

nn.beta = beta

current = g_w_ampa * (w_total[0, 0] - w_total[2, 0])

noise = factor * current

nn.sigma = noise

# Recall

for trial in range(trials):

s1 = calculate_recall_success_nr(manager, nr1, T_recall, T_cue)

s2 = calculate_recall_success_nr(manager, nr2, T_recall, T_cue)

success_vector[r_index, trial] = 0.5 * (s1 + s2)

successes.append(np.copy(success_vector))

# +

markersize = 15

linewdith = 8

current_palette = sns.color_palette()

index = 0

alpha = 0.5

fig = plt.figure(figsize=(16, 12))

ax = fig.add_subplot(111)

for index, success_vector in enumerate(successes):

mean_success = success_vector.mean(axis=1)

std = success_vector.std(axis=1)

ax.plot(r_space, mean_success, 'o-', lw=linewdith, ms=markersize, label=str(factors[index]))

ax.fill_between(r_space, mean_success - std, mean_success + std,

color=current_palette[index], alpha=alpha)

ax.axhline(0, ls='--', color='gray')

ax.axvline(0, ls='--', color='gray')

ax.set_xlabel('Overlap')

ax.set_ylabel('Recall')

ax.legend();

# -

|

jupyter/2018-05-23(Disambiguation).ipynb

|

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# **Run the following two cells before you begin.**

# %autosave 10

# ______________________________________________________________________

# **First, import your data set and define the sigmoid function.**

# <details>

# <summary>Hint:</summary>

# The definition of the sigmoid is $f(x) = \frac{1}{1 + e^{-X}}$.

# </details>

# +

# Import the data set

import pandas as pd

import numpy as np

import matplotlib as mpl

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

import seaborn as sns

df = pd.read_csv('cleaned_data.csv')

# -

# Define the sigmoid function

def sigmoid(X):

Y = 1 / (1 + np.exp(-X))

return Y

# **Now, create a train/test split (80/20) with `PAY_1` and `LIMIT_BAL` as features and `default payment next month` as values. Use a random state of 24.**

# Create a train/test split

X_train, X_test, y_train, y_test = train_test_split(df[['PAY_1', 'LIMIT_BAL']].values, df['default payment next month'].values,test_size=0.2, random_state=24)

# ______________________________________________________________________

# **Next, import LogisticRegression, with the default options, but set the solver to `'liblinear'`.**

lr_model = LogisticRegression(solver='liblinear')

lr_model

# ______________________________________________________________________

# **Now, train on the training data and obtain predicted classes, as well as class probabilities, using the testing data.**

# Fit the logistic regression model on training data

lr_model.fit(X_train,y_train)

# Make predictions using `.predict()`

y_pred = lr_model.predict(X_test)

# Find class probabilities using `.predict_proba()`

y_pred_proba = lr_model.predict_proba(X_test)

# ______________________________________________________________________

# **Then, pull out the coefficients and intercept from the trained model and manually calculate predicted probabilities. You'll need to add a column of 1s to your features, to multiply by the intercept.**

# Add column of 1s to features

ones_and_features = np.hstack([np.ones((X_test.shape[0],1)), X_test])

print(ones_and_features)

np.ones((X_test.shape[0],1)).shape

# Get coefficients and intercepts from trained model

intercept_and_coefs = np.concatenate([lr_model.intercept_.reshape(1,1), lr_model.coef_], axis=1)

intercept_and_coefs

# Manually calculate predicted probabilities

X_lin_comb = np.dot(intercept_and_coefs, np.transpose(ones_and_features))

y_pred_proba_manual = sigmoid(X_lin_comb)

# ______________________________________________________________________

# **Next, using a threshold of `0.5`, manually calculate predicted classes. Compare this to the class predictions output by scikit-learn.**

# Manually calculate predicted classes

y_pred_manual = y_pred_proba_manual >= 0.5

y_pred_manual.shape

y_pred.shape

# Compare to scikit-learn's predicted classes

np.array_equal(y_pred.reshape(1,-1), y_pred_manual)

y_test.shape

y_pred_proba_manual.shape

# ______________________________________________________________________

# **Finally, calculate ROC AUC using both scikit-learn's predicted probabilities, and your manually predicted probabilities, and compare.**

# + eid="e7697"

# Use scikit-learn's predicted probabilities to calculate ROC AUC

from sklearn.metrics import roc_auc_score

roc_auc_score(y_test, y_pred_proba_manual.reshape(y_pred_proba_manual.shape[1],))

# -

# Use manually calculated predicted probabilities to calculate ROC AUC

roc_auc_score(y_test, y_pred_proba[:,1])

|

Mini-Project-2/Project 4/Fitting_a_Logistic_Regression_Model.ipynb

|

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# + [markdown] pycharm={"name": "#%% md\n"}

# # ProVis: Attention Visualizer for Proteins

# + pycharm={"is_executing": false, "name": "#%%\n"}

import io

import urllib

import torch

from Bio.Data import SCOPData

from Bio.PDB import PDBParser, PPBuilder

from tape import TAPETokenizer, ProteinBertModel

import nglview

attn_color = [0.937, .522, 0.212]

# + pycharm={"name": "#%%\n"}

def get_structure(pdb_id):

resource = urllib.request.urlopen(f'https://files.rcsb.org/download/{pdb_id}.pdb')

content = resource.read().decode('utf8')

handle = io.StringIO(content)

parser = PDBParser(QUIET=True)

return parser.get_structure(pdb_id, handle)

# + pycharm={"name": "#%%\n"}

def get_attn_data(chain, layer, head, min_attn, start_index=0, end_index=None, max_seq_len=1024):

tokens = []

coords = []

for res in chain:

t = SCOPData.protein_letters_3to1.get(res.get_resname(), "X")

tokens += t

if t == 'X':

coord = None

else:

coord = res['CA'].coord.tolist()

coords.append(coord)

last_non_x = None

for i in reversed(range(len(tokens))):

if tokens[i] != 'X':

last_non_x = i

break

assert last_non_x is not None

tokens = tokens[:last_non_x + 1]

coords = coords[:last_non_x + 1]

tokenizer = TAPETokenizer()

model = ProteinBertModel.from_pretrained('bert-base', output_attentions=True)

if max_seq_len:

tokens = tokens[:max_seq_len - 2] # Account for SEP, CLS tokens (added in next step)

token_idxs = tokenizer.encode(tokens).tolist()

if max_seq_len:

assert len(token_idxs) == min(len(tokens) + 2, max_seq_len)

else:

assert len(token_idxs) == len(tokens) + 2

inputs = torch.tensor(token_idxs).unsqueeze(0)

with torch.no_grad():

attns = model(inputs)[-1]

# Remove attention from <CLS> (first) and <SEP> (last) token

attns = [attn[:, :, 1:-1, 1:-1] for attn in attns]

attns = torch.stack([attn.squeeze(0) for attn in attns])

attn = attns[layer, head]

if end_index is None:

end_index = len(tokens)

attn_data = []

for i in range(start_index, end_index):

for j in range(i, end_index):