repo_name

stringlengths 9

75

| topic

stringclasses 30

values | issue_number

int64 1

203k

| title

stringlengths 1

976

| body

stringlengths 0

254k

| state

stringclasses 2

values | created_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| url

stringlengths 38

105

| labels

sequencelengths 0

9

| user_login

stringlengths 1

39

| comments_count

int64 0

452

|

|---|---|---|---|---|---|---|---|---|---|---|---|

dynaconf/dynaconf | fastapi | 478 | Make `environment` an alias to `environments` | A common mistake is to pass `environment=True` when it should be `environments`

Solutions:

1. Warn the user

**Did you mean `environments`?**

2. make an alias the same way we did with `settings_file` and `settings_files` | closed | 2020-11-24T17:39:51Z | 2021-03-02T21:26:54Z | https://github.com/dynaconf/dynaconf/issues/478 | [

"Not a Bug",

"RFC"

] | rochacbruno | 0 |

ultralytics/yolov5 | pytorch | 12,909 | Calculate gradients for YOLOv5 - XAI for object detection | ### Search before asking

- [X] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) and found no similar questions.

### Question

Hi,

I am trying to calculate gradients for the YOLOv5 model, which is needed for some AI explainability methods. I am not sure if I am using the right approach, but so far I was trying to use DetectMultiBackend class for the model and ComputeLoss class for computing the loss (see the code below). But I am getting an error about the model being not subscriptable, can you tell me how can I calculate the gradient?

`model = DetectMultiBackend(weights=weights, device=device, dnn=dnn, data=data, fp16=half).float().eval()

# We want gradients with respect to the input, so we need to enable it

inputs.requires_grad = True

# Forward pass

pred= model(inputs)

amp = check_amp(model) # check AMP

compute_loss = ComputeLoss(model) # init loss class

loss, loss_items = compute_loss(pred, target.to(device))

scaler = torch.cuda.amp.GradScaler(enabled=amp)

# Backward

scaler.scale(loss).backward()

#scaler.unscale_(optimizer) # unscale gradients - is this step necessary?

gradients = inputs.grad`

### Additional

_No response_ | closed | 2024-04-12T15:48:26Z | 2024-10-20T19:43:34Z | https://github.com/ultralytics/yolov5/issues/12909 | [

"question",

"Stale"

] | zuzell | 7 |

globaleaks/globaleaks-whistleblowing-software | sqlalchemy | 3,933 | Change translation for a question from the provided models | ### What version of GlobaLeaks are you using?

4.13.22

### What browser(s) are you seeing the problem on?

All

### What operating system(s) are you seeing the problem on?

Windows, Linux

### Describe the issue

Is it possible to change the "Would you like to tell us who you are?" translation for PT? I've tried to change it from "Gostaria de nos dizer quem você é?" to "Gostaria de nos dizer quem é?" inside de platform, but i can't do it.

### Proposed solution

_No response_ | closed | 2024-01-06T00:54:54Z | 2024-01-06T15:13:40Z | https://github.com/globaleaks/globaleaks-whistleblowing-software/issues/3933 | [] | pcinacio | 1 |

marcomusy/vedo | numpy | 44 | jupyter lab support | Thanks for this amazing software. However, it appears that the plot functionality only works for jupyter notebook, and will not work for jupyter lab. When trying to execute "show" command for function plotting, it will simply fail with an error message saying "error displaying widget module not found". Any possibility to correct this issue? Thanks! | open | 2019-08-04T00:31:56Z | 2019-11-09T09:52:00Z | https://github.com/marcomusy/vedo/issues/44 | [

"bug",

"jupyter",

"long-term"

] | jacobdang | 4 |

nvbn/thefuck | python | 1,076 | PytestUnknownMarkWarning | <!-- If you have any issue with The Fuck, sorry about that, but we will do what we

can to fix that. Actually, maybe we already have, so first thing to do is to

update The Fuck and see if the bug is still there. -->

<!-- If it is (sorry again), check if the problem has not already been reported and

if not, just open an issue on [GitHub](https://github.com/nvbn/thefuck) with

the following basic information: -->

The output of `thefuck --version` (something like `The Fuck 3.1 using Python

3.5.0 and Bash 4.4.12(1)-release`):

`master` version, commit 6975d30818792f1b37de702fc93c66023c4c50d5, Python 3.8.2

Your system (Debian 7, ArchLinux, Windows, etc.):

Arch Linux

How to reproduce the bug:

py.test

Anything else you think is relevant:

```

tests/test_conf.py:46

/home/alex/Projects/thefuck/tests/test_conf.py:46: PytestUnknownMarkWarning: Unknown pytest.mark.usefixture - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/latest/mark.html

@pytest.mark.usefixture('load_source')

tests/functional/test_bash.py:41

/home/alex/Projects/thefuck/tests/functional/test_bash.py:41: PytestUnknownMarkWarning: Unknown pytest.mark.functional - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/latest/mark.html

@pytest.mark.functional

tests/functional/test_bash.py:47

/home/alex/Projects/thefuck/tests/functional/test_bash.py:47: PytestUnknownMarkWarning: Unknown pytest.mark.functional - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/latest/mark.html

@pytest.mark.functional

tests/functional/test_bash.py:53

/home/alex/Projects/thefuck/tests/functional/test_bash.py:53: PytestUnknownMarkWarning: Unknown pytest.mark.functional - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/latest/mark.html

@pytest.mark.functional

tests/functional/test_bash.py:59

/home/alex/Projects/thefuck/tests/functional/test_bash.py:59: PytestUnknownMarkWarning: Unknown pytest.mark.functional - is this a typo? You can register custom marks to avoid this warning - for details, see https://docs.pytest.org/en/latest/mark.html

@pytest.mark.functional

...

```

<!-- It's only with enough information that we can do something to fix the problem. -->

| open | 2020-04-09T13:24:29Z | 2020-04-17T15:05:13Z | https://github.com/nvbn/thefuck/issues/1076 | [] | AlexWayfer | 1 |

onnx/onnx | scikit-learn | 6,297 | No Adapter From Version $14 for Mul | # Bug Report

### Is the issue related to model conversion?

<!-- If the ONNX checker reports issues with this model then this is most probably related to the converter used to convert the original framework model to ONNX. Please create this bug in the appropriate converter's GitHub repo (pytorch, tensorflow-onnx, sklearn-onnx, keras-onnx, onnxmltools) to get the best help. -->

Yes.

### Describe the bug

<!-- Please describe the bug clearly and concisely -->

<img width="1481" alt="image" src="https://github.com/user-attachments/assets/b9234fd4-12f8-40b9-a84d-389f71c86d76">

### System information

<!--

- OS Platform and Distribution (*e.g. Linux Ubuntu 20.04*): Mac os and Linux

- ONNX version (*e.g. 1.13*): 1.16.2

- Python version: 3.10.2

- GCC/Compiler version (if compiling from source):

- CMake version:

- Protobuf version:

- Visual Studio version (if applicable):-->

### Reproduction instructions

<!--

- Describe the code to reproduce the behavior.

```

import onnx

model = onnx.load('model.onnx')

...

```

- Attach the ONNX model to the issue (where applicable)-->

### Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

### Notes

<!-- Any additional information -->

| open | 2024-08-16T13:10:10Z | 2024-09-05T01:48:49Z | https://github.com/onnx/onnx/issues/6297 | [

"bug",

"module: version converter"

] | roachsinai | 3 |

yt-dlp/yt-dlp | python | 12,693 | ERROR: [youtube] Failed to extract any player response | ### Checklist

- [x] I'm asking a question and **not** reporting a bug or requesting a feature

- [x] I've looked through the [README](https://github.com/yt-dlp/yt-dlp#readme)

- [x] I've verified that I have **updated yt-dlp to nightly or master** ([update instructions](https://github.com/yt-dlp/yt-dlp#update-channels))

- [x] I've searched [known issues](https://github.com/yt-dlp/yt-dlp/issues/3766), [the FAQ](https://github.com/yt-dlp/yt-dlp/wiki/FAQ), and the [bugtracker](https://github.com/yt-dlp/yt-dlp/issues?q=is%3Aissue%20-label%3Aspam%20%20) for similar questions **including closed ones**. DO NOT post duplicates

### Please make sure the question is worded well enough to be understood

I got this error when trying to make a bot that can play music on discord (used youtube)

ERROR: [youtube] : Failed to extract any player response; please report this issue on https://github.com/yt-dlp/yt-dlp/issues?q= , filling out the appropriate issue template. Confirm you are on the latest version using yt-dlp -U

### Provide verbose output that clearly demonstrates the problem

- [ ] Run **your** yt-dlp command with **-vU** flag added (`yt-dlp -vU <your command line>`)

- [ ] If using API, add `'verbose': True` to `YoutubeDL` params instead

- [ ] Copy the WHOLE output (starting with `[debug] Command-line config`) and insert it below

### Complete Verbose Output

```shell

``` | open | 2025-03-22T05:38:58Z | 2025-03-22T12:51:28Z | https://github.com/yt-dlp/yt-dlp/issues/12693 | [

"question",

"incomplete"

] | HyIsNoob | 5 |

MagicStack/asyncpg | asyncio | 876 | notice callback not awaited | * **asyncpg version**: 0.23.0

* **Python version**: 3.9.2

* **Platform**: Linux

* **Do you use pgbouncer?**: no

* **Did you install asyncpg with pip?**: yes

* **Can the issue be reproduced under both asyncio and

[uvloop](https://github.com/magicstack/uvloop)?**: only experienced it once, without uvloop

```

/root/.local/lib/python3.9/site-packages/asyncpg/connection.py:1435: RuntimeWarning: coroutine 'vacuum_job.<locals>.async_notice_callb' was never awaited

cb(con_ref, message)

RuntimeWarning: Enable tracemalloc to get the object allocation traceback

```

| open | 2022-01-15T02:48:48Z | 2023-12-17T03:01:35Z | https://github.com/MagicStack/asyncpg/issues/876 | [] | ale-dd | 1 |

miguelgrinberg/microblog | flask | 317 | Infinite edit_profile redirect | Thanks for the great tutorial!!

I'm working through chapter 6 and as far as I can tell, my code is the same as v0.6, but my packages may be newer (Flask 2.0.3, flask-wtf 0.15.1).

When I define the edit_profile route, I get an infinite redirect loop after clicking the 'edit profile' link. This additionally has the effect of deleting my username but not email from the database, which causes a bunch of other weird problems. But, if I switch the order of the if and elif statements so that it checks whether the request is a GET first, everything works again:

```

@app.route('/edit_profile', methods=['GET','POST'])

@login_required

def edit_profile():

form=EditProfileForm()

if request.method=='GET':

form.username.data=current_user.username

form.about_me.data=current_user.about_me

elif form.validate_on_submit:

current_user.username = form.username.data

current_user.about_me = form.about_me.data

db.session.commit()

flash('Your changes have been saved.')

return redirect(url_for('edit_profile'))

return render_template('edit_profile.html', title='Edit Profile', form=form)

```

My conda env:

[conda_packages.txt](https://github.com/miguelgrinberg/microblog/files/8189501/conda_packages.txt)

| closed | 2022-03-05T00:59:57Z | 2022-03-09T00:41:53Z | https://github.com/miguelgrinberg/microblog/issues/317 | [

"question"

] | paulsonak | 2 |

microsoft/qlib | machine-learning | 1,628 | descriptor 'lower' for 'str' objects doesn't apply to a 'float' object | I tried updating data on existing datasets retrieved from Yahoo Finance using collector.py but received the following error.

The code used is:

python scripts/data_collector/yahoo/collector.py update_data_to_bin --qlib_data_1d_dir ~/desktop/quant_engine/qlib_data/us_data --trading_date 2023-08-16 --end_date 2023-08-17 --region US

"us_data" folder saved those three folders (calendar, features, and instruments) which contain data collected, normalized, and dumped following the instruction.

| closed | 2023-08-18T09:51:57Z | 2024-01-29T15:01:45Z | https://github.com/microsoft/qlib/issues/1628 | [

"question",

"stale"

] | guoz14 | 7 |

pytest-dev/pytest-django | pytest | 448 | Move pypy3 to allowed failures for now | It should not make PRs red. | closed | 2017-01-18T21:47:44Z | 2017-01-20T17:49:33Z | https://github.com/pytest-dev/pytest-django/issues/448 | [] | blueyed | 0 |

deeppavlov/DeepPavlov | tensorflow | 1,462 | levenshtein searcher works incorrectly |

**DeepPavlov version** (you can look it up by running `pip show deeppavlov`): 0.15.0

**Python version**: 3.7.10

**Operating system** (ubuntu linux, windows, ...): ubuntu 20.04

**Issue**: Levenshtein searcher does not take symbols replacements into account

**Command that led to error**:

```

>>> from deeppavlov.models.spelling_correction.levenshtein.searcher_component import LevenshteinSearcher

>>> vocab = set("the cat sat on the mat".split())

>>> abet = set(c for w in vocab for c in w)

>>> searcher = LevenshteinSearcher(abet, vocab)

>>> 'cat' in searcher

True

>>> searcher.transducer.distance('rat', 'cat'),\

... searcher.transducer.distance('cat', 'rat')

(inf, inf)

>>> searcher.transducer.distance('at', 'cat')

1.0

>>> searcher.transducer.distance('rat', 'cat')

inf

>>> searcher.search("rat", 1)

[]

>>> searcher.search("at", 1)

[('mat', 1.0), ('cat', 1.0), ('sat', 1.0)]

```

**Expected output**:

```

>>> searcher.transducer.distance('rat', 'cat')

1.0

>>> searcher.search("rat", 1)

[('mat', 1.0), ('cat', 1.0), ('sat', 1.0)]

```

| closed | 2021-07-04T21:39:27Z | 2023-07-10T11:45:40Z | https://github.com/deeppavlov/DeepPavlov/issues/1462 | [

"bug"

] | oserikov | 1 |

yunjey/pytorch-tutorial | pytorch | 187 | Typos in language model line 79 and generative_adversarial_network line 25-28 | Typos in language model line 79: should initialize optimizer instead of model

Generative_adversarial_network line 25-28: MNIST dataset is greyscale (one channel), transforms need to be modified. | closed | 2019-08-27T09:07:38Z | 2020-01-27T15:11:10Z | https://github.com/yunjey/pytorch-tutorial/issues/187 | [] | qy-yang | 0 |

alteryx/featuretools | scikit-learn | 1,878 | `pd_to_ks_clean` koalas util broken with Woodwork 0.12.0 latlong changes | The broken koalas unit tests (https://github.com/alteryx/featuretools/runs/4973841366?check_suite_focus=true#step:8:147) are all erroring in the setup of `ks_es`, where fully null tuples `(nan, nan)` are getting converted to `(-1, -1)` at some point in `pd_to_ks_clean`, which is causing `TypeError: element in array field latlong: DoubleType can not accept object -1 in type <class 'int'>`. | closed | 2022-02-01T17:13:28Z | 2022-04-01T20:19:56Z | https://github.com/alteryx/featuretools/issues/1878 | [

"bug"

] | tamargrey | 0 |

FactoryBoy/factory_boy | django | 1,028 | 3.3.0 Release | #### Question

In looking through the latest documentation I see there has been an addition to the SQLAlchemy options that can be passed to the meta class. Specifically, [sqlalchemy_session_factory](https://factoryboy.readthedocs.io/en/latest/orms.html#factory.alchemy.SQLAlchemyOptions.sqlalchemy_session_factory) would be very useful. I see that documentation was last updated 5 months ago. I'm curious if you have a sense of when the `3.3.0` release will go out?

I'm also curious if this project is still being activity maintained since the last release was 2 years ago? It might just be stable so that a lot of activity isn't needed but I also wanted to double check that while I'm at it.

Thanks for your support on this project! Factory boy is a fantastic library. | closed | 2023-07-08T00:37:18Z | 2023-07-08T00:38:33Z | https://github.com/FactoryBoy/factory_boy/issues/1028 | [] | ascrookes | 1 |

Kludex/mangum | fastapi | 159 | How can I log the response? | I'd like to log both the full request and full response.

Currently I am logging the full request by using a custom middleware as below:

```

class AWSLoggingMiddleware(BaseHTTPMiddleware):

async def dispatch(self, request, call_next):

logger.info(f'{request.scope.get("aws.event")}')

response = await call_next(request)

return response

```

How could I also log the full response back to aws? | closed | 2021-02-07T02:14:53Z | 2021-11-09T01:44:21Z | https://github.com/Kludex/mangum/issues/159 | [] | erichaus | 4 |

skforecast/skforecast | scikit-learn | 377 | Consider applying for pyOpenSci open peer review | pyOpenSci https://www.pyopensci.org/about-peer-review/ is modeled after rOpenSci and offers a community-led, open peer review process for scientific Python packages. Maybe they would be happy to review skforecast!

(See https://github.com/pyOpenSci/pyopensci.github.io/issues/150) | closed | 2023-03-27T20:13:10Z | 2024-08-08T08:43:12Z | https://github.com/skforecast/skforecast/issues/377 | [] | astrojuanlu | 2 |

seleniumbase/SeleniumBase | pytest | 3,575 | ERR_CONNECTION_RESET with Driver(uc=True) but fine with undetected_chromedriver | Hello everyone and the developer team,

I am experiencing an issue where I get an ERR_CONNECTION_RESET error when using the Driver with `uc=True`. I have tried using the original undetected_chromedriver, and that version works without any problems.

This error is causing the website to function incorrectly, and I discovered it while checking the Network tab.

```

from seleniumbase import Driver

driver = Driver(uc=True)

driver.get('https://sso.dancuquocgia.gov.vn/')

```

<img width="1894" alt="Image" src="https://github.com/user-attachments/assets/48109160-5e93-433a-8ea8-9c59a27e6926" />

Website: https://sso.dancuquocgia.gov.vn/

Please test your driver and improve it for situations like this. Thank you very much! | closed | 2025-03-02T15:16:43Z | 2025-03-03T01:52:39Z | https://github.com/seleniumbase/SeleniumBase/issues/3575 | [

"external",

"UC Mode / CDP Mode"

] | tranngocminhhieu | 2 |

Miserlou/Zappa | django | 1,576 | Feature: Ability to use existing API Gateway | ## Context

Hi, we're a small company in the process of gradually expanding our monolithic elasticbeanstalk application with smaller targeted microservices based on ApiStar / Zappa. We're getting good mileage so far and have deployed a small selection of background task-type services using SNS messaging as triggers, however we'd really like to start adding actual endpoints to our stack that call lambda functions. I've been trying for a couple of days to figure out how to properly setup an API gateway that will proxy to both our existing stack and newer services deployed with zappa. I've tried manually setting up a custom domain for the zappa apps and a cloudfront distro in front of both the existing stack and the new api gateway but am not getting very far. It would be a lot simpler IMO if we could specify an existing API gateway ARN in the zappa_settings.json and elect to use that so that it could integrate with an existing configuration.

## Expected Behavior

Would be great if I could specify "api_gateway_arn" in the zappa_settings.json file to use an existing api gateway

## Actual Behavior

There is no option to do this

## Possible Fix

I guess this is not straightforward given the API gateway is created via a cloudformation template, I guess it'd require moving some of that to boto functions instead ?

## Steps to Reproduce

N/A

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Zappa version used: 0.46.2

* Operating System and Python version: 3.6

* The output of `pip freeze`:

ably==1.0.1

apistar==0.5.40

argcomplete==1.9.3

attrs==18.1.0

awscli==1.15.19

backcall==0.1.0

base58==1.0.0

boto3==1.7.0

botocore==1.10.36

cairocffi==0.8.1

CairoSVG==2.1.3

certifi==2018.4.16

cffi==1.11.5

cfn-flip==1.0.3

chardet==3.0.4

click==6.7

colorama==0.3.7

cookies==2.2.1

coverage==4.5.1

cssselect2==0.2.1

decorator==4.3.0

defusedxml==0.5.0

docutils==0.14

durationpy==0.5

et-xmlfile==1.0.1

Faker==0.8.15

Flask==0.12.2

future==0.16.0

hjson==3.0.1

html5lib==1.0.1

humanize==0.5.1

idna==2.7

ipython==6.4.0

ipython-genutils==0.2.0

itsdangerous==0.24

jdcal==1.4

jedi==0.12.0

Jinja2==2.10

jmespath==0.9.3

kappa==0.6.0

lambda-packages==0.20.0

MarkupSafe==1.0

more-itertools==4.1.0

msgpack-python==0.5.6

openpyxl==2.5.3

parso==0.2.1

pdfrw==0.4

pexpect==4.5.0

pickleshare==0.7.4

Pillow==5.1.0

placebo==0.8.1

pluggy==0.6.0

prompt-toolkit==1.0.15

psycopg2==2.7.4

ptyprocess==0.5.2

py==1.5.3

pyasn1==0.4.2

pycparser==2.18

Pygments==2.2.0

PyNaCl==1.2.1

Pyphen==0.9.4

pytest==3.5.1

pytest-cov==2.5.1

pytest-cover==3.0.0

pytest-coverage==0.0

pytest-faker==2.0.0

pytest-mock==1.10.0

-e git+https://github.com/crowdcomms/pytest-sqlalchemy.git@f9c666196d59edd289ecf57704ec7b51cff4440a#egg=pytest_sqlalchemy

python-dateutil==2.6.1

python-slugify==1.2.4

PyYAML==3.12

requests==2.19.0

responses==0.9.0

rsa==3.4.2

s3transfer==0.1.13

simplegeneric==0.8.1

six==1.11.0

SQLAlchemy==1.2.7

text-unidecode==1.2

tinycss2==0.6.1

toml==0.9.4

tqdm==4.19.1

traitlets==4.3.2

troposphere==2.3.0

Unidecode==1.0.22

urllib3==1.23

virtualenv==15.2.0

wcwidth==0.1.7

WeasyPrint==0.42.3

webencodings==0.5.1

Werkzeug==0.14.1

whitenoise==3.3.1

wsgi-request-logger==0.4.6

zappa==0.46.2

| open | 2018-08-01T07:31:55Z | 2020-08-20T21:06:21Z | https://github.com/Miserlou/Zappa/issues/1576 | [] | bharling | 1 |

KevinMusgrave/pytorch-metric-learning | computer-vision | 63 | Request for Cosine Metric Learning | Hi, I'm a newbie to deep learning.

Currently I'm working on a tracking-by-detection project related to [deep sort](https://github.com/nwojke/deep_sort).

In order to customize deepsort for my own classes, I need to train [Cosine Metric Learning](https://github.com/nwojke/cosine_metric_learning).

I've searched around for a pytorch implementation of cosine metric learning on google and it leads me to your repo.

So I was wondering if this is the one that meets my requirement?

If yes, how can I use cosine metric learning from your repo?

Many thanks! | closed | 2020-04-18T17:15:17Z | 2020-04-19T03:40:27Z | https://github.com/KevinMusgrave/pytorch-metric-learning/issues/63 | [] | pvti | 2 |

2noise/ChatTTS | python | 624 | Linux 运行内存飙升 | Linux 下源码部署 没有GPU 的情况下 只用CPU 内存一直飙升 每执行一次 内存增加一次 直到把内存撑爆

Windows 下运行使用GPU 内存比较稳定

Linux 下是什么情况 有人遇到么 | closed | 2024-07-24T06:14:20Z | 2024-07-24T06:59:48Z | https://github.com/2noise/ChatTTS/issues/624 | [] | cpa519904 | 1 |

modin-project/modin | pandas | 6,489 | REFACTOR: __invert__ should raise a TypeError at API layer for non-integer types | We raise the correct TypeError, but we do at the execution layer. | closed | 2023-08-18T19:29:59Z | 2023-08-24T10:05:55Z | https://github.com/modin-project/modin/issues/6489 | [

"Code Quality 💯",

"P2"

] | mvashishtha | 1 |

2noise/ChatTTS | python | 477 | 出色的工作,请问100k hours 的主模型是否有开源计划 | closed | 2024-06-27T08:41:10Z | 2024-06-28T09:46:16Z | https://github.com/2noise/ChatTTS/issues/477 | [

"documentation"

] | XintaoZhao0805 | 5 |

|

gunthercox/ChatterBot | machine-learning | 1,667 | AttributeError: 'str' object has no attribute 'text' | """

Created on Thu Mar 14 23:59:27 2019

@author: anjarul

"""

from chatterbot import ChatBot

from chatterbot.comparisons import LevenshteinDistance

bot2 = ChatBot('Eagle',

logic_adapters=['chatterbot.logic.BestMatch'

],

preprocessors=[

'chatterbot.preprocessors.clean_whitespace'

],

read_only=True

)

while True:

message = input ('You: ')

reply = bot2.get_response(message)

dist = LevenshteinDistance().compare(message,reply)

print (dist,' sdd')

print('Eagle: ',reply)

if message.strip()=='bye':

print('Eagle: bye')

break;

when i run this, i got an error.

Traceback (most recent call last):

File "two.py", line 51, in <module>

dist = LevenshteinDistance().compare(message,reply)

File "/home/anjarul/anaconda3/lib/python3.6/site-packages/chatterbot/comparisons.py", line 44, in compare

if not statement.text or not other_statement.text:

AttributeError: 'str' object has no attribute 'text'

how can I solve this issue | closed | 2019-03-14T20:44:39Z | 2020-01-10T10:29:14Z | https://github.com/gunthercox/ChatterBot/issues/1667 | [] | anjarul | 3 |

paulpierre/RasaGPT | fastapi | 68 | Telegram no response my message | I did follow all the setting including telegram bot,

but when i send me message, api got response and actions did success, but my telegram din get any response

Chat API:

```

DEBUG:config:Input: hey

19/01/2024

21:27:45

Input: hey

19/01/2024

21:27:45

DEBUG:config:💬 Query received: hey

19/01/2024

21:27:45

💬 Query received: hey

19/01/2024

21:27:45

DEBUG:config:[Document(page_content='hey')]

19/01/2024

21:27:45

[Document(page_content='hey')]

19/01/2024

21:27:46

DEBUG:openai:message='Request to OpenAI API' method=post path=https://api.openai.com/v1/embeddings

19/01/2024

21:27:46

message='Request to OpenAI API' method=post path=https://api.openai.com/v1/embeddings

19/01/2024

21:27:46

DEBUG:openai:api_version=None

19/01/2024

21:27:46

api_version=None

19/01/2024

21:27:46

DEBUG:urllib3.util.retry:Converted retries value: 2 -> Retry(total=2, connect=None, read=None, redirect=None, status=None)

19/01/2024

21:27:46

Converted retries value: 2 -> Retry(total=2, connect=None, read=None, redirect=None, status=None)

19/01/2024

21:27:46

DEBUG:urllib3.connectionpool:Starting new HTTPS connection (1): api.openai.com:443

19/01/2024

21:27:46

Starting new HTTPS connection (1): api.openai.com:443

19/01/2024

21:27:46

DEBUG:urllib3.connectionpool:https://api.openai.com:443 "POST /v1/embeddings HTTP/1.1" 200 None

19/01/2024

21:27:46

https://api.openai.com:443 "POST /v1/embeddings HTTP/1.1" 200 None

19/01/2024

21:27:46

DEBUG:openai:message='OpenAI API response' path=https://api.openai.com/v1/embeddings processing_ms=20 request_id=7467ad6e233b8da46c4434d2d726e2eb response_code=200

19/01/2024

21:27:46

message='OpenAI API response' path=https://api.openai.com/v1/embeddings processing_ms=20 request_id=7467ad6e233b8da46c4434d2d726e2eb response_code=200

19/01/2024

21:27:46

/usr/local/lib/python3.9/site-packages/langchain/llms/openai.py:216: UserWarning: You are trying to use a chat model. This way of initializing it is no longer supported. Instead, please use: `from langchain.chat_models import ChatOpenAI`

19/01/2024

21:27:46

warnings.warn(

19/01/2024

21:27:46

/usr/local/lib/python3.9/site-packages/langchain/llms/openai.py:811: UserWarning: You are trying to use a chat model. This way of initializing it is no longer supported. Instead, please use: `from langchain.chat_models import ChatOpenAI`

19/01/2024

21:27:46

warnings.warn(

19/01/2024

21:27:46

DEBUG:openai:message='Request to OpenAI API' method=post path=https://api.openai.com/v1/chat/completions

19/01/2024

21:27:46

message='Request to OpenAI API' method=post path=https://api.openai.com/v1/chat/completions

19/01/2024

21:27:46

DEBUG:openai:api_version=None

19/01/2024

21:27:46

api_version=None

19/01/2024

21:27:49

DEBUG:urllib3.connectionpool:https://api.openai.com:443 "POST /v1/chat/completions HTTP/1.1" 200 None

19/01/2024

21:27:49

https://api.openai.com:443 "POST /v1/chat/completions HTTP/1.1" 200 None

19/01/2024

21:27:49

DEBUG:openai:message='OpenAI API response' path=https://api.openai.com/v1/chat/completions processing_ms=2486 request_id=99c1f207c677c83dee1a91abe5a65b66 response_code=200

19/01/2024

21:27:49

message='OpenAI API response' path=https://api.openai.com/v1/chat/completions processing_ms=2486 request_id=99c1f207c677c83dee1a91abe5a65b66 response_code=200

19/01/2024

21:27:49

DEBUG:config:💬 LLM result: {

19/01/2024

21:27:49

"message": "Hello! How can I assist you today?",

19/01/2024

21:27:49

"tags": ["greeting"],

19/01/2024

21:27:49

"is_escalate": false

19/01/2024

21:27:49

}

19/01/2024

21:27:49

💬 LLM result: {

19/01/2024

21:27:49

"message": "Hello! How can I assist you today?",

19/01/2024

21:27:49

"tags": ["greeting"],

19/01/2024

21:27:49

"is_escalate": false

19/01/2024

21:27:49

}

19/01/2024

21:27:49

DEBUG:config:Output: { "message": "Hello! How can I assist you today?", "tags": ["greeting"], "is_escalate": false}

19/01/2024

21:27:49

Output: { "message": "Hello! How can I assist you today?", "tags": ["greeting"], "is_escalate": false}

19/01/2024

21:27:49

DEBUG:config:👤 User found: identifier_type=<CHANNEL_TYPE.TELEGRAM: 2> uuid=UUID('65038b55-3012-4a87-b43f-5ff5e8b418b0') identifier='373384804' last_name=None phone=None device_fingerprint=None updated_at=datetime.datetime(2024, 1, 18, 18, 55, 23, 469251) id=1 first_name='Kenny' email=None dob=None created_at=datetime.datetime(2024, 1, 18, 18, 55, 23, 469243)

19/01/2024

21:27:49

👤 User found: identifier_type=<CHANNEL_TYPE.TELEGRAM: 2> uuid=UUID('65038b55-3012-4a87-b43f-5ff5e8b418b0') identifier='373384804' last_name=None phone=None device_fingerprint=None updated_at=datetime.datetime(2024, 1, 18, 18, 55, 23, 469251) id=1 first_name='Kenny' email=None dob=None created_at=datetime.datetime(2024, 1, 18, 18, 55, 23, 469243)

19/01/2024

21:27:49

DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): rasa-core:5005

19/01/2024

21:27:49

Starting new HTTP connection (1): rasa-core:5005

19/01/2024

21:27:50

DEBUG:urllib3.connectionpool:http://rasa-core:5005 "POST /webhooks/telegram/webhook HTTP/1.1" 200 7

19/01/2024

21:27:50

http://rasa-core:5005 "POST /webhooks/telegram/webhook HTTP/1.1" 200 7

19/01/2024

21:27:50

DEBUG:config:[🤖 RasaGPT API webhook]

19/01/2024

21:27:50

Posting data: {"update_id": "125010864", "message": {"message_id": 14, "from": {"id": 373384804, "is_bot": false, "first_name": "Kenny", "last_name": "Ong", "username": "youyi1314", "language_code": "en", "is_premium": true}, "chat": {"id": 373384804, "first_name": "Kenny", "last_name": "Ong", "username": "youyi1314", "type": "private"}, "date": 1705670865, "text": "hey", "meta": {"response": "Hello! How can I assist you today?", "tags": ["greeting"], "is_escalate": false, "session_id": "3ad102ef-71a6-466f-99c2-9b144835ed42"}}}

19/01/2024

21:27:50

19/01/2024

21:27:50

[🤖 RasaGPT API webhook]

19/01/2024

21:27:50

Rasa webhook response: success

19/01/2024

21:27:50

[🤖 RasaGPT API webhook]

19/01/2024

21:27:50

Posting data: {"update_id": "125010864", "message": {"message_id": 14, "from": {"id": 373384804, "is_bot": false, "first_name": "Kenny", "last_name": "Ong", "username": "youyi1314", "language_code": "en", "is_premium": true}, "chat": {"id": 373384804, "first_name": "Kenny", "last_name": "Ong", "username": "youyi1314", "type": "private"}, "date": 1705670865, "text": "hey", "meta": {"response": "Hello! How can I assist you today?", "tags": ["greeting"], "is_escalate": false, "session_id": "3ad102ef-71a6-466f-99c2-9b144835ed42"}}}

19/01/2024

21:27:50

19/01/2024

21:27:50

[🤖 RasaGPT API webhook]

19/01/2024

21:27:50

Rasa webhook response: success

```

Chat Actions:

<img width="1728" alt="Screenshot 2024-01-19 at 9 31 46 PM" src="https://github.com/paulpierre/RasaGPT/assets/8391468/7e64efdc-a690-433a-8db4-7437999514ce">

and my telegram din get any message

<img width="1746" alt="Screenshot 2024-01-19 at 9 25 18 PM" src="https://github.com/paulpierre/RasaGPT/assets/8391468/ef6af710-4458-4d18-b173-0d5d62f904bf">

| open | 2024-01-19T13:32:19Z | 2024-01-31T08:02:05Z | https://github.com/paulpierre/RasaGPT/issues/68 | [] | youyi1314 | 0 |

jupyterhub/zero-to-jupyterhub-k8s | jupyter | 2,937 | Migrate from v1.23 kube-scheduler binary to v1.25 | We run into this issue trying to do it though.

```

E1110 14:36:04.801876 1 run.go:74] "command failed" err="couldn't create resource lock: endpoints lock is removed, migrate to endpointsleases"

``` | closed | 2022-11-10T14:38:59Z | 2022-11-14T10:18:48Z | https://github.com/jupyterhub/zero-to-jupyterhub-k8s/issues/2937 | [

"maintenance"

] | consideRatio | 1 |

vaexio/vaex | data-science | 1,562 | vaex groupby agg sum of all columns for a larger dataset | I have a dataset consisting of 1800000 rows and 45 columns the operation that I am trying to perform is group by one column, the sum of other columns

the 1st step I did is considering data_df as my data frame and all the columns are numeric

```

columns= data_df.column_names

df_result = df.groupby(columns,agg='sum')

```

the result is Kernal getting restarted the RAM of the system is 32 GB

another approach that I tried

```

df=None

for col in colm:

print("the col is ",col)

if df is None:

df= data_df.groupby(data_df.MSISDN, agg=[vaex.agg.sum(col)])

else:

dfTemp= data_df.groupby(data_df.MSISDN, agg=[vaex.agg.sum(col)])

df =df.join(dfTemp,left_on="MSISDN",right_on ="MSISDN",how ="inner",allow_duplication=True)

del dfTemp

```

here I am able to find the sum up to 11 columns then the kernel gets restarted again is there any other way to get the results using vaex ?

thanks! | closed | 2021-09-02T12:19:20Z | 2022-08-07T19:40:38Z | https://github.com/vaexio/vaex/issues/1562 | [] | spb722 | 2 |

amidaware/tacticalrmm | django | 2,061 | [UI ISSUE] Ping checks output nonsensical | **Is your feature request related to a problem? Please describe.**

The outputs of ping checks are unusable at best.

There is no Y axis explanation, the output does not explain why it failed

**Describe the solution you'd like**

just like cpu and ram the output should be on a chart with the answer time as the X value

output should be readable

and it should do only 1 ping not the default 3 or set the amount and average

**Describe alternatives you've considered**

back to scripting this one :/

| open | 2024-11-04T12:17:19Z | 2024-11-13T10:57:13Z | https://github.com/amidaware/tacticalrmm/issues/2061 | [] | P6g9YHK6 | 2 |

pytorch/pytorch | deep-learning | 149,872 | Do all lazy imports for torch.compile in one place? | When benchmarking torch.compile with warm start, I noticed 2s of time in the backend before pre-grad passes were called. Upon further investigation I discovered this is just the time of lazy imports.

Lazy imports can distort profiles and hide problems, especially when torch.compile behavior changes on the first iteration vs next iterations.

Strawman: put all of the lazy compiles for torch.compile into one function (named "lazy_imports"), call this from somewhere (maybe on the first torch.compile call...), and ensure that it shows up on profiles aptly named | open | 2025-03-24T19:23:54Z | 2025-03-24T19:23:54Z | https://github.com/pytorch/pytorch/issues/149872 | [] | zou3519 | 0 |

microsoft/MMdnn | tensorflow | 58 | [Group convolution in Keras] ResNeXt mxnet -> IR -> keras | Hi

Thank you for a great covert tool.

I am trying to convert from mxnet resnext to keras.

symbol file: http://data.mxnet.io/models/imagenet/resnext/101-layers/resnext-101-64x4d-symbol.json

param file: http://data.mxnet.io/models/imagenet/resnext/101-layers/resnext-101-64x4d-0000.params

I could convert from mxnet to IR with no error,

>python -m mmdnn.conversion._script.convertToIR -f mxnet -n resnext-101-64x4d-symbol.json -w resnext-101-64x4d-0000.params -d resnext-101-64x4d --inputShape 3 224 224

but failed to convert from IR to keras with an error below.

Would you support this model?

Regards,

-----

>python -m mmdnn.conversion._script.IRToCode -f keras --IRModelPath resnext-101-64x4d.pb --dstModelPath keras_resnext-101-64x4d.py

Parse file [resnext-101-64x4d.pb] with binary format successfully.

Traceback (most recent call last):

File "C:\Anaconda3\lib\runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "C:\Anaconda3\lib\runpy.py", line 85, in _run_code

exec(code, run_globals)

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\_script\IRToCode.py", line 120, in <module>

_main()

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\_script\IRToCode.py", line 115, in _main

ret = _convert(args)

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\_script\IRToCode.py", line 56, in _convert

emitter.run(args.dstModelPath, args.dstWeightPath, args.phase)

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\common\DataStructure\emitter.py", line 21, in run

self.save_code(dstNetworkPath, phase)

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\common\DataStructure\emitter.py", line 53, in save_code

code = self.gen_code(phase)

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\keras\keras2_emitter.py", line 95, in gen_code

func(current_node)

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\keras\keras2_emitter.py", line 194, in emit_Conv

return self._emit_convolution(IR_node, 'layers.Conv{}D'.format(dim))

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\keras\keras2_emitter.py", line 179, in _emit_convolution

input_node, padding = self._defuse_padding(IR_node)

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\keras\keras2_emitter.py", line 160, in _defuse_padding

padding = self._convert_padding(padding)

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\keras\keras2_emitter.py", line 139, in _convert_padding

padding = convert_onnx_pad_to_tf(padding)[1:-1]

File "C:\Anaconda3\lib\site-packages\mmdnn\conversion\common\utils.py", line 62, in convert_onnx_pad_to_tf

return np.transpose(np.array(pads).reshape([2, -1])).reshape(-1, 2).tolist()

ValueError: cannot reshape array of size 1 into shape (2,newaxis)

| closed | 2018-01-18T13:38:09Z | 2018-12-27T07:32:09Z | https://github.com/microsoft/MMdnn/issues/58 | [

"bug",

"enhancement",

"help wanted"

] | kamikawa | 14 |

HIT-SCIR/ltp | nlp | 58 | 词性"z" | 在PoSTagging的结果中含有“z”词性(依照北大标注规范),但是在Parser的训练数据中没有"z"词性。

一个解决方法是用自动词性+god dep-relation训练一个parser model。

另外,是否需要z词性还是需要再讨论。

| closed | 2014-04-10T02:57:43Z | 2018-06-30T13:52:37Z | https://github.com/HIT-SCIR/ltp/issues/58 | [

"bug"

] | Oneplus | 1 |

davidsandberg/facenet | computer-vision | 1,157 | Liveness detection | Is it possible to do a liveness check and ensure that it is not a printed photo that the user is holding in front of a webcam? | open | 2020-05-30T13:52:49Z | 2020-05-31T21:15:02Z | https://github.com/davidsandberg/facenet/issues/1157 | [] | dan-developer | 1 |

zappa/Zappa | django | 838 | [Migrated] binary_support logic in handler.py (0.51.0) broke compressed text response | Originally from: https://github.com/Miserlou/Zappa/issues/2080 by [martinv13](https://github.com/martinv13)

<!--- Provide a general summary of the issue in the Title above -->

## Context

The `binary_support` setting used to allow compressing response at application level (for instance using `flask_compress`) in version 0.50.0. As of 0.51.0 it no longer works.

## Expected Behavior

<!--- Tell us what should happen -->

Compressed response using flask_compress should be possible.

## Actual Behavior

<!--- Tell us what happens instead -->

In `handler.py`, response is forced through `response.get_data(as_text=True)`, which fails for compressed payload, thus throwing an error. This is due to the modifications in #2029 which fixed a bug (previously all responses where base64 encoded), but introduced this one.

## Possible Fix

<!--- Not obligatory, but suggest a fix or reason for the bug -->

A possibility would be to partially revert to the previous version and just change in `handler.py` the "or" for a "and" in the following condition: `not response.mimetype.startswith("text/") or response.mimetype != "application/json")`. I can propose a simple PR for this.

## Steps to Reproduce

Configure Flask with flask_compress; any text or json response will fail with the following error: `'utf-8' codec can't decode byte 0x8b in position 1: invalid start byte`

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Zappa version used: 0.51.0

* Operating System and Python version: Python 3.7 on Lambda

* Your `zappa_settings.json`: relevant option: binary_support: true

| closed | 2021-02-20T12:52:18Z | 2022-08-16T05:15:29Z | https://github.com/zappa/Zappa/issues/838 | [

"duplicate"

] | jneves | 1 |

feder-cr/Jobs_Applier_AI_Agent_AIHawk | automation | 163 | Use chtgpt or smth else to parse existing cv and fill plain_text_resume.yaml | Make prompt or smth to use chtgpt or smth else to parse existing cv and fill plain_text_resume.yaml. Automate yaml creating | closed | 2024-08-30T15:40:07Z | 2024-09-01T03:55:57Z | https://github.com/feder-cr/Jobs_Applier_AI_Agent_AIHawk/issues/163 | [] | air55555 | 3 |

cvat-ai/cvat | tensorflow | 8,528 | logo, plate and shape recognition | show the system running and codigos | closed | 2024-10-10T08:59:55Z | 2024-10-10T09:17:54Z | https://github.com/cvat-ai/cvat/issues/8528 | [

"invalid"

] | 26092423 | 1 |

iperov/DeepFaceLab | deep-learning | 5,420 | BrokenPipeError, 2xRTX5000, Windows 10 | Hello iperov! I am getting the following error on Windows 10 and 2x RTX 5000.

I have tried:

DeepFaceLab_NVIDIA_build_08_02_2020 (Works on both RTX5000 and 2xRTX5000 )

DeepFaceLab_NVIDIA_RTX3000_series_build_10_09_2021 (Doesn't work on 2xRTX5000, works on single RTX5000)

DeepFaceLab_NVIDIA_RTX3000_series_build_10_20_2021 (Doesn't work on 2xRTX5000, works on single RTX5000)

I am getting the following error after running the "6) train SAEHD.bat" file:

----------------------------------------------------------------

Running trainer.

[new] No saved models found. Enter a name of a new model :

new

Model first run.

Choose one or several GPU idxs (separated by comma).

[CPU] : CPU

[0] : Quadro RTX 5000

[1] : Quadro RTX 5000

[0,1] Which GPU indexes to choose? :

0,1

[0] Autobackup every N hour ( 0..24 ?:help ) :

0

[n] Write preview history ( y/n ?:help ) :

n

[0] Target iteration :

0

[n] Flip SRC faces randomly ( y/n ?:help ) :

n

[y] Flip DST faces randomly ( y/n ?:help ) :

y

[16] Batch_size ( ?:help ) :

16

[128] Resolution ( 64-640 ?:help ) :

128

[wf] Face type ( h/mf/f/wf/head ?:help ) :

wf

[liae-ud] AE architecture ( ?:help ) :

liae-ud

[256] AutoEncoder dimensions ( 32-1024 ?:help ) :

256

[64] Encoder dimensions ( 16-256 ?:help ) :

64

[64] Decoder dimensions ( 16-256 ?:help ) :

64

[22] Decoder mask dimensions ( 16-256 ?:help ) :

22

[y] Masked training ( y/n ?:help ) :

y

[n] Eyes and mouth priority ( y/n ?:help ) :

n

[n] Uniform yaw distribution of samples ( y/n ?:help ) :

n

[n] Blur out mask ( y/n ?:help ) :

n

[y] Place models and optimizer on GPU ( y/n ?:help ) :

y

[y] Use AdaBelief optimizer? ( y/n ?:help ) :

y

[n] Use learning rate dropout ( n/y/cpu ?:help ) :

n

[y] Enable random warp of samples ( y/n ?:help ) :

y

[0.0] Random hue/saturation/light intensity ( 0.0 .. 0.3 ?:help ) :

0.0

[0.0] GAN power ( 0.0 .. 5.0 ?:help ) :

0.0

[0.0] Face style power ( 0.0..100.0 ?:help ) :

0.0

[0.0] Background style power ( 0.0..100.0 ?:help ) :

0.0

[none] Color transfer for src faceset ( none/rct/lct/mkl/idt/sot ?:help ) :

none

[n] Enable gradient clipping ( y/n ?:help ) :

n

[n] Enable pretraining mode ( y/n ?:help ) :

n

Initializing models: 100%|###############################################################| 5/5 [00:01<00:00, 2.83it/s]

Loading samples: 100%|##############################################################| 654/654 [00:01<00:00, 470.29it/s]

Loading samples: 100%|############################################################| 1619/1619 [00:03<00:00, 498.11it/s]

Exception in thread Thread-37:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-32:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-30:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-50:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-51:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-47:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-44:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-48:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-52:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-41:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-42:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-46:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-38:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-33:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-49:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-40:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-29:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-43:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-31:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-36:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-35:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-34:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-39:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

Exception in thread Thread-45:

Traceback (most recent call last):

File "threading.py", line 916, in _bootstrap_inner

File "threading.py", line 864, in run

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 11, in launch_thread

generator._start()

File "E:\DeepFaceLab_NVIDIA_RTX3000_series\_internal\DeepFaceLab\core\joblib\SubprocessGenerator.py", line 43, in _start

p.start()

File "multiprocessing\process.py", line 105, in start

File "multiprocessing\context.py", line 223, in _Popen

File "multiprocessing\context.py", line 322, in _Popen

File "multiprocessing\popen_spawn_win32.py", line 65, in __init__

File "multiprocessing\reduction.py", line 60, in dump

BrokenPipeError: [Errno 32] Broken pipe

| closed | 2021-11-01T01:44:40Z | 2021-11-17T11:08:33Z | https://github.com/iperov/DeepFaceLab/issues/5420 | [] | asesli | 5 |

mwaskom/seaborn | data-visualization | 3,491 | Pointplot dodge magnitude appears to have changed | Based on one of the gallery examples; the strip and point plots no longer align:

```python

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

sns.set_theme(style="whitegrid")

iris = sns.load_dataset("iris")

# "Melt" the dataset to "long-form" or "tidy" representation

iris = pd.melt(iris, "species", var_name="measurement")

# Show each observation with a scatterplot

sns.stripplot(

data=iris, x="value", y="measurement", hue="species",

dodge=True, alpha=.25, zorder=1, legend=False

)

# Show the conditional means, aligning each pointplot in the

# center of the strips by adjusting the width allotted to each

# category (.8 by default) by the number of hue levels

sns.pointplot(

data=iris, x="value", y="measurement", hue="species",

dodge=.8 - .8 / 3, palette="dark",

linestyle="none", markers="d", errorbar=None

)

```

| closed | 2023-09-24T18:31:46Z | 2023-09-25T00:20:14Z | https://github.com/mwaskom/seaborn/issues/3491 | [

"bug",

"mod:categorical"

] | mwaskom | 0 |

donnemartin/system-design-primer | python | 949 | Dbms | open | 2024-09-27T00:23:10Z | 2024-12-02T01:13:13Z | https://github.com/donnemartin/system-design-primer/issues/949 | [

"needs-review"

] | Ashishkushwaha7273 | 0 |

|

jpadilla/django-rest-framework-jwt | django | 379 | JWT_PAYLOAD_GET_USER_ID_HANDLER setting ignored in jwt_get_secret_key | In function jwt_get_secret_key() "user_id" is read directly from by payload.get('user_id') which leads to problems when using custom encoding on payload | open | 2017-09-22T08:36:42Z | 2017-12-18T20:15:45Z | https://github.com/jpadilla/django-rest-framework-jwt/issues/379 | [] | SPKorhonen | 1 |

microsoft/qlib | deep-learning | 1,446 | Normalize with the Error:ValueError: need at most 63 handles, got a sequence of length 72 | ## 🐛 Bug Description

When I was tring to normalize the 1min data, use following code,

(env38) C:\Users\Anani>python scripts/data_collector/yahoo/collector.py normalize_data --qlib_data_1d_dir ~/.qlib/qlib_data/cn_data --source_dir ~/.qlib/stock_data/source/cn_data_1min --normalize_dir ~/.qlib/stock_data/source/cn_1min_nor --region CN --interval 1min --max_workers 8

I got this ERROR code,

2023-02-22 21:54:27.234 | INFO | data_collector.utils:get_calendar_list:106 - end of get calendar list: ALL.

[6924:MainThread](2023-02-22 21:55:01,958) INFO - qlib.Initialization - [config.py:416] - default_conf: client.

[6924:MainThread](2023-02-22 21:55:02,858) INFO - qlib.Initialization - [__init__.py:74] - qlib successfully initialized based on client settings.

[6924:MainThread](2023-02-22 21:55:02,859) INFO - qlib.Initialization - [__init__.py:76] - data_path={'__DEFAULT_FREQ': WindowsPath('C:/Users/Anani/.qlib/qlib_data/cn_data')}

Exception in thread Thread-1:

Traceback (most recent call last):

File "C:\Users\Anani\anaconda3\envs\env38\lib\threading.py", line 932, in _bootstrap_inner

self.run()

File "C:\Users\Anani\anaconda3\envs\env38\lib\threading.py", line 870, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\Anani\anaconda3\envs\env38\lib\multiprocessing\pool.py", line 519, in _handle_workers

cls._wait_for_updates(current_sentinels, change_notifier)

File "C:\Users\Anani\anaconda3\envs\env38\lib\multiprocessing\pool.py", line 499, in _wait_for_updates

wait(sentinels, timeout=timeout)

File "C:\Users\Anani\anaconda3\envs\env38\lib\multiprocessing\connection.py", line 879, in wait

ready_handles = _exhaustive_wait(waithandle_to_obj.keys(), timeout)

File "C:\Users\Anani\anaconda3\envs\env38\lib\multiprocessing\connection.py", line 811, in _exhaustive_wait

res = _winapi.WaitForMultipleObjects(L, False, timeout)

ValueError: need at most 63 handles, got a sequence of length 72

## Environment

- Qlib version:0.9.1.99

- Python version: 3.8.12

- OS (`Windows`, `Linux`, `MacOS`): win10 LTSC 1809

- CPU: E5 2696 v3*2, 36C72T

## Additional Notes

I have learned that problem may be caused by the CPU cores >60,

Is it true? if so, how do I limited the cpu cores when do this project?

SIncerely,

| open | 2023-02-22T14:19:49Z | 2024-03-05T04:05:25Z | https://github.com/microsoft/qlib/issues/1446 | [

"bug"

] | louyuenan | 3 |

huggingface/datasets | pandas | 7,168 | sd1.5 diffusers controlnet training script gives new error | ### Describe the bug

This will randomly pop up during training now

```

Traceback (most recent call last):

File "/workspace/diffusers/examples/controlnet/train_controlnet.py", line 1192, in <module>

main(args)

File "/workspace/diffusers/examples/controlnet/train_controlnet.py", line 1041, in main

for step, batch in enumerate(train_dataloader):

File "/usr/local/lib/python3.11/dist-packages/accelerate/data_loader.py", line 561, in __iter__

next_batch = next(dataloader_iter)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/torch/utils/data/dataloader.py", line 630, in __next__

data = self._next_data()

^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/torch/utils/data/dataloader.py", line 673, in _next_data

data = self._dataset_fetcher.fetch(index) # may raise StopIteration

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/torch/utils/data/_utils/fetch.py", line 50, in fetch

data = self.dataset.__getitems__(possibly_batched_index)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 2746, in __getitems__

batch = self.__getitem__(keys)

^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 2742, in __getitem__

return self._getitem(key)

^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 2727, in _getitem

formatted_output = format_table(

^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/formatting/formatting.py", line 639, in format_table

return formatter(pa_table, query_type=query_type)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/formatting/formatting.py", line 407, in __call__

return self.format_batch(pa_table)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/formatting/formatting.py", line 521, in format_batch

batch = self.python_features_decoder.decode_batch(batch)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/formatting/formatting.py", line 228, in decode_batch

return self.features.decode_batch(batch) if self.features else batch

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/features/features.py", line 2084, in decode_batch

[

File "/usr/local/lib/python3.11/dist-packages/datasets/features/features.py", line 2085, in <listcomp>

decode_nested_example(self[column_name], value, token_per_repo_id=token_per_repo_id)

File "/usr/local/lib/python3.11/dist-packages/datasets/features/features.py", line 1403, in decode_nested_example

return schema.decode_example(obj, token_per_repo_id=token_per_repo_id)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/features/image.py", line 188, in decode_example

image.load() # to avoid "Too many open files" errors

```

### Steps to reproduce the bug

Train on diffusers sd1.5 controlnet example script

This will pop up randomly, you can see in wandb below when i manually resume run everytime this error appears

### Expected behavior

Training to continue without above error

### Environment info

- datasets version: 3.0.0

- Platform: Linux-6.5.0-44-generic-x86_64-with-glibc2.35

- Python version: 3.11.9

- huggingface_hub version: 0.25.1

- PyArrow version: 17.0.0

- Pandas version: 2.2.3

- fsspec version: 2024.6.1

Training on 4090 | closed | 2024-09-25T01:42:49Z | 2024-09-30T05:24:03Z | https://github.com/huggingface/datasets/issues/7168 | [] | Night1099 | 3 |

junyanz/pytorch-CycleGAN-and-pix2pix | computer-vision | 1,472 | How to use a pre-trained model for training own dataset? | How to use a pre-trained model for training own dataset? | open | 2022-08-23T06:55:03Z | 2022-09-06T20:35:34Z | https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/1472 | [] | TinkingLoeng | 1 |

google-research/bert | nlp | 971 | 请问获得训练好的词向量的值? How to get the pretrained embedding_table? | I wanted to know how to get the word embedding after I pretrain the model. Are there someone kindly tell me? | closed | 2019-12-27T14:03:25Z | 2021-04-26T14:13:54Z | https://github.com/google-research/bert/issues/971 | [] | WHQ1111 | 2 |

microsoft/nlp-recipes | nlp | 73 | [Example] Text classification using MT-DNN | closed | 2019-05-28T16:50:01Z | 2020-01-14T17:51:36Z | https://github.com/microsoft/nlp-recipes/issues/73 | [

"example"

] | saidbleik | 7 |

|

pytest-dev/pytest-html | pytest | 817 | In Selenium pyTest framework getting error as "fixture 'setup_and_teardown' not found" | Working on one selenium script using pyTest framework but while passing setup and teardown method from conftest.py file getting error

PFA script for reference

-

@pytest.mark.usefixtures("setup_and_teardown")

class TestSearch:

def test_search_for_a_valid_product(self):

self.driver.find_element(By.NAME, "search").send_keys("HP")

self.driver.find_element(By.XPATH, "//button\[contains(@class,'btn-default')\]").click()

assert self.driver.find_element(By.LINK_TEXT, "HP LP3065").is_displayed()

-

@pytest.fixture()

def setup_and_teardown(request):

driver = webdriver.Chrome()

driver.maximize_window()

driver.get("https://tutorialsninja.com/demo/")

request.cls.driver = driver

yield

driver.quit()

-

All error are getting to self.driver as to minimize the code delicacy I have written setup and teardown function in conftest.py file

\` | closed | 2024-06-27T08:00:16Z | 2024-06-27T12:19:11Z | https://github.com/pytest-dev/pytest-html/issues/817 | [] | Tushar7337 | 2 |

healthchecks/healthchecks | django | 127 | C# usage example | ```

using (var client = new System.Net.WebClient())

{

client.DownloadString("ping url");

}

```

I know nothing about C#. Does the above look OK? | closed | 2017-07-19T17:39:28Z | 2018-08-20T09:40:16Z | https://github.com/healthchecks/healthchecks/issues/127 | [] | cuu508 | 3 |

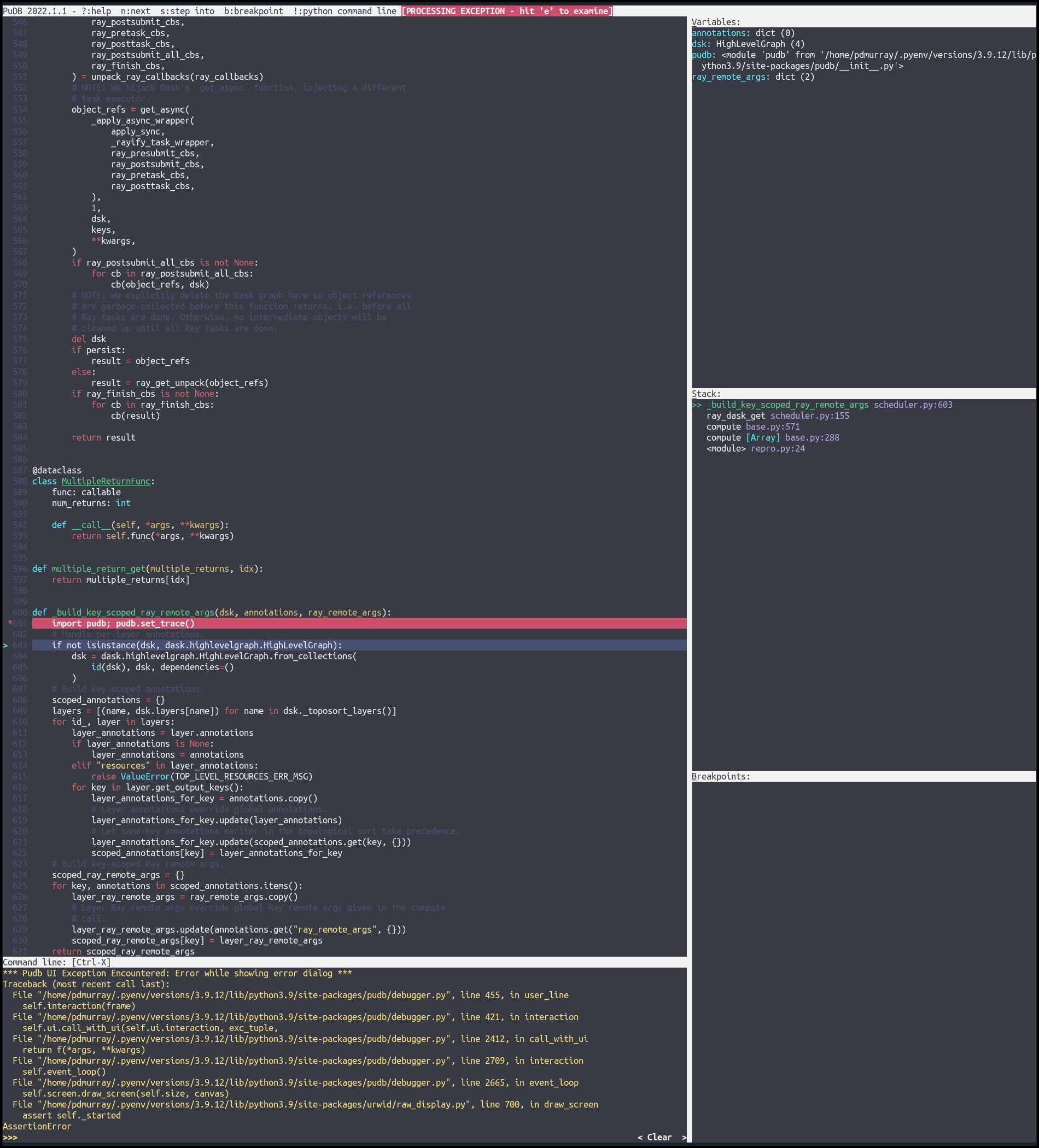

inducer/pudb | pytest | 518 | Exception upon leaving ipython prompt to return to pudb TUI | **Describe the bug**

When I type `!` to drop into an ipython prompt from pudb, upon typing `exit` to return to the pudb TUI I get an error:

```python

*** Pudb UI Exception Encountered: Error while showing error dialog ***

Traceback (most recent call last):